What's the Best Way to Move Petabytes of Data to the Cloud?

✨ AI Generated Summary

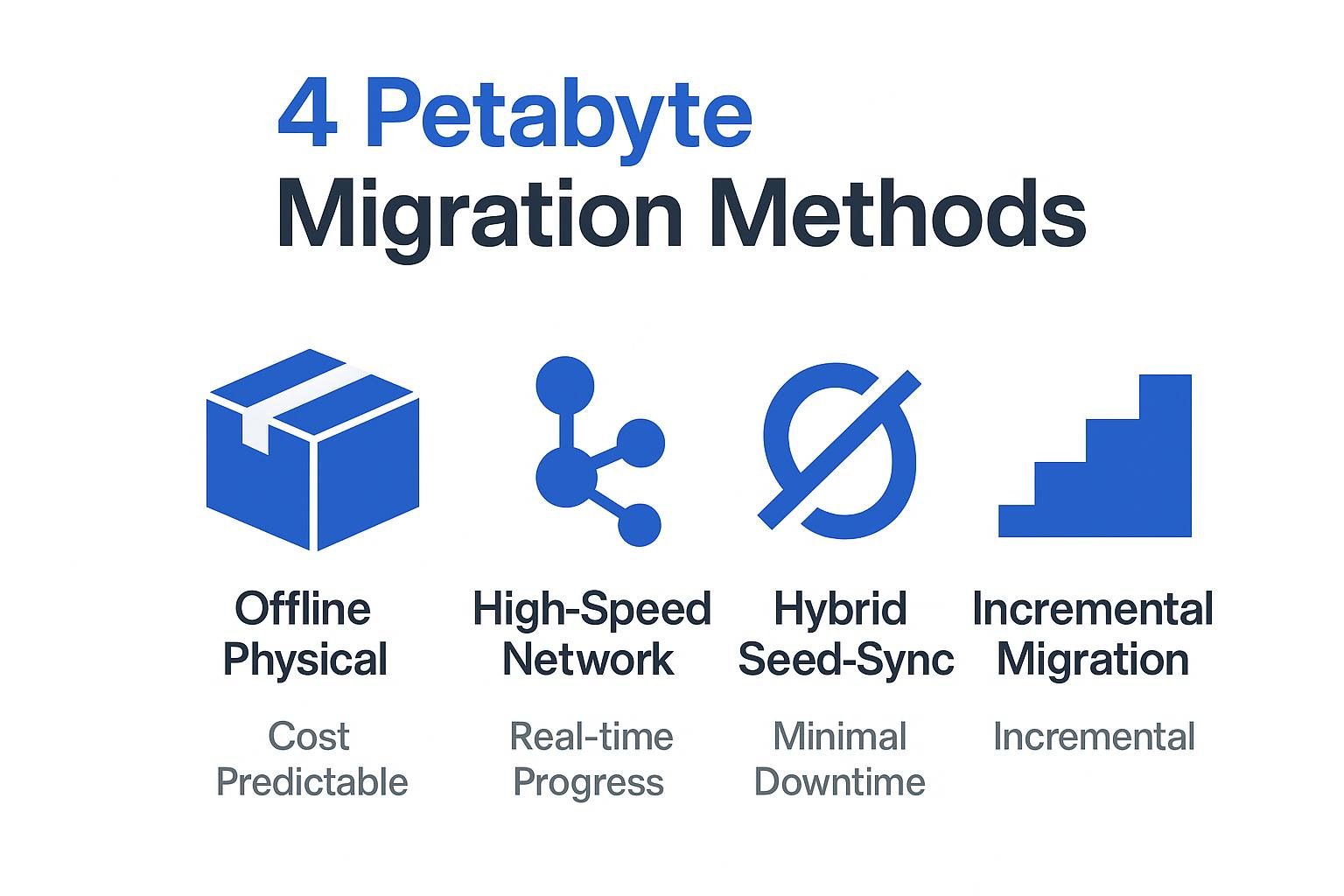

Petabyte-scale data migrations are driven by business transformation, technical modernization, and organizational changes, requiring careful trade-offs between speed, cost, security, and risk. Key migration methods include offline physical transfer, high-speed network transfer, hybrid seed-and-sync, and incremental migration, each suited to different organizational constraints and data characteristics.

- Successful migrations depend on detailed planning, organizational alignment, technical execution, and post-migration readiness.

- Modern platforms like Airbyte simplify complex migrations with CDC connectors, automation, and monitoring to reduce downtime and risk.

- Critical factors include managing costs (network fees, staffing), ensuring security (encryption, access controls), and maintaining business continuity with minimal disruption.

Picture your data team walking into Monday's stand-up to find a new board mandate on the whiteboard: move 50 petabytes to the cloud in just six months. The CFO insists on slashing capital spend, the CTO demands zero downtime, security refuses to budge on end-to-end encryption, and business analysts worry that any hiccup will corrupt the dashboards they refresh every hour. You're suddenly balancing terabytes per hour, people's careers, and an immovable deadline.

Budget overruns and hidden network costs remain common in large migrations, even at companies with seasoned engineering teams. Each stakeholder has heard cautionary tales about massive egress fees, week-long outages, and compliance audits gone wrong. Moving data at petabyte scale requires strategic thinking about the trade-offs: speed versus cost, simplicity versus security, and risk versus timeline.

This guide helps you choose the right migration approach for your specific constraints. We'll compare offline appliances, high-speed network transfer, hybrid seed-and-sync, and incremental migration strategies, then provide decision frameworks for matching each method to your organization's technical realities, budget limitations, and timeline pressures.

When Do Petabyte Migrations Actually Happen?

You don't move tens of petabytes just because the mood strikes you. The order to migrate lands on your desk when business, technical, and organizational pressures converge into an unavoidable mandate.

Business Transformation Drivers

- Digital transformation initiatives requiring cloud-native capabilities

- Cost optimization mandates to reduce on-premises infrastructure expenses

- Mergers and acquisitions requiring data consolidation across different platforms

- Regulatory or compliance requirements demanding specific cloud capabilities

Technical Modernization Needs

- End-of-life hardware requiring infrastructure replacement decisions

- Scaling limitations of on-premises systems unable to handle growth

- Disaster recovery improvements and business continuity requirements

- Access to cloud-native analytics and machine learning capabilities

Organizational Change Catalysts

- New technical leadership bringing cloud-first strategies

- Competitive pressure requiring faster innovation and deployment capabilities

- Vendor consolidation efforts to reduce operational complexity

- Geographic expansion requiring global data accessibility

Understanding these drivers helps frame migration success criteria beyond just "moving data." Technical leaders need approaches that address the underlying business requirements, not just the technical challenge of data transfer.

What Are Your Migration Approach Options?

Moving petabytes is rarely a one-size-fits-all exercise. Each technique trades speed, cost, and risk differently, so you need to match the method to your constraints rather than forcing your constraints to fit the method.

Offline Physical Transfer

Offline physical transfer relies on vendor-supplied appliances (such as AWS Snowball, Azure Data Box, or Google Transfer Appliance) that you load in your data center and ship back to the cloud provider. This approach delivers predictable throughput that bypasses network bottlenecks, and shipping bulk data often costs less than months of premium bandwidth.

You'll face timing constraints as you wait for devices to arrive, fill, clear customs, and be ingested. You also assume responsibility for chain-of-custody security during transit.

Best For: Organizations that can tolerate a longer overall timeline but want to cap costs, or when your data is scattered across multiple sites with limited connectivity.
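Chain of custody is easier to prove when you record a checksum for every file before it goes onto the device and re-verify the same files after ingestion. The sketch below assumes the data sits in a local directory tree; the manifest format (relative path mapped to a SHA-256 hex digest) and the function names are hypothetical, not part of any appliance vendor's tooling.

```python
import hashlib
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 8 * 1024 * 1024) -> str:
    """Stream a file through SHA-256 so large files never load fully into memory."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(root: Path) -> dict[str, str]:
    """Map each file's path (relative to root, POSIX style) to its SHA-256 digest."""
    return {
        p.relative_to(root).as_posix(): sha256_of(p)
        for p in sorted(root.rglob("*"))
        if p.is_file()
    }


def verify_manifest(root: Path, manifest: dict[str, str]) -> list[str]:
    """Return relative paths that are missing or whose digest no longer matches."""
    problems = []
    for rel_path, expected in manifest.items():
        p = root / rel_path
        if not p.is_file() or sha256_of(p) != expected:
            problems.append(rel_path)
    return problems
```

Keeping the manifest somewhere other than the device itself means the post-ingestion check compares against a copy that never travelled with the data.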

High-Speed Network Transfer

High-speed network transfer uses direct-connect links or optimized WAN acceleration to push data continuously. This path provides near-real-time progress visibility and the ability to restart failed jobs without repackaging drives.

Dedicated circuits and premium egress charges can spike operational expenses. Speed without security is pointless: end-to-end encryption and zero-trust controls remain non-negotiable requirements for enterprise teams managing sensitive datasets.

Best For: Organizations that demand tight windows and already own robust connectivity infrastructure.
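Restarting a failed network job without starting over works because progress can be recorded as the transfer runs. A minimal sketch, assuming a local file copy stands in for the network destination: data moves in fixed-size chunks, and the byte offset of the last durable chunk is saved to a JSON checkpoint file. The function name, chunk size, and checkpoint format are illustrative; object stores and transfer tools implement resumption in their own ways.

```python
import json
import os
from pathlib import Path

CHUNK_SIZE = 64 * 1024 * 1024  # 64 MiB per chunk (illustrative; tune for your link)


def resumable_copy(src: Path, dst: Path, checkpoint: Path,
                   chunk_size: int = CHUNK_SIZE) -> int:
    """Copy src to dst in chunks, resuming from the offset saved in checkpoint.

    Returns the number of bytes copied by this call.
    """
    offset = 0
    if checkpoint.exists():
        offset = json.loads(checkpoint.read_text())["offset"]
    if not dst.exists():
        offset = 0  # destination was lost, so start from scratch

    copied = 0
    with src.open("rb") as fin, dst.open("r+b" if offset else "wb") as fout:
        fin.seek(offset)
        fout.seek(offset)
        while chunk := fin.read(chunk_size):
            fout.write(chunk)
            fout.flush()
            os.fsync(fout.fileno())  # make the chunk durable before recording progress
            offset += len(chunk)
            copied += len(chunk)
            checkpoint.write_text(json.dumps({"offset": offset}))
        fout.truncate(offset)  # drop any partial bytes left past the final offset
    return copied
```

After an interruption, calling `resumable_copy` again with the same paths skips every chunk already recorded in the checkpoint; delete the checkpoint once the copy is verified so it cannot be reused for a different file.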

Hybrid Seed-and-Sync Model

The hybrid seed-and-sync model starts with an appliance seed, then switches to continuous replication over the network. The offline phase moves the bulk of the volume without touching your network; the online phase keeps systems current until cutover.

This approach combines the cost predictability of shipping hardware with the minimal downtime of live sync. However, you manage two workflows and must coordinate their hand-off cleanly: record the source's change-log position at the moment the seed snapshot is taken, so that replication replays every change made after that point and nothing falls through the gap. Modern platforms make the sync side far simpler: configure Change Data Capture once and let the platform stream deltas automatically, avoiding days of custom scripting. Platforms like Airbyte offer pre-built CDC connectors for major databases, handling the complexity of log parsing and incremental sync.

Best For: Large datasets requiring minimal downtime with complex timelines.
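A clean hand-off depends on knowing exactly where the seed snapshot sits in the source's change log, so that replication applies only what came after it. The sketch below uses a simplified log in which each change carries a monotonically increasing log sequence number (LSN); the `Change` record and `apply_changes` function are hypothetical stand-ins for what a CDC tool does with, for example, a PostgreSQL LSN or a MySQL binlog position.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Change:
    lsn: int                     # position in the source change log (simplified model)
    op: str                      # "upsert" or "delete"
    key: str
    value: Optional[str] = None


def apply_changes(target: dict, changes: list[Change], seed_lsn: int) -> int:
    """Replay changes made after the seed snapshot onto the target.

    Changes at or before seed_lsn are already in the seed and are skipped.
    Returns the highest LSN applied, which becomes the next resume point.
    """
    last_lsn = seed_lsn
    for change in sorted(changes, key=lambda c: c.lsn):
        if change.lsn <= seed_lsn:
            continue  # already captured by the appliance seed
        if change.op == "upsert":
            target[change.key] = change.value
        elif change.op == "delete":
            target.pop(change.key, None)
        else:
            raise ValueError(f"unknown op {change.op!r}")
        last_lsn = change.lsn
    return last_lsn
```

For example, if the seed `{"a": "1", "b": "2"}` was taken at LSN 2 and the log later records an update to `a` at LSN 3 and a delete of `b` at LSN 4, replaying from LSN 2 leaves `{"a": "10"}` (given `"10"` as the new value) and returns 4 as the next resume point. Re-running with that resume point changes nothing, which is what makes retries safe.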

Incremental Migration

Incremental migration stretches the journey into bite-sized phases. You move a domain or business unit, validate, optimize, then rinse and repeat. This limits the blast radius of any failure, builds in continuous learn-and-adapt cycles, and eliminates massive maintenance windows.

Longer coexistence of old and new systems means duplicated operations work and careful consistency checks. Teams with limited staff or low risk tolerance often start here, then speed up once confidence grows.

Best For: Risk-averse organizations with limited resources or complex data dependencies.
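When domains depend on each other, the order of phases matters: a domain should move only after the domains it reads from. A sketch, assuming dependencies can be written as a mapping from each domain to the domains it depends on; the domain names are illustrative, and `plan_waves` is a hypothetical helper built on Python's standard `graphlib` module (Python 3.9+).

```python
from graphlib import TopologicalSorter


def plan_waves(dependencies: dict[str, set[str]]) -> list[list[str]]:
    """Group domains into migration waves.

    A domain lands in a wave only after every domain it depends on has
    moved in an earlier wave. Raises graphlib.CycleError on circular dependencies.
    """
    sorter = TopologicalSorter(dependencies)
    sorter.prepare()
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # all dependencies already migrated
        waves.append(ready)
        sorter.done(*ready)
    return waves


deps = {
    "reporting": {"sales", "finance"},
    "finance": {"erp"},
    "sales": {"crm"},
    "erp": set(),
    "crm": set(),
}
waves = plan_waves(deps)  # [["crm", "erp"], ["finance", "sales"], ["reporting"]]
```

A cycle error is useful information in its own right: it flags domains that have to move together in a single phase.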

Decision Framework

When you sit down to choose, anchor the discussion on five questions:

- How much downtime can your business tolerate during migration?

- What network bandwidth is realistically available for sustained data transfer?

- How frequently does your data change during typical migration timeframes?

- What are your security and compliance requirements for data in transit and at rest?

- How experienced is your team with large-scale migration projects?

Legacy ETL stacks such as SSIS often lack the parallelism and cloud flexibility required at petabyte scale, making modern replication platforms more attractive. No matter which path wins, a modern integration layer shields you from reinvention. Platforms with extensive connector libraries let you swap sources or destinations, monitor replication health, and automate retries so you can focus on strategic trade-offs, not plumbing.

How Do You Choose the Right Migration Strategy?

When you're moving petabytes of data, your approach has to match your data realities, organizational constraints, and infrastructure capabilities. Work through four key areas (data profile, organizational limits, connectivity, and business continuity), then map each to the trade-offs of offline appliances, high-speed links, hybrid seed-and-sync, or incremental cutovers.

Data Characteristics And Scale

Start by measuring what you're actually moving. If you're dealing with true petabyte volumes, an appliance like AWS Snowball or Azure Data Box often beats the raw economics of network transfer.

Volume alone isn't enough, though. Change rate matters just as much: static archives tolerate longer offline windows, while operational tables that update every few seconds need continuous sync or Change Data Capture.
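Change rate matters because data keeps changing while a seed is in transit, and the sync link has to drain that backlog faster than new changes arrive. A back-of-the-envelope sketch, assuming changes arrive at a steady rate and each changed byte is resent once; the function and its inputs are illustrative.

```python
import math


def catch_up_days(volume_tb: float, daily_change_fraction: float,
                  window_days: float, link_tb_per_day: float) -> float:
    """Days for the sync link to drain the backlog built up during the offline window.

    Assumes a steady change rate; returns math.inf if the link cannot
    outpace new changes.
    """
    change_tb_per_day = volume_tb * daily_change_fraction
    backlog_tb = change_tb_per_day * window_days
    net_rate = link_tb_per_day - change_tb_per_day
    if net_rate <= 0:
        return math.inf  # new changes arrive as fast as the link can send them
    return backlog_tb / net_rate
```

With illustrative inputs of 10,000 TB changing 0.5% per day and a 21-day appliance round trip, the backlog is about 1,050 TB. A 100 TB/day link clears it in about 21 days, while a 40 TB/day link never catches up.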

Before you size network capacity, inventory how each dataset is partitioned (by range, hash, or list), not just how large it is; partitions are the natural units for splitting work across parallel transfer streams.
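A partition inventory pays off when you split work across parallel streams. A sketch, assuming you already know each partition's size in a common unit. The greedy "largest partition to the least-loaded stream" heuristic used here is one common way to balance streams and is not taken from this article; the names are illustrative.

```python
import heapq


def assign_partitions(partition_sizes: dict[str, float], streams: int) -> list[list[str]]:
    """Spread partitions across parallel transfer streams, largest first.

    Each partition goes to the stream with the least data assigned so far,
    which keeps stream loads roughly even.
    """
    heap = [(0.0, i) for i in range(streams)]  # (assigned size, stream index)
    assignment: list[list[str]] = [[] for _ in range(streams)]
    for name, size in sorted(partition_sizes.items(), key=lambda kv: (-kv[1], kv[0])):
        load, idx = heapq.heappop(heap)
        assignment[idx].append(name)
        heapq.heappush(heap, (load + size, idx))
    return assignment
```

For partitions of 40, 30, 20, and 10 TB on two streams, this yields `[["p1", "p4"], ["p2", "p3"]]` with 50 TB per stream. The slowest stream then sets the bandwidth you need.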

If your data spans multiple regions, factor in logistics costs of shipping devices to each site versus orchestrating parallel network streams.

Organizational Constraints And Capabilities

Calibrate your approach against timeline, risk tolerance, and team skills. A board mandate that "everything must be in the cloud this quarter" favors seed-and-sync or lift-and-shift tactics that minimize redesign.

If your culture is risk-averse and your stack is mission-critical, the phased safety of incremental moves is worth the longer timeline. A lack of cloud expertise is one of the most common blockers of large data transfers. Modern integration platforms abstract much of that complexity, but you still need engineers who understand dependency mapping and failure domains.

Infrastructure And Connectivity Realities

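Bandwidth is the hard governor on any network-based move. A dedicated 10-Gbps line transfers about 100 TB a day under ideal conditions (10 Gbps is 1.25 GB/s, or roughly 108 TB over 86,400 seconds). At that rate, a 10 PB job takes about three months, and a 50 PB job takes well over a year.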

Where that math fails, offline appliances reduce schedule risk. Security and compliance layers add their own weight: encrypted drives in transit satisfy auditors who worry about internet exposure, while in-flight TLS is mandatory for high-speed links.
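The bandwidth arithmetic is easy to rerun for your own link. A minimal sketch using decimal units (1 PB = 10^15 bytes, 1 Gbps = 10^9 bits per second). The `efficiency` factor is an assumption that stands in for protocol overhead and competing traffic, and the function names are illustrative.

```python
SECONDS_PER_DAY = 86_400


def transfer_days(volume_pb: float, link_gbps: float, efficiency: float = 1.0) -> float:
    """Days to move volume_pb over a link_gbps link at the given efficiency (0-1]."""
    bytes_per_day = link_gbps * 1e9 / 8 * efficiency * SECONDS_PER_DAY
    return volume_pb * 1e15 / bytes_per_day


def required_gbps(volume_pb: float, days: float, efficiency: float = 1.0) -> float:
    """Sustained link speed (Gbps) needed to move volume_pb within the given days."""
    return volume_pb * 1e15 * 8 / (days * SECONDS_PER_DAY * 1e9 * efficiency)
```

With perfect efficiency, `transfer_days(10, 10)` is about 93 days, and `required_gbps(50, 182)` is about 25 Gbps. A 50 PB, six-month mandate therefore needs more than two fully dedicated 10-Gbps links before any overhead is counted.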

Careful cost modeling is essential. Ingress into cloud storage is usually free or cheap, but network upgrades and egress fees can destroy budgets if they're not forecast early.
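A total-cost model does not need to be elaborate to catch the big surprises. It only has to list every line item in one place. The sketch below uses placeholder rates rather than real provider prices, and the field names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class CostInputs:
    # Every rate is a placeholder; substitute your own quotes.
    volume_tb: float
    egress_usd_per_tb: float      # applies if the source side bills for outbound data
    circuit_usd_per_month: float  # dedicated link or bandwidth upgrade
    months: float
    appliances: int
    appliance_usd_each: float     # rental plus shipping per device
    engineer_hours: float
    usd_per_engineer_hour: float


def total_cost(c: CostInputs) -> dict[str, float]:
    """Break the migration cost into line items plus a total."""
    items = {
        "egress": c.volume_tb * c.egress_usd_per_tb,
        "network": c.circuit_usd_per_month * c.months,
        "appliances": c.appliances * c.appliance_usd_each,
        "staff": c.engineer_hours * c.usd_per_engineer_hour,
    }
    items["total"] = sum(items.values())
    return items
```

Even with made-up numbers (no egress, a $5,000/month circuit for six months, ten $3,000 appliances, and 2,000 engineering hours at $100), staff time accounts for $200,000 of the $260,000 total. This is why the model should include staff hours and not just transfer fees.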

Business Continuity Requirements

Determine how much disruption the business can handle. If the answer is "almost none," combine an initial bulk copy with CDC-driven sync so production systems stay live until the cutover minute.

If weekend maintenance windows already exist, a straight offline transfer followed by verification might be the simplest path. Whatever you choose, build rollback checkpoints and automated data-quality tests. Early validation catches integrity issues before they snowball.
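One inexpensive automated check compares each table's row count and an order-independent fingerprint on both sides before cutover. A minimal sketch, assuming rows from source and target can be read as Python tuples with identical types and formatting. `table_fingerprint` is a hypothetical helper. Summing per-row hashes is one of several ways to make the digest independent of row order, and unlike XOR it does not let duplicate rows cancel out.

```python
import hashlib
from collections.abc import Iterable


def table_fingerprint(rows: Iterable[tuple]) -> tuple[int, str]:
    """Return (row count, order-independent digest) for a collection of rows.

    Each row is hashed separately; the per-row digests are summed modulo 2**256,
    so the result does not depend on the order in which rows are read.
    """
    count = 0
    acc = 0
    for row in rows:
        row_digest = hashlib.sha256(repr(row).encode("utf-8")).digest()
        acc = (acc + int.from_bytes(row_digest, "big")) % (1 << 256)
        count += 1
    return count, f"{acc:064x}"
```

If the source and target fingerprints match for every table or partition, you have inexpensive evidence that nothing was dropped or altered. A mismatch tells you where to run a slower row-by-row comparison.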

Key Questions To Finalize Your Choice

- How much downtime can you accept, and who signs off on it?

- What sustained bandwidth is truly available once other traffic is accounted for?

- How fast does source data change during the projected window?

- Which compliance rules govern data in transit and at rest?

- Do you have, or can you rent, the skills to run parallel systems for weeks?

Plot those answers on a simple scoring matrix to reveal which strategy (offline lift, high-speed pipe, hybrid seed-and-sync, or phased increments) best balances speed, cost, and risk for your context.

The result isn't a one-size-fits-all prescription but a transparent trade-off map that every stakeholder can understand before the first byte moves.
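A weighted scoring matrix can be as small as a dictionary. The sketch below assumes one weight per key question and a 1-5 score for how well each strategy fits your answer to that question. The weights, scores, and strategy labels are illustrative values for a hypothetical organization, not recommendations.

```python
import math

# Illustrative weights (summing to 1) for the five key questions; set your own.
WEIGHTS = {"downtime": 0.30, "bandwidth": 0.20, "change_rate": 0.20,
           "compliance": 0.15, "team": 0.15}

# Illustrative 1-5 fit scores (5 = strong fit given your answers).
SCORES = {
    "offline":     {"downtime": 2, "bandwidth": 5, "change_rate": 2, "compliance": 4, "team": 4},
    "high_speed":  {"downtime": 4, "bandwidth": 1, "change_rate": 4, "compliance": 3, "team": 3},
    "hybrid":      {"downtime": 5, "bandwidth": 4, "change_rate": 5, "compliance": 4, "team": 2},
    "incremental": {"downtime": 4, "bandwidth": 3, "change_rate": 3, "compliance": 4, "team": 5},
}


def rank_strategies(scores: dict[str, dict[str, float]],
                    weights: dict[str, float]) -> list[tuple[str, float]]:
    """Return strategies sorted by weighted score, best first."""
    if not math.isclose(sum(weights.values()), 1.0):
        raise ValueError("weights should sum to 1")
    totals = {}
    for strategy, criteria in scores.items():
        if criteria.keys() != weights.keys():
            raise ValueError(f"{strategy} must score every weighted criterion")
        totals[strategy] = sum(weights[c] * criteria[c] for c in weights)
    return sorted(totals.items(), key=lambda kv: kv[1], reverse=True)


if __name__ == "__main__":
    for strategy, score in rank_strategies(SCORES, WEIGHTS):
        print(f"{strategy:<12} {score:.2f}")
```

The value lies less in the final number than in making each weight explicit, so that stakeholders argue about priorities rather than conclusions.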

What Are the Critical Success Factors?

Your petabyte move will only advance the business if it delivers more than raw data transfer speed. Non-technical factors such as leadership, governance, and process management consistently separate successful large-scale transfers from expensive cautionary tales.

- Planning And Preparation Quality: Start by cataloging every dataset, lineage dependency, and compliance boundary. A detailed inventory lets you size network capacity, estimate validation windows, and bake rollback buffers into the schedule. Teams that invest upfront in cost, security, and feasibility assessments avoid the mid-project surprises that drive budget overruns.

- Organizational Alignment And Change Management: Treat your colleagues like customers of the project. Executive sponsorship provides clear authority, but day-to-day success hinges on transparent roadmaps, frequent status touchpoints, and role-specific training. Companies that followed an employee-centric approach reported smoother adoption and lower productivity dips during cutover.

- Technical Execution Excellence: Even with petabyte appliances or CDC pipelines, you still need incremental validation, real-time monitoring, and security checks at every hop. Robust integration frameworks and automation reduce human error, while encryption and access controls close the window attackers target during transit.

- Vendor And Tool Management: A multi-cloud or hybrid footprint keeps you from getting trapped by a single provider's pricing or capacity limits. Define SLAs, escalation paths, and fallback options long before the first byte ships. Continuous compatibility testing across platforms (an approach common in multi-cloud playbooks) prevents a niche connector or appliance from becoming a critical path bottleneck.

- Post-Migration Operational Readiness: The finish line is not "all data landed"; the finish line is reliable day-two operations. Update runbooks, monitoring dashboards, and disaster-recovery drills for the cloud architecture. Establish governance and cost-optimization policies before opening access, a best practice repeatedly stressed in Google's strategic guidance on building a cross-functional transformation journey.

These factors reinforce one another: meticulous planning enables smoother change management; clear ownership accelerates technical troubleshooting; and an operational readiness mindset ensures the business sees value the moment the process completes. Ignore any one of them, and the petabyte scale quickly magnifies minor issues into mission-critical outages.

How Can You Use Airbyte Cloud to Move Your Data?

Airbyte Cloud handles the coordination headaches that turn petabyte migrations into months-long engineering projects. The platform manages orchestration, monitoring, and recovery work that usually requires custom scripts and dedicated staff.

For hybrid seed-and-sync migrations, Airbyte bridges the gap between your initial bulk load and ongoing replication. Ship your petabytes via appliance, then configure CDC connectors to capture changes while you validate and cut over. The platform reduces the custom scripting typically needed to coordinate the offline and streaming phases.

Scalable infrastructure handles large data volumes through configurable batch sizes and parallel processing. When transfers fail, built-in retry logic resumes from the last successful checkpoint instead of starting over. Performance monitoring helps identify bottlenecks during high-volume transfers.

Security and compliance features meet enterprise requirements for large-scale moves:

- End-to-end encryption for data in transit

- Private network connectivity options to avoid public internet exposure

- Comprehensive audit logging for compliance reporting

- Role-based access controls for team management

Migration-focused capabilities that reduce common pain points:

- 600+ pre-built connectors cover major databases and applications

- Automatic schema evolution handling during long migration windows

- Real-time monitoring dashboards for transfer progress and status

- Configurable sync frequencies and batch processing options

For incremental migrations, configure connectors business unit by business unit. Validate each domain in the cloud, then retire source systems as confidence builds. This approach limits risk while building team expertise with each successful phase.

How Do You Manage Migration Risks and Costs?

Petabyte transfers demand control over risk, predictable costs, and steady progress. The most successful data leaders build these priorities into every phase rather than treating them as afterthoughts.

Risk Management Strategies

- Pilot migrations with non-critical data to reveal dependency issues early

- Parallel system operation during transition periods to enable quick rollback

- Incremental cutover approaches that limit blast radius if validation reveals issues

- Comprehensive backup strategies in both environments to guard against failures

Cost Optimization Approaches

- Total cost modeling including network upgrades, security tooling, and staff hours

- Transfer scheduling during off-peak windows to reduce bandwidth costs

- Tiering cold data to archival storage classes soon after it lands

- Pre-built connectors that eliminate custom development and maintenance overhead

Security and Compliance Considerations

- End-to-end encryption for data in transit and at rest

- Role-based access controls on both appliance and cloud endpoints

- Audit trail preservation proving governance compliance throughout the move

- Data residency planning before any transfers begin to avoid costly geographic retrofits
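An audit trail is more convincing when tampering is detectable. A minimal sketch of a hash-chained, append-only log, assuming events are JSON-serializable dicts. This is a generic technique rather than a feature of any particular platform, and the function names are hypothetical. One limitation: dropping entries from the end of the log is only detectable if you also store the latest hash somewhere independent.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _entry_hash(event: dict, prev_hash: str) -> str:
    body = json.dumps({"event": event, "prev": prev_hash}, sort_keys=True)
    return hashlib.sha256(body.encode("utf-8")).hexdigest()


def append_entry(log: list[dict], event: dict) -> dict:
    """Append an event whose hash covers the previous entry's hash."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    entry = {"event": event, "prev": prev_hash, "hash": _entry_hash(event, prev_hash)}
    log.append(entry)
    return entry


def verify_chain(log: list[dict]) -> bool:
    """Recompute every hash; an edited, reordered, or removed inner entry breaks the chain."""
    prev_hash = GENESIS
    for entry in log:
        if entry["prev"] != prev_hash or entry["hash"] != _entry_hash(entry["event"], prev_hash):
            return False
        prev_hash = entry["hash"]
    return True
```

Typical events are device shipped, device ingested, and manifest verified, each with a timestamp and operator. Periodically recording the latest hash in a separate system closes the tail-truncation gap.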

Timeline and Resource Management

- Realistic scheduling with validation buffers and contingency plans

- Stakeholder communication to prevent surprise downtime windows

- Quality assurance using checksum comparisons and row-level reconciliation

- Performance benchmarking to demonstrate real improvements beyond just successful transfer
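Row-level reconciliation identifies which records differ, not just that something does. A sketch, assuming each table has a single-column primary key at a known position and that both sides are read into Python tuples with matching types. At petabyte scale you would run this per partition rather than hold a whole table in memory. The function names are hypothetical.

```python
import hashlib
from collections.abc import Hashable, Iterable


def digest_rows(rows: Iterable[tuple], key_index: int = 0) -> dict[Hashable, str]:
    """Map each row's primary key to a SHA-256 digest of the whole row."""
    return {row[key_index]: hashlib.sha256(repr(row).encode("utf-8")).hexdigest()
            for row in rows}


def reconcile(source: dict[Hashable, str], target: dict[Hashable, str]) -> dict[str, list]:
    """Classify keys as missing from, unexpected in, or changed in the target."""
    return {
        "missing_in_target": sorted(source.keys() - target.keys()),
        "unexpected_in_target": sorted(target.keys() - source.keys()),
        "mismatched": sorted(k for k in source.keys() & target.keys()
                             if source[k] != target[k]),
    }
```

Comparing digests instead of full rows keeps the comparison small enough to move between environments, and the three buckets map directly to re-copy, investigate, and re-sync actions.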

Managing petabyte-scale moves requires disciplined planning: test small, automate where possible, model costs holistically, and verify continuously. When you treat risk, cost, security, and quality as priorities from day one, cloud migration becomes a measured journey rather than a leap of faith.

Ready to evaluate your petabyte migration options? Whether you choose offline appliances, network transfer, or hybrid approaches, modern data integration platforms can simplify the ongoing sync complexity. Explore Airbyte's CDC connectors and see how they fit into your migration strategy.

Frequently Asked Questions

Why do enterprises move data at the petabyte scale?

Petabyte-scale migrations usually stem from business transformation initiatives, hardware end-of-life, compliance requirements, or mergers and acquisitions. It's rarely just about storage costs; these moves often tie into broader goals like enabling cloud-native analytics, meeting regulatory demands, or consolidating systems after acquisitions.

What are the biggest risks in large-scale cloud migrations?

Common risks include budget overruns from hidden network and egress fees, downtime that disrupts business operations, data corruption during transfer, and compliance violations. Without proper planning, small missteps can escalate into costly outages or failed audits.

How do I choose the right migration strategy?

Your choice depends on downtime tolerance, bandwidth availability, change rates of your data, compliance requirements, and team expertise. Offline appliances suit bulk static data, high-speed transfers fit organizations with robust connectivity, hybrid seed-and-sync minimizes downtime, and incremental migrations reduce risk for resource-limited teams.

What role does Change Data Capture (CDC) play in migration?

CDC keeps cloud and on-premises systems in sync by capturing only new or updated records. This ensures that after an initial bulk transfer, changes are continuously replicated, minimizing downtime and ensuring accurate cutovers. Platforms like Airbyte offer pre-built CDC connectors that simplify this process.

How can organizations control costs during petabyte migrations?

Cost control comes from holistic planning: modeling total costs (network, appliances, staff time), scheduling transfers during off-peak hours, leveraging archival storage tiers, and using pre-built connectors to avoid expensive custom development. Early financial modeling prevents egress fees and hidden costs from derailing budgets.

What security measures are critical for petabyte-scale transfers?

End-to-end encryption, role-based access controls, private network routing, and audit trail preservation are essential. Compliance frameworks like GDPR and HIPAA require provable security during transit and at rest. For physical appliances, maintaining chain-of-custody documentation is also critical.
