What's the Difference Between Orchestration and ETL?

✨ AI Generated Summary

The article clarifies the distinction between ETL and orchestration, emphasizing that:

- ETL focuses on extracting, transforming, and loading data reliably between systems.

- Orchestration manages the scheduling, dependencies, and error handling of complex workflows across multiple tools.

- Choosing between ETL, orchestration, or both depends on your primary pain points—data movement challenges call for ETL, while complex multi-step workflows require orchestration.

- Modern data teams often need integrated solutions that separate data handling from workflow coordination to scale efficiently.

Your team is in a stand-up debating next quarter's pipeline roadmap. One engineer insists you need Apache Airflow to "keep everything on schedule." Another argues the real pain point is extracting campaign data from half a dozen ad platforms, so an "ETL tool is the answer." A third worries about stitching together model training, quality checks, and report refreshes in a single flow. The meeting ends with action items but no agreement because everyone is talking about different problems.

The disconnect comes from treating orchestration and ETL as interchangeable, when they actually solve distinct challenges. ETL focuses on what happens to the data: extracting it, transforming it, and loading it into a destination. Orchestration manages when tasks run, in what order, and how failures are handled. You can move data flawlessly yet still miss nightly dashboards if nothing coordinates the jobs.

This guide cuts through that noise. You'll learn why "moving data" and "coordinating work" are separate responsibilities, how to decide which capability you actually need first, and where integrated solutions fit. Instead of circular stand-ups, you'll have a blueprint for smarter architectural decisions.

ETL: Solving Data Movement Headaches

When you need to pull records from Salesforce, flatten semi-structured JSON, or reconcile changing schemas before loading everything into Snowflake, you reach for ETL. Its core job is getting data from point A to point B reliably. That means maintaining connectors to diverse APIs and databases, applying transformations so formats, units, and columns align, detecting schema drift and enforcing data quality rules, and loading the processed data into warehouses, lakes, or operational stores.

ETL tools focus on "what happens to the data." They standardize inputs so downstream teams can query a single, trusted source. ETL pipelines are typically linear and task-focused, designed for repeatable data movement between well-defined endpoints.
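To make the three stages concrete, here is a minimal sketch using only the Python standard library. The sample records, the `payments` table, and the cents-to-dollars rule are all hypothetical, chosen for illustration; a real pipeline would pull from an API or database through a maintained connector rather than an inline string.

```python
import json
import sqlite3

# Hypothetical raw export: nested JSON with inconsistent units (assumption).
RAW_RECORDS = """
[
  {"id": 1, "account": {"name": "Acme"}, "amount": 1250, "unit": "cents"},
  {"id": 2, "account": {"name": "Globex"}, "amount": 40.5, "unit": "dollars"}
]
"""


def extract(raw: str) -> list[dict]:
    """Extract: parse the source payload into Python records."""
    return json.loads(raw)


def transform(records: list[dict]) -> list[tuple]:
    """Transform: flatten the nested account object and normalize amounts to dollars."""
    rows = []
    for rec in records:
        if rec["unit"] == "cents":
            amount = rec["amount"] / 100
        else:
            amount = float(rec["amount"])
        rows.append((rec["id"], rec["account"]["name"], amount))
    return rows


def load(rows: list[tuple], conn: sqlite3.Connection) -> None:
    """Load: write the cleaned rows into the destination table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS payments "
        "(id INTEGER PRIMARY KEY, account TEXT, amount_usd REAL)"
    )
    # INSERT OR REPLACE keeps the load repeatable if the job is re-run.
    conn.executemany("INSERT OR REPLACE INTO payments VALUES (?, ?, ?)", rows)
    conn.commit()


# An in-memory SQLite database stands in for the warehouse.
conn = sqlite3.connect(":memory:")
load(transform(extract(RAW_RECORDS)), conn)
```

Note that nothing here decides when the job runs or what happens after it finishes; that is the part orchestration covers.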

Data Orchestration: Coordinating the Workflow

You already have half a dozen ETL jobs, a dbt transformation project, and a machine-learning model that needs refreshed features every hour. Orchestration tools decide when those tasks run and in what order. Their focus is "when and how tasks execute," not the data manipulation itself. They handle complex scheduling (time-based, event-driven, or ad-hoc) along with dependency graphs, so Job B only starts when Job A finishes successfully. They also manage automated retries, alerts, and failure remediation, plus cross-tool coordination spanning warehouses, BI tools, notebooks, and ML services.

Orchestration sits at a higher level, supervising entire ecosystems rather than individual pipelines.
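The scheduling-and-dependency idea can be sketched in a few lines of standard-library Python. This is a toy illustration, not how Airflow or Prefect are implemented: `run_workflow`, the task names, and the retry count are hypothetical, and a real orchestrator adds scheduling, parallelism, alerting, and persistence on top.

```python
from graphlib import TopologicalSorter


def run_workflow(tasks, dependencies, max_retries=2):
    """Run tasks in dependency order, retrying failures.

    tasks: dict mapping task name -> zero-argument callable
    dependencies: dict mapping task name -> set of upstream task names
    Returns a dict mapping task name -> "success", "failed", or "skipped".
    """
    status = {}
    # static_order() yields each task only after all of its upstream tasks.
    for name in TopologicalSorter(dependencies).static_order():
        upstream = dependencies.get(name, set())
        if any(status.get(dep) != "success" for dep in upstream):
            status[name] = "skipped"  # Job B never starts if Job A did not succeed
            continue
        for attempt in range(1 + max_retries):
            try:
                tasks[name]()
                status[name] = "success"
                break
            except Exception as exc:
                print(f"{name}: attempt {attempt + 1} failed ({exc})")
        else:
            status[name] = "failed"  # all attempts exhausted
    return status


# Hypothetical tasks: a flaky extract that succeeds on its second attempt,
# and a quality check that always fails.
attempts = {"extract": 0}


def flaky_extract():
    attempts["extract"] += 1
    if attempts["extract"] < 2:
        raise RuntimeError("API timeout")


def failing_quality_check():
    raise ValueError("null rate too high")


tasks = {
    "extract": flaky_extract,
    "transform": lambda: None,
    "quality_check": failing_quality_check,
    "refresh_dashboard": lambda: None,
}
dependencies = {
    "extract": set(),
    "transform": {"extract"},
    "quality_check": {"transform"},
    "refresh_dashboard": {"quality_check"},
}
result = run_workflow(tasks, dependencies)
```

In this run the extract recovers on retry, the quality check exhausts its retries, and the dashboard refresh is skipped because its upstream task never succeeded.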

Core Differences in Functionality

An ETL job is often just one task inside a broader orchestrated workflow. ETL masters the data itself while orchestration masters the runbook. Understanding this split lets you decide whether you need a better baton, a smarter coach, or both.

When Do You Need ETL vs Orchestration?

You rarely start a data initiative wondering which logo to purchase. You begin with a concrete pain point. Maybe a legacy ERP refuses to speak to your warehouse, or a growing constellation of ML jobs needs tighter control. Deciding whether to lean on ETL, workflow management, or both comes down to pinpointing that primary friction and matching it to the right capability set.

When ETL Is Your Primary Need

ETL tools excel when the challenge is getting data from point A to point B in a predictable, repeatable way. They own the connections, handle schema drift, and perform the necessary format changes so your analytics layer can query a clean table every morning. Platforms such as Informatica, AWS Glue, and SSIS focus on this "what happens to the data" layer. ETL remains the fastest path for reliable batch movement of structured information.

ETL handles your core data movement needs when:

- You're moving information between a handful of systems

- Sources demand intricate extraction logic (rate-limited APIs, slowly changing dimensions) and you'd rather not reinvent connectors

- Your transformations focus on format or schema alignment, not advanced business logic

- Legacy databases or proprietary on-prem software dominate your environment

- Schedules are simple enough that manual fixes work for occasional failures
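Schema drift handling is one of the jobs an ETL tool takes off your hands. As a rough sketch of what such a check involves, assuming a hypothetical expected schema expressed as column-to-type pairs, a record can be compared against it like this:

```python
def detect_schema_drift(expected: dict, record: dict) -> dict:
    """Compare one incoming record against the expected schema.

    expected maps column name -> Python type (an illustrative convention,
    not any particular tool's schema format).
    """
    missing = sorted(set(expected) - set(record))
    added = sorted(set(record) - set(expected))
    type_changed = sorted(
        col
        for col in set(expected) & set(record)
        if record[col] is not None and not isinstance(record[col], expected[col])
    )
    return {"missing": missing, "added": added, "type_changed": type_changed}


# Hypothetical schema and an incoming record where the source changed shape:
# "signup_date" disappeared, "plan" appeared, and "id" arrived as a string.
EXPECTED = {"id": int, "email": str, "signup_date": str}
incoming = {"id": "1001", "email": "a@example.com", "plan": "pro"}

drift = detect_schema_drift(EXPECTED, incoming)
```

Production tools go further, for example by evolving the destination table or quarantining rows, but the core comparison is the same kind of set difference.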

When Orchestration Takes Priority

Workflow coordination handles "when and how" tasks run. It's the conductor coordinating an entire symphony of jobs. Some might be ETL extracts, some SQL transforms, others containerized Python models. These engines track dependencies, trigger downstream tasks, and retry failures automatically, giving you end-to-end visibility across tool boundaries.

Workflow management takes center stage when you already have reliable data movers but need to stitch them into a coherent workflow:

- Your pipelines branch or loop based on data quality checks, feature-store updates, embedding refreshes for a vector database like Pinecone, or model-driven thresholds

- Multiple teams (analytics engineering, ML ops, finance) contribute pieces of the same pipeline, each running in distinct tools

- You need robust retry logic, alerting, and lineage across systems for compliance or SLA reasons

- You're building ML pipelines where feature prep, training, evaluation, and deployment have tight, sequential handoffs
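Branching on a data quality check is a good example of logic that belongs in the coordination layer rather than in the data mover. A minimal sketch, where the `email` column, the 10% threshold, and the downstream task names are all hypothetical:

```python
def null_rate(rows, column):
    """Fraction of rows where the column is missing or None."""
    if not rows:
        return 1.0  # treat an empty batch as failing the check
    return sum(1 for row in rows if row.get(column) is None) / len(rows)


def choose_next_tasks(rows, column="email", max_null_rate=0.1):
    """Pick the downstream branch based on the quality check result."""
    if null_rate(rows, column) <= max_null_rate:
        return ["update_feature_store", "refresh_dashboard"]
    return ["quarantine_batch", "alert_data_team"]


# A clean batch follows the normal path; a batch with too many nulls is diverted.
clean_batch = [{"email": f"user{i}@example.com"} for i in range(10)]
dirty_batch = [{"email": None}, {"email": "a@example.com"}]

clean_path = choose_next_tasks(clean_batch)
dirty_path = choose_next_tasks(dirty_batch)
```

An orchestrator would take the returned task names and trigger only that branch, which is roughly what branching operators in workflow engines do.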

When You Need Both Approaches

You need both when your platform ingests dozens of disparate sources, then feeds transformations, reverse-ETL jobs, and real-time dashboards. Cron alone can't handle the cascade of downstream tasks triggered by each load. Data volume and team count are scaling fast, and separating movement from coordination keeps ownership clear. You're embracing the modern data stack, where best-of-breed tools communicate through standardized hooks and APIs.
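To see why a fixed cron schedule struggles with cascades, consider what has to happen when a single load finishes. The sketch below, with a hypothetical `DOWNSTREAM` map and made-up task names, walks the graph to find every task that a completed load should eventually trigger:

```python
from collections import deque

# Hypothetical map of task -> tasks that should start once it finishes.
DOWNSTREAM = {
    "load_orders": ["transform_orders"],
    "transform_orders": ["reverse_etl_crm", "refresh_sales_dashboard"],
    "load_ads": ["transform_ads"],
    "transform_ads": ["refresh_marketing_dashboard"],
}


def tasks_triggered_by(finished_task, downstream=DOWNSTREAM):
    """Return every task downstream of finished_task, in breadth-first order."""
    triggered = []
    seen = {finished_task}
    queue = deque([finished_task])
    while queue:
        current = queue.popleft()
        for child in downstream.get(current, []):
            if child not in seen:
                seen.add(child)
                triggered.append(child)
                queue.append(child)
    return triggered


cascade = tasks_triggered_by("load_orders")
```

With cron, each of these tasks would need its own guessed start time; an event-driven trigger starts them as soon as their upstream work completes. This walk is a simplification: it ignores tasks that wait on several upstream jobs at once, which a real orchestrator must also track.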

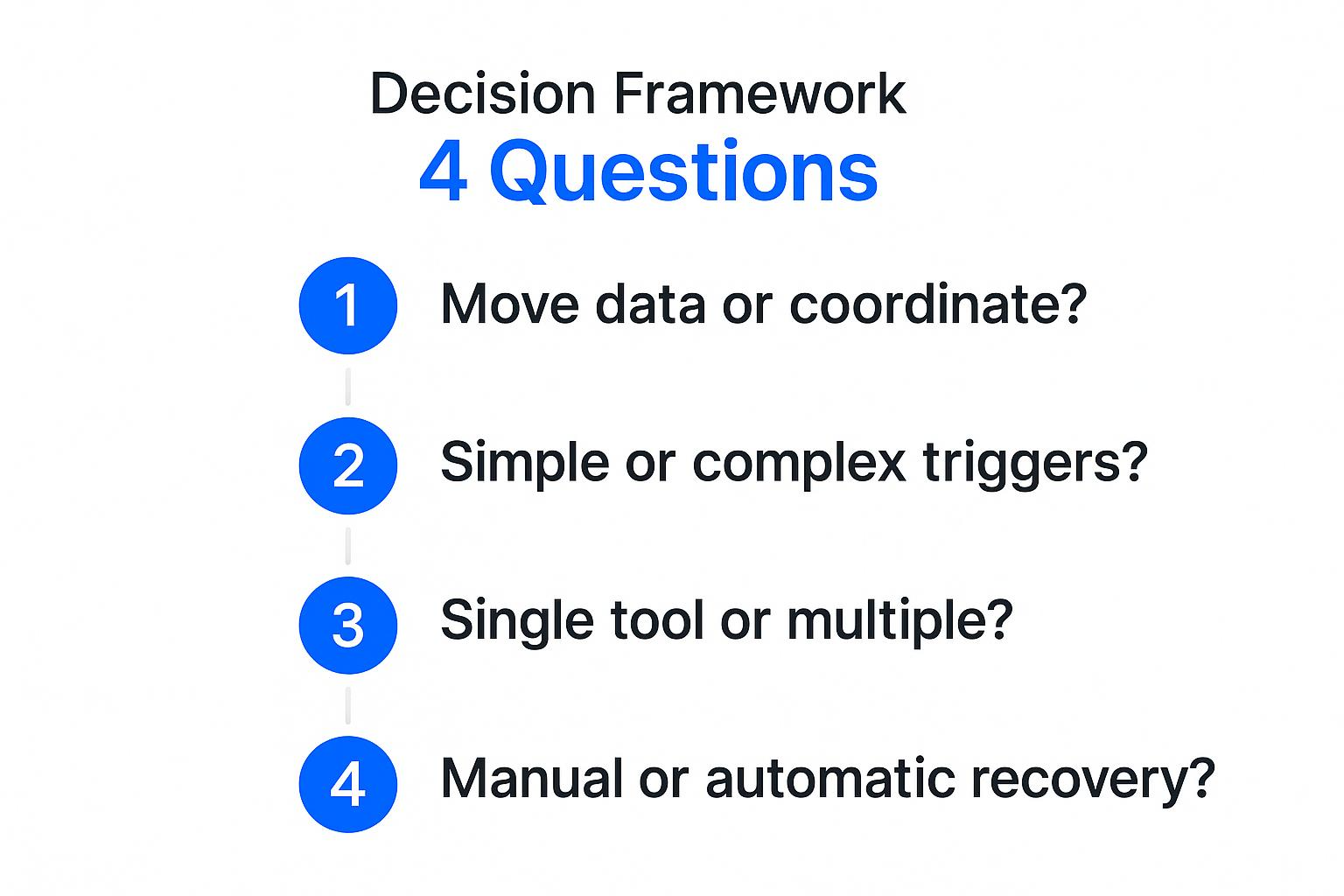

What Should You Choose for Your Team?

Start by framing the decision around your most painful bottleneck. If getting data from dozens of sources into a single warehouse keeps you up at night, you need raw horsepower in extraction and loading. When coordinating complex transformations and downstream refreshes creates chaos, workflow management brings peace of mind.

Choose ELT-focused platforms when:

- Data movement between systems is your primary challenge

- You need a vast connector catalog to avoid custom extract scripts

- Schema drift and data quality issues dominate your time

- You run batch workloads where simplicity and reliability matter most

Choose workflow coordination when:

- Pipelines span multiple systems with complex dependencies

- Feature engineering jobs must finish before scoring models

- Finance reports must wait for reconciliation jobs to complete

- You need sophisticated schedulers, conditional branches, and global error handling

You need both when:

- Your platform ingests dozens of sources feeding transformations and dashboards

- Teams are scaling fast and need clear ownership boundaries

- Modern data stack tools require standardized hooks and APIs

Organizational context shapes decisions:

- Small teams prefer single platforms minimizing operational overhead

- Large enterprises favor specialized tools with clear ownership boundaries

- Your team's technical fluency determines whether you can build and run coordination yourself or need a managed solution

Growth planning requires software with open APIs, modular add-ons, and open-source foundations that avoid painful migrations when requirements evolve.

Common evolution patterns:

- Connector-first strategy: Start with tools offering 600+ connectors, add external scheduling when workflows mature

- Workflow-first strategy: Begin with coordination engines having rich data operators, layer in dedicated ELT later

- Hybrid evolution: Graduate from all-in-one solutions to best-of-breed pairs connected through APIs

Airbyte fits across all three patterns with its open-source core, 600+ connector ecosystem, and native hooks for Airflow or Prefect.

Choose platforms that excel at your primary bottleneck while leaving doors open for tomorrow's complexity. Success comes from matching specific challenges to the right capabilities, whether that's robust data movement, sophisticated coordination, or integrated platforms handling both.

Frequently Asked Questions

1. What is the main difference between ETL and orchestration?

ETL focuses on the data itself—extracting, transforming, and loading it into a destination. Orchestration focuses on when and how tasks run—scheduling jobs, managing dependencies, and handling retries or failures.

2. Can ETL tools handle orchestration as well?

Some ETL platforms include basic scheduling features, but they are not designed for complex workflows that span multiple systems. For pipelines with branching logic, conditional triggers, or dependencies across tools, a dedicated orchestration solution like Airflow or Prefect is more effective.

3. Do I need ETL, orchestration, or both?

ETL extracts, transforms, and loads data; orchestration manages workflow dependencies, scheduling, and multi-step pipelines. Simple pipelines may need only ETL, but complex, multi-system workflows typically require both for efficiency and reliability.

4. Why do people confuse ETL and orchestration?

The confusion comes from overlap. Many ETL tools offer lightweight scheduling, while some orchestration frameworks provide built-in operators for simple extracts or loads. But the goals differ—ETL is about data movement and transformation, orchestration is about workflow coordination at scale.
