What's the Best Way to Track Data Lineage in ETL Pipelines?

✨ AI Generated Summary

Data lineage provides a detailed map of data flow from source to destination, crucial for faster debugging, compliance, and trust in analytics. Key benefits include:

- Rapid root cause analysis by tracing errors to specific transformations

- Meeting regulatory and audit requirements with automated documentation

- Improved collaboration and reduced incident resolution time across teams

Effective lineage strategies combine automatic metadata capture, code analysis, and governance tailored to pipeline complexity and team size, while success is measured by faster issue resolution, higher data confidence, and streamlined compliance processes.

The revenue dashboard your executives rely on flashes an alarming 30-percent dip. Minutes stretch into hours as you comb through dozens of Airflow tasks, dbt models, and custom Python scripts pulling data from sources like Bing Ads, trying to understand where the numbers went wrong. Eventually you discover a silent schema change in a third-party API (one new nullable column) that cascaded through your ETL pipeline and rewrote yesterday's figures.

By the time you fix the query, stakeholders have already started questioning every chart you share. The delay wasn't caused by the bug itself; it was the detective work. Without a clear map of how data moved from the API to the warehouse, you had to reverse-engineer each transformation manually.

That map is data lineage: the end-to-end record of where data originates, what transformations it undergoes, and where it lands in your ecosystem. Data lineage tracks every hop, timestamp, and actor in the pipeline, making trust explicit rather than assumed. When implemented correctly, you resolve incidents faster, satisfy audit requests with a click, and restore faith in analytics before skepticism spreads.

When Does Data Lineage Actually Matter?

You rarely think about data lineage until something breaks. A single malformed record can ripple through your dashboards, create spurious correlations that mislead decision-makers, and trigger frantic "where did this come from?" messages.

Debugging and Root Cause Analysis

- Data quality issues that affect business decisions or customer experience

- Pipeline failures requiring quick identification of upstream dependencies

- Performance problems needing impact analysis across dependent systems

- Schema changes that break downstream transformations

Compliance and Governance Requirements

- Regulatory audits requiring data source documentation and transformation logic

- Data privacy compliance (GDPR, CCPA) demanding deletion and modification tracking

- Financial reporting requiring audit trails for data used in regulatory filings

- Risk management needing understanding of data dependencies and single points of failure

Organizational Scale Challenges

- Multiple teams working on shared data pipelines without central coordination

- Complex transformation logic spanning multiple tools and systems

- Legacy systems where original documentation has been lost

- Frequent schema evolution across many data sources

With lineage in place, you can trace a malformed record from the KPI all the way back to the raw source in seconds instead of hours. Column-level lineage pinpoints exactly which transformation introduced an error, turning a needle-in-a-haystack search into a guided tour.

The same traceability shields you during pipeline failures. When a nightly job stalls, lineage diagrams reveal every upstream dependency. You can evaluate blast radius and prioritize fixes before customers notice.
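As a rough illustration of both ideas (tracing a KPI back to its sources and estimating blast radius), lineage can be modeled as a directed graph and walked in either direction. This is a minimal sketch: the dataset names and edges below are hypothetical, and a real catalog would supply the graph.

```python
from collections import deque

# Hypothetical lineage edges: each key feeds the datasets listed as its value.
DOWNSTREAM = {
    "bing_ads_api": ["raw.ad_spend"],
    "raw.ad_spend": ["stg.ad_spend"],
    "stg.ad_spend": ["mart.revenue", "mart.marketing_roi"],
    "crm_db": ["stg.orders"],
    "stg.orders": ["mart.revenue"],
    "mart.revenue": ["dashboard.exec_revenue"],
}

def invert(graph):
    """Build the reverse mapping: dataset -> datasets it reads from."""
    upstream = {}
    for source, targets in graph.items():
        for target in targets:
            upstream.setdefault(target, []).append(source)
    return upstream

def reachable(graph, start):
    """Breadth-first walk returning every node reachable from start."""
    seen, queue = set(), deque([start])
    while queue:
        node = queue.popleft()
        for neighbor in graph.get(node, []):
            if neighbor not in seen:
                seen.add(neighbor)
                queue.append(neighbor)
    return seen

UPSTREAM = invert(DOWNSTREAM)

# Root cause analysis: everything the executive dashboard depends on.
sources_of_kpi = reachable(UPSTREAM, "dashboard.exec_revenue")

# Impact analysis: everything affected if raw.ad_spend is wrong or late.
blast_radius = reachable(DOWNSTREAM, "raw.ad_spend")
```

The same traversal answers both questions; only the direction of the edges changes.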

Decision Framework Questions:

- How long does it currently take to debug data quality issues?

- What compliance or audit requirements demand data traceability?

- How many people understand your critical data transformations?

- What would happen if key team members left tomorrow?

If the honest answers make you uneasy, it's time to treat data lineage as essential infrastructure, not a nice-to-have insurance policy.

What Are the Different Approaches to Lineage Tracking?

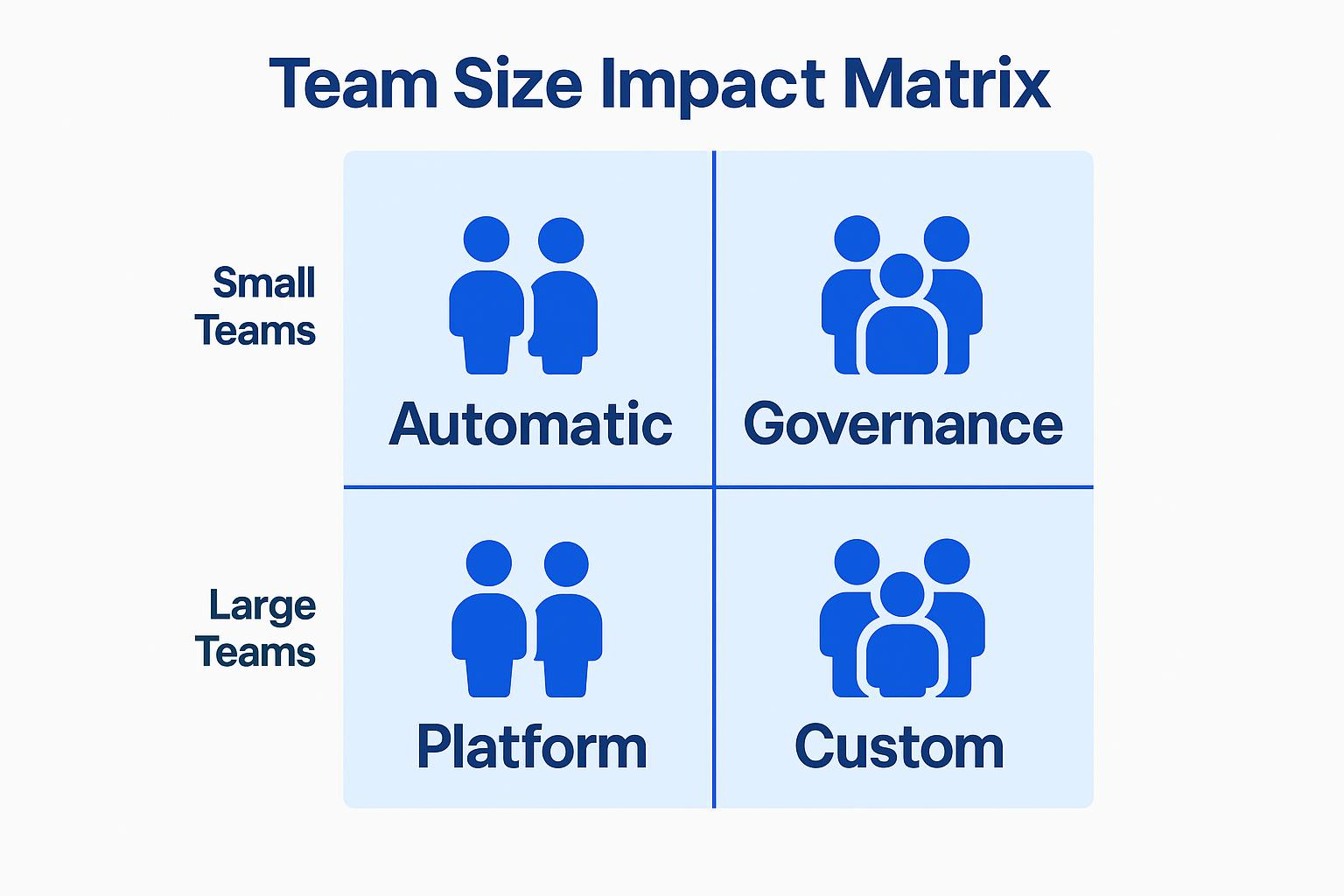

Choosing how to capture lineage involves matching the right mix of automation, code analysis, and governance to your specific pipelines. Here are four core approaches, each with distinct strengths and trade-offs.

Automatic Metadata Capture

Modern ETL tools and ELT platforms like Airbyte emit metadata during data movement operations. This metadata can be pulled into catalogs to show basic source-to-destination mappings. You can enhance this by reading query logs from Snowflake or BigQuery to reconstruct transformation steps, or pair schema registries with CDC streams to track column changes over time.

Benefits:

- Low maintenance overhead for platform-managed operations

- Broad platform coverage that rarely misses standard transformations

Limitations:

- May miss custom code, external scripts, or complex business logic

- Remains blind to legacy processes outside the platform
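To make the query-log idea concrete, here is a deliberately naive sketch that recovers source-to-target edges from logged SQL text. The log entries and table names are invented for illustration, and the regular expressions only handle simple statements; a production setup would more likely rely on a proper SQL parser or the warehouse's own lineage metadata.

```python
import re

# Naive patterns: adequate for simple statements, not a real SQL parser.
TARGET_RE = re.compile(
    r"\b(?:INSERT\s+INTO|CREATE\s+(?:OR\s+REPLACE\s+)?TABLE)\s+([\w.]+)",
    re.IGNORECASE,
)
SOURCE_RE = re.compile(r"\b(?:FROM|JOIN)\s+([\w.]+)", re.IGNORECASE)

def edges_from_query(sql):
    """Return (source, target) pairs for one statement that writes a table."""
    target = TARGET_RE.search(sql)
    if target is None:
        return set()  # plain SELECTs write nothing, so they add no edges
    written = target.group(1)
    return {(src, written) for src in SOURCE_RE.findall(sql) if src != written}

# Hypothetical query log entries (made-up sample data).
QUERY_LOG = [
    "INSERT INTO stg.ad_spend SELECT * FROM raw.ad_spend",
    "CREATE OR REPLACE TABLE mart.revenue AS "
    "SELECT o.id, a.cost FROM stg.orders o "
    "JOIN stg.ad_spend a ON o.campaign_id = a.campaign_id",
    "SELECT count(*) FROM mart.revenue",
]

edges = set()
for statement in QUERY_LOG:
    edges |= edges_from_query(statement)
```

Anything the pattern cannot see (CTEs, dynamic SQL, scripts running outside the warehouse) is exactly the kind of gap listed under the limitations above.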

Code-Based Lineage Generation

When transformations live in dbt models, Spark jobs, or SQL files, static analysis tools parse that code and map data dependencies down to the column level. Teams can add inline annotations or use APIs so every microservice reports the datasets it touches.

Benefits:

- Captures custom business logic that automatic scanners miss

- Column-level precision for detailed dependency tracking

Limitations:

- Requires discipline to keep code parseable and annotated

- Lineage can drift out of sync when refactoring happens without updating documentation
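One way to picture the inline-annotation idea is a small decorator that records which datasets a job reads and writes. This is a sketch under stated assumptions: the `lineage` decorator, the `LINEAGE_REGISTRY` list, and the dataset names are all hypothetical, and the list stands in for whatever catalog API a team actually uses.

```python
# Hypothetical in-memory registry standing in for a catalog API.
LINEAGE_REGISTRY = []

def lineage(inputs, outputs):
    """Declare the datasets a job reads and writes, next to its code."""
    def decorator(func):
        LINEAGE_REGISTRY.append(
            {"job": func.__name__, "inputs": list(inputs), "outputs": list(outputs)}
        )
        return func  # the job itself is left unchanged
    return decorator

@lineage(inputs=["stg.orders", "stg.ad_spend"], outputs=["mart.revenue"])
def build_revenue(orders, ad_spend):
    """Toy transformation: order totals minus ad spend."""
    return sum(orders) - sum(ad_spend)

net_revenue = build_revenue([100, 50], [30])
```

Because the declaration lives beside the code, a refactor that changes the inputs without touching the annotation is the drift risk described in the limitations.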

Hybrid Observability Approaches

Real-world stacks mix managed ELT with custom code. The pragmatic solution combines passive metadata harvesting with event-driven hooks: Spark jobs can be configured to emit lineage events using tools like OpenLineage, and dbt models generate artifacts (such as manifest.json) that are used by external tools to update your catalog.

Benefits:

- Flexible enough for edge cases while staying automated for bulk workloads

- Creates a unified view that correlates performance with data flows

Limitations:

- Integration work can sprawl across multiple systems

- Needs clear ownership to prevent duplicate events or missing links
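As an example of harvesting a dbt artifact, the sketch below reads model dependencies out of a manifest. The inline JSON is a heavily trimmed, made-up stand-in for a real manifest.json; it assumes the `nodes` and `depends_on.nodes` layout, and real manifests carry many more fields whose shape can vary by dbt version.

```python
import json

# Trimmed, hypothetical stand-in for a dbt manifest.json file.
MANIFEST_JSON = """
{
  "nodes": {
    "model.shop.stg_orders": {
      "resource_type": "model",
      "depends_on": {"nodes": ["source.shop.crm.orders"]}
    },
    "model.shop.revenue": {
      "resource_type": "model",
      "depends_on": {"nodes": ["model.shop.stg_orders"]}
    }
  }
}
"""

def edges_from_manifest(manifest):
    """Return (parent, child) pairs for every declared dependency."""
    edges = []
    for node_id, node in manifest.get("nodes", {}).items():
        for parent in node.get("depends_on", {}).get("nodes", []):
            edges.append((parent, node_id))
    return edges

manifest_edges = edges_from_manifest(json.loads(MANIFEST_JSON))
```

In practice the file would be loaded from the dbt target directory after a run, and the resulting edges pushed to whichever catalog the team has chosen.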

Manual Documentation and Governance

Spreadsheets, wikis, and UML diagrams still appear in audits, especially for mainframe jobs or vendor black boxes. Teams formalize this with governance workflows in which every schema change requires an updated lineage diagram before merging.

Benefits:

- Total control over documentation scope and format

- Handles legacy systems where automation can't reach

Limitations:

- High maintenance overhead that scales poorly

- Goes stale quickly when hot-fixes bypass the process

Implementation Patterns:

- Start simple by enabling your platform's built-in lineage for pipelines that power executive dashboards

- Add precision by layering static code analysis on critical transformation repositories

- Scale with governance by enforcing pull-request checks that validate lineage coverage
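A pull-request check for lineage coverage could look roughly like the following. This is only a sketch: the model names, the 80 percent threshold, and the notion of a "documented" model are assumptions chosen for illustration, and a real check would read both lists from the repository and the catalog.

```python
def lineage_coverage(all_models, documented_models):
    """Fraction of models that have lineage recorded."""
    all_models = set(all_models)
    if not all_models:
        return 1.0  # nothing to cover
    return len(all_models & set(documented_models)) / len(all_models)

def check_coverage(all_models, documented_models, threshold=0.8):
    """Return (passed, coverage, missing) for use in a CI step."""
    coverage = lineage_coverage(all_models, documented_models)
    missing = sorted(set(all_models) - set(documented_models))
    return coverage >= threshold, coverage, missing

# Hypothetical inputs for one pull request.
models_in_repo = ["stg_orders", "stg_ad_spend", "revenue", "marketing_roi"]
models_with_lineage = ["stg_orders", "stg_ad_spend", "revenue"]

passed, coverage, missing = check_coverage(models_in_repo, models_with_lineage)
```

A CI job could fail the build when `passed` is false and print `missing` so the author knows which models still need lineage.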

How Do You Choose the Right Lineage Strategy?

When every platform claims to "automatically" map lineage, it's easy to buy tools before you understand what you actually need. Start with a decision framework that weighs your team's size, pipeline complexity, compliance pressures, and the business impact of bad data.

Team Size and Technical Sophistication

- Small teams benefit from platform-provided automatic lineage with minimal configuration

- Large teams may need sophisticated governance processes and custom integration

- Technical sophistication determines ability to implement and maintain complex lineage systems

Data Pipeline Complexity

- Simple ELT workflows: Platform automatic tracking usually sufficient

- Complex multi-tool pipelines: Hybrid approaches combining automatic and manual tracking

- Legacy systems with custom code: May require significant custom lineage implementation

Compliance and Governance Requirements

- Basic audit needs: Automatic platform lineage often adequate

- Regulatory compliance: May require formal documentation and approval processes

- Financial or healthcare: Might need detailed audit trails and change tracking

Business Impact and Risk Tolerance

- High-impact data products need comprehensive lineage for quick issue resolution

- Experimental or internal analytics may accept gaps in lineage coverage

- Customer-facing systems typically require detailed understanding of data dependencies

Growth and Evolution Planning

- Consider how lineage needs will change as data volume and team size grow

- Evaluate ability to migrate between lineage approaches as requirements evolve

- Plan for integration with future tools and platform changes

Common Success Patterns:

- Teams start with platform-provided lineage and add custom tracking where gaps exist

- Organizations implement governance processes as team size and complexity grow

- Successful lineage strategies focus on solving specific problems rather than comprehensive documentation

What Implementation Challenges Should You Expect?

Even the best-designed lineage strategy runs into bumps once you move from slide decks to production pipelines. By knowing where those bumps appear, you can budget time, tooling, and political capital before they derail the project.

Technical Implementation Challenges

- Complex transformations that span multiple tools and systems

- Legacy code without documentation that requires reverse engineering

- Performance overhead from lineage tracking on high-volume data processing

- Integration complexity between different lineage tools and data platforms

Organizational and Process Challenges

- Developer resistance to additional documentation requirements

- Keeping lineage documentation current as pipelines evolve rapidly

- Balancing automation with manual processes for edge cases

- Managing lineage information across different teams and tools

Practical Mitigation Strategies

- Start with high-value, business-critical pipelines rather than trying to track everything

- Focus on lineage that solves specific problems (debugging, compliance) rather than abstract completeness

- Integrate lineage tracking into existing development workflows rather than adding separate processes

- Use platform capabilities to minimize custom development and maintenance overhead

Legacy code compounds the problem. Scripts written years ago, such as legacy SSIS packages, rarely include comments, and their owners may have left the company. Re-creating lineage often means reverse-engineering SQL or Python ETL jobs just to learn what each job does.

Technology alone doesn't solve everything. Developers push back when asked to add annotations or update wiki pages they suspect no one reads. Because modern pipelines change daily, manually maintained lineage drifts out of sync almost immediately, leading to the "why bother?" spiral.

How Do You Measure Lineage Success?

The easiest way to tell whether your lineage program is paying off is to measure how quickly and confidently you can answer data questions that once stalled the business.

Operational Metrics

- Time to resolve data quality issues and pipeline failures

- Percentage of data transformations with documented lineage

- Number of manual lineage lookup requests versus self-service capability

- Audit preparation time and compliance process efficiency

Business Impact Indicators

- Stakeholder confidence in data quality and reliability

- Reduced time spent in incident response and root cause analysis

- Faster onboarding for new team members working with data pipelines

- Improved collaboration between data teams and business stakeholders

Implementation Health Metrics

- Lineage coverage across critical data pipelines and transformations

- Accuracy of lineage documentation compared to actual data flows

- Developer adoption of lineage tools and processes

- Integration success between lineage systems and existing tools
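Lineage accuracy can be approximated by comparing the edges your documentation claims with the edges actually observed in production, for example from query logs. The sketch below uses invented edge sets; which source counts as "observed" is an assumption each team has to make for itself.

```python
def lineage_accuracy(documented, observed):
    """Compare documented lineage edges with edges seen in real data flows."""
    documented, observed = set(documented), set(observed)
    confirmed = documented & observed
    return {
        # Share of documented edges that really exist.
        "precision": len(confirmed) / len(documented) if documented else 1.0,
        # Share of real edges that are documented.
        "recall": len(confirmed) / len(observed) if observed else 1.0,
        "stale": sorted(documented - observed),         # documented, never observed
        "undocumented": sorted(observed - documented),  # observed, never documented
    }

# Made-up sample edges as (source, target) pairs.
documented_edges = [("a", "b"), ("b", "c"), ("x", "c")]
observed_edges = [("a", "b"), ("b", "c"), ("b", "d")]

report = lineage_accuracy(documented_edges, observed_edges)
```

Stale edges point at documentation to clean up, while undocumented edges point at pipelines the lineage system does not yet see.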

When an alert fires, the clock starts ticking. Measure the average time it takes your team to trace an anomaly back to its source table or transformation. The ratio of manual lineage look-ups to self-service searches is another key indicator: fewer Slack pings to the data team indicate better discoverability.
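The time-to-trace figure is simple to compute once incidents are logged with two timestamps. The sketch below assumes a hypothetical incident log of (alert time, root cause found) pairs; the values are made up for illustration.

```python
from datetime import datetime
from statistics import mean

# Hypothetical incident log: (alert fired, root cause identified).
INCIDENTS = [
    ("2024-03-01T09:00", "2024-03-01T13:00"),
    ("2024-03-10T22:30", "2024-03-11T00:30"),
    ("2024-03-15T08:00", "2024-03-15T08:45"),
]

def mean_hours_to_trace(incidents):
    """Average hours between an alert and locating the faulty source."""
    durations = [
        (datetime.fromisoformat(found) - datetime.fromisoformat(alerted)).total_seconds() / 3600
        for alerted, found in incidents
    ]
    return mean(durations)

average_hours = mean_hours_to_trace(INCIDENTS)
```

Tracking this number per quarter, before and after lineage is rolled out, gives a like-for-like view of whether tracing is actually getting faster.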

Lineage succeeds when non-technical stakeholders trust the numbers in their dashboards. Look for higher data quality confidence scores in quarterly surveys and a reduction in incident resolution time. Onboarding time for new analysts provides another strong signal: access to visual data flow maps can reduce weeks of shadowing to days of exploration.

If you still spend hours hunting through SQL files or stale wikis, your metrics will expose the drag on productivity. Start by instrumenting the pipelines that power executive reporting; most modern ELT platforms already emit the metadata you need. As coverage expands, layer on deeper column-level insights and process controls.

Turn Metrics Into Momentum

Measuring lineage success isn’t just about ticking off metrics. It’s about proving that your data program saves time, prevents issues, and builds trust across the business. When your team can trace problems quickly, stakeholders rely on dashboards without hesitation, and onboarding happens faster, you know your lineage efforts are paying off.

If you want to scale those results without heavy manual work, Airbyte gives you built-in lineage tracking to get started right away.

Ready to implement automatic lineage tracking? Start with Airbyte to see how platform-provided lineage capabilities reduce custom implementation overhead while covering your most critical data pipelines.

Frequently Asked Questions

1. Why does data lineage matter for debugging?

When a schema change or data quality issue occurs, lineage provides a map showing every upstream and downstream dependency. Instead of manually tracing through SQL files or scripts, you can follow the lineage diagram to identify exactly where an error was introduced, reducing resolution time from hours to minutes.

2. How does data lineage help with compliance?

Regulations like GDPR, CCPA, and financial reporting standards require audit trails of where data came from, how it was transformed, and who accessed it. Lineage supplies much of this documentation automatically, helping teams satisfy auditors without weeks of manual effort.

3. Do small teams really need data lineage?

Yes, but at a different scale. Small teams benefit from automatic lineage features built into their ETL/ELT platforms because they reduce firefighting time without adding heavy governance processes. Large teams may need more formal governance, but even a lightweight lineage map builds trust in dashboards.

4. What’s the difference between automatic and manual lineage tracking?

Automatic lineage tracking captures data flows via metadata, logs, or tool integrations, offering scalability and minimal manual effort. Manual lineage relies on human documentation of transformations, covering edge cases but being time-consuming and prone to becoming outdated.

5. How do you measure if lineage is working?

The clearest sign is reduced time to resolve incidents and increased stakeholder trust in dashboards. Supporting metrics include:

- Mean time to debug data quality issues

- Coverage of transformations with lineage documentation

- Audit prep time before compliance reviews

- Fewer ad-hoc requests asking, “where did this number come from?”
