12 Best Airflow Alternatives for Data Pipelines 2026


In real-world applications, data usually resides in disparate sources. Scattered across several sources, data is hard to analyze as a whole and loses much of its value. Extracting it through data pipelines and loading it into an analytical platform, however, lets you generate actionable insights.

Popular data orchestration tools like Airflow let you automate data integration, transformation, and loading into a data warehouse. However, Airflow has several limitations, including scalability and performance issues. To overcome them, you can explore Apache Airflow alternatives.

If you are wondering which data orchestration tool is the best, you have come to the right place. This article discusses Apache Airflow's limitations, its alternatives, and the considerations you must know before selecting an Airflow alternative.

What Is Airflow?

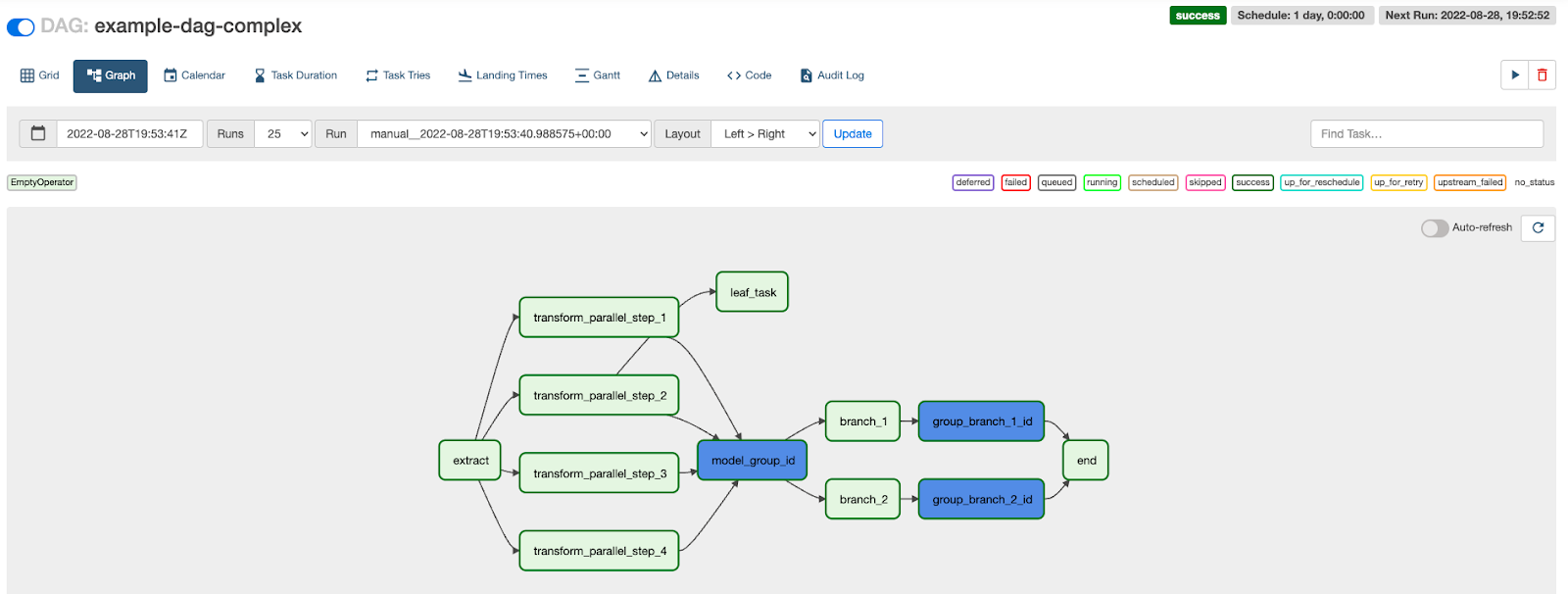

Airflow is an open-source platform for developing, scheduling, and monitoring complex batch-oriented workflows. You define workflows in Python and monitor them through a web interface. Airflow represents each workflow as a DAG (Directed Acyclic Graph), which lets it schedule tasks across multiple servers while ensuring that each task runs in the correct order.

Airflow defines workflows as code, enabling multiple features like:

- Dynamic pipeline generation.

- Operators to connect with various technologies.

- Parameterization to provide runtime configuration to tasks.
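To make the DAG idea concrete, here is a minimal sketch that uses only Python's standard library (graphlib, Python 3.9+) rather than Airflow itself. The task names are hypothetical. It shows how declaring dependencies determines a valid execution order and which tasks could run in parallel; in Airflow you would express the same dependencies with operators and the >> syntax.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline: each task maps to the set of tasks it depends on.
pipeline = {
    "extract_orders": set(),
    "extract_customers": set(),
    "transform": {"extract_orders", "extract_customers"},
    "load_warehouse": {"transform"},
}


def execution_batches(graph):
    """Group tasks into batches; tasks in the same batch do not depend on
    each other, so a scheduler could run them in parallel."""
    sorter = TopologicalSorter(graph)
    sorter.prepare()  # raises graphlib.CycleError if the graph has a cycle
    batches = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        batches.append(ready)
        sorter.done(*ready)  # mark the batch finished to unlock dependents
    return batches


for batch in execution_batches(pipeline):
    print(batch)
# ['extract_customers', 'extract_orders']
# ['transform']
# ['load_warehouse']
```

The acyclic requirement is what makes an ordering possible at all: if two tasks depended on each other, prepare() would reject the graph.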

12 Alternatives to Airflow

Let’s look at the top 12 Apache Airflow alternatives in 2026.

1. Airbyte

Airbyte is a robust data integration and replication platform. Introduced in 2020, it simplifies moving data from multiple sources into a destination, typically a central repository such as a data warehouse or a data lake.

Airbyte’s easy-to-use user interface offers 600+ pre-built data connector options. If the connector you are looking for is unavailable, Airbyte provides the option to create custom ones using the Connector Development Kit.

Why Airbyte?

Data integration enhances the data orchestration process by improving your dataset’s consistency and accuracy. But why consider Airbyte for it? Here are the benefits that make the platform stand out:

- Powering GenAI Workflows: Airbyte offers prominent vector stores as destinations, including Pinecone and Weaviate, to load unstructured data. Its RAG-specific transformation support enables you to perform advanced operations like chunking and embedding.

- Advanced Security: Airbyte supports major security standards, such as HIPAA, SOC 2, ISO 27001, and more, making it one of the most secure Airflow alternatives. Additionally, Airbyte lets you gain control over sensitive data by offering centralized multi-tenant management with self-service capabilities.

- Vast Community: Airbyte has an actively growing community of contributors, which enables access to community-driven plugins, connectors, and resources.

Pricing

Airbyte offers multiple plans with varying pricing options, including:

- An open-source plan that is free to use.

- A Cloud plan priced by usage: the volume of data replicated from database sources and the number of rows replicated from API sources.

- Team and Enterprise plans that you can access by contacting the sales team.

Use Cases

- You can effortlessly extract conversations from Intercom and store them in a data warehouse like Google BigQuery or Amazon Redshift. This migration enables you to understand customer segmentation and provide excellent customer service.

- Extracting data from your Shopify account into a data warehouse using Airbyte can benefit your business. You can then apply machine learning to this data to forecast purchase orders.

Migrating to Airbyte

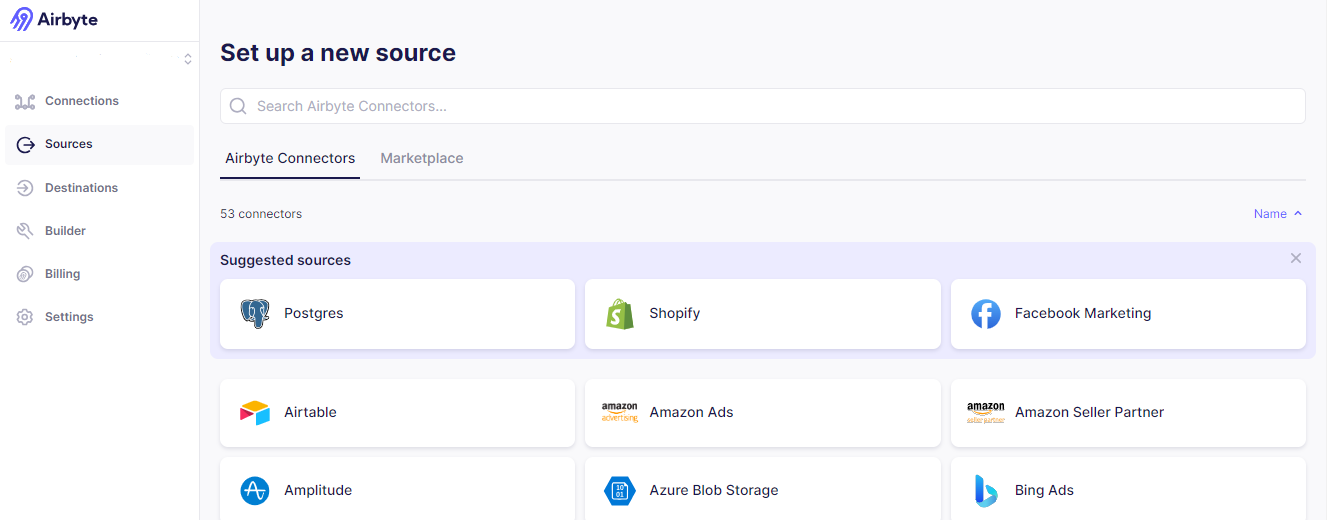

Migrating data from any source with Airbyte is a two-step process. First, log in to your Airbyte account to open the dashboard, then click Sources in the left panel to configure a source.

Next, click Destinations to configure a destination and complete your data pipeline. This is how easy it is to migrate your data using Airbyte.

2. Prefect

Prefect is a data orchestration platform, available as open-source software and as the managed Prefect Cloud service, that lets you define and automate workflows in Python and manage them through its API. You can containerize Prefect workflows with Docker and deploy them on a Kubernetes cluster.

A Prefect flow plays a role similar to an Airflow DAG: it describes the tasks and their dependencies. Unlike Airflow, where parallelism is configured through executors, Prefect lets you submit tasks for concurrent execution directly from Python code inside a flow.

Why Prefect?

Here are a few advantages of using Prefect as an Airflow alternative:

- Task Library: Prefect provides a large task library covering many everyday tasks, such as sending tweets, running shell scripts, and managing Kubernetes jobs.

- State Objects: Prefect provides rich state objects containing information about the status of a task or flow run. This feature enables you to examine the current and historical states of tasks and flows.

- Caching: With Prefect’s caching, you can reuse the results of expensive tasks instead of recomputing them every time a flow runs.
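The caching idea can be sketched without Prefect installed. The hypothetical cached_task decorator below keys each result on a hash of the task's name and inputs and expires entries after a set time, which is the same idea behind Prefect's input-based cache keys. It is a per-process, in-memory illustration, not Prefect's API: Prefect configures caching through options on its @task decorator, and the exact parameter names differ between Prefect versions, so check the documentation for the version you run.

```python
import hashlib
import json
import time
from functools import wraps


def cached_task(expiration_seconds):
    """Hypothetical decorator: reuse results for identical inputs until they expire."""
    def decorator(fn):
        cache = {}  # cache key -> (timestamp, result)

        @wraps(fn)
        def wrapper(*args, **kwargs):
            # Build a deterministic key from the function name and its inputs.
            payload = json.dumps([fn.__name__, args, kwargs], sort_keys=True, default=str)
            key = hashlib.sha256(payload.encode()).hexdigest()
            now = time.monotonic()
            entry = cache.get(key)
            if entry is not None and now - entry[0] < expiration_seconds:
                return entry[1]  # cache hit: skip the expensive work
            result = fn(*args, **kwargs)
            cache[key] = (now, result)
            return result

        return wrapper
    return decorator


call_count = 0


@cached_task(expiration_seconds=3600)
def expensive_aggregation(table, day):
    global call_count
    call_count += 1  # count how often the real work actually runs
    return f"aggregated {table} for {day}"


expensive_aggregation("orders", "2026-01-01")
expensive_aggregation("orders", "2026-01-01")  # same inputs: served from cache
expensive_aggregation("orders", "2026-01-02")  # new inputs: recomputed
print(call_count)  # 2
```

A real orchestrator typically persists cached results so they survive across runs and machines; the in-memory dictionary here is only meant to show how the cache key is derived.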

Pricing

Prefect suits teams that have the budget for a pricier, managed workflow orchestrator. It offers three plans:

- Sandbox is a free-to-use version with limited features.

- Pro version costs $1,850 per month.

- Enterprise-level plan for which you can contact its sales team.

Use Cases

The prominent use cases of Prefect are:

- Prefect, in combination with other applications such as Ensign and River, can enable you to build real-time sentiment analysis applications.

- With Prefect, you can develop event-driven data pipelines, automating your data processing journey based on specific events.

Migrating to Prefect

You can connect Prefect to storage on any major cloud provider, including AWS, Azure, and GCP, and read and write data from Python flows. This involves:

- Installing relevant Prefect integration libraries.

- Registering a block type.

- Creating a storage bucket, a credentials block, and a storage block.

- Writing and reading data from the flow.

3. Dagster

Dagster is a data orchestration tool that helps you manage data pipelines from development to production. Like Airflow and Prefect, it uses Python to define pipelines. However, Dagster introduces asset-centric development, which lets you focus on the data assets you deliver, such as tables, files, or models, rather than on executing individual tasks.
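A rough way to picture asset-centric development: each function produces a named data asset, and its parameter names declare which upstream assets it needs. Dagster's @asset decorator infers dependencies from parameter names in a similar way, but the register_asset decorator and materialize_all function below are hypothetical stand-ins built on the standard library, not Dagster's API.

```python
import inspect
from graphlib import TopologicalSorter

ASSETS = {}


def register_asset(fn):
    """Hypothetical registry: the function name is the asset name, and its
    parameter names are the upstream assets it depends on."""
    ASSETS[fn.__name__] = fn
    return fn


@register_asset
def raw_orders():
    return [{"id": 1, "amount": 120.0}, {"id": 2, "amount": 80.0}]


@register_asset
def large_orders(raw_orders):
    return [order for order in raw_orders if order["amount"] > 100]


@register_asset
def order_report(raw_orders, large_orders):
    return {"total": len(raw_orders), "large": len(large_orders)}


def materialize_all(assets):
    """Compute every asset after its upstream assets, passing values by name."""
    graph = {name: set(inspect.signature(fn).parameters) for name, fn in assets.items()}
    values = {}
    for name in TopologicalSorter(graph).static_order():
        upstream = graph[name]
        values[name] = assets[name](**{dep: values[dep] for dep in upstream})
    return values


print(materialize_all(ASSETS)["order_report"])  # {'total': 2, 'large': 1}
```

The point of the design is that you describe what each asset is made from, and the ordering falls out of those declarations instead of being wired task by task.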

Why Dagster?

The benefits of using Dagster as an Airflow alternative are:

- Atomic Deployment: Dagster offers atomic deployment, enabling you to update the repository's code without restarting the system. Atomic deployment provides a robust method to reload code continuously.

- Flexibility: Dagster provides you with the freedom to effectively allocate compute resources. It supports horizontal scalability to execute run-specific computation processes independently.

- Easy Spin-up: Dagster's easy spin-up enables you to define a pipeline with a few lines of code and load it into a graphical environment for inspection and execution.

Pricing

Dagster offers three pricing plans, including:

- A Solo package for $10 a month.

- A Starter package for $100 a month.

- A Pro package for which you can contact Dagster sales.

Use Cases

Here are some use cases of Dagster:

- You can define pipelines with a YAML DSL on top of Dagster’s data platform to deliver analytics tailored to specific business stakeholders.

- Companies such as Zephyr AI, an AI-driven drug discovery company, can use Dagster to perform predictive analysis on patient data and develop effective medicinal practices.

Migrating to Dagster

There are two ways to migrate from Airflow to Dagster, depending on how your pipelines are built:

- Migrating pipelines that use the TaskFlow API.

- Migrating containerized pipelines.

4. Luigi

Luigi is a Python package developed by Spotify for building complex pipelines of batch jobs. It handles dependency resolution and workflow management and has built-in support for Hadoop jobs that need heavy computational resources across clusters. A pipeline can chain many kinds of tasks, such as a Hadoop job in Java, a table dump, a Python snippet, or a Hive query. Stitching these tasks together lets you process data efficiently through a pipeline of jobs.
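Luigi models each step as a task with requires(), output(), and run() methods, and it treats a task as complete once its output exists, so rerunning a pipeline skips finished work. The sketch below imitates that pattern with the standard library so it runs without Luigi installed; the Task base class, build function, and file names are hypothetical simplifications, not the luigi package, which adds features such as atomic file targets and a central scheduler.

```python
import os
import tempfile


class Task:
    """Hypothetical stand-in for a Luigi-style task (not the luigi package)."""

    def requires(self):
        return []

    def output(self):
        raise NotImplementedError

    def run(self):
        raise NotImplementedError

    def complete(self):
        # As in Luigi, a task counts as done when its output already exists.
        return os.path.exists(self.output())


def build(task, log):
    """Run a task after its dependencies, skipping anything already complete."""
    if task.complete():
        return
    for dependency in task.requires():
        build(dependency, log)
    task.run()
    log.append(type(task).__name__)


WORKDIR = tempfile.mkdtemp()


class DumpTable(Task):
    def output(self):
        return os.path.join(WORKDIR, "orders.csv")

    def run(self):
        with open(self.output(), "w") as f:
            f.write("id,amount\n1,120\n2,80\n")


class SumAmounts(Task):
    def requires(self):
        return [DumpTable()]

    def output(self):
        return os.path.join(WORKDIR, "total.txt")

    def run(self):
        with open(self.requires()[0].output()) as f:
            rows = f.read().splitlines()[1:]  # skip the header row
        total = sum(float(row.split(",")[1]) for row in rows)
        with open(self.output(), "w") as f:
            f.write(str(total))


first_run, second_run = [], []
build(SumAmounts(), first_run)
build(SumAmounts(), second_run)  # outputs exist, so nothing reruns
print(first_run, second_run)  # ['DumpTable', 'SumAmounts'] []
```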

Why Luigi?

Here are a few benefits that set Luigi apart as a powerful Airflow alternative:

- Modularity: Each Luigi task is a Python class that declares its dependencies, its output, and the logic that produces it, which keeps pipelines modular and easy to manage.

- Scalability: Luigi’s central scheduler coordinates workers, prevents the same task from running twice at once, and provides a web UI for visualizing task dependencies.

- Extensibility: Luigi ships with contrib modules for systems such as Hadoop, Hive, and Spark, and you can define your own task and target types to integrate new systems.

Pricing

Luigi is an open-source tool that is entirely free to use.

Use Cases

- Luigi originated at Spotify, where it was built to automate batch jobs for the music streaming service.

- Developers can use Luigi to orchestrate external jobs written in languages such as Scala or R, or built on frameworks such as Spark.

Migrating to Luigi

Follow the steps below to migrate Airflow to Luigi:

- Map out the existing ETL process in Airflow.

- Replace cron jobs with Luigi pipelines, simplifying the code with Luigi tasks so that task execution is clear.

- Build Luigi tasks, creating a base class to reduce redundancy before defining individual tasks.

- Execute the Luigi tasks.

5. Mage AI

Mage AI is a hybrid framework that enables you to automate your data pipelines. It provides a user-friendly method for extracting, transforming, and loading data. Additionally, Mage AI simplifies the developer experience by supporting multiple programming languages and providing a collaborative environment. Its pre-built monitoring and alerting features make it suitable for handling large-scale data.

Why Mage AI?

Let’s discuss a few features of Mage AI as an Airflow alternative:

- Orchestration: Mage AI lets you schedule and manage data pipelines with high-level observability, maintaining transparency.

- Data Streaming: It lets you build streaming pipelines that ingest and transform data from disparate sources into a form compatible with the destination.

- Notebook Editor: Mage AI provides a notebook editor where you can write code using interactive Python, R, and SQL to create data pipelines.

Pricing

Mage AI doesn’t disclose pricing, but it is free to use if you self-host it (for example, on Azure, GCP, AWS, or DigitalOcean).

Use Cases

- With Mage AI, you can visualize your sales data to uncover hidden patterns, enhancing your business decision-making.

- Mage AI’s low learning curve allows you to use pre-built blocks and add custom configurations to create data pipelines that help increase insight generation.

Migrating to Mage AI

Follow these steps to migrate from Airflow to Mage:

- Create a Python script with DAG code to move data to Amazon S3.

- Use AWS CLI to upload this DAG to Airflow and trigger it.

- Use Mage’s mage_ai.io.s3.S3 class to load data from Amazon S3 into Mage AI.

- Configure the S3 connection in Mage using the correct AWS credentials.

- Create a new data loader in the Mage project, select S3 as the source, specify the bucket, and execute the loader.

6. Astronomer

Astronomer is a managed Airflow service that handles the DevOps work, enabling you to focus on creating workflows and data pipelines. You get the capabilities of Airflow without worrying about infrastructure management.

Why Astronomer?

Here’s how Astronomer enhances Airflow’s abilities:

- Local Testing: Astronomer’s Astro CLI lets you run Airflow locally and fits into CI/CD pipelines, which helps eliminate the local testing and debugging issues often encountered with Airflow.

- Customizability: Although Astronomer is a managed service, it offers extensive customization features to meet your workflow requirements.

- Deployment: When it comes to deployment, Astronomer provides flexibility, offering cloud-based and on-premise deployment options.

Pricing

Astronomer offers four different pricing options, including:

- Developer with pay-as-you-go option starting from $0.35/hr.

- Team, Business, and Enterprise plans, for which you can contact Astronomer sales.

Use Cases

The use cases of Astronomer are:

- Astronomer enables you to run an integrated ELT and machine learning pipeline on Stripe data in Airflow.

- With Astronomer, you can automate Retrieval-Augmented Generation by combining the capabilities of Airflow, GPT-4, and Weaviate.

Migrating to Astronomer

To utilize Airflow with Astronomer, follow the given steps:

- Prepare your source Airflow environment for migration.

- Create a deployment on Astronomer.

- Migrate metadata from the source Airflow environment.

- Migrate DAGs and additional components from the source Airflow environment.

- Finally, deploy to Astro and migrate workflows from your source Airflow environment.

7. Kedro

Kedro is a Python framework for building reproducible, maintainable, modular data and machine learning pipelines. It provides standardized project templates, coding standards, and robust deployment options, simplifying the data project journey.

Kedro provides a mechanism called Hooks that lets you inject custom logic at specific points in Kedro’s execution. With Hooks, you can add behaviors to Kedro’s main execution in a consistent manner, such as validating data inputs or tracking machine learning metrics.
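Kedro implements Hooks with the pluggy library and calls hook methods such as before_node_run and after_node_run at fixed points. The standard-library sketch below mimics that idea with a hypothetical Runner class; it is not Kedro's API, and real Kedro hook methods receive more arguments (for example, the node object and the catalog).

```python
class Runner:
    """Hypothetical runner: executes nodes in order and calls optional hook methods."""

    def __init__(self, hooks):
        self.hooks = hooks

    def _call(self, hook_name, **kwargs):
        for hook in self.hooks:
            method = getattr(hook, hook_name, None)
            if method is not None:  # hooks implement only the methods they need
                method(**kwargs)

    def run(self, nodes, data):
        for node_name, fn, input_name, output_name in nodes:
            inputs = data[input_name]
            self._call("before_node_run", node_name=node_name, inputs=inputs)
            data[output_name] = fn(inputs)
            self._call("after_node_run", node_name=node_name, outputs=data[output_name])
        return data


class ValidateInputsHook:
    """Data validation on inputs, one of the behaviors mentioned above."""

    def before_node_run(self, node_name, inputs):
        if any(value < 0 for value in inputs):
            raise ValueError(f"{node_name}: negative values in input")


class MetricsHook:
    """Simple metrics tracking: record how many rows each node produced."""

    def __init__(self):
        self.row_counts = {}

    def after_node_run(self, node_name, outputs):
        self.row_counts[node_name] = len(outputs)


metrics = MetricsHook()
runner = Runner([ValidateInputsHook(), metrics])
nodes = [
    ("double", lambda xs: [x * 2 for x in xs], "raw", "doubled"),
    ("keep_large", lambda xs: [x for x in xs if x > 4], "doubled", "large"),
]
result = runner.run(nodes, {"raw": [1, 2, 3]})
print(result["large"], metrics.row_counts)  # [6] {'double': 3, 'keep_large': 1}
```

Because the pipeline code never mentions validation or metrics, you can add or remove those behaviors without touching the nodes themselves.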

Why Kedro?

Here are some key features that make Kedro a great Airflow alternative:

- Add Plugins: Kedro allows you to add plugins to extend its capabilities by inserting new commands into the CLI.

- Data Cataloging: Kedro supports data cataloging, enabling you to track each pipeline node's data inputs and outputs. This makes reproducing results, tracing errors, and collaborating with different teams easier.

- Kedro-viz: Kedro’s web UI, Kedro-viz, is a visual representation tool. It helps you visualize your data pipeline as directed acyclic graphs (DAGs) on a web interface.

Pricing

Kedro is an open-source tool that is free to use.

Use Cases

The primary use cases of Kedro are:

- With Kedro, you can develop nodes and pipelines for price prediction models.

- You can leverage Kedro-viz's capabilities to visualize your customer data. The visuals help you understand customer behavior and produce effective marketing campaigns to increase sales.

Migrating to Kedro

Follow the steps below to migrate Airflow to Kedro:

- Create a DAG to export tasks to Amazon S3.

- Use AWS CLI to upload the DAG to Airflow and trigger it to initiate the migration process to Amazon S3.

- After the data is uploaded to S3, specify the location of the S3 bucket in a catalog.yml file so that Kedro can load the data.

- Create a credentials.yml file with all the necessary AWS credentials to enable data extraction.

- Initialize the Kedro pipeline that utilizes catalog.yml.

8. Apache NiFi

Apache NiFi is a software project designed to automate data flow between different software systems. It allows you to develop data pipelines using a graphical user interface (GUI) that builds workflows as DAGs. These DAGs support data routing, transformation, and system mediation logic.

Why Apache NiFi?

Here are a few features that NiFi provides:

- Data Monitoring: Apache NiFi’s data provenance module records what happens to data from the start to the end of its flow, so you can trace and audit every step. NiFi also lets you design custom processors and reporting tasks to suit your needs.

- Configuration Options: NiFi offers multiple configuration options, enabling you to achieve high throughput, low latency, back pressure, dynamic prioritization, and runtime flow modification.

- High-Security Protocols: NiFi provides a highly secure architecture by supporting prominent security protocols, including SSL, SSH, and HTTPS.
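Back pressure is easiest to see in a small simulation. In NiFi, each connection between processors has back pressure thresholds (an object count and a data size), and while a connection is over its threshold, NiFi stops scheduling the upstream processor. The sketch below models only the object-count threshold with hypothetical rates; it illustrates the behavior and is not NiFi code. Because the threshold is checked before the producer runs, the queue in this sketch can exceed it by up to one batch.

```python
from collections import deque


class Connection:
    """Queue between two processors with an object-count back pressure threshold."""

    def __init__(self, threshold):
        self.queue = deque()
        self.threshold = threshold

    def back_pressure(self):
        return len(self.queue) >= self.threshold


def simulate(ticks, threshold, produce_per_tick, consume_per_tick):
    """Return (peak queue depth, number of ticks the producer was not scheduled)."""
    conn = Connection(threshold)
    peak = 0
    skipped = 0
    for _ in range(ticks):
        if conn.back_pressure():
            skipped += 1  # upstream processor is not scheduled this tick
        else:
            conn.queue.extend(range(produce_per_tick))
        peak = max(peak, len(conn.queue))
        # Downstream processor drains up to consume_per_tick items.
        for _ in range(min(consume_per_tick, len(conn.queue))):
            conn.queue.popleft()
    return peak, skipped


# A fast producer feeding a slow consumer: back pressure keeps the queue bounded.
peak, skipped = simulate(ticks=20, threshold=10, produce_per_tick=5, consume_per_tick=2)
print(peak, skipped)
```

Without the threshold check, the queue in this scenario would grow by three items every tick; with it, the producer simply sits idle until the consumer catches up.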

Pricing

Apache NiFi, being an open-source software, is free to use.

Use Cases

The top use cases of Apache NiFi are:

- Network traffic analysis.

- Social media sentiment analysis.

Migrating to Apache NiFi

Follow these steps to perform data migration with Apache NiFi:

- Install and configure Apache NiFi.

- Design a data flow and perform data transformation.

- Track the progress of migration.

- Set up error handling.

- Test this process using sample data before executing it on a large scale.

- Execute the data migration process.

9. Azure Data Factory

Azure Data Factory (ADF) is a fully managed cloud data integration service that enables you to create data-driven workflows for data orchestration and transformation at scale. It offers over 90 pre-built data connector options for ingesting your on-premise and software-as-a-service (SaaS) data.

Why Azure Data Factory?

Here are a few features that make ADF a good Airflow alternative:

- Microsoft Compatibility: With Azure Data Factory, you can easily integrate with multiple Microsoft Azure services, including Azure Blob Storage and Azure Databases.

- Data Flow Customization: ADF provides the functionality to create custom data flows, allowing you to add custom actions and data processing steps.

- Automation: Custom event triggers can help you automate data preprocessing tasks. These triggers allow the execution of certain tasks when an event occurs.

Pricing

ADF pricing depends on your activity, including orchestration, data movement, pipeline activities, and external pipeline activities. Rates vary by integration runtime type:

- Azure Integration Runtime

- Azure Managed VNET Integration Runtime

- Self-Hosted Integration Runtime

Use Cases

The main Azure Data Factory Use Cases are:

- It lets you ingest your on-premises data into Azure Data Lake, where you can perform advanced analytics and processing.

- ADF lets you connect to GitHub repositories, allowing you to streamline version control and promote a collaborative environment.

Migrating to Azure Data Factory

Here are the steps to migrate Airflow to Azure Data Factory (ADF):

- Create an Airflow environment in ADF studio.

- Configure Azure Blob Storage to store DAG files.

- Create a DAG.

- Import the DAGs from Azure Blob Storage to ADF by creating a linked service.

- Run and monitor the DAGs.

10. Google Cloud Dataflow

Google Cloud offers a data processing service named Dataflow. It focuses on batch processing and on streaming data in real time from IoT devices, web services, and more. Dataflow runs pipelines built with Apache Beam to clean and filter data, simplifying large-scale data processing.

Why Google Cloud Dataflow?

Let’s discuss a few features that make Google Cloud Dataflow a strong Airflow alternative:

- Scalability: Dataflow offers horizontal and vertical auto-scaling features to maximize resource utilization.

- GPUs: It supports a wide range of NVIDIA GPUs that can optimize performance cost-effectively.

- Machine Learning: Dataflow ML lets you manage and deploy ML models, enabling local and remote inference in batch and streaming pipelines.

Pricing

Google Cloud Dataflow provides flexible pricing models based on the resources your data processing job utilizes. New customers get a $300 free credit.

Use Cases

- Dataflow lets you use Google Cloud Vertex AI to perform predictive analytics, fraud detection, and real-time personalization on your data.

- It provides an intelligent IoT platform to help you unlock business insights from your global device network.

Migrating to Google Cloud Dataflow

Follow these steps to migrate Airflow to Google Cloud Dataflow:

- Ensure access to Google Cloud services, including Dataflow and Cloud Composer.

- Install the necessary libraries and SDKs, such as the Google Cloud client libraries and “apache-beam[gcp]”.

- Prepare Google BigQuery to store the data to be processed.

- Develop a Dataflow pipeline using Apache Beam.

- Manage secrets using Google Cloud KMS or HashiCorp Vault.

- Deploy the pipeline.

- Integrate with Cloud Composer and monitor the pipeline.

11. AWS Step Functions

Amazon Web Services provides its own workflow solution, AWS Step Functions. It is a fully managed, serverless, low-code tool that automates ETL workflows, orchestrates microservices, and prepares data for ML models.

Just as Azure Data Factory integrates with Azure services, Step Functions integrates easily with AWS services like Lambda, SNS, SQS, and more. Depending on your use case, you can choose Standard workflows for long-running workloads or Express workflows for high-volume event processing.

Why AWS Step Functions?

Let’s look at a few features offered by AWS Step Functions:

- AWS Integrations: It offers direct integrations with 220 AWS services and 10,000+ APIs.

- Distributed Components: Step Functions enable you to coordinate any application with an HTTPS connection, including mobile devices and EC2 instances, and create distributed applications.

- Error Handling: AWS Step Functions has built-in try/catch and retry behavior, so you can automatically retry failed operations and route persistent errors to a dedicated state.
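Retry and catch behavior is declared in the state machine definition, which is written in Amazon States Language (ASL). The sketch below builds a definition as a Python dictionary so it can be inspected and serialized with json. Retry, Catch, ErrorEquals, IntervalSeconds, MaxAttempts, and BackoffRate are ASL fields, while the state names, Lambda function ARN, and account ID are placeholders. The retry_delays helper is a hypothetical convenience that shows how the backoff rate spaces out retries.

```python
import json


def task_with_retry(function_arn, next_state, failure_state):
    """Build an ASL Task state that invokes a Lambda function with retries and a catch-all."""
    return {
        "Type": "Task",
        "Resource": "arn:aws:states:::lambda:invoke",
        "Parameters": {"FunctionName": function_arn, "Payload.$": "$"},
        "Retry": [
            {
                "ErrorEquals": ["States.TaskFailed"],
                "IntervalSeconds": 2,
                "MaxAttempts": 3,
                "BackoffRate": 2.0,
            }
        ],
        # Anything still failing after the retries is routed to failure_state.
        "Catch": [{"ErrorEquals": ["States.ALL"], "Next": failure_state}],
        "Next": next_state,
    }


def retry_delays(retrier):
    """Seconds waited before each retry: IntervalSeconds * BackoffRate ** attempt."""
    return [
        retrier["IntervalSeconds"] * retrier["BackoffRate"] ** attempt
        for attempt in range(retrier["MaxAttempts"])
    ]


definition = {
    "Comment": "Hypothetical ETL step with retries",
    "StartAt": "TransformOrders",
    "States": {
        "TransformOrders": task_with_retry(
            "arn:aws:lambda:us-east-1:123456789012:function:transform-orders",
            next_state="Done",
            failure_state="NotifyFailure",
        ),
        "NotifyFailure": {"Type": "Fail", "Error": "TransformFailed"},
        "Done": {"Type": "Succeed"},
    },
}

print(json.dumps(definition, indent=2))
print(retry_delays(definition["States"]["TransformOrders"]["Retry"][0]))  # [2.0, 4.0, 8.0]
```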

Pricing

Here are the AWS Step Functions pricing plans:

- A free tier of 4,000 state transitions per month.

- Beyond the free tier, Standard workflows cost $0.025 per 1,000 state transitions.
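As a worked example of the pricing above, the sketch below estimates a monthly Standard workflow bill, assuming the 4,000 free state transitions apply per month and using the $0.025 per 1,000 rate from the list. The workload figures are hypothetical, and Express workflows are billed differently (by requests and duration), so the formula does not apply to them. Decimal avoids floating-point rounding in currency math.

```python
from decimal import Decimal

FREE_TRANSITIONS_PER_MONTH = 4_000
PRICE_PER_1000_TRANSITIONS = Decimal("0.025")  # USD, Standard workflows


def monthly_cost(transitions):
    """Estimated USD cost for one month of state transitions after the free tier."""
    billable = max(0, transitions - FREE_TRANSITIONS_PER_MONTH)
    return Decimal(billable) / 1000 * PRICE_PER_1000_TRANSITIONS


# Hypothetical workload: 5 transitions per execution, 1,000 executions a day, 30 days.
transitions = 5 * 1_000 * 30  # 150,000
print(monthly_cost(transitions))  # 3.650
```

In other words, 150,000 transitions minus the 4,000 free ones leaves 146,000 billable transitions, or 146 × $0.025 ≈ $3.65.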

Use Cases

- AWS Step Functions can help you combine semi-structured data, such as a CSV file, with unstructured data, like invoices, to produce business reports.

- It lets you run real-time workflows. For example, when you update customer information, the change can be reflected immediately in downstream systems.

Migrating to AWS Step Functions

Migrate Airflow to AWS Step Functions by following these easy-to-implement steps:

- Create a DAG with Python script to extract data to Amazon S3.

- Load this DAG file to Airflow using AWS CLI and trigger it to perform the migration to S3.

- After the data is uploaded to the S3 bucket, use the ItemReader field of a Distributed Map state to specify the location of the bucket.

- Provide the necessary AWS credentials to initiate the data transfer to Step Functions and execute the query.
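For the ItemReader step, a Distributed Map state reads its items directly from an object in S3. The sketch below assembles such a state as a Python dictionary. ItemReader, ReaderConfig, ItemProcessor, ProcessorConfig, and MaxConcurrency are Amazon States Language fields; the bucket name, object key, and the placeholder Pass state standing in for real per-row processing are hypothetical.

```python
import json


def distributed_map_over_csv(bucket, key, processor_states, start_at, max_concurrency=10):
    """Build a Distributed Map state that iterates over the rows of a CSV file in S3."""
    return {
        "Type": "Map",
        "ItemReader": {
            "Resource": "arn:aws:states:::s3:getObject",
            # Treat the first row of the file as column headers.
            "ReaderConfig": {"InputType": "CSV", "CSVHeaderLocation": "FIRST_ROW"},
            "Parameters": {"Bucket": bucket, "Key": key},
        },
        "ItemProcessor": {
            "ProcessorConfig": {"Mode": "DISTRIBUTED", "ExecutionType": "STANDARD"},
            "StartAt": start_at,
            "States": processor_states,
        },
        "MaxConcurrency": max_concurrency,  # cap on parallel child executions
        "End": True,
    }


definition = {
    "StartAt": "ProcessExport",
    "States": {
        "ProcessExport": distributed_map_over_csv(
            bucket="my-airflow-export",  # hypothetical bucket
            key="exports/orders.csv",  # hypothetical object key
            processor_states={"ProcessRow": {"Type": "Pass", "End": True}},
            start_at="ProcessRow",
        )
    },
}
print(json.dumps(definition, indent=2))
```

Each CSV row becomes one item for the processor states, so the per-row logic is written once and Step Functions fans it out.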

12. Apache Oozie

Apache Oozie is a server-based workflow scheduling system that enables you to manage Hadoop jobs, such as HDFS operations, MapReduce, Hive, Pig, and Sqoop jobs. An Oozie workflow is a directed acyclic graph (DAG) containing a collection of control flow and action nodes.

Control flow nodes define the workflow’s execution path, while action nodes trigger the execution of processing tasks.

Why Apache Oozie?

Here are a few features that place Apache Oozie among the Apache Airflow alternatives:

- Web Service APIs: It offers web service APIs, enabling you to manage jobs from anywhere.

- Automated Emails: Oozie can send email notifications when specific tasks complete.

- Client API: With Apache Oozie, you can start, control, and monitor jobs from Java applications using the client API and command-line interface.

Pricing

Apache Oozie is open-source software released under the Apache License 2.0, so it is free to use.

Use Cases

- Oozie enables you to schedule complex tasks according to your specific requirements. To achieve this, you can configure coordinator jobs that trigger workflows based on time and data availability.

- It lets you chain different tasks together, simplifying your data processing journey.

Migrating to Apache Oozie

To migrate Airflow to Apache Oozie, follow the given steps:

- Extract data to Amazon S3 by creating a custom DAG.

- Import and execute this data migration DAG in Airflow to load data into an Amazon S3 bucket.

- Obtain the required AWS credentials for this bucket and create a credential store (.jceks file).

- Add the AWS access key and secret key to the credential store used by the Oozie workflow.

- Set hadoop.security.credential.provider.path to the path of the .jceks file in the MapReduce action’s <configuration> section. This enables you to access Amazon S3 files in the Apache Oozie environment.
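The final step comes down to a single Hadoop property. The sketch below generates the <configuration> block with Python's standard xml.etree module so the value can be templated; the jceks://hdfs/user/etl/aws.jceks path is hypothetical. A JCEKS store like this is commonly created with the hadoop credential create command, storing the keys under the S3A aliases fs.s3a.access.key and fs.s3a.secret.key.

```python
import xml.etree.ElementTree as ET


def credential_provider_configuration(jceks_uri):
    """Return a <configuration> block pointing Hadoop at a JCEKS credential store."""
    configuration = ET.Element("configuration")
    prop = ET.SubElement(configuration, "property")
    ET.SubElement(prop, "name").text = "hadoop.security.credential.provider.path"
    ET.SubElement(prop, "value").text = jceks_uri
    return ET.tostring(configuration, encoding="unicode")


# The output goes inside the MapReduce action of the Oozie workflow definition.
print(credential_provider_configuration("jceks://hdfs/user/etl/aws.jceks"))
```

Keeping the keys in a credential store rather than in plain workflow properties means the secret values never appear in the workflow XML itself.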

Reasons Why Data Professionals Are Considering Airflow Alternatives

Although Apache Airflow is one of the best data workflow management tools, it has multiple challenges that you must know about. Most users commonly encounter scalability and performance issues, among others. Let's discuss each limitation one by one.

Scalability Limitations

Scaling an Airflow environment depends on the supporting infrastructure and the DAGs, and running Airflow at a large scale can be difficult. One issue is high task scheduler latency caused by limited resources for processing workflows.

If you schedule many DAGs to start at exactly the same time, they can overload Airflow. Many DAGs running simultaneously can lead to suboptimal resource utilization and longer execution times.

Steep Learning Curve

One key challenge of using Airflow to perform data orchestration tasks is that it requires DevOps and programming expertise. This limits non-technical users' ability to build and manage data workflows independently.

Performance Issues

Airflow runs into performance bottlenecks as the number of workflows and tasks increases. This limitation stems from the Airflow scheduler, which is responsible for triggering the tasks in a workflow. The primary reason for these inefficiencies is the complexity and tight coupling of Airflow’s core internals, including its complex domain model and the DAG parsing subprocess.

Lack of Data Recovery

Airflow presents data management and debugging challenges because it does not preserve metadata for deleted jobs. When you delete a DAG, its run history and task metadata are removed, so you cannot recover them even if you redeploy the DAG.

Things to Consider When Choosing Any One of the Above Airflow Alternatives

You must consider certain factors before selecting any of the above Apache Airflow alternatives. This section will highlight some of the critical considerations you must know about to achieve the best price/performance.

Scalability

The Airflow alternative you choose must be highly scalable and able to handle large volumes of data efficiently. This feature plays the most significant role in real-world applications involving large datasets. Efficiently handling data improves resource allocation and lets you quickly extract valuable insights from your data.

Ease of Use

The Airflow alternative you choose must suit your team’s needs and skills. Broadly, you have two options: a no-code tool or a tool that requires programming expertise. A no-code tool gives you an easy way to perform orchestration tasks, while code-first tools can be more complex to work with. If you are confident with programming, however, code-first tools are a good option.

Integrations

The alternative you choose must offer integrations with multiple platforms to integrate data from a diverse range of sources. This critical step provides you with a holistic view of your data. By combining data from numerous sources, you can eliminate data silos to get informed insights beneficial for better decision-making.

Cost

Open-source tools usually have lower upfront costs for data orchestration. However, their costs can grow in the long run once you account for maintenance, custom coding, and debugging. Vendor platforms bundle these costs into subscriptions or usage-based pricing. To get the best price/performance, estimate the costs you are likely to incur with each option beforehand.

Why Are People Moving Away From Airflow?

Despite being a pioneer in data orchestration, Airflow has increasingly shown its age. Here are the key reasons data teams are shifting to alternatives:

- Complex Setup & Maintenance: Running Airflow in production requires managing schedulers, web servers, workers, and databases.

- Latency and Bottlenecks: It struggles with high-frequency workflows or large DAGs, often leading to bottlenecks.

- Not Designed for Streaming: Airflow is batch-oriented and not ideal for real-time or event-driven pipelines.

- Poor Developer Experience: Debugging DAGs or implementing dynamic workflows requires non-trivial Python expertise.

- Limited Observability: Airflow’s UI lacks advanced monitoring, alerting, and lineage tracking found in modern tools.

Factors to Consider When Choosing an Airflow Alternative

Before replacing Airflow, evaluate these criteria:

- Scalability: Can it handle complex, high-volume workflows without latency?

- Ease of Use: Is the interface intuitive? Does it require DevOps expertise?

- Integration Ecosystem: Does it connect with your sources, destinations, and tools out-of-the-box?

- Flexibility & Customization: Can you extend or customize workflows easily?

- Security & Compliance: Does it support HIPAA, SOC 2, or GDPR if you deal with sensitive data?

- Pricing: Is it truly open-source, freemium, or usage-based? Understand long-term costs.

- Cloud vs On-Premise: Depending on your infrastructure needs, verify deployment options.

Summary

While Airflow is one of the prominent tools in data orchestration, working with it can present challenges, including scalability and performance issues. Depending on your requirements, one of the alternatives above can help you overcome these issues.

However, there are certain factors that you must consider before selecting any of these tools, such as ease of use, cost considerations, and more. Selecting a tool by considering these factors will enable you to get the best out of the tool while maintaining the best price/performance value.

FAQs

1. What is Apache Airflow used for?

Apache Airflow is an open-source workflow orchestration tool designed to programmatically author, schedule, and monitor data pipelines. It is widely used in data engineering to manage complex ETL workflows.

2. Why do teams look for alternatives to Airflow?

Teams often seek alternatives due to Airflow’s limitations in scalability, real-time capabilities, steep learning curve, and complex setup. Modern teams may prefer simpler, more flexible, or cloud-native options.

3. What are some popular alternatives to Airflow in 2026?

Top alternatives include:

- Airbyte – Open-source ELT platform with built-in connectors and integration features.

- Prefect – Pythonic workflow orchestration with modern observability.

- Dagster – Strong type-checking, data-aware pipelines, and developer-friendly tooling.

- Luigi – A Python-based workflow tool that focuses on batch processing.

4. Is Airflow suitable for real-time data processing?

Not ideally. Airflow is designed for batch processing and scheduling. It’s not optimized for real-time or streaming data workflows, which can lead users to choose other tools better suited for those scenarios.

5. Can Airflow handle large-scale data workloads?

While Airflow can handle complex workflows, its performance can degrade with very large-scale deployments unless carefully optimized. Users often report bottlenecks with scheduling and task execution in large environments.
