Top 10 Open Source Data Ingestion Tools in 2026

Large volumes of data are generated and consumed on a daily basis. By collecting this data and processing it, your organization can gain insights into emerging trends and utilize the knowledge to increase profitability. However, manually handling massive datasets is not only inefficient but also prone to errors.

You can automate the process by relying on open-source data ingestion tools. These tools provide flexible, cost-effective solutions to help you gain better control over your data management processes.

With numerous options available, selecting the right tool can be quite challenging. In this article, you will explore ten open-source tools that you can leverage to build data ingestion pipelines and enhance productivity.

What are Open-Source Data Ingestion Tools?

Open-source data ingestion tools are software solutions that help you streamline the process of gathering data from multiple sources into a centralized system. This reduces manual effort, improves efficiency, and ensures all relevant data is consistently available for further processing or in-depth analysis.

10 Best Open-Source Data Ingestion Tools

There are multiple open-source data ingestion tools available in the market. Here are the top ten choices that you can consider:

1. Airbyte

Airbyte is a data integration platform with more than 600 pre-built connectors for ingesting data from databases, APIs, or data warehouses. It allows you to unify information from multiple sources into a centralized repository for further analysis and reporting. You also have the flexibility to build custom connectors using Connector Development Kits (CDKs) and Connector Builder.

The platform offers PyAirbyte (a Python library) to enhance ETL workflows. With PyAirbyte, you can use connectors in a Python environment to extract data and load it into SQL caches like Postgres, DuckDB, BigQuery, and Snowflake. The cached data is compatible with Python libraries (Pandas), SQL tools, and AI frameworks (LlamaIndex and LangChain) for developing LLM-based applications.

Key Features of Airbyte

- Flexible deployment for complete data sovereignty: Move data across cloud, on-premises, or hybrid environments from one easy-to-use interface—giving you full control over where your data lives.

- Every source, every destination: Connect to 600+ pre-built sources and destinations, and quickly build custom connectors with Airbyte's AI-assisted tool for any unique data need.

- AI-ready data movement: Move structured and unstructured data together, preserving context so your AI models get accurate and meaningful insights.

- 99.9% uptime reliability: Set up pipelines once and rely on 99.9% uptime, so you can focus on using data instead of managing it.

- Open source flexibility: Modify, extend, and tailor Airbyte without vendor restrictions—benefit from full control and community-driven innovation.

- Balance innovation with safety: Build AI and new products confidently with Airbyte’s open source architecture and native data sovereignty.

- Scale easily with capacity-based pricing: Pay only for performance and sync frequency—not data volume—so growth doesn’t come with hidden costs.

- Built for modern data needs: Leverage advanced CDC methods and open formats like Iceberg, making integration faster and more efficient.

- Developer-first experience: Enjoy APIs, SDKs, and clear documentation designed to help engineers build and maintain pipelines effortlessly.

- Focus on what matters: Let Airbyte handle the technical details so your team can spend more time building products and driving value.

2. Apache Kafka

Apache Kafka is a distributed event-streaming platform that helps you manage high-throughput data ingestion pipelines for real-time applications. Its scalable, fault-tolerant architecture runs as a cluster of servers (brokers), letting you ingest large data volumes from multiple sources with minimal latency. This makes Kafka suitable for real-time data analytics, log aggregation, and stream processing.

While Kafka doesn't include pre-built connectors, its Kafka Connect framework lets you integrate with various external systems, such as databases, sensors, and cloud storage services. The tool also ensures high data availability but can be difficult to set up, requiring more expertise than other plug-and-play solutions.

Key Features of Apache Kafka

- Message Buffering: You can utilize this feature to manage the flow of data during high-traffic periods. It ensures smooth data ingestion without overwhelming the system and further minimizes the risk of data loss or delays in processing.

- High Data Durability: With Kafka's persistent logs, you can retain data for extended periods and replay or reprocess data streams as needed.
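The buffering and replay behaviour described above can be pictured with a small in-memory model. This is only a sketch of the general idea (an append-only log that consumers read by offset), not Kafka's client API. The `AppendOnlyLog` class and its methods are hypothetical names chosen for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class AppendOnlyLog:
    """Toy stand-in for a single topic partition (hypothetical, not Kafka's API)."""
    records: list = field(default_factory=list)

    def append(self, value):
        """Store a record and return its offset (its position in the log)."""
        self.records.append(value)
        return len(self.records) - 1

    def read(self, offset, max_records=10):
        """Return up to max_records starting at offset; reading never removes data."""
        return self.records[offset:offset + max_records]


log = AppendOnlyLog()
for event in ["signup", "login", "purchase", "logout"]:
    log.append(event)

# A slow consumer pulls small batches at its own pace (buffering).
first_batch = log.read(offset=0, max_records=2)
second_batch = log.read(offset=2, max_records=2)

# Because reads do not delete data, the same stream can be replayed from offset 0.
replayed = log.read(offset=0, max_records=4)
```

Keeping the read position on the consumer side is what allows a stream to be reprocessed later: rewinding is just a matter of reading from an earlier offset.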

3. Fluentd

Fluentd is an Apache 2.0 licensed data collector that you can use to gather and manage log data in real time. By providing a unified logging layer, the platform helps you decouple data sources from backend systems, which makes processing and analyzing data more efficient. With Fluentd’s community-contributed plugins, you can connect to over 500 data sources and destinations.

The tool is written in Ruby and C. It requires minimal system resources to function and can be deployed within ten minutes. Fluentd also offers a lighter version, Fluent Bit, for environments with tighter memory constraints (a footprint of roughly 450 KB).

Key Features of Fluentd

- Built-in Reliability: The memory and file-based buffering enables you to prevent data loss. Fluentd also supports failover setups for high availability and smoother data processing, even in critical environments.

- Improved Accessibility: The platform structures your data as JSON to unify all aspects of log data processing, such as collecting, buffering, filtering, and outputting. This standardized format simplifies data handling due to its structured yet flexible schema.
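To make the two features above concrete, here is a minimal sketch of structuring log lines as JSON events and holding them in a memory buffer until a chunk is full. It does not use Fluentd or its plugin API. The `to_event` helper and `MemoryBuffer` class are hypothetical, and the tag/time/record layout is only an illustration of a structured event.

```python
import json
import time
from collections import deque


def to_event(tag, message, timestamp=None):
    """Wrap a raw log line in a tag/time/record structure."""
    return {
        "tag": tag,
        "time": timestamp if timestamp is not None else time.time(),
        "record": {"message": message},
    }


class MemoryBuffer:
    """Holds events until a chunk is full, then hands the chunk to an output."""

    def __init__(self, chunk_size, output):
        self.chunk_size = chunk_size
        self.output = output
        self.pending = deque()

    def emit(self, event):
        self.pending.append(event)
        if len(self.pending) >= self.chunk_size:
            self.flush()

    def flush(self):
        if not self.pending:
            return
        # Serialize every buffered event to a JSON string before delivery.
        chunk = [json.dumps(e, sort_keys=True) for e in self.pending]
        self.pending.clear()
        self.output(chunk)


delivered = []
buffer = MemoryBuffer(chunk_size=2, output=delivered.append)
buffer.emit(to_event("app.access", "GET /health 200", timestamp=1700000000))
buffer.emit(to_event("app.access", "GET /login 302", timestamp=1700000001))
buffer.emit(to_event("app.error", "timeout talking to db", timestamp=1700000002))
buffer.flush()  # flush the partially filled chunk, e.g. on shutdown
```

A file-based buffer would follow the same shape but write pending events to disk, so they survive a process restart.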

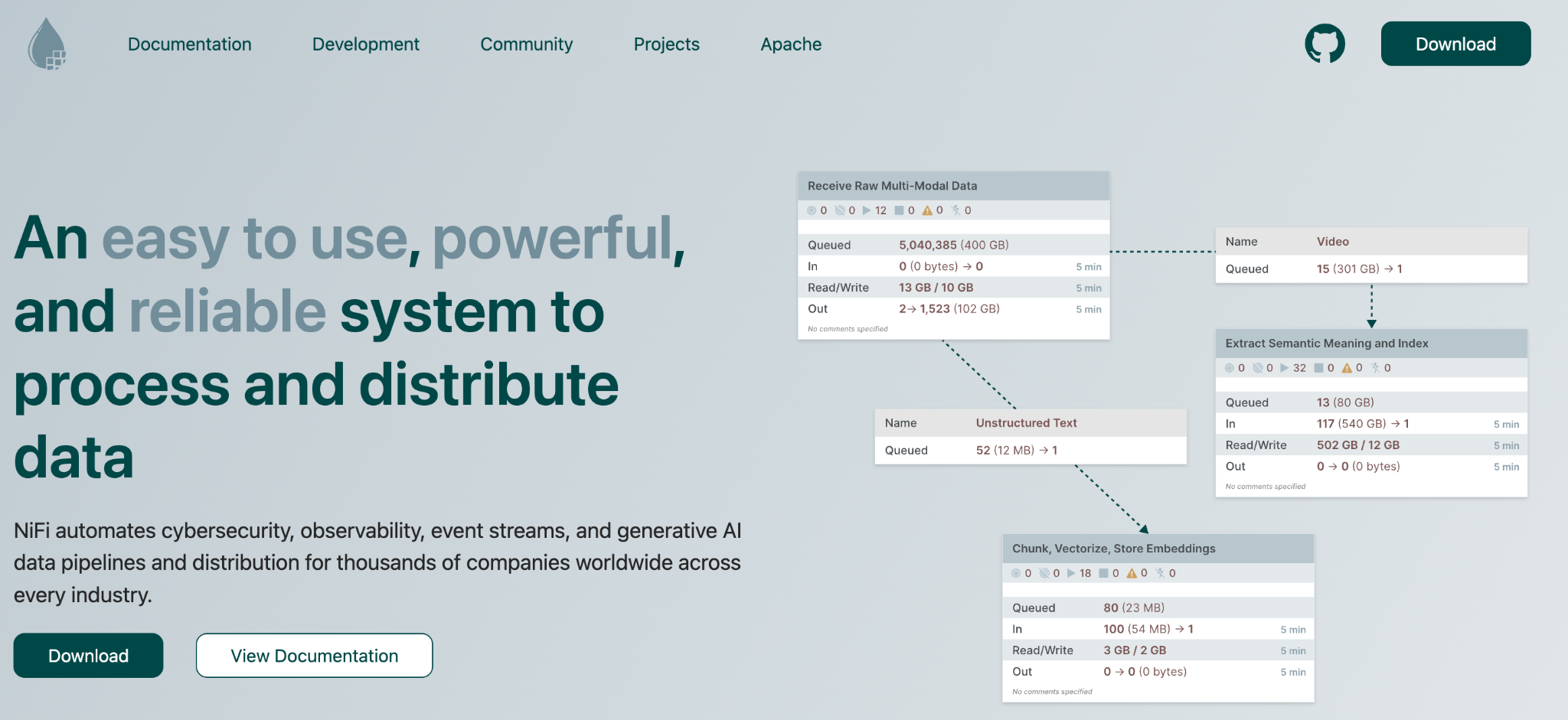

4. Apache NiFi

Apache NiFi is an open-source data ingestion tool that provides a user-friendly, drag-and-drop interface to automate data flow between your data sources and destinations. Its architecture is designed to leverage system capabilities efficiently, making optimal use of CPU, memory, and disk resources.

With features like guaranteed delivery, flexible flow management, and built-in data provenance, you can be assured of your data’s reliability and transparency. Additionally, you can use prioritized queuing and Quality of Service (QoS) configurations to control latency, throughput, and loss tolerance. Apache NiFi’s security and compliance features make it a fit for projects across industries such as finance, IoT, and healthcare.

Key Features of Apache NiFi

- Scalability: You can scale Apache NiFi both horizontally (through clustering) and vertically (by increasing task concurrency). Additionally, it can be scaled down for small, resource-limited edge devices.

- Advanced Security: Apache NiFi offers end-to-end security with encryption protocols such as TLS and two-way SSL, along with multi-tenant authorization.
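Prioritized queuing, mentioned earlier in this section, is easiest to see in a small example. The sketch below is plain Python built on `heapq`, not NiFi configuration or NiFi's API. The `PrioritizedQueue` class and the file names are hypothetical.

```python
import heapq
import itertools


class PrioritizedQueue:
    """Toy queue that releases the most urgent item first (lower number = more urgent)."""

    def __init__(self):
        self._heap = []
        self._counter = itertools.count()  # tie-breaker keeps FIFO order within a priority

    def put(self, priority, item):
        heapq.heappush(self._heap, (priority, next(self._counter), item))

    def get(self):
        _priority, _order, item = heapq.heappop(self._heap)
        return item

    def __len__(self):
        return len(self._heap)


queue = PrioritizedQueue()
queue.put(5, "nightly-report.csv")
queue.put(1, "fraud-alert.json")
queue.put(5, "weekly-report.csv")
queue.put(2, "sensor-reading.json")

# Urgent items leave the queue first, regardless of arrival order.
drained = [queue.get() for _ in range(len(queue))]
```

Here the fraud alert is released before either report even though it arrived second, which is the latency trade-off that prioritization is meant to control.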

5. Meltano

Meltano is an ELT (Extract, Load, Transform) tool originally created by GitLab. It is now an independent platform with 600+ connectors that you can use to extract and load data. Your Meltano projects are stored as directories with configuration files to enable full integration with Git. This supports all modern software development principles like version control, code reviews, and CI/CD.

The platform follows DataOps best practices and a distributed data mesh approach, which enhances data accessibility, accelerates workflows, and improves reliability. Unlike commercial, hosted solutions such as Snowflake and Databricks, Meltano allows you to democratize your data workflows.

Key Features of Meltano

- Self-Hosted Data Solution: As Meltano is self-hosted, it lets you process and control your data locally while maintaining compliance and reducing costs compared to cloud-hosted alternatives.

- Quick Data Replication: With Meltano, you can import data batches faster. It supports incremental replication via key-based and log-based Change Data Capture (CDC) and automates schema migration for streamlined data management.
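Key-based incremental replication can be sketched in a few lines. The example below is a simplified illustration of the technique, not Meltano's implementation. The `incremental_extract` function, the `bookmark` state key, and the `updated_at` column are all assumptions made for the example.

```python
def incremental_extract(rows, state, replication_key="updated_at"):
    """Return rows newer than the saved bookmark, plus the updated state."""
    bookmark = state.get("bookmark")
    new_rows = [
        row for row in rows
        if bookmark is None or row[replication_key] > bookmark
    ]
    if new_rows:
        bookmark = max(row[replication_key] for row in new_rows)
    return new_rows, {"bookmark": bookmark}


source_table = [
    {"id": 1, "updated_at": "2024-01-01T00:00:00Z"},
    {"id": 2, "updated_at": "2024-01-02T00:00:00Z"},
]

# First run: no bookmark yet, so every row is extracted.
first_run, state = incremental_extract(source_table, state={})
first_run_ids = [row["id"] for row in first_run]

# Between runs, one row changes and a new one arrives in the source.
source_table[0]["updated_at"] = "2024-01-03T00:00:00Z"
source_table.append({"id": 3, "updated_at": "2024-01-04T00:00:00Z"})

# Second run: only rows past the bookmark are picked up.
second_run, state = incremental_extract(source_table, state)
second_run_ids = [row["id"] for row in second_run]
```

The timestamps compare correctly as strings only because they share one ISO 8601 format. Log-based CDC takes a different route and reads changes from the database's transaction log instead of comparing a column.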

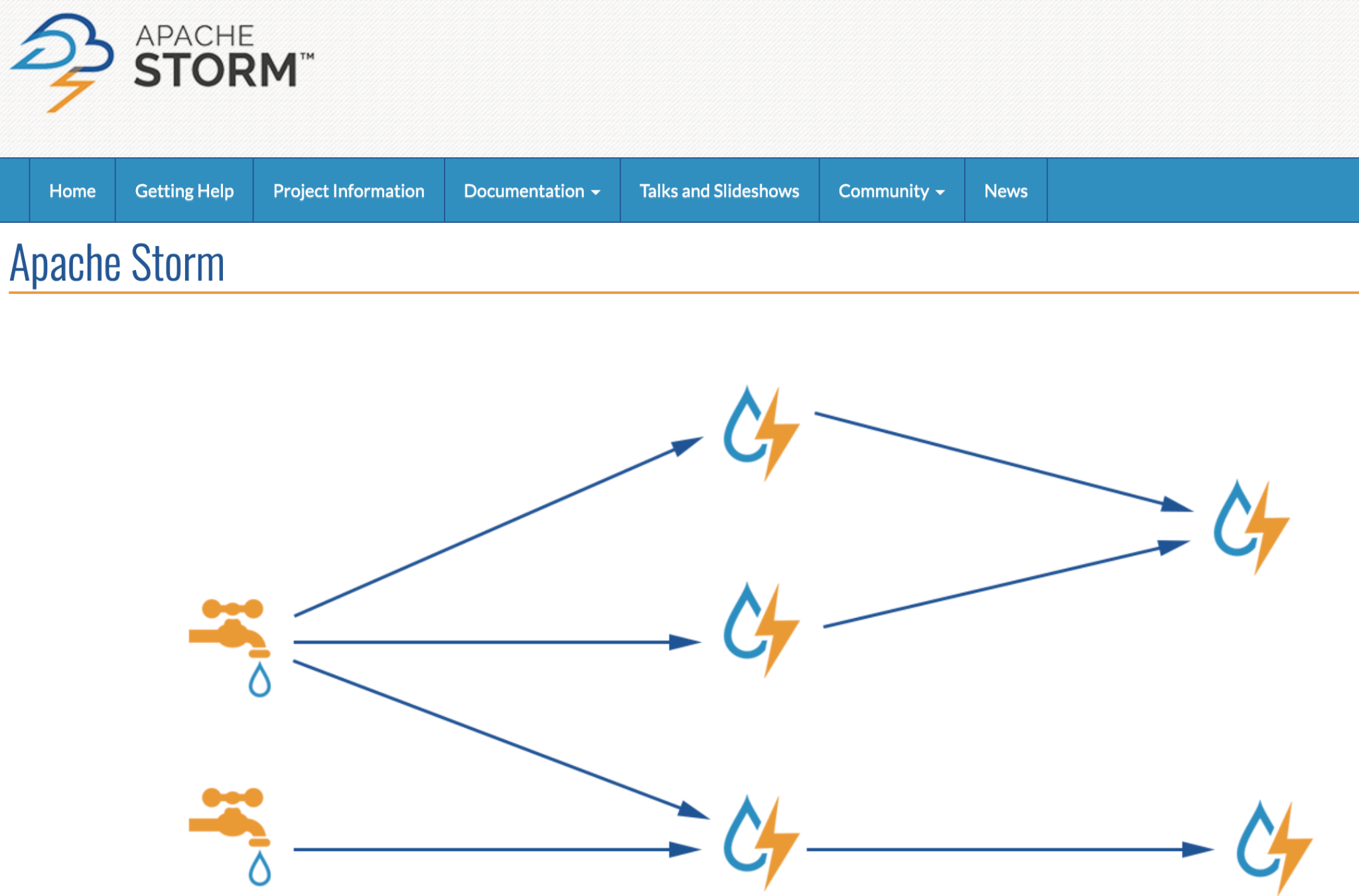

6. Apache Storm

Apache Storm is a big data processing tool that is widely used for distributed remote procedure calls (RPC), ETL (Extract, Transform, Load), and continuous computation. It enables you to ingest high volumes of data streams through parallel processing across multiple nodes. This further helps you achieve speed, scalability, and resilience.

The platform is inherently stateless for optimal performance. However, it leverages ZooKeeper (Apache’s coordination service) to maintain the state for recovery and offers Trident topology (a high-level abstraction) for stateful stream processing. This architecture allows Apache Storm to process massive volumes of data with low latency.

Key Features of Apache Storm

- Integration with Existing Systems: You can integrate Apache Storm with queueing (Apache Kafka) and database technologies (MySQL) that you are already using. This makes it easier to build and maintain complex data workflows.

- Language Agnostic: Apache Storm can be used with any programming language, such as Python, Java, Ruby, and more. It can adapt to diverse development environments.

7. Logstash

Logstash is an open-source data processing pipeline that enables you to collect, parse, and store logs and event data from a variety of sources. As part of the Elastic Stack, Logstash integrates seamlessly with Elasticsearch, Kibana, and Beats, making it an ideal choice for organizations focused on centralized logging, observability, and search functionalities.

It supports a wide range of input sources including files, databases, APIs, message queues, and cloud services. With its flexible filter plugins, you can enrich and transform your data before sending it to destinations such as Elasticsearch or other storage systems.

Key Features of Logstash

- Flexible Plugin Architecture: Logstash offers over 200 plugins, allowing you to process data from a variety of sources and formats. You can filter, mutate, enrich, and aggregate data on the fly.

- Seamless Integration with Elastic Stack: It works efficiently with Elasticsearch for search and analytics and Kibana for visualization and monitoring, providing end-to-end observability solutions.

- Data Parsing and Enrichment: You can leverage filters to parse unstructured data, apply patterns, and enrich it with additional metadata before outputting it to other systems.

- Scalability: Logstash is designed for distributed environments and can process millions of events per second when scaled appropriately.
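The parse-and-enrich step described above can be approximated with a regular expression. This is a plain-Python sketch, not a Logstash pipeline configuration or filter plugin. The log format, the `parse_and_enrich` function, and the `_parsefailure` tag are assumptions for illustration.

```python
import re

# Named groups play the role of a pattern for a simple access log line.
ACCESS_LOG = re.compile(
    r'(?P<client>\S+) "(?P<method>[A-Z]+) (?P<path>\S+)" (?P<status>\d{3}) (?P<bytes>\d+)'
)


def parse_and_enrich(line, environment="production"):
    """Parse one log line into fields, convert types, and add metadata."""
    match = ACCESS_LOG.match(line)
    if match is None:
        # Keep unparseable lines, but tag them so they can be inspected later.
        return {"message": line, "tags": ["_parsefailure"]}
    event = match.groupdict()
    event["status"] = int(event["status"])
    event["bytes"] = int(event["bytes"])
    event["is_error"] = event["status"] >= 500   # derived field
    event["environment"] = environment           # enrichment with static metadata
    return event


ok_event = parse_and_enrich('10.0.0.7 "GET /api/orders" 200 512')
bad_event = parse_and_enrich("this line does not match")
```

Tagging failed lines instead of dropping them keeps the original message available for debugging the pattern.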

8. Apache Airflow

Apache Airflow is an open-source workflow orchestration tool that helps you design, schedule, and monitor complex data pipelines programmatically. Using directed acyclic graphs (DAGs), you can define tasks, their dependencies, and workflows with Python scripts, making it highly flexible and scalable.

While not a data ingestion tool in itself, Airflow is widely used for orchestrating data pipelines, including extraction and transformation tasks. You can integrate it with connectors and APIs to ingest data from multiple sources and ensure robust execution pipelines.

Key Features of Apache Airflow

- Python-Based Configuration: All workflows are defined using Python scripts, giving developers complete control and allowing reusable tasks.

- Scheduling and Monitoring: Airflow provides advanced scheduling capabilities with retries, triggers, and failure handling, ensuring data ingestion pipelines run smoothly.

- Extensible with Plugins: The platform supports numerous operators for cloud services, databases, and APIs, enabling integrations across various environments.

- Scalable Execution: Airflow’s architecture supports horizontal scaling via Celery and Kubernetes executors, helping handle large-scale workflows.
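The core idea behind a DAG, that tasks run only after their dependencies, can be shown with the standard library alone. This sketch uses `graphlib` (Python 3.9+) rather than Airflow's own classes, so it illustrates the concept and not Airflow's API. The task names and the `run_task` placeholder are hypothetical.

```python
from graphlib import TopologicalSorter

# Each task maps to the set of tasks it depends on (its upstream tasks).
dependencies = {
    "extract_orders": set(),
    "extract_customers": set(),
    "transform": {"extract_orders", "extract_customers"},
    "load": {"transform"},
}

results = {}


def run_task(name):
    """Placeholder task body; a real pipeline would call a connector or API here."""
    results[name] = "done"
    return name


# static_order() yields tasks so that every task comes after its dependencies.
execution_order = [run_task(t) for t in TopologicalSorter(dependencies).static_order()]
```

An orchestrator adds scheduling, retries, and parallel execution on top of this ordering, but the dependency graph is what makes those features safe to apply.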

9. OpenLineage

OpenLineage is an open-source metadata tracking and lineage tool that helps you monitor data pipelines by capturing metadata at various stages of data ingestion and transformation. By integrating with existing ETL and data processing tools, it provides observability into how data flows through your systems.

This helps ensure data reliability, reproducibility, and debugging, especially when working with large, distributed datasets across cloud and hybrid environments.

Key Features of OpenLineage

- Standardized Metadata Capture: OpenLineage collects metadata such as job runs, inputs, outputs, schema changes, and dependencies across pipelines for traceability.

- Integration with Popular Tools: It integrates with tools like Airflow, dbt, and Spark, allowing seamless tracking of pipelines without modifying core logic.

- Data Observability: You can detect anomalies, missing data, and pipeline failures by visualizing data dependencies and lineage across your infrastructure.

- Open Standard: Built with interoperability in mind, OpenLineage encourages best practices in data observability across organizations.
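As a rough illustration of what captured lineage metadata might look like, the sketch below builds two events for one job run as plain dictionaries. It does not use the OpenLineage client library. The field names are loosely modeled on the project's run events and should be treated as assumptions to check against the specification, and `make_run_event` and the dataset names are hypothetical.

```python
import json
import uuid
from datetime import datetime, timezone


def make_run_event(event_type, run_id, job_name, inputs, outputs, namespace="example"):
    """Build a lineage event as a plain dict (field names are assumptions)."""
    return {
        "eventType": event_type,
        "eventTime": datetime.now(timezone.utc).isoformat(),
        "run": {"runId": run_id},
        "job": {"namespace": namespace, "name": job_name},
        "inputs": [{"namespace": namespace, "name": n} for n in inputs],
        "outputs": [{"namespace": namespace, "name": n} for n in outputs],
    }


run_id = str(uuid.uuid4())
start = make_run_event("START", run_id, "daily_orders_load", ["raw.orders"], [])
complete = make_run_event(
    "COMPLETE", run_id, "daily_orders_load", ["raw.orders"], ["analytics.orders"]
)

# Events for one run share a run ID, which lets a backend stitch them together.
payload = json.dumps([start, complete], indent=2)
```

Recording inputs and outputs per run is what makes it possible to trace a downstream table back to the jobs and source datasets that produced it.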

10. Presto (Trino)

Presto is a distributed SQL query engine that enables you to run fast analytics across large datasets in heterogeneous data sources. Trino is a community fork of the same project, formerly named PrestoSQL. While neither is a direct ingestion tool, both let you query and federate data from multiple sources (databases, data lakes, and cloud services) without requiring complex ETL pipelines.

This capability allows you to ingest data virtually by connecting to multiple systems and providing a unified query layer, helping organizations perform real-time analytics across siloed datasets.

Key Features of Presto (Trino)

- Federated Query Engine: Connects to a wide array of data sources like MySQL, PostgreSQL, Hive, Kafka, Elasticsearch, and more to enable on-the-fly querying.

- High Performance: Optimized for large datasets and high concurrency, Presto delivers low-latency results across distributed systems.

- Extensible Architecture: With connectors for new sources, it allows expansion into additional systems as data environments evolve.

- Lightweight Deployment: Presto’s design supports fast deployment without heavyweight dependencies, making it easier to integrate into existing infrastructures.
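A federated query joins tables that live in different systems with a single SQL statement. The sketch below imitates that idea on a very small scale, using SQLite's `ATTACH DATABASE` to join across two separate in-memory databases. It is an analogy only and does not use Presto or Trino. The table names and data are made up for the example.

```python
import sqlite3

# Two independent in-memory databases stand in for two separate source systems.
conn = sqlite3.connect(":memory:")
conn.execute("ATTACH DATABASE ':memory:' AS crm")

conn.execute("CREATE TABLE main.orders (order_id INTEGER, customer_id INTEGER, total REAL)")
conn.execute("CREATE TABLE crm.customers (customer_id INTEGER, name TEXT)")
conn.executemany(
    "INSERT INTO main.orders VALUES (?, ?, ?)",
    [(1, 10, 25.0), (2, 11, 40.0), (3, 10, 15.0)],
)
conn.executemany(
    "INSERT INTO crm.customers VALUES (?, ?)",
    [(10, "Ada"), (11, "Grace")],
)

# One SQL statement joins across both databases without copying data between them first.
rows = conn.execute(
    """
    SELECT c.name, SUM(o.total) AS revenue
    FROM main.orders AS o
    JOIN crm.customers AS c ON c.customer_id = o.customer_id
    GROUP BY c.name
    ORDER BY c.name
    """
).fetchall()
conn.close()
```

In a real federated engine the two sides of the join could be entirely different technologies, such as a relational database and a data lake, each exposed through its own connector.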

How to Choose the Right Open-Source Data Ingestion Tool?

Choosing the right open-source data ingestion tool depends on your specific needs, budget constraints, and use cases. Here are a few things that you should consider:

- Data Source Compatibility: You should make sure the tool you select integrates with all your required data sources (databases, APIs, streaming platforms, CRMs). It should also work with various data formats (JSON, CSV, Avro) and have connectors for both on-premise and cloud-based systems.

- Scalability: The tool must be able to handle your current data volume and scale as your data grows while offering consistent performance. You can also consider the tool’s underlying data ingestion architecture, such as CDC, Kappa, or Lambda.

- Real-Time or Batch Ingestion: Depending on your latency requirements, you should decide if you need real-time ingestion for immediate insights or data imports in batches for periodic updates.

- Usability: You must assess the tool's learning curve and ease of configuration. It should also provide user-friendly interfaces and detailed documentation.

- Community and Technical Support: Having an active community ensures readily available resources, updates, tutorials, and assistance for troubleshooting. You can also consider whether the vendor offers commercial support.

Advantages of Using Data Ingestion Tools

Open-source data ingestion tools offer several benefits that can significantly improve how your organization manages and utilizes data. Some of the advantages are listed below:

- Data Centralization and Flexibility: Data ingestion tools allow you to consolidate information from diverse sources into a unified repository, streamlining your data analyses and other downstream processes.

- Scalability and Performance: With data ingestion tools, you can handle massive datasets and add new sources without compromising performance. These platforms ensure that your data infrastructure can accommodate your evolving needs.

- Data Quality and Consistency: Many tools provide features to validate, clean, and transform data during ingestion. You can utilize them to maintain high data quality and consistency and gain more reliable insights.

- Real-Time Data Integration: You can use real-time data ingestion capabilities to perform immediate analysis and react to events as they occur. Applications like fraud detection and weather forecasting require fast response times.

- Improved Data Availability: When dealing with time-sensitive situations, having data in a centralized location gives you quicker access to the latest and most relevant information.

- Better Collaboration: By using data ingestion tools, you can break down silos and make data accessible to a broad range of stakeholders, including non-technical users. This enhances collaboration between teams of different departments and supports data democratization.

- Data Security: Data ingestion tools can help you protect confidential data by providing features like role-based access, encryption, and compliance with data privacy regulations.

- Cost and Time Savings: By automating the data ingestion process, you can reduce the time and resources required to collect data manually. This allows your organization to allocate those resources elsewhere, significantly lowering costs and efforts.

- No Vendor Lock-In: With open-source data ingestion tools, you have the flexibility to switch platforms without being tied to a single provider or risking data loss.

Closing Thoughts

Choosing the right open-source data ingestion tool for your organization is a crucial decision. When making this decision, you need to consider several factors, like data source compatibility, scalability, and community support. Based on your organization’s budget and project requirements, you can opt for the most suitable ingestion tool.

With open-source data ingestion tools, you can customize and integrate your data pipelines with the existing infrastructure easily. Additionally, you don’t have to rely on specific vendors and their proprietary systems to perform your tasks. The platforms ensure your teams have access to the right data at the right time, equipping them with valuable insights to achieve business objectives efficiently.

FAQs

1. What is a data ingestion tool, and why is it important?

A data ingestion tool helps collect and centralize data from multiple sources into one system for analysis or processing. It reduces manual effort, improves efficiency, and ensures that your organization has access to consistent, high-quality data for informed decision-making.

2. What are the benefits of using open-source data ingestion tools?

Open-source tools offer flexibility, scalability, and cost-effectiveness. They allow customization, avoid vendor lock-in, provide access to a wide community for support, and enable seamless integration with existing data pipelines and systems.

3. How do I choose the right data ingestion tool for my organization?

Consider your data sources, volume, real-time vs. batch requirements, scalability needs, ease of use, and community support. Tools should integrate with your existing infrastructure, handle your expected workloads, and provide features like CDC, error handling, and data validation.

4. Can open-source tools handle real-time data ingestion?

Yes. Many open-source tools, such as Apache Kafka, Apache NiFi, and Apache Storm, support real-time data ingestion. They allow organizations to process and analyze data streams as events happen, which is critical for use cases like fraud detection, monitoring, and IoT analytics.

5. Are open-source data ingestion tools secure?

Open-source tools often include built-in security features such as encryption, role-based access control, and audit logs. When properly configured, they can meet enterprise security and compliance requirements while giving you full control over your data.
