What Tools Are Best for Replacing Legacy ETL Systems in Large Organizations?

✨ AI Generated Summary

Legacy ETL systems like Informatica, Talend, and Azure Data Factory cause escalating costs, maintenance burdens, scalability issues, and vendor lock-in, limiting innovation and increasing operational complexity. Modern ETL replacements should be evaluated on deployment flexibility, security, connector coverage, cost model, scalability, developer experience, migration ease, and monitoring capabilities.

- Top alternatives include Airbyte (open-source, hybrid, capacity-based pricing), Fivetran (fully managed SaaS, volume-based pricing), Matillion (warehouse-centric ELT), Talend Cloud (data quality and governance), Informatica IDMC (enterprise-grade, costly), Hevo Data (no-code pipelines), AWS Glue (serverless AWS integration), and Apache NiFi (real-time flow-based routing).

- Choosing the right tool depends on team size, hosting preferences, data sovereignty, and cost transparency, with Airbyte favored for flexibility and cost control, and Fivetran for zero-maintenance SaaS.

- Migrations typically take 3-9 months, requiring phased approaches and attention to hidden costs like staff ramp-up and code rewrites.

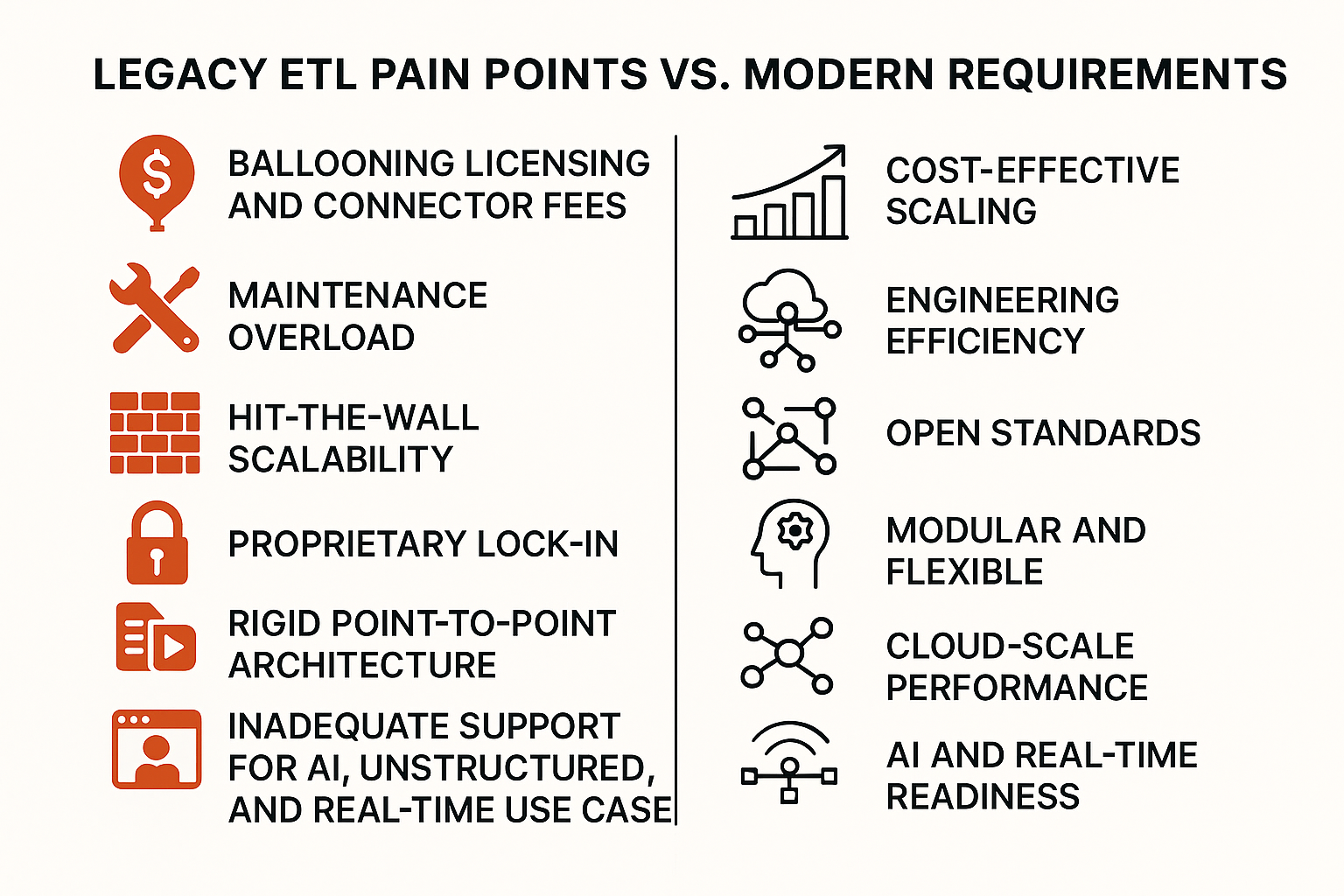

If you’re still on Informatica, Talend, or Azure Data Factory, you know the pain: rising license renewal costs, per-connector fees that punish growth, and servers that buckle under peak loads. Most of your team’s time goes into patching broken mappings instead of delivering analytics. Legacy ETL locks engineers into maintenance instead of innovation, and modern enterprises can’t afford that.

This guide shows why legacy ETL creates these problems, what to look for in replacements, and how the top alternatives perform in practice.

What Challenges Do Legacy ETL Systems Create for Large Enterprises?

Legacy stacks such as Informatica, Talend, and Azure Data Factory once powered daily data moves, but today they slow you down. Costs spiral, pipelines crack under cloud-scale workloads, and teams spend more time firefighting than innovating.

Here's where the pain shows up first:

Ballooning Licensing and Connector Fees

Per-connector pricing models turn data growth into budget nightmares. Every new integration point adds another line item to your annual contract. Moving to cloud editions can cost up to three times more than on-premises offerings.

The projected cloud savings vanish when you factor in the premium pricing tiers that enterprise features require.

Maintenance Overload That Drains Engineering Time

Data engineers report spending roughly 80% of their week on pipeline maintenance, schema fixes, and routine troubleshooting. These platforms offer little automation for monitoring or handling schema drift. Your team becomes full-time caretakers instead of builders.

Only 20% of engineering time remains for developing new capabilities that drive business value.

Hit-the-Wall Scalability for Modern Data Volumes

Traditional ETL architectures were built for nightly batch jobs on dedicated hardware, not the distributed, high-velocity datasets that modern enterprises generate. Teams hit performance walls with cloud-scale workloads.

This leads to missed SLAs and expensive over-provisioning attempts that still don't solve the underlying architectural limitations.

Proprietary Lock-In That Limits Your Options

Workflow definitions, metadata stores, and transformation logic live in closed, vendor-specific formats. Switching tools or cloud providers often becomes a lengthy migration project. Extracting and adapting your business logic from proprietary formats requires careful planning, mapping, and, in some cases, partial redevelopment.

Rigid Point-to-Point Architecture

Most traditional platforms still route data through tightly coupled, point-to-point connections instead of flexible hub-and-spoke patterns. Every new source or destination requires custom integration work. This makes your data architecture increasingly complex and brittle as you add more systems.
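To see why point-to-point wiring becomes brittle, it helps to count the integrations involved. The sketch below assumes the worst case, where every source feeds every destination, and compares it with a hub where each system connects once. The function names are illustrative and do not come from any particular tool.

```python
def point_to_point_links(sources: int, destinations: int) -> int:
    """Worst case: every source has its own integration to every destination."""
    return sources * destinations


def hub_and_spoke_links(sources: int, destinations: int) -> int:
    """Each system connects once to a central hub."""
    return sources + destinations


if __name__ == "__main__":
    for sources, destinations in [(5, 2), (20, 4), (50, 6)]:
        p2p = point_to_point_links(sources, destinations)
        hub = hub_and_spoke_links(sources, destinations)
        print(f"{sources} sources, {destinations} destinations: "
              f"{p2p} point-to-point links vs {hub} hub links")
```

Under these assumptions the point-to-point count grows with the product of sources and destinations, while the hub count grows with their sum.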

Inadequate Support for AI, Unstructured, and Real-Time Use Cases

Text documents, images, and streaming feeds that fuel modern analytics push these tools beyond their design limits. You're forced to bolt on additional services or accept delayed insights. The platforms can't handle the variety and velocity of data that AI and real-time applications demand.

What Criteria Should You Use to Evaluate ETL Replacements?

Pin down the attributes that matter most to your enterprise. This framework helps you weigh trade-offs objectively and avoid another generation of technical debt.

1. Deployment Flexibility

You need the option to run pipelines in the cloud, on-premises, or both, so you can adapt when data sovereignty laws change. Tools that support hybrid topologies let you keep sensitive workloads local while bursting to the cloud for scale, which keeps you from being locked into a single architecture.

2. Security and Governance

Role-based access control, encryption, audit logs, and documented compliance certifications aren't optional. Without these guardrails, migrations stall under governance gaps.

3. Connector Coverage and Extensibility

Pre-built connectors cut delivery time, while a software development kit (SDK) lets you build the ones that are missing. Without both, connectors become the bottleneck for every new integration, as they often are on traditional ETL platforms.

4. Cost Model and Total Cost of Ownership

Capacity-based pricing lets you grow predictably, while per-row models spike as volumes rise. Model both at your projected volumes, because cloud cost increases during a migration can be significant.
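The difference between the two pricing models is easiest to see with numbers. The following is a minimal sketch with made-up rates: the worker count, the price per worker, and the price per million rows are all hypothetical and are not taken from any vendor's price list.

```python
def per_row_monthly_cost(rows_millions: float, price_per_million: float) -> float:
    """Per-row model: cost scales linearly with rows synced in a month."""
    return rows_millions * price_per_million


def capacity_monthly_cost(workers: int, price_per_worker: float) -> float:
    """Capacity model: cost depends on provisioned compute, not rows moved."""
    return workers * price_per_worker


def breakeven_rows_millions(workers: int, price_per_worker: float,
                            price_per_million: float) -> float:
    """Monthly volume (in millions of rows) above which per-row pricing costs more."""
    return capacity_monthly_cost(workers, price_per_worker) / price_per_million


if __name__ == "__main__":
    # Hypothetical rates, for illustration only.
    WORKERS, PRICE_PER_WORKER, PRICE_PER_MILLION = 4, 1000.0, 20.0
    for volume in (50, 200, 800):
        print(f"{volume}M rows: per-row = "
              f"{per_row_monthly_cost(volume, PRICE_PER_MILLION):,.0f}, "
              f"capacity = {capacity_monthly_cost(WORKERS, PRICE_PER_WORKER):,.0f}")
```

In practice capacity also has to grow at some point, but it grows in steps that you choose rather than with every additional row.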

5. Scalability and Reliability

Horizontal scaling, parallel processing, and automated retries protect SLAs during traffic spikes. When these features are missing, outages become common.
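Automated retries are the simplest of these features to illustrate. Below is a minimal sketch of retrying a pipeline step with exponential backoff. The function name `run_with_retries` and its parameters are assumptions made for this example; real platforms implement retries internally and usually expose them as configuration.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def run_with_retries(task: Callable[[], T],
                     max_attempts: int = 4,
                     base_delay: float = 1.0,
                     sleep: Callable[[float], None] = time.sleep) -> T:
    """Run task, retrying on any exception with exponentially growing delays.

    Delays are base_delay, 2 * base_delay, 4 * base_delay, and so on.
    The last failure is re-raised once max_attempts is reached.
    """
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    attempt = 1
    while True:
        try:
            return task()
        except Exception:
            if attempt >= max_attempts:
                raise  # out of attempts: surface the error to alerting
            sleep(base_delay * 2 ** (attempt - 1))
            attempt += 1
```

The `sleep` parameter is injectable so the backoff schedule can be tested without waiting. A production version would typically retry only on errors known to be transient and add jitter to the delay.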

6. Developer Experience and Ecosystem

A clean API, strong documentation, and an active community shorten onboarding and reduce shadow scripts. Stagnant ecosystems force engineers to reinvent wheels.

7. Migration Capabilities

Native import utilities for existing mappings shrink project timelines. Tools that parse PowerCenter XML or Talend jobs directly avoid months of manual rewrites.

8. Monitoring and Error Handling

Automated lineage, alerting, and self-healing keep data teams out of firefighting mode. Platforms lacking these features trap engineers in maintenance cycles.
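One way to weigh the eight criteria above objectively is a weighted scoring matrix. The sketch below is only an illustration: the weights are hypothetical and should be replaced with your own priorities, and the 1-5 scores have to come from your own evaluation of each tool.

```python
# Hypothetical weights for the eight criteria; adjust to your priorities.
CRITERIA_WEIGHTS = {
    "deployment_flexibility": 0.15,
    "security_governance": 0.20,
    "connector_coverage": 0.15,
    "cost_model": 0.15,
    "scalability_reliability": 0.10,
    "developer_experience": 0.10,
    "migration_capabilities": 0.10,
    "monitoring_error_handling": 0.05,
}


def weighted_score(scores: dict[str, float],
                   weights: dict[str, float] = CRITERIA_WEIGHTS) -> float:
    """Weighted average of per-criterion scores (for example on a 1-5 scale)."""
    missing = set(weights) - set(scores)
    if missing:
        raise ValueError(f"missing scores for: {sorted(missing)}")
    total_weight = sum(weights.values())
    return sum(scores[name] * w for name, w in weights.items()) / total_weight


def rank_tools(candidates: dict[str, dict[str, float]]) -> list[tuple[str, float]]:
    """Return (tool, score) pairs sorted from highest to lowest score."""
    scored = [(tool, weighted_score(scores)) for tool, scores in candidates.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)
```

Requiring a score for every criterion keeps a tool from ranking well simply because an awkward criterion was left blank.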

What Tools Can Replace Legacy ETL Systems in Enterprises?

Modern data teams have plenty of options when they decide to retire Informatica, Talend, or other traditional ETL stacks. The eight tools below represent the most common choices you'll encounter when mapping a migration path.

1. Airbyte

Airbyte's open-source foundation gives you 600+ pre-built connectors (more than any competitor), while its hybrid control plane lets you keep data wherever compliance dictates. You can deploy it as Airbyte Cloud, run it self-hosted on Kubernetes, or split control and data planes for complete data sovereignty.

Pricing is capacity-based, so you pay for compute, not data volume. The OSS version remains free for teams willing to self-manage. Enterprises use Airbyte to run CDC replication across cloud and on-prem databases while avoiding the per-row fees that destroyed their traditional budgets.

2. Fivetran

Fivetran delivers a fully managed SaaS experience: you authenticate a source, pick a destination, and the service handles change data capture, schema drift, and scaling. Its catalog includes over 500 connectors, and Fivetran's engineering team decides which new SaaS sources are added and maintains them.

Costs follow Monthly Active Rows, so price grows with data volume rather than compute hours.

You'll often see Fivetran used to offload SaaS data into Snowflake or BigQuery, which is ideal when your priority is zero maintenance and the data footprint is predictable. Cost management becomes critical once row counts explode.
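To estimate how a Monthly Active Rows bill might grow, you can count active rows from your own change logs. The sketch below assumes that a row counts once per calendar month per distinct table and primary key, no matter how many times it changes in that month. Check the vendor's current pricing documentation for the exact definition; the function and the input shape here are hypothetical.

```python
from collections import defaultdict
from datetime import date
from typing import Iterable

# (table, primary_key, change_date): a hypothetical change-log record shape.
Change = tuple[str, str, date]


def monthly_active_rows(changes: Iterable[Change]) -> dict[str, int]:
    """Count distinct (table, primary_key) pairs changed in each month."""
    active: dict[str, set] = defaultdict(set)
    for table, primary_key, changed_on in changes:
        active[changed_on.strftime("%Y-%m")].add((table, primary_key))
    return {month: len(keys) for month, keys in sorted(active.items())}
```

Under this assumption, a row updated a hundred times in one month counts once, while a full reload that touches every row makes every row active.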

3. Matillion

Matillion focuses on ELT transformations executed inside cloud warehouses such as Snowflake, Redshift, and BigQuery. You spin up a dedicated VM (or cluster), design jobs in a visual canvas, and let the warehouse engine perform the heavy lifting.

Subscription pricing is tied to instance size or user seats, giving you predictable OPEX.

If your pipelines revolve around warehouse-native transformations and you prefer drag-and-drop over hand-coded scripts, Matillion offers a comfortable middle ground.

4. Talend Cloud

Talend began as an on-prem solution; its cloud edition layers governance, data quality, and lineage features onto a modern interface. You can deploy entirely in Talend's SaaS or run remote engines inside your VPC for hybrid control.

Existing Talend Studio jobs import with minimal refactoring, easing the path off older servers. Many enterprises choose Talend Cloud when they want to decommission on-prem servers but keep familiar metadata rules.

5. Informatica IDMC

Informatica's Intelligent Data Management Cloud is the spiritual successor to PowerCenter. It brings lineage, catalog, MDM, and API management under one roof, all governed by granular RBAC.

The trade-off is cost and complexity: contracts are multi-year, and implementation often mirrors the scale of an ERP roll-out. Organizations with deep PowerCenter investments often pilot IDMC modules first, then phase out on-prem nodes.

6. Hevo Data

Hevo positions itself as a no-code pipeline builder for analytics teams that lack heavy engineering support. You choose a source, pick a destination, and Hevo handles scheduling, schema mapping, and error retries.

Pricing is tiered by event volume, making costs easy to forecast for mid-sized estates. Teams often graduate from spreadsheets to Hevo when they outgrow manual CSV uploads.

7. AWS Glue

AWS Glue offers serverless Spark jobs, a built-in data catalog, and change-event ingestion via Glue Streaming, all living inside your AWS account. Because compute spins up on demand, you avoid idle infrastructure, but you still need to tune jobs for Spark performance to keep costs under control.

Glue shines when you already centralize data in S3 or Lake Formation.

8. Apache NiFi

NiFi is an Apache-licensed, flow-based engine designed for real-time data routing. You build drag-and-drop canvases that push, pull, and transform data in streaming or batch modes. Because it's open source, you can extend processors in Java or scripting languages, then deploy clusters on-prem or in the cloud.

NiFi tends to surface in manufacturing or security contexts where you need to route millions of small messages per second and can dedicate engineers to manage cluster health.

Which Tool Is Right for Your Enterprise?

Start by mapping the realities of your environment: data team size, self-hosting capacity, and data-sovereignty requirements. If you want full control over your data pipelines and the flexibility to extend them, an open-source foundation such as Airbyte’s, with 600+ connectors, gives you that freedom without per-row cost escalation.

Organizations preferring turnkey SaaS with predictable data volumes can consider Fivetran's managed model, but expect cost spikes as Monthly Active Rows grow. Matillion works for data-warehouse-focused teams comfortable managing cloud VMs, while AWS Glue excels when your entire workload already runs on AWS.

When control, flexibility, and cost transparency are your top priorities, Airbyte’s open-source core, hybrid deployment options, and capacity-based pricing let you modernize pipelines while still meeting compliance requirements.

Try Airbyte for free today.

Frequently Asked Questions

How long does it typically take to migrate from a legacy ETL system?

Expect 3-9 months for an estate with dozens of pipelines and petabytes of data. Simple projects with well-documented mappings finish faster, while heavily customized Informatica or Talend estates take longer. The biggest delays come from untangling proprietary transformations and validating data quality.

Use a phased approach: migrate low-risk pipelines first, run old and new systems in parallel, then cut over after reconciliation.
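The reconciliation step of a parallel run can be sketched as a comparison of row counts and content fingerprints between the legacy output and the new output. This is a minimal, in-memory illustration with hypothetical function names. It assumes both systems emit the same column order and value types, and a real check would usually run as aggregate queries inside the warehouse rather than in Python.

```python
import hashlib
from typing import Iterable, Sequence


def fingerprint(rows: Iterable[Sequence]) -> tuple[int, str]:
    """Return (row_count, order-independent SHA-256 digest of the rows)."""
    digests = sorted(
        hashlib.sha256(repr(tuple(row)).encode("utf-8")).hexdigest()
        for row in rows
    )
    combined = hashlib.sha256("".join(digests).encode("utf-8")).hexdigest()
    return len(digests), combined


def reconcile(legacy_rows: Iterable[Sequence],
              new_rows: Iterable[Sequence]) -> dict[str, bool]:
    """Compare the legacy and new pipeline outputs for one table."""
    legacy_count, legacy_hash = fingerprint(legacy_rows)
    new_count, new_hash = fingerprint(new_rows)
    return {
        "row_count_match": legacy_count == new_count,
        "content_match": legacy_hash == new_hash,
    }
```

Sorting the per-row digests makes the comparison insensitive to row order, which usually differs between two independently run pipelines.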

What security certifications should I look for in a modern ETL solution?

Require SOC 2 Type II and ISO 27001 at a minimum, plus HIPAA or GDPR readiness for regulated data. Essential features include encryption at rest and in transit, granular RBAC, and detailed audit logs.

For data sovereignty requirements, insist on region-locked deployments or hybrid control-plane options.

How do modern ETL tools handle schema changes compared to legacy systems?

Legacy systems break when columns are added or renamed, requiring manual fixes. Modern platforms detect metadata changes automatically, propagate updates downstream, and alert before breaking transformations execute.

Tools like Fivetran and Airbyte track column lineage and let you accept or reject changes before the next run.
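The detection step described above can be sketched as a diff between the schema recorded at the last run and the schema the source reports now. This is an illustration only, not how any specific platform implements it. Schemas are modelled here as plain dictionaries of column name to type, and the function names are hypothetical.

```python
def diff_schema(previous: dict[str, str], current: dict[str, str]) -> dict[str, list[str]]:
    """Compare two {column: type} mappings and report what changed."""
    shared = previous.keys() & current.keys()
    return {
        "added": sorted(current.keys() - previous.keys()),
        "removed": sorted(previous.keys() - current.keys()),
        "retyped": sorted(col for col in shared if previous[col] != current[col]),
    }


def is_breaking(diff: dict[str, list[str]]) -> bool:
    """Treat removed or retyped columns as breaking; new columns are additive."""
    return bool(diff["removed"] or diff["retyped"])
```

A renamed column shows up in this diff as one removal plus one addition, so it is flagged as breaking and can be reviewed before the next run.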

What are the hidden costs of ETL migration that enterprises should consider?

Beyond license savings, budget for staff ramp-up time and parallel system costs during validation. Custom code remediation often requires full rewrites of proprietary Informatica expressions or Java stages.

Factor in delayed feature rollouts and stakeholder fatigue during cutovers when calculating true migration costs.
