How to Create an LLM with HubSpot Data: A Complete Guide

✨ AI Generated Summary

You can enhance HubSpot CRM by integrating Large Language Models (LLMs) with its structured data (contacts, companies, deals, tickets) to automate customer management, predictive analytics, and real-time query resolution. Key integration steps include secure API authentication, data cleaning, property mapping, and efficient ingestion into LLM frameworks with tools like Airbyte. Advanced AI agent architectures enable multi-step reasoning, workflow orchestration, and real-time responsiveness, while robust monitoring and token optimization keep performance, cost, and compliance in check.

Running a business means relying on CRM applications to streamline customer service, marketing, and sales operations. One of the most popular CRM platforms is HubSpot, which lets you manage your business through user-friendly features at an affordable cost.

To improve your workflows further, you can build an LLM application on top of your HubSpot data. This can automate many routine customer-management tasks and free you to focus on higher-value work.

What Are the Essential Foundation Elements for HubSpot LLM Development?

HubSpot is an AI-powered customer relationship management (CRM) platform that offers software and resources for managing marketing, sales, and customer service. You can use these tools to automate business operations and increase productivity and profits. HubSpot's products are called Hubs, and there are six main ones: Marketing Hub, Sales Hub, Service Hub, Content Hub, Operations Hub, and Commerce Hub.

To further enhance the benefits of HubSpot, you can create an LLM with HubSpot data.

HubSpot Data Structure Overview

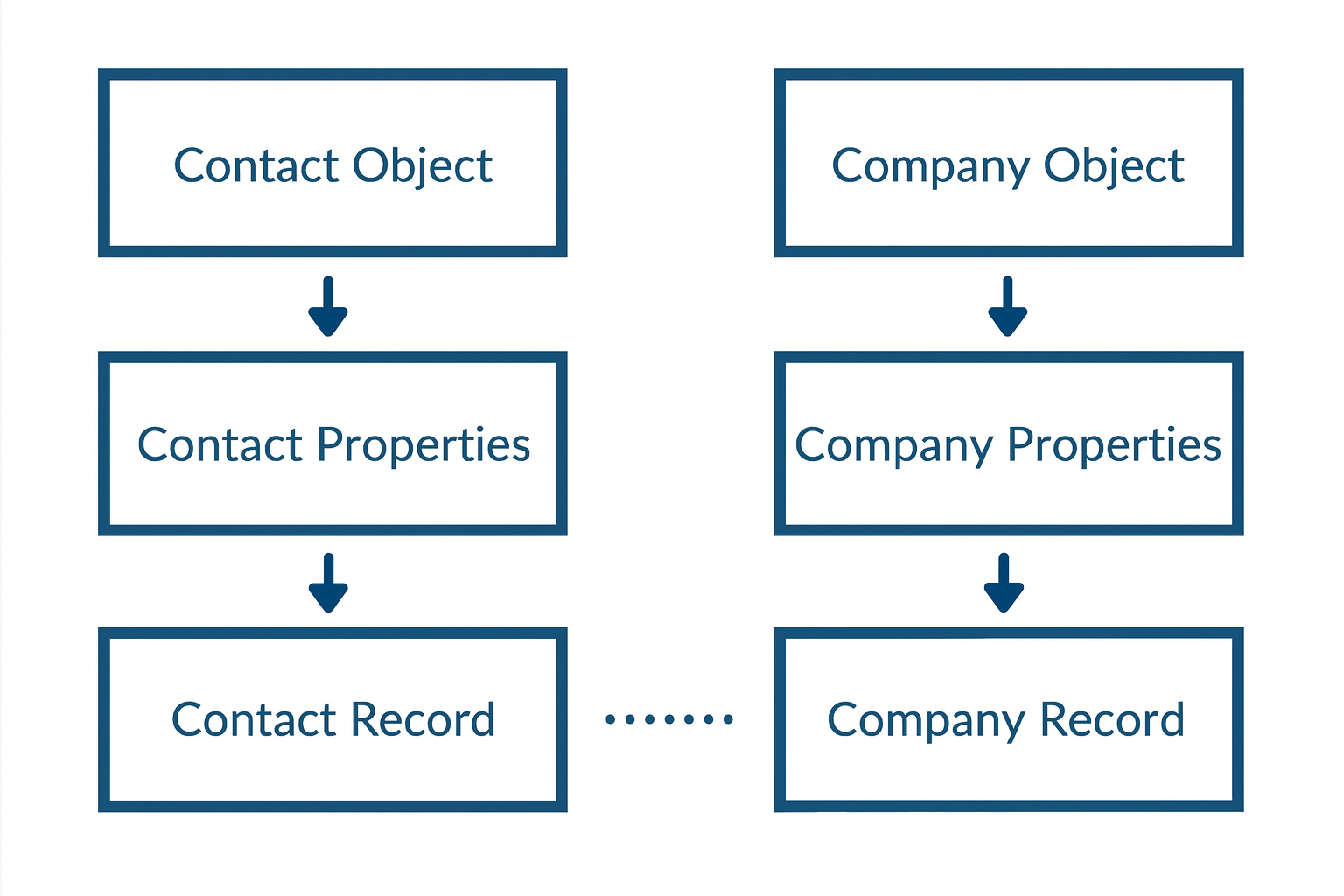

HubSpot's data structure (or data model) is a framework that enables you to organize CRM data within the platform. Its key components are:

- Objects – Every HubSpot account includes four core standard objects: contacts, companies, deals, and tickets.

- Records – A single instance of an object that stores information in properties.

- Properties – Individual fields that store information within a record. You can also create custom properties.

Example: James Smith is a contact record, and jamesmith@email.com is his email property. James's company, Paper Inc., is a company record associated with his contact record. If James interacts with your sales and support teams, you can create deals and tickets and associate them with both James and his company.

LLM Capabilities for CRM Data

An LLM trained on CRM data should provide:

- Contextual understanding – interpret customer data such as purchase history or engagement patterns.

- Predictive analytics – forecast customer behavior, such as churn risk and the likelihood that a lead converts.

- Real-time query resolution – handle customer inquiries 24/7.

Modern LLM implementations can also identify customer intent across multiple communication channels. This enables automation workflows that adapt to each customer's preferences and behavior over time.

Use Cases and Business Value

LLMs automate routine tasks, such as extracting information from emails, contracts, or notes, which reduces manual effort. They support sales by analyzing high volumes of business data, and they improve customer communication by generating tailored responses.

Advanced applications include:

- Lead scoring that considers both explicit customer attributes and implicit behavioral signals.
- Content personalization that adapts messaging to each stage of the customer journey.
- Churn analysis that identifies at-risk customers before they disengage.

Together, these capabilities shift customer management from reactive to proactive.

Privacy and Compliance Basics

- Encrypt CRM data at rest and in transit to meet regulatory requirements such as GDPR and HIPAA.

- Implement role-based access control (RBAC) to control which CRM data the LLM can expose to each user.

- Use the access controls offered by your model provider or hosting platform (e.g., for OpenAI GPT or Meta Llama deployments), or build your own authentication layer.

Enterprise implementations require additional considerations including data lineage tracking to demonstrate compliance with regulatory audit requirements, automated data retention policies that align with jurisdiction-specific requirements, and consent management systems that ensure customer data usage aligns with granted permissions throughout the LLM lifecycle.

Resource Requirements

- Data warehouses or other data-management systems.

- Computational infrastructure (GPUs/TPUs).

- LLM frameworks: LangChain, LlamaIndex, Hugging Face, etc.

- Data-integration and transformation tools.

Resource planning must also account for:

- Vector database storage.
- Model-serving infrastructure that can handle peak query loads.
- Monitoring systems that track both technical performance metrics and business outcomes.

Production workloads often need substantially more resources than development estimates suggest, so plan for headroom when scaling up.

How Do You Set Up the Development Environment for HubSpot LLM Integration?

To create an LLM with HubSpot data, set up the following.

1. HubSpot API Authentication

HubSpot supports two authentication methods:

OAuth

Use when multiple customers will use your LLM or you plan to list it on the HubSpot App Marketplace.

Private App Access Tokens

Use for internal-only applications.

To export data, make a POST request to /crm/v3/exports/export/async, specifying file format, objects, and properties.
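Below is a minimal Python sketch of that export call, using only the standard library. It builds the request but does not send it. The payload field names (exportType, format, objectType, objectProperties, and so on) reflect our reading of HubSpot's exports API and should be checked against the current reference. The helper name build_export_request is hypothetical, and the token is assumed to be a private app access token sent as a Bearer header.

```python
import json
import urllib.request

HUBSPOT_API = "https://api.hubapi.com"


def build_export_request(token, properties, object_type="CONTACT"):
    """Build (but do not send) an async export request for one CRM object type.

    Payload field names are assumptions to verify against HubSpot's exports API docs.
    """
    payload = {
        "exportType": "VIEW",             # export records of the object type
        "format": "CSV",
        "exportName": f"{object_type.lower()}-export",
        "objectType": object_type,
        "objectProperties": properties,   # only the properties you actually need
        "language": "EN",
    }
    return urllib.request.Request(
        url=f"{HUBSPOT_API}/crm/v3/exports/export/async",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {token}",  # private app access token
            "Content-Type": "application/json",
        },
        method="POST",
    )
```

Sending the request with urllib.request.urlopen(req) should start an export task. You then poll that task until the file is ready to download.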

Authentication implementation should include token refresh mechanisms for long-running applications, error handling for expired credentials, and security measures such as token rotation schedules. Production systems require robust credential management through secure vault solutions rather than environment variables.

2. Development Environment

Install required languages and libraries (PyTorch, TensorFlow, Hugging Face, relevant SDKs).

Modern development environments should also include:

- Containerization tools like Docker for consistent deployment across environments.
- Version control that can handle large model files (for example, Git LFS).
- IDEs that support debugging complex AI workflows.

Consider using HubSpot ChatGPT SDKs that provide pre-built integrations for common LLM operations.

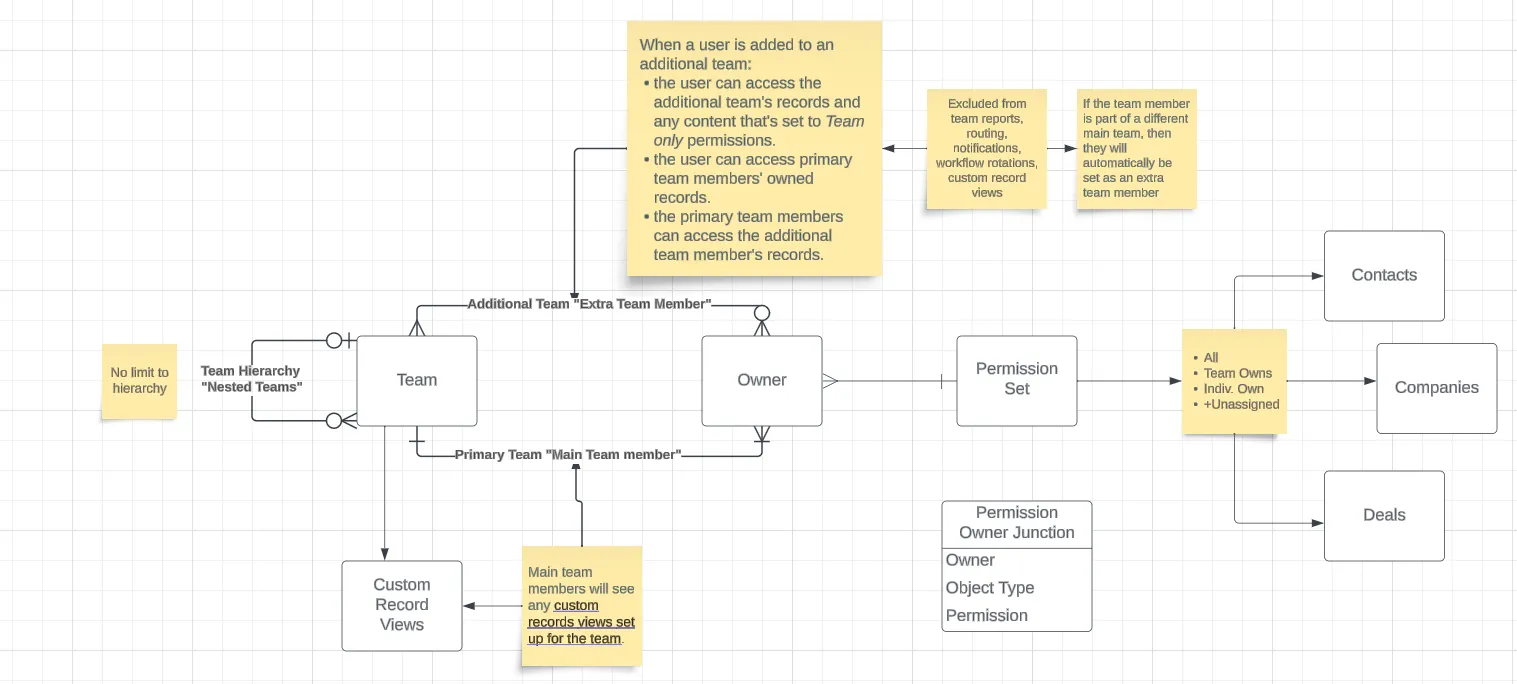

3. Required Permissions

Configure object permissions in HubSpot for contacts, companies, deals, tickets, CRM emails, and calls.

Permission configuration should follow the principle of least privilege, granting only the minimum access each LLM function requires. Granular permissions let different LLM capabilities access different data subsets. This gives you fine-grained control over data exposure and supports compliance requirements.

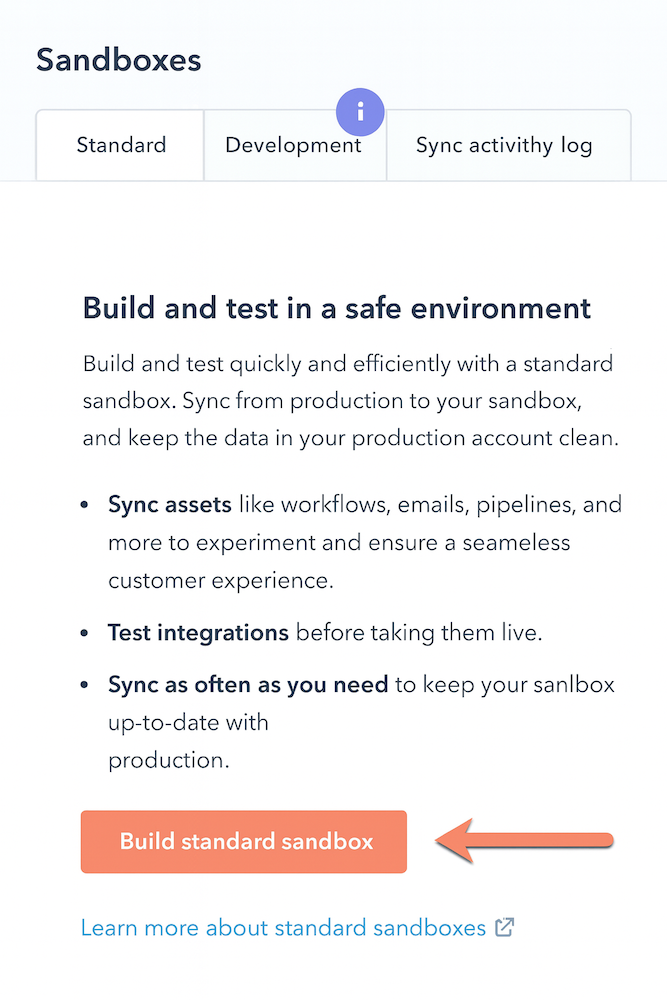

Testing Setup

Super-admins can create a standard sandbox to test integrations without affecting production data.

Testing environments should include synthetic data generation capabilities that create realistic customer scenarios for comprehensive LLM training and validation. Implement automated testing pipelines that verify LLM responses against expected outcomes, and establish A/B testing frameworks that enable safe deployment of model updates.

Security Configurations

Perform API security testing: authentication, parameter tampering, injections, fuzz testing, etc.

Security configurations must extend beyond basic API testing to include prompt injection detection, adversarial input filtering, and output validation mechanisms that prevent the LLM from exposing sensitive information inappropriately. Implement comprehensive logging and monitoring systems that track all data access and model interactions for audit purposes.

What Is the Complete HubSpot Data Architecture for LLM Applications?

Company Information

Stored as company records, which HubSpot Insights can enrich automatically with firmographic data.

Company information serves as the foundational context for LLM operations, providing essential background about customer organizations that enables more sophisticated personalization and account-based strategies. This data includes industry classifications, company size metrics, technology stack information, and behavioral patterns that inform intelligent automation decisions.

Deal Pipelines

Visualize sales processes and revenue forecasts.

Deal pipeline data provides temporal context that enables LLMs to understand customer journey progression and predict optimal intervention timing. This structured data includes stage transitions, probability assessments, and historical deal velocity metrics that support predictive analytics and automated workflow triggers.

Marketing Data

Ads, campaigns, emails, social posts, SMS.

Marketing data encompasses multi-channel engagement patterns that enable LLMs to understand customer preferences and optimize communication strategies. This includes campaign performance metrics, engagement rates across different channels, content interaction patterns, and attribution data that supports intelligent content generation and channel selection.

Service Tickets

Centralize customer inquiries.

Service ticket data provides critical context about customer pain points, resolution patterns, and satisfaction trends that enable LLMs to proactively address customer needs and improve service delivery. This data includes ticket categorization, resolution timeframes, escalation patterns, and customer feedback that supports automated response generation and service optimization.

Custom Objects

Create custom objects when standard objects are insufficient (requires an Enterprise subscription).

Custom objects enable organizations to model industry-specific relationships and processes that standard HubSpot objects cannot accommodate. These custom data structures allow LLMs to understand specialized business contexts and provide more relevant insights tailored to specific organizational needs and industry requirements.

What Are the Most Effective Data Collection Strategies for HubSpot LLM Integration?

API Endpoints Utilization

Use object-specific endpoints and pagination.
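The CRM v3 list endpoints (GET /crm/v3/objects/{objectType}) return a page of results plus a paging.next.after cursor while more pages remain. The sketch below follows that cursor until it disappears. To keep it testable, the HTTP call is injected as a hypothetical fetch_page function, which you would implement with your HTTP client and your auth header. The page size of 100 is assumed to be the endpoint's maximum.

```python
from typing import Callable, Iterator


def iter_crm_records(fetch_page: Callable[[dict], dict], properties, page_size=100) -> Iterator[dict]:
    """Yield every record from a paginated CRM v3 list endpoint.

    fetch_page receives the query parameters for one request and returns
    the decoded JSON body of the response.
    """
    params = {"limit": page_size, "properties": ",".join(properties)}
    while True:
        page = fetch_page(params)
        yield from page.get("results", [])
        # HubSpot includes paging.next.after only while more pages remain.
        next_cursor = page.get("paging", {}).get("next", {}).get("after")
        if next_cursor is None:
            return
        params = {**params, "after": next_cursor}
```

Because it is a generator, records stream to downstream cleaning steps one page at a time instead of loading the whole object into memory.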

Effective API utilization requires sophisticated strategies for handling large datasets efficiently while maintaining data quality and consistency. Implement intelligent pagination that adapts batch sizes based on API response times and available bandwidth, ensuring optimal throughput without overwhelming source systems or violating rate limits.

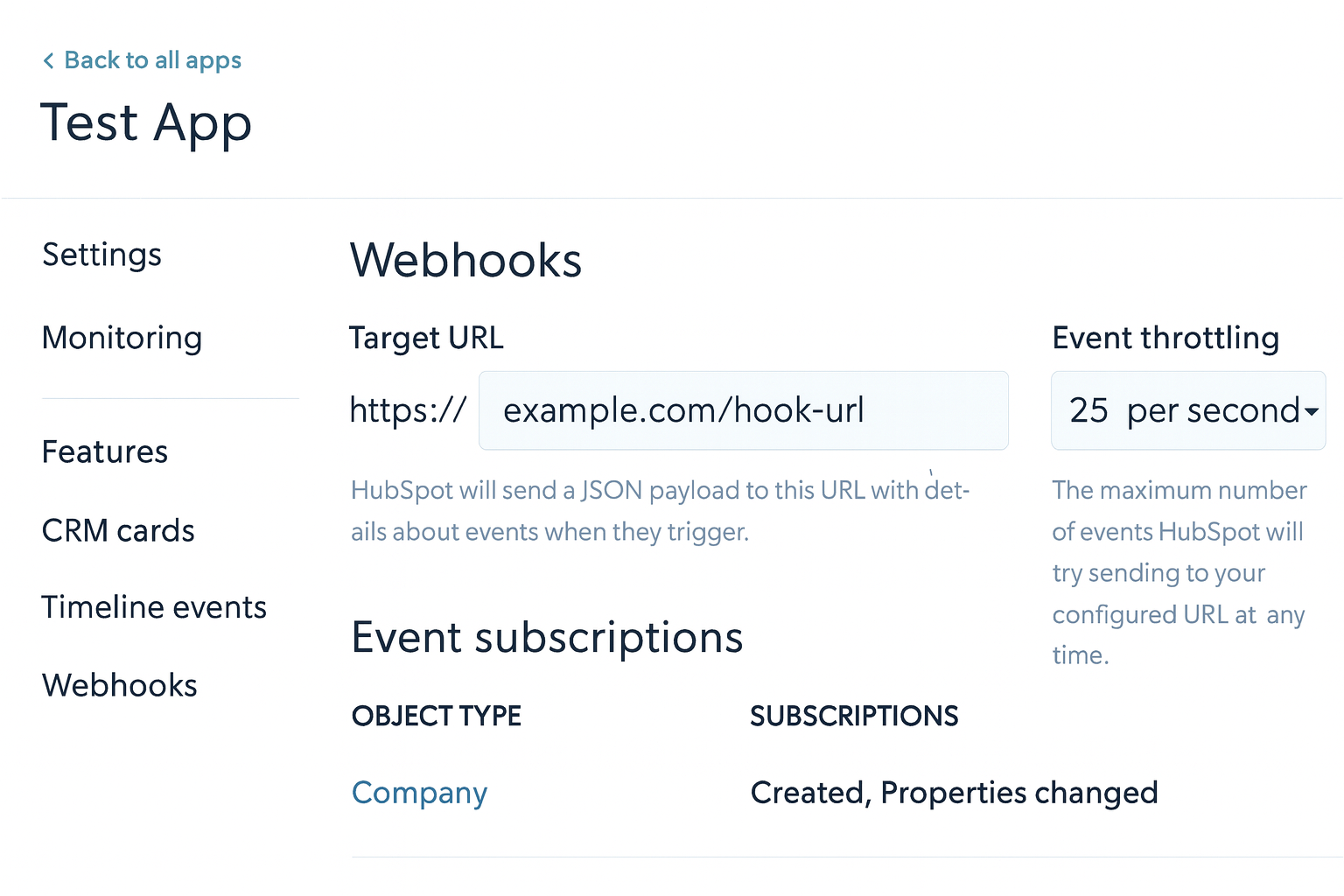

Webhook Implementation

Subscribe to HubSpot events (e.g., contact updates) so HubSpot pushes changes to your endpoint as they happen, then forward them to your target system.

Webhook implementation requires robust error handling and retry mechanisms to ensure data consistency during network interruptions or system maintenance. Design webhook processors that can handle duplicate events gracefully, implement backpressure mechanisms to prevent system overload during high-volume events, and establish monitoring systems that track webhook delivery success rates and processing latencies.
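Two safeguards can be sketched with the Python standard library: verifying the request signature, and dropping duplicate deliveries. The signature check follows our understanding of HubSpot's v3 scheme. That scheme computes an HMAC-SHA256 over method + URI + body + timestamp, keyed with the app's client secret and base64-encoded, and rejects requests older than five minutes. HubSpot's docs describe extra URI-decoding rules that this simplified version omits, so verify the details there. The deduplication key is an assumption too: a combination of event fields rather than eventId alone.

```python
import base64
import hashlib
import hmac
import time

MAX_AGE_MS = 5 * 60 * 1000  # reject requests older than five minutes


def verify_signature_v3(client_secret, method, uri, body, timestamp, signature, now_ms=None):
    """Compare a computed HMAC-SHA256 signature with the X-HubSpot-Signature-v3 header."""
    now_ms = int(time.time() * 1000) if now_ms is None else now_ms
    if now_ms - int(timestamp) > MAX_AGE_MS:
        return False  # stale request: possible replay
    source = f"{method}{uri}{body}{timestamp}".encode("utf-8")
    digest = hmac.new(client_secret.encode("utf-8"), source, hashlib.sha256).digest()
    expected = base64.b64encode(digest).decode("ascii")
    return hmac.compare_digest(expected, signature)


class EventDeduplicator:
    """Drop webhook events that were already processed (e.g., redelivered on retry)."""

    def __init__(self):
        self._seen = set()  # in production, use a shared store with expiry

    def new_events(self, events):
        fresh = []
        for event in events:
            # Assumed composite key; adjust to the fields your subscriptions send.
            key = (event.get("subscriptionId"), event.get("eventId"),
                   event.get("objectId"), event.get("occurredAt"))
            if key in self._seen:
                continue
            self._seen.add(key)
            fresh.append(event)
        return fresh
```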

Incremental Sync

Sync only changed data since the last run.

Incremental synchronization strategies must account for various change detection mechanisms including timestamp-based filtering, change logs, and API-native change detection features. Implement checkpoint management systems that track synchronization progress and enable recovery from failures without data loss or duplication.
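A timestamp-based incremental sync can be sketched with HubSpot's CRM search endpoint (POST /crm/v3/objects/contacts/search). The sketch filters on a last-modified property greater than a stored checkpoint, sorts oldest first, and advances the checkpoint from each record's updatedAt field. It assumes lastmodifieddate for contacts (other objects typically use hs_lastmodifieddate) and assumes updatedAt tracks that property. Persisting the checkpoint between runs is left to you.

```python
from datetime import datetime


def build_incremental_search(checkpoint_ms, properties, after=0):
    """Search body for contacts modified after checkpoint_ms, oldest first."""
    modified_prop = "lastmodifieddate"  # contacts; most other objects use hs_lastmodifieddate
    return {
        "filterGroups": [{
            "filters": [{"propertyName": modified_prop, "operator": "GT",
                         "value": str(checkpoint_ms)}]
        }],
        "sorts": [{"propertyName": modified_prop, "direction": "ASCENDING"}],
        "properties": properties,
        "limit": 100,
        "after": after,  # paging cursor within this sync run
    }


def advance_checkpoint(checkpoint_ms, records):
    """Return the newest updatedAt (epoch ms) seen, never moving backwards."""
    newest = checkpoint_ms
    for record in records:
        updated = datetime.fromisoformat(record["updatedAt"].replace("Z", "+00:00"))
        newest = max(newest, int(updated.timestamp() * 1000))
    return newest
```

One design caveat: a strict GT filter can skip records that share the exact checkpoint millisecond. Some pipelines use GTE instead and deduplicate the overlap.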

Batch Processing

Tools like Airbyte, which offers 600+ pre-built connectors including one for HubSpot, can batch-extract HubSpot data.

Key Airbyte features: AI-powered connector creation and schema management.

Batch processing implementations should use Airbyte's advanced features, including automated schema change detection, data quality monitoring, and processing architectures that scale to enterprise data volumes. Use incremental sync modes to minimize processing overhead while meeting data freshness requirements.

Historical Data Handling

Include past CRM interactions for better pattern recognition.

Historical data integration requires careful consideration of data relevance windows, storage optimization strategies, and processing efficiency. Implement data aging policies that prioritize recent interactions while maintaining sufficient historical context for pattern recognition, and design storage architectures that balance query performance with cost efficiency.
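One simple data aging policy is exponential recency weighting: each interaction's weight halves every half-life, and anything below a floor is archived instead of being sent to the model. The 90-day half-life and 0.05 floor below are hypothetical defaults to tune against your own data.

```python
def recency_weight(age_days, half_life_days=90.0):
    """Exponential decay: an interaction loses half its weight every half-life."""
    if age_days < 0:
        raise ValueError("age_days must be non-negative")
    return 0.5 ** (age_days / half_life_days)


def keep_interaction(age_days, half_life_days=90.0, min_weight=0.05):
    """True if the interaction is still relevant enough to include as LLM context."""
    return recency_weight(age_days, half_life_days) >= min_weight
```

With these defaults, a 30-day-old interaction keeps about 79% of its weight. A 400-day-old one falls to roughly 4.6% and would be archived.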

Rate Limit Management

Respect HubSpot API limits based on your subscription tier.

Rate limit management requires intelligent request scheduling, adaptive backoff strategies, and distributed request handling that maximizes throughput while avoiding limit violations. Implement monitoring systems that track API usage patterns and predict potential bottlenecks, enabling proactive capacity management and resource optimization.
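Adaptive backoff can be sketched as a retry wrapper. It retries on HTTP 429 and 5xx responses, waits exponentially longer (with random jitter) between attempts, and honors a Retry-After header if the response includes one. The request itself is injected as a hypothetical send callable returning (status, headers, body). The sleep function is injectable as well, so the policy can be tested without waiting.

```python
import random
import time
from typing import Callable, Tuple

Response = Tuple[int, dict, bytes]  # (status, headers, body)


def call_with_backoff(send: Callable[[], Response], max_retries=5, base_delay=1.0,
                      sleep: Callable[[float], None] = time.sleep) -> Response:
    """Retry on 429 or 5xx with exponential backoff and jitter."""
    for attempt in range(max_retries + 1):
        status, headers, body = send()
        if status != 429 and status < 500:
            return status, headers, body  # success or a non-retryable client error
        if attempt == max_retries:
            break
        retry_after = headers.get("Retry-After")
        if retry_after is not None:
            delay = float(retry_after)  # honor the server's hint when present
        else:
            delay = base_delay * (2 ** attempt) + random.uniform(0, base_delay)
        sleep(delay)
    return status, headers, body
```

The jitter spreads retries from parallel workers so that they don't all hit the API again at the same moment.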

How Do You Prepare HubSpot Data for Optimal LLM Performance?

Field Selection

Choose only relevant columns (e.g., deal stages, demographics).

Field selection requires deep understanding of both business objectives and LLM capabilities to identify data elements that provide maximum value for model training and inference. Implement feature importance analysis that quantifies the contribution of different data fields to model performance, enabling data-driven decisions about field inclusion and exclusion.

Data Cleaning

Remove duplicates, handle missing values, eliminate outliers.

Data cleaning processes must balance completeness with quality, implementing sophisticated algorithms that can identify and resolve data inconsistencies while preserving meaningful information. Design automated data quality pipelines that detect anomalies, standardize formats, and enrich data using external sources when appropriate.
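The three cleaning steps named above (duplicates, missing values, outliers) can be sketched on already-flattened records. The field names email, updated, lifecycle_stage, and total_revenue are hypothetical. The outlier rule shown is the common 1.5 × IQR fence, one option among several.

```python
from statistics import quantiles


def clean_contacts(records, numeric_field="total_revenue"):
    """Deduplicate by email, fill missing values, and drop IQR outliers."""
    # 1. Deduplicate: keep the most recently updated record per normalized email.
    latest = {}
    for rec in records:
        email = (rec.get("email") or "").strip().lower()
        if not email:
            continue  # records without an email can't be matched; skip them
        if email not in latest or rec["updated"] > latest[email]["updated"]:
            latest[email] = {**rec, "email": email}  # copy, so input is not mutated
    deduped = list(latest.values())

    # 2. Missing values: label empty categorical fields explicitly.
    for rec in deduped:
        rec["lifecycle_stage"] = rec.get("lifecycle_stage") or "unknown"

    # 3. Outliers: drop values outside 1.5 * IQR of the numeric field.
    values = [r[numeric_field] for r in deduped if r.get(numeric_field) is not None]
    if len(values) < 4:
        return deduped  # too few points for a meaningful IQR
    q1, _, q3 = quantiles(values, n=4)
    iqr = q3 - q1
    low, high = q1 - 1.5 * iqr, q3 + 1.5 * iqr
    return [r for r in deduped
            if r.get(numeric_field) is None or low <= r[numeric_field] <= high]
```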

Property Mapping

Map HubSpot property types (text, number, date, etc.) to target-system fields.

Property mapping requires comprehensive understanding of semantic relationships between different data representations and target system requirements. Implement intelligent mapping algorithms that can handle schema evolution, type conversions, and semantic transformations while maintaining data integrity and business meaning.
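The CRM v3 API returns property values as strings, so mapping usually means converting each value according to its HubSpot property type. Below is a sketch for the common types. We assume multi-select enumeration values are separated by semicolons and that dates and datetimes arrive in ISO 8601 form. The schema dictionary would come from your own property definitions.

```python
from datetime import date, datetime
from decimal import Decimal


def convert_property(value, hubspot_type):
    """Convert a HubSpot property value (received as a string) to a Python type."""
    if value is None or value == "":
        return None
    if hubspot_type == "number":
        return Decimal(value)                   # avoids float rounding on currency
    if hubspot_type == "bool":
        return value.lower() == "true"
    if hubspot_type == "date":
        return date.fromisoformat(value[:10])   # tolerate a trailing time component
    if hubspot_type == "datetime":
        return datetime.fromisoformat(value.replace("Z", "+00:00"))
    if hubspot_type == "enumeration":
        return [v for v in value.split(";") if v]  # assumed ';'-separated options
    return value  # string, phone_number, and unrecognized types stay as text


def map_record(properties, schema):
    """Convert every property listed in schema ({name: hubspot_type})."""
    return {name: convert_property(properties.get(name), ptype)
            for name, ptype in schema.items()}
```

Enumerations always become lists here, even single-select ones. This keeps the target column type stable when a property later becomes multi-select.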

Relationship Handling

Preserve associations between objects (contacts ↔ companies, deals, tickets).

Relationship preservation requires sophisticated graph-based data modeling that maintains semantic connections while optimizing for LLM consumption. Design relationship encoding strategies that capture both direct associations and implicit relationships derived from interaction patterns and behavioral data.
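One way to preserve associations for LLM consumption is to flatten a contact and its associated companies and deals into a single text block. You can embed that block or place it directly in a prompt. The sketch assumes the associated records were already fetched and flattened into simple dictionaries with hypothetical keys. It keeps record IDs in the text so that answers can cite them.

```python
def render_contact_context(contact, companies, deals):
    """Flatten a contact and its associated records into one text block."""
    lines = [f"Contact {contact['id']}: {contact['name']} <{contact['email']}>"]
    for company in companies:
        industry = company.get("industry", "unknown industry")
        lines.append(f"- Works at company {company['id']}: {company['name']} ({industry})")
    # Largest deals first, so they survive if the context is later truncated.
    for deal in sorted(deals, key=lambda d: d["amount"], reverse=True):
        lines.append(f"- Associated deal {deal['id']}: {deal['name']}, "
                     f"stage {deal['stage']}, amount {deal['amount']}")
    return "\n".join(lines)
```

Using the James Smith example from earlier:

```python
print(render_contact_context(
    {"id": "101", "name": "James Smith", "email": "jamesmith@email.com"},
    [{"id": "201", "name": "Paper Inc.", "industry": "Manufacturing"}],
    [{"id": "301", "name": "Renewal", "stage": "contractsent", "amount": 5000}],
))
```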

Timeline Events

Use custom timeline events via API to enrich data.

Timeline event integration provides temporal context that enables LLMs to understand customer journey progression and predict optimal engagement timing. Implement event correlation algorithms that identify meaningful patterns across multiple timeline events and create composite features that capture customer lifecycle stages.

Activity Logs

Transform login frequency, messages, surveys, ticket history into model-ready format.

Activity log transformation requires sophisticated feature engineering that extracts meaningful behavioral signals from raw interaction data. Design aggregation strategies that capture both frequency and recency patterns, implement behavioral clustering algorithms that identify customer segments, and create derived features that quantify engagement quality and customer satisfaction trends.
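Frequency and recency features can be derived from raw activity logs with a small aggregation. The sketch assumes each event has already been normalized into a dictionary with a type (such as "login" or "ticket") and a timestamp. Both the event shape and the 30-day window are hypothetical choices.

```python
from collections import Counter
from datetime import datetime, timedelta


def activity_features(events, now, window_days=30):
    """Summarize events [{"type": str, "at": datetime}] for one customer."""
    window_start = now - timedelta(days=window_days)
    recent = [e for e in events if e["at"] >= window_start]
    counts = Counter(e["type"] for e in recent)
    last_seen = max((e["at"] for e in events), default=None)
    return {
        "events_last_window": len(recent),            # frequency
        "logins_last_window": counts.get("login", 0),
        "tickets_last_window": counts.get("ticket", 0),
        "days_since_last_activity": None if last_seen is None else (now - last_seen).days,  # recency
    }
```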

What Is the Complete LLM Integration Framework Using Airbyte?

Airbyte can pipe data into LLM frameworks like LangChain or LlamaIndex and into vector databases (Pinecone, Weaviate, Milvus, Qdrant).

Model Selection

Define objectives, then pick models (GPT-4, Llama, BERT) that fit size, data, and compute constraints.

Model selection requires comprehensive evaluation of performance characteristics, cost implications, and deployment constraints across different organizational contexts. Implement model comparison frameworks that assess accuracy, latency, throughput, and resource requirements using representative workloads and data samples.

Context Engineering

Embed clear context so the LLM generates accurate answers.

Context engineering involves sophisticated prompt design strategies that provide optimal information density while maintaining clarity and relevance. Design context templates that adapt based on query types, user roles, and available data, ensuring that LLMs receive sufficient context without overwhelming token limits or processing capabilities.

Prompt Design

Choose zero-shot, one-shot, few-shot, or chain-of-thought prompts.

Prompt design requires systematic evaluation of different prompting strategies across various use cases and model architectures. Implement prompt optimization frameworks that can automatically test and refine prompt templates based on performance metrics, user feedback, and business outcomes.
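Below is a sketch of a few-shot prompt grounded in CRM context. It assumes a chat-style API that accepts a list of role/content messages, as OpenAI-style chat endpoints do. Passing an empty example list yields a zero-shot prompt, which makes it easy to compare strategies. The system wording and the role parameter are illustrative.

```python
def build_messages(question, crm_context, examples, role="sales rep"):
    """Assemble a chat prompt: system rules, (question, answer) examples, then the real query."""
    system = (
        f"You are an assistant for a {role}. Answer only from the CRM context "
        "provided. If the context does not contain the answer, say so."
    )
    messages = [{"role": "system", "content": system}]
    for example_q, example_a in examples:  # few-shot demonstrations
        messages.append({"role": "user", "content": example_q})
        messages.append({"role": "assistant", "content": example_a})
    messages.append({
        "role": "user",
        "content": f"CRM context:\n{crm_context}\n\nQuestion: {question}",
    })
    return messages
```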

Response Templates

Standardize output format for consistency.

Response template design must balance consistency with flexibility, enabling standardized outputs while accommodating diverse query types and business contexts. Implement template engines that can dynamically adapt response structures based on available data, user preferences, and integration requirements with downstream systems.
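A common way to standardize output is to ask the model for a JSON object and then validate it before it reaches downstream systems. The required fields below (summary, next_step, confidence) are a hypothetical template. The function returns the errors rather than raising, so the caller can decide whether to re-prompt or fall back.

```python
import json

# Hypothetical response template: field name -> accepted Python type(s).
REQUIRED_FIELDS = {"summary": str, "next_step": str, "confidence": (int, float)}


def parse_response(raw):
    """Parse a model reply expected to be a JSON object matching REQUIRED_FIELDS.

    Returns (data, errors); data is None when the reply is unusable.
    """
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        return None, [f"invalid JSON: {exc.msg}"]
    if not isinstance(data, dict):
        return None, ["top-level value must be an object"]
    errors = []
    for field, expected in REQUIRED_FIELDS.items():
        if field not in data:
            errors.append(f"missing field: {field}")
        elif not isinstance(data[field], expected):
            errors.append(f"wrong type for field: {field}")
    return (None if errors else data), errors
```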

Token Optimization

Minimize tokens to cut latency and cost.

Token optimization requires sophisticated compression strategies that maintain semantic meaning while reducing computational overhead. Implement intelligent summarization algorithms, context pruning techniques, and caching mechanisms that minimize token usage without compromising response quality or accuracy.
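Context pruning can be sketched as a greedy selection. It keeps the highest-relevance snippets that fit within a token budget and preserves their original order. Token counts here use the rough "about four characters per token" rule of thumb for English text, which is an approximation only. A real deployment would use the model's own tokenizer, and the relevance scores are assumed to come from your retrieval step.

```python
def approx_tokens(text):
    """Rough token estimate (~4 characters per token for English); use a real tokenizer in production."""
    return max(1, len(text) // 4)


def prune_context(snippets, budget_tokens):
    """Keep the highest-scoring (score, text) snippets that fit the budget, in original order."""
    ranked = sorted(range(len(snippets)), key=lambda i: snippets[i][0], reverse=True)
    chosen, used = set(), 0
    for i in ranked:
        cost = approx_tokens(snippets[i][1])
        if used + cost <= budget_tokens:
            chosen.add(i)
            used += cost
    return [snippets[i][1] for i in sorted(chosen)]
```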

Error Handling

Use clean data, correct prompting, and robust feedback loops to reduce hallucinations.

Error handling systems must detect and mitigate various types of LLM failures including hallucinations, inappropriate responses, and processing errors. Design comprehensive validation frameworks that verify response accuracy, implement feedback loops that enable continuous improvement, and establish fallback mechanisms that ensure system reliability.
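One lightweight hallucination check is to reject answers that cite CRM records absent from the context the model was given. The sketch assumes the prompt instructed the model to cite records as [id:123]. This citation convention and the fallback message are hypothetical, and the check catches only one class of error.

```python
import re


def ungrounded_ids(answer, context_ids):
    """Record IDs cited as [id:NNN] in the answer that were not in the context."""
    cited = set(re.findall(r"\[id:(\d+)\]", answer))
    return cited - set(context_ids)


def answer_or_fallback(answer, context_ids,
                       fallback="I couldn't verify that against your CRM data; routing to a teammate."):
    """Pass the answer through only if every cited record was actually provided."""
    return fallback if ungrounded_ids(answer, context_ids) else answer
```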

What Advanced AI Agent Architectures Can Transform HubSpot Workflow Automation?

Modern HubSpot integrations benefit from sophisticated AI agent architectures that extend beyond simple query-response patterns to enable complex reasoning, multi-step task execution, and autonomous decision-making within CRM environments. These advanced architectures combine multiple AI technologies to create intelligent systems capable of managing complex business processes with minimal human intervention.

Intelligent Agent Planning and Reasoning Systems

Advanced AI agents implement sophisticated planning algorithms that can decompose complex business objectives into actionable steps, evaluate multiple solution pathways, and adapt their approach based on changing conditions and feedback. These systems utilize hierarchical task networks that understand business process dependencies, enabling agents to coordinate multiple activities across different HubSpot modules while maintaining consistency and avoiding conflicts.

Goal-oriented behavior systems enable agents to understand business outcomes rather than just executing predefined tasks. When tasked with improving customer retention, these agents can autonomously identify at-risk customers, analyze their interaction patterns, determine optimal intervention strategies, and execute coordinated outreach campaigns across email, social media, and direct sales channels.

Multi-step reasoning capabilities allow agents to maintain context across extended business processes, understanding how actions in one domain affect outcomes in another. For example, an agent managing lead qualification can consider not only demographic and behavioral data but also sales team capacity, seasonal trends, and competitive market conditions when making prioritization decisions.

Collaborative Agent Networks and Workflow Orchestration

Sophisticated HubSpot implementations utilize collaborative agent networks where specialized agents focus on specific business domains while sharing information and coordinating activities through intelligent communication protocols. Marketing automation agents collaborate with sales intelligence agents to ensure consistent messaging and optimal handoff timing, while customer service agents share insights with product development agents to inform feature prioritization and roadmap planning.

Agent orchestration systems manage complex workflows that span multiple business functions, ensuring that activities are properly sequenced, dependencies are respected, and conflicts are resolved automatically. These systems can automatically adjust workflow execution based on resource availability, priority changes, and unexpected events, maintaining business continuity while optimizing for multiple objectives simultaneously.

Dynamic task allocation mechanisms enable agent networks to adapt their division of labor based on workload patterns, individual agent capabilities, and business priorities. During high-volume periods, generalist agents can provide additional capacity for specific functions, while specialized agents focus on complex cases that require domain expertise.

Integration with HubSpot ChatGPT SDKs and Advanced APIs

Modern agent architectures leverage HubSpot ChatGPT SDKs to create seamless integrations between conversational AI capabilities and CRM functionality. These integrations enable agents to understand natural language requests from business users, translate them into appropriate HubSpot operations, and provide conversational feedback about results and recommendations.

Advanced API integration patterns enable agents to perform complex operations that span multiple HubSpot objects and external systems. Agents can create sophisticated customer journey workflows that automatically trigger based on behavioral patterns, update multiple related records simultaneously, and integrate with external data sources to enrich customer profiles and improve decision-making accuracy.

Real-time processing capabilities ensure that agents can respond immediately to changing business conditions, customer interactions, and market events. This responsiveness enables proactive customer service, dynamic pricing strategies, and adaptive marketing campaigns that optimize performance based on current conditions rather than historical patterns.

How Do You Implement Performance Monitoring and Cost Optimization Strategies?

Effective LLM deployment requires comprehensive monitoring frameworks that track both technical performance metrics and business outcomes while implementing intelligent cost optimization strategies that maintain quality while minimizing operational expenses. These systems must provide real-time visibility into model behavior, usage patterns, and cost drivers to enable proactive management and continuous optimization.

Comprehensive Model Performance Monitoring

Model performance monitoring extends beyond simple accuracy metrics to encompass response quality, relevance, factual correctness, and alignment with business objectives. Implement automated evaluation systems that continuously assess model outputs against expected standards, using both rule-based validation and machine learning approaches to detect degradation, bias, and inappropriate responses.

Real-time monitoring dashboards provide stakeholders with immediate visibility into system performance, usage patterns, and potential issues. These dashboards track key performance indicators including response latency, query throughput, error rates, user satisfaction scores, and business impact metrics, enabling rapid identification and resolution of performance issues.

Advanced monitoring systems implement anomaly detection algorithms that can identify unusual patterns in model behavior, usage spikes, or performance degradation before they significantly impact business operations. These systems automatically alert relevant stakeholders and can trigger automated mitigation procedures such as scaling resources, switching to backup models, or implementing rate limiting to maintain system stability.
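As a simple illustration of anomaly detection, here is a rolling z-score check on response latency. It flags any observation more than a threshold number of standard deviations above the mean of a recent window. The window size, threshold, minimum sample count, and 1 ms standard-deviation floor are hypothetical defaults. Production systems may prefer more robust statistics.

```python
from collections import deque
from statistics import mean, pstdev


class LatencyMonitor:
    """Flag latencies far above the rolling mean of recent observations."""

    def __init__(self, window=100, threshold=3.0, min_samples=10):
        self.samples = deque(maxlen=window)  # oldest samples drop off automatically
        self.threshold = threshold
        self.min_samples = min_samples

    def observe(self, latency_ms):
        """Record a latency; return True if it looks anomalous."""
        anomalous = False
        if len(self.samples) >= self.min_samples:
            mu = mean(self.samples)
            # The 1 ms floor avoids division by zero on a perfectly flat baseline.
            sigma = max(pstdev(self.samples), 1.0)
            anomalous = (latency_ms - mu) / sigma > self.threshold
        self.samples.append(latency_ms)
        return anomalous
```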

Intelligent Cost Management and Token Optimization

Token optimization strategies focus on minimizing computational costs while maintaining response quality through intelligent prompt engineering, context management, and caching mechanisms. Implement dynamic context pruning algorithms that remove irrelevant information from prompts while preserving essential context, reducing token consumption without compromising accuracy.

Predictive cost modeling systems analyze usage patterns, forecast resource requirements, and identify opportunities for cost optimization across different deployment scenarios. These systems can automatically adjust resource allocation based on demand patterns, implement usage-based scaling, and recommend configuration changes that optimize cost-performance ratios.

Intelligent caching frameworks store frequently requested information and common query patterns to reduce redundant processing and minimize token usage. These systems implement sophisticated cache invalidation strategies that ensure data freshness while maximizing cache hit rates, significantly reducing operational costs for high-volume applications.
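Below is a minimal caching sketch that combines both freshness mechanisms mentioned above. Each cached answer expires after a time-to-live, and every entry tied to a CRM record is dropped when that record changes (for example, when a webhook reports an update). Keying on (record_id, question) and the one-hour TTL are assumptions, and the injectable clock exists only to make the sketch testable.

```python
import time


class TTLCache:
    """Cache LLM answers per (record_id, question) with expiry and per-record invalidation."""

    def __init__(self, ttl_seconds=3600, clock=time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock
        self._entries = {}  # (record_id, question) -> (expires_at, answer)

    def get(self, record_id, question):
        key = (record_id, question)
        entry = self._entries.get(key)
        if entry is None:
            return None
        expires_at, answer = entry
        if self.clock() >= expires_at:
            del self._entries[key]  # expired: force a fresh model call
            return None
        return answer

    def put(self, record_id, question, answer):
        self._entries[(record_id, question)] = (self.clock() + self.ttl, answer)

    def invalidate_record(self, record_id):
        """Call this from the webhook handler when a record is updated."""
        for key in [k for k in self._entries if k[0] == record_id]:
            del self._entries[key]
```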

Scalability Architecture and Resource Management

Scalable deployment architectures implement auto-scaling mechanisms that adapt resource allocation based on demand patterns, ensuring consistent performance during peak usage while minimizing costs during low-activity periods. These systems monitor queue lengths, response times, and resource utilization to make intelligent scaling decisions that balance performance and cost objectives.

Load balancing strategies distribute query loads across multiple model instances and deployment regions to optimize both performance and cost efficiency. Advanced load balancers consider factors such as model capability requirements, geographic proximity, resource costs, and capacity constraints when routing requests, ensuring optimal resource utilization across the entire system.

Resource optimization frameworks continuously analyze usage patterns and recommend configuration changes that improve efficiency and reduce costs. These systems identify underutilized resources, suggest right-sizing opportunities, and recommend deployment configurations that align resource allocation with actual business requirements and usage patterns.

What Core Features Should Your HubSpot LLM Application Include?

An LLM trained on HubSpot data should support:

- Deal Intelligence – insights to close deals.

- Email Content Generation – personalized marketing emails.

- Meeting Summaries – automate note-taking.

- Customer Insights – analyze behavior and purchasing habits.

- Engagement Scoring – measure and improve customer engagement.

Advanced core features should also include predictive churn analysis that identifies at-risk customers before they disengage, intelligent lead prioritization that considers both explicit criteria and behavioral signals, automated content personalization that adapts messaging based on customer journey stages, and dynamic pricing recommendations that optimize for both competitiveness and profitability.

Sophisticated implementations incorporate cross-channel orchestration capabilities that coordinate marketing activities across email, social media, direct sales, and customer service channels to provide consistent customer experiences. These systems understand channel preferences, timing optimization, and message frequency management to maximize engagement while avoiding oversaturation.

What Are the Most Impactful Business Applications for HubSpot LLMs?

Lead Qualification

Analyze engagement scores and lifecycle stages to prioritize leads.

Advanced lead qualification systems implement sophisticated scoring algorithms that combine explicit demographic and firmographic criteria with behavioral signals, engagement patterns, and predictive indicators. These systems continuously learn from conversion outcomes to refine their qualification criteria and adapt to changing market conditions and customer preferences.
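Below is an illustrative scoring function that combines an explicit signal (the HubSpot lifecycle stage) with behavioral ones (an engagement score and days since last activity) into a 0-100 score. The stage keys are HubSpot's default internal lifecycle stage values. The point weights, the assumed 0-100 engagement scale, and the recency decay are all hypothetical and should be tuned against your own conversion outcomes.

```python
# Hypothetical weights; tune them against your own conversion data.
STAGE_POINTS = {
    "subscriber": 5,
    "lead": 10,
    "marketingqualifiedlead": 25,
    "salesqualifiedlead": 40,
    "opportunity": 50,
}


def lead_score(lifecycle_stage, engagement_score, days_since_last_activity):
    """Combine explicit (stage) and behavioral (engagement, recency) signals into 0-100."""
    stage_points = STAGE_POINTS.get(lifecycle_stage, 0)              # up to 50
    engagement_points = min(max(engagement_score, 0), 100) * 0.4     # up to 40
    recency_points = max(0, 10 - days_since_last_activity // 3)      # up to 10, gone after ~30 days
    return min(100, round(stage_points + engagement_points + recency_points))


def prioritize(leads):
    """Sort lead dicts (stage, engagement, days_idle) by score, highest first."""
    return sorted(leads, key=lambda l: lead_score(l["stage"], l["engagement"], l["days_idle"]),
                  reverse=True)
```

Stages like "customer" score zero on purpose here, because they are no longer leads to qualify.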

Customer Service

Generate automatic responses to tickets; escalate complex issues to humans.

Intelligent customer service applications implement multi-tier response strategies that handle routine inquiries automatically while routing complex issues to appropriate human agents. These systems maintain context across multiple interactions, understand escalation patterns, and can proactively identify customers who may require additional attention based on their interaction history and satisfaction indicators.

Advanced customer service implementations include sentiment analysis capabilities that monitor customer emotions throughout interactions, enabling proactive intervention when frustration levels increase. These systems can automatically adjust response strategies, escalate to senior agents, or trigger retention workflows based on detected emotional states and satisfaction trends.

How Do You Deploy and Maintain HubSpot LLM Applications in Production?

Environment Staging

Provision software, vector stores, memory, and storage.

Production environment staging requires comprehensive infrastructure planning that accounts for computational requirements, data storage needs, network bandwidth requirements, and security constraints. Implement staging environments that accurately replicate production conditions, enabling thorough testing of performance characteristics, scalability limits, and failure scenarios before deployment.

Version Control

Track changes to code, data, and model weights.

Version control systems for LLM applications must handle both traditional code artifacts and large model files, training data, and configuration changes. Implement specialized version control workflows that can efficiently manage model versioning, track data lineage, and enable rollback procedures that maintain system integrity during updates and deployments.

CI/CD Pipeline

Automate testing and delivery of new model versions.

Continuous integration and deployment pipelines for LLM applications require specialized testing frameworks that validate model performance, accuracy, and safety before deployment. These pipelines implement automated testing procedures that verify response quality, check for bias and inappropriate content, and validate integration compatibility with existing systems and workflows.

Monitoring Setup

Track answer correctness, relevance, and hallucinations; gather user feedback.

Comprehensive monitoring systems track multiple dimensions of model performance including technical metrics such as latency and throughput, quality metrics such as accuracy and relevance, and business metrics such as user satisfaction and conversion rates. These systems implement automated alerting mechanisms that notify stakeholders when performance degrades or unusual patterns are detected.

Backup Procedures

Maintain raw/processed data and model checkpoints for rollback.

Backup and disaster recovery procedures must account for the complexity of LLM applications including trained models, training data, configuration settings, and integration state. Implement comprehensive backup strategies that enable rapid recovery from various failure scenarios while maintaining data consistency and minimizing business disruption.

Recovery Planning

Document and regularly test disaster-recovery workflows.

Disaster recovery planning requires detailed procedures for handling various failure scenarios including model corruption, data loss, infrastructure failures, and security breaches. Regular testing of recovery procedures ensures that backup systems function correctly and that recovery processes can be executed efficiently under stress conditions.

Conclusion

If you use HubSpot as your CRM, building an LLM on top of your HubSpot data can further improve performance and revenue. This guide covered each step, from authentication and data extraction to model deployment, so you can build an LLM that automates and optimizes your business operations.

The integration of sophisticated AI agents, comprehensive monitoring frameworks, and advanced optimization strategies transforms basic HubSpot integrations into intelligent business platforms that drive competitive advantage through automated decision-making, proactive customer management, and continuous optimization of business processes.

As AI technology continues to evolve, organizations that implement these comprehensive integration strategies will be best positioned to leverage emerging capabilities while maintaining operational excellence and customer satisfaction.

Frequently Asked Questions (FAQs)

Do I need technical expertise to build an LLM with HubSpot data?

Not necessarily. While advanced implementations may require data engineering and machine learning knowledge, many integrations can be set up using pre-built frameworks like LangChain or LlamaIndex combined with HubSpot APIs. Partnering with a developer or using managed AI services can also simplify the process.

How secure is it to connect HubSpot data with an LLM?

It can be, if you follow best practices: use encrypted connections, implement role-based access control (RBAC), and manage authentication tokens through secure vaults. If you handle sensitive data, you also need to meet regulations such as GDPR and HIPAA.

What business outcomes can I expect from integrating LLMs with HubSpot?

The most common benefits include faster customer query resolution, better lead qualification, personalized marketing campaigns, and predictive insights for churn prevention. Over time, these can reduce manual effort, improve customer satisfaction, and increase revenue.

Can small businesses benefit from HubSpot LLMs, or is it only for enterprises?

Small and medium-sized businesses can gain significant value too. By automating routine tasks like email drafting, ticket handling, and lead scoring, LLMs free smaller teams to focus on growth and customer relationships without enterprise-level resources.
