What is Data Fabric: Architecture, Examples, & Purpose

✨ AI Generated Summary

Data fabric is a network-based architecture that integrates data across on-premises, cloud, and hybrid environments using AI, ML, and automation to ensure consistent, secure, and real-time data availability. It breaks down data silos, improves data quality and governance, and accelerates decision-making by providing a unified, scalable platform.

- Key benefits include reduced integration and deployment time, democratized data access, enhanced data governance, and real-time insights.

- Five pillars: augmented data catalog & lineage, enriched knowledge graph, streamlined data preparation, workflow orchestration, and continuous observability.

- Successful implementation involves stakeholder engagement, infrastructure assessment, strong governance, pilot projects, and ongoing monitoring.

- Common use cases span manufacturing, financial services, and retail for operational efficiency, fraud detection, and personalized marketing.

- Leading vendors include SAP, IBM, and Informatica; Airbyte supports the integration layer with 600+ connectors, open-source flexibility, and incremental syncs.

Data fabric is an innovative network-based architectural framework that facilitates end-to-end data integration across on-premises, cloud, and hybrid environments. By leveraging AI, machine learning (ML), and advanced automation, a data fabric ensures that data is consistently available, accurate, and secure across all platforms.

New technologies like Artificial Intelligence (AI) and the Internet of Things (IoT) have dramatically increased the volume of data that organizations generate and consume. Yet this data is often scattered across siloed systems and cloud environments, complicating data management and governance.

The result is heightened security risks, data inaccessibility, and inefficient decision-making. Many organizations are turning to data fabric solutions to tackle these challenges. A data fabric simplifies and optimizes data integration, offering a unified and comprehensive view of an organization's information assets.

What Are the Primary Benefits of Data Fabric?

According to Gartner, implementing a data fabric can reduce integration-design time by 30%, deployment time by 30%, and maintenance effort by 70%.
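To make these percentages concrete, the short Python sketch below applies them to a baseline project. The baseline hours are invented purely for illustration and are not Gartner data; only the three percentages come from the claim above.

```python
# Reductions cited from Gartner above, expressed as fractions of baseline effort.
REDUCTIONS = {"integration_design": 0.30, "deployment": 0.30, "maintenance": 0.70}

# Hypothetical baseline effort in hours (illustrative numbers, not from Gartner).
baseline_hours = {"integration_design": 400, "deployment": 200, "maintenance": 1000}

def apply_reductions(baseline: dict[str, float], reductions: dict[str, float]) -> dict[str, float]:
    """Return the remaining effort per activity after applying each reduction."""
    return {task: hours * (1 - reductions[task]) for task, hours in baseline.items()}

remaining = apply_reductions(baseline_hours, REDUCTIONS)
for task, hours in remaining.items():
    print(f"{task}: {baseline_hours[task]} h -> {hours:.0f} h")
```

With these assumed baselines, the remaining effort works out to 280, 140, and 300 hours respectively.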

Democratizing Data Access

A data fabric breaks down silos, making trusted data readily available and fostering self-service data discovery. Organizations no longer need to rely on centralized IT teams for routine data requests.

Enhanced Data Quality and Governance

By leveraging active metadata, a data fabric improves data quality, consistency, and compliance with regulatory requirements. This ensures that business users can trust the data they access across all systems.

Real-Time Insights through Automation

Automated data-engineering tasks streamline integration, enabling real-time, data-driven decisions. This automation reduces manual intervention and accelerates time-to-insight for critical business processes.

Scalability for Any Environment

Automated workflows help data pipelines absorb growing data volumes and adapt quickly to new demands. The architecture is designed to scale from gigabytes to petabytes without a fundamental redesign.

What Is the Purpose of Data Fabric?

Data fabric resolves data fragmentation by creating seamless connectivity and a holistic data environment. This unified approach is crucial for accelerating digital transformation initiatives, supporting innovation, product development, and enhanced customer experiences.

Real-time data processing becomes possible, which is essential for fraud detection and operational monitoring. Data integrity and availability are maintained for business continuity, ensuring that critical systems remain operational even during high-demand periods.

The overarching purpose is to create an intelligent data infrastructure that adapts to changing business needs while maintaining security and governance standards across all environments.

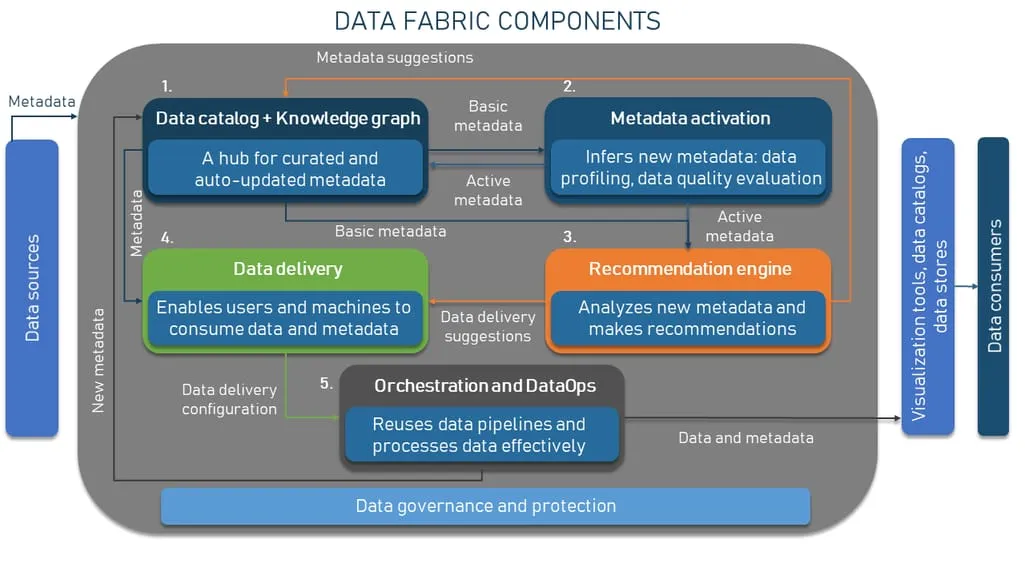

What Are the 5 Key Pillars of Data-Fabric Architecture?

A data-fabric architecture provides a unified approach to managing data across diverse environments, giving data teams a dynamic, self-service platform.

1. Augmented Data Catalog & Lineage

AI-powered discovery automatically catalogs, classifies, and tags data assets, making them easy to find while exposing lineage so teams can trust and collaborate around reliable data. This automated approach reduces the manual effort required for data cataloging while ensuring comprehensive coverage across all data sources.
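As a rough illustration of automated tagging and lineage, the sketch below classifies columns with simple name-based rules and walks upstream dependencies. Real catalogs typically use ML classifiers and profile the data itself; the regex rules, the `CatalogEntry` class, and the dataset names here are hypothetical stand-ins.

```python
import re
from dataclasses import dataclass, field

# Hypothetical classification rules standing in for an ML classifier:
# a column whose name matches the pattern receives the tag.
TAG_RULES = {
    "pii.email": re.compile(r"e[-_]?mail", re.IGNORECASE),
    "pii.phone": re.compile(r"phone|mobile", re.IGNORECASE),
    "finance.amount": re.compile(r"amount|price|total", re.IGNORECASE),
}

@dataclass
class CatalogEntry:
    name: str
    columns: list[str]
    upstream: list[str] = field(default_factory=list)        # direct lineage parents
    tags: dict[str, set[str]] = field(default_factory=dict)  # column -> tags

def classify(entry: CatalogEntry) -> None:
    """Tag each column whose name matches at least one rule."""
    for col in entry.columns:
        matched = {tag for tag, pattern in TAG_RULES.items() if pattern.search(col)}
        if matched:
            entry.tags[col] = matched

def lineage(catalog: dict[str, CatalogEntry], name: str) -> set[str]:
    """Return every transitive upstream dataset of `name`."""
    seen: set[str] = set()
    stack = list(catalog[name].upstream)
    while stack:
        parent = stack.pop()
        if parent not in seen:
            seen.add(parent)
            stack.extend(catalog[parent].upstream if parent in catalog else [])
    return seen

catalog = {
    "crm.contacts": CatalogEntry("crm.contacts", ["id", "email", "phone_number"]),
    "shop.orders": CatalogEntry("shop.orders", ["order_id", "contact_id", "total_amount"]),
    "mart.customer_value": CatalogEntry(
        "mart.customer_value", ["contact_id", "email", "lifetime_total"],
        upstream=["crm.contacts", "shop.orders"],
    ),
}
for entry in catalog.values():
    classify(entry)

print(catalog["mart.customer_value"].tags)  # {'email': {'pii.email'}, 'lifetime_total': {'finance.amount'}}
print(lineage(catalog, "mart.customer_value"))
```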

2. Enriched Knowledge Graph with Semantics

Beyond technical metadata, a data fabric captures business context and relationships. Knowledge graphs visualize these connections and underpin robust data governance. This semantic understanding enables more intelligent data recommendations and automated policy enforcement.
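The sketch below shows, under simplifying assumptions, how a knowledge graph can link a physical column to business concepts and let a governance policy follow those links. The triples, concept names, and `KnowledgeGraph` class are hypothetical; production fabrics typically rely on a dedicated graph database.

```python
from collections import defaultdict

# Hypothetical triples: (subject, predicate, object).
TRIPLES = [
    ("crm.contacts.email", "represents", "EmailAddress"),
    ("shop.orders.total_amount", "represents", "OrderValue"),
    ("EmailAddress", "is_a", "PersonalData"),
    ("OrderValue", "is_a", "FinancialData"),
    ("PersonalData", "governed_by", "MaskingPolicy"),
]

class KnowledgeGraph:
    def __init__(self, triples):
        self.out = defaultdict(list)  # subject -> [(predicate, object), ...]
        for s, p, o in triples:
            self.out[s].append((p, o))

    def objects(self, subject, predicate):
        """Objects reachable from `subject` over one `predicate` edge."""
        return [o for p, o in self.out[subject] if p == predicate]

    def concepts(self, column):
        """All concepts a column represents, following is_a links transitively."""
        found, stack = set(), self.objects(column, "represents")
        while stack:
            concept = stack.pop()
            if concept not in found:
                found.add(concept)
                stack.extend(self.objects(concept, "is_a"))
        return found

    def policies(self, column):
        """Policies that apply to a column through any of its concepts."""
        return {pol for c in self.concepts(column) for pol in self.objects(c, "governed_by")}

kg = KnowledgeGraph(TRIPLES)
print(kg.policies("crm.contacts.email"))        # {'MaskingPolicy'}
print(kg.policies("shop.orders.total_amount"))  # set()
```

No rule mentions `crm.contacts.email` directly; the masking policy reaches it only because the graph records that an email address is personal data.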

3. Streamlined Data Preparation & Delivery

Multi-latency pipelines support both batch and real-time data movement and transformation. Masking and desensitization protect privacy and ensure regulatory compliance while maintaining data utility for business purposes.
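Masking can be as simple as a per-column function applied before data is delivered. The sketch below is one possible approach, not a prescribed method: it pseudonymizes IDs with a keyed HMAC (so masked values remain joinable) and partially hides email addresses. The key, the rule mapping, and the helper names are assumptions for illustration.

```python
import hashlib
import hmac

# Assumption: in practice this key would come from a secrets manager, not source code.
SECRET_KEY = b"replace-with-a-managed-secret"

def pseudonymize(value: str) -> str:
    """Deterministic token: the same input always maps to the same token,
    so masked columns can still be joined, but the raw value is hidden."""
    return hmac.new(SECRET_KEY, value.encode("utf-8"), hashlib.sha256).hexdigest()[:16]

def mask_email(email: str) -> str:
    """Keep the domain for analytics, hide most of the local part."""
    local, _, domain = email.partition("@")
    return f"{local[:1]}***@{domain}" if domain else "***"

def desensitize(row: dict, rules: dict) -> dict:
    """Apply a masking function per column; columns without a rule pass through."""
    return {col: rules[col](val) if col in rules else val for col, val in row.items()}

rules = {"email": mask_email, "customer_id": pseudonymize}
row = {"customer_id": "C-1001", "email": "jane.doe@example.com", "country": "DE"}
print(desensitize(row, rules))
```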

4. Workflow Orchestration & DataOps Optimization

DataOps principles automate and orchestrate pipelines, incorporate CI/CD practices, and provide real-time monitoring for data quality and performance. This ensures reliable, repeatable processes that scale with organizational needs.
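At its core, orchestration means running tasks in dependency order and halting when a step fails. The sketch below uses Python's standard `graphlib.TopologicalSorter` (Python 3.9+); the task names and the `run_pipeline` helper are hypothetical, and a real deployment would typically delegate this to a dedicated orchestrator rather than hand-rolled code.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline: each task maps to the set of tasks it depends on.
PIPELINE = {
    "extract_crm": set(),
    "extract_orders": set(),
    "validate_orders": {"extract_orders"},
    "build_customer_mart": {"extract_crm", "validate_orders"},
    "publish_dashboard": {"build_customer_mart"},
}

def run_pipeline(graph, run_task):
    """Run tasks in dependency order; abort the whole run when a task fails
    so that no downstream task consumes bad data."""
    sorter = TopologicalSorter(graph)
    sorter.prepare()
    completed = []
    while sorter.is_active():
        for task in sorter.get_ready():
            if not run_task(task):
                raise RuntimeError(f"task {task!r} failed; run aborted")
            completed.append(task)
            sorter.done(task)
    return completed

order = run_pipeline(PIPELINE, lambda task: True)  # stand-in executor that always succeeds
print(order)
```

Because `get_ready()` can return several tasks at once, independent extracts such as `extract_crm` and `extract_orders` could run in parallel in a real orchestrator; this sketch simply runs them one after another.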

5. Continuous Observability & Improvement

Ongoing monitoring detects anomalies, enabling proactive optimization as data volumes and business needs evolve. This pillar ensures the data fabric remains performant and reliable as requirements change.
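One simple form of anomaly detection is a z-score check on load volumes. The sketch below flags a day whose row count sits far outside recent history; the three-standard-deviation threshold and the sample counts are assumptions, and production observability tools generally account for seasonality and trends as well.

```python
from statistics import mean, stdev

def is_volume_anomaly(history: list[int], today: int, threshold: float = 3.0) -> bool:
    """Flag today's row count if it lies more than `threshold` standard deviations
    from the mean of recent history (a simple z-score check)."""
    if len(history) < 2:
        return False  # not enough history to estimate the spread
    mu, sigma = mean(history), stdev(history)
    if sigma == 0:
        return today != mu  # perfectly stable history: any change is unusual
    return abs(today - mu) / sigma > threshold

daily_rows = [10_120, 9_980, 10_050, 10_210, 9_940, 10_080, 10_010]
print(is_volume_anomaly(daily_rows, 10_100))  # False: within the normal range
print(is_volume_anomaly(daily_rows, 2_300))   # True: likely a partial load
```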

How Do You Implement a Data Fabric Successfully?

Successful data fabric implementation requires careful planning and strategic execution across multiple phases.

1. Gather Requirements

Engage stakeholders from marketing, sales, and operations to identify specific data needs and key performance indicators. This foundational step ensures alignment between technical implementation and business objectives.

2. Assess Current Infrastructure

Catalog existing data sources, warehouses, lakes, and ETL/ELT processes to understand the current state. This assessment reveals integration points and potential challenges before implementation begins.

3. Establish Data Governance

Define clear data ownership, access controls, and security protocols that will govern the fabric. Strong governance prevents security gaps and ensures compliance throughout the implementation process.
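Access controls are often expressed as deny-by-default policies attached to each dataset. The sketch below assumes a simple role model with a named owner and owner-only sensitive columns; the `Policy` class, roles, and dataset names are hypothetical and stand in for whatever policy engine the fabric actually uses.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Policy:
    dataset: str
    owner: str                          # accountable data owner (a role)
    readers: frozenset[str]             # roles allowed to read the dataset
    restricted_columns: frozenset[str]  # columns only the owner role may see

POLICIES = {
    "crm.contacts": Policy(
        dataset="crm.contacts",
        owner="sales_ops",
        readers=frozenset({"sales_ops", "marketing", "analytics"}),
        restricted_columns=frozenset({"email", "phone_number"}),
    ),
}

def visible_columns(role: str, dataset: str, columns: list[str]) -> list[str]:
    """Return the columns `role` may read; deny by default if no policy exists."""
    policy = POLICIES.get(dataset)
    if policy is None or role not in policy.readers:
        raise PermissionError(f"{role!r} may not read {dataset!r}")
    if role == policy.owner:
        return list(columns)
    return [c for c in columns if c not in policy.restricted_columns]

cols = ["id", "email", "phone_number", "region"]
print(visible_columns("sales_ops", "crm.contacts", cols))  # all four columns
print(visible_columns("marketing", "crm.contacts", cols))  # ['id', 'region']
```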

4. Build a Cross-Functional Team

Include experts in data architecture, engineering, and business analysis to ensure comprehensive coverage of technical and business requirements. This diverse team enables better decision-making and problem-solving.

5. Start with a Pilot Project

Validate the approach with a limited-scope implementation to uncover challenges and refine processes before enterprise rollout. Pilot projects reduce risk and provide valuable learnings for full-scale deployment.

6. Provide Training & Self-Service Tools

Empower teams with the knowledge and tools needed to leverage the data fabric effectively. Proper training accelerates adoption and maximizes return on investment.

7. Practice Data Observability

Implement comprehensive monitoring to track performance, detect anomalies, and scale as requirements grow. Data observability ensures the fabric remains healthy and performant over time.
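Alongside volume checks, freshness is a common observability signal. The following sketch compares each dataset's last load time against an assumed freshness SLA; the SLA values and dataset names are hypothetical, and the fixed `now` timestamp only keeps the example reproducible.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical freshness SLAs per dataset.
FRESHNESS_SLA = {
    "shop.orders": timedelta(minutes=15),
    "crm.contacts": timedelta(hours=24),
}

def stale_datasets(last_loaded: dict[str, datetime], now: datetime) -> list[str]:
    """Return datasets whose most recent load is older than their SLA allows.
    Datasets that were never loaded count as stale."""
    stale = []
    for name, sla in FRESHNESS_SLA.items():
        loaded_at = last_loaded.get(name)
        if loaded_at is None or now - loaded_at > sla:
            stale.append(name)
    return stale

now = datetime(2024, 5, 1, 12, 0, tzinfo=timezone.utc)
last_loaded = {
    "shop.orders": now - timedelta(minutes=40),
    "crm.contacts": now - timedelta(hours=3),
}
print(stale_datasets(last_loaded, now))  # ['shop.orders']
```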

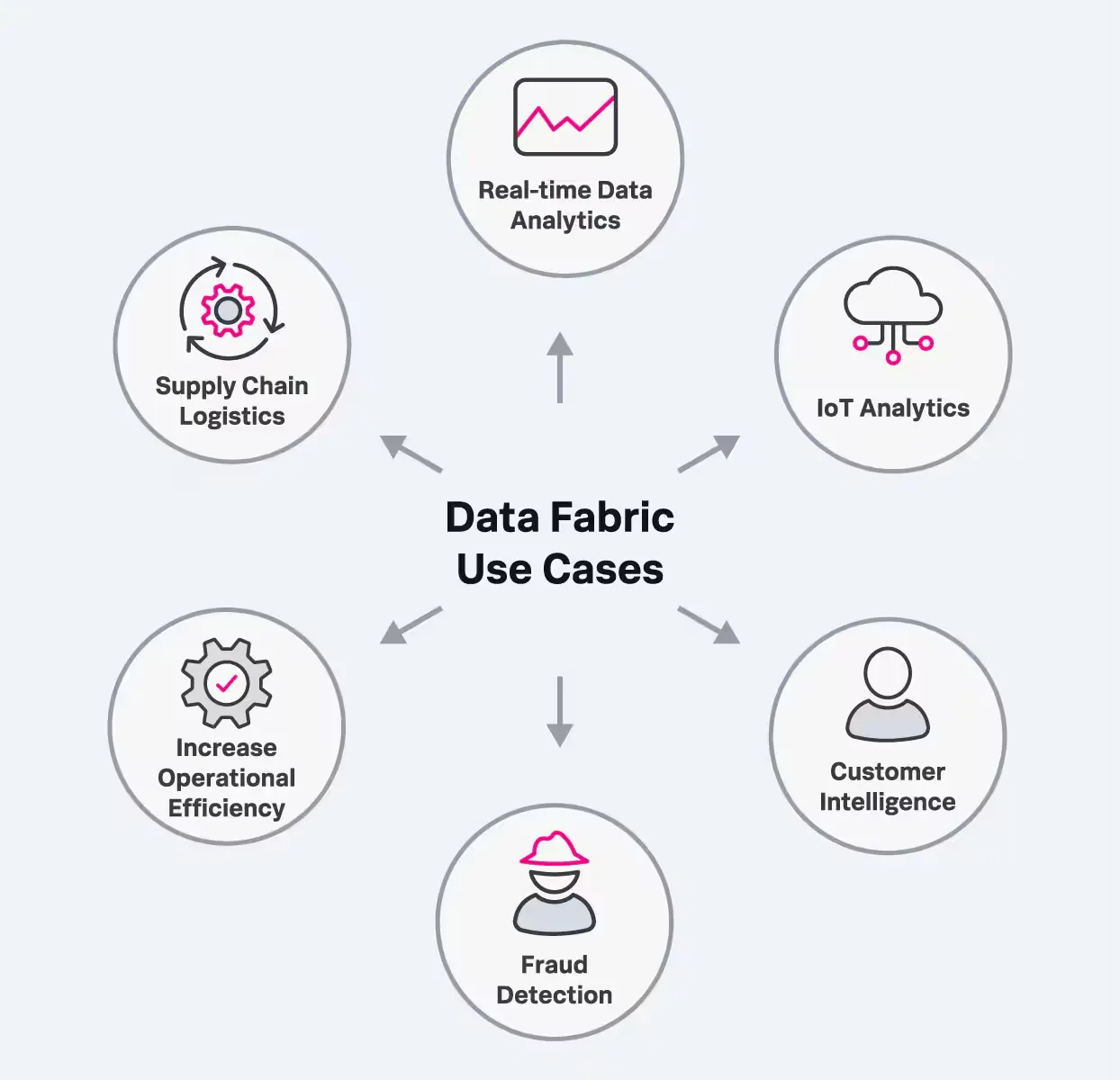

What Are Common Use Cases of Data Fabric?

Data fabric architecture supports diverse business scenarios across multiple industries, enabling organizations to unlock value from their distributed data assets.

Manufacturing Operations

Integrate production-line, inventory, and logistics data for real-time supply-chain optimization. Manufacturing companies use data fabric to connect IoT sensors, ERP systems, and supplier data for improved operational efficiency.

Financial Services

Combine transaction data, customer profiles, and threat intelligence to detect fraud in real time. Financial institutions leverage data fabric to meet regulatory requirements while improving customer experience through faster, more accurate decision-making.

Retail and E-commerce

Unify inventory systems, customer preferences, and sales data for a 360-degree customer view and personalized marketing. Retailers use this comprehensive data view to optimize pricing, inventory levels, and marketing campaigns.
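At the data level, a 360-degree view amounts to merging records from separate systems on a shared key. The sketch below assumes every system already uses the same `customer_id`, which real deployments usually achieve only after an identity-resolution step; the record layouts and system names are hypothetical.

```python
from collections import defaultdict

# Hypothetical extracts from three separate systems, keyed by customer_id.
crm = [{"customer_id": 1, "name": "Ana", "preferred_channel": "email"}]
orders = [
    {"customer_id": 1, "sku": "TSHIRT-M", "amount": 25.0},
    {"customer_id": 1, "sku": "JEANS-32", "amount": 60.0},
    {"customer_id": 2, "sku": "SOCKS", "amount": 8.0},
]
loyalty = [{"customer_id": 1, "tier": "gold"}]

def customer_360(crm, orders, loyalty):
    """Merge per-system records into a single profile per customer."""
    profiles = defaultdict(lambda: {"orders": [], "lifetime_value": 0.0})
    # Attribute-style sources: copy every field except the join key.
    for rec in crm + loyalty:
        profiles[rec["customer_id"]].update(
            {k: v for k, v in rec.items() if k != "customer_id"}
        )
    # Transactional source: aggregate purchases per customer.
    for order in orders:
        profile = profiles[order["customer_id"]]
        profile["orders"].append(order["sku"])
        profile["lifetime_value"] += order["amount"]
    return dict(profiles)

for cid, profile in customer_360(crm, orders, loyalty).items():
    print(cid, profile)
```

Customer 2 appears only in the order data, so its profile has purchases but no name or loyalty tier, which is the kind of gap a unified view makes visible.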

What Are the Leading Data Fabric Vendors?

Several established vendors provide comprehensive data fabric solutions, each with distinct strengths and capabilities.

1. SAP Data Intelligence Cloud

Connect, discover, enrich, and orchestrate data assets across diverse sources while reusing any processing engine. SAP's solution integrates well with existing SAP ecosystems and provides strong governance capabilities.

2. IBM Cloud Pak for Data

Automate data integration, metadata management, and AI governance across hybrid-cloud environments. IBM's platform offers strong containerization and Kubernetes support for enterprise deployments.

3. Informatica Intelligent Data Fabric

AI- and ML-driven automation for complex data integration, analytics, data migrations, and master data management at enterprise scale.

How Can Airbyte Streamline Data Integration in Your Data Fabric?

Seamless data integration is vital to any successful data fabric implementation. Airbyte simplifies this critical component with more than 600 pre-built connectors that efficiently move data to warehouses, lakes, and databases.

Key Features for Data Fabric Integration

- Open-source, no-code ELT platform that eliminates vendor lock-in while providing enterprise-grade capabilities. Organizations can deploy Airbyte across cloud, hybrid, or on-premises environments to match their data fabric requirements.

- Connector Development Kit enables rapid creation of custom connectors for specialized data sources. This flexibility ensures that unique business requirements don't become integration bottlenecks.

- Incremental syncs move only new or changed data, optimizing bandwidth and reducing processing overhead. This efficiency helps keep data across the fabric fresh without reprocessing entire datasets on every run.

- Native dbt integration supports in-pipeline transformations, enabling data quality and preparation within the integration layer. This reduces complexity and improves data consistency across the fabric.
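Incremental syncs are usually driven by a cursor, such as an `updated_at` timestamp, that records how far the previous run got. The sketch below illustrates the general cursor-based technique only; it is not Airbyte's implementation or API, and the function, field, and state names are hypothetical.

```python
from datetime import datetime

def incremental_sync(source_rows, state, destination, cursor_field="updated_at"):
    """Copy only rows whose cursor value is newer than the saved state,
    then advance the saved cursor to the newest value seen."""
    last_cursor = state.get("cursor")
    new_rows = [r for r in source_rows if last_cursor is None or r[cursor_field] > last_cursor]
    for row in new_rows:
        destination[row["id"]] = row  # upsert by primary key, so re-sent rows overwrite
    if new_rows:
        state["cursor"] = max(r[cursor_field] for r in new_rows)
    return len(new_rows)

source = [
    {"id": 1, "status": "paid", "updated_at": datetime(2024, 5, 1, 9, 0)},
    {"id": 2, "status": "new", "updated_at": datetime(2024, 5, 1, 10, 0)},
]
state, warehouse = {}, {}
print(incremental_sync(source, state, warehouse))  # 2: first run copies everything

source[1] = {"id": 2, "status": "shipped", "updated_at": datetime(2024, 5, 1, 11, 0)}
print(incremental_sync(source, state, warehouse))  # 1: only the changed row moves
```

The strict `>` comparison can skip a late-arriving row that shares the last saved cursor value; comparing with `>=` and relying on the primary-key upsert to absorb the re-read row avoids that at the cost of reprocessing a few records.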

Airbyte's open-source foundation aligns with data fabric principles of avoiding vendor lock-in while providing the reliability and scalability needed for enterprise data environments. Organizations implementing data fabric architectures can leverage Airbyte to connect diverse data sources without compromising on flexibility or governance.

Explore more capabilities in Airbyte's documentation.

Closing Thoughts

A data-fabric approach breaks down organizational silos, improves data quality, and empowers teams to leverage information assets for innovation and growth. Though data fabric implementation requires careful planning and continuous maintenance, the payoff is a unified, accessible, and secure data environment.

This integrated approach fuels a data-driven culture that enables faster decision-making, improved operational efficiency, and enhanced competitive advantage. Organizations that successfully implement data fabric architectures position themselves to capitalize on emerging technologies and evolving business requirements.

Frequently Asked Questions

What is the difference between data fabric and data mesh?

Data mesh decentralizes ownership by empowering individual teams to manage their data as products, whereas data fabric creates a centralized architectural layer that provides universal data access and integration capabilities across the organization.

Why use data fabric for a data warehouse instead of ADF + Azure SQL?

Data fabric handles unstructured and real-time streaming data and offers a more scalable, vendor-agnostic architecture for big-data analytics. While Azure Data Factory (ADF) and Azure SQL are optimized for traditional BI and structured data within the Microsoft ecosystem, data fabric provides broader integration capabilities across multiple cloud and on-premises environments.

How does a data fabric foster a data-driven culture?

By providing a unified, trusted view of all organizational data, a data fabric simplifies governance and enables everyone to make informed, data-backed decisions. This democratized access encourages collaboration and data-driven thinking across the enterprise while maintaining security and compliance standards.

What are the main challenges in data fabric implementation?

Common challenges include complex integration requirements across legacy systems, ensuring consistent data quality and governance across diverse sources, managing security and compliance across multiple environments, and coordinating cross-functional teams throughout the implementation process. Successful implementations address these challenges through careful planning, pilot projects, and ongoing monitoring.

How long does typical data fabric implementation take?

Implementation timelines vary significantly based on organizational size, existing infrastructure complexity, and scope of integration. Pilot projects typically take 3 to 6 months, while enterprise-wide implementations can take 12 to 24 months. Organizations that start with focused use cases and expand incrementally often achieve faster time-to-value and reduce implementation risks.
