What is Data Observability? Unlock Its Potential for Success

✨ AI Generated Summary

Data observability provides comprehensive, proactive monitoring of data quality, lineage, and pipeline health across its entire lifecycle, unlike traditional system-focused monitoring. Key pillars include freshness, distribution, volume, schema, and lineage, enabling early detection of anomalies and ensuring reliable data for AI/ML and business decisions. Implementing data observability involves phased rollout, tool integration, organizational change, and continuous improvement, with platforms like Airbyte enhancing integration and monitoring capabilities.

Businesses depend on data for insights that drive marketing campaigns, product development, and strategic decisions. However, having vast amounts of data isn't sufficient; you must ensure the data you use is accurate, complete, and trustworthy. When organizations train artificial intelligence systems or make critical business decisions on unreliable data, the consequences can damage both operations and customer trust.

This is where data observability becomes essential for modern data-driven organizations. Data observability represents a comprehensive approach to understanding your data's health throughout its entire lifecycle.

Think of it as a sophisticated monitoring system that provides transparent visibility into the inner workings of your data ecosystem, enabling you to identify and resolve issues before they affect crucial business decisions. Unlike traditional monitoring that focuses solely on system performance, data observability encompasses the complete data journey from source to consumption, ensuring reliability at every stage.

This article explores what data observability is, its foundational pillars, implementation strategies, and the transformative benefits it delivers to organizations seeking to build trust in their data assets.

How Does Data Observability Differ from Traditional Monitoring?

Traditional monitoring approaches focus primarily on infrastructure metrics and system availability, alerting teams when servers go down or performance degrades. While these capabilities remain important, they fail to address the fundamental question of whether the data flowing through these systems is reliable, accurate, and fit for business use.

Data observability extends monitoring capabilities to encompass the data itself, examining content quality, structural integrity, and processing reliability. Through data observability, you can detect missing values, data inconsistencies, schema changes, and pipeline anomalies that may otherwise go unnoticed until they create significant downstream issues.

This proactive approach enables organizations to maintain data trust and prevent costly errors in business decision-making. The distinction becomes particularly important in modern cloud-native architectures where data flows across multiple systems, transformations, and processing stages.

Traditional monitoring might confirm that all systems are operational while critical data quality issues remain hidden, leading to incorrect business insights and strategic mistakes.

What Are the Key Components of Data Observability?

Data observability encompasses several critical dimensions that work together to provide comprehensive visibility into data ecosystem health.

Data Quality Assessment

Data Quality Assessment represents the foundation of observability, continuously evaluating accuracy, completeness, and consistency across all data assets. This includes identifying missing values, detecting outliers, and monitoring schema changes that could break downstream applications.
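As a rough illustration of such checks, the sketch below computes a missing-value rate per required field and lists duplicated keys for a batch of records. The function name `quality_report`, the field names, and the dict-based record shape are assumptions made for this example, not part of any particular tool.

```python
from collections import Counter


def quality_report(records, required_fields, key_field):
    """Summarize completeness and key uniqueness for a batch of dict records."""
    total = len(records)
    missing = {}
    for field in required_fields:
        # Treat absent keys, None, and empty strings as missing values.
        count = sum(1 for r in records if r.get(field) in (None, ""))
        missing[field] = count / total if total else 0.0

    # A key that appears more than once signals a consistency problem.
    key_counts = Counter(r.get(key_field) for r in records)
    duplicates = sorted(k for k, n in key_counts.items() if n > 1 and k is not None)

    return {"row_count": total, "missing_rate": missing, "duplicate_keys": duplicates}


# Hypothetical sample batch.
batch = [
    {"id": 1, "email": "a@example.com"},
    {"id": 2, "email": None},
    {"id": 2, "email": "c@example.com"},
    {"id": 3},
]
report = quality_report(batch, required_fields=["id", "email"], key_field="id")
```

In practice a report like this would be computed on every load and compared against thresholds agreed with the data's consumers.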

Pipeline Performance Monitoring

Pipeline Performance Monitoring tracks the execution health of data processing workflows, measuring pipeline execution times, identifying bottlenecks, and detecting errors that could cause data latency issues.
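One lightweight way to collect execution times and errors is to wrap each pipeline step in a timing context manager. The `StepTimer` class below is a hypothetical helper written for this example; the injectable `clock` argument is an assumption that makes it easy to test.

```python
import time
from contextlib import contextmanager


class StepTimer:
    """Record the duration and failure status of named pipeline steps."""

    def __init__(self, clock=time.perf_counter):
        self._clock = clock
        self.durations = {}  # step name -> seconds elapsed
        self.failures = {}   # step name -> repr of the exception raised

    @contextmanager
    def step(self, name):
        start = self._clock()
        try:
            yield
        except Exception as exc:
            # Remember the failure, then let it propagate to the caller.
            self.failures[name] = repr(exc)
            raise
        finally:
            self.durations[name] = self._clock() - start

    def slowest(self):
        """Return the name of the slowest recorded step, or None if empty."""
        if not self.durations:
            return None
        return max(self.durations, key=self.durations.get)
```

A pipeline would wrap each stage as `with timer.step("extract"): ...` and inspect `timer.slowest()` afterwards to locate the bottleneck.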

Data Lineage Tracking

Data Lineage Tracking provides complete visibility into data origins, transformations, and movement patterns throughout the data ecosystem.

Schema Evolution Management

Schema Evolution Management continuously monitors structural changes to data sources and processing systems, alerting stakeholders before modifications break pipelines or applications.
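A minimal sketch of this idea compares the last known schema with the one currently observed and flags changes that are likely to break consumers. Schemas are modeled here as plain column-to-type dictionaries, and treating removals and type changes as breaking is an assumption; real policies vary.

```python
def diff_schema(expected, observed):
    """Compare two {column: type_name} mappings and report the differences."""
    added = sorted(set(observed) - set(expected))
    removed = sorted(set(expected) - set(observed))
    type_changed = {
        col: (expected[col], observed[col])
        for col in sorted(set(expected) & set(observed))
        if expected[col] != observed[col]
    }
    return {"added": added, "removed": removed, "type_changed": type_changed}


def is_breaking(diff):
    """Assume removed columns and changed types break downstream consumers."""
    return bool(diff["removed"] or diff["type_changed"])


# Hypothetical schemas captured on two consecutive runs.
previous = {"id": "integer", "email": "string", "signup_date": "date"}
current = {"id": "string", "email": "string", "plan": "string"}
changes = diff_schema(previous, current)
```

New columns are reported but not treated as breaking in this sketch, since most consumers ignore columns they do not select.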

Anomaly Detection and Alerting

Anomaly Detection and Alerting leverages statistical analysis and machine learning techniques to identify unusual patterns that may indicate data quality issues, system problems, or security threats.
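The simplest statistical version of this is a z-score test: compare a new measurement with the mean and standard deviation of recent history. The sketch below assumes a roughly stable metric and a threshold of three standard deviations; both are illustrative choices, and production systems often use seasonal or learned baselines instead.

```python
from statistics import mean, stdev


def is_anomalous(history, value, threshold=3.0):
    """Flag `value` if it lies more than `threshold` standard deviations
    from the mean of `history`."""
    if len(history) < 2:
        return False  # Not enough data to estimate spread.
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        # History is constant, so any different value is unusual.
        return value != mu
    return abs(value - mu) / sigma > threshold


# Hypothetical daily row counts for one table.
daily_rows = [100, 102, 98, 101, 99]
```

With this history, a count of 150 is flagged while 101 is not.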

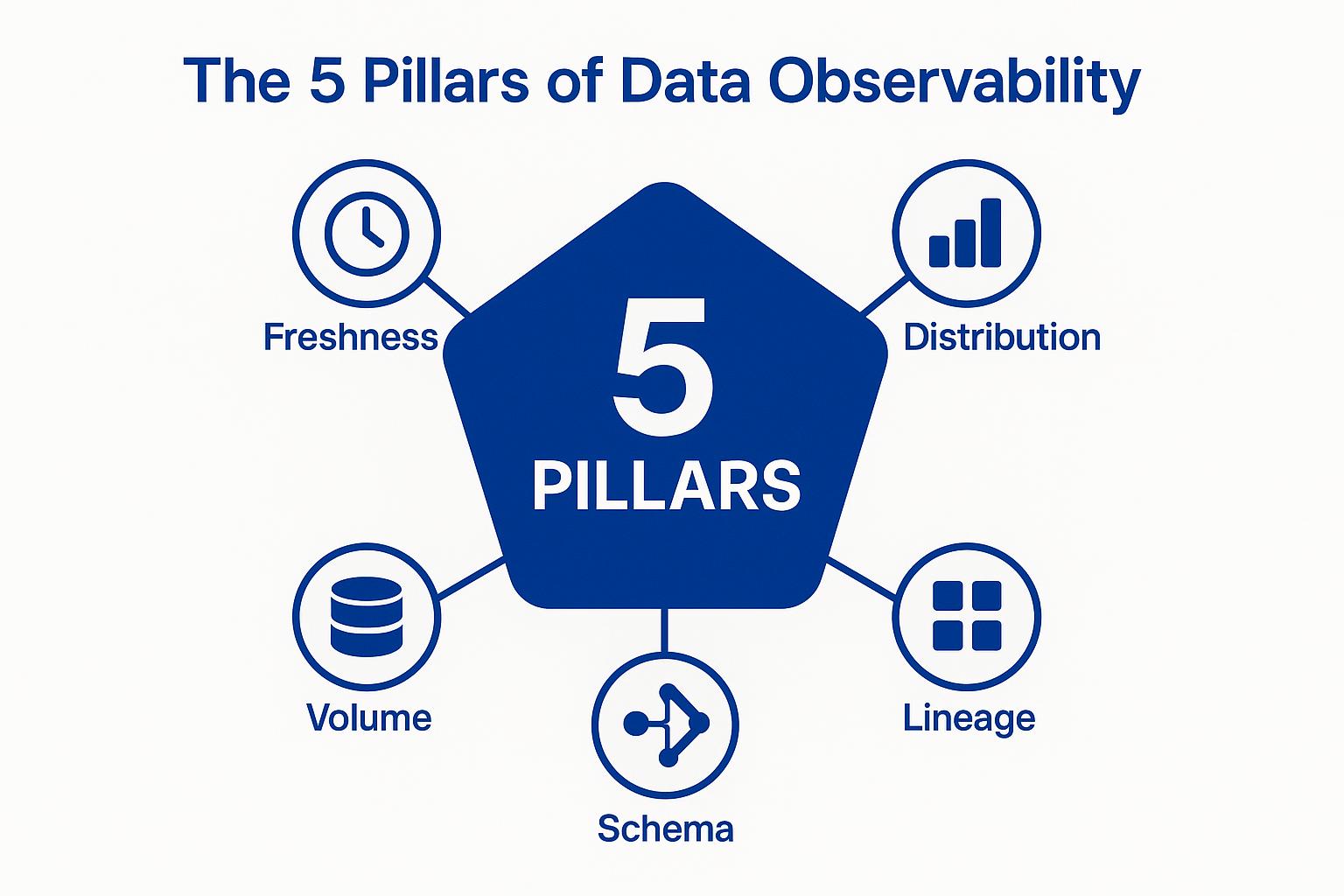

What Are the Five Pillars of Data Observability?

1. Freshness

Freshness measures how current and up-to-date your data is, ensuring that business decisions rely on the most recent available information.
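A freshness check can be as small as comparing a table's last load timestamp with an agreed maximum lag. In the sketch below, the function names and the idea of passing the timestamp in directly are assumptions; in practice the timestamp would come from warehouse metadata or a pipeline log.

```python
from datetime import datetime, timedelta, timezone


def freshness_lag(last_loaded_at, now=None):
    """Return how long ago the dataset was last loaded."""
    now = now or datetime.now(timezone.utc)
    return now - last_loaded_at


def is_stale(last_loaded_at, max_lag, now=None):
    """True when the data is older than the allowed lag."""
    return freshness_lag(last_loaded_at, now) > max_lag


# Hypothetical example: data loaded at 09:00 UTC, checked at 12:00 UTC.
loaded = datetime(2024, 1, 1, 9, 0, tzinfo=timezone.utc)
checked = datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc)
stale = is_stale(loaded, max_lag=timedelta(hours=2), now=checked)
```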

2. Distribution

Distribution monitoring tracks whether data values stay within expected ranges to detect anomalies and support data quality in business operations.
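One way to express "within expected ranges" is the share of non-null values that fall outside agreed bounds. The helper names, the bounds, and the 1% tolerance below are hypothetical and would be set per column.

```python
def out_of_range_rate(values, low, high):
    """Fraction of non-null values outside the inclusive range [low, high]."""
    checked = [v for v in values if v is not None]
    if not checked:
        return 0.0
    bad = sum(1 for v in checked if not (low <= v <= high))
    return bad / len(checked)


def distribution_ok(values, low, high, tolerance=0.01):
    """Accept the batch if at most `tolerance` of its values are out of range."""
    return out_of_range_rate(values, low, high) <= tolerance


# Hypothetical discount percentages, expected to lie between 0 and 100.
discounts = [10, 20, None, 150, -5]
```

Here two of the four non-null values are out of range, so the batch fails the check.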

3. Volume

Volume monitoring tracks the quantity of data ingested, processed, and delivered throughout the data pipeline.
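A simple volume check compares the latest row count with a baseline taken from recent runs. The sketch below uses the median of recent counts as the baseline and a 50% tolerance; both are assumptions chosen for illustration.

```python
from statistics import median


def volume_deviation(current_rows, recent_row_counts):
    """Relative change of the current row count against the recent median."""
    baseline = median(recent_row_counts)
    if baseline == 0:
        return 0.0 if current_rows == 0 else float("inf")
    return (current_rows - baseline) / baseline


def volume_alert(current_rows, recent_row_counts, tolerance=0.5):
    """True when the count moved more than `tolerance` in either direction."""
    return abs(volume_deviation(current_rows, recent_row_counts)) > tolerance


# Hypothetical row counts from the last three syncs.
recent = [1000, 1100, 900]
```

A sync that delivers 400 rows is 60% below the baseline and triggers the alert, while 950 rows does not.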

4. Schema

Schema monitoring validates data structure and organization, ensuring consistency across systems and processing stages.
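At the record level, schema validation can be sketched as checking each row against an expected field-to-type mapping. The schema below and the error message format are hypothetical; dedicated validation libraries offer far richer rules.

```python
def validate_record(record, schema):
    """Return a list of human-readable schema violations for one record."""
    errors = []
    for field, expected_type in schema.items():
        if field not in record:
            errors.append(f"missing field: {field}")
        elif not isinstance(record[field], expected_type):
            actual = type(record[field]).__name__
            errors.append(f"{field}: expected {expected_type.__name__}, got {actual}")
    for field in record:
        if field not in schema:
            errors.append(f"unexpected field: {field}")
    return errors


# Hypothetical expected structure for a user record.
user_schema = {"id": int, "email": str}
problems = validate_record({"id": "7", "plan": "pro"}, user_schema)
```

An empty list means the record conforms; anything else can be counted per batch and surfaced as a schema health metric.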

5. Lineage

Lineage tracking provides complete visibility into data flow from source systems through all transformation and processing stages to final consumption points.
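Lineage is naturally modeled as a directed graph, which makes impact analysis a graph traversal. The sketch below assumes lineage is available as a list of `(upstream, downstream)` pairs; the dataset names are invented for the example.

```python
from collections import deque


def downstream(edges, start):
    """Return every dataset reachable from `start`, in sorted order."""
    graph = {}
    for src, dst in edges:
        graph.setdefault(src, []).append(dst)

    seen = set()
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for nxt in graph.get(node, []):
            if nxt not in seen:  # Guards against revisiting nodes and cycles.
                seen.add(nxt)
                queue.append(nxt)
    return sorted(seen)


# Hypothetical lineage edges.
lineage = [
    ("crm.users", "staging.users"),
    ("staging.users", "mart.customers"),
    ("mart.customers", "dashboard.churn"),
    ("billing.invoices", "mart.revenue"),
]
impacted = downstream(lineage, "crm.users")
```

If `crm.users` breaks, the result lists the staging table, the mart, and the dashboard that depend on it, while the unrelated billing branch is left out.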

How Does Data Observability Enable AI and Machine Learning Success?

AI and ML models depend fundamentally on high-quality, consistent, and reliable data. Data observability supports several critical capabilities for artificial intelligence and machine learning initiatives.

Training Data Quality Assurance validates that training datasets are complete, unbiased, and representative. Production Data Monitoring ensures that data used for inference maintains expected quality and structure.

Model Drift Detection identifies performance degradation as data patterns evolve. Bias Monitoring and Fairness Validation continuously assesses model outputs for unintended bias.
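Model drift is often approximated by watching for shifts in the input data. One widely used statistic is the Population Stability Index (PSI), which compares the binned distribution of a feature at training time with its distribution in production. The sketch below is a simplified version with equal-width bins and a small floor to avoid taking the logarithm of zero; the bin count and floor value are assumptions.

```python
import math


def psi(expected, actual, bins=10, floor=1e-6):
    """Population Stability Index between a baseline and a new sample."""
    if not expected or not actual:
        raise ValueError("both samples must be non-empty")
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # Fall back to 1.0 for constant baselines.

    def proportions(values):
        counts = [0] * bins
        for v in values:
            # Clamp values outside the baseline range into the edge bins.
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        return [max(c / len(values), floor) for c in counts]

    p_expected = proportions(expected)
    p_actual = proportions(actual)
    return sum((a - e) * math.log(a / e) for e, a in zip(p_expected, p_actual))


# Hypothetical feature values: training baseline versus shifted production data.
training = list(range(100))
production = [v + 50 for v in training]
```

Identical samples give a PSI of zero. A commonly cited rule of thumb treats values above roughly 0.25 as a significant shift, though the right threshold depends on the feature and the model.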

What Are the Benefits of Implementing Data Observability?

Improved Data Quality and Trust

Organizations gain confidence in their data assets through continuous monitoring and validation of data integrity across all systems and processes.

Faster Issue Identification and Resolution

Proactive detection capabilities enable teams to identify and address data problems before they impact business operations or decision-making processes.

Increased Confidence in Business Decisions

Leaders can make strategic choices based on verified, reliable data rather than hoping their information sources are accurate and complete.

Streamlined Data Governance and Compliance

Data observability supports data governance initiatives and regulatory requirements through comprehensive lineage tracking and validation capabilities.

Enhanced Operational Efficiency

Teams spend less time troubleshooting data issues and more time delivering business value through improved data reliability and automated monitoring processes.

What Are the Essential Implementation Strategies for Data Observability?

1. Phased Implementation Approach

Start with high-impact pipelines and expand gradually across the organization. This strategy allows teams to demonstrate value quickly while building expertise and refining processes.

2. Tool Selection and Integration Strategy

Balance integrated platforms versus best-of-breed tools based on organizational needs, existing infrastructure, and long-term strategic goals.

3. Organizational Change Management

Establish clear ownership and incentives for data quality across teams and departments. Create accountability structures that encourage proactive data stewardship.

4. Technical Architecture Planning

Design scalable, cloud-friendly observability infrastructure that can grow with organizational data needs and integrate seamlessly with existing systems.

5. Success Measurement and Continuous Improvement

Track both technical metrics and business outcomes to demonstrate value and identify opportunities for enhancement and optimization.

What Challenges Must Organizations Overcome?

Key technical challenges include scalability planning, alert-fatigue mitigation, and cross-system correlation. Organizations must also address cultural resistance to change and ensure adequate budget allocation for tools and personnel.

Many companies struggle to balance comprehensive monitoring against its impact on system performance. The complexity of modern data architectures creates additional coordination challenges across multiple teams and technology stacks.
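Alert fatigue in particular is often reduced by suppressing repeat notifications for the same issue within a cooldown window. The `AlertThrottle` class below is a hypothetical sketch of that idea; timestamps are passed in as plain seconds to keep the example deterministic.

```python
class AlertThrottle:
    """Suppress repeat alerts for the same key within a cooldown window."""

    def __init__(self, cooldown_seconds):
        self.cooldown = cooldown_seconds
        self._last_sent = {}  # alert key -> time the last alert was sent

    def should_send(self, alert_key, now):
        last = self._last_sent.get(alert_key)
        if last is not None and now - last < self.cooldown:
            return False  # Still inside the cooldown; stay quiet.
        self._last_sent[alert_key] = now
        return True


# Hypothetical usage: at most one alert per check every five minutes.
throttle = AlertThrottle(cooldown_seconds=300)
```

Keying alerts by check and dataset (for example `"orders.freshness"`) keeps one noisy table from hiding problems in another.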

How Can Airbyte Enhance Your Data Observability Strategy?

Airbyte accelerates observability initiatives by offering comprehensive data integration capabilities that support monitoring and quality assurance across your entire data ecosystem.

Comprehensive Connector Ecosystem

Airbyte provides over 550 sources and destinations, enabling organizations to consolidate data from diverse systems into unified observability platforms for comprehensive monitoring and analysis.

Change Data Capture Capabilities

Real-time visibility into data modifications through change data capture functionality allows teams to monitor data evolution and detect issues as they occur rather than after downstream impacts.

Connector Development Kit

Build custom integrations quickly when standard connectors don't meet specific observability requirements or when connecting to proprietary systems and applications.

Monitoring and Alerting Integration

Airbyte does not provide native connectors for Datadog, Prometheus, or other monitoring platforms, but its flexible integration options and open-source nature enable organizations to build custom solutions that integrate pipeline health metrics with their existing observability infrastructure.
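As one illustration of such a custom solution, pipeline health figures can be rendered in the Prometheus text exposition format and served or pushed by whatever mechanism an organization already uses. The metric name and label below are hypothetical, and this sketch does not rely on any Airbyte or Prometheus client API.

```python
def render_metric(name, value, labels=None):
    """Format one sample as a Prometheus text-format line."""

    def escape(label_value):
        # Label values must escape backslashes, double quotes, and newlines.
        return (
            str(label_value)
            .replace("\\", "\\\\")
            .replace('"', '\\"')
            .replace("\n", "\\n")
        )

    if labels:
        body = ",".join(f'{k}="{escape(v)}"' for k, v in sorted(labels.items()))
        return f"{name}{{{body}}} {value}"
    return f"{name} {value}"


# Hypothetical metric describing the last sync of one connection.
line = render_metric(
    "pipeline_rows_synced", 1200, {"connection": "crm_to_warehouse"}
)
```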

Orchestration Platform Compatibility

Integration with Airflow, Prefect, and Dagster enables comprehensive workflow monitoring and coordination across complex data processing environments.

PyAirbyte Library

Programmatic access for custom validations and monitoring logic allows data teams to implement specialized quality checks and observability requirements tailored to specific business needs.

Conclusion

Data observability represents a fundamental shift from reactive data management to proactive data reliability assurance. By continuously monitoring data health across freshness, distribution, volume, schema, and lineage, organizations can detect issues early and maintain high data quality standards. Strategic planning, the right tooling, and cultural commitment are essential to success. Solutions like Airbyte simplify integration, improve lineage visibility, and enable scalable observability across diverse data ecosystems, empowering organizations to act confidently on their most valuable asset.

Frequently Asked Questions

What is the difference between data monitoring and data observability?

Monitoring tracks predefined metrics; observability provides holistic insight into data health, lineage, and quality.

How long does it take to implement data observability?

Focused implementations deliver value in weeks; enterprise-wide rollouts often take months depending on organizational complexity and scope.

What skills are required?

Data engineering, statistical analysis, system monitoring, and domain expertise.

How does data observability support regulatory compliance?

Through detailed lineage, automated policy enforcement, and continuous auditing.

What are typical costs?

Tool licensing, infrastructure, and personnel; most organizations realize ROI within months of implementation.
