Data Orchestration vs ETL: Key Differences

✨ AI Generated Summary

Data orchestration automates and manages complex, multi-system workflows with real-time processing and AI-driven optimization, while ETL focuses on extracting, transforming, and loading data into target systems. Key benefits of orchestration include improved automation, governance, and scalability, whereas ETL excels in structured data transformation and compliance-heavy scenarios.

- Data orchestration supports dynamic workflows, error handling, and multi-cloud coordination.

- ETL provides robust data quality validation, lineage, and is suited for predictable, regulated data pipelines.

- Open-source tools like Airbyte (600+ connectors) enable cost-effective integration combining both approaches.

- Choosing between them depends on workflow complexity, operational scale, and specific business needs.

Data is one of your organization's most important assets, which makes data management a critical task. Data orchestration and ETL-based data integration are both central to it. The two terms are often used interchangeably, but they describe different concepts, and it helps to understand each one clearly.

This guide dives into the data orchestration vs ETL comparison, providing you with a complete and detailed understanding of both methods. The modern data landscape has evolved significantly, with open-source solutions revolutionizing how organizations approach data integration, while new challenges around performance, scalability, and vendor lock-in continue to shape technology decisions. Let's explore these critical considerations for your data architecture strategy.

What Is Data Orchestration and How Does It Transform Data Management?

Data orchestration is the process of streamlining and optimizing data-management tasks such as data integration, transformation, governance, and quality assurance. By systematically managing data flows, you can make datasets more accessible throughout the organization, empowering your teams to develop effective strategies. Dedicated data orchestration tools help here by removing data silos, which in turn supports better data-driven decisions.

Modern data orchestration has evolved far beyond simple workflow scheduling to encompass intelligent automation, real-time processing capabilities, and AI-driven optimization. Organizations are increasingly adopting orchestration platforms that can automatically detect schema changes, optimize resource allocation dynamically, and provide sophisticated error handling and recovery mechanisms that reduce manual intervention.

The emergence of event-driven architectures has transformed how orchestration platforms operate, enabling immediate responses to data changes and business events rather than relying solely on scheduled batch processing. This shift supports real-time analytics and decision-making processes that are essential for competitive advantage in fast-moving markets.

How Data Orchestration Benefits Your Organization

Data orchestration provides several key advantages that can transform your data management approach. Less manual intervention reduces the possibility of human error, enhancing data quality and reliability through automated validation and monitoring systems.

Streamlining repetitive tasks frees resources for more strategic work while intelligent automation optimizes resource utilization across complex workflows. Centralized control allows you to handle growing, multi-source data securely and consistently with dynamic scaling capabilities that adapt to workload demands.

You can enforce governance and regulatory requirements across all organizational datasets through comprehensive audit trails and automated policy enforcement. Modern orchestration platforms support streaming data processing and immediate response to business events, enabling faster decision-making.

What Challenges Should You Consider With Data Orchestration?

Integrating and operating orchestration tools requires significant technical expertise in data pipeline management and understanding of distributed systems architecture. Diverse source structures, incompatible schemas, and conflicting models can be challenging to handle, particularly when coordinating across multiple cloud environments.

Comprehensive orchestration tooling can be expensive for startups or small businesses, especially when considering cloud infrastructure costs for high-volume processing. Complex interdependencies in orchestrated workflows can create unexpected resource contention and scheduling conflicts that are difficult to diagnose and resolve.

Where Does Data Orchestration Excel in Practice?

Data orchestration shines in several critical business scenarios. In machine learning operations, tools such as Kubeflow automate data preprocessing and cleaning in ML workflows while maintaining data lineage and model reproducibility across development and production environments.

Creating personalized marketing campaigns by integrating customer data from multiple touchpoints in real-time enables immediate response to customer behavior and preferences. Implementing comprehensive data quality monitoring across enterprise data ecosystems automatically detects anomalies and triggers corrective actions before issues impact business operations.

Orchestration's governance capabilities show in complex regulatory compliance workflows, which require coordinated data processing across multiple systems along with detailed audit trails and adherence to data sovereignty requirements.

Graniterock, a long-standing supplier to California's construction industry, adopted Airbyte—with its 600+ pre-built connectors—and integrated it with Prefect for scheduling. This combination standardized data processes, increased visibility, and cut internal development efforts while reducing tool costs, as detailed in the Graniterock success story.

What Is ETL and How Does It Fit in Modern Data Architecture?

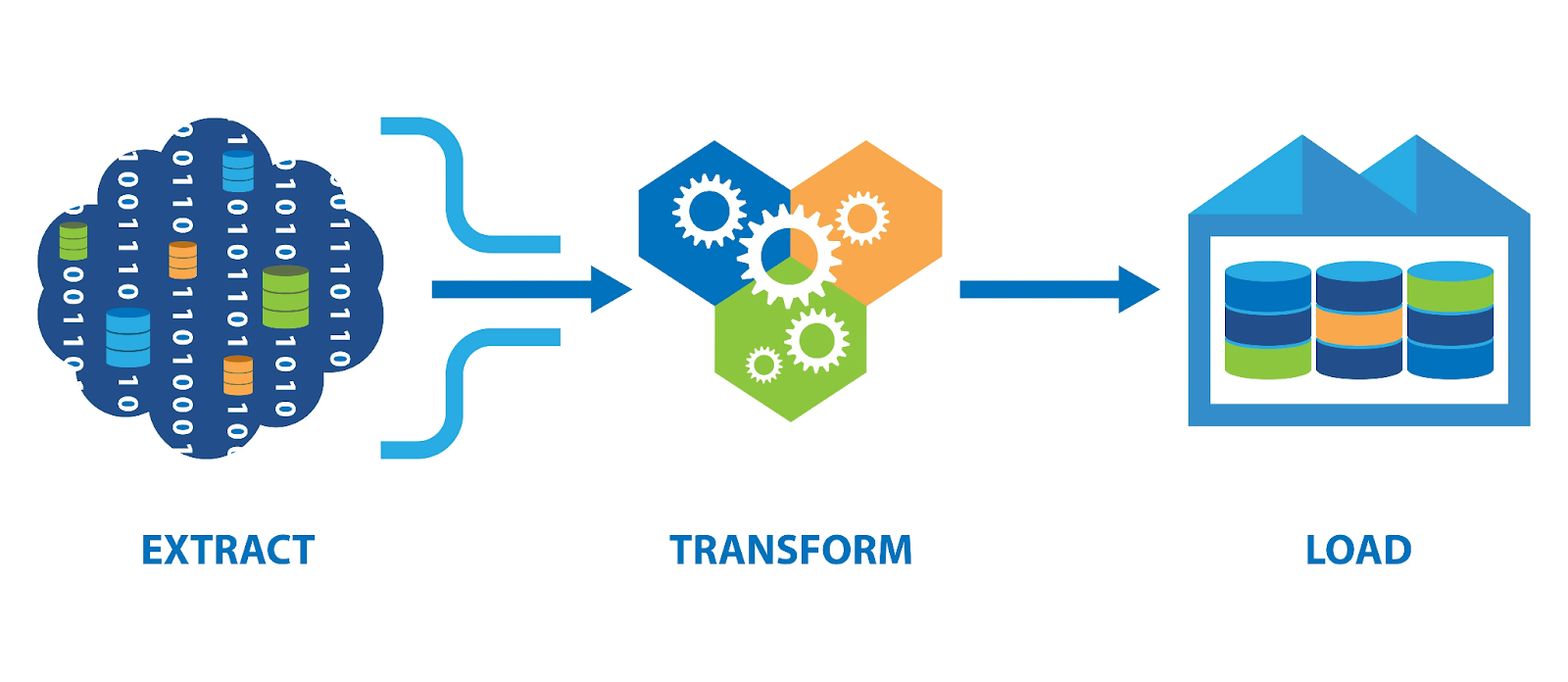

ETL stands for Extract, Transform, and Load—a long-established data-integration approach for moving large volumes of data from source systems to a destination. The traditional ETL paradigm has evolved significantly to accommodate cloud-native architectures, real-time processing requirements, and the growing complexity of modern data ecosystems.

Extract pulls data from sources such as files, CRM databases, APIs, or streaming platforms with support for both batch and real-time data capture methods. Transform cleans, enriches, and standardizes the raw data to match destination schemas using both traditional processing engines and modern cloud-native transformation capabilities.

Load moves the transformed data into the target system such as a data warehouse, data lake, or real-time analytics platform with optimized loading strategies for different data volumes and latency requirements.
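The three stages can be sketched in a few lines of Python. This is a minimal illustration rather than a production pipeline: the inline CSV sample, the `customers` table, and the rule of dropping rows without an email are all assumptions made for the example, and an in-memory SQLite database stands in for the destination.

```python
import csv
import io
import sqlite3

# Hypothetical raw export; in practice this would come from a file, API, or database.
RAW_CSV = """customer_id,email,signup_date
1, Alice@Example.com ,2024-01-05
2,bob@example.com,2024-02-11
3,,2024-03-02
"""


def extract(raw: str) -> list[dict]:
    """Extract: read source records into dictionaries."""
    return list(csv.DictReader(io.StringIO(raw)))


def transform(rows: list[dict]) -> list[tuple]:
    """Transform: drop rows without an email and normalize the rest."""
    cleaned = []
    for row in rows:
        email = row["email"].strip().lower()
        if not email:
            continue  # skip records that fail this basic quality check
        cleaned.append((int(row["customer_id"]), email, row["signup_date"]))
    return cleaned


def load(rows: list[tuple], conn: sqlite3.Connection) -> None:
    """Load: write the transformed rows into the destination table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS customers "
        "(customer_id INTEGER PRIMARY KEY, email TEXT, signup_date TEXT)"
    )
    conn.executemany("INSERT INTO customers VALUES (?, ?, ?)", rows)
    conn.commit()


conn = sqlite3.connect(":memory:")  # stand-in for a real warehouse
load(transform(extract(RAW_CSV)), conn)
loaded = conn.execute("SELECT * FROM customers ORDER BY customer_id").fetchall()
```

Keeping each stage in its own function makes it easier to test the transformation logic in isolation and to swap the source or destination later.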

How Has ETL Architecture Evolved?

The emergence of ELT (Extract, Load, Transform) architectures has challenged traditional ETL approaches, with cloud-native data warehouses providing computational capabilities that can handle transformation workloads more efficiently than external processing engines. This shift has led to the development of hybrid approaches that combine the benefits of both methodologies based on specific use-case requirements.
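To make the ELT ordering concrete, the sketch below loads raw records first and only then transforms them with SQL inside the destination. It assumes an in-memory SQLite database as a stand-in for a cloud warehouse, and the `raw_orders` and `paid_orders` tables are hypothetical names chosen for illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")  # stand-in for a cloud data warehouse

# Load: raw records land in the destination untouched.
conn.execute(
    "CREATE TABLE raw_orders (order_id INTEGER, amount_cents INTEGER, status TEXT)"
)
conn.executemany(
    "INSERT INTO raw_orders VALUES (?, ?, ?)",
    [(1, 1250, "PAID"), (2, 800, "cancelled"), (3, 4300, "paid")],
)

# Transform: runs afterwards, inside the destination, expressed as SQL.
conn.execute(
    """
    CREATE TABLE paid_orders AS
    SELECT order_id, amount_cents / 100.0 AS amount
    FROM raw_orders
    WHERE lower(status) = 'paid'
    """
)

paid = conn.execute(
    "SELECT order_id, amount FROM paid_orders ORDER BY order_id"
).fetchall()
```

Because the raw table is preserved, the transformation can be rewritten and rerun later without extracting the data again, which is one reason this pattern suits warehouses with ample compute.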

Modern ETL platforms now incorporate streaming capabilities, enabling real-time data processing alongside traditional batch operations. These platforms support schema evolution and dynamic transformation logic, addressing historical limitations around data structure changes.

What Are the Key Benefits of ETL?

ETL combines multiple sources into a single pipeline, unifying data in near real-time with sophisticated deduplication and conflict resolution capabilities. Transformation in a staging area saves time and ensures analysis-ready datasets with comprehensive data quality validation and enhancement processes.

ETL eliminates duplicate or redundant data for a comprehensive, accurate view while maintaining data lineage and governance controls. The extensive tooling and established best practices provide proven approaches for complex data integration scenarios.
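Deduplication with conflict resolution can take many forms. One common rule, shown here as a minimal sketch, is "latest version wins": when several records share a key, keep the one with the most recent timestamp. The field names `id` and `updated_at` and the sample records are assumptions for the example.

```python
def deduplicate(records: list[dict], key: str = "id", version: str = "updated_at") -> list[dict]:
    """Keep one record per key, preferring the highest version value."""
    latest: dict = {}
    for rec in records:
        current = latest.get(rec[key])
        # Replace the stored record only if this one is strictly newer.
        if current is None or rec[version] > current[version]:
            latest[rec[key]] = rec
    return list(latest.values())


# Hypothetical records arriving from two source systems.
records = [
    {"id": 1, "email": "old@example.com", "updated_at": "2024-01-01"},
    {"id": 2, "email": "sam@example.com", "updated_at": "2024-01-03"},
    {"id": 1, "email": "new@example.com", "updated_at": "2024-02-01"},
]
unique = deduplicate(records)
```

Comparing ISO 8601 date strings works here because they sort chronologically. Other timestamp formats would need parsing first.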

What Limitations Should You Understand About ETL?

Processing large datasets or complex transformations can introduce delays, particularly when handling schema evolution or data quality issues. Incorrect transformations can severely degrade data quality and lead to poor decisions, with errors often discovered only after propagation to downstream systems.

Traditional ETL processes struggle with evolving data structures and require manual updates when source systems change their data formats. Processing large volumes through transformation stages can require significant computational resources and create performance bottlenecks.
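One way to reduce surprises from changing source formats is to compare the schema a pipeline expects with the schema it actually observes before running transformations. The sketch below assumes schemas are represented as simple column-to-type dictionaries. The `diff_schema` helper and the column names are hypothetical.

```python
def diff_schema(expected: dict[str, str], observed: dict[str, str]) -> dict[str, list[str]]:
    """Report columns that were added, removed, or changed type."""
    shared = expected.keys() & observed.keys()
    return {
        "added": sorted(observed.keys() - expected.keys()),
        "removed": sorted(expected.keys() - observed.keys()),
        "type_changed": sorted(c for c in shared if expected[c] != observed[c]),
    }


expected = {"id": "integer", "email": "string", "created_at": "timestamp"}
observed = {"id": "string", "email": "string", "plan": "string"}

drift = diff_schema(expected, observed)
has_drift = any(drift.values())
```

A pipeline could use a report like this to pause, alert, or apply a migration instead of failing midway through a transformation.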

Where Does ETL Provide the Most Value?

ETL excels in specific industry applications that require structured data processing. In retail, real-time pipelines update orders, inventory, and shipping data to reveal demand patterns while maintaining data consistency across multiple sales channels and integration points.

Finance organizations use ETL for masking and encrypting sensitive data before analytics, supporting fraud detection while ensuring regulatory compliance with comprehensive audit trails and data governance controls. Manufacturing companies collect IoT and sensor data to enable predictive maintenance and avoid downtime through sophisticated data processing that can handle high-frequency sensor data and complex analytical requirements.
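As a rough illustration of masking during the transform stage, the sketch below pseudonymizes an identifier with a keyed hash and masks all but the last four digits of a card number. Note that this uses keyed hashing (HMAC-SHA256) rather than encryption, so the original value cannot be recovered. The key, function names, and sample values are assumptions, and a real deployment would load the key from a secrets manager.

```python
import hashlib
import hmac

# Hypothetical key for illustration only; never hard-code real secrets.
SECRET_KEY = b"replace-with-a-managed-secret"


def pseudonymize(value: str) -> str:
    """Return a stable, non-reversible token for a sensitive value."""
    return hmac.new(SECRET_KEY, value.encode("utf-8"), hashlib.sha256).hexdigest()


def mask_card(number: str) -> str:
    """Hide every digit except the last four."""
    digits = [c for c in number if c.isdigit()]
    return "*" * (len(digits) - 4) + "".join(digits[-4:])


token = pseudonymize("customer-42")
masked = mask_card("4111 1111 1111 1111")
```

Because the same input always yields the same token, analysts can still join and count by customer without seeing the underlying identifier.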

Healthcare organizations process patient data from multiple systems while maintaining HIPAA compliance and enabling real-time clinical decision support through secure, validated data pipelines.

How Does Open Source Transform Modern Data Orchestration vs ETL?

The open-source revolution has fundamentally transformed the data orchestration and ETL landscape, creating unprecedented opportunities for organizations seeking flexible, cost-effective data solutions while fostering innovation through community-driven development. This transformation represents more than just cost savings—it reflects a fundamental shift toward collaborative development models that can adapt quickly to emerging requirements and technology changes.

Apache Airflow stands as the dominant force in open-source orchestration, with its contributor community continuing to grow. The platform has become one of the most active projects within the Apache Software Foundation ecosystem.

How Do Different Orchestration Platforms Compare?

The diversity of open-source orchestration platforms reflects different architectural philosophies and technical approaches. Task-centric orchestrators like Airflow and Luigi organize workflows as DAGs, while data-centric orchestrators such as Dagster and Flyte treat data assets as primary workflow components with native support for data lineage tracking and type safety.
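The DAG idea behind task-centric orchestrators can be sketched with Python's standard library alone. This is not how Airflow or Luigi are implemented. It only shows the core principle that a task runs after everything it depends on. The task names and the `run_pipeline` helper are hypothetical.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline: each task maps to the set of tasks it depends on.
dependencies = {
    "extract_orders": set(),
    "extract_customers": set(),
    "join_datasets": {"extract_orders", "extract_customers"},
    "publish_report": {"join_datasets"},
}


def run_pipeline(deps: dict[str, set[str]], tasks: dict) -> list[str]:
    """Run every task once, in an order that respects its dependencies."""
    executed = []
    for name in TopologicalSorter(deps).static_order():
        tasks[name]()  # a real orchestrator would also schedule, retry, and log
        executed.append(name)
    return executed


# Placeholder callables standing in for real extraction and reporting work.
tasks = {name: (lambda: None) for name in dependencies}
order = run_pipeline(dependencies, tasks)
```

`TopologicalSorter` raises `CycleError` if the graph contains a cycle, which mirrors why purely DAG-based tools cannot express cyclic workflows directly.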

Emerging platforms like Netflix's recently open-sourced Maestro demonstrate how large-scale technology companies contribute specialized solutions back to the community. Maestro's focus on handling both cyclic and acyclic workflow patterns addresses limitations found in traditional DAG-based approaches, while its flexible execution support for Docker images reflects evolving requirements of modern data and ML workflows.

What Role Does Community-Driven Innovation Play?

Airbyte exemplifies the potential for strategic community engagement to accelerate product development. The platform claims a large and active community of data engineers, with a significant portion of its connectors created by community members. Its bounty system and revenue-sharing plans provide sustainable economic incentives for long-term community engagement.

Airbyte maintains more than 600 connectors, which gives it broad integration coverage. This community-driven approach enables rapid expansion of integration capabilities while reducing development overhead for individual organizations.

How Do Open Source vs Open Core Models Differ?

Pure open-source projects like Apache Airflow operate under foundation governance where no single commercial entity controls the project's direction, while open-core models like those used by Kestra, Dagster, and Prefect keep premium features—such as SSO, RBAC, and enterprise integrations—in paid versions. Organizations must weigh the trade-offs between cost, control, and sustainability when selecting a platform.

How Do You Choose Between Data Orchestration vs ETL?

Data orchestration manages and automates the flow of data across systems and processes, while ETL is a specific process for extracting, transforming, and loading data into a target system. Understanding these fundamental differences helps you make informed technology decisions.

What Are the Key Functional Differences?

How Do Flexibility and Adaptability Compare?

Modern ETL tools now support schema evolution and dynamic transformation logic, enabling more responsive data processing. Data orchestration tools integrate with multiple systems simultaneously and support complex conditional logic and dynamic workflow generation.

ETL provides fine-grained control over each pipeline step, while data orchestration offers comprehensive visibility and coordination across interdependent tasks. This distinction becomes critical when managing complex, multi-team environments.

What About Operational Scale and Cost?

ETL proves effective for predictable processing patterns with well-defined transformation requirements. Data orchestration becomes optimal for complex, interconnected data infrastructures requiring coordination across multiple teams and systems.

Using platforms like Airbyte with orchestration tools such as Dagster, Kubernetes, or Prefect—or implementing PyAirbyte—can provide budget-friendly solutions that centralize both ETL pipelines and orchestration capabilities.

What Current Challenges Do Data Engineers Face?

Modern data engineering presents several persistent challenges that impact both orchestration and ETL implementations. Understanding these challenges helps inform architectural decisions and technology selection.

How Do Connector Reliability Issues Impact Operations?

Unreliable connectors and poor state management often lead to crashes, stalled syncs, and expensive full refreshes instead of incremental updates. This becomes particularly problematic when dealing with high-volume data sources or mission-critical business processes.
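The difference between an incremental update and a full refresh comes down to whether the connector remembers where it left off. The sketch below assumes a cursor-based approach keyed on an `updated_at` field. The `SyncState` class and sample rows are hypothetical and do not reflect any particular connector's implementation.

```python
from dataclasses import dataclass


@dataclass
class SyncState:
    """Persisted between runs; losing it forces a full refresh."""
    cursor: str = ""  # highest updated_at value seen so far (ISO 8601)


def incremental_sync(source_rows: list[dict], state: SyncState) -> list[dict]:
    """Return only rows changed since the last run, then advance the cursor."""
    new_rows = [r for r in source_rows if r["updated_at"] > state.cursor]
    if new_rows:
        state.cursor = max(r["updated_at"] for r in new_rows)
    return new_rows


source = [
    {"id": 1, "updated_at": "2024-05-01T10:00:00Z"},
    {"id": 2, "updated_at": "2024-05-01T11:30:00Z"},
]
state = SyncState()
first_run = incremental_sync(source, state)   # empty cursor: reads everything

source.append({"id": 3, "updated_at": "2024-05-02T09:15:00Z"})
second_run = incremental_sync(source, state)  # reads only the new row
```

One caveat of this simple version: the strict comparison can miss a row that arrives later with exactly the same timestamp as the cursor, so real connectors often add a tie-breaker or a small lookback window.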

Organizations frequently struggle with connector maintenance overhead, especially when managing dozens of different integration points. The complexity of maintaining custom connectors while ensuring data quality and reliability creates ongoing operational burden.

What Performance and Scalability Bottlenecks Exist?

Distributed architectures introduce resource contention, network latency, and storage-cost optimization challenges that are difficult to diagnose and resolve. These issues become magnified when processing large volumes of data across multiple cloud environments.

Auto-scaling configurations often lag behind actual demand, creating performance degradation during peak processing periods. Resource allocation becomes increasingly complex when coordinating multiple processing engines and storage systems.

How Does Integration Complexity Affect Implementation?

A fragmented tooling ecosystem forces engineers to maintain brittle custom integrations and middleware, especially when dealing with legacy systems. Each new tool introduction creates additional complexity in maintaining consistent data flows and monitoring capabilities.

API versioning and schema evolution across different systems create ongoing maintenance overhead that diverts engineering resources from strategic initiatives to operational troubleshooting.

What Security and Compliance Challenges Persist?

Implementing consistent security policies across hybrid and multi-cloud environments requires sophisticated governance frameworks to ensure encryption, audit logging, and data residency compliance. Managing credentials and access controls across diverse systems creates additional complexity.

Regulatory requirements continue evolving, requiring ongoing updates to data processing workflows and governance procedures. Maintaining compliance while enabling business agility becomes an ongoing balancing act.

When Should You Consider Data Orchestration?

Choose data orchestration when you handle complex, interconnected workflows such as ML pipelines or real-time analytics that require sophisticated coordination across multiple processing stages. Organizations operating across multiple clouds or hybrid environments benefit from orchestration's ability to manage distributed resources consistently.

Orchestration also fits when enterprise-wide data operations call for comprehensive governance, monitoring, and automation at scale. It excels when you need to coordinate data processing across distributed teams and systems while maintaining visibility and control.

Consider orchestration when your workflows involve conditional logic, dynamic resource allocation, or complex dependency management that goes beyond simple sequential processing. The investment in orchestration pays off when operational complexity exceeds what traditional scheduling and monitoring tools can handle effectively.
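Two of the behaviors mentioned above, conditional logic and automated recovery, can be illustrated with a small sketch. It assumes a retry policy with exponential backoff and a row-count threshold for choosing a branch. The function names, the threshold, and the simulated flaky task are all hypothetical, and orchestration platforms provide these features with far more configuration.

```python
import time


def run_with_retries(task, max_attempts: int = 3, base_delay: float = 0.0):
    """Call task(), retrying with exponential backoff when it raises."""
    for attempt in range(1, max_attempts + 1):
        try:
            return task()
        except Exception:
            if attempt == max_attempts:
                raise  # give up and surface the error to the operator
            time.sleep(base_delay * 2 ** (attempt - 1))


def choose_branch(changed_rows: int, threshold: int = 1000) -> str:
    """Conditional logic: pick the next step based on how much data changed."""
    return "full_rebuild" if changed_rows > threshold else "incremental_update"


# Simulated task that fails twice with a transient error, then succeeds.
calls = {"count": 0}


def flaky_extract() -> int:
    calls["count"] += 1
    if calls["count"] < 3:
        raise ConnectionError("transient network error")
    return 250  # number of changed rows


changed = run_with_retries(flaky_extract)
next_step = choose_branch(changed)
```

The default `base_delay` of zero keeps the example instant. A real policy would wait between attempts and usually retry only on errors known to be transient.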

When Should You Consider ETL?

Choose ETL when you need well-defined extraction, transformation, and loading into specific destinations with predictable data patterns and transformation requirements. ETL proves most valuable when you require complex transformations before loading due to data quality or regulatory needs.

Select ETL approaches when you frequently add new data sources while preserving existing workflows and transformation logic. This approach works well when you have established data governance processes and need consistent transformation patterns across multiple data sources.

ETL is also a good fit when you process sensitive data that demands strict validation, encryption, and audit trails, because the pipeline can enforce security policies during the transformation stage. ETL provides the control and visibility needed for compliance-heavy industries and regulated data processing requirements.

What Does the Future Hold for Data Integration?

Several emerging trends are reshaping the data integration landscape. Zero-ETL paradigms reduce data movement by integrating sources directly with analytical platforms, minimizing data transfer costs and latency while simplifying architecture complexity.

AI orchestration frameworks optimize prompt management, model switching, and cost control for LLM workloads, enabling organizations to leverage artificial intelligence capabilities within their data processing workflows. These frameworks provide intelligent resource allocation and model selection based on workload characteristics.

Serverless compute architectures offer improved cost-performance for ingestion and transformations by automatically scaling resources based on demand. This approach reduces operational overhead while optimizing costs for variable workloads.

Metadata-driven and self-service platforms automate pipeline behavior and governance through rich metadata management, enabling business users to create and manage data workflows without extensive technical expertise.

Conclusion

ETL's core function is to extract, transform, and load data, whereas data orchestration oversees entire workflows and coordinates complex interactions across systems. The most successful organizations use both approaches strategically. Open-source ecosystems, exemplified by platforms like Airbyte, make it possible to implement enterprise-grade capabilities without vendor lock-in or prohibitive licensing costs. The future lies in combining orchestration and ETL to build flexible, scalable data architectures that support innovation while maintaining reliability and performance.

FAQ

What is the main difference between data orchestration and ETL?

Data orchestration manages and automates comprehensive workflows across multiple systems, while ETL specifically focuses on extracting, transforming, and loading data from source to destination. Orchestration provides broader coordination capabilities, while ETL handles specific data processing tasks.

Can you use data orchestration and ETL together?

Yes, combining data orchestration with ETL provides the most comprehensive solution for complex data environments. Use ETL for structured transformation pipelines and orchestration to manage scheduling, dependencies, and coordination across multiple systems and teams.

Which approach is more cost-effective for small businesses?

ETL typically offers lower initial costs for small businesses with predictable data processing needs. However, open-source orchestration platforms can provide cost-effective scaling for growing organizations that need coordination across multiple systems and processes.

How do I know if my organization needs data orchestration?

Consider data orchestration if you manage complex workflows across multiple systems, operate in hybrid or multi-cloud environments, need comprehensive monitoring and governance, or coordinate data processing across distributed teams with interdependent tasks.

What role does Airbyte play in data orchestration vs ETL decisions?

Airbyte provides 600+ pre-built connectors that support both ETL pipelines and orchestration workflows. The platform integrates with orchestration tools like Prefect, Dagster, and Airflow, enabling organizations to implement comprehensive data integration strategies without vendor lock-in or excessive licensing costs.
