Data Pipeline vs. ETL: Optimize Data Flow (Beginner's Guide)

✨ AI Generated Summary

Data pipelines and ETL are distinct but related approaches for moving and processing data, with data pipelines offering broader flexibility, supporting real-time streaming, and handling diverse data types, while ETL focuses on structured batch processing with complex transformations before loading into data warehouses.

- Data pipelines support low-latency, real-time processing and can trigger downstream actions beyond loading.

- ETL emphasizes data quality and compliance, typically running batch jobs with higher latency and mandatory transformations before loading.

- Modern tools like Airbyte enable building hybrid pipelines combining ETL and streaming capabilities, simplifying orchestration and integration.

- Choosing between ETL and data pipelines depends on latency needs, transformation complexity, data variety, compliance, and team expertise.

Businesses now collect massive amounts of data from multiple sources. Two approaches dominate the conversation about moving that data: Extract, Transform, Load (ETL) and the broader concept of a data pipeline. The terms are often used interchangeably, but understanding how a data pipeline differs from ETL is crucial for optimizing your data flow.

This guide walks you through the key differences between data pipelines and ETL, when to use each, and how modern tools such as Airbyte can simplify both batch processing and real-time data streaming.

What Is a Data Pipeline?

A data pipeline is an end-to-end workflow that moves data from one or more sources, such as SaaS apps, IoT sensors, or transactional databases, through a series of steps until it reaches its final destination. These steps can include validation, enrichment, aggregation, or simply copying raw data unchanged. Unlike traditional ETL, which prescribes a fixed extract-transform-load sequence, a general data pipeline can combine these processing tasks in any order, or skip transformation entirely.

Core Components of a Data Pipeline:

- Source Systems – Includes operational databases, APIs, files, logs, or streaming brokers that generate raw data.

- Processing Logic – Code or configuration that transforms, filters, or routes the records.

- Destination – Where the data is ultimately loaded, such as a data lake, message queue, operational datastore, or data warehouse.
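The three components above can be sketched in a few lines of Python. This is a minimal illustration, not a production design: the `source`, `process`, and `run_pipeline` names and the sample records are hypothetical, and an in-memory list stands in for a real destination such as a data lake or warehouse.

```python
from typing import Callable, Iterable, Iterator, List


def source() -> Iterator[dict]:
    """Source system: stands in for a database, API, or log stream."""
    yield {"user_id": 1, "event": "click", "amount": "19.99"}
    yield {"user_id": 2, "event": "view", "amount": None}
    yield {"user_id": 3, "event": "click", "amount": "5.00"}


def process(records: Iterable[dict]) -> Iterator[dict]:
    """Processing logic: drop incomplete records and cast amounts to float."""
    for record in records:
        if record["amount"] is None:
            continue
        yield {**record, "amount": float(record["amount"])}


def run_pipeline(
    src: Callable[[], Iterable[dict]],
    steps: List[Callable[[Iterable[dict]], Iterable[dict]]],
    sink: Callable[[dict], None],
) -> int:
    """Wire source -> processing steps -> destination; return records written."""
    records = src()
    for step in steps:
        records = step(records)
    written = 0
    for record in records:
        sink(record)
        written += 1
    return written


# Destination: an in-memory list used here in place of a real datastore.
destination = []
loaded = run_pipeline(source, [process], destination.append)
print(loaded, destination)
```

Because each step is a generator, records flow through one at a time, so the same wiring works whether the source is a finite file or a long-running feed.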

Execution Styles:

- Batch Processing – Scheduled jobs that move large volumes of historical data or periodic snapshots.

- Streaming/Real-Time Data Processing – Continuous ingestion that pushes events within seconds of creation. This is ideal for applications such as fraud detection, personalization, or monitoring financial transactions.
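To make the two execution styles concrete, the sketch below applies the same transformation in a batch run and in a streaming loop. The `enrich` rule, the 100-unit threshold, and the `event_feed` generator (a stand-in for a broker consumer) are assumptions made for illustration.

```python
from typing import Iterable, Iterator, List


def enrich(event: dict) -> dict:
    """Shared processing logic: flag large transactions (threshold is illustrative)."""
    return {**event, "is_large": event["amount"] >= 100}


def run_batch(events: List[dict]) -> List[dict]:
    """Batch: the full dataset is available up front and processed in one run."""
    return [enrich(event) for event in events]


def run_streaming(events: Iterable[dict]) -> Iterator[dict]:
    """Streaming: each event is processed as soon as it arrives."""
    for event in events:
        yield enrich(event)


def event_feed() -> Iterator[dict]:
    """Hypothetical stand-in for a message-broker consumer."""
    for amount in (20, 250, 75):
        yield {"amount": amount}


# Batch: collect everything first, then process.
batch_result = run_batch(list(event_feed()))

# Streaming: react to each event individually (for example, raise a fraud alert).
stream_result = []
for enriched in run_streaming(event_feed()):
    stream_result.append(enriched)

print(batch_result == stream_result)
```

The business logic is identical in both styles; what changes is when it runs and how long a record waits before it is processed.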

What Is ETL?

ETL (Extract, Transform, Load) is a data integration pattern where data is first extracted from a source system, transformed through complex logic, and then loaded into a structured destination (most often a data warehouse).

ETL Process Breakdown:

- Extract – Data is pulled from tables, files, or APIs into a staging area.

- Transform – The data is cleaned, joined, validated, or aggregated to fit business rules and analytics schemas.

- Load – The transformed data is then written into the destination, typically a data warehouse optimized for business intelligence.
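The three stages can be sketched with Python's standard library alone. This is an illustrative example under stated assumptions: the inline CSV text, the `orders` table, and the cleaning rules are made up, and an in-memory SQLite database stands in for the data warehouse.

```python
import csv
import io
import sqlite3

# Hypothetical raw export; a real job would read a file, table, or API response.
RAW_CSV = """order_id,customer,amount
1, alice ,10.50
2,BOB,
3,carol,7.25
"""


def extract(text):
    """Extract: read raw rows into a staging list."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Transform: drop rows without an amount, normalize names, cast types."""
    cleaned = []
    for row in rows:
        if not row["amount"].strip():
            continue
        cleaned.append(
            (int(row["order_id"]), row["customer"].strip().lower(), float(row["amount"]))
        )
    return cleaned


def load(conn, rows):
    """Load: write only the cleaned rows into the destination table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()


conn = sqlite3.connect(":memory:")
load(conn, transform(extract(RAW_CSV)))
loaded_rows = conn.execute(
    "SELECT order_id, customer, amount FROM orders ORDER BY order_id"
).fetchall()
print(loaded_rows)
```

Note that the row with the missing amount never reaches the destination: in ETL, data that fails the transformation rules is filtered out before loading.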

While ETL is optimized for data quality and compliance, it can introduce higher latency. Many teams now reverse the order to ELT (Extract, Load, Transform), where data is first loaded and then transformed inside powerful cloud warehouses.
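For contrast, here is a minimal ELT sketch: raw records are loaded untouched, and the transformation runs as SQL inside the destination. SQLite stands in for a cloud warehouse, and the table names and sample rows are assumptions for illustration.

```python
import sqlite3

# Hypothetical raw records, loaded exactly as received (everything is text).
raw_orders = [("1", " alice ", "10.50"), ("2", "BOB", ""), ("3", "carol", "7.25")]

warehouse = sqlite3.connect(":memory:")

# Load first: no cleaning or type casting happens before the data lands.
warehouse.execute("CREATE TABLE raw_orders (order_id TEXT, customer TEXT, amount TEXT)")
warehouse.executemany("INSERT INTO raw_orders VALUES (?, ?, ?)", raw_orders)

# Transform afterwards, using the destination's own SQL engine.
warehouse.execute(
    """
    CREATE TABLE orders AS
    SELECT CAST(order_id AS INTEGER) AS order_id,
           LOWER(TRIM(customer))     AS customer,
           CAST(amount AS REAL)      AS amount
    FROM raw_orders
    WHERE TRIM(amount) <> ''
    """
)

elt_rows = warehouse.execute(
    "SELECT order_id, customer, amount FROM orders ORDER BY order_id"
).fetchall()
raw_count = warehouse.execute("SELECT COUNT(*) FROM raw_orders").fetchone()[0]
print(elt_rows, raw_count)
```

The raw table keeps all three records, including the incomplete one, so transformations can be revised and rerun later without extracting from the source again.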

Data Pipeline vs ETL: Key Differences at a Glance

The Bottom Line: ETL pipelines are one type of data pipeline, but not all data pipelines follow the ETL sequence.

Data Pipeline vs ETL Pipeline: Deep Dive

Typical Use Cases

- ETL excels when you need to:

  - Populate a finance-grade data warehouse for month-end close or regulatory reporting.

  - Perform rigorous data cleansing on customer data before presenting it to executives.

  - Migrate historical datasets from legacy systems to a modern analytics stack.

- Data Pipelines serve broader needs, including:

  - Streaming clickstream events for real-time recommendation engines.

  - Transferring data between microservices in an event-driven architecture.

  - Replicating operational data for disaster recovery.

Data Processing & Latency

- ETL runs in predictable batches (nightly, hourly, or on-demand) to process large volumes of data with strict business rules.

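- Data Pipelines can mix batch loads for backfills with low-latency stream processing for operational analytics. Brokers such as Apache Kafka and Amazon Kinesis can deliver events with sub-second latency, while micro-batch loaders such as Snowflake Snowpipe typically make data available within minutes.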

Transformation Strategy

- ETL: Heavy transformations such as lookups, aggregation, and validation are done in the staging layer before data enters the warehouse.

- Data Pipelines: Some pipelines defer transformations (ELT) or apply lightweight filters in-flight to keep throughput high and storage costs low.

Data Storage Targets

- Data Pipelines can send data to various destinations like object storage (data lakes), search indexes, message brokers, or data warehouses.

- ETL almost always ends in a data warehouse optimized for SQL analytics and business processes.

Leveraging Data Pipelines for Efficient Data Migration and Management

When you migrate data or manage it at scale, how efficiently the pipeline moves and processes that data matters. Both ETL and general data pipelines ensure that relevant data is moved, transformed, and loaded correctly into the target data warehouse or other storage. Whether you're dealing with financial data, sales data, or pre-aggregated data, the right pipeline approach determines how quickly and reliably you can analyze it.

Key Considerations in the Loading Process

The loading process is a critical part of any ETL or data pipeline workflow. Efficient data migration requires that source data is extracted and transformed to match the target data warehouse's schema. If the sources use different data formats, each format must be converted correctly so that the final data is both accurate and usable.

For example, migrating financial data demands careful attention to regulatory compliance and data quality checks, while sales data may need to be aggregated and analyzed in real time for business intelligence purposes. Choosing the right pipeline streamlines data management, letting you maintain consistency and compliance while reducing the time spent on manual data processing.
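As a rough sketch of the schema-matching step, the example below converts records from two differently formatted sources into one target schema and validates them before loading. The field names, date formats, and the three-column target schema are all hypothetical.

```python
import json
from datetime import datetime, timezone
from decimal import Decimal

# Hypothetical target schema: column names in the order the warehouse expects.
TARGET_COLUMNS = ("order_id", "amount_cents", "ordered_at")


def from_legacy_row(row):
    """Legacy export: decimal-string amounts and DD/MM/YYYY dates (assumed UTC)."""
    ordered = datetime.strptime(row["OrderDate"], "%d/%m/%Y").replace(tzinfo=timezone.utc)
    return {
        "order_id": int(row["OrderID"]),
        # Decimal avoids the rounding errors float arithmetic causes with money.
        "amount_cents": int(Decimal(row["Total"]) * 100),
        "ordered_at": ordered.isoformat(),
    }


def from_api_payload(payload):
    """API payload: amounts already in cents, timestamps as epoch seconds."""
    data = json.loads(payload)
    ordered = datetime.fromtimestamp(data["created"], tz=timezone.utc)
    return {
        "order_id": data["id"],
        "amount_cents": data["amount_cents"],
        "ordered_at": ordered.isoformat(),
    }


def validate(record):
    """Data quality check: reject records that do not match the target schema."""
    if tuple(record) != TARGET_COLUMNS:
        raise ValueError(f"unexpected columns: {tuple(record)}")
    if record["amount_cents"] < 0:
        raise ValueError("amount must not be negative")
    return record


migrated = [
    validate(from_legacy_row({"OrderID": "17", "Total": "19.99", "OrderDate": "31/12/2023"})),
    validate(from_api_payload('{"id": 18, "amount_cents": 500, "created": 1704067200}')),
]
print(migrated)
```

Keeping one small converter per source format, plus a single shared validation step, makes it easier to add sources later without touching the load logic.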

How Data Pipelines Help with Analyzing and Managing Data

In a world of rapidly changing data, it's essential that businesses can quickly analyze data and draw actionable insights. With data pipelines, you can automate the transfer and transformation of relevant data, enabling faster decision-making processes.

By integrating various data sources such as transactional data, customer data, or inventory data, you can feed the necessary information directly into analytics tools for further analysis.

Data pipelines are also essential for large-scale data migrations, ensuring that data flows between systems while retaining its integrity and quality throughout the process.

Security, Governance & Compliance

Highly regulated industries require strong audit trails, no matter which model you choose.

Cost, Complexity & Maintenance

- Infrastructure: ETL may require powerful servers during batch windows, while serverless ELT offloads compute to cloud warehouses.

- Operational Overhead: Streaming pipelines require 24/7 monitoring, but pay-for-use pricing can help offset variable costs.

- Skill Sets: ETL developers focus on SQL and dimensional modeling, while data pipeline engineers manage stream processing frameworks and orchestration tools.

- ROI Considerations: Real-time analytics can lead to higher sales conversion rates or reduce fraud, justifying the higher streaming costs.

Choosing the Right Approach: Quick Checklist

- Data Freshness Needed? Seconds → Data Pipeline; Hours/Days → ETL.

- Transformation Complexity? Heavy logic → ETL; Minimal or deferred → Pipeline.

- Data Volume & Variety? High-velocity logs → Pipeline; Structured ERP tables → ETL.

- Budget & Team Skills? Existing ETL expertise → Stick with ETL; Need scalability → Invest in data pipelines.
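The checklist can also be expressed as a small scoring function. This is only a sketch of one way to weigh the four questions: the `Requirements` fields, the 60-second and one-hour thresholds, the equal weighting, and the "hybrid" tie-break are assumptions, not rules from any tool.

```python
from dataclasses import dataclass


@dataclass
class Requirements:
    max_latency_seconds: float        # how fresh the data must be
    heavy_transformations: bool       # complex business logic before loading?
    high_velocity_unstructured: bool  # e.g. logs or event streams vs. ERP tables
    team_knows_etl: bool              # existing ETL expertise on the team


def recommend(req: Requirements) -> str:
    """Count one vote per checklist question and return the leading approach."""
    pipeline_votes = 0
    etl_votes = 0

    # Data freshness: seconds favor a pipeline, hours or days favor ETL.
    if req.max_latency_seconds < 60:
        pipeline_votes += 1
    elif req.max_latency_seconds >= 3600:
        etl_votes += 1

    # Transformation complexity.
    if req.heavy_transformations:
        etl_votes += 1
    else:
        pipeline_votes += 1

    # Data volume and variety.
    if req.high_velocity_unstructured:
        pipeline_votes += 1
    else:
        etl_votes += 1

    # Team skills.
    if req.team_knows_etl:
        etl_votes += 1
    else:
        pipeline_votes += 1

    if pipeline_votes == etl_votes:
        return "hybrid"
    return "data pipeline" if pipeline_votes > etl_votes else "etl"


print(recommend(Requirements(5, False, True, False)))
print(recommend(Requirements(86400, True, False, True)))
print(recommend(Requirements(5, True, True, True)))
```

In practice the questions rarely carry equal weight; a hard latency requirement, for instance, may override every other consideration.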

Build Automated Pipelines Using Airbyte

Airbyte unifies both worlds: you can build classic ETL pipelines, real-time data pipelines, or hybrid models without reinventing the wheel.

Key Features:

- 600+ Connectors: Move data from multiple sources into Snowflake, BigQuery, Redshift, or a data lake.

- Custom Connectors: Create bespoke integrations with the Connector Development Kit.

- Scheduling & Monitoring: Orchestrate batch loads or continuous replication with monitoring for data quality.

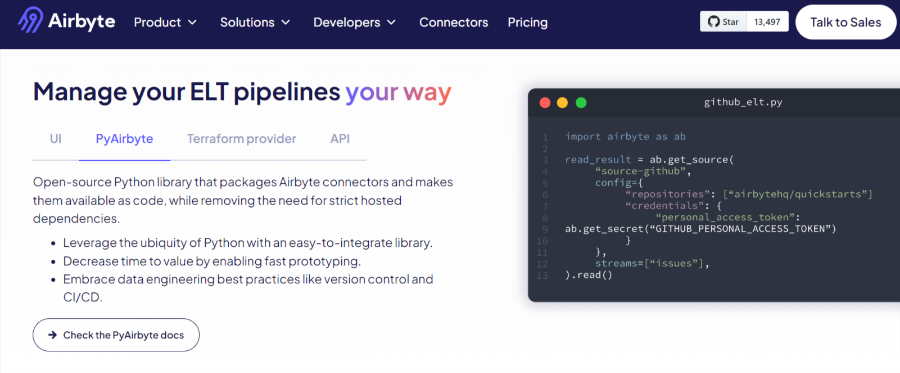

- Flexible Interfaces: Manage pipelines via UI, PyAirbyte, Terraform, or REST APIs.

Choosing the Right Data Pipeline for Your Needs

The ETL vs. Data Pipeline debate isn't a strict either/or decision. Most modern architectures blend ETL jobs for curated analytics with high-throughput pipelines for operational insights. When deciding which approach is right for you, consider:

- Latency requirements and business intelligence goals.

- Transformation complexity versus flexibility.

- Security, governance, and compliance obligations.

- Total cost of ownership and team expertise.

Over 40,000 engineers rely on Airbyte to move data, improve data quality, and keep analytics humming. Ready to optimize your data workflows? Sign up and start building in minutes.