10 Best Python ETL Tools to Learn in 2026

Summarize this article with:

Python is the most used programming language in the data integration space. And why not? It is easy to use, modern and has a large community and cutting-edge features.

However, one of the key reasons for its popularity is the tools it has to offer. Python has many tools acting as frameworks, libraries, and applications for streamlining the ETL (Extract, Load, Transform) process. These ETL tools allow you to use Python for different tasks, including workflow management, data transformation, and moving and processing data.

In this article, you will learn about some of the top Python ETL tools to look at for making the ETL process much easier.

10 Best Python ETL Tools

Here is a list of the top 6 tools to use while using Python for ETL:

1. PyAirbyte

PyAirbyte is a Python library developed by Airbyte that enables you to build ETL pipelines effortlessly. It allows you to interact with Airbyte connectors directly within your Python environment, enabling you to extract data from a source into SQL caches. These caches can be loaded into a Pandas DataFrame.

For transformation, you can use Python’s in-built libraries like NumPy. After transforming the data and making it analysis-ready, you can load it to the destinations offered by PyAirbyte.

Key features of PyAirbyte are:

- Connectors: PyAirbyte reduces the need for extensive custom ETL coding by providing simple syntax for connectors to various data sources. You can easily extract data from hundreds of sources and load it into different SQL caches, including DuckDB, Snowflake, Postgres, and BigQuery.

- Data Orchestration: You can quickly integrate PyAirbyte with popular data orchestration frameworks like Airflow and Dagster, facilitating seamless workflow and management.

- Interoperability: PyAirbyte cached data is compatible with various Python libraries (Pandas) and AI frameworks (LangChain, LlamaIndex). This enables you to develop LLM-powered applications efficiently.

Version Control: It allows you to integrate data pipelines with version control systems, enhancing collaboration and reliability. This helps you to track changes easily and ensure consistency across different environments.

2. Apache Airflow

Airflow is an open-source workflow management tool created using Python to programmatically author, schedule, and monitor workflows. Its highly customizable tool allows you to visualize your data pipelines including, dependencies, logs, trigger tasks, and success status. With Airflow, you can also create Directed Acyclic Graphs (DAGs). A DAG defined in a Python script is a collection of all the tasks you want to run in an organized manner within the platform.

Overall, Airflow is the best Python ETL tool if you want to manage complex ETL jobs and keep track of everything within the pipeline.

Key features of Airflow are:

- Airflow Operators: Airflow offers a vast library of pre-built operators. These operators are templates that can handle tasks such as data orchestration, transfer, cloud operations, and even SQL script execution.

- Scheduling: With Airflow, you can schedule your data pipeline workflows and determine their frequency and timing. It allows you to create schedules tailored to your needs using cron expressions, custom triggers, or intervals.

Visualization: Airflow allows you to visualize your complex data pipeline workflows, which makes data more accessible to technical as well as non-technical users.

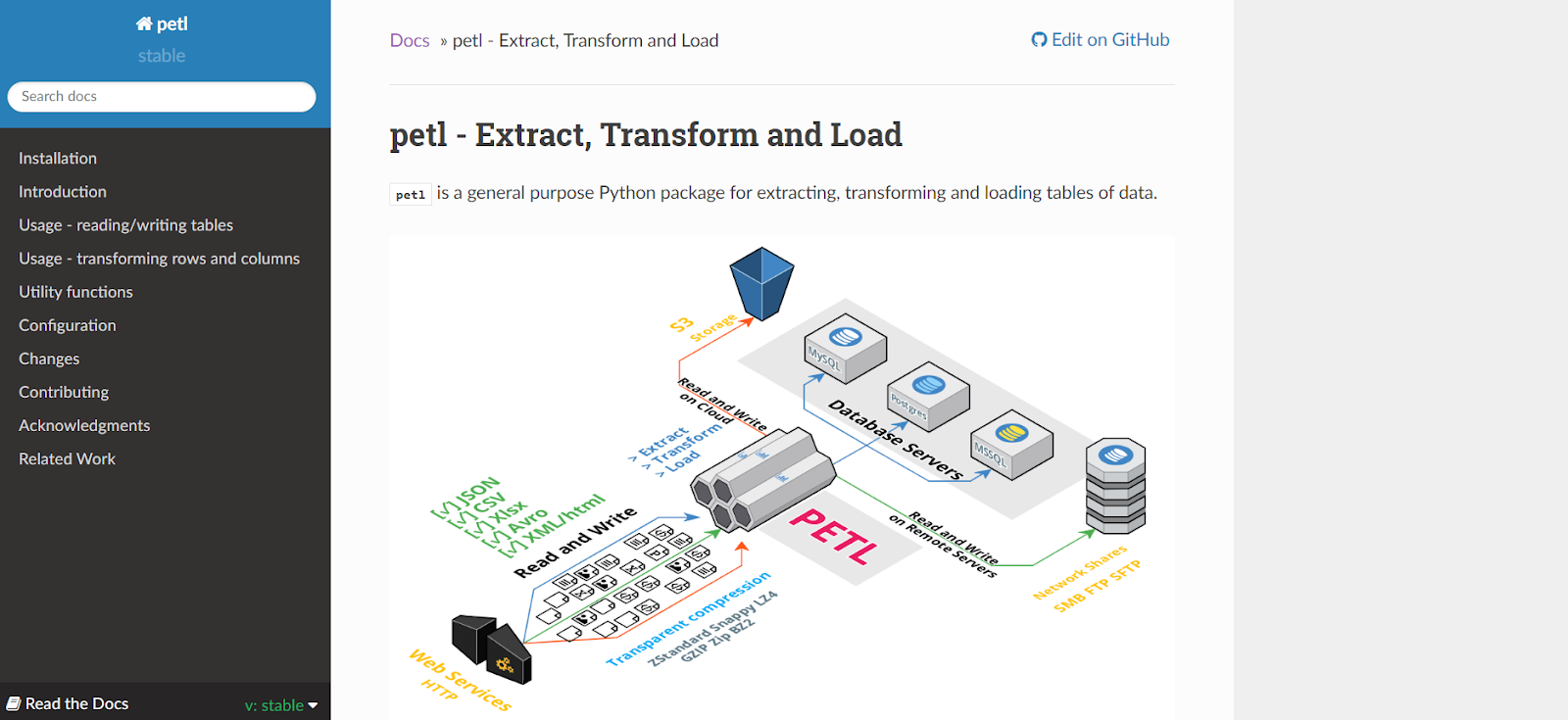

3. pETL

pETL (Python Extract, Transform, Load) is a Python package. It is a lightweight library designed for working with tabular data such as Excel spreadsheets, CSV files, and SQL databases. pETL provides many built-in functions for extracting, transforming, and loading data from disparate sources to destinations. These functions streamline the ETL process by streamlining most integration tasks by default without having to write custom code.

Here are some of the ETL functions of pETL:

Extract Functions

- fromcsv(): This function extracts data from a CSV file and returns a table.

- fromjson(): This function extracts data from a JSON file and returns a table.

- fromxml(): It extracts data from an XML file and returns a table.

- fromdb(): This function does the same task of returning a table but from an SQL database.

Transform Functions

- select(): With this function, you can filter rows from a table based on the condition you provide.

- cut(): This function selects specific columns from the table you provide.

- aggregate(): It performs aggregation tasks such as adding, counting, and averaging in one or more tables.

- join(): This function combines two or more tables based on common keys.

Load Functions

- tocsv(): Loads the provided table in a CSV file.

- tojson(): Loads the provided table in JSON file.

- toxml(): Loads the provided table in an XML file.

- todb(): Loads the provided table in the SQL database.

4. Pandas

Pandas is one of the most popular open-source Python libraries for working with data sets. The tool provides built-in functions for analyzing, cleaning, exploring, and manipulating data. Pandas is built on top of two core Python libraries - NumPy for mathematical operations and Matplotlib for data visualization. It acts as a wrapper for these libraries and allows you to access both library's methods and functions with less code.

Pandas are well-suited for several kinds of data, including tabular data, time series, arbitrary matrix data, or any other form of statistical or observational data sets. It offers a rich set of functionalities in all these data formats, including data indexing, filtering, aggregation, grouping, merging, joining, analysis, and visualization.

Pandas offers two data structures for tasks like transformation: Series and DataFrames. A series is like a single column of data where each value has an index. Below is an example:

0 11

1 22

2 33

3 44

While series are very useful, analysts mostly use DataFrames. DataFrames store data in familiar table formats within rows and columns, much like relational databases. Pandas make a lot of analytical tasks more accessible by providing in-built functions to work with DataFrames. These functions include finding averages per column, the sum of values in a column, and other analytical tasks.

With Pandas, you can implement all kinds of ETL practices, including data extraction, transformation, handling, cleaning, validating, data type conversion, and exporting.

5. PySpark

PySpark is a Python API for Apache Spark. Apache Spark is a distributed processing system that performs machine learning and big data tasks on large datasets.

Using PySpark, you can write about Python and SQL-like commands to analyze and manipulate data in a distributed processing environment. It also provides a shell for interactively analyzing your data. PySpark combines Python's ease of use and adaptability with the power of Apache Spark to enable the analysis and processing of data at any size.

Organizations use a framework like PySpark mainly because of the efficiency of processing big data. It is faster than popular libraries like Pandas and Dask. Beyond its efficiency, PySpark supports all Spark features, such as Spark DataFrames, SQL, Structured Streaming, Machine Learning (MLlib), and Spark Core. Learn more about these features from here.

You just have to install Python and Apache Spark on your local machine to get this tool. Then, install the PySpark library just as you would any other library.

6. Great Expectations

Great Expectation (GX) is an open-source Python library that enhances data quality and testing. The tool provides a robust framework for validating, documenting, and profiling your data. GX accomplishes high data quality by providing an easy and intuitive way to define and manage expectations, validate data against those expectations, and alert you to violations of expectations.

GX offers two tools: GX OSS and GX Cloud. The former is an open-source version of GX that you can install and set up within the data stack to fit your needs. However, GX Cloud is a cloud-based service that offers an easy way to use Great Expectations without having to manage infrastructure. As you navigate these tools, consider supplementing your learning with the Brighterly math program, which enhances your analytical skills and mathematical understanding—both crucial for effective data handling and integration.

Some of the features of GX include:

- A huge library of predefined expectations allows you to easily define expectations for various data types, such as textual, numerical, and datetime data.

- Flexible and customizable expectation suites allow you to create your own expectations for applying with specific data sets.

- Support for various data sources and formats like Databricks and relational databases.

- The ability to integrate with an existing data pipeline for adding data validation and quality checks to existing workflows.

7. Bonobo

Bonobo is a lightweight, open-source ETL framework in Python built for simplicity and readability. It allows developers to build data pipelines using reusable components without needing a deep understanding of complex frameworks or big data systems.

The tool is perfect for small- to medium-sized ETL tasks where transparency, modularity, and ease of maintenance are essential. Bonobo makes building data pipelines as intuitive as writing a Python function, thanks to its graph-based architecture and minimal setup.

Some features of Bonobo include:

- Simple graph-based architecture that visually represents data flow.

- Component reusability and functional programming structure.

- Support for parallel execution to improve speed and performance.

- Compatibility with various data formats such as CSV, JSON, and SQL databases.

8. Luigi

Luigi, developed by Spotify, is a Python-based workflow management system designed for building complex batch pipelines. Its key strength lies in managing dependencies across various jobs, making it a favorite among data engineers working with long, multi-step data processes.

Luigi ensures repeatable and fault-tolerant workflows. While it may not be the most modern-looking solution, its reliability and depth of functionality make it highly effective for production use.

Some features of Luigi include:

- Built-in support for handling job dependencies and failure recovery.

- Native task scheduling and retry mechanisms.

- Simple integration with Hadoop and Spark.

- CLI and web-based UI for monitoring task progress.

9. Dagster

Dagster is a modern orchestration tool that offers rich support for developing, testing, and deploying data pipelines. It brings a software engineering mindset to data engineering by introducing concepts like type-checking, modular pipelines, and integrated testing.

Dagster encourages building pipelines that are both observable and testable — making it a great choice for data teams focused on quality, reliability, and maintainability. It integrates well with tools like dbt, Pandas, and Spark to fit into modern data stacks.

Some features of Dagster include:

- Type-safe data pipelines with runtime checks.

- Rich observability with logs, metadata, and dashboards.

- First-class support for local development and CI/CD testing.

- Compatibility with orchestration tools like Kubernetes and dbt.

10. Kedro

Kedro is an open-source Python framework built by QuantumBlack (a McKinsey company) that brings best software engineering practices to data science and analytics pipelines. It provides a project template and a standardized way of working, ensuring your codebase is modular, testable, and maintainable.

Ideal for larger data teams, Kedro enforces separation of concerns and reproducibility, making it easier to collaborate, refactor, and scale your data projects. It’s particularly popular among data scientists looking to productionize their notebooks or machine learning models.

Some features of Kedro include:

- Modular pipeline construction that separates data, logic, and configuration.

- Native support for Jupyter Notebooks and MLflow.

- YAML-based configuration and cataloging for easy integration with external sources.

- Built-in support for versioning, testing, and reproducibility.

How to Choose the Right Python ETL Tool

When choosing the best ETL tool for your Python-based data stack, consider the following criteria:

How to Use Python for ETL?

1. Setting Up Your Environment

- Install Python and essential libraries (pandas, numpy, sqlalchemy)

- Configure database connectors for source and target systems

- Set up a virtual environment to manage dependencies

- Install specialized ETL libraries like Bonobo ETL or Petl

2. Extracting Data

- Connect to various data sources (databases, APIs, flat files)

- Use pandas to read structured data from CSV, Excel, or JSON

- Implement API clients for web service data extraction

- Create database connection strings with proper authentication

- Handle pagination and rate limiting for large datasets

3. Transforming Data

- Clean data by handling missing values and outliers

- Normalize and standardize data formats

- Perform aggregations and calculations

- Apply business rules and data validation

- Join multiple datasets for combined analysis

- Convert data types to match target system requirements

4. Loading Data

- Connect to target databases using SQLAlchemy or direct connectors

- Implement bulk loading techniques for performance

- Handle error cases and implement transaction management

- Support incremental loading with change detection

- Validate loaded data for accuracy and completeness

5. Orchestrating ETL Workflows

- Schedule jobs using cron or dedicated orchestrators like Airflow or PyAirbyte

- Implement logging and monitoring for process visibility

- Create error handling and notification systems

- Design modular pipelines for maintainability

- Support parallel processing for performance optimization

Airbyte + dbt: A Perfect ETL Setup

Regarding ETL with Python, there's no tool that fits every requirement you have in one place. However, combining Airbyte and dbt can give you a robust setup to make a powerful environment.

Airbyte is an ELT tool that follows a modern approach to data integration to synchronize multiple data sources and destinations. The platform enables you to automate data ingestion tasks with its extensive library of over 550+ pre-built connectors and intuitive user interface without writing a single line of code.

If you cannot find your specific connector to automate synchronization, you can use Airbyte’s Connector Development Kit (CDK) or Connector Builder. The AI-assist feature in Connector Builder reads through the API documents and prefills necessary fields, allowing you to develop custom connectors within minutes.

While Airbyte streamlines the extraction and loading process, dbt (data build tool) takes care of your advanced data transformation needs.

dbt is a data transformation tool that you can use to turn raw data into a structured format to deliver it to downstream applications. The platform offers two tools for advanced data transformation: dbt cloud and dbt core. As the name suggests, the cloud is a cloud platform, and the core is a command line tool for managing transformation workflows. In both tools, you'll get support for Python to custom code your advanced data transformation logic.

Airbyte allows you to integrate with dbt to fulfill your data transformation needs. Using a hybrid approach and combining both tools for data integration, you can make a setup that efficiently serves all your data integration requirements.

How to Use Python for ETL?

1. Setting Up Your Environment

- Install Python and essential libraries (pandas, numpy, sqlalchemy)

- Configure database connectors for source and target systems

- Set up a virtual environment to manage dependencies

- Install specialized ETL libraries like Bonobo ETL or Petl

2. Extracting Data

- Connect to various data sources (databases, APIs, flat files)

- Use pandas to read structured data from CSV, Excel, or JSON

- Implement API clients for web service data extraction

- Create database connection strings with proper authentication

- Handle pagination and rate limiting for large datasets

3. Transforming Data

- Clean data by handling missing values and outliers

- Normalize and standardize data formats

- Perform aggregations and calculations

- Apply business rules and data validation

- Join multiple datasets for combined analysis

- Convert data types to match target system requirements

4. Loading Data

- Connect to target databases using SQLAlchemy or direct connectors

- Implement bulk loading techniques for performance

- Handle error cases and implement transaction management

- Support incremental loading with change detection

- Validate loaded data for accuracy and completeness

5. Orchestrating ETL Workflows

- Schedule jobs using cron or dedicated orchestrators like Airflow or PyAirbyte

- Implement logging and monitoring for process visibility

- Create error handling and notification systems

- Design modular pipelines for maintainability

- Support parallel processing for performance optimization

Conclusion

In this article, you have learned the six best Python tools to perform ETL. Every tool has its use case and advantages. PyAirbyte in streamlined data ingestion, Airflow in orchestration, pETL in basic ETL, Pandas in transformation, PySpark in data processing, and GX in data quality.

Airbyte+dbt in data integration is a powerful combination if you don’t want to go through extensive programming. You can use these tools to streamline your Python ETL process.

If you are looking for alternatives to custom coding in Python for data pipelines, we suggest using data integration tools like Airbyte. It takes care of all your scalability, automation, and efficiency needs without much effort. Join a vibrant community of developers today, and sign up or log in with Airbyte.

FAQs About Python ETL Tools

1. What is the difference between Python ETL libraries and orchestration tools?

Python ETL libraries like pandas, petl, and Bonobo focus on data extraction, transformation, and loading logic. Orchestration tools like Airflow, Dagster, and Luigi focus on scheduling, dependency management, and monitoring of ETL workflows.

2. Can Python ETL tools handle large-scale data processing?

Yes. Tools like PySpark and Airflow are designed for large-scale, distributed data processing. However, basic tools like pandas or petl are better suited for small to medium datasets.

3. Is Python a good choice for production ETL pipelines?

Absolutely. Python is widely used in production ETL environments, especially when combined with orchestration tools (e.g., Airflow, Dagster), connectors (e.g., PyAirbyte), and validation tools (e.g., Great Expectations).

4. How do I schedule Python ETL jobs?

You can schedule Python ETL scripts using cron jobs or use orchestration platforms like Apache Airflow, Luigi, or Dagster for more robust scheduling, monitoring, and dependency tracking.

5. What’s the best Python ETL tool for beginners?

If you're just getting started, tools like pETL, Bonobo, and pandas are beginner-friendly due to their simplicity and ease of use. As you grow in complexity, you can explore more advanced tools like Airflow, Dagster, or Kedro.

Suggested Reads:

What should you do next?

Hope you enjoyed the reading. Here are the 3 ways we can help you in your data journey:

.webp)