Kafka CDC: A Comprehensive 101 Guide for You

You can't build timely analytics on data that arrives hours late. Traditional batch ETL forces you to wait for nightly windows, leaving dashboards stale and microservices missing critical events. Every upstream schema change risks breaking delicate jobs. In fast-moving businesses, that lag costs revenue and creates mounting technical debt.

Kafka-based Change Data Capture turns every database insert, update, or delete into a live event stream. Systems stay in sync without heavy batch pulls. With durable logs, scalable partitions, and independent consumer groups, you shift from brittle jobs to event-driven pipelines that power instant analytics and responsive applications.

This guide covers CDC fundamentals, Kafka's architecture, step-by-step setup, and pitfalls to avoid.

TL;DR: Kafka CDC at a Glance

- Turn every database insert, update, and delete into a live event stream instead of waiting on slow batch ETL jobs.

- Stream changes in near-real time by reading transaction logs (binlog/WAL) through log-based CDC, keeping source load minimal and events in commit order.

- Kafka provides the backbone: durable logs, scalable partitions, consumer groups, and replayable history for analytics, microservices, and feature stores.

- Most teams pair Kafka with Debezium, Kafka Connect, or Airbyte to extract changes, publish them to topics, validate schemas, and manage offsets safely.

- A standard flow emerges: database → CDC connector → Kafka topics → independent consumers for warehouses, apps, ML, or downstream systems.

- Expect operational challenges like schema drift, hot partitions, lag, and I/O saturation—solved with schema registries, careful partitioning, monitoring, and dead-letter queues.

- Airbyte simplifies CDC setup with 600+ connectors, built-in log-based extraction, schema handling, and easy deployment if you don’t want to manage Debezium or Connect clusters.

What Is CDC and Why Does Kafka Matter for It?

Change Data Capture (CDC) streams every insert, update, and delete from your operational database in near-real time. It reads the database's transaction log and emits structured events describing what changed. Because you move only new records, you avoid the heavy full-table scans that slow nightly jobs and leave dashboards hours behind live activity.

Apache Kafka provides the durable backbone those streams need. Its partitioned, append-only log scales linearly, so high-volume tables never drown a single node. Built-in replication keeps change events safe even if a broker fails, while consumer groups let multiple applications process the same stream independently.

The architecture mirrors a database's own log structure, letting CDC connectors translate transaction entries to Kafka messages with minimal friction. This alignment turns raw change data into a resilient, replayable event stream you can rewind, reprocess, or fan out to downstream sinks.

Database → CDC Connector → Kafka → Consumers (Analytics / Apps / Services)

Together, CDC and Kafka replace the lag and fragility of traditional ETL with an always-on pipeline that keeps every system in sync as changes happen.

How Does Kafka CDC Work at a High Level?

A Kafka-based data capture pipeline watches your database's transaction log and turns every insert, update, or delete into an event you can replay, store, or react to in near-real time. Each piece of work happens independently, so you can evolve one layer without breaking the others.

The workflow follows these steps:

- Change Extraction: A connector tails the database's binlog, WAL, or redo logs and grabs every committed transaction without touching user queries. Because the connector reads the same append-only log your database uses for recovery, you avoid the load spikes and stale snapshots common in traditional processing approaches.

- Change Transformation: Each raw log entry is reshaped into a structured event that carries the operation type, before/after row images, and metadata like the source timestamp. This keeps the payload self-describing and ready for schema validation downstream. Typically you'll see JSON or Avro formats.

- Change Delivery: The connector publishes those events to Kafka topics. Most teams map topics one-to-one with tables (for example, orders.public.orders) so you can scale partitions independently and apply compaction or retention rules that fit each dataset.

- Downstream Consumption: Consumers such as warehouse loaders, microservices, or feature stores subscribe to the relevant topics, each through its own consumer group. Kafka tracks offsets separately for every group, letting you replay history, fork new applications, or perform blue-green deployments without changing the extraction logic.

By chaining these steps together, you replace fragile nightly dumps with a durable event log that keeps every system in sync as changes happen.
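The transformation and consumption steps above can be sketched in a few lines. This is a minimal illustration, assuming a Debezium-style envelope with `op`, `before`, and `after` fields; the `apply_change` helper and the sample rows are hypothetical, and a real consumer would read these events from a Kafka topic rather than a list.

```python
import json


def apply_change(replica: dict, event: dict, key_field: str = "id") -> None:
    """Apply one change event to an in-memory replica keyed by primary key."""
    op = event["op"]
    if op in ("c", "r", "u"):  # create, snapshot read, update
        row = event["after"]
        replica[row[key_field]] = row
    elif op == "d":  # delete: only the "before" image is populated
        row = event["before"]
        replica.pop(row[key_field], None)
    else:
        raise ValueError(f"unknown op: {op!r}")


# Sample events, already serialized as JSON the way a connector might emit them.
raw_events = [
    '{"op": "c", "before": null, "after": {"id": 1, "status": "new"}}',
    '{"op": "c", "before": null, "after": {"id": 2, "status": "new"}}',
    '{"op": "u", "before": {"id": 1, "status": "new"}, "after": {"id": 1, "status": "paid"}}',
    '{"op": "d", "before": {"id": 2, "status": "new"}, "after": null}',
]

replica = {}
for raw in raw_events:
    apply_change(replica, json.loads(raw))

print(replica)
```

Because every event carries its own operation type and row images, the consumer needs no access to the source database to keep its copy current.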

What Are the Core Components Involved in Kafka CDC?

Moving from batch pipelines to continuous replication involves six Kafka components, which together store, produce, read, validate, and orchestrate event streams.

1. Kafka Brokers

Brokers hold partitions and replicate them for fault tolerance. Data is copied across brokers, so with an adequate replication factor a single node failure does not lose your change log. This matters for regulatory traceability and for any delivery guarantee that depends on a durable log. High-volume workloads add more brokers to distribute the load.

2. Kafka Topics

A topic is where producers send events and consumers subscribe. Streaming pipelines typically use one topic per table so downstream teams subscribe to exactly the data they need without extra filtering. Clear naming like orders.public.orders reduces confusion when dozens of tables start streaming.

3. Producers

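A Debezium connector functions as the producer. It reads the database's transaction log, transforms each change into an event, and publishes it to Kafka. Debezium delivers events at least once by default, so duplicates can appear after a failover or connector restart. Make consumers idempotent, or enable exactly-once source support in Kafka Connect where your Kafka and connector versions provide it.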

4. Consumers

Consumers pull events at their own pace, tracking progress with offsets stored in Kafka. Multiple consumer groups read the same topic concurrently, letting you feed a warehouse, search index, and fraud-detection microservice without coupling them together.
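To make the independence of consumer groups concrete, here is a toy model of a single partition with per-group offsets. The `TopicLog` class and the group names are illustrative assumptions, not Kafka APIs; real Kafka stores committed offsets on the brokers and spreads partitions across group members.

```python
class TopicLog:
    """Toy single-partition log with per-group committed offsets."""

    def __init__(self):
        self.records = []
        self.committed = {}  # group id -> next offset to read

    def append(self, record):
        self.records.append(record)

    def poll(self, group, max_records=10):
        """Return the next batch for a group and advance only that group's offset."""
        start = self.committed.get(group, 0)
        batch = self.records[start:start + max_records]
        self.committed[group] = start + len(batch)
        return batch

    def seek(self, group, offset):
        """Rewind (or skip ahead) one group without touching the others."""
        self.committed[group] = offset


log = TopicLog()
for i in range(5):
    log.append(f"order-change-{i}")

warehouse_batch = log.poll("warehouse-loader")            # reads all five records
search_batch = log.poll("search-indexer", max_records=2)  # reads two, at its own pace
log.seek("warehouse-loader", 0)                           # replay from the start
replayed = log.poll("warehouse-loader")
```

Rewinding one group leaves the other group's position untouched, which is what lets you add or replay a downstream system without affecting the rest.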

5. Schema Registry

When a column is added or its type changes, the registry validates that the new event schema remains compatible with downstream readers. Storing schemas centrally avoids the 'mystery byte array' problem and eliminates manual version control headaches.
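The kind of check a registry performs can be approximated as follows. This is a deliberately simplified sketch of a backward-compatibility rule (a new reader schema must be able to read data written with the old one); the `is_backward_compatible` function and the dict-based schema shape are assumptions for illustration. Real registries apply the full rules of the serialization format, including type promotions, which this sketch ignores.

```python
def is_backward_compatible(old_fields: dict, new_fields: dict) -> bool:
    """Return True if a reader using new_fields can read data written with old_fields.

    Simplified rules: a field added in the new schema needs a default,
    and a field present in both schemas must keep the same type.
    Fields removed in the new schema are fine, since the reader ignores them.
    """
    for name, spec in new_fields.items():
        if name not in old_fields:
            if "default" not in spec:
                return False  # old records have no value for this field
        elif old_fields[name]["type"] != spec["type"]:
            return False  # type change; promotions are not modeled here
    return True


old_schema = {"id": {"type": "long"}, "status": {"type": "string"}}
add_with_default = {**old_schema, "channel": {"type": "string", "default": "web"}}
add_without_default = {**old_schema, "channel": {"type": "string"}}
type_changed = {"id": {"type": "string"}, "status": {"type": "string"}}

print(is_backward_compatible(old_schema, add_with_default))
print(is_backward_compatible(old_schema, add_without_default))
print(is_backward_compatible(old_schema, type_changed))
```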

6. Connect Framework

Kafka Connect provides a runtime for source and sink connectors, handling configuration, scaling, and RESTful management APIs. You define a connector once, and Connect distributes the work across workers. This orchestration keeps your pipeline manageable as the number of databases or downstream targets grows.

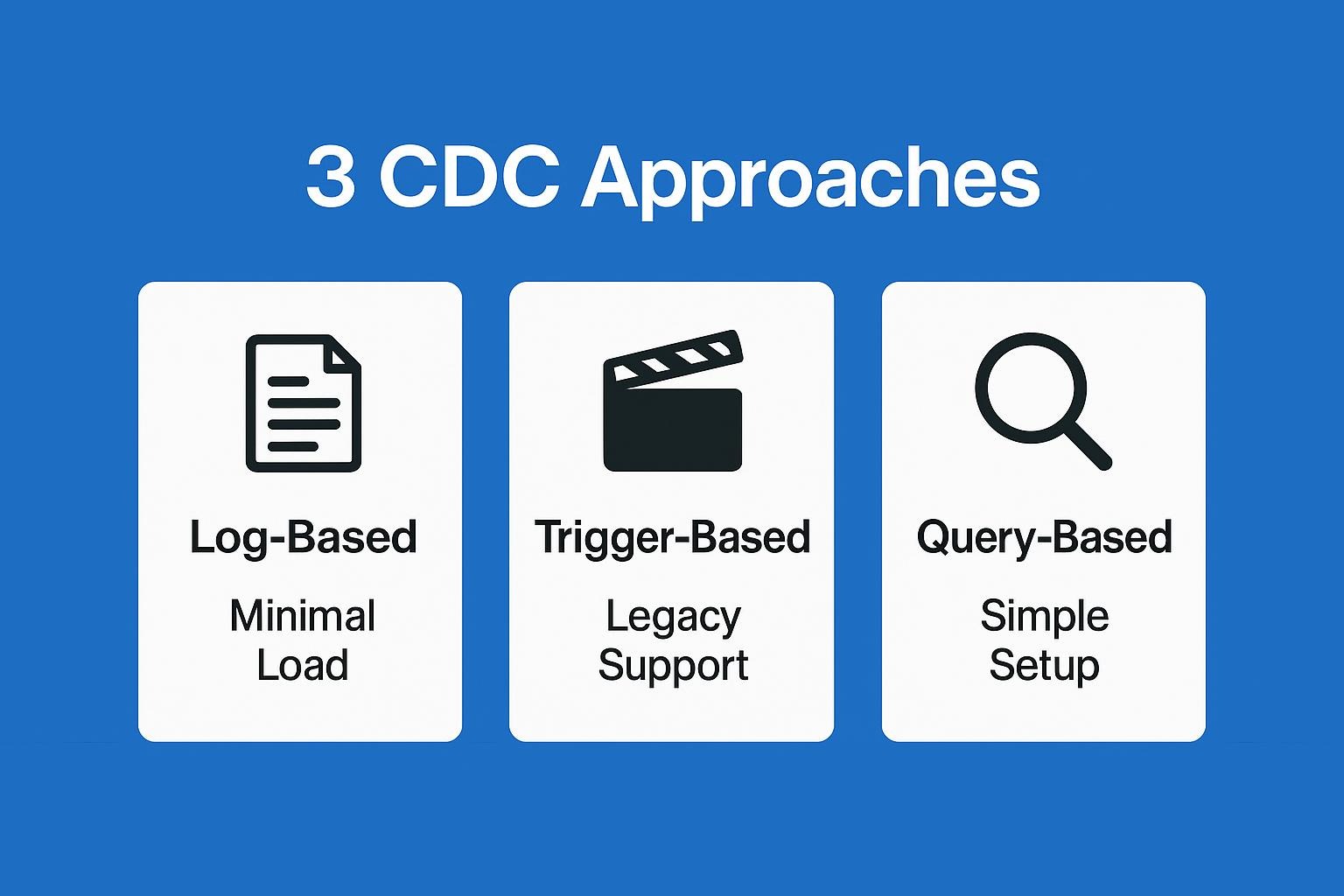

Which CDC Approaches Are Commonly Used With Kafka?

You have three practical ways to capture database changes before pushing them to Kafka. Each comes with trade-offs in performance, complexity, and database impact.

1. Log-Based CDC

A connector reads the database's transaction log, such as the MySQL binlog, the PostgreSQL WAL, or Oracle redo logs (via LogMiner), and streams every committed change to Kafka topics. You get near-real-time replication with almost no extra load on the source database.

Debezium uses this pattern and keeps events for each primary key in commit order. Because it captures the same log the database uses for recovery, inserts, updates, and deletes are not lost during outages. Delivery is at-least-once by default, though, so a connector restart can re-emit events and consumers should tolerate duplicates.
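Consumers of a log-based stream commonly guard against redelivered events by remembering the last log position applied for each key. The sketch below assumes each event carries a monotonically increasing `position` value (a stand-in for a binlog offset or LSN); the field names and the `apply_once` helper are hypothetical.

```python
def apply_once(state: dict, last_seen: dict, event: dict) -> bool:
    """Apply an event unless its log position was already applied for this key."""
    key, position = event["key"], event["position"]
    if position <= last_seen.get(key, -1):
        return False  # duplicate or stale redelivery: skip it
    last_seen[key] = position
    if event["op"] == "d":
        state.pop(key, None)
    else:
        state[key] = event["after"]
    return True


events = [
    {"key": 1, "position": 10, "op": "c", "after": {"qty": 1}},
    {"key": 1, "position": 11, "op": "u", "after": {"qty": 2}},
    # A connector restart replays the last two events.
    {"key": 1, "position": 10, "op": "c", "after": {"qty": 1}},
    {"key": 1, "position": 11, "op": "u", "after": {"qty": 2}},
    {"key": 1, "position": 12, "op": "u", "after": {"qty": 3}},
]

state, last_seen = {}, {}
applied = [apply_once(state, last_seen, event) for event in events]
```

The replayed events are skipped, so the final state matches what a single clean delivery would have produced.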

2. Trigger-Based CDC

If your DBA won't grant log access or the database doesn't expose it, you can add AFTER INSERT/UPDATE/DELETE triggers that write changes to shadow tables. A connector then reads those tables and publishes the mutations to Kafka.

This approach keeps implementation flexible but introduces additional writes and possible lock contention as volume grows.
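A small, self-contained illustration of the trigger pattern, using SQLite from the Python standard library as a stand-in for a production database. The table, trigger, and column names are assumptions, and trigger syntax differs between database engines.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE orders (id INTEGER PRIMARY KEY, status TEXT);

-- Shadow table: one row per captured change, in the order it happened.
CREATE TABLE orders_changes (
    change_id INTEGER PRIMARY KEY AUTOINCREMENT,
    op TEXT,
    order_id INTEGER,
    status TEXT
);

CREATE TRIGGER orders_ai AFTER INSERT ON orders BEGIN
    INSERT INTO orders_changes (op, order_id, status) VALUES ('c', NEW.id, NEW.status);
END;
CREATE TRIGGER orders_au AFTER UPDATE ON orders BEGIN
    INSERT INTO orders_changes (op, order_id, status) VALUES ('u', NEW.id, NEW.status);
END;
CREATE TRIGGER orders_ad AFTER DELETE ON orders BEGIN
    INSERT INTO orders_changes (op, order_id, status) VALUES ('d', OLD.id, OLD.status);
END;
""")

conn.execute("INSERT INTO orders VALUES (1, 'new')")
conn.execute("UPDATE orders SET status = 'paid' WHERE id = 1")
conn.execute("DELETE FROM orders WHERE id = 1")


def read_changes(conn, after_change_id=0):
    """What a connector would do: read shadow rows past the last one it published."""
    return conn.execute(
        "SELECT change_id, op, order_id, status FROM orders_changes "
        "WHERE change_id > ? ORDER BY change_id",
        (after_change_id,),
    ).fetchall()


changes = read_changes(conn)
```

Every write to `orders` now costs a second write to `orders_changes`, which is the extra load this approach trades for not needing log access.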

3. Query-Based CDC

The most straightforward option is periodic polling. You run a SELECT with a "last-modified" predicate, compare results to the previous snapshot, and emit any differences. Because it polls on a schedule, latency is tied to the interval.

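Polling sees only the latest state of each row, so intermediate updates between polls are lost and hard deletes go undetected unless you track them separately. For smaller tables or non-critical data, query-based capture provides an easy entry point before moving to log-based approaches when requirements tighten.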
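The polling loop and its blind spots can be demonstrated with SQLite from the standard library. The `products` table, the integer `updated_at` column, and the `poll_changes` helper are assumptions made for this sketch.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE products (id INTEGER PRIMARY KEY, price REAL, updated_at INTEGER)"
)


def poll_changes(conn, watermark):
    """Return rows modified after the watermark, plus the new watermark."""
    rows = conn.execute(
        "SELECT id, price, updated_at FROM products "
        "WHERE updated_at > ? ORDER BY updated_at",
        (watermark,),
    ).fetchall()
    new_watermark = rows[-1][2] if rows else watermark
    return rows, new_watermark


conn.execute("INSERT INTO products VALUES (1, 9.99, 100)")
first_poll, watermark = poll_changes(conn, 0)

# Several changes land before the next poll runs.
conn.execute("UPDATE products SET price = 12.50, updated_at = 110 WHERE id = 1")
conn.execute("UPDATE products SET price = 11.00, updated_at = 120 WHERE id = 1")
conn.execute("INSERT INTO products VALUES (2, 5.00, 125)")
conn.execute("DELETE FROM products WHERE id = 2")

second_poll, watermark = poll_changes(conn, watermark)
```

The second poll returns only the final price for product 1. The intermediate price of 12.50 and the short-lived product 2 never appear, which is the gap a log-based connector would close.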

What Tools Are Commonly Used for Kafka CDC?

Once you decide to stream database changes through Kafka, the next question is which tool will handle each part of the pipeline. Four options appear in almost every production stack: Debezium, Kafka Connect, custom-built connectors, and Airbyte. Each solves a different piece of the data capture puzzle.

1. Airbyte

If you want change data capture without deep Kafka expertise, Airbyte bundles the heavy lifting behind an intuitive UI and 600+ connectors. You select your source, point it at Kafka or another destination, and Airbyte manages log positions, schema evolution, and retries for you. That abstraction lets you ship real-time pipelines faster while still keeping the option to fine-tune lower-level Kafka settings when necessary.

2. Debezium

Debezium is the workhorse for log-based extraction. It tails transaction logs and emits structured change events for MySQL, PostgreSQL, MongoDB, SQL Server, Oracle, and more, giving you broad database coverage in a single open-source project. Because Debezium runs as a Kafka Connect source connector, you configure it through the same APIs you already use for the rest of your Kafka infrastructure.

3. Kafka Connect

Kafka Connect is the runtime that keeps connectors running and scalable. You can launch it in standalone mode for quick tests or in distributed mode when you need fault tolerance and horizontal scaling. Connect handles offset storage, task rebalancing, and REST-based configuration so you spend less time wiring up plumbing and more time shipping data.

4. Custom Connectors

Sometimes you have to capture changes from a proprietary system no off-the-shelf connector supports. Building a custom connector lets you meet that need, but you take on engineering and maintenance overhead. You'll handle testing for schema drift, managing retries, and keeping up with Kafka version changes without the benefit of a community-maintained codebase.

How Do You Set Up Kafka CDC Step-by-Step?

Setting up a reliable data capture pipeline means treating it like production infrastructure. Configure your source database properly, give Kafka adequate resources, and validate every change before consumers see it. These six steps follow that approach:

1. Prepare Your Source Database

Enable row-based logging so the connector can capture every insert, update, and delete without locking tables. In MySQL set binlog_format=ROW and binlog_row_image=FULL. PostgreSQL users turn on logical replication with wal_level=logical. Create a connector user with log read permissions but no data modification rights.
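Before deploying a connector, it can help to verify these settings programmatically. The sketch below compares a dictionary of server settings against the values named above. The `missing_settings` helper is hypothetical, and in practice the `actual` dictionary would be filled from the database itself (for example, from `SHOW VARIABLES` in MySQL or `SHOW wal_level` in PostgreSQL).

```python
REQUIRED_MYSQL = {"binlog_format": "ROW", "binlog_row_image": "FULL"}
REQUIRED_POSTGRES = {"wal_level": "logical"}


def missing_settings(actual: dict, required: dict) -> dict:
    """Return {setting: (expected, found)} for every setting that does not match."""
    problems = {}
    for name, expected in required.items():
        found = actual.get(name)
        # Compare case-insensitively; servers may report values in either case.
        if str(found if found is not None else "").upper() != expected.upper():
            problems[name] = (expected, found)
    return problems


mysql_actual = {"binlog_format": "MIXED", "binlog_row_image": "FULL"}
postgres_actual = {"wal_level": "logical"}

print(missing_settings(mysql_actual, REQUIRED_MYSQL))
print(missing_settings(postgres_actual, REQUIRED_POSTGRES))
```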

2. Deploy Kafka Infrastructure

Your cluster needs brokers, ZooKeeper or KRaft for cluster metadata, Connect workers, and a Schema Registry. Three brokers are the practical minimum for fault tolerance. Running on Kubernetes with an operator such as Strimzi makes scaling and rolling upgrades easier to manage.

3. Configure Your CDC Connector

Debezium's MySQL connector handles most use cases. A basic configuration looks like this:

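    {
      "name": "debezium-mysql",
      "config": {
        "connector.class": "io.debezium.connector.mysql.MySqlConnector",
        "tasks.max": "1",
        "database.hostname": "mysql",
        "database.port": "3306",
        "database.user": "cdc",
        "database.password": "cdcpass",
        "database.server.id": "184054",
        "topic.prefix": "mysql1",
        "database.include.list": "sales",
        "table.include.list": "sales.orders",
        "schema.history.internal.kafka.bootstrap.servers": "kafka:9092",
        "schema.history.internal.kafka.topic": "schema-history.sales",
        "snapshot.mode": "initial"
      }
    }

Key options control the initial snapshot (snapshot.mode), table filters (table.include.list), and topic naming (topic.prefix). The topic.prefix and schema.history.internal.* properties use Debezium 2.x names, which the MySQL connector requires; the values shown for them (mysql1, kafka:9092, schema-history.sales) are placeholders to adapt to your environment.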
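A connector definition like this is typically submitted to Kafka Connect's REST API with a POST to `/connectors`. The sketch below only builds the request, using the standard library; the worker address `http://localhost:8083` and the abbreviated config are assumptions, and the `build_register_request` helper is hypothetical.

```python
import json
import urllib.request


def build_register_request(connect_url: str, name: str, config: dict) -> urllib.request.Request:
    """Build (but do not send) the POST request that registers a connector."""
    body = json.dumps({"name": name, "config": config}).encode("utf-8")
    return urllib.request.Request(
        url=f"{connect_url.rstrip('/')}/connectors",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Abbreviated config for illustration; a real submission needs the full property set.
config = {
    "connector.class": "io.debezium.connector.mysql.MySqlConnector",
    "database.hostname": "mysql",
    "database.port": "3306",
    "table.include.list": "sales.orders",
}

request = build_register_request("http://localhost:8083", "debezium-mysql", config)

# To actually submit it against a running Connect worker:
# with urllib.request.urlopen(request) as response:
#     print(response.status)
```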

4. Map Tables to Kafka Topics

Debezium's MySQL connector names topics by the pattern prefix.database.table, where the prefix is the connector's logical server name. For the example above, changes to sales.orders land on a topic ending in .sales.orders. High-volume tables need extra partitions to prevent hot spots. Set the topic's cleanup.policy to compact for changelog semantics, or to delete to retain event history within a configurable retention window.
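The difference between the two cleanup policies can be modeled in a few lines. This is a simplified sketch: records are hypothetical `(timestamp_ms, key, value)` tuples, a `None` value stands for a tombstone, and real Kafka applies both policies per log segment in the background and keeps tombstones around for a while before dropping them.

```python
def compact(records):
    """Compaction model: keep only the latest value per key, dropping tombstoned keys."""
    latest = {}
    for _timestamp, key, value in records:
        latest[key] = value
    return {key: value for key, value in latest.items() if value is not None}


def retain(records, now_ms, retention_ms):
    """Delete-policy model: keep every record that is still inside the retention window."""
    return [record for record in records if now_ms - record[0] <= retention_ms]


records = [
    (1_000, "order-1", "new"),
    (2_000, "order-2", "new"),
    (3_000, "order-1", "paid"),
    (4_000, "order-2", None),  # tombstone: order-2 was deleted
]

compacted = compact(records)
recent = retain(records, now_ms=5_000, retention_ms=2_000)
```

Compaction gives consumers the current state per key, while time-based retention preserves the full sequence of events but only for a bounded period.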

5. Test With Sample Events

Insert test rows and monitor Connect logs or use kafka-console-consumer to verify events arrive correctly. Replay the topic into a staging sink before connecting production systems.

6. Handle Schema Evolution

Register each topic's schema in Schema Registry and enforce backward compatibility. When a column's type changes incompatibly, the registry rejects the new schema, so the bad write fails loudly instead of silently corrupting data. Managing schema changes this way prevents downstream application failures.

What Are the Most Common Kafka CDC Use Cases?

Data capture solves real operational problems that traditional processing creates. Here are five patterns where teams stream database changes through Kafka to unlock time-sensitive workflows.

1. Real-Time Analytics Pipelines

Stream every insert, update, and delete into Kafka to feed cloud warehouses the moment data lands in production. Dashboards stay current because events arrive continuously instead of overnight processing, giving you fresh metrics when decisions can't wait.

2. Microservices Synchronization

When your architecture spans multiple services, each needs reliable copies of shared records. Publishing database changes as Kafka topics lets services subscribe, update their stores, and stay loosely coupled. This event-driven approach beats managing point-to-point APIs when breaking apart monoliths.

3. Event-Driven Applications

Inventory depletion, suspicious transactions, or new signups can trigger downstream actions instantly: send alerts, allocate stock, or start workflows. Log-based capture emits changes in commit order, so you can react safely without polling databases. That immediacy drives many teams to move critical logic onto Kafka's change stream.

4. ML Feature Serving

Models trained on yesterday's data decay quickly. Streaming change events into feature stores keeps features such as user activity counts and product prices minutes old instead of days. When you retrain or serve predictions, you're working with live business state, not stale snapshots.

5. Backup & Disaster Recovery

Continuous replication from source tables to off-site clusters provides a durable audit trail of every change. If the primary database fails, you can replay Kafka topics to rebuild state up to the last captured event, provided topic retention or compaction still covers the history you need. Periodic backups alone restore only to the last backup point.

What Operational Challenges Should You Expect With Kafka CDC?

Data capture pipelines create operational issues that rarely appear in traditional processing. You'll notice them first as subtle consumer lag, then as broken dashboards and stalled microservices. Understanding these issues and how to neutralize them keeps real-time data flowing.

1. Schema Drift

A single ALTER TABLE can halt deserialization for every downstream consumer. Register schemas and enforce forward or backward compatibility to catch breaking changes before they reach production. Tools like Confluent Schema Registry pair well with Debezium to automate these checks.

2. High-Volume Partitions

When one customer ID or product SKU dominates traffic, a "hot" partition emerges and utilization on the broker hosting it spikes. Choose keys that distribute evenly, or spread heavy tables across additional partitions, so no single partition becomes the bottleneck.

3. Out-of-Order Updates

Multi-shard databases emit commits concurrently, so related events can land in different partitions. Preserve order by hashing on a stable key, often the primary key, so all changes for that row hit the same partition.

For cross-table transactions, embed transaction IDs and enforce ordering in your consumer logic. This ensures related changes process in the correct sequence.
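Key-based partitioning can be sketched as a stable hash of the row's primary key modulo the partition count. Note the assumptions: `zlib.crc32` is used here only as a deterministic stand-in (Kafka's default partitioner uses a different hash), and the keys, events, and partition count are made up for illustration.

```python
import zlib
from collections import defaultdict

NUM_PARTITIONS = 6


def partition_for(key: str, num_partitions: int = NUM_PARTITIONS) -> int:
    """Map a key to a partition with a stable hash, so the same key always lands together."""
    return zlib.crc32(key.encode("utf-8")) % num_partitions


events = [
    ("order-1", "created"),
    ("order-2", "created"),
    ("order-1", "paid"),
    ("order-1", "shipped"),
    ("order-2", "cancelled"),
]

# Append each event to its partition, preserving arrival order within the partition.
partitions = defaultdict(list)
for key, change in events:
    partitions[partition_for(key)].append((key, change))


def changes_for(key: str) -> list:
    """Read one key's changes back from its single partition, in order."""
    return [change for k, change in partitions[partition_for(key)] if k == key]
```

Ordering is guaranteed only within a partition, so this works because every change to a given row is routed to the same one.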

4. Lag Monitoring

Consumer lag creeps up when downstream services slow or broker I/O tightens. Track logEndOffset – committedOffset continuously to reveal trouble early.

The moment lag trends upward, scale consumer replicas or investigate slow processing routines. Proactive monitoring prevents small delays from becoming system-wide outages.
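The lag calculation and a simple trend check might look like this. The offsets are sample numbers, and the `partition_lag` and `lag_is_growing` helpers are hypothetical; in a real deployment the offsets would come from your monitoring tooling or the Kafka admin APIs.

```python
def partition_lag(log_end_offsets: dict, committed_offsets: dict) -> dict:
    """Lag per partition: log end offset minus the group's committed offset."""
    return {
        partition: end - committed_offsets.get(partition, 0)
        for partition, end in log_end_offsets.items()
    }


def lag_is_growing(samples: list, min_increase: int = 1) -> bool:
    """True if every consecutive lag sample grew by at least min_increase."""
    if len(samples) < 2:
        return False
    return all(later - earlier >= min_increase
               for earlier, later in zip(samples, samples[1:]))


lag = partition_lag({0: 1500, 1: 900}, {0: 1450, 1: 900})
print(lag)
print(lag_is_growing([50, 120, 300]))
print(lag_is_growing([50, 40, 60]))
```

Alerting on a sustained upward trend rather than a single high reading avoids paging on short bursts that the consumers absorb on their own.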

5. Resource Constraints

Data capture traffic is write-heavy and relentless. Disk throughput, not CPU, usually fails first. Network follows close behind.

Spread high-volume topics across brokers and upgrade to NVMe storage to reduce back-pressure. When partitions still under-replicate, tweak JVM settings and broker configuration before adding brokers.

Detect these issues early, and streaming pipelines stay the real-time backbone you planned, not the fire you're forever putting out.

How Do You Keep Kafka CDC Pipelines Reliable at Scale?

Reliable pipelines start with clear priorities: reduce points of failure, keep data consistent, and surface problems before they hurt downstream systems. These five practices come directly from field experience running high-volume Kafka clusters.

1. Use Log-Based CDC Whenever Possible

Log-based capture reads directly from database transaction logs, avoiding trigger overhead and polling gaps. It preserves commit order, which is critical for transactional consistency. When legacy systems block log access, fall back to triggers only for specific tables and isolate those topics.

2. Standardize Event Schemas

A schema registry acts as your contract between producers and consumers. Register every version, enforce backward compatibility, and reject events that break the contract before they reach production topics.

3. Structure Topics for Scale

High-throughput tables deserve their own topics and ample partitions so a single partition never becomes the bottleneck. Size partition counts for expected peak throughput, and review how partitions are distributed across brokers as volume grows.

4. Implement Dead Letter Queues

A dead letter queue lets you quarantine malformed messages without stopping the entire connector. Route deserialization errors or schema mismatches here, then replay after patching the issue.
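The routing logic behind a dead letter queue can be sketched as a try/except around each message. Here the queue is just a Python list and the `process_with_dlq` and `handler` functions are hypothetical. In Kafka Connect, sink connectors can get similar behavior from the `errors.tolerance` and `errors.deadletterqueue.topic.name` settings.

```python
import json


def process_with_dlq(raw_messages, handler):
    """Process each message; quarantine failures instead of stopping the run."""
    processed, dead_letters = [], []
    for offset, raw in enumerate(raw_messages):
        try:
            processed.append(handler(json.loads(raw)))
        except (json.JSONDecodeError, KeyError, TypeError) as exc:
            # Keep the original payload and the failure reason for later replay.
            dead_letters.append(
                {"offset": offset, "raw": raw, "error": type(exc).__name__}
            )
    return processed, dead_letters


def handler(event):
    """Example handler: extract the row id and operation from a change event."""
    return (event["after"]["id"], event["op"])


raw_messages = [
    '{"op": "c", "after": {"id": 1}}',
    '{"op": "u", "after":',             # truncated payload
    '{"op": "c"}',                      # missing the "after" image
    '{"op": "c", "after": {"id": 2}}',
]

processed, dead_letters = process_with_dlq(raw_messages, handler)
```

Good messages keep flowing, and the quarantined entries retain enough context to be fixed and replayed once the underlying issue is patched.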

5. Monitor Key Metrics

Track these metrics and alert when they drift:

- Consumer lag per partition

- Broker disk usage and I/O wait

- Under-replicated partitions

- Network throughput spikes

- Connector task failures

These expose early warning signs of backpressure or hardware exhaustion, letting you scale out before users notice stale dashboards.

How Can You Simplify Kafka CDC With Airbyte?

While Kafka-based data capture offers powerful capabilities for real-time data integration, managing the infrastructure complexity can slow your team down. Airbyte simplifies CDC with 600+ pre-built connectors covering major databases, APIs, and cloud platforms. You get CDC capabilities without wrestling with Debezium configuration files or Kafka Connect cluster management.

Airbyte handles log position tracking, schema evolution, and automatic retries through an intuitive interface. This lets you deploy production-ready CDC pipelines in hours instead of weeks. The platform generates open-standard code, so you avoid vendor lock-in while maintaining the flexibility to optimize Kafka settings when needed.

Try Airbyte and set up your first CDC pipeline in minutes. Connect your database, configure your Kafka destination, and start streaming change events without managing connector infrastructure.

Frequently Asked Questions

What is the difference between Kafka CDC and traditional ETL?

Traditional ETL runs on schedules and moves data in batches, creating hours or days of lag. Kafka CDC captures every database change as it happens and streams those events continuously, giving you near-real-time data access without heavy full-table scans. Log-based CDC also preserves the commit order of changes to each row, which matters when downstream systems need consistency.

How does Kafka CDC handle schema changes in source databases?

Kafka CDC uses a schema registry to track and validate schema versions across all topics. When a source table changes, the connector publishes the new schema to the registry, which checks compatibility with existing consumers. The registry blocks incompatible changes before they break downstream applications. Tools like Debezium automatically include schema metadata with each event to help consumers adapt.

What are the main performance considerations for Kafka CDC pipelines?

Performance depends on partition count, broker resources, and consumer throughput. High-volume tables need multiple partitions to distribute load and prevent hot spots. Disk I/O typically becomes the bottleneck before CPU, so NVMe storage helps maintain throughput. Monitor lag continuously and scale consumer groups when processing can't keep pace. Proper partition key selection prevents uneven key distribution and imbalanced workloads.

Can I use Kafka CDC with databases that don't expose transaction logs?

Yes, but you'll need trigger-based or query-based CDC instead of log-based capture. Trigger-based CDC adds database triggers that write changes to shadow tables, which a connector reads and publishes to Kafka. Query-based CDC polls tables periodically using last-modified timestamps. Both approaches work when log access isn't available, though they add source database load and higher latency compared to log-based capture.
