What is Kafka Streams: Example & Architecture

Apache Kafka is now a core part of modern data systems as organizations work to manage rapidly growing data and deliver real-time insights. Traditional batch processing cannot keep pace, making stream processing essential. Kafka Streams, the platform's built-in processing library, allows teams to analyze events as they happen, identify patterns in real time, and trigger automated actions. This is vital for use cases like financial transactions, IoT data, and user behavior analytics where delays can lead to missed opportunities or hidden risks.

Kafka adoption is widespread: more than 150,000 organizations use it worldwide, including over 80% of Fortune 100 companies. The event stream processing market is projected to grow from $1.45 billion in 2024 to $1.72 billion in 2025, an increase of roughly 18.7% year over year.

Modern Kafka Streams implementations have evolved significantly, incorporating AI-powered optimization, cloud-native deployment patterns, and advanced state-management capabilities. This guide explains how to leverage Kafka Streams' full potential for real-time data processing.

TL;DR: Kafka Streams at a Glance

- Kafka Streams is a lightweight client library for building real-time stream-processing applications directly on Apache Kafka.

- Key features include exactly-once semantics, horizontal scalability without code changes, and stateful operations like aggregations and windowing.

- Kafka 4.0 runs exclusively in KRaft mode (ZooKeeper support is removed) and ships the enhanced consumer group protocol (KIP-848); versioned state stores add support for temporal lookups.

- AI/ML integration enables real-time RAG pipelines, feature stores, and dynamic model retraining with sub-second latency.

- Airbyte provides 600+ connectors to load diverse data sources into Kafka without custom coding, accelerating stream processing workflows.

What Is Kafka Streams and How Does It Work?

Kafka Streams is a client library for building stream-processing applications and microservices on top of Apache Kafka. It:

- Consumes data from Kafka topics

- Performs analytical or transformation operations

- Publishes processed results to another Kafka topic or external system

Unlike batch systems, Kafka Streams processes data continuously as it arrives, enabling real-time analytics with exactly-once guarantees. With the release of Kafka 4.0, significant architectural improvements have been introduced, including the complete elimination of Apache ZooKeeper dependency, with KRaft (Kafka Raft) mode becoming the default and only supported metadata management system. Recent innovations have also introduced versioned state stores for temporal look-ups and Interactive Query v2 (IQv2) for direct, low-latency state queries.

Kafka Streams applications start on a single node and scale horizontally simply by adding more instances—no code changes required. Performance testing has demonstrated that Kafka can handle up to 2 million writes per second on a three-machine cluster configuration, showcasing its ability to manage high-throughput scenarios effectively.

Key Features of Kafka Streams

- Lightweight client library—no separate cluster required

- No external dependencies other than Kafka

- At-least-once or exactly-once semantics

- Event-time and processing-time handling, with grace periods for late and out-of-order records

- Stateful operations (aggregations, joins, windowing)

- DSL and low-level Processor API

- Built-in fault tolerance and automatic state recovery

- Versioned state stores for temporal queries

- Interactive Query v2 (IQv2) for querying state stores directly from the application

- Enhanced foreign key extraction capabilities through KIP-1104 improvements

- ProcessorWrapper interface via KIP-1112 for seamless custom logic injection

- AI-powered optimization that dynamically adjusts routing and resources
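The event-time and windowing items in the list above can be illustrated with a small plain-Java model. This is a sketch of the idea only, not the Kafka Streams API: the class name `TumblingWindowCounter` and its methods are hypothetical, timestamps are assumed to be non-negative epoch milliseconds, and the late-record rule (drop a record once its window end plus the grace period has been passed by the highest timestamp seen) is a simplified assumption about how a grace period behaves.

```java
import java.util.HashMap;
import java.util.Map;

/** Plain-Java model of tumbling-window counting with a grace period (not the Kafka Streams API). */
class TumblingWindowCounter {
    private final long sizeMs;
    private final long graceMs;
    // Highest event timestamp observed so far; stands in for "stream time".
    private long streamTime = Long.MIN_VALUE;
    private final Map<Long, Long> countsByWindowStart = new HashMap<>();

    TumblingWindowCounter(long sizeMs, long graceMs) {
        this.sizeMs = sizeMs;
        this.graceMs = graceMs;
    }

    /** Window start is the event timestamp rounded down to a multiple of the window size. */
    static long windowStart(long timestampMs, long sizeMs) {
        return timestampMs - (timestampMs % sizeMs);
    }

    /** Returns true if the record was counted, false if it arrived after its window closed. */
    boolean add(long eventTimeMs) {
        streamTime = Math.max(streamTime, eventTimeMs);
        long start = windowStart(eventTimeMs, sizeMs);
        long windowEnd = start + sizeMs;
        if (windowEnd + graceMs <= streamTime) {
            return false; // too late: window end plus grace has already passed
        }
        countsByWindowStart.merge(start, 1L, Long::sum);
        return true;
    }

    /** Number of records counted in the window that starts at the given timestamp. */
    long count(long windowStartMs) {
        return countsByWindowStart.getOrDefault(windowStartMs, 0L);
    }
}
```

With a 60-second window and a 10-second grace period, a record stamped 59 s is still counted after a record stamped 65 s has been seen, but a record stamped 30 s is dropped once a record stamped 75 s has arrived.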

How Kafka Streams Processing Topology Works

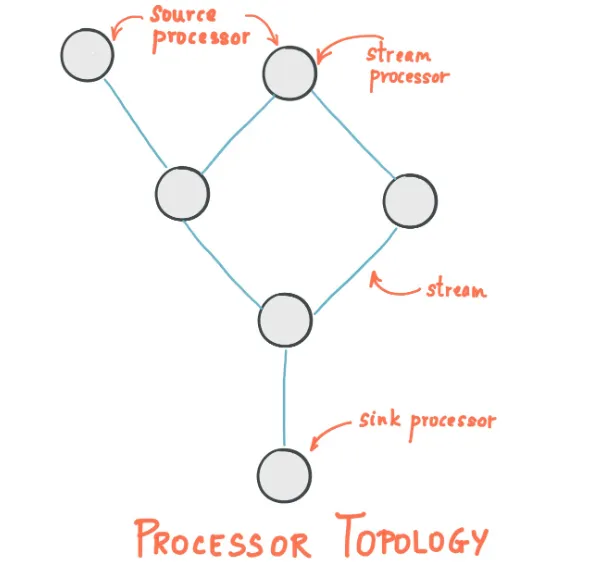

A topology is a directed acyclic graph of processors and state stores that defines how data flows through your application.

Source Processor

Entry point. Consumes records from one or more topics, deserializes them, and forwards downstream.

Sink Processor

Exit point. Receives transformed records and writes them to topics or external systems.

Upstream processors feed data to downstream processors; downstream processors consume data from upstream ones.

Architecture of Kafka Streams

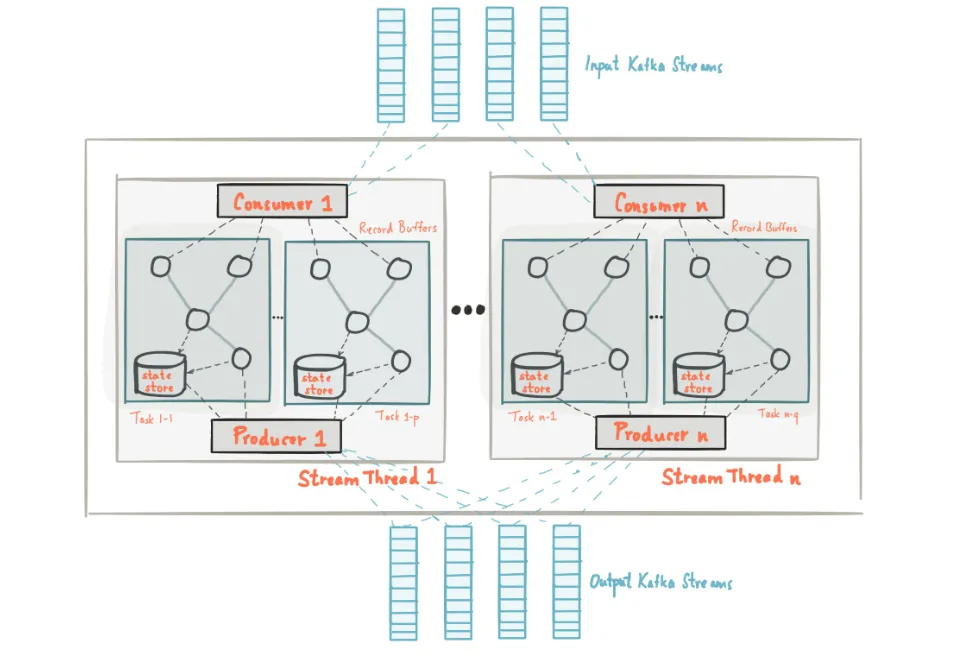

Stream Partitions & Tasks

- Partition – ordered sequence of records within a topic.

- Task – unit of parallelism; owns its own topology instance and state stores.

Threading Model

Configure the number of stream threads per application instance; threads run tasks independently—no shared state, no locking.
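To make the relationship between partitions, tasks, and threads concrete, here is a rough plain-Java sketch. It assumes a single sub-topology with one task per input partition and spreads tasks round-robin over every thread of every instance. The class `TaskAssignmentSketch` and the `instance-i/thread-t` labels are hypothetical, and the real assignor is more sophisticated (for example, it tries to keep tasks close to their existing state). The thread count per instance corresponds to the `num.stream.threads` setting.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

/** Toy model of how tasks spread across instances and threads (not the real assignor). */
class TaskAssignmentSketch {
    static Map<String, List<String>> assign(int inputPartitions, int instances, int threadsPerInstance) {
        // One slot per stream thread, in a stable order.
        Map<String, List<String>> tasksByThread = new LinkedHashMap<>();
        for (int i = 0; i < instances; i++) {
            for (int t = 0; t < threadsPerInstance; t++) {
                tasksByThread.put("instance-" + i + "/thread-" + t, new ArrayList<>());
            }
        }
        List<String> threads = new ArrayList<>(tasksByThread.keySet());
        // One task per input partition; ids follow the "<subtopology>_<partition>" shape.
        for (int p = 0; p < inputPartitions; p++) {
            String taskId = "0_" + p;
            tasksByThread.get(threads.get(p % threads.size())).add(taskId);
        }
        return tasksByThread;
    }
}
```

Calling `assign(2, 2, 2)` leaves both threads of the second instance without work, which illustrates why the number of input partitions caps the useful parallelism.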

Local State Stores

Local key-value stores (now optionally versioned) enable low-latency stateful operations. Changelog topics replicate state for recovery.

Fault Tolerance

On failure, tasks restart on another instance; state stores are rebuilt from changelog topics, resuming exactly where they stopped. The new KRaft architecture in Kafka 4.0 eliminates traditional bottlenecks associated with ZooKeeper coordination, enabling Kafka clusters to support larger numbers of partitions and topics that Kafka Streams applications commonly require for parallel processing.
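The recovery step can be pictured as replaying a changelog into an empty store. The following plain-Java sketch models that replay under simplifying assumptions: the `Change` type and `restore` method are hypothetical, keys are strings, values are longs, and a null value is treated as a tombstone that deletes the key. It does not use the Kafka Streams API.

```java
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/** Toy model of rebuilding a key-value store from its changelog (not the Kafka Streams API). */
class ChangelogRestoreSketch {
    /** One changelog entry; a null value is a tombstone that deletes the key. */
    static final class Change {
        final String key;
        final Long value;

        Change(String key, Long value) {
            this.key = key;
            this.value = value;
        }
    }

    /** Replays the changelog in order; the last write per key wins. */
    static Map<String, Long> restore(List<Change> changelog) {
        Map<String, Long> store = new HashMap<>();
        for (Change c : changelog) {
            if (c.value == null) {
                store.remove(c.key);
            } else {
                store.put(c.key, c.value);
            }
        }
        return store;
    }
}
```

Because only the latest value per key matters for the rebuilt store, a compacted changelog yields the same result as the full history.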

Exactly-Once Processing Semantics (EOS)

Exactly-once guarantees ensure no duplicates and no data loss—even during failures.

How It Works

- Idempotent producers prevent duplicate writes.

- Transactions group reads, state updates, and writes atomically.

- Read-committed consumers expose only committed data downstream.
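The first bullet, duplicate prevention, can be sketched as a log that remembers the last sequence number it accepted from each producer. This is a simplified plain-Java model, not broker code: the class `IdempotentLogSketch` is hypothetical, and real brokers track sequences per producer and partition along with producer epochs.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/** Toy model of sequence-number deduplication for idempotent writes. */
class IdempotentLogSketch {
    private final List<String> log = new ArrayList<>();
    private final Map<Long, Integer> lastSequenceByProducer = new HashMap<>();

    /** Appends unless this (producerId, sequence) was already written; returns true if appended. */
    boolean append(long producerId, int sequence, String value) {
        int last = lastSequenceByProducer.getOrDefault(producerId, -1);
        if (sequence <= last) {
            return false; // retry of an already-written record: ignore it
        }
        if (sequence != last + 1) {
            throw new IllegalStateException("gap in sequence numbers");
        }
        log.add(value);
        lastSequenceByProducer.put(producerId, sequence);
        return true;
    }

    /** Snapshot of the records written so far. */
    List<String> records() {
        return new ArrayList<>(log);
    }
}
```

A producer that retries sequence 1 after a network timeout gets `false` back, and the log still holds a single copy of that record.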

Configuration (Java)
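A minimal sketch of such a configuration follows. It uses plain `java.util.Properties` with string keys so that it compiles without the Kafka dependency; in a real application the same keys are available as constants such as `StreamsConfig.PROCESSING_GUARANTEE_CONFIG` and `StreamsConfig.EXACTLY_ONCE_V2`. The class name, application id, and broker address are placeholder assumptions.

```java
import java.util.Properties;

/** Builds the properties an exactly-once Kafka Streams application would be started with. */
class EosConfigSketch {
    static Properties eosProperties() {
        Properties props = new Properties();
        props.put("application.id", "orders-aggregator");  // hypothetical application name
        props.put("bootstrap.servers", "localhost:9092");  // hypothetical broker address
        // Switch from the default at-least-once guarantee to exactly-once.
        props.put("processing.guarantee", "exactly_once_v2");
        return props;
    }
}
```

These properties would then be passed to the `KafkaStreams` constructor together with the topology.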

EOS adds roughly 5–10% overhead versus at-least-once but is essential for mission-critical workloads. Organizations using time-windowed aggregations in their streaming applications can increase their operational efficiency by up to 30% through these processing guarantees.

AI & Machine Learning in Kafka Streams

Real-Time RAG Pipelines

Kafka Streams powers Retrieval-Augmented Generation flows:

- Convert user queries to embeddings.

- Query vector DBs.

- Augment prompts for large language models (LLMs).

- Return responses with sub-second latency, keeping context in stream state.

Dynamic Model Retraining

Change Data Capture (CDC) streams trigger incremental retraining, while Kafka Streams state stores act as real-time feature stores—eliminating training/serving skew.

Cloud-Native & Serverless Deployments

Serverless Kafka

- Amazon MSK Serverless – auto-scales, pay-per-throughput.

- StreamNative Cloud – separates compute/storage, faster rebalances.

Competition among managed Kafka providers has lowered costs, with some specialized cluster types offering up to 90% cost reduction for specific use cases such as high-volume log analytics.

Kubernetes Operators

Operators (e.g., Cloudera Streams Messaging) manage rolling upgrades, PVCs, and security for stateful streaming workloads.

Zero-ETL Architectures

Confluent Tableflow materializes Kafka topics into Iceberg tables automatically. Data-integration tools like Airbyte load diverse sources directly into Kafka, reducing batch ETL latency.

Enhanced Consumer Group Protocol and Performance Improvements

Kafka 4.0 introduces KIP-848, delivering substantial improvements to consumer group management, directly impacting Kafka Streams application performance and reliability. This next-generation consumer group protocol addresses long-standing challenges in stream processing environments, particularly around rebalancing operations that could previously disrupt stream processing continuity.

The enhanced consumer group protocol significantly reduces downtime during rebalances and lowers latency for consumer operations, creating more stable and responsive stream processing environments. For Kafka Streams applications, these improvements translate into enhanced operational stability and reduced processing interruptions.

Monitoring & Observability

Key Metrics

KIP-1091 adds improved operator metrics for Kafka Streams. Key metrics to track include:

- Stream thread health with new client.state and thread.state metrics

- Consumer lag

- State-store size & query latency

- End-to-end processing latency

- Error/exception rates

- Recording.level indicators for granular visibility
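Of the metrics above, consumer lag is simple arithmetic: for each partition, the log end offset minus the committed offset. The sketch below shows the calculation in plain Java. The class and method names are hypothetical, and a partition with no committed offset is assumed to have consumed nothing yet.

```java
import java.util.Map;

/** Computes total consumer lag from per-partition end offsets and committed offsets. */
class ConsumerLagSketch {
    static long totalLag(Map<Integer, Long> endOffsets, Map<Integer, Long> committedOffsets) {
        long total = 0;
        for (Map.Entry<Integer, Long> e : endOffsets.entrySet()) {
            // Assumption: no committed offset means the partition is unread from offset 0.
            long committed = committedOffsets.getOrDefault(e.getKey(), 0L);
            total += Math.max(0L, e.getValue() - committed);
        }
        return total;
    }
}
```

A steadily growing total suggests the application is processing more slowly than producers are writing.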

Tooling

- Prometheus + Grafana dashboards

- OpenTelemetry tracing

- Confluent Cloud Kafka Streams UI

- Chaos engineering with Conduktor Gateway

Alert on business-impacting thresholds and maintain runbooks for rapid incident response.

Security Considerations for Kafka Streams

Recent security developments require attention from Kafka Streams users. Critical vulnerabilities were disclosed in 2025, including CVE-2025-27819 affecting the SASL JAAS JndiLoginModule configuration, CVE-2025-27818 affecting the SASL JAAS LdapLoginModule configuration, and CVE-2025-27817, which allows arbitrary file reads.

Organizations must prioritize upgrading to Kafka versions 3.9.1 or 4.0.0 to address these security issues and maintain compliance in regulated environments. The new security configurations require updates to deployment scripts and security policies across affected organizations.

How Airbyte Enhances Kafka Streams

- 600+ pre-built connectors load SaaS/DB data into Kafka without custom code.

- No-code Connector Builder accelerates creation of niche connectors.

- Automatic chunking & embeddings bring unstructured data (PDF, audio, images) into streaming AI workflows.

- Open-source model avoids per-row pricing; incremental sync minimizes resource usage.

PyAirbyte lets data scientists read Kafka Streams state stores directly in Python notebooks.

FAQs

1. What are the benefits of Kafka Streams?

Kafka Streams offers horizontal scalability without code changes, built-in fault tolerance, and exactly-once processing semantics to prevent data loss. It features versioned state stores, AI-powered optimization, and the enhanced consumer group protocol in Kafka 4.0 for reduced downtime.

2. What are typical Kafka Streams use cases?

Common use cases include real-time aggregations, fraud detection, IoT telemetry processing, personalization engines, and dynamic pricing systems. It's also used for RAG pipelines in AI-powered chatbots, with 72% of Kafka users employing it for stream processing.

3. How does Kafka Streams integrate with AI/ML?

Kafka Streams integrates AI through embedded ML models for real-time anomaly detection and supports real-time feature stores that eliminate training/serving skew. It enables dynamic model retraining via CDC streams and powers low-latency RAG architectures for LLM applications.

4. Why choose serverless Kafka?

Serverless Kafka offers automatic scaling that adjusts to workload demands and pay-per-usage pricing that reduces costs compared to fixed clusters. It eliminates cluster management overhead while preserving standard Kafka APIs, with some options offering up to 90% cost reduction.

5. How do data-integration platforms enhance Kafka Streams?

Platforms like Airbyte provide 600+ pre-built connectors to load data into Kafka without custom coding and handle unstructured data processing with automatic chunking for AI workflows. They enable incremental syncing and end-to-end real-time analytics without managing separate integration infrastructure.
