Should I Use Partitioning in my Data Transformation Logic?

✨ AI Generated Summary

Partitioning large datasets into smaller segments enhances ETL pipeline performance by enabling parallel processing, reducing query response times, and improving scalability. Key partitioning strategies include horizontal (row-based), vertical (column-based), range, and functional partitioning, each suited to different data types and access patterns. However, partitioning is unnecessary for small datasets, simple transformations, or systems with stable data volumes, as it may add complexity without benefits.

- Partitioning optimizes performance by aligning with data access patterns and distributing workload evenly.

- Effective partitioning requires monitoring and adjusting partition sizes to avoid bottlenecks and resource contention.

- Implementation is supported by ETL tools like Airbyte, which facilitate scalable and efficient data integration workflows.

Partitioning data in your transformation logic can greatly enhance the performance of your ETL pipeline, particularly when dealing with large datasets. By partitioning data into smaller, more manageable chunks, you can leverage parallel processing to optimize performance, reduce query response time, and better manage data volume.

However, deciding whether to use partitioning depends on several factors, including data access patterns, the type of data you're processing, and the complexity of your system.

In this post, we will explore different partitioning strategies (such as vertical partitioning and horizontal partitioning), and when it makes sense to implement partitioning to improve data integrity and system performance.

What is Partitioning in Transformation Logic?

Data partitioning refers to the technique of dividing large datasets into smaller, more manageable segments, or partitions, based on specific criteria. These partitions can then be processed independently, often in parallel, to optimize system performance and data processing.

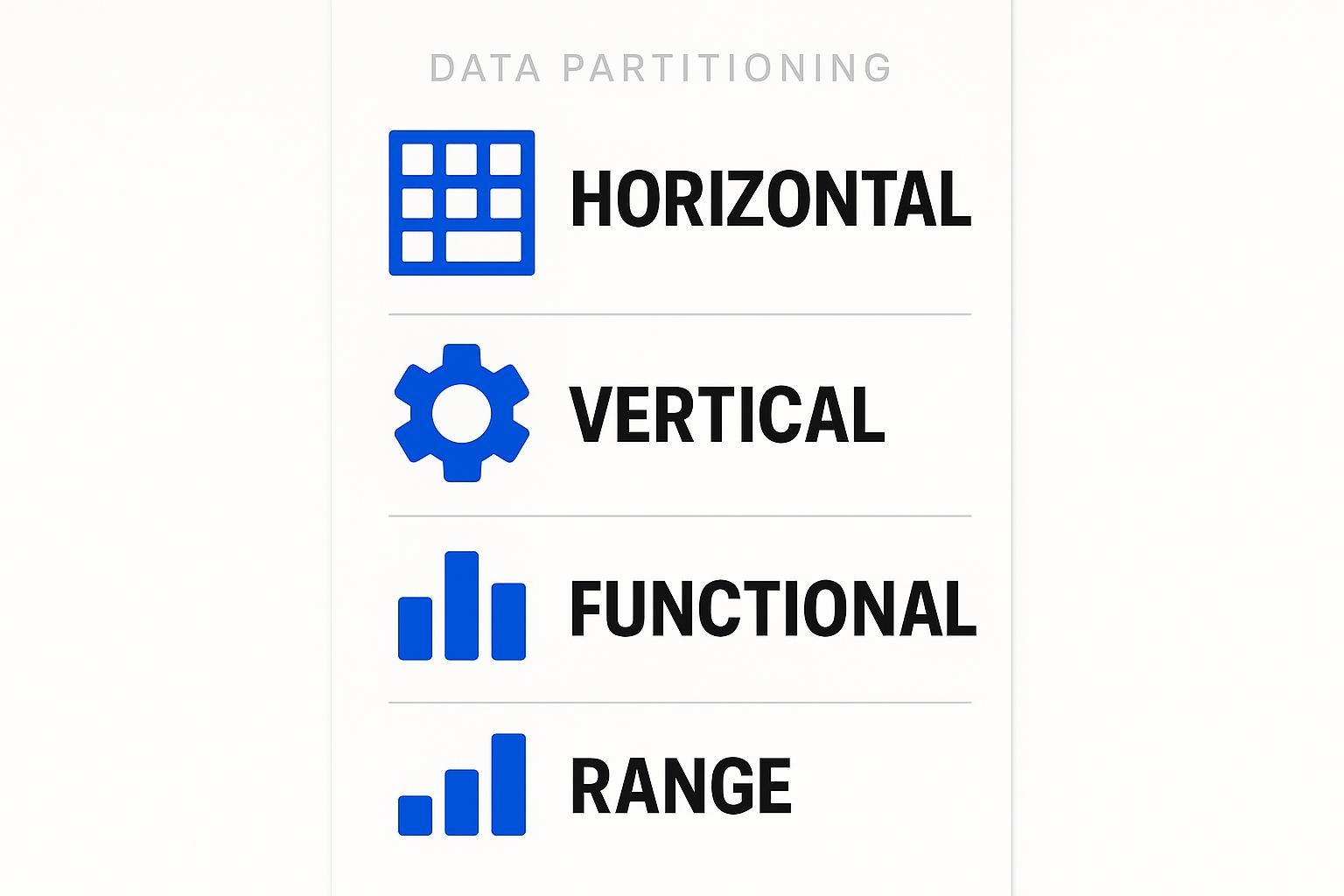

Types of Partitioning:

- Horizontal Partitioning: This involves splitting a dataset by rows, typically based on a partition key like date range or primary key (e.g., customer ID). This allows efficient querying of data subsets based on access patterns.

- Vertical Partitioning: Here, data is divided based on columns rather than rows. This approach is often used when some columns are more frequently accessed than others, allowing for optimized storage and faster queries.

- Functional Partitioning: This involves dividing data based on specific functions or use cases, like separating transaction data from inventory data for better management and quicker access.

- Range Partitioning: Key range partitioning splits data based on specific ranges (e.g., by revenue or age), which is ideal for datasets with a wide distribution of values.
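As a rough illustration of three of the strategies above, here is a minimal Python sketch using plain dictionaries. The sample rows, field names (`customer_id`, `region`, `revenue`, `notes`), and function names are all assumptions made up for this example, not part of any particular tool. Functional partitioning is left out because it is mostly a matter of keeping different datasets (for example transactions and inventory) in separate stores.

```python
from collections import defaultdict

# Hypothetical sample rows; the field names are assumptions for illustration.
rows = [
    {"customer_id": 1, "region": "EU", "revenue": 120, "notes": "first order"},
    {"customer_id": 2, "region": "US", "revenue": 900, "notes": "repeat buyer"},
    {"customer_id": 3, "region": "EU", "revenue": 4500, "notes": "wholesale"},
]

def horizontal_partition(rows, key):
    """Group whole rows by the value of a partition key."""
    partitions = defaultdict(list)
    for row in rows:
        partitions[row[key]].append(row)
    return dict(partitions)

def vertical_partition(rows, id_column, hot_columns):
    """Split each row into a frequently read part and a rarely read part.

    The id column is kept in both parts so they can be rejoined later.
    """
    hot, cold = [], []
    for row in rows:
        hot.append({c: v for c, v in row.items() if c == id_column or c in hot_columns})
        cold.append({c: v for c, v in row.items() if c == id_column or c not in hot_columns})
    return hot, cold

def range_partition(rows, column, boundaries):
    """Assign rows to numbered buckets separated by sorted boundary values."""
    partitions = defaultdict(list)
    for row in rows:
        # The bucket index is the number of boundaries the value has reached.
        bucket = sum(row[column] >= b for b in boundaries)
        partitions[bucket].append(row)
    return dict(partitions)

by_region = horizontal_partition(rows, "region")
hot, cold = vertical_partition(rows, "customer_id", {"region", "revenue"})
by_revenue = range_partition(rows, "revenue", [500, 1000])
```

In this sketch `by_region` holds one list of rows per region, `hot` and `cold` hold the two column groups, and `by_revenue` holds three buckets (below 500, 500 to 999, and 1000 or more).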

When to Use Partitioning in Your Transformation Logic

Large Data Volumes

Partitioning becomes essential when you're working with large datasets that are difficult to process all at once. Dividing the data into smaller, more manageable chunks makes it easier to handle high data volume efficiently. This is especially useful when the dataset is continuously growing, such as with transaction data, inventory data, or any other type of big data. When dealing with expanding datasets, partitioning can help maintain performance and scalability.

Partitioning works particularly well with time-sensitive data or data that spans long periods, like daily logs or historical data. For example, using date range partitioning or range partitioning for time-series data enables you to process and query the data more efficiently, even as the volume increases.
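To make date range partitioning concrete, the sketch below groups log events by calendar month. The event structure and the `YYYY-MM` key format are assumptions chosen for illustration; a real pipeline would usually delegate this to the storage engine or ETL tool.

```python
from collections import defaultdict
from datetime import date

# Hypothetical log events; names and values are illustrative only.
events = [
    {"event_date": date(2024, 1, 15), "message": "login"},
    {"event_date": date(2024, 1, 31), "message": "purchase"},
    {"event_date": date(2024, 2, 1), "message": "logout"},
]

def month_key(d):
    """Return a sortable partition key such as '2024-01'."""
    return f"{d.year:04d}-{d.month:02d}"

def partition_by_month(events):
    """Group events into one partition per calendar month."""
    partitions = defaultdict(list)
    for event in events:
        partitions[month_key(event["event_date"])].append(event)
    return dict(partitions)

monthly = partition_by_month(events)

# A query for January only needs to read one partition,
# no matter how many other months have accumulated.
january = monthly.get("2024-01", [])
```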

Performance Optimization

One of the key advantages of partitioning is its ability to optimize query performance. By reducing the amount of data that needs to be processed at once, partitioning can significantly speed up data transformations.

This is especially beneficial for complex transformations or when data needs to be queried frequently. Partitioning makes it possible to process partitions in parallel, which helps reduce query response time and overall processing time.
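The following sketch shows one way to process partitions in parallel with Python's standard `concurrent.futures` module. The partition contents and the `transform` function are hypothetical. A thread pool is used here for simplicity; it suits I/O-bound work, while CPU-bound transformations in CPython would more likely use `ProcessPoolExecutor` or a distributed engine.

```python
from concurrent.futures import ThreadPoolExecutor

# Hypothetical partitions keyed by region.
partitions = {
    "EU": [{"amount": 10.0}, {"amount": 5.5}],
    "US": [{"amount": 20.0}],
    "APAC": [{"amount": 1.0}, {"amount": 2.0}, {"amount": 3.0}],
}

def transform(partition_rows):
    """Example transformation: total amount for one partition."""
    return sum(row["amount"] for row in partition_rows)

def process_in_parallel(partitions, max_workers=4):
    """Run the transformation on every partition concurrently."""
    keys = list(partitions)
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # pool.map yields results in the same order as its inputs.
        results = pool.map(transform, (partitions[k] for k in keys))
        return dict(zip(keys, results))

totals = process_in_parallel(partitions)
```

Because each partition is handled independently, `max_workers` also acts as a simple cap on how much of the system's resources the job can claim at once.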

To ensure that partitioning optimizes performance, it's important to align the partitioning strategy with your data access patterns. For instance, if your system regularly queries data based on certain attributes (such as customer ID, product category, or region), applying horizontal partitioning can make those queries much faster. When done correctly, partitioning transforms data processing into a more efficient, scalable operation.

Managing Growing Data

As datasets grow, managing and processing the data becomes increasingly difficult. Without partitioning, the sheer volume of data can overwhelm the system, causing slowdowns and bottlenecks. Partitioning helps by splitting data into smaller, more manageable chunks, which makes it easier to maintain and process as the dataset expands.

This is particularly useful for systems that handle slow-moving data, meaning data that doesn’t change frequently but still needs to be processed in batches. Sharding data across separate data stores based on partition keys allows for better data distribution, enabling more efficient processing and storage.

Reducing Resource Contention

When working with large datasets in a distributed environment, it’s crucial to avoid resource contention, where one part of the system monopolizes processing resources. Partitioning helps distribute the data into smaller chunks, allowing for parallel processing across different resources.

This prevents bottlenecks and ensures that the system's resources are used optimally, without any one task slowing down the entire pipeline.

When NOT to Use Partitioning in Your Transformation Logic

Partitioning can bring performance benefits, but it's not always necessary. Here are situations where partitioning may not be the right choice:

Small Data Volumes

For small datasets, partitioning often introduces unnecessary complexity. When the data can easily fit into a single partition, the overhead of managing multiple partitions is not worth the potential performance gains. Processing the entire dataset without partitioning is typically more efficient in these cases.

Simple Transformations

When the transformation logic is straightforward—such as basic data cleaning or aggregation—partitioning is not needed. For these simple tasks, partitioning adds complexity without providing performance improvements. A single partition workflow can achieve good performance without the need for vertical or functional partitioning.

Complex Partitioning Logic

If the partitioning strategy introduces more complexity than it resolves, it can become a bottleneck. Complex partitioning points or key range partitioning may lead to inefficient data distribution, especially if partitions are not evenly balanced. This complexity can reduce performance rather than enhance it.

No Need to Scale

For systems that don’t need to scale, partitioning data is often overkill. If data volume is stable and not expected to increase drastically, a single partition store may suffice, and adding partitions only complicates the system unnecessarily.

How Partitioning Affects Data Quality and Performance in Data Integration

Impact on Data Quality

Dividing large datasets into smaller segments can support data quality, since each segment can be validated and reprocessed on its own. However, improper splitting can lead to inconsistencies, such as rows that are dropped or that land in more than one partition, which affects data integrity. Applying one partitioning strategy consistently helps keep data accurate and reliable across all segments.

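Aligning the partition key with data access patterns is crucial. Range partitioning keeps partitions balanced only when the range boundaries match how the key's values are actually distributed, so check that distribution before fixing the boundaries. In distributed systems, change data capture (CDC) helps keep partitions consistent with the source as records change.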
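One way to guard against the integrity problems mentioned above is to verify, after splitting, that every source record appears in exactly one partition. The check below is a minimal sketch; the function name and the idea of comparing record ids are assumptions for illustration.

```python
from collections import Counter

def check_partitions(original_ids, partitions):
    """Return (missing, duplicated) record ids after partitioning.

    `partitions` maps a partition name to the list of record ids it holds.
    """
    seen = Counter(i for ids in partitions.values() for i in ids)
    missing = sorted(set(original_ids) - set(seen))
    duplicated = sorted(i for i, n in seen.items() if n > 1)
    return missing, duplicated

# Hypothetical faulty split: id 4 was dropped and id 2 landed twice.
missing, duplicated = check_partitions(
    [1, 2, 3, 4],
    {"part_a": [1, 2], "part_b": [2, 3]},
)
```

A healthy split returns two empty lists, which makes the check easy to run as an assertion at the end of a transformation step.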

Performance Benefits

Splitting data into manageable segments enables parallel processing, allowing for faster transformation and reduced query response time. This is particularly beneficial for processing big data or dynamic data, as it prevents any single segment from slowing down the entire pipeline.

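Approaches like key range partitioning improve query performance by letting a query read only the partitions that cover the requested range. When the boundaries are chosen so that data is spread evenly, they also help manage high data volumes without bottlenecks. As data grows, partitioning supports scalability and helps maintain system performance.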
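Whether a range scheme actually spreads data evenly depends on the values themselves. The sketch below counts rows per partition for a deliberately skewed set of hypothetical order values, and contrasts it with hash partitioning, a technique not covered in this article that is introduced here only for comparison. `zlib.crc32` is used as a stable hash because Python's built-in `hash()` for strings varies between interpreter runs.

```python
import zlib
from collections import Counter

def range_partition(value, boundaries):
    """Bucket index = number of sorted boundaries the value has reached."""
    return sum(value >= b for b in boundaries)

def hash_partition(key, num_partitions):
    """Map a key to a partition using a hash that is stable across runs."""
    return zlib.crc32(str(key).encode("utf-8")) % num_partitions

# Hypothetical skewed data: most order values are small, one is large.
order_values = [5, 7, 9, 12, 14, 18, 21, 25, 30, 950]

# Equal-width ranges put nine of the ten rows into the first partition.
range_counts = Counter(range_partition(v, [250, 500, 750]) for v in order_values)

# Hashing 1000 hypothetical order ids into 4 partitions for comparison.
hash_counts = Counter(hash_partition(order_id, 4) for order_id in range(1000))
```

Hashing tends to balance partition sizes when there are many distinct keys, but it gives up the ability to skip partitions for range queries, so the choice depends on the access pattern.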

Managing Data Processing Speed

Data segmentation helps maintain processing speed by preventing slowdowns that occur when a single system is overloaded. Dividing data into multiple sections allows for more efficient parallelization and better resource management.

However, careful management of partition size is essential to avoid imbalances that could lead to slowdowns. Dynamic partitioning and adding partition points can help ensure optimal performance even as data volume increases.

How to Implement Partitioning in Your Transformation Logic

Partitioning data is a powerful technique to optimize data processing and query performance by dividing large datasets into smaller, more manageable partitions. In this section, we'll explore when and how to apply partitioning strategies to improve data integration and ensure better performance across your ETL pipeline.

1. Choose the Right Partitioning Strategy

The first step in implementing partitioning is choosing the correct partitioning strategy based on your data type and data access patterns. For instance, if your data is time-series, range partitioning (e.g., partitioning by date range) is a natural choice. If you're dealing with customer data, horizontal partitioning by customer ID or region might be more effective.

For example, in an e-commerce application, partitioning transaction data by date range (e.g., monthly or quarterly) ensures that query performance is optimized for frequent sales reports, which are often filtered by time periods.
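For the e-commerce example, one common way to lay out date-partitioned files is a `key=value` directory naming convention, often called Hive-style partitioning. The sketch below builds such a path for a transaction date. The base directory name `transactions` and the `partition_path` helper are assumptions for illustration.

```python
from datetime import date
from pathlib import PurePosixPath

def partition_path(base, txn_date, granularity="month"):
    """Build a key=value style directory path for a transaction date."""
    path = PurePosixPath(base) / f"year={txn_date.year:04d}"
    if granularity == "quarter":
        quarter = (txn_date.month - 1) // 3 + 1
        return str(path / f"quarter={quarter}")
    if granularity == "month":
        return str(path / f"month={txn_date.month:02d}")
    raise ValueError(f"unsupported granularity: {granularity}")

monthly_path = partition_path("transactions", date(2024, 3, 9))
quarterly_path = partition_path("transactions", date(2024, 3, 9), "quarter")
```

A monthly sales report can then read a single directory instead of scanning the full transaction history.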

2. Implement Partitioning in Your ETL Tool

Once you've chosen your partitioning strategy, you’ll need to implement it within your ETL tool or pipeline. Most cloud-native ETL platforms, such as Airbyte, Google Cloud Dataflow, and Azure Data Factory, provide built-in support for partitioning data.

In Airbyte, for instance, you can configure multiple partitions directly in the pipeline settings by specifying the partition key and partitioning logic. Depending on the ETL tool you're using, you may need to adjust your partitioning configuration to match the chosen partition type, such as key range partitioning or vertical partitioning.

3. Distribute Data Efficiently

After setting up partitioning, the next step is to ensure that the data is distributed across separate data stores or processing units. When partitioning data, it’s essential to ensure that the data is divided evenly across partitions, avoiding some partitions becoming overloaded with too much data volume.

In a distributed system, you want to make sure each partition is processed independently in parallel, optimizing system resources. Dynamic data partitioning can be used to adjust partition sizes based on data volume, helping to avoid resource contention and ensure smooth parallel processing.
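Dynamic partitioning can be sketched as choosing the number of partitions from the current data volume rather than fixing it in advance. The target of four rows per partition below is an arbitrary assumption to keep the example small; real targets are usually expressed in megabytes or in millions of rows.

```python
import math

def dynamic_partitions(rows, target_rows_per_partition):
    """Pick the partition count from the current volume, then split evenly."""
    if target_rows_per_partition <= 0:
        raise ValueError("target_rows_per_partition must be positive")
    count = max(1, math.ceil(len(rows) / target_rows_per_partition))
    # Round-robin slicing keeps partition sizes within one row of each other.
    return [rows[i::count] for i in range(count)]

small = dynamic_partitions(list(range(10)), target_rows_per_partition=4)
large = dynamic_partitions(list(range(100)), target_rows_per_partition=4)
```

With 10 rows this yields 3 partitions, and with 100 rows it yields 25, so the per-partition workload stays roughly constant as volume grows.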

4. Monitor and Optimize Partitioning Performance

As your data grows, monitoring partition performance becomes crucial. Regularly assess the size of your partitions to make sure the data size in each partition is balanced. Uneven partition sizes can result in certain partitions taking longer to process than others, slowing down the overall pipeline.

Use performance metrics to track the query performance of different partitions and adjust partition points as necessary. For example, if one partition becomes too large over time (such as due to seasonal data increases), consider splitting it further to ensure efficient processing.
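As a sketch of this kind of monitoring, the code below computes a simple skew ratio (largest partition size divided by the mean size) and splits the largest partition when it dominates. The metric, the helper names, and the `#0`/`#1` suffix scheme for sub-partitions are assumptions for illustration, not an established standard.

```python
def skew_ratio(partition_sizes):
    """Largest partition size divided by the mean size; 1.0 means balanced."""
    sizes = list(partition_sizes.values())
    mean = sum(sizes) / len(sizes)
    return max(sizes) / mean if mean else 0.0

def split_largest(partitions, parts=2):
    """Return a new mapping with the largest partition split into `parts` pieces."""
    largest = max(partitions, key=lambda k: len(partitions[k]))
    result = {k: v for k, v in partitions.items() if k != largest}
    rows = partitions[largest]
    for i in range(parts):
        result[f"{largest}#{i}"] = rows[i::parts]
    return result

# Hypothetical monthly partitions with a seasonal spike in December.
partitions = {
    "2024-11": list(range(10)),
    "2024-12": list(range(50)),
    "2025-01": list(range(12)),
}

before = skew_ratio({k: len(v) for k, v in partitions.items()})
rebalanced = split_largest(partitions)
after = skew_ratio({k: len(v) for k, v in rebalanced.items()})
```

Here the ratio drops from roughly 2.1 to roughly 1.4 after the December partition is split in two.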

5. Manage Changes and Scalability

Partitioning is an ongoing process, especially as your data increases or changes over time. As your system scales, the partitioning strategy you first implemented may need to evolve. For example, if you're initially working with a single partition and later realize that the data volume has increased significantly, you might need to implement multiple partitions or adjust partitioning logic to distribute data more efficiently.

In some cases, it might be necessary to adjust the partition key to better suit the growing data size or data types being processed. This ensures that your ETL pipeline remains efficient as data increases and your processing needs change.
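Changing the partition key usually means rebuilding the partitions from the existing rows. The sketch below moves from a single partition to partitions keyed by year; the row fields and the `repartition` helper are hypothetical.

```python
from collections import defaultdict

def repartition(partitions, new_key):
    """Rebuild partitions under a different key without losing rows."""
    rebuilt = defaultdict(list)
    for rows in partitions.values():
        for row in rows:
            rebuilt[row[new_key]].append(row)
    return dict(rebuilt)

# Hypothetical starting point: everything lives in one partition.
single = {
    "all": [
        {"region": "EU", "year": 2023},
        {"region": "US", "year": 2024},
        {"region": "EU", "year": 2024},
    ]
}

by_year = repartition(single, "year")
by_region = repartition(by_year, "region")
```

Comparing total row counts before and after a rebuild like this is a cheap way to confirm that nothing was lost in the move.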

Optimizing Data Integration with Partitioning Strategies

The decision to use partitioning in your data integration process depends on the size of your data, the complexity of your transformations, and the performance needs of your system. For large, complex datasets, partitioning can significantly enhance data processing by improving parallel processing and reducing query response time. However, for smaller datasets or simpler integrations, the overhead of partitioning might not be necessary.

By carefully selecting the right partitioning strategy, you can optimize your data integration workflows, ensuring they are scalable, efficient, and capable of handling increasing data volumes. Airbyte makes implementing effective data partitioning easy by offering an open-source platform with over 600 pre-built connectors, allowing you to seamlessly integrate and process your data with minimal friction.

Explore how Airbyte can simplify and optimize your data workflows, ensuring faster processing and scalability as your data needs grow. Start building with Airbyte today.

Frequently Asked Questions

1. What is the best partition strategy for slow-moving data?

For slow-moving data, range partitioning (splitting data by time or category) is effective, because it produces smaller, manageable chunks for efficient processing. Vertical partitioning can complement this by separating frequently accessed columns from rarely used ones to optimize query performance.

2. What’s the difference between vertical and functional partitioning?

Vertical partitioning splits a table by columns to optimize access and storage, while functional partitioning divides data by application purpose or use case, like separating customer data from transaction data, improving performance for specific workflows.

3. How do I know when to use multiple partitions versus one partition?

Use multiple partitions when datasets are large, complex, or require parallel processing to improve performance. For smaller or simpler datasets, a single partition is sufficient, reducing management overhead and keeping operations straightforward.
