Redis vs Elasticsearch - Key Differences

✨ AI Generated Summary

Redis and Elasticsearch serve distinct but complementary roles in modern data management: Redis excels in ultra-low latency, in-memory operations like caching and real-time processing, while Elasticsearch specializes in full-text search, complex queries, and analytics across distributed data. Key considerations include:

- Redis offers sub-millisecond response times with flexible data structures and recent enhancements like server-side scripting and vector search capabilities.

- Elasticsearch provides scalable, distributed search and analytics with advanced features such as semantic search (ELSER), hybrid search, and cost-optimized storage tiers.

- Redis’s 2024 licensing changes have driven enterprises toward alternatives like Valkey, impacting adoption and vendor relationships.

- Hybrid architectures combining Redis for speed-sensitive tasks and Elasticsearch for deep analytics are common, with integration tools like Airbyte facilitating seamless data synchronization and governance.

Choosing the right data management tool is critical when handling massive volumes of information across distributed systems. Data teams today face pressure to deliver sub-millisecond response times for real-time apps while also supporting complex analytical workloads. These demands create a tension between ultra-fast, memory-optimized architectures for caching and disk-based systems for full-text search and analytics.

Redis and Elasticsearch take very different approaches to these challenges but have become essential to modern infrastructure. Redis is an in-memory key-value store built for low-latency access, while Elasticsearch is a distributed search and analytics engine powered by Apache Lucene. Understanding their architectures, performance trade-offs, and evolving features helps you choose the right fit for your needs.

This guide compares their core strengths, recent developments—from Redis's licensing changes to Elasticsearch's storage optimizations—and how they can even work together in complementary roles.

Why Compare Redis vs Elasticsearch?

Although they serve distinct purposes, Redis and Elasticsearch both store and retrieve data efficiently, and both show up in systems that need real-time data access or complex search.

Comparing Elasticsearch vs Redis helps you recognize use cases that benefit from each tool's unique strengths. Redis is built for speed and excels in low-latency processes such as caching and message queues, whereas Elasticsearch is optimized for complex querying and analytical workloads.

The comparison becomes particularly relevant as organizations increasingly adopt hybrid architectures where both technologies complement each other. E-commerce platforms might use Redis for session storage and real-time inventory tracking while leveraging Elasticsearch for product search and recommendation engines. Understanding how these tools integrate within modern data ecosystems enables you to architect solutions that maximize performance while minimizing operational complexity.

What Makes Redis a Preferred Choice for High-Speed Operations?

Redis (REmote DIctionary Server) is a NoSQL in-memory key-value store. You can deploy it on-premises or in the cloud as a standalone server, in a primary-replica replication setup, or as a cluster for scalability and availability.

Built in C, Redis supports data structures such as strings, hashes, lists, sorted sets, bitmaps, and hyperloglogs, enabling flexible data modeling. Its single-threaded, event-driven architecture ensures simplicity and fast query execution. The platform's architecture eliminates the overhead of thread context switching and lock management, contributing to its exceptional performance characteristics.

Recent updates have significantly expanded Redis's capabilities beyond traditional caching. Redis 7.0 added Redis Functions for server-side Lua scripting, and Redis Stack 7.2 shipped Triggers and Functions as a preview feature, enabling event-driven workflows that execute JavaScript code directly within the Redis instance. Running data transformations and business logic next to the data reduces network round trips and improves overall system responsiveness.

Key Features of Redis

In benchmarks that Redis published against vector-database providers such as Milvus, Weaviate, and Qdrant, Redis reported higher throughput and lower latency, particularly in scenarios requiring real-time vector similarity searches.

How Does Elasticsearch Excel in Search and Analytics?

Elasticsearch is a distributed, open-source search and analytics engine built on Apache Lucene. It enables quick full-text searches across structured and unstructured data. A cluster of nodes stores and indexes data in shards, providing horizontal scalability and fault tolerance.

Its user-friendly HTTP interface, RESTful APIs, and schema-flexible JSON documents make it ideal for log analytics, monitoring, and GenAI augmentation. The distributed architecture automatically handles data replication, shard allocation, and cluster rebalancing, simplifying operations for large-scale deployments.

Recent enhancements have focused on AI integration and performance optimization. Elasticsearch 8.x introduced ELSER (Elastic Learned Sparse EncodeR), an out-of-the-box semantic search model that generates context-aware results without requiring fine-tuning. The platform also implements hybrid search with Reciprocal Rank Fusion, combining vector and traditional text search results for improved relevance.
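To make the fusion step concrete, here is a minimal sketch of Reciprocal Rank Fusion in plain Python. It assumes the commonly used formula, where each document scores the sum of 1 / (k + rank) over every list it appears in, with k = 60. The function name `rrf_fuse` and the document IDs are illustrative and are not part of any Elasticsearch API.

```python
from collections import defaultdict


def rrf_fuse(rankings, k=60):
    """Fuse ranked lists of document IDs with Reciprocal Rank Fusion."""
    scores = defaultdict(float)
    for ranking in rankings:
        # Ranks are 1-based: the top hit contributes 1 / (k + 1).
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    # Highest fused score first; ties broken by document ID for determinism.
    return sorted(scores, key=lambda doc_id: (-scores[doc_id], doc_id))


# Hypothetical result lists from a text query and a vector query.
text_hits = ["doc_a", "doc_b", "doc_c"]
vector_hits = ["doc_c", "doc_a", "doc_d"]
fused = rrf_fuse([text_hits, vector_hits])
```

Documents that rank well in both lists (here `doc_a` and `doc_c`) rise above documents that appear in only one, which is the behavior hybrid search relies on.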

Key Features of Elasticsearch

What Are the Core Architectural Differences Between Redis vs Elasticsearch?

Redis is an in-memory key-value store optimized for high-speed caching and real-time processing, whereas Elasticsearch is a search engine designed for full-text search and real-time analytics.

Data Types and Storage

Elasticsearch stores data on disk as JSON documents and supports numerous field types, including booleans, arrays, nested objects, and specialized types for time series and geospatial data. Mappings are applied at index time, but dynamic mapping lets you index documents without declaring every field up front, and runtime fields add schema-on-read behavior where you need it.

Redis stores data primarily in memory using various data structures including strings, hashes, lists, sets, sorted sets, bitmaps, and hyperloglogs. Each data type is optimized for specific access patterns and use cases. Redis also supports more specialized structures through modules, such as JSON documents via RedisJSON and graph relationships through RedisGraph.

The storage approach fundamentally impacts performance characteristics. Redis achieves sub-millisecond latency by avoiding disk I/O for read operations, while Elasticsearch balances speed with durability through configurable refresh intervals and segment merging strategies.

Performance and Scalability

Redis offers sub-millisecond responses for simple operations and horizontal scaling via Redis Cluster, which automatically partitions data across multiple nodes. The single-threaded architecture eliminates concurrency issues but can create bottlenecks for CPU-intensive operations.
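The partitioning rule behind Redis Cluster can be sketched in a few lines. The cluster specification maps each key to one of 16384 hash slots using CRC16 of the key modulo 16384, and hashes only the text inside a `{...}` hash tag when one is present. The function name `key_slot` and the example keys below are illustrative.

```python
from binascii import crc_hqx

HASH_SLOTS = 16384  # Redis Cluster divides the key space into 16384 slots.


def key_slot(key: str) -> int:
    """Return the Redis Cluster hash slot for a key, honoring {hash tags}."""
    data = key.encode("utf-8")
    start = data.find(b"{")
    if start != -1:
        end = data.find(b"}", start + 1)
        # Only a non-empty tag between the first "{" and the next "}" counts.
        if end != -1 and end != start + 1:
            data = data[start + 1:end]
    # crc_hqx with initial value 0 is the CRC16 (XMODEM) variant the spec uses.
    return crc_hqx(data, 0) % HASH_SLOTS


# Hypothetical keys: the shared {user:42} tag forces both into one slot.
same_slot = key_slot("{user:42}:cart") == key_slot("{user:42}:session")
```

Keys that share a hash tag land in the same slot, which is what allows multi-key commands on them in a clustered deployment.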

Elasticsearch provides near-real-time search capabilities with configurable refresh intervals and scales horizontally through automatic shard distribution. While individual query latencies typically range from milliseconds to seconds depending on complexity, the distributed architecture handles massive concurrent workloads effectively.

Performance optimization strategies differ significantly between platforms. Redis performance tuning focuses on memory management, command optimization, and cluster topology. Elasticsearch optimization involves shard sizing, index templates, query optimization, and resource allocation across cluster nodes.

Querying and Indexing

Redis supports simple key-based access patterns with specialized commands for each data structure. Complex queries require multiple operations or Lua scripting. The RediSearch module adds more sophisticated querying on top of this, including full-text search and secondary indexing.

Elasticsearch's inverted index architecture enables complex queries including range queries, aggregations, geospatial queries, full-text search with stemming and tokenization, and analytical functions. The Query DSL provides a rich syntax for constructing sophisticated search and analytical queries.
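As a rough illustration of the Query DSL, the sketch below builds a search body that combines a full-text match, a range filter, and a terms aggregation. The field names (`description`, `price`, `category`) and values are hypothetical, and the aggregation assumes `category` is mapped as a keyword field.

```python
import json

# Field names (description, price, category) and values are hypothetical.
search_body = {
    "query": {
        "bool": {
            # Full-text clause: analyzed and scored for relevance.
            "must": [{"match": {"description": "wireless headphones"}}],
            # Filter clause: restricts results without affecting the score.
            "filter": [{"range": {"price": {"gte": 50, "lte": 200}}}],
        }
    },
    # Terms aggregation: counts matching documents per category.
    "aggs": {"by_category": {"terms": {"field": "category", "size": 5}}},
    "size": 10,
}

payload = json.dumps(search_body)
```

A body like this would be sent to an index's `_search` endpoint; the dictionary form makes it easy to assemble clauses programmatically before serializing.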

Data Model

Elasticsearch uses a document-oriented model where data is stored as JSON documents within indices. This flexible schema allows for nested structures, arrays, and dynamic field mapping. Documents can be updated partially, and the schema can evolve over time without downtime.

Redis employs a key-value model with various in-memory data structures. Keys serve as unique identifiers, while values can be simple strings or complex structures like sorted sets and hashes. The model emphasizes fast access patterns rather than complex relationships.

Data Consistency and Persistence

Redis provides strong consistency for single-node operations and offers persistence through RDB snapshots and Append-Only File (AOF) logging. Redis Cluster replicates asynchronously, so it does not guarantee strong consistency: a write acknowledged by a primary can be lost if that node fails before replicating it. Multi-key commands in a cluster work only when every key maps to the same hash slot, which hash tags can enforce.

Elasticsearch is eventually consistent for search: a newly indexed document becomes searchable only after the next refresh, although get-by-ID requests are real time. The platform uses optimistic concurrency control based on sequence numbers and primary terms, so a conflicting update is rejected rather than silently merged. Replication provides durability, and the wait_for_active_shards setting controls how many shard copies must be available before a write proceeds.

Cost Considerations

Redis pricing models vary significantly based on deployment approach. Redis Cloud starts at approximately $5 per month (as of early 2024) for basic configurations, while Redis Enterprise offers advanced features with pricing based on memory and throughput requirements. Open-source Redis eliminates licensing costs but requires operational expertise for enterprise deployments.

Elasticsearch Cloud starts at approximately $95 per month (as of early 2024) for basic deployments, with costs scaling based on data volume, query complexity, and feature requirements. The Elastic License model has evolved, with certain features requiring commercial licenses. Self-managed deployments can reduce software costs but require significant operational investment.

Total cost of ownership extends beyond licensing to include infrastructure, operational overhead, and developer productivity. Redis typically requires less operational complexity for simple use cases but may need additional tools for comprehensive monitoring and management. Elasticsearch provides integrated operational tools but demands expertise for optimization and troubleshooting.

How Has Redis Licensing Evolution Affected Enterprise Adoption?

The Redis ecosystem underwent significant disruption in March 2024 when Redis Ltd. abandoned its traditional BSD open-source license, adopting restrictive RSALv2 and SSPLv1 licenses. This change prohibits cloud service providers from offering managed Redis services without paying licensing fees to Redis Ltd., creating substantial uncertainty for enterprise adoption strategies.

Impact on Enterprise Migration Patterns

Industry surveys reveal that approximately three-quarters of Redis users are actively evaluating alternatives, with Valkey emerging as the primary beneficiary of this licensing transition. Valkey, developed under the Linux Foundation, maintains full compatibility with Redis APIs while preserving the original open-source licensing model.

Migration urgency varies significantly by organization size and deployment model. Large enterprises with existing Redis Enterprise contracts face complex renewal negotiations, while organizations using Redis through cloud providers must navigate changing service offerings. AWS and Google Cloud have added Valkey options to their managed in-memory services, distancing themselves from Redis Ltd.'s licensing restrictions, while Microsoft Azure continues to offer Redis through its partnership with Redis Ltd.

The technical migration path from Redis to Valkey remains straightforward due to API compatibility, but operational considerations create complexity. Organizations must evaluate support ecosystems, as Valkey relies on third-party providers like Percona and cloud vendors rather than Redis Ltd.'s integrated support infrastructure.

Enterprise Support and Operational Challenges

Traditional Redis Enterprise features create migration barriers for organizations dependent on specific capabilities. Redis Enterprise's Active-Active CRDT (Conflict-free Replicated Data Types) functionality enables conflict-free multi-region deployments, a capability not available in Valkey's initial releases. Similarly, Auto Tiering (formerly Redis on Flash) and RedisAI edge inference capabilities remain proprietary to Redis Ltd.

Organizations requiring these advanced features face difficult decisions between accepting new licensing terms, developing alternative solutions, or redesigning architectures around Valkey's capabilities. The support ecosystem fragmentation means enterprises must rebuild vendor relationships and operational procedures around new support models.

Cloud providers have responded by enhancing their managed Valkey offerings with comparable features, but the transition period creates operational risk. Performance benchmarks show Valkey maintaining Redis-compatible latency characteristics, though long-term feature parity remains uncertain as development paths diverge.

Strategic Implications for Redis vs Elasticsearch Decisions

The licensing controversy affects Redis vs Elasticsearch comparisons by introducing vendor risk as a decision factor. Organizations that previously favored Redis for its open-source model must now weigh licensing uncertainty against technical benefits. Elasticsearch has gone through its own licensing evolution (a 2021 move from Apache 2.0 to the Elastic License and SSPL, with AGPLv3 added as an option in 2024), but it offers established commercial terms and cloud service partnerships.

For hybrid architectures combining both technologies, the Redis licensing change may shift balance toward Elasticsearch for primary data storage with Redis serving specialized caching roles. Organizations can limit Redis exposure while maintaining performance benefits for specific use cases like session management and real-time operations.

What Advanced Storage Optimization Strategies Do These Platforms Offer?

Modern data architectures require sophisticated approaches to managing storage costs while maintaining query performance. Both Redis and Elasticsearch have developed innovative solutions that challenge traditional assumptions about the relationship between storage economics and access speed.

Elasticsearch Frozen Tier Architecture

Elasticsearch's frozen tier represents a breakthrough in cost-performance optimization through partially mounted searchable snapshots. This approach combines local SSD caching with object storage backends like Amazon S3 or Azure Blob Storage, enabling organizations to maintain queryable access to historical data while achieving significant cost reductions.

The architecture works by storing complete index segments in object storage while maintaining a configurable local cache for frequently accessed data. Initial queries may experience 2-3x latency compared to hot tier performance, but subsequent queries on cached data show less than a 20% latency difference. This performance profile makes frozen tier suitable for historical analysis, compliance queries, and exploratory data analysis where slight latency increases are acceptable in exchange for dramatic cost savings.

Benchmark testing with datasets exceeding 100TB demonstrates that frozen tier can handle complex queries including Discover UI operations, aggregations, and dashboard visualizations with minimal performance degradation. The key to successful implementation lies in proper cache sizing and object storage I/O optimization.

Operational Considerations and Implementation

Frozen tier implementation requires careful planning around several technical dependencies. On dedicated frozen nodes, the shared cache defaults to 90% of total disk space, but it may need tuning based on query patterns and data access frequencies. Organizations must also ensure object storage compatibility and plan for scenarios where multiple nodes simultaneously retrieve non-cached data, which can create I/O bottlenecks.

The newer data stream lifecycle feature (not to be confused with the Query DSL) simplifies time-series data management by automatically handling time-based rollovers, downsampling, and retention enforcement. It offers more intuitive configuration than traditional Index Lifecycle Management (ILM) policies, while ILM remains the tool for explicit transitions between tiers such as frozen.
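For comparison, a traditional ILM policy that rolls indices over in the hot phase and later mounts them in the frozen tier might look like the sketch below. The repository name, ages, and shard size are placeholder assumptions, not recommendations.

```python
import json

# Hypothetical values: repository name, ages, and shard size are placeholders.
ilm_policy = {
    "policy": {
        "phases": {
            "hot": {
                "actions": {
                    # Roll over to a new index when either limit is reached.
                    "rollover": {"max_age": "7d", "max_primary_shard_size": "50gb"}
                }
            },
            "frozen": {
                "min_age": "30d",
                "actions": {
                    # Mount the index as a partially mounted searchable snapshot.
                    "searchable_snapshot": {"snapshot_repository": "my-snapshot-repo"}
                },
            },
            "delete": {"min_age": "365d", "actions": {"delete": {}}},
        }
    }
}

policy_json = json.dumps(ilm_policy, indent=2)
```

The serialized body is what you would submit to the `_ilm/policy/<name>` endpoint, with the snapshot repository registered beforehand.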

Storage hierarchy optimization enables sophisticated cost management strategies. Hot tier data incurs premium storage costs but provides sub-100ms query performance, while frozen tier reduces storage costs by up to 90% with acceptable 200-500ms query latencies. This tiered approach allows organizations to maintain comprehensive historical data access without proportional cost increases.

Redis Enterprise Scaling Innovations

Redis Enterprise addresses storage economics through different architectural approaches focused on memory optimization and intelligent tiering. Auto Tiering functionality, which replaced Redis on Flash in recent releases, extends RAM capacity using flash storage while maintaining sub-millisecond latencies for frequently accessed data.

The Auto Tiering implementation doubles throughput while reducing latency for large datasets that exceed available RAM. The system automatically promotes hot data to memory while keeping cold data on flash storage, creating a seamless hybrid storage model that maintains Redis's performance characteristics.

Sharded Pub/Sub, introduced in Redis 7.0, addresses scalability challenges in clustered deployments by assigning each channel to a hash slot, so messages are delivered only within the shard that owns the channel instead of being broadcast to every node. This capability matters for IoT and chat applications that require high-throughput message distribution across multiple Redis nodes.

When Should You Choose Redis vs Elasticsearch?

Redis Use Cases

Redis excels in scenarios demanding ultra-low latency and atomic operations. Modern applications leverage Redis for coordinating service communication in microservices architectures, where sub-millisecond response times for configuration data and service discovery reduce overall system latency.

Ephemeral search implementations represent an innovative Redis use case, particularly in retail environments where temporary per-user indexes expire after logout. This approach provides personalized search experiences without the overhead of maintaining permanent user-specific indices.

Real-time conversation analysis in telecommunications demonstrates Redis's streaming capabilities. Organizations use Redis Streams for sentiment analysis and uptime monitoring, processing millions of messages per second while maintaining the low-latency response required for real-time decision making.

Session management and distributed locking remain core Redis strengths, with the platform's atomic operations ensuring consistency in distributed systems. Gaming platforms use Redis sorted sets for real-time leaderboards, financial systems employ it for rate limiting, and e-commerce sites rely on it for shopping cart persistence.
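The rate-limiting use case can be sketched as a fixed-window counter built on the INCR and EXPIRE commands. The example below is an assumption-laden illustration: `allow_request` and the key format are hypothetical, and `InMemoryCounterStore` is a local stand-in that only mimics the `incr` and `expire` methods of a redis-py client so the sketch runs without a server.

```python
import time


class InMemoryCounterStore:
    """Stand-in exposing incr/expire like a redis-py client, for local runs only."""

    def __init__(self):
        self._data = {}  # key -> [count, expires_at or None]

    def incr(self, key):
        entry = self._data.get(key)
        if entry is None or (entry[1] is not None and entry[1] <= time.monotonic()):
            entry = [0, None]
            self._data[key] = entry
        entry[0] += 1
        return entry[0]

    def expire(self, key, seconds):
        if key in self._data:
            self._data[key][1] = time.monotonic() + seconds
            return True
        return False


def allow_request(store, client_id, limit=5, window_seconds=60, now=None):
    """Fixed-window rate limit: at most `limit` calls per window per client."""
    now = time.time() if now is None else now
    window = int(now // window_seconds)
    key = f"rate:{client_id}:{window}"
    count = store.incr(key)  # INCR is atomic on a real Redis server.
    if count == 1:
        # First hit in this window: let the counter expire on its own.
        store.expire(key, window_seconds)
    return count <= limit


store = InMemoryCounterStore()
decisions = [allow_request(store, "client-1", limit=3, now=0.0) for _ in range(5)]
```

With a real client, the INCR and EXPIRE pair is not atomic as a unit, so production implementations often wrap both in a script or transaction; that refinement is left out here for brevity.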

Elasticsearch Use Cases

Elasticsearch dominates scenarios requiring complex search functionality and analytical capabilities. Geospatial searches enable location-based queries for mapping applications, logistics optimization, and proximity-based recommendations. The platform's spatial indexing supports polygon searches, distance calculations, and complex geographical filtering.

Data observability represents a primary Elasticsearch strength, with organizations using it for log aggregation, metric analysis, and security monitoring. The platform's ability to ingest high-volume log streams while providing real-time search and analytical capabilities makes it essential for troubleshooting and threat detection.

Autocomplete and instant search implementations leverage Elasticsearch's text analysis capabilities, including prefix queries, n-gram analysis, and suggestion engines. E-commerce platforms use these features to provide responsive search experiences that adapt to user behavior and inventory changes.
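One common way to implement autocomplete is an edge n-gram tokenizer, which indexes every prefix of a term so that partial input matches quickly. The sketch below shows the prefixes such a tokenizer would produce for a single term, alongside index settings that define it. The analyzer and tokenizer names and the gram sizes are hypothetical choices.

```python
def edge_ngrams(term, min_gram=2, max_gram=10):
    """Return the prefixes an edge n-gram tokenizer would emit for one term."""
    upper = min(max_gram, len(term))
    return [term[:n] for n in range(min_gram, upper + 1)]


# Index settings sketch; the analyzer and tokenizer names are hypothetical.
autocomplete_settings = {
    "settings": {
        "analysis": {
            "tokenizer": {
                "autocomplete_tokenizer": {
                    "type": "edge_ngram",
                    "min_gram": 2,
                    "max_gram": 10,
                    "token_chars": ["letter", "digit"],
                }
            },
            "analyzer": {
                "autocomplete": {
                    "type": "custom",
                    "tokenizer": "autocomplete_tokenizer",
                    "filter": ["lowercase"],
                }
            },
        }
    }
}

prefixes = edge_ngrams("laptop")
```

Because the prefixes are computed at index time, a user typing "lap" matches the stored token directly instead of triggering a costly wildcard scan.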

Business intelligence applications benefit from Elasticsearch's aggregation framework, which enables complex analytical queries across large datasets. Organizations use it for customer behavior analysis, performance metrics, and compliance reporting where traditional databases struggle with unstructured data analysis.

How Can Organizations Implement Hybrid Data Management Approaches?

Combining Redis and Elasticsearch can deliver both real-time access and advanced analytics capabilities within a single architecture. For example, an e-commerce platform might store product-stock levels in Redis for quick updates during high-traffic periods, while indexing product descriptions, reviews, and metadata in Elasticsearch for rich search capabilities and recommendation engines.

This architectural pattern becomes particularly powerful when you consider the complementary strengths of each platform. Redis handles hot path operations where sub-millisecond latencies matter, while Elasticsearch manages cold path analytics where query sophistication outweighs response time requirements.

Integration Patterns and Data Flow

Effective hybrid implementations require careful orchestration of data flows between systems. Common patterns include using Redis as a high-speed cache layer in front of Elasticsearch for frequently accessed search results, or employing Redis Streams to buffer high-velocity data before bulk indexing into Elasticsearch.
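The cache-layer pattern is usually implemented as cache-aside: check Redis first, fall back to Elasticsearch on a miss, then store the result with a short TTL. The sketch below assumes a cache object with `get` and `setex` methods shaped like a redis-py client; `InMemoryCache`, `cached_search`, and `fake_search` are hypothetical stand-ins so the example runs without either service.

```python
import json


class InMemoryCache:
    """Stand-in with get/setex shaped like redis-py; the TTL is ignored here."""

    def __init__(self):
        self._data = {}

    def get(self, key):
        return self._data.get(key)

    def setex(self, key, ttl_seconds, value):
        self._data[key] = value


def cached_search(cache, search_fn, query, ttl_seconds=30):
    """Cache-aside: serve from the cache, fall back to the search backend."""
    key = "search:" + query.strip().lower()
    hit = cache.get(key)
    if hit is not None:
        return json.loads(hit)
    results = search_fn(query)  # e.g. a call into Elasticsearch
    # A short TTL bounds how stale a cached result can get.
    cache.setex(key, ttl_seconds, json.dumps(results))
    return results


calls = []


def fake_search(query):
    """Hypothetical backend that records how often it is called."""
    calls.append(query)
    return [{"id": 1, "title": "Result for " + query}]


cache = InMemoryCache()
first = cached_search(cache, fake_search, "Redis")
second = cached_search(cache, fake_search, "redis ")
```

Normalizing the query before building the key lets trivially different inputs share one cache entry, so the second call never reaches the backend.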

Change data capture (CDC) pipelines can synchronize data between both systems, ensuring consistency while optimizing each platform for its specific role. Redis maintains real-time operational data while Elasticsearch indexes the same information for analytical queries and complex search operations.
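Whether records arrive from a Redis Stream buffer or a CDC feed, they typically reach Elasticsearch through the bulk API, which expects newline-delimited JSON with an action line before each document. A minimal sketch of building that body follows; the index name, document IDs, and fields are hypothetical.

```python
import json


def build_bulk_body(index, entries):
    """Serialize buffered (doc_id, document) pairs into a _bulk NDJSON body."""
    lines = []
    for doc_id, doc in entries:
        # Each document takes two lines: an action line, then the source.
        lines.append(json.dumps({"index": {"_index": index, "_id": doc_id}}))
        lines.append(json.dumps(doc))
    # The bulk API requires the body to end with a newline.
    return "\n".join(lines) + "\n"


# Hypothetical entries, e.g. drained from a Redis Stream or a CDC feed.
buffered = [
    ("sku-1", {"name": "keyboard", "stock": 12}),
    ("sku-2", {"name": "mouse", "stock": 0}),
]
body = build_bulk_body("products", buffered)
```

Batching many documents into one request like this is what makes buffering worthwhile: the indexing cost is amortized across the batch instead of being paid per record.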

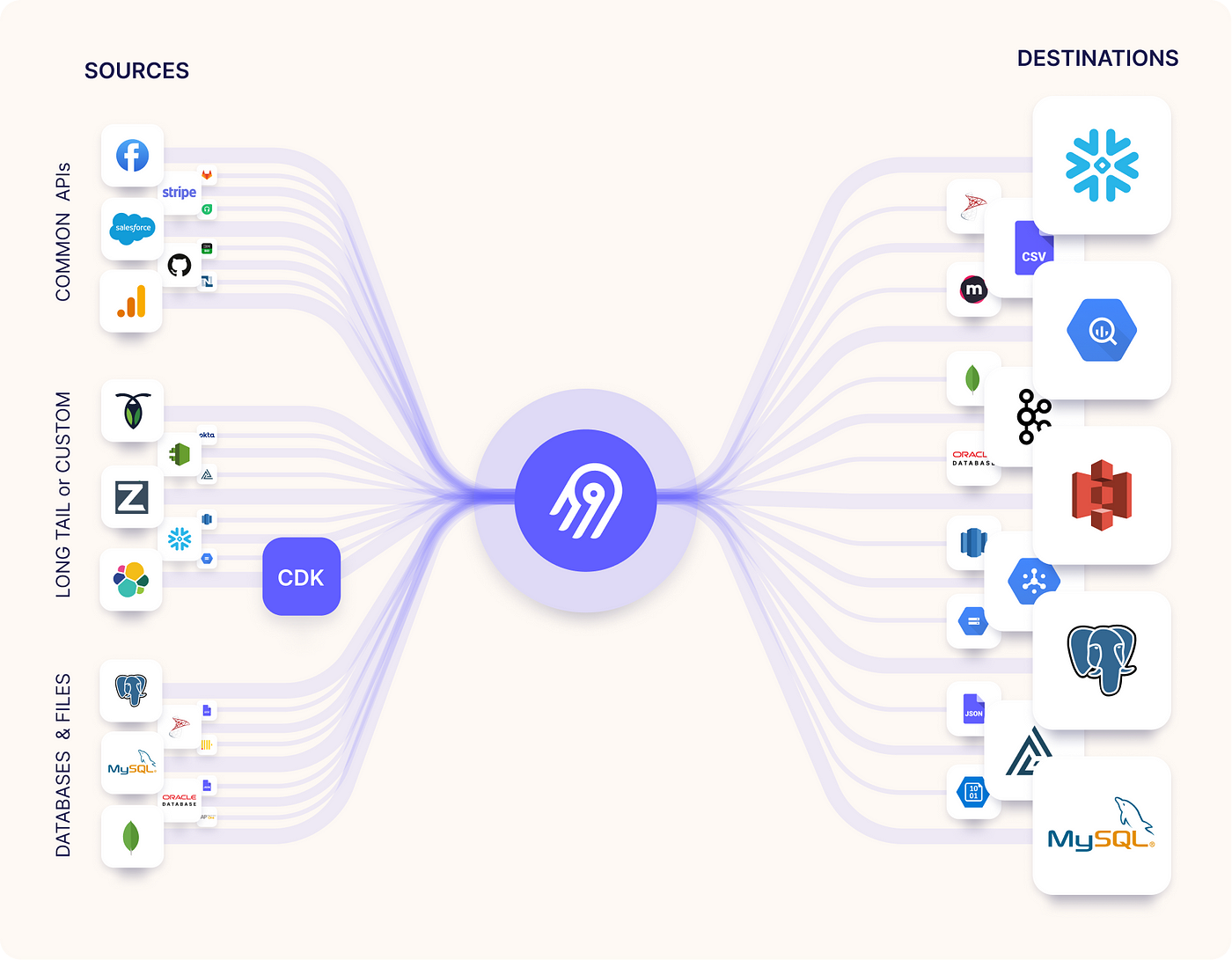

Leveraging Airbyte for Seamless Integration

Tools like Airbyte can orchestrate data replication between Redis and Elasticsearch, providing over 400 connectors for comprehensive data ecosystem integration. Airbyte's transformation capabilities via dbt, incremental sync features, and AI-powered functionality create sophisticated workflows that leverage both platforms' strengths.

Airbyte's data residency controls ensure compliance with data sovereignty requirements when synchronizing between Redis and Elasticsearch across different geographical regions. Field-level masking and comprehensive audit trails provide the governance capabilities needed for regulated environments.

Key Airbyte Capabilities

Airbyte's security features include end-to-end encryption, role-based access control, and integration with enterprise identity management systems. The platform supports SOC 2, GDPR, and HIPAA compliance requirements, making it suitable for organizations with strict governance needs.

Key Considerations for Your Redis vs Elasticsearch Decision

Redis excels at ultra-fast, in-memory operations, while Elasticsearch shines in full-text search, analytics, and complex queries across distributed data. Choosing between them means weighing data model needs, consistency, performance, complexity, and cost. Redis is ideal for low-latency caching and real-time workloads, while Elasticsearch powers sophisticated search and analytics.

Recent changes also shape decisions: Redis's licensing shift impacts vendor relationships, and Elasticsearch's advances in vector search and storage optimization expand AI use cases. Often, the best path isn't choosing one over the other but combining both. A hybrid architecture lets Redis handle speed-sensitive operations while Elasticsearch drives deep data exploration.

Integration platforms like Airbyte make this possible by syncing data across systems, preserving governance, and enabling flexible, high-performance architectures tailored to diverse needs.

Frequently Asked Questions

1. What are the main differences between Redis and Elasticsearch?

Redis is an in-memory key-value store optimized for ultra-fast operations like caching, session management, and message queues. Elasticsearch is a distributed search and analytics engine designed for full-text search, aggregations, and advanced queries across structured and unstructured data. Redis prioritizes low latency, while Elasticsearch prioritizes query sophistication and scalability.

2. Can Redis replace Elasticsearch for search use cases?

Redis (even with RediSearch) can handle basic search, but it cannot match Elasticsearch for full-text search, complex queries, relevance scoring, or large-scale analytics. Redis is better as a complementary real-time cache or buffer alongside Elasticsearch.

3. How do licensing changes affect Redis adoption?

Redis Ltd.'s move to RSALv2 and SSPLv1 licenses in 2024 introduced restrictions for cloud service providers, prompting many enterprises to consider alternatives like Valkey. While Redis Enterprise still offers advanced proprietary features, organizations must weigh licensing uncertainty and support models when planning long-term adoption strategies.

4. When should organizations use both Redis and Elasticsearch together?

Organizations often pair Redis and Elasticsearch when they need real-time responsiveness plus advanced search. Redis handles caching, session management, or live counters, while Elasticsearch manages indexing and full-text queries. Syncing both ensures fast, scalable, and search-optimized performance.
