SAP Data Integration: Moving 10TB+ Logs to Cloud Without Network Bottlenecks


✨ AI Generated Summary

Your SAP environment generates massive daily log volumes (10TB+), making traditional batch ETL inefficient due to high latency, network strain, and incomplete data capture. Change Data Capture (CDC) replication, especially via hybrid architectures, streams only incremental changes in near real-time, reducing network load and preserving system performance.

- Hybrid architecture separates cloud-based orchestration (control plane) from local data extraction (data plane), ensuring compliance with data residency and privacy regulations.

- Airbyte Flex simplifies SAP integration by decoding complex SAP tables locally, enabling elastic scaling, secure outbound connections, and centralized monitoring without exposing sensitive data.

- Best practices include partitioning log streams, automating schema evolution, real-time validation, and regional scaling to maintain performance during peak loads.

- Compliance is ensured through end-to-end encryption, immutable audit logs, credential management, and regional data isolation aligned with GDPR, DORA, and APRA CPS 234.

Your SAP instance generates tens of terabytes of logs daily from ECC, S/4HANA, or BW. Moving that data to a cloud lake shouldn't mean saturating your network or missing batch windows.

Traditional ETL tools struggle with 10TB+ payloads. SAP's pool and cluster tables store records in a packed, encoded form that only the SAP application layer (the ABAP Dictionary) can interpret, so they must be decoded before export. Standard interfaces like IDoc or OData can drop records or throttle throughput when volumes spike. Your dashboards go stale and SLAs slip whenever real-time expectations meet nightly schedules.

Modern analytics and AI stacks expect continuous SAP CDC replication delivered through hybrid architecture. Without it, your logs remain locked inside the ERP while business decisions wait.

What Is SAP Data Integration?

SAP data integration connects the data locked inside ECC, S/4HANA, or BW with the systems where you analyze, audit, or operationalize it. When this connection works, your dashboards stay current, your compliance reports reconcile automatically, and service-level agreements aren't jeopardized by overnight delays.

SAP data often includes personal and financial information. You need every sync to respect retention rules and regional data residency. Teams report duplicated master data, missed cut-off times, and audit findings that slow month-end close when traditional export jobs fall behind massive log volumes.

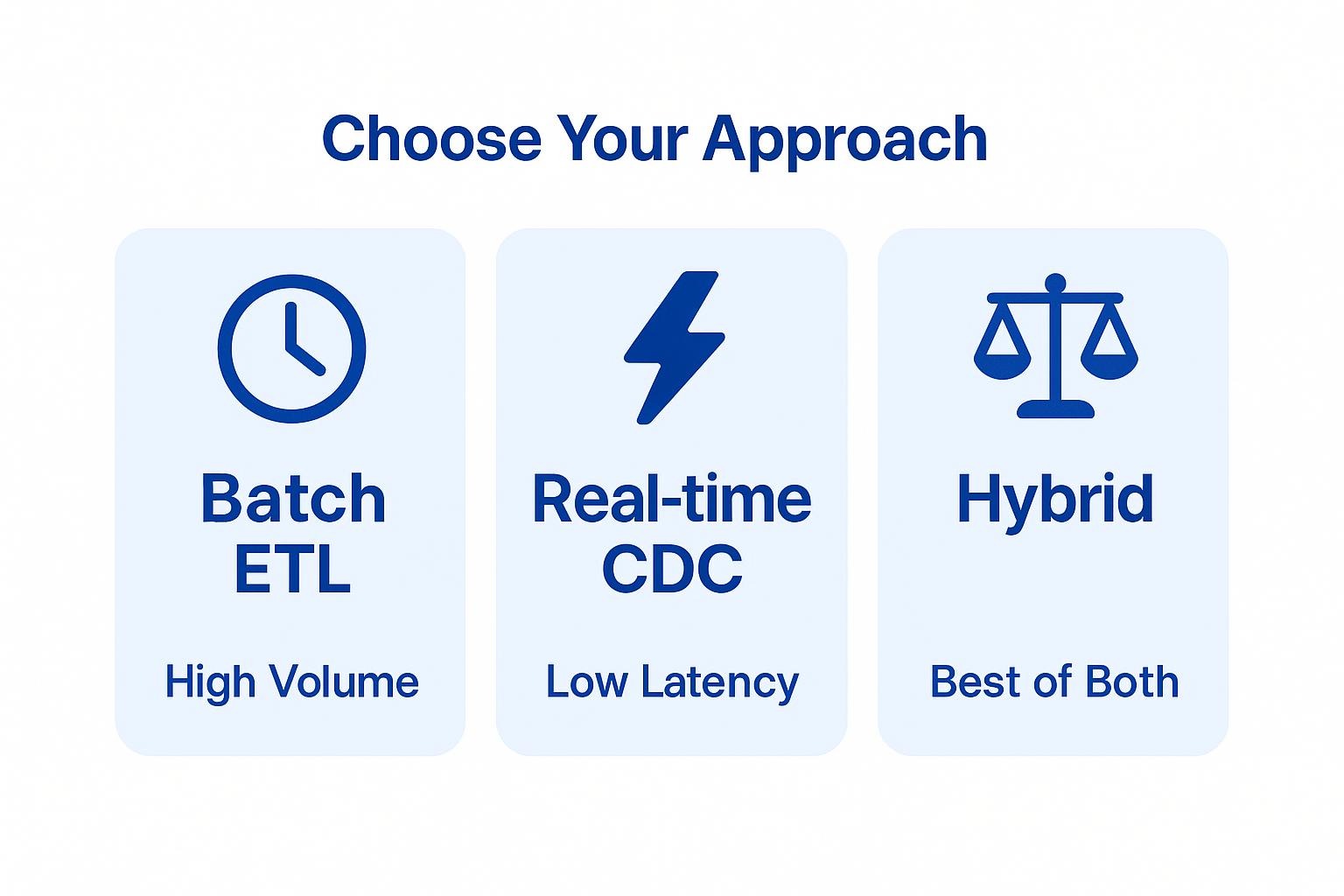

Three distinct approaches handle data movement, each with its own trade-offs.

For enterprises moving terabytes daily, Change Data Capture trims data transfer to deltas and scales horizontally. Batch tools struggle to match this when tables change every few seconds.

Integrated data keeps finance closing on schedule, supply chains adjusting in real time, and auditors satisfied without forcing you to trade control or compliance for speed.

How Does SAP CDC Replication Handle 10TB+ of Log Processing Daily?

Change Data Capture keeps your SAP pipelines lean by moving only the rows that changed since the last sync. When you're processing more than 10 TB of application and audit logs daily, the difference between moving everything and moving only deltas is the difference between minutes of lag and hours of it.
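A quick back-of-the-envelope comparison makes the gap concrete. The sketch below uses purely hypothetical inputs (120 TB of retained log tables, 8% of which change per day). It also ignores CDC's per-change metadata overhead and any compression, so treat the result as an illustration rather than a benchmark.

```python
def daily_transfer_tb(table_tb: float, daily_change_ratio: float) -> tuple[float, float]:
    """Compare data shipped per day by a nightly full reload and by CDC.

    table_tb: total size of the replicated tables, in TB (hypothetical input)
    daily_change_ratio: share of that data inserted, updated, or deleted per day
    """
    if not 0 < daily_change_ratio <= 1:
        raise ValueError("daily_change_ratio must be in (0, 1]")
    full_reload = table_tb                  # nightly job re-reads and ships everything
    cdc = table_tb * daily_change_ratio     # CDC ships only the changed rows
    return full_reload, cdc

# Hypothetical inputs: 120 TB of retained log tables, 8% of which change per day.
full, cdc = daily_transfer_tb(table_tb=120.0, daily_change_ratio=0.08)
print(f"full reload: {full:.1f} TB/day, CDC: {cdc:.1f} TB/day ({full / cdc:.1f}x less)")
```

With these assumptions, CDC ships 9.6 TB instead of 120 TB, about 12.5 times less. The lower the daily change ratio, the wider the gap.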

SAP offers three primary CDC mechanisms. SAP Landscape Transformation (SLT) places database triggers on source tables that record each change in logging tables, which SLT then reads and replicates. Operational Data Provisioning (ODP) streams changes through the Operational Delta Queue (ODQ). HANA Log Reader reads database transaction logs directly, without adding triggers to source tables. Each method delivers an ordered feed of inserts, updates, and deletes that downstream systems can consume in near real time.
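Whatever the mechanism, the consumer ends up replaying an ordered change feed. The minimal sketch below assumes a simplified, hypothetical event shape (`ChangeEvent` with a sequence number, operation, key, and full row image). SLT, ODP, and log-reader feeds each have their own formats, so this only illustrates why ordering matters.

```python
from dataclasses import dataclass
from typing import Any, Literal, Optional

@dataclass(frozen=True)
class ChangeEvent:
    # Hypothetical, simplified event shape; SLT, ODP, and log readers each use their own formats.
    seq: int                                  # position in the source change log
    op: Literal["insert", "update", "delete"]
    key: str                                  # primary key of the affected row
    row: Optional[dict[str, Any]] = None      # full after-image (None for deletes)

def apply_changes(target: dict[str, dict[str, Any]],
                  events: list[ChangeEvent]) -> dict[str, dict[str, Any]]:
    """Replay a change feed onto a key -> row replica, in source order."""
    for event in sorted(events, key=lambda e: e.seq):  # later changes win
        if event.op == "delete":
            target.pop(event.key, None)
        else:
            # Inserts and updates both become upserts of the full row image.
            target[event.key] = dict(event.row or {})
    return target

replica = apply_changes({}, [
    ChangeEvent(0, "insert", "4712", {"status": "open"}),
    ChangeEvent(1, "insert", "4711", {"status": "open"}),
    ChangeEvent(3, "delete", "4712"),
    ChangeEvent(2, "update", "4711", {"status": "closed"}),
])
print(replica)  # {'4711': {'status': 'closed'}}
```

If these events were applied in arrival order, the update to 4711 would land after the delete of 4712. That happens to be harmless here, but the same reordering on a single key would leave the replica with stale data.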

A typical large-scale design includes:

- Event-driven extraction paired with parallel buffers running in a regional data plane

- Workers that split high-volume tables by primary-key ranges

- Compression of each batch before pushing over outbound-only HTTPS to your cloud data lake

- Independent shards that let you add processing nodes when log volume spikes instead of resizing your entire cluster
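Splitting a table by primary-key ranges is what lets each worker run an independent extraction query. Here is a minimal sketch that assumes integer keys spread roughly evenly. SAP keys are often character fields such as document numbers with leading zeros, and real tools may sample key values to balance skewed tables. The function name is hypothetical.

```python
def split_key_range(low: int, high: int, shards: int) -> list[tuple[int, int]]:
    """Split the inclusive integer key range [low, high] into contiguous shards.

    Each (start, end) pair is inclusive, so one worker can extract it with a
    predicate like `WHERE key BETWEEN start AND end`.
    """
    if shards < 1 or high < low:
        raise ValueError("need shards >= 1 and high >= low")
    total = high - low + 1
    shards = min(shards, total)          # never create empty shards
    base, extra = divmod(total, shards)  # spread the remainder over the first shards
    ranges, start = [], low
    for i in range(shards):
        size = base + (1 if i < extra else 0)
        ranges.append((start, start + size - 1))
        start += size
    return ranges

print(split_key_range(1, 10, 3))  # [(1, 4), (5, 7), (8, 10)]
```

The shards don't overlap, so you can add a worker when volume spikes by re-splitting into more ranges. The rest of the cluster stays as it is.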

Compared with nightly ETL jobs, CDC cuts network traffic by orders of magnitude and eliminates reporting blind spots that appear between batch runs. Telecom operators we work with now replicate call-detail records within two minutes of creation, even during peak traffic periods, while their SAP database load stays flat.

How Can Enterprises Architect Hybrid SAP Data Integration Pipelines?

You keep control of complex SAP pipelines by separating orchestration from data movement. In a hybrid model, the cloud-managed control plane schedules and monitors jobs, while customer-hosted data planes extract, transform, and load records locally. This approach solves the bandwidth, privacy, and latency problems that plague traditional ETL stacks.

Instead of forcing everything through a single global pipeline, each data plane runs inside the same region, or even the same rack, as your SAP instance. Because only operational metadata flows back to the control plane, you avoid wide-area network overload and meet regional data residency requirements. You also get elastic scaling: when overnight order spikes hit, you add workers to the local plane without touching the control layer.
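The control-plane/data-plane split comes down to one contract. Payloads stay local, and only a small metadata report travels to the cloud. The sketch below is hypothetical and is not Airbyte's actual protocol. `run_sync_locally` stands in for a data-plane job, and the `destination` list stands in for a regional lake writer.

```python
import hashlib
import json
import time
from typing import Any, Iterable

def run_sync_locally(records: Iterable[dict[str, Any]], destination: list[str]) -> dict[str, Any]:
    """Hypothetical data-plane job: move records locally, report only metadata.

    The returned dict is the only thing that would be sent to the control plane.
    """
    started = time.monotonic()
    digest = hashlib.sha256()
    count = 0
    for record in records:
        line = json.dumps(record, sort_keys=True)
        destination.append(line)          # payload stays inside the data plane
        digest.update(line.encode())      # running checksum for later reconciliation
        count += 1
    return {
        "records": count,
        "checksum": digest.hexdigest(),
        "duration_s": round(time.monotonic() - started, 3),
    }

lake: list[str] = []
report = run_sync_locally([{"id": 1, "msg": "login"}, {"id": 2, "msg": "logout"}], lake)
print(sorted(report))  # ['checksum', 'duration_s', 'records']
```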

This split design solves the core integration problems you face daily. Heavy ABAP tables stream in parallel within the data plane, so production systems stay responsive. Proprietary pool and cluster tables get decoded close to source, limiting raw data exposure.

Deployment patterns vary based on your sovereignty needs. Multinationals often run a control plane in a neutral cloud region and spin up data planes per continent to satisfy GDPR or APRA CPS 234 requirements. Highly regulated banks flip that approach: they host both control and data planes on-premises, yet still get single-pane monitoring from the architecture.
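A per-jurisdiction topology can be expressed as a simple routing rule that never falls back to another region. The sketch below is illustrative only. The region codes, data-plane names, and SAP system IDs are all hypothetical.

```python
# Hypothetical topology: one shared control plane, one data plane per jurisdiction.
DATA_PLANES: dict[str, str] = {
    "EU": "dp-eu-frankfurt",
    "AU": "dp-au-sydney",
    "US": "dp-us-virginia",
}

# Hypothetical inventory of SAP systems and the jurisdiction their data belongs to.
SAP_SYSTEMS: dict[str, str] = {"ECC-DE01": "EU", "S4H-AU01": "AU", "BW-US01": "US"}

def data_plane_for(system_id: str) -> str:
    """Return the only data plane allowed to process this system's records.

    There is deliberately no fallback region: an unmapped system raises an
    error instead of silently routing data across a border.
    """
    region = SAP_SYSTEMS.get(system_id)
    if region is None or region not in DATA_PLANES:
        raise LookupError(f"no in-region data plane for {system_id!r}")
    return DATA_PLANES[region]

print(data_plane_for("S4H-AU01"))  # dp-au-sydney
```

Failing closed matters here. A missing plane should stop the sync rather than let an EU system's records quietly flow through a US worker.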

Whatever model you choose, the hybrid control-data plane delivers predictable throughput for terabytes of logs while keeping auditors and your network team satisfied.

How Does Airbyte Flex Simplify SAP Data Integration?

Airbyte Flex uses a hybrid control plane and data plane architecture that keeps orchestration in the cloud while running extraction locally.

Key capabilities include:

- Cloud-managed control plane that schedules jobs and tracks metrics without hosting burden

- Customer-managed data planes where extraction and loading happen inside your network

- Data sovereignty with SAP records that never leave your environment

- Outbound-only connections that keep firewall rules simple with no exposed inbound ports

- Regional parallel workers that compress and ship only incremental changes
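Outbound-only connectivity usually means the data plane pulls work instead of receiving pushed commands. The sketch below shows that general pattern with an in-memory stub standing in for the control plane. It is not Airbyte's protocol. `ControlPlaneStub`, `Job`, and `worker_loop` are hypothetical, and a production worker would keep polling with a sleep interval instead of exiting when the queue is empty.

```python
import queue
from dataclasses import dataclass
from typing import Callable, Optional

@dataclass
class Job:
    job_id: str
    stream: str

class ControlPlaneStub:
    """Hypothetical stand-in for a cloud control plane reached over outbound HTTPS."""
    def __init__(self, jobs: list[Job]) -> None:
        self._pending: "queue.Queue[Job]" = queue.Queue()
        for job in jobs:
            self._pending.put(job)
        self.reports: list[dict] = []

    def poll(self) -> Optional[Job]:          # would be an outbound GET in practice
        try:
            return self._pending.get_nowait()
        except queue.Empty:
            return None

    def report(self, status: dict) -> None:   # would be an outbound POST in practice
        self.reports.append(status)

def worker_loop(control: ControlPlaneStub, run: Callable[[Job], int]) -> None:
    """Data-plane worker: it always dials out, so no inbound port is opened."""
    while (job := control.poll()) is not None:
        rows = run(job)                       # extraction happens locally
        control.report({"job_id": job.job_id, "rows": rows})

cp = ControlPlaneStub([Job("j1", "BKPF"), Job("j2", "VBAK")])
worker_loop(cp, run=lambda job: 100)
print(cp.reports)  # [{'job_id': 'j1', 'rows': 100}, {'job_id': 'j2', 'rows': 100}]
```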

Financial institutions replicate high-volume transaction logs while maintaining strict audit control. Manufacturing companies process terabytes of operational logs daily without crossing compliance boundaries.

Airbyte Flex offers 600+ connectors, and the same connectors run at the same quality across every deployment model. You orchestrate everything from one dashboard while keeping encryption keys, credentials, and raw logs entirely within your environment.

How Can Enterprises Improve Performance for Large-Scale SAP Log Processing?

You already know that 10 TB of daily logs will overwhelm any pipeline that treats them like ordinary batch files. The key is to move only what changes and to process those changes in parallel.

1. Partition Log Streams by Logical Boundaries

Modern SAP log extraction tools use parallelism and partitioning to maintain performance during high-volume periods. Split streams along logical boundaries, such as one worker per plant or customer segment, to maximize throughput, especially during end-of-month spikes.
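Here is a minimal sketch of per-plant partitioning. It groups records by the SAP plant field `WERKS` and hands each group to a thread pool. `ship_partition` is a hypothetical placeholder for the real compress-and-upload step.

```python
from collections import defaultdict
from concurrent.futures import ThreadPoolExecutor
from typing import Any

Row = dict[str, Any]

def partition_by(records: list[Row], field: str) -> dict[str, list[Row]]:
    """Group log records by a logical boundary, e.g. plant (SAP field WERKS)."""
    partitions: dict[str, list[Row]] = defaultdict(list)
    for record in records:
        partitions[record[field]].append(record)
    return dict(partitions)

def ship_partition(key: str, rows: list[Row]) -> tuple[str, int]:
    # Hypothetical placeholder for compress-and-upload; reports rows handled.
    return key, len(rows)

def process_in_parallel(records: list[Row], field: str = "WERKS",
                        max_workers: int = 8) -> dict[str, int]:
    partitions = partition_by(records, field)
    # One task per partition; the pool caps how many run at once.
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return dict(pool.map(ship_partition, partitions.keys(), partitions.values()))

logs = [{"WERKS": "1000", "msg": "a"}, {"WERKS": "2000", "msg": "b"}, {"WERKS": "1000", "msg": "c"}]
print(process_in_parallel(logs))  # {'1000': 2, '2000': 1}
```

Threads suit this step because shipping is mostly I/O-bound. A single very busy plant still lands on one worker, so heavily skewed partitions may need a secondary split, for example by key range.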

2. Use CDC Replication to Cut Network Traffic

CDC replication cuts network traffic by an order of magnitude or more compared with nightly full loads, because you transmit only inserts, updates, and deletes. Combined with lightweight compression, this sharply reduces data transfer. More importantly, delta loading avoids heavy table locks on your ECC or S/4HANA systems, preserving production performance during business hours.
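For the compression step, one simple option is to serialize each delta batch as JSON Lines and gzip it before shipping. This is a minimal standard-library sketch with hypothetical function names. Faster codecs such as zstd or LZ4 are common alternatives but require third-party packages.

```python
import gzip
import json
from typing import Any

def encode_delta_batch(changes: list[dict[str, Any]]) -> bytes:
    """Serialize a batch of change rows as JSON Lines and gzip it for shipping."""
    payload = "\n".join(json.dumps(c, sort_keys=True) for c in changes).encode("utf-8")
    return gzip.compress(payload)

def decode_delta_batch(blob: bytes) -> list[dict[str, Any]]:
    """Reverse of encode_delta_batch, as the landing side would run it."""
    text = gzip.decompress(blob).decode("utf-8")
    return [json.loads(line) for line in text.splitlines() if line]

batch = [{"op": "update", "key": str(i), "status": "posted"} for i in range(1000)]
blob = encode_delta_batch(batch)
raw_size = len("\n".join(json.dumps(c, sort_keys=True) for c in batch).encode("utf-8"))
print(raw_size, len(blob))  # compressed size depends heavily on how repetitive the rows are
assert decode_delta_batch(blob) == batch
```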

3. Scale Data Planes Elastically by Region

A hybrid control plane can spin up extra data-plane workers in the region where traffic spikes occur, then scale them back when volumes normalize. Because traffic flows outbound-only, your firewall rules stay unchanged while you handle variable workloads efficiently.
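A regional scaling decision can start from a simple rule: run enough workers to drain the current backlog within a target window, clamped to a floor and a ceiling. The sketch below is hypothetical. All throughput numbers are assumptions, and the default ceiling of 40 merely echoes the retailer example later in this section.

```python
import math

def workers_needed(backlog_rows: int, rows_per_worker_per_min: int,
                   target_minutes: float, min_workers: int = 2,
                   max_workers: int = 40) -> int:
    """Hypothetical autoscaling rule: enough workers to drain the backlog in time."""
    if rows_per_worker_per_min <= 0 or target_minutes <= 0:
        raise ValueError("throughput and target must be positive")
    capacity_per_worker = rows_per_worker_per_min * target_minutes
    needed = math.ceil(backlog_rows / capacity_per_worker)
    return max(min_workers, min(max_workers, needed))

print(workers_needed(1_200_000, 50_000, target_minutes=2))   # 12
print(workers_needed(10_000, 50_000, target_minutes=2))      # 2 (floor)
print(workers_needed(10_000_000, 50_000, target_minutes=2))  # 40 (ceiling)
```

Production autoscalers typically add cooldown periods or hysteresis so that a brief spike doesn't cause workers to flap up and down.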

4. Automate Schema Evolution Tracking

Schema evolution tracking prevents weekend surprises when new columns or table splits appear. Automate this process so structural changes are published alongside data, keeping downstream models compatible and rollbacks painless.
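At its core, schema evolution tracking is a diff between two schema snapshots that gets published with the data. In this sketch, `diff_schema` and `is_backward_compatible` are hypothetical names, and the column types are illustrative strings rather than a real SAP metadata format.

```python
Schema = dict[str, str]   # column name -> type, e.g. {"MATNR": "CHAR(40)"}

def diff_schema(old: Schema, new: Schema) -> dict[str, list[str]]:
    """Report structural changes between two snapshots of a table schema."""
    return {
        "added": sorted(new.keys() - old.keys()),
        "removed": sorted(old.keys() - new.keys()),
        "retyped": sorted(c for c in old.keys() & new.keys() if old[c] != new[c]),
    }

def is_backward_compatible(changes: dict[str, list[str]]) -> bool:
    # Additive changes are usually safe for downstream models; drops and retypes are not.
    return not changes["removed"] and not changes["retyped"]

old = {"MATNR": "CHAR(40)", "MENGE": "QUAN(13,3)"}
new = {"MATNR": "CHAR(40)", "MENGE": "QUAN(15,3)", "ZZREGION": "CHAR(4)"}
changes = diff_schema(old, new)
print(changes)  # {'added': ['ZZREGION'], 'removed': [], 'retyped': ['MENGE']}
print(is_backward_compatible(changes))  # False
```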

5. Implement Real-Time Validation

Real-time validation catches problems before they propagate. Insert counts, hash totals, and latency targets should be verified as data lands, not discovered in post-mortems. Continuous checks surface anomalies while you can still fix them.
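The three checks mentioned above (insert counts, hash totals, and latency) fit in a few lines. In this sketch, the hash total is an order-independent sum of per-row SHA-256 prefixes, and the 120-second default mirrors a two-minute SLA. Function names are hypothetical. A sum-based total works as a reconciliation check, but it does not provide tamper-proofing.

```python
import hashlib
import time
from typing import Any, Optional

Row = dict[str, Any]

def hash_total(rows: list[Row]) -> int:
    """Order-independent hash total: sum of per-row digests modulo 2**64."""
    total = 0
    for row in rows:
        canonical = repr(sorted(row.items())).encode("utf-8")
        total = (total + int.from_bytes(hashlib.sha256(canonical).digest()[:8], "big")) % 2**64
    return total

def validate_batch(source_rows: list[Row], landed_rows: list[Row], extracted_at: float,
                   max_latency_s: float = 120.0, now: Optional[float] = None) -> list[str]:
    """Return a list of problems; an empty list means the batch passed."""
    problems = []
    if len(source_rows) != len(landed_rows):
        problems.append(f"count mismatch: {len(source_rows)} != {len(landed_rows)}")
    if hash_total(source_rows) != hash_total(landed_rows):
        problems.append("hash total mismatch")
    latency = (time.time() if now is None else now) - extracted_at
    if latency > max_latency_s:
        problems.append(f"latency {latency:.0f}s exceeds {max_latency_s:.0f}s")
    return problems

src = [{"id": 1, "qty": 5}, {"id": 2, "qty": 7}]
landed = [{"id": 2, "qty": 7}, {"id": 1, "qty": 5}]   # same rows, different order
print(validate_batch(src, landed, extracted_at=1000.0, now=1060.0))  # []
```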

A global retailer partitions inventory tables by warehouse ID, compresses each delta batch, and balances up to 40 data-plane workers during holiday peaks. This keeps stock-level dashboards within a two-minute SLA across continents.

What Are the Compliance and Security Considerations?

When processing sensitive SAP data across regions and cloud environments, regulatory compliance becomes paramount. Key frameworks like GDPR in the EU, DORA for financial services, and APRA CPS 234 in Australia mandate stringent controls that hybrid architectures must address without compromising performance.

Core security measures include:

- End-to-end data encryption protecting information both in transit and at rest to minimize unauthorized access risks

- Immutable audit logs enabling thorough tracking of data movement for operational integrity and regulatory audits

- External credential management storing secrets in customer-controlled vaults rather than embedding them in pipelines

- Regional data plane isolation ensuring sensitive data remains within designated, secure environments
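One common way to make an audit trail tamper-evident is a hash chain, where each entry's hash covers the previous entry's hash. The sketch below shows that general technique with hypothetical function names. A chain can detect edits, deletions, and reordering of entries inside the log. Truncation at the end, however, is only detectable if you also store the latest hash somewhere separate, such as WORM (write once, read many) storage.

```python
import hashlib
import json
from typing import Any

GENESIS = "0" * 64

def append_entry(log: list[dict[str, Any]], event: dict[str, Any]) -> dict[str, Any]:
    """Append an audit event whose hash covers the previous entry's hash."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    body = json.dumps({"prev": prev_hash, "event": event}, sort_keys=True)
    entry = {"prev": prev_hash, "event": event, "hash": hashlib.sha256(body.encode()).hexdigest()}
    log.append(entry)
    return entry

def verify_chain(log: list[dict[str, Any]]) -> bool:
    """Recompute every hash; an edited, reordered, or deleted interior entry breaks the chain."""
    prev_hash = GENESIS
    for entry in log:
        body = json.dumps({"prev": prev_hash, "event": entry["event"]}, sort_keys=True)
        if entry["prev"] != prev_hash or entry["hash"] != hashlib.sha256(body.encode()).hexdigest():
            return False
        prev_hash = entry["hash"]
    return True

audit: list[dict[str, Any]] = []
append_entry(audit, {"action": "sync", "table": "BKPF", "rows": 1200})
append_entry(audit, {"action": "sync", "table": "BSEG", "rows": 5400})
print(verify_chain(audit))  # True
audit[0]["event"]["rows"] = 1   # simulate tampering with the first entry
print(verify_chain(audit))  # False
```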

An EU-based manufacturer demonstrates effective GDPR compliance using regional data planes that keep customer information within necessary jurisdictions while maintaining real-time analytics capabilities. This approach satisfies data residency requirements without sacrificing operational efficiency.

Understanding the shared responsibility model becomes crucial in hybrid deployments. While service providers secure the infrastructure, you must manage your data and applications securely. By combining encryption, detailed logging, and regional data strategies, teams can navigate compliance challenges while maintaining the performance needed for terabyte-scale processing.

SAP Data Integration Requires Hybrid Architecture

Processing massive volumes of SAP logs daily requires architectures that keep orchestration in the cloud while running extraction locally. Airbyte Flex delivers hybrid architecture for 24x7 operations, enabling SAP CDC replication without table locks while feeding AI models for predictive maintenance. Talk to Sales for enterprise-scale industrial AI integration that processes 10TB+ daily without breaking WAN links.

Frequently Asked Questions

What is the difference between SAP CDC and traditional batch ETL for large log volumes?

CDC replication streams only changed rows continuously, while batch ETL extracts entire tables on a schedule. For 10TB+ daily log volumes, CDC cuts network traffic by orders of magnitude and eliminates the hours-long reporting gaps that appear between batch runs. CDC also avoids table locks on production SAP systems during business hours.

How does hybrid architecture address SAP data sovereignty requirements?

Hybrid architecture separates orchestration (control plane) from data movement (data plane). Your SAP records stay in customer-managed data planes within your network or designated region, while only lightweight metadata flows to the cloud control plane for monitoring. This satisfies GDPR, DORA, and other regional residency requirements without sacrificing centralized management.

Can Airbyte Flex handle SAP pool and cluster tables?

Yes. Airbyte Flex decodes SAP pool and cluster tables close to source within your data plane, where ABAP logic is accessible. The decoded data then flows through your hybrid pipeline without exposing raw proprietary structures across wide-area networks.

What happens when SAP log volume spikes during month-end or peak periods?

Airbyte Flex's regional data planes scale elastically. You can spin up additional workers in the affected region when log volume spikes, then scale them back when volumes normalize. Traffic flows outbound-only, so your firewall rules stay unchanged while you handle variable workloads efficiently.
