Snowflake Data Integration: Methods, Tools, and Best Practices

✨ AI Generated Summary

Snowflake has evolved into a comprehensive AI Data Cloud platform with unique architecture separating storage and compute, enabling scalable and flexible data integration. Key integration methods include batch loading, Snowpipe for near real-time ingestion, and third-party tools like Airbyte offering extensive connectors and orchestration support.

- Advanced features: Cortex AI for intelligent processing, OpenFlow for multimodal data, and native AI-powered query optimization.

- Best practices: optimize staging, flexible schemas, robust security, right-sizing resources, and proactive monitoring.

- Airbyte enhances Snowflake integration with a broad connector ecosystem, flexible deployment, enterprise security, and Python integration for data science workflows.

- Modern integration supports real-time streaming, secure data sharing, reverse ETL, CDC, and autonomous workflow management for efficient, governed, and scalable data operations.

Snowflake has transformed from a simple cloud data warehouse into a comprehensive AI Data Cloud platform, but many organizations still struggle with outdated integration approaches that limit their ability to leverage modern data capabilities.

Recent developments including Snowflake OpenFlow for multimodal data ingestion, Cortex AI for intelligent processing, and enhanced Apache Iceberg support for open lakehouse architectures represent fundamental shifts in how data professionals should approach integration strategies.

This guide explores both established and emerging Snowflake data integration methodologies and provides practical insights for organizations seeking to optimize their data workflows while maintaining enterprise-grade security and governance standards.

What Makes Snowflake's Architecture Unique for Data Integration?

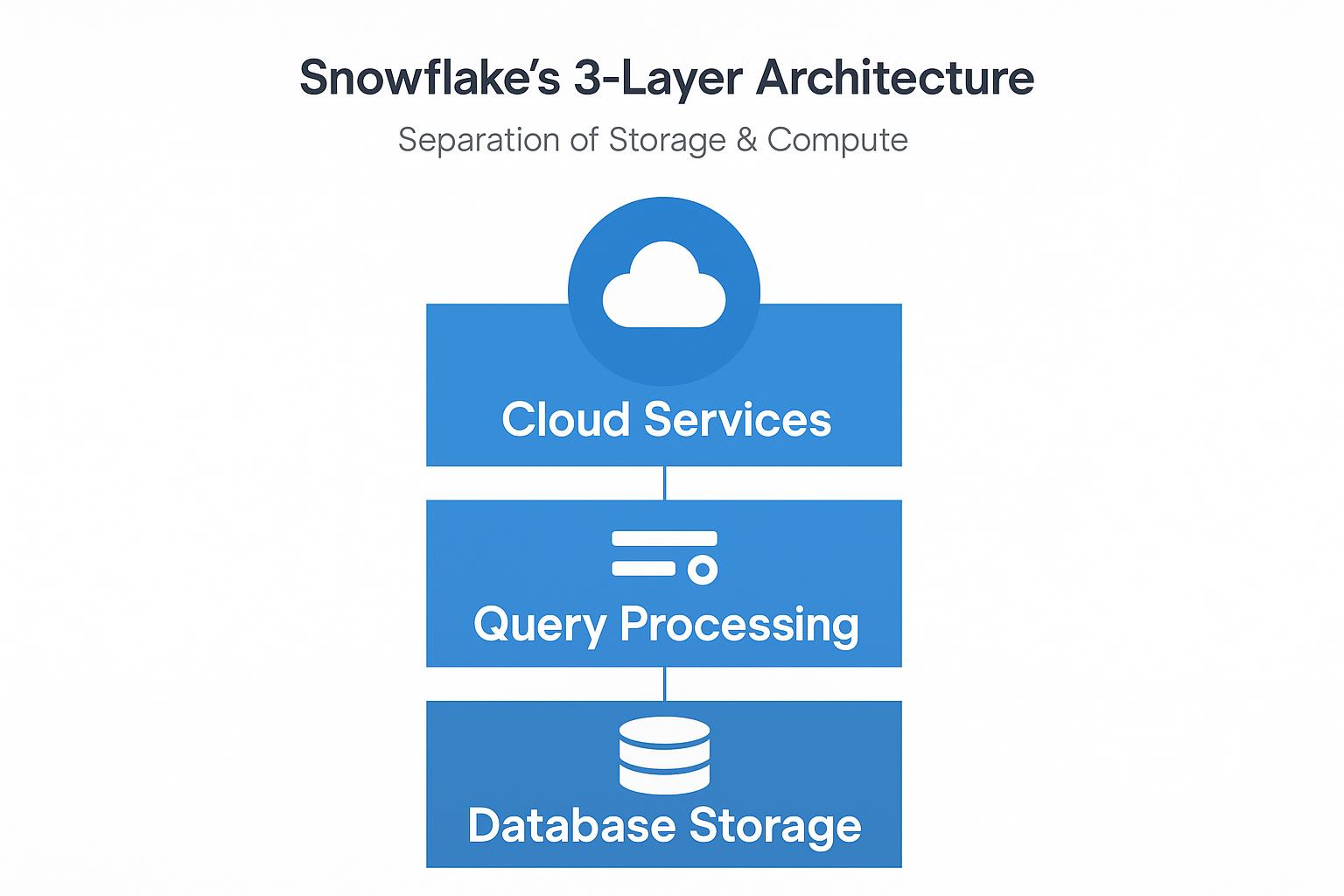

Snowflake's architecture fundamentally differs from traditional data warehousing systems through its separation of storage and compute resources. This creates unprecedented flexibility for data integration workflows.

The platform uses a three-layer design that maximizes integration efficiency. The database storage layer automatically handles compression, partitioning, and metadata management without manual intervention.

The query processing layer provides virtual warehouses that deliver isolated compute environments. These can be sized and suspended or resumed independently based on workload demands.

The cloud services layer centralizes metadata, security, and transaction coordination across all operations. This architecture lets data engineers scale resources precisely while avoiding workload contention and enforcing consistent governance policies across every integration pipeline.

How Do Different Data Integration Methods Work with Snowflake?

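Snowflake supports three broad integration approaches: batch loading with COPY commands, continuous ingestion with Snowpipe, and third-party platforms such as Airbyte. Each serves different use cases and data volume requirements. Batch loading works best for scheduled, high-volume data transfers where near real-time processing is not critical.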

Snowpipe excels at continuous ingestion scenarios where data arrives incrementally throughout the day. Third-party platforms like Airbyte provide the broadest connector ecosystem with minimal custom development.

What Are the Essential Steps for Loading Data into Snowflake?

1. Prepare and Stage Data

Start by conforming data to a supported format: CSV, JSON, Parquet, Avro, ORC, or XML. Size files so they can be loaded in parallel. Snowflake's guidance has generally been compressed files of roughly 100 to 250 MB, a range that balances transfer efficiency against per-file processing overhead.

Structure your data to match Snowflake's columnar storage model. This preparation step significantly impacts downstream query performance and storage costs.
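As a rough illustration of the file-sizing step, the sketch below groups records into chunks bounded by a byte budget before they are written to a stage. The function name `chunk_lines` and the tiny 40-byte budget are assumptions chosen for demonstration only; a real pipeline would use a far larger budget and measure compressed size.

```python
from typing import Iterable, Iterator, List


def chunk_lines(lines: Iterable[str], max_bytes: int) -> Iterator[List[str]]:
    """Group lines into chunks whose UTF-8 size stays at or under max_bytes.

    A single line larger than max_bytes is emitted as its own chunk.
    """
    if max_bytes <= 0:
        raise ValueError("max_bytes must be positive")
    chunk: List[str] = []
    size = 0
    for line in lines:
        line_size = len(line.encode("utf-8"))
        # Start a new chunk when adding this line would exceed the budget.
        if chunk and size + line_size > max_bytes:
            yield chunk
            chunk, size = [], 0
        chunk.append(line)
        size += line_size
    if chunk:
        yield chunk


# Ten 13-byte rows with a 40-byte budget: three rows fit per chunk.
rows = [f"{i},customer_{i}\n" for i in range(10)]
chunks = list(chunk_lines(rows, max_bytes=40))
print([len(c) for c in chunks])  # [3, 3, 3, 1]
```

Each chunk could then be written to its own file, which lets a load run across files in parallel.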

2. Configure a Stage

Set up either internal Snowflake-managed stages or external stages using Amazon S3, Azure Blob Storage, or Google Cloud Storage. External stages often provide better integration with existing cloud infrastructure and data lake architectures.

Configure appropriate access permissions and network connectivity between your data sources and the chosen stage location. Proper staging configuration prevents security vulnerabilities and access issues during production loads.

3. Execute COPY Commands

Define file formats, column mapping, error thresholds, and validation rules before executing COPY operations. These parameters control how Snowflake interprets and loads your data while handling format inconsistencies.

Implement proper error handling and logging to capture load failures and data quality issues. This monitoring becomes critical for maintaining data integrity across all integration pipelines.
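To make the COPY parameters concrete, here is a minimal sketch that renders a COPY INTO statement as a string and rejects unexpected inputs before anything reaches Snowflake. The helper name `build_copy_sql` and the `raw.orders` and `orders_stage` identifiers are hypothetical, and the statement covers only a small subset of the available options.

```python
import re

# ON_ERROR values accepted here: CONTINUE, ABORT_STATEMENT, SKIP_FILE,
# SKIP_FILE_<num>, and SKIP_FILE_<num>%.
_ON_ERROR = re.compile(r"^(CONTINUE|ABORT_STATEMENT|SKIP_FILE(_\d+%?)?)$")
# Unquoted identifiers, optionally qualified as db.schema.name.
_IDENT = re.compile(r"^[A-Za-z_][A-Za-z0-9_$]*(\.[A-Za-z_][A-Za-z0-9_$]*){0,2}$")
_FILE_TYPES = {"CSV", "JSON", "PARQUET", "AVRO", "ORC", "XML"}


def build_copy_sql(table: str, stage: str, file_type: str = "CSV",
                   skip_header: int = 1,
                   on_error: str = "ABORT_STATEMENT") -> str:
    """Render a COPY INTO statement after validating every input."""
    for name in (table, stage):
        if not _IDENT.match(name):
            raise ValueError(f"unexpected identifier: {name!r}")
    if file_type not in _FILE_TYPES:
        raise ValueError(f"unsupported file type: {file_type!r}")
    if not _ON_ERROR.match(on_error):
        raise ValueError(f"unsupported ON_ERROR value: {on_error!r}")
    file_format = f"TYPE = '{file_type}'"
    if file_type == "CSV":
        file_format += f" SKIP_HEADER = {int(skip_header)}"
    return (
        f"COPY INTO {table}\n"
        f"FROM @{stage}\n"
        f"FILE_FORMAT = ({file_format})\n"
        f"ON_ERROR = '{on_error}'"
    )


# Skip any file in which more than 5% of rows fail to parse.
copy_sql = build_copy_sql("raw.orders", "orders_stage", on_error="SKIP_FILE_5%")
print(copy_sql)
```

Validating identifiers and option values up front is one way to keep generated statements predictable; the rendered string would still be executed through whichever Snowflake client you already use.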

4. Automate with Snowpipe

Create pipe objects that monitor your stages and automatically load new files as they arrive. This automation eliminates manual intervention while ensuring consistent data availability for downstream applications.

Snowpipe runs on Snowflake-managed serverless compute rather than a virtual warehouse you size yourself, so cost and latency are shaped mainly by how files are sized and how often they arrive. Align file delivery with your business requirements for data freshness and processing priorities.
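A pipe definition wraps a COPY statement. The sketch below renders one as a string; `build_pipe_sql`, `orders_pipe`, `raw.orders`, and `orders_stage` are hypothetical names, and `AUTO_INGEST = TRUE` assumes an external stage whose cloud storage event notifications are already configured.

```python
import re

# Unquoted identifiers, optionally qualified as db.schema.name.
_IDENT = re.compile(r"^[A-Za-z_][A-Za-z0-9_$]*(\.[A-Za-z_][A-Za-z0-9_$]*){0,2}$")
_FILE_TYPES = {"CSV", "JSON", "PARQUET", "AVRO", "ORC", "XML"}


def build_pipe_sql(pipe: str, table: str, stage: str,
                   file_type: str = "CSV") -> str:
    """Render a CREATE PIPE statement that wraps a COPY INTO command."""
    for name in (pipe, table, stage):
        if not _IDENT.match(name):
            raise ValueError(f"unexpected identifier: {name!r}")
    if file_type not in _FILE_TYPES:
        raise ValueError(f"unsupported file type: {file_type!r}")
    return (
        f"CREATE PIPE {pipe}\n"
        "  AUTO_INGEST = TRUE\n"
        "AS\n"
        f"COPY INTO {table}\n"
        f"FROM @{stage}\n"
        f"FILE_FORMAT = (TYPE = '{file_type}')"
    )


pipe_sql = build_pipe_sql("orders_pipe", "raw.orders", "orders_stage")
print(pipe_sql)
```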

5. Monitor and Optimize Performance

Use system views and query history to analyze load performance and identify optimization opportunities. Regular monitoring helps you adjust warehouse sizing, clustering strategies, and file organization approaches.

Establish alerting mechanisms for load failures and performance degradation. Proactive monitoring prevents data availability issues that could impact business operations.
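One simple way to turn load history into an alert is to compute a failure rate and compare it with a threshold. In the sketch below, the record fields (`file_name`, `status`, `row_count`), the status labels, and the 5% threshold are all assumptions, loosely modeled on what a load-history query might return rather than on an exact Snowflake schema.

```python
from typing import Any, Dict, List


def summarize_loads(history: List[Dict[str, Any]],
                    max_failure_rate: float = 0.05) -> Dict[str, Any]:
    """Summarize load results and flag when too many files failed."""
    total = len(history)
    failed = [h["file_name"] for h in history if h["status"] != "LOADED"]
    rows_loaded = sum(h.get("row_count", 0) for h in history)
    failure_rate = len(failed) / total if total else 0.0
    return {
        "files": total,
        "rows_loaded": rows_loaded,
        "failed_files": failed,
        "failure_rate": failure_rate,
        "alert": failure_rate > max_failure_rate,
    }


# Hypothetical history rows for four staged files.
history = [
    {"file_name": "orders_001.csv", "status": "LOADED", "row_count": 1000},
    {"file_name": "orders_002.csv", "status": "LOADED", "row_count": 950},
    {"file_name": "orders_003.csv", "status": "LOAD_FAILED", "row_count": 0},
    {"file_name": "orders_004.csv", "status": "LOADED", "row_count": 1020},
]
summary = summarize_loads(history)
print(summary["failure_rate"], summary["alert"])  # 0.25 True
```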

What Best Practices Ensure Optimal Snowflake Data Integration Performance?

Optimize Staging Strategy and File Management

Focus on file sizing that balances transfer efficiency with processing overhead. Properly sized files reduce both load times and compute costs while improving overall system performance.

Design your staging approach to minimize data movement and storage costs. Efficient staging reduces the total cost of ownership for your Snowflake data integration infrastructure.

Design Flexible Schemas and Manage Evolution

Implement schema design patterns that accommodate future data structure changes without breaking existing integrations. Flexible schemas reduce maintenance overhead and development friction as business requirements evolve.

Establish version control and change management processes for schema modifications. Proper versioning prevents compatibility issues and enables rollback capabilities when needed.

Embed Comprehensive Security Controls

Implement role-based access control, encryption, data masking, and row-level security as integral parts of your integration architecture. Security cannot be an afterthought in modern data integration environments.

Establish audit trails and monitoring for all data access and modification activities. Comprehensive logging supports compliance requirements and security incident response procedures.

Right-Size Resources and Apply Intelligent Optimization

Configure warehouse sizes based on actual workload characteristics rather than assumptions. Regular performance analysis helps you balance processing speed with cost efficiency.
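The sizing trade-off is easier to reason about with a little arithmetic. The sketch below assumes the standard credit rates, which double with each warehouse size, and per-second billing with a 60-second minimum per start; the function name `estimate_credits` is hypothetical, and the figures should be checked against current Snowflake pricing before being used for budgeting.

```python
# Credits per hour for standard warehouses; each size doubles the previous one.
CREDITS_PER_HOUR = {
    "XSMALL": 1,
    "SMALL": 2,
    "MEDIUM": 4,
    "LARGE": 8,
    "XLARGE": 16,
}


def estimate_credits(size: str, runtime_seconds: float) -> float:
    """Estimate credits for one warehouse run, billed per second (60 s minimum)."""
    billed_seconds = max(runtime_seconds, 60)
    return CREDITS_PER_HOUR[size] * billed_seconds / 3600


# If a load takes 20 minutes on SMALL but 10 minutes on MEDIUM,
# the credit cost is the same and the data arrives sooner.
small = estimate_credits("SMALL", 20 * 60)
medium = estimate_credits("MEDIUM", 10 * 60)
print(round(small, 4), round(medium, 4))  # 0.6667 0.6667
```

Sizing up only pays off when the runtime actually shrinks in proportion, which is why measured workload data matters more than assumptions.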

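Define clustering keys on large tables that are frequently filtered on the same columns so that Automatic Clustering can keep them well organized. Because the service consumes credits, reserve it for tables where the pruning gains outweigh the maintenance cost. This trade-off matters more as data volumes grow.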

Establish Proactive Monitoring and Error Handling

Create automated monitoring systems that detect integration failures, performance degradation, and data quality issues before they impact business operations. Proactive monitoring reduces mean time to resolution for integration problems.

Implement automated error handling routines that can address common failure scenarios without manual intervention. Robust error handling improves system reliability and reduces operational overhead.
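A common building block for this kind of automated handling is a retry with exponential backoff around transient failures. The sketch below is generic Python, not a Snowflake or Airbyte API; the `retry` helper, its defaults, and the choice of which exceptions count as transient are assumptions.

```python
import time
from typing import Callable, List, Tuple, Type, TypeVar

T = TypeVar("T")


def retry(operation: Callable[[], T], attempts: int = 3,
          base_delay: float = 0.5,
          retry_on: Tuple[Type[BaseException], ...] = (ConnectionError, TimeoutError),
          sleep: Callable[[float], None] = time.sleep) -> T:
    """Run operation, retrying transient errors with exponential backoff."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(1, attempts + 1):
        try:
            return operation()
        except retry_on:
            if attempt == attempts:
                raise  # Out of attempts: surface the last error.
            sleep(base_delay * 2 ** (attempt - 1))
    raise AssertionError("unreachable")


# Demo: an operation that fails twice before succeeding.
calls = {"count": 0}


def flaky_load() -> str:
    calls["count"] += 1
    if calls["count"] < 3:
        raise ConnectionError("transient network error")
    return "loaded"


delays: List[float] = []
result = retry(flaky_load, sleep=delays.append)  # Record delays instead of sleeping.
print(result, delays)  # loaded [0.5, 1.0]
```

Errors outside `retry_on`, such as a malformed file, propagate immediately, since retrying them would only delay the alert.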

How Can You Troubleshoot Common Snowflake Data Integration Issues?

Diagnose Load Failures Systematically

Inspect COPY results for format mismatches, data type conflicts, and NOT NULL violations. Running COPY with VALIDATION_MODE previews errors without loading any data, while the VALIDATE table function and COPY_HISTORY report which records or files were rejected and why.

Implement data profiling and validation checks before attempting loads to catch formatting issues early. Proactive validation reduces load failures and improves overall data quality.

Address Performance Bottlenecks Methodically

Analyze query execution plans and warehouse utilization patterns to identify resource constraints. Performance bottlenecks often stem from inadequate resource allocation or inefficient query patterns.

Adjust warehouse sizing, clustering strategies, and query optimization based on actual performance metrics rather than theoretical guidelines. Real-world performance data provides the most accurate optimization guidance.

Manage Schema Drift Proactively

Implement automated change detection systems that identify schema modifications in source systems before they cause integration failures. Early detection prevents downstream application failures.

Establish version control processes for schema changes that coordinate updates across all dependent systems and applications. Coordinated schema management reduces integration complexity and failure rates.
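At its simplest, change detection is a comparison between the schema a pipeline expects and the schema it observes at the source. The sketch below shows that comparison over plain dictionaries; `diff_schema` and the column names and types are hypothetical, and a production system would read both schemas from metadata rather than hard-coding them.

```python
from typing import Dict, List


def diff_schema(expected: Dict[str, str],
                observed: Dict[str, str]) -> Dict[str, List[str]]:
    """Report columns that were added, removed, or changed type."""
    expected_cols, observed_cols = set(expected), set(observed)
    retyped = sorted(
        col for col in expected_cols & observed_cols
        if expected[col].upper() != observed[col].upper()
    )
    return {
        "added": sorted(observed_cols - expected_cols),
        "removed": sorted(expected_cols - observed_cols),
        "retyped": retyped,
    }


expected = {"id": "NUMBER", "email": "VARCHAR", "created_at": "TIMESTAMP_NTZ"}
observed = {"id": "VARCHAR", "email": "varchar", "signup_source": "VARCHAR"}
drift = diff_schema(expected, observed)
print(drift)
```

Added columns are usually safe to absorb, while removed or retyped columns are the changes most likely to break downstream consumers and deserve a blocking alert.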

Implement Comprehensive Data Quality Controls

Use data profiling, validation checks, and automated quality monitoring to identify data issues before they propagate to downstream systems. Prevention costs less than remediation after quality problems impact business operations.

Create quarantine processes for data that fails quality checks while maintaining integration pipeline operations. Proper quarantine procedures prevent bad data from corrupting analytical results while preserving overall system availability.
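The quarantine pattern can be sketched as a partition step: records that pass every rule continue to the load, and the rest are set aside along with the names of the rules they failed. The helper `partition_records`, the rule names, and the sample records below are all hypothetical.

```python
from typing import Any, Callable, Dict, Iterable, List, Tuple

Record = Dict[str, Any]
Rule = Callable[[Record], bool]


def partition_records(records: Iterable[Record],
                      rules: Dict[str, Rule]) -> Tuple[List[Record], List[Record]]:
    """Split records into clean rows and quarantined rows with failure reasons."""
    clean: List[Record] = []
    quarantined: List[Record] = []
    for record in records:
        failures = [name for name, rule in rules.items() if not rule(record)]
        if failures:
            # Keep the original fields and record why the row was held back.
            quarantined.append({**record, "_failed_rules": failures})
        else:
            clean.append(record)
    return clean, quarantined


rules: Dict[str, Rule] = {
    "order_id_present": lambda r: r.get("order_id") is not None,
    "amount_non_negative": lambda r: isinstance(r.get("amount"), (int, float))
    and r["amount"] >= 0,
}
records = [
    {"order_id": 1, "amount": 25.0},
    {"order_id": None, "amount": 10.0},
    {"order_id": 3, "amount": -4},
]
clean, quarantined = partition_records(records, rules)
print(len(clean), [q["_failed_rules"] for q in quarantined])
```

Because the clean rows keep flowing, one bad record does not stall the pipeline, and the quarantined rows retain enough context to be fixed and replayed later.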

Resolve Connectivity and Authentication Issues

Verify network routes, firewall configurations, and credential management for all integration endpoints. Connectivity issues often stem from infrastructure changes that are not immediately obvious.

Schedule regular health checks and connectivity tests to identify authentication and network issues before they cause integration failures. Proactive testing reduces unplanned downtime and improves system reliability.
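A basic connectivity test only needs to confirm that a TCP connection can be opened to each endpoint. The sketch below uses Python's standard `socket` module; the helper names and the hostname in the usage comment are hypothetical, and a successful TCP handshake says nothing about whether credentials are still valid.

```python
import socket
from typing import Dict, Tuple


def can_connect(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port opens within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and DNS failures.
        return False


def check_endpoints(endpoints: Dict[str, Tuple[str, int]],
                    timeout: float = 3.0) -> Dict[str, bool]:
    """Check each named endpoint and report which ones are reachable."""
    return {
        name: can_connect(host, port, timeout)
        for name, (host, port) in endpoints.items()
    }


# Example usage with a hypothetical account hostname:
# check_endpoints({"snowflake": ("myaccount.snowflakecomputing.com", 443)})
```

Running a check like this on a schedule, and alerting on any `False` result, tends to surface firewall or routing changes before a scheduled load fails.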

What Advanced Technologies Are Transforming Snowflake Data Integration?

Real-Time Streaming and Continuous Processing

Apache Kafka integration and Snowpipe Streaming enable continuous data ingestion with minimal latency for real-time analytics and operational applications. Streaming integration supports use cases that require immediate data availability.

Real-time processing capabilities transform Snowflake from a batch-oriented warehouse into a platform that can support operational applications and real-time decision making. This capability expansion creates new opportunities for data-driven business processes.

Secure Data Sharing and Collaboration

Native data sharing capabilities enable internal and external collaboration without creating data copies or exposing sensitive information inappropriately. Secure sharing reduces data proliferation while enabling broader access to analytical insights.

Cross-cloud and cross-region sharing capabilities support modern distributed organizations and partner ecosystems. These features enable data collaboration at scale while maintaining security and governance controls.

Reverse ETL and Operational Integration

Reverse ETL capabilities sync analytical insights back into CRMs, marketing platforms, and other operational applications. This integration closes the loop between analytics and action, enabling data-driven operational processes.

Bidirectional integration transforms Snowflake from a destination-only platform into a central hub that can both consume and distribute data across the entire technology ecosystem. This capability enables more sophisticated data architectures.

Change Data Capture for Low-Latency Synchronization

CDC capabilities provide low-latency synchronization of database changes including inserts, updates, and deletes. Real-time change tracking enables more responsive analytical applications and operational reporting.

Advanced CDC features support complex replication scenarios and conflict resolution for distributed database environments. These capabilities become critical as organizations adopt microservices architectures with distributed data management.
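Conceptually, CDC replays an ordered stream of change events against a target keyed by primary key. The sketch below models that with an in-memory dictionary; the event shape (`op` and `row` fields), the `apply_changes` helper, and the sample data are assumptions, and real CDC tools add ordering guarantees, batching, and schema handling on top.

```python
from typing import Any, Dict, Iterable

Row = Dict[str, Any]


def apply_changes(table: Dict[Any, Row], events: Iterable[Dict[str, Any]],
                  key: str = "id") -> Dict[Any, Row]:
    """Apply insert, update, and delete events in order; return a new table."""
    result = dict(table)
    for event in events:
        op, row = event["op"], event["row"]
        pk = row[key]
        if op in ("insert", "update"):
            # Treat both as an upsert so replaying an event is harmless.
            result[pk] = {**result.get(pk, {}), **row}
        elif op == "delete":
            result.pop(pk, None)
        else:
            raise ValueError(f"unknown operation: {op!r}")
    return result


target = {1: {"id": 1, "status": "new"}}
events = [
    {"op": "insert", "row": {"id": 2, "status": "new"}},
    {"op": "update", "row": {"id": 1, "status": "shipped"}},
    {"op": "delete", "row": {"id": 2}},
]
synced = apply_changes(target, events)
print(synced)  # {1: {'id': 1, 'status': 'shipped'}}
```

Treating inserts and updates as upserts keeps the apply step idempotent, which matters when a stream delivers the same event more than once.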

In-Platform Transformations and Processing

Built-in transformation capabilities using stored procedures, user-defined functions, and advanced SQL eliminate the need for external transformation tools in many scenarios. Native processing reduces architectural complexity and data movement costs.

In-platform processing provides better performance and security for sensitive data transformations. Keeping transformations within Snowflake reduces exposure risks and simplifies compliance management.

What AI-Powered Integration and Intelligent Data Processing Capabilities Does Snowflake Offer?

Cortex AI and Large Language Model Integration

Snowflake Cortex AI provides access to large language models from OpenAI, Anthropic, Meta, Mistral AI, and DeepSeek with secure hosting inside the Snowflake platform. This integration enables AI-powered data processing without exposing sensitive data to external services.

Native AI capabilities transform unstructured data processing by enabling text analysis, content generation, and intelligent data enrichment directly within data integration pipelines. These features eliminate the need for separate AI infrastructure while maintaining data security.

Multimodal Data Processing with OpenFlow

OpenFlow leverages Apache NiFi technology to handle text, images, audio, video, and IoT data alongside traditional structured datasets. This multimodal capability enables comprehensive data integration strategies that encompass all organizational data types.

Advanced data processing workflows can now incorporate visual, audio, and sensor data into analytical processes without requiring specialized external tools. This capability expansion supports emerging use cases in computer vision, IoT analytics, and content management.

Autonomous Workflow Management with Cortex Agents

Cortex Agents deliver autonomous, agentic workflows that can adapt and optimize integration pipelines based on changing conditions and performance requirements. Intelligent automation reduces manual intervention and improves system reliability.

Self-optimizing workflows can automatically adjust resource allocation, modify processing strategies, and respond to data quality issues without human intervention. This automation capability becomes increasingly important as data integration complexity grows.

Document AI and Unstructured Data Processing

Document AI capabilities extract insights from contracts, invoices, reports, and other unstructured files directly within Snowflake integration workflows. This processing eliminates the need for external document processing services while maintaining security controls.

Intelligent document processing enables organizations to incorporate previously inaccessible information into their analytical processes and business intelligence applications. This capability unlocks significant value from unstructured data assets.

AI-Driven Query Optimization and Resource Management

AI-powered query optimization automatically analyzes performance patterns and implements tuning strategies to improve processing efficiency. Intelligent optimization reduces manual tuning overhead while improving system performance.

Automated resource allocation adjusts compute resources based on workload characteristics and performance requirements. This optimization capability helps organizations balance performance requirements with cost efficiency automatically.

What Role Does Airbyte Play in Modern Snowflake Data Integration?

Comprehensive Connector Ecosystem and Community Development

Airbyte provides an extensive library of pre-built connectors with rapid community-driven expansion covering databases, APIs, SaaS applications, and cloud services. This ecosystem eliminates custom development overhead for most common integration scenarios.

The Connector Development Kit enables rapid creation of custom connectors for specialized or proprietary systems. This flexibility ensures that organizations can integrate any data source without being limited by vendor connector availability.

Enterprise-Grade Orchestration and Workflow Integration

Native integration with orchestration platforms such as Dagster enables sophisticated workflow management and dependency handling, while integration with Airflow and Prefect can be achieved through custom configurations or APIs. This integration supports complex data pipeline orchestration requirements while maintaining flexibility and control.

Enterprise orchestration capabilities ensure that Snowflake data integration workflows can be incorporated into broader data processing pipelines and business process automation. This integration capability supports comprehensive data architecture strategies.

Flexible Deployment Options and Infrastructure Control

Multiple deployment options including fully managed cloud, self-hosted, and on-premises installations provide flexibility for organizations with different security, compliance, and infrastructure requirements. This flexibility ensures that Airbyte can fit into any organizational architecture.

Infrastructure control enables organizations to optimize costs, maintain data sovereignty, and integrate with existing security and monitoring systems. Flexible deployment options prevent vendor lock-in while maintaining enterprise-grade capabilities.

Predictable Pricing and Resource Efficiency

Cost-effective pricing models based on usage rather than connector counts or data volumes provide predictable expenses that scale with business value rather than technical complexity. This pricing approach supports sustainable growth and budget planning.

Efficient resource utilization and optimization features reduce infrastructure costs while maintaining high performance and reliability. Resource efficiency becomes increasingly important as data volumes and integration complexity grow.

Enterprise Security and Compliance Framework

Comprehensive security capabilities including end-to-end encryption, role-based access control, audit logging, and compliance certifications support enterprise requirements without compromising functionality. Security integration ensures that data integration workflows meet organizational governance standards.

Support for frameworks and regulations such as SOC 2 and GDPR, along with industry-specific requirements, enables organizations to maintain compliance while scaling their data integration capabilities. Built-in compliance features reduce the overhead of maintaining complex data governance frameworks.

Python Integration and Data Science Workflow Support

PyAirbyte brings connector capabilities directly into Python workflows, enabling data scientists and analysts to work with live data sources within their familiar development environments. This integration eliminates data access barriers and improves analytical productivity.

Native Python integration supports modern data science workflows and enables rapid prototyping and experimentation with different data sources. This capability bridges the gap between data engineering and data science teams while maintaining data quality and security controls.

Conclusion

Airbyte's flexibility, control, and cost-effectiveness combined with Snowflake's evolving AI Data Cloud capabilities equip organizations to build robust, future-proof data integration strategies. These platforms deliver governed, real-time insights while unlocking transformative business value through intelligent data processing and automated workflow optimization.

Modern integration approaches that leverage both platforms enable organizations to scale their data capabilities sustainably while maintaining security and governance standards. The combination of open-source flexibility with enterprise-grade reliability provides the foundation for long-term data strategy success.

FAQ

1. What are the key differences between Snowflake's architecture and traditional data warehouses for integration purposes?

Snowflake separates storage and compute, enabling independent scaling, concurrent integration workloads, and automatic storage optimization. Traditional warehouses couple storage and compute, which limits scalability and often requires complex ETL. Snowflake also natively handles semi-structured data and centralizes governance for smoother integration.

2. How does Snowpipe compare to traditional batch loading for real-time data integration needs?

Snowpipe enables near real-time, automated data ingestion by continuously monitoring storage and loading new files, unlike traditional batch loading, which processes data on a schedule. Snowpipe suits low-latency needs, while batch loading is better for large, scheduled transfers.

3. What security and compliance considerations should organizations address when integrating data with Snowflake?

Key Snowflake security and compliance measures include end-to-end encryption, role-based access control (RBAC) with enterprise identity integration, audit logging, data masking, and row-level security. Organizations must also ensure adherence to regulations and standards such as GDPR, HIPAA, and SOC 2, plus data residency requirements.

4. How can organizations optimize costs while scaling their Snowflake data integration operations?

Organizations can optimize Snowflake costs by right-sizing virtual warehouses, enabling auto-suspend and auto-resume, optimizing file sizes and compression, monitoring query and warehouse usage, and using data sharing and clustering to reduce storage and compute expenses.

5. What role do modern integration platforms like Airbyte play in a comprehensive Snowflake data strategy?

Modern integration platforms like Airbyte streamline Snowflake data strategies by offering pre-built connectors, ELT flexibility, and CDC support. They simplify data ingestion, reduce engineering overhead, ensure scalability, and integrate seamlessly with orchestration tools for reliable, governed, and automated data pipelines.
