Unified Logging and Lineage Across Hybrid Deployments

✨ AI Generated Summary

Hybrid cloud architectures face visibility challenges due to scattered logs and lineage metadata across multiple environments, complicating troubleshooting and compliance. Unified logging consolidates control-plane and data-plane events into a single schema with consistent identifiers, enabling end-to-end traceability, faster root cause analysis, and comprehensive audit trails without compromising data sovereignty.

- Central schema and global correlation IDs unify logs from cloud and on-premises sources.

- Region-aware retention keeps sensitive data within legal boundaries while forwarding summaries for monitoring.

- Automated log collection, immutable storage, and integrated lineage tracking improve operational speed and regulatory confidence.

- Tools like OpenTelemetry, Fluent Bit, Kafka, and SIEM platforms support unified logging architectures.

Hybrid architectures scatter logs and lineage metadata across public clouds, private VPCs, and on-premises servers. Without a single view, you chase errors through multiple dashboards, struggle to prove controls during audits, and miss signals buried in disconnected storage. Visibility gaps remain a persistent challenge in hybrid cloud environments.

Unified logging aggregates every control-plane event and data-plane action into a common schema; lineage tracks each dataset from source to destination. But collecting that evidence outside its home region can create compliance gaps. The sections below cover patterns for complete visibility, then address the central question: how can you unify logs and lineage without compromising data sovereignty or performance?

Why Does Unified Logging Matter in Hybrid Deployments?

Hybrid architectures split your observability surface in two: cloud control planes record orchestration events, while on-premises data planes emit execution details. Each domain stores logs in its own format and dashboard, leaving you to stitch together context during every outage.

When a CDC pipeline stalls or an ETL transformation corrupts a dataset, scattered logs force you to hop between tools instead of following a single thread. That delay costs business hours and risks missing service-level objectives. Auditors reviewing SOC 2, HIPAA, or DORA controls require comprehensive evidence of data movements; incomplete logs create compliance exposure and, in some cases, failed audits.

Lineage turns raw log events into a narrative showing how data moved, transformed, and landed. Without unified logging, lineage graphs break at cloud boundaries, making it impossible to prove data custody or trace the blast radius of an error. Consolidating logs and lineage into one layer restores both operational speed and regulatory confidence.

Persistent, correlated logs give you the foundation to build reliable lineage, closing both operational and compliance loops in hybrid environments.

What Makes Logging and Lineage Challenging in Hybrid Architectures?

You face two separate environments every time you deploy a hybrid stack: a cloud-based control plane that orchestrates operations, and an on-premises data plane that executes the work. Each environment generates its own logs, using different schemas, often stored in different regions. The gap between them breaks visibility, leaving you unable to trace events end-to-end across those boundaries.

Control-plane monitoring tools frequently ship metadata to provider regions outside your legal boundary. That round trip might be convenient for dashboards, but it can expose personally identifiable information and violate residency mandates. Meanwhile, on-premises log stores rarely capture SaaS orchestration events, so auditors see only half the picture.

Technical hurdles compound the governance risk:

- Logs arrive late because cross-cloud links add latency

- Fields rarely line up because vendors use incompatible formats

- Job identifiers collide when multiple schedulers reuse numeric IDs

Consider a scenario where a global bank cannot prove how a failed CDC job in its European data center related to a retry triggered from a U.S. SaaS control plane. This gap highlights how missing lineage links create costly audit exposures.

These structural divides create multiple blind spots, leaving you without the forensic trail required for security or compliance. Unified logging and lineage must bridge these gaps before you can claim truly observable hybrid operations.

What Should Unified Logging and Lineage Look Like?

You need a single visibility layer that spans every control plane API call and every data plane record write, whether those events originate in AWS, an on-premises cluster, or a SaaS service. This layer aggregates logs in real time, normalizes them into a shared schema, and stores them immutably so auditors can replay any event sequence years later.

Data lineage connects those events, mapping each dataset from source to transformation to destination. You get reliable lineage when every log carries consistent timestamps, job IDs, and connector IDs. Cross-plane correlation IDs tie control events (like a scheduler triggering a sync) to the actual data plane rows moved, closing the visibility gap that hybrid environments often create.

Region-based retention keeps PHI or PII inside sovereign boundaries while forwarding summaries for global monitoring.

Platforms using this layered approach (standardized schemas, WORM storage, and region-aware retention) achieve centralized log management with traceability you can trust when audits hit or pipelines break.

What Are the Best Practices for Building Unified Logging and Lineage?

Fragmented logs and missing lineage stunt troubleshooting and keep auditors circling back with follow-up questions. The pattern for standardizing hybrid observability stacks is consistent: start with structure, then layer on automation, separation, and airtight access controls.

1. Start With a Central Schema and Global Identifiers

Without a shared language, your logs turn into a game of telephone. A central schema (serialized as JSON, Parquet, or a similar format) lets every pipeline, connector, and user speak in the same dialect. Your schema needs:

- Timestamp in ISO-8601 UTC format

- pipeline_id with job_id and run_id for tracking

- source_id and destination_id for flow mapping

- user_id and service_account for access control

- correlation_id for cross-system joins

Teams struggling with inconsistent log formats cite this gap as the single biggest blocker to centralized visibility in hybrid setups. Agree on the fields first; parsing and analytics become routine after that.
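The field list above can be sketched as a small typed record. This is a minimal illustration, not a prescribed format: the `LogRecord` class, the `utc_now_iso` helper, the extra `message` field, and all sample values are assumptions made for the example.

```python
import json
import uuid
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone


def utc_now_iso() -> str:
    """Return the current time as an ISO-8601 string in UTC."""
    return datetime.now(timezone.utc).isoformat()


@dataclass(frozen=True)
class LogRecord:
    # Field names follow the schema list above; the class is hypothetical.
    pipeline_id: str
    job_id: str
    run_id: str
    source_id: str
    destination_id: str
    user_id: str
    service_account: str
    correlation_id: str
    message: str  # free-text payload, added for the example
    timestamp: str = field(default_factory=utc_now_iso)

    def to_json(self) -> str:
        """Serialize to a single JSON line with stable key order."""
        return json.dumps(asdict(self), sort_keys=True)


record = LogRecord(
    pipeline_id="orders-cdc",
    job_id="job-42",
    run_id="run-1",
    source_id="postgres-eu",
    destination_id="warehouse-eu",
    user_id="alice",
    service_account="svc-sync",
    correlation_id=str(uuid.uuid4()),
    message="sync started",
)
print(record.to_json())
```

Making the record immutable and emitting one JSON object per line keeps every producer on the same shape, which is what lets downstream parsing stay routine.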

2. Separate Control and Data Plane Logs by Function

Control plane logs track orchestration: API calls, scheduler events, configuration changes. Data plane logs capture execution details: row counts, latency spikes, checksum failures.

Keep them in distinct streams, but stamp both with the same correlation_id so you can trace a failed CDC job from the API request down to the affected rows. Lumping everything together hides root causes and recreates the fragmented visibility problem hybrid teams fight daily.
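As a rough sketch of that join, the two streams below stay in separate lists (standing in for separate storage) and are only brought together at query time by correlation_id. The event names, fields, and the `trace` helper are hypothetical.

```python
# Two separate streams; in practice these would live in different stores.
control_stream = [
    {"correlation_id": "c-1", "event": "sync_requested", "api": "POST /jobs"},
    {"correlation_id": "c-2", "event": "sync_requested", "api": "POST /jobs"},
]
data_stream = [
    {"correlation_id": "c-1", "event": "rows_written", "row_count": 1200},
    {"correlation_id": "c-2", "event": "checksum_failed", "row_count": 0},
]


def trace(correlation_id, control_events, data_events):
    """Collect both planes' events for one correlation_id without merging the streams."""
    return {
        "control": [e for e in control_events if e["correlation_id"] == correlation_id],
        "data": [e for e in data_events if e["correlation_id"] == correlation_id],
    }


failed = trace("c-2", control_stream, data_stream)
print(failed)
```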

3. Keep All Sensitive Logs Within Your Boundary

PHI, payment data, or customer identifiers must never hop regions just to satisfy a dashboard KPI. Store those logs in the same jurisdiction as the data they describe, then ship summaries (counts, hashes, error codes) to your cloud SIEM.

This approach closes compliance gaps in hybrid deployments and keeps auditors satisfied that no raw records crossed borders.
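One way to picture the summary step is a function that runs inside the boundary and returns only counts, error codes, and a digest. The sketch below is illustrative; the record fields and the `summarize` helper are assumptions. It hashes the whole batch rather than individual identifiers, since a plain hash of a single low-entropy value can often be reversed by guessing.

```python
import hashlib
import json
from collections import Counter

# Hypothetical raw data-plane logs that must stay in-region.
raw_logs = [
    {"patient_id": "p-1001", "status": "ok", "error_code": None},
    {"patient_id": "p-1002", "status": "failed", "error_code": "E_TIMEOUT"},
    {"patient_id": "p-1003", "status": "failed", "error_code": "E_TIMEOUT"},
]


def summarize(logs):
    """Reduce raw records to counts, error codes, and a batch digest."""
    error_codes = Counter(r["error_code"] for r in logs if r.get("error_code"))
    canonical = json.dumps(logs, sort_keys=True).encode("utf-8")
    return {
        "record_count": len(logs),
        "error_codes": dict(error_codes),
        # Digest lets you later prove which batch the summary describes.
        "batch_sha256": hashlib.sha256(canonical).hexdigest(),
    }


summary = summarize(raw_logs)  # only this object leaves the region
print(summary)
```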

4. Automate Log Collection and Retention Policies

Manual SCP copies and ad-hoc S3 buckets collapse the first time volume spikes. Deploy agents like Fluent Bit or Elastic Agent next to every workload and let them funnel logs into region-aware pipelines.

Apply WORM (write-once-read-many) storage so evidence can't be tampered with, then roll data off to cold storage based on actual regulations, not gut feel.

5. Integrate Lineage Tracking Into ETL and CDC Jobs

Lineage isn't something you add later; it's metadata you emit at every step. Capture source versions, transformation hashes, and destination checksums directly inside your jobs.

Airbyte Enterprise Flex writes connector IDs, schema versions, and job events to the same log stream that lives in your environment, making downstream data lineage graphs complete by default.
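To make "metadata you emit at every step" concrete, here is a minimal sketch of a lineage record built inside a job. It is not Airbyte's log format; the `lineage_event` function, its field names, and the sample SQL are all assumptions for illustration.

```python
import hashlib
import json


def sha256_hex(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


def lineage_event(job_id, source_id, source_version, transform_sql, destination_id, rows):
    """Build one lineage record for a single job step."""
    destination_bytes = json.dumps(rows, sort_keys=True).encode("utf-8")
    return {
        "job_id": job_id,
        "source_id": source_id,
        "source_version": source_version,
        # Hash of the transformation text: changes whenever the logic changes.
        "transformation_hash": sha256_hex(transform_sql.encode("utf-8")),
        "destination_id": destination_id,
        # Checksum of what was written, for later verification.
        "destination_checksum": sha256_hex(destination_bytes),
        "row_count": len(rows),
    }


rows = [{"order_id": 1, "total": 20}, {"order_id": 2, "total": 35}]
event = lineage_event(
    job_id="job-42",
    source_id="postgres-eu",
    source_version="schema-v7",
    transform_sql="SELECT order_id, total FROM orders",
    destination_id="warehouse-eu",
    rows=rows,
)
print(json.dumps(event, indent=2))
```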

6. Stream Unified Logs to a Central Observability Layer

Once structured and sanitized, push both control and data plane logs into a durable bus (Kafka, OpenTelemetry collectors, or your existing SIEM). Normalization at ingest time eliminates region-specific quirks, and back-pressure handling keeps latency predictable during traffic spikes.
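Normalization at ingest can be as simple as renaming vendor-specific fields and converting timestamps to UTC. The sketch below assumes two made-up origins (`cloud_a`, `onprem`) with invented field names; a real deployment would maintain one alias table per actual log source.

```python
from datetime import datetime, timezone

# Hypothetical per-origin field aliases mapped onto the shared schema.
FIELD_ALIASES = {
    "cloud_a": {"ts": "timestamp", "jobId": "job_id", "msg": "message"},
    "onprem": {"time": "timestamp", "job": "job_id", "text": "message"},
}


def to_utc_iso(value):
    """Accept epoch seconds or an ISO-8601 string; return ISO-8601 in UTC."""
    if isinstance(value, (int, float)):
        return datetime.fromtimestamp(value, tz=timezone.utc).isoformat()
    parsed = datetime.fromisoformat(value)
    if parsed.tzinfo is None:
        # Assumption for this sketch: naive timestamps are already UTC.
        parsed = parsed.replace(tzinfo=timezone.utc)
    return parsed.astimezone(timezone.utc).isoformat()


def normalize(origin, raw):
    """Rename fields, normalize the timestamp, and tag the record's origin."""
    aliases = FIELD_ALIASES[origin]
    record = {aliases.get(key, key): value for key, value in raw.items()}
    record["timestamp"] = to_utc_iso(record["timestamp"])
    record["origin"] = origin
    return record


a = normalize("cloud_a", {"ts": 0, "jobId": "j1", "msg": "ok"})
b = normalize("onprem", {"time": "2024-01-01T02:00:00+02:00", "job": "j1", "text": "ok"})
print(a)
print(b)
```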

7. Align Retention and Access With Compliance Frameworks

HIPAA requires six-year retention for certain documentation; DORA leaves log retention periods to risk-based policies and does not specify a duration. Map each log category to its governing standard and automate deletion once the timer expires.
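The category-to-standard mapping can be sketched as a lookup table plus an expiry check. The category names and the 30-day figure are placeholders; only the six-year HIPAA duration comes from the text above, and it is approximated here as 6 × 365 days.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical categories. Replace the durations with your own policy.
RETENTION = {
    "hipaa_documentation": timedelta(days=6 * 365),  # six years, ignoring leap days
    "pipeline_debug": timedelta(days=30),            # placeholder value
}


def is_expired(category, created_at, now=None):
    """Return True once a log of this category has outlived its retention period."""
    now = now or datetime.now(timezone.utc)
    return now - created_at > RETENTION[category]


now = datetime(2025, 1, 1, tzinfo=timezone.utc)
old = datetime(2018, 1, 1, tzinfo=timezone.utc)
recent = datetime(2024, 6, 1, tzinfo=timezone.utc)

print(is_expired("hipaa_documentation", old, now))
print(is_expired("hipaa_documentation", recent, now))
print(is_expired("pipeline_debug", recent, now))
```

A scheduled job can run this check per category and delete (or move to cold storage) whatever it flags, so retention follows the written policy rather than manual cleanup.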

Enforce RBAC on every viewer and API key, and record those access events in their own immutable log stream. Gaps in auditability remain a top concern in hybrid security, and tightened access controls are the fastest fix.

Follow these practices and you'll move from piecemeal logging to a cohesive, lineage-aware fabric that survives scale, satisfies regulators, and gives you answers when something breaks.

How Can Unified Logging Strengthen Compliance and Security?

Centralized logs give you a single, tamper-proof record of every control-plane command and data-plane event, eliminating the patchwork evidence trail that regulators flag as a weakness in hybrid architectures. Visibility that spans clouds and on-premises closes the blind spots attackers exploit while also cutting the time you spend assembling audit packages.

Key compliance benefits include:

- Immutable audit trails through write-once storage and cryptographic hashing

- Cross-environment breach reconstruction by correlating control and data events

- Automated compliance reporting for SOC 2 Type II, GDPR, or DORA reviews

- Regional data sovereignty by keeping PHI or PII within legal boundaries

A healthcare network, for example, can apply this approach to HIPAA audits: PHI-bearing data-plane logs stay inside its datacenter while redacted control-plane summaries stream to a cloud SIEM.
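The cryptographic hashing mentioned in the list above is often implemented as a hash chain, where each entry's hash covers the previous entry's hash. The sketch below is one simple way to do that, not a specific product's mechanism; the `append` and `verify` helpers and the all-zero genesis value are assumptions. A chain makes tampering detectable, and it complements write-once storage rather than replacing it.

```python
import hashlib
import json

GENESIS = "0" * 64  # assumed starting value for the first entry


def entry_hash(prev_hash, payload):
    """Hash the previous hash together with the canonical payload."""
    body = json.dumps(payload, sort_keys=True)
    return hashlib.sha256((prev_hash + body).encode("utf-8")).hexdigest()


def append(chain, payload):
    prev_hash = chain[-1]["hash"] if chain else GENESIS
    chain.append({
        "payload": payload,
        "prev_hash": prev_hash,
        "hash": entry_hash(prev_hash, payload),
    })


def verify(chain):
    """Recompute every link; any edited or reordered entry breaks the chain."""
    prev_hash = GENESIS
    for entry in chain:
        if entry["prev_hash"] != prev_hash:
            return False
        if entry["hash"] != entry_hash(prev_hash, entry["payload"]):
            return False
        prev_hash = entry["hash"]
    return True


chain = []
append(chain, {"event": "sync_requested", "job_id": "job-42"})
append(chain, {"event": "rows_written", "job_id": "job-42", "row_count": 1200})
print(verify(chain))
```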

How Does Unified Lineage Improve Data Reliability?

Complete lineage gives you a living map of every dataset (from source system to dashboard) so errors surface exactly where they occur instead of somewhere downstream. When each sync, transformation, and load carries consistent identifiers, you can trace a broken report back to the single job or column that introduced bad data, even across on-premises databases and multiple clouds.

Key reliability improvements include:

- Rapid root cause identification by tracing datasets from dashboard to source

- Blast radius visualization showing which tables, jobs, and dashboards depend on changing schemas

- Deterministic error tracking replacing hours of log correlation with minutes of directed search

- Proactive impact analysis before deploying schema changes or infrastructure updates

Consider a fintech scenario in which suspicious revenue numbers appear in Looker. Detailed lineage lets engineers jump from the dashboard to the failed dbt model in minutes, rather than combing through disparate logs for hours.
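Blast radius is, at its core, a graph traversal over lineage edges. The sketch below uses a breadth-first search on a hand-written graph; the asset names and the `DOWNSTREAM` mapping are hypothetical, and a real graph would be built from the lineage records your jobs emit.

```python
from collections import deque

# Hypothetical lineage edges: upstream asset -> assets that read from it.
DOWNSTREAM = {
    "postgres.orders": ["dbt.stg_orders"],
    "dbt.stg_orders": ["dbt.revenue_daily", "dbt.orders_by_region"],
    "dbt.revenue_daily": ["looker.revenue_dashboard"],
    "dbt.orders_by_region": [],
    "looker.revenue_dashboard": [],
}


def blast_radius(asset, edges):
    """Return every asset reachable downstream of `asset`, in breadth-first order."""
    seen = {asset}
    queue = deque([asset])
    affected = []
    while queue:
        current = queue.popleft()
        for child in edges.get(current, []):
            if child not in seen:
                seen.add(child)
                affected.append(child)
                queue.append(child)
    return affected


print(blast_radius("dbt.stg_orders", DOWNSTREAM))
```

Running the same search over reversed edges gives the opposite direction: from a broken dashboard back to the candidate sources.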

This depends on standardized logging underneath. When control-plane events and data-plane metrics follow the same schema, duplicate identifiers disappear and you avoid the fragmented visibility that plagues many hybrid stacks. Reliable lineage is only as trustworthy as the logs that feed it.

How Can You Implement Unified Logging with Existing Tools?

Treat your cloud logs as data streams that flow through a centralized collection point. Configure AWS CloudWatch and Azure Monitor exports to send data through an OpenTelemetry collector, then route everything to your existing ELK or Splunk infrastructure, whether that's on-premises or in a compliant region. The collector normalizes log formats before they reach your analysts, eliminating cross-vendor inconsistencies.

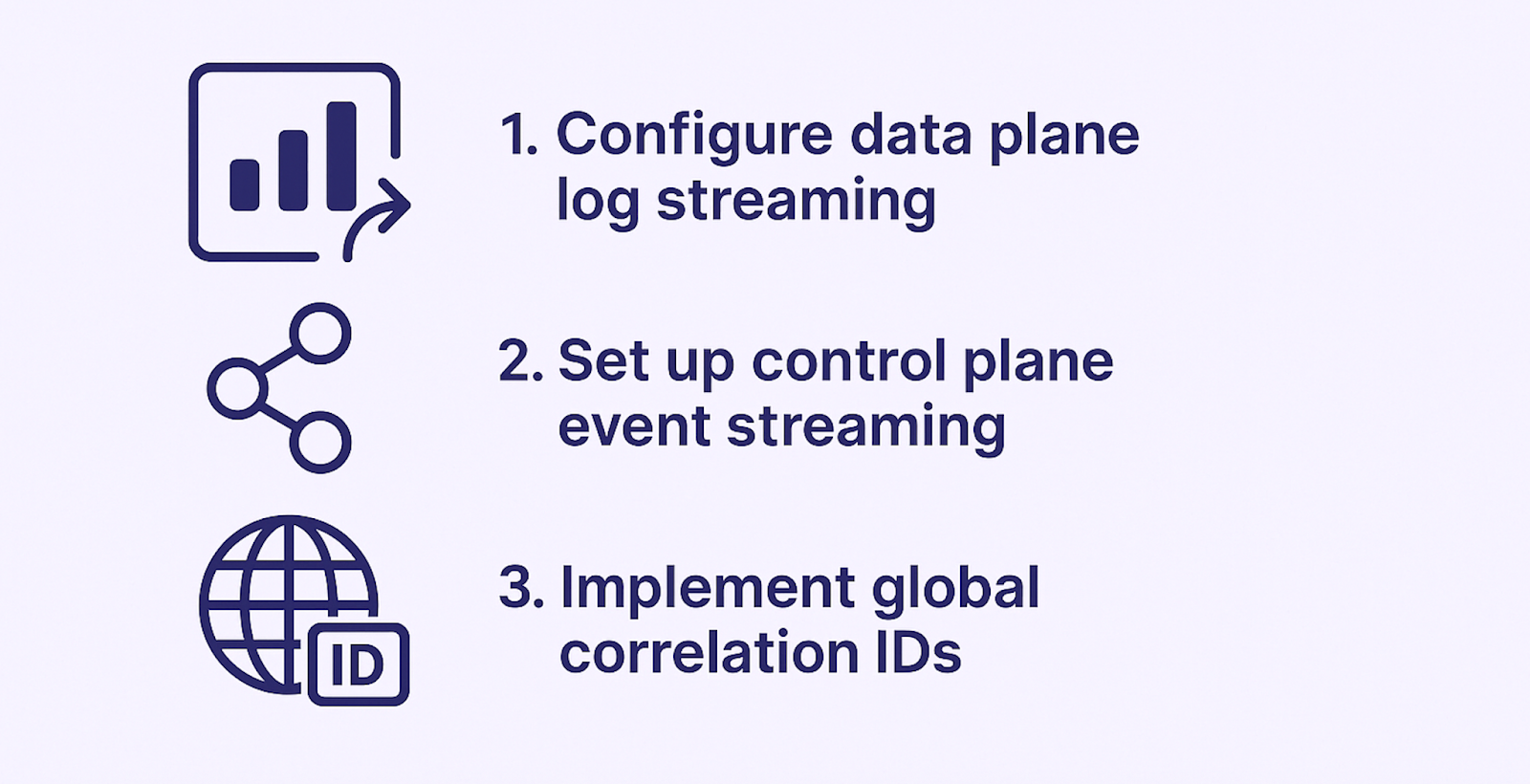

Implement the centralized logging pipeline in three steps:

1. Configure Data Plane Log Streaming

Direct connector throughput and latency logs from your hosts to your SIEM endpoint using TLS encryption.

2. Set Up Control Plane Event Streaming

Route orchestration events through Kafka or OpenTelemetry for low-latency distribution to multiple consumers.

3. Implement Global Correlation IDs

Give each sync a unique job_id and attach that same job_id to every log record the sync produces, in both planes, so you can trace individual Airbyte syncs across regions and cloud boundaries.
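One way to stamp every record with the current job_id, without passing it through each function call, is a context variable combined with a logging filter. This is a sketch using Python's standard library, not how Airbyte emits its logs; the logger name, the `JobIdFilter` and `JsonFormatter` classes, and the in-memory stream are assumptions.

```python
import contextvars
import io
import json
import logging

# Holds the job_id of the sync running in the current context.
current_job_id = contextvars.ContextVar("current_job_id", default="unknown")


class JobIdFilter(logging.Filter):
    """Copy the context's job_id onto every record that passes through."""

    def filter(self, record):
        record.job_id = current_job_id.get()
        return True


class JsonFormatter(logging.Formatter):
    def format(self, record):
        return json.dumps({
            "level": record.levelname,
            "job_id": record.job_id,
            "message": record.getMessage(),
        })


stream = io.StringIO()  # stands in for a real log sink
handler = logging.StreamHandler(stream)
handler.addFilter(JobIdFilter())
handler.setFormatter(JsonFormatter())

logger = logging.getLogger("sync")
logger.setLevel(logging.INFO)
logger.addHandler(handler)
logger.propagate = False

token = current_job_id.set("job-42")
logger.info("sync started")
logger.info("rows written: %d", 1200)
current_job_id.reset(token)

print(stream.getvalue())
```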

Airbyte Enterprise Flex simplifies this process by emitting structured JSON logs and lineage metadata with consistent job_id correlation. The architecture keeps your control plane in the cloud while maintaining data plane logs in your sovereign environment, enabling centralized observability without compromising data residency requirements. This approach gives you complete audit trails while helping you meet the requirements of frameworks like HIPAA or SOX.

Why Is Unified Logging the Foundation of Hybrid Observability?

When your logs live in separate clouds, VPCs, and on-premises servers, troubleshooting becomes guesswork. Security teams see alerts from one environment while performance issues surface in another. Compliance auditors find evidence scattered across systems they can't cross-reference.

The operational impacts of fragmented logging include:

- Incident resolution that stretches from minutes to hours as teams correlate timestamps across time zones

- Incomplete security investigations when attack patterns span multiple disconnected systems

- Failed compliance audits due to missing evidence or gaps in data custody chains

- Higher operational costs maintaining separate monitoring tools for each environment

Data teams tell us this fragmentation turns every incident into an archaeology project. You're matching job IDs that don't connect and rebuilding context that should already exist.

Consolidated logging creates a single timeline where every control-plane decision and data-plane execution connects. CDC lag in your manufacturing plant correlates with the schema change deployed from your cloud control plane. Failed pipeline runs link directly to the connector version that caused them.

How Does Unified Logging Enable Complete Hybrid Visibility?

Complete logging and lineage enable comprehensive visibility across hybrid deployments while preserving data sovereignty and meeting compliance requirements. When implemented correctly, this foundation supports both regulatory audits and faster troubleshooting across distributed infrastructure.

Airbyte Enterprise Flex delivers HIPAA-compliant hybrid architecture, keeping ePHI in your VPC while enabling AI-ready clinical data pipelines. Talk to Sales to see how unified logging and lineage work in real hybrid deployments without compromising data sovereignty.

Frequently Asked Questions

What is the difference between unified logging and traditional logging?

Traditional logging scatters events across multiple systems, each with its own format and storage location. Unified logging aggregates all control-plane and data-plane events into a single schema with consistent identifiers, enabling you to trace activities end-to-end across hybrid environments without manual correlation.

How does unified lineage help with compliance audits?

Unified lineage creates an immutable record showing how data moved from source to destination, including all transformations. Auditors can verify data custody, check residency compliance, and trace any dataset back to its origin. This documentation supports SOC 2, HIPAA, and DORA reviews and reduces manual evidence assembly.

Can I implement unified logging without moving sensitive data to the cloud?

Yes. Keep sensitive logs within your sovereign boundary and forward only summaries (counts, error codes, hashes) to cloud monitoring tools. Airbyte Enterprise Flex follows this pattern: data-plane logs containing PHI or PII stay in your environment while control-plane metadata streams to the cloud for orchestration.

What tools integrate with unified logging architectures?

Most observability stacks work with unified logging through standard protocols. OpenTelemetry collectors, Fluent Bit agents, Kafka streams, and SIEM platforms (Splunk, ELK, Datadog) can all consume structured JSON logs. Airbyte Enterprise Flex emits logs in this format, making integration with your existing tools straightforward.
