10 Data Wrangling Tools to Tame Your Wild Datasets

It is difficult to manage and analyze raw data to generate useful business insights. As a result, you must convert it into a form suitable for different data-related tasks.

This is where data wrangling comes into the picture. It is a process that helps you refine raw and unstructured datasets. However, manual data wrangling can be effort- and resource-intensive.

To overcome this challenge, you can opt for data wrangling tools. This blog guides you through a list of ten popular data wrangling tools to enhance data quality for robust data analytics.

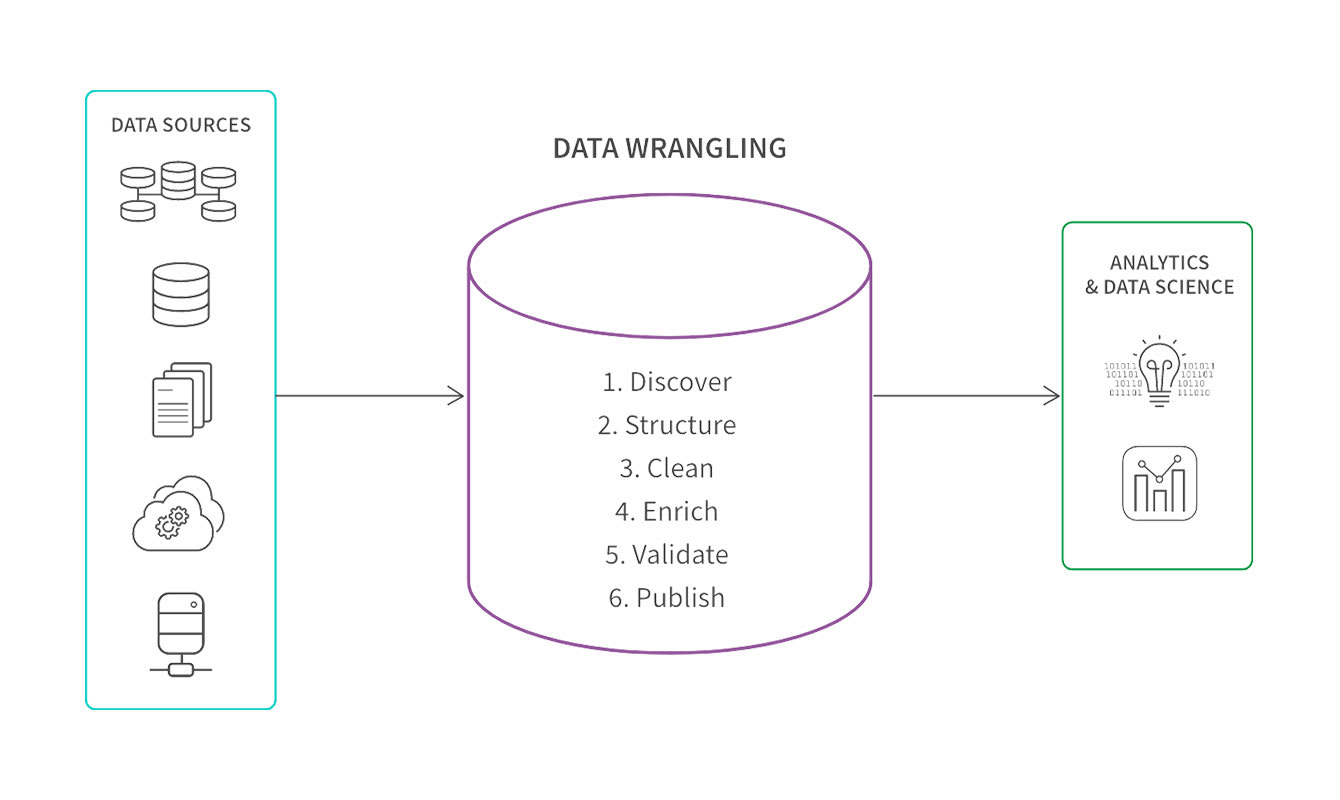

What are Data Wrangling Tools?

Data wrangling includes structuring, cleaning, enriching, validating, and publishing data. Performing these steps manually can be time-consuming and error-prone. To avoid this, you can use data wrangling tools: software applications that automate the transformation of raw, complex data into a simplified form for effective data analysis.

Data wrangling tools can help you enhance data quality through automation and streamline data workflows for insightful data analytics. Let us look at some of the best data wrangling tools in detail.

10 Powerful Data Wrangling Tools Unveiled

1. Talend

Acquired by Qlik, Talend is one of the most popular data wrangling tools. It offers a simple drag-and-drop user interface that lets you transform your data without much technical expertise. Talend provides various features to simplify all the steps involved in data wrangling. Some of these are discussed below.

Key Features

- Multi-cloud Platform: You can deploy Talend on various cloud service providers, such as AWS, Microsoft Azure, and Google Cloud Platform.

- Improved Data Quality: It enables you to refine your data through data standardization, deduplication, and validation. You can also leverage its machine learning capabilities to address complex data quality issues.

- Data Integration: Talend supports robust data integration by collecting and consolidating data from a wide range of sources and loading it into the destination of your choice.
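Talend performs standardization, deduplication, and validation through its visual interface. To show what those three steps do to the data, here is a minimal plain-Python sketch; it is not Talend's API, and the record fields and email rule are hypothetical.

```python
import re

# Hypothetical raw customer records; field names are illustrative only.
raw_records = [
    {"name": "  Ada Lovelace ", "email": "ADA@Example.com", "country": "uk"},
    {"name": "Ada Lovelace", "email": "ada@example.com ", "country": "UK"},
    {"name": "Alan Turing", "email": "alan(at)example.com", "country": "gb"},
]

# Deliberately simple validation rule (an assumption, not a full email spec).
EMAIL_PATTERN = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")

def standardize(record):
    """Trim whitespace and normalize casing so equivalent values compare equal."""
    return {
        "name": " ".join(record["name"].split()),
        "email": record["email"].strip().lower(),
        "country": record["country"].strip().upper(),
    }

def clean(records):
    """Standardize, validate, and deduplicate records (keeping first occurrence)."""
    seen_emails = set()
    valid, rejected = [], []
    for record in map(standardize, records):
        if not EMAIL_PATTERN.match(record["email"]):
            rejected.append(record)        # fails validation
        elif record["email"] in seen_emails:
            continue                       # duplicate once standardized
        else:
            seen_emails.add(record["email"])
            valid.append(record)
    return valid, rejected

valid, rejected = clean(raw_records)
print(len(valid), len(rejected))  # 1 1
```

Note that the two "Ada" rows only become duplicates after standardization, which is why standardizing comes first. Keeping the first occurrence is just one deduplication rule; keeping the most recent record is another common choice.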

Open Source or Commercial

It is a commercial platform.

Community Support and Documentation

Talend has active community support that quickly provides solutions to resolve problems. It also offers detailed product and API documentation that helps you understand the platform's functionality.

2. Alteryx APA

Alteryx APA is a versatile data analytics platform. Its data wrangling features let you consolidate and streamline data from multiple sources. You can also use the data wrangling templates offered by Alteryx Designer Cloud to speed up your data wrangling process.

Key Features

- AI-based Data Analytics: Alteryx offers AI-powered data analytics, which helps you analyze your datasets in more depth and generate accurate, data-based insights for better decision-making.

- Holistic Data Preparation: It enables you to prepare data and build effective data models. A good data model forms the foundation of advanced data analysis.

- Collaboration: Alteryx has adopted many ideas from the open-source movement. It lets you share information with other Alteryx users and access information or analytics features developed by the Alteryx community.

Open Source or Commercial

Alteryx is a commercial platform. However, it offers four open-source Python libraries through its Alteryx Open Source service, which let you leverage its machine learning capabilities.

Community Support and Documentation

It has strong community support that actively contributes to enhancing its services. Alteryx's extensive documentation makes it easy to understand its functions.

3. Altair Monarch

Altair Monarch is a desktop-based data preparation solution. It automates data wrangling by providing over 80 pre-built functions, in categories such as String, Date, and Conversion, for data preparation.

Key Features

- Extracting PDF Data: Altair Monarch facilitates data extraction from text-heavy PDF files. You can modify this data if needed and export it to Monarch Data Prep Studio for further preparation.

- Multifunctional Platform: It supports functions such as combine, join, blend, wrangle, and append. This allows you to leverage features of both Excel and SQL for data preparation. Altair also offers a visualization service named Panopticon that helps you create insightful visuals of time-critical data.

- Usage in the Healthcare Sector: Altair is used extensively to manage healthcare sector data. Pharmaceutical and life sciences researchers use the Altair Grid Engine to manage high-performance computing workloads.

Open Source or Commercial

Altair is a commercial tool. It offers a free trial, but the paid version is expensive.

Community Support and Documentation

It has a large community of users who respond quickly to troubleshooting queries. The documentation of Altair Monarch is detailed and provides comprehensive information on its products and services.

4. Datameer

Datameer is a data pipeline building and management tool. Its spreadsheet-like interface allows users to collect, transform, and analyze data from various sources. You can integrate it with Snowflake to use conversion, numeric, date and time functions to prepare and wrangle your datasets.

Key Features

- Advanced Analytics: It provides a Smart Analytics module that simplifies complex data analysis using machine learning. It allows you to deploy clustering, decision trees, and predictive analytics.

- Robust Data Governance: The data governance module of Datameer provides enterprise-grade governance features. It includes regulatory compliance, data quality, data lineage, and security features.

- Collaborative Ecosystem: Datameer offers a shared workspace for different domain teams to share their work and collaborate. This fosters innovation and a productive work environment.

Open Source or Commercial

It is a commercial tool.

Community Support and Documentation

Datameer has an active community of users who respond quickly to resolve issues. To use the platform effectively, you can refer to its concise and well-written documentation.

5. Scrapy

Scrapy is a Python-based data extraction framework. It is fast and scalable, and it supports several features that aid data wrangling. However, it is a complex tool with a steep learning curve. You can use it for data wrangling if you have the time and resources to learn how it works in depth.

Key Features

- Efficient Web Scraping: You can use Scrapy to extract data from HTML/XML files using CSS selectors, XPath expressions, and helpers.

- Telnet Features: Telnet is a network protocol that lets you open a text-based session on another computer over a network. Scrapy has a built-in Telnet console extension that lets you connect to a running Scrapy process and inspect or control it from a Python shell.

- Flexible Data Export: You can create feed exports in several file formats, such as JSON, CSV, or XML, and store them in different storage backends, such as FTP or the local file system.
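To make the extraction and export features concrete, here is a minimal sketch that uses only Python's standard library rather than Scrapy itself. It runs XPath-style queries (ElementTree supports a limited XPath subset) against a small, hypothetical XHTML page and serializes the results as JSON Lines and CSV, which is roughly what a spider's selectors and feed exports produce. In an actual Scrapy project, you would use `response.xpath()` or `response.css()` inside a spider and configure exports through the `FEEDS` setting.

```python
import csv
import io
import json
import xml.etree.ElementTree as ET

# A small, well-formed XHTML snippet standing in for a downloaded page (hypothetical).
PAGE = """
<html><body>
  <div class="product"><h2>Widget</h2><span class="price">9.99</span></div>
  <div class="product"><h2>Gadget</h2><span class="price">24.50</span></div>
</body></html>
"""

def extract_items(markup):
    """Pull product names and prices using ElementTree's XPath subset."""
    root = ET.fromstring(markup.strip())
    items = []
    for node in root.findall(".//div[@class='product']"):
        items.append({
            "name": node.findtext("h2"),
            "price": float(node.findtext("span[@class='price']")),
        })
    return items

def export_jsonl(items):
    """Serialize items as JSON Lines: one JSON object per line."""
    return "".join(json.dumps(item) + "\n" for item in items)

def export_csv(items):
    """Serialize items as CSV with a header row."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=["name", "price"])
    writer.writeheader()
    writer.writerows(items)
    return buffer.getvalue()

items = extract_items(PAGE)
print(export_jsonl(items))
print(export_csv(items))
```

Real web pages are rarely well-formed XML, which is one reason Scrapy ships its own HTML-tolerant selectors instead of relying on an XML parser.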

Open Source or Commercial

It is an open-source solution.

Community Support and Documentation

Scrapy has a vast community of users spread across various platforms. If you have basic or no coding knowledge, you may find Scrapy's documentation insufficient.

6. ParseHub

ParseHub is a cloud-based web scraping tool. It also lets you perform data wrangling tasks such as deduplication, filtering, or merging data from multiple sources. To support this, ParseHub lets you apply regular expressions (RegEx) to retrieve, remove, or replace parts of the extracted data.
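ParseHub applies regular expressions through its own interface. The same retrieve, remove, and replace operations can be sketched with Python's `re` module; the scraped text below is hypothetical.

```python
import re

# Hypothetical text fields scraped from product pages.
records = [
    "Price: $1,299.00 (was $1,499.00)",
    "Price: $89.50",
    "Contact sales for pricing",
]

PRICE = re.compile(r"\$([\d,]+\.\d{2})")

def first_price(text):
    """Retrieve: return the first dollar amount in the text, or None."""
    match = PRICE.search(text)
    return match.group(1) if match else None

# Retrieve the first price from each record.
prices = [first_price(r) for r in records]

# Remove parenthesized notes such as "(was $1,499.00)".
without_notes = [re.sub(r"\s*\(.*?\)", "", r) for r in records]

# Replace thousands separators, drop records with no price, and convert to numbers.
numeric = [float(re.sub(r",(?=\d{3})", "", p)) for p in prices if p is not None]
print(prices, numeric)
```

The lookahead `(?=\d{3})` removes a comma only when three digits follow it, so commas used in other ways stay intact.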

Key Features

- Easy-to-use Interface: ParseHub has a simple interface that enables no-code data scraping from various websites. It helps reduce errors and saves the time you would otherwise spend collecting data manually.

- IP Rotation: It supports IP rotation during web scraping, which helps you reach your desired data without being blocked. IP rotation is a technique of periodically changing the IP address used for requests to avoid restrictions that websites impose based on IP address.

- Versatile Integration: ParseHub can extract data from dynamic websites that rely on AJAX, JavaScript, sessions, or cookies. You can download the scraped data in Excel or CSV format or export it to Google Sheets.
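ParseHub manages IP rotation for you. To illustrate the underlying idea, here is a minimal round-robin sketch in Python that assigns each outgoing request the next proxy in a fixed list. The proxy addresses are hypothetical (from the 203.0.113.0/24 documentation range), and no network requests are made; a real scraper would pass the selected proxy to its HTTP client.

```python
from itertools import cycle

# Hypothetical proxy endpoints; replace with proxies you are authorized to use.
PROXIES = ["203.0.113.10:8080", "203.0.113.11:8080", "203.0.113.12:8080"]

def assign_proxies(urls, proxies):
    """Pair each request URL with the next proxy in round-robin order."""
    rotation = cycle(proxies)
    return [(url, next(rotation)) for url in urls]

plan = assign_proxies([f"https://example.com/page/{i}" for i in range(5)], PROXIES)
for url, proxy in plan:
    print(proxy, url)
```

Round-robin is the simplest rotation policy; other schemes rotate on a timer or only after a request is refused.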

Open Source or Commercial

It is a commercial platform.

Community Support and Documentation

ParseHub has an active community that routinely contributes to helping you navigate the platform. It also has comprehensive documentation that clarifies its products and services.

7. Microsoft Power Query

Microsoft Power Query is a data transformation and preparation engine. It is a highly popular data wrangling tool because of its advanced data manipulation capabilities. You can directly integrate it with Microsoft Excel. Microsoft Power Query is also used for data analysis and business intelligence purposes.

Key Features

- Multi-source Integration: Microsoft Power Query allows you to extract data from various sources such as SQL databases, webpages, cloud storage systems, and native systems. You can leverage this to get a holistic view of your datasets and make informed decisions.

- Data Transformation: It offers various pre-built functions for simple and advanced data transformation. These include functions for removing a column, filtering rows, merging, appending, grouping, and pivoting data records. You can easily access these functions from the platform’s interface to perform the desired transformations.

- M Language Support: You can perform complex transformations in Microsoft Power Query with its specialized M language. You can write and refine M code in the Advanced Editor to fine-tune your transformation steps.
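In Power Query, each of the transformations listed above becomes an applied step (in M, roughly `Table.RemoveColumns`, `Table.SelectRows`, `Table.Group`, and `Table.Pivot`). As a tool-neutral illustration, the following minimal Python sketch runs the same sequence on a few hypothetical sales rows: remove a column, filter rows, then group and pivot.

```python
from collections import defaultdict

# Hypothetical sales rows, as they might arrive from a source system.
rows = [
    {"region": "East", "quarter": "Q1", "amount": 120, "internal_id": "a1"},
    {"region": "East", "quarter": "Q2", "amount": 80, "internal_id": "a2"},
    {"region": "West", "quarter": "Q1", "amount": 200, "internal_id": "a3"},
    {"region": "West", "quarter": "Q1", "amount": 0, "internal_id": "a4"},
]

# Remove a column.
trimmed = [{k: v for k, v in row.items() if k != "internal_id"} for row in rows]

# Filter rows: keep only non-zero amounts.
filtered = [row for row in trimmed if row["amount"] > 0]

# Group and pivot: regions become rows, quarters become columns, amounts are summed.
pivot = defaultdict(lambda: defaultdict(int))
for row in filtered:
    pivot[row["region"]][row["quarter"]] += row["amount"]

quarters = sorted({row["quarter"] for row in filtered})
for region in sorted(pivot):
    # Missing region/quarter combinations default to 0.
    print(region, [pivot[region][q] for q in quarters])
```

The order of steps matters: filtering before the pivot keeps zero-value rows from contributing to the grouped totals.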

Open Source or Commercial

It is a commercial service.

Community Support and Documentation

Microsoft Power Query has excellent community support because of the Microsoft ecosystem. It also offers detailed documentation about different products and their features.

8. Tableau Desktop

Tableau Desktop is a desktop version of the data visualization tool Tableau. It is not primarily a data wrangling tool. However, it offers some data preparation and cleaning features to aid data wrangling. The refined data can be used to create interactive data visualizations that deliver useful insights for enterprise growth.

Key Features

- Engaging Platform: The reports and dashboards generated through Tableau are visually appealing. They help you identify patterns and trends in your datasets, which clarifies where further data wrangling is needed.

- Enhanced Security: Tableau ensures data security through various authentication and authorization methods. It also supports Active Directory and the Kerberos authentication protocol for secure communication between different data systems.

- Real-time Collaboration: Using Tableau, you can share your reports, dashboards, and visualization workbooks in real-time with your team members. This speeds up the data analytics process and facilitates quick decision-making.

Open Source or Commercial

It is a commercial tool.

Community Support and Documentation

The Tableau community is very active and responds quickly to queries. The documentation is well-written and explains all its features in detail.

9. Tamr

Tamr is a data curation platform that helps you resolve data management problems. Its Unify API allows you to consolidate your data effectively. You can then clean this consolidated data using Tamr's human-guided machine learning feature.

Key Features

- Master Data Management: You can leverage Tamr's AI and ML capabilities to manage your master data. This helps convert your data into a standard, consistent format.

- Library of Pre-trained ML Models: Tamr offers a vast library of pre-trained ML models. You can use them to curate different datasets efficiently at scale with minimal time and effort.

- LLM-based Semantic Comparison: Semantic comparison is a method for comparing similarities and differences between grouped entities. Tamr allows you to leverage LLMs for semantic comparison of data. This helps you understand the relationship between various data records.

Open Source or Commercial

It is a commercial tool.

Community Support and Documentation

Tamr has limited community support. However, it provides exhaustive documentation and tutorials that help you understand its services.

10. Astera

Astera is software that offers data management services. Some of its features, such as the data cleanse transformation object and the data quality mode option, contribute to data wrangling.

Key Features

- AI-based Data Extraction: Astera allows you to use AI tools to extract data from source systems. It automates data collection and saves the time required to gather data manually from multiple sources.

- Parallel Processing Engine: It uses a parallel processing engine to handle large datasets efficiently. This facilitates faster completion of complex data tasks such as data wrangling.

- Handles Unstructured Data: The Astera ReportMiner assists you in extracting unstructured data formats such as PDF, RTF, or PRN. It uses AI to create extraction templates and imports data from unstructured files.

Open Source or Commercial

It is a commercial tool.

Community Support and Documentation

Astera has a large user community that actively contributes to troubleshooting issues and shares knowledge to improve the platform. The documentation is comprehensive, with detailed explanations of all Astera products and services.

Features to Look Out For in Data Wrangling Tools

Here are some features that you should look for while selecting tools for data wrangling:

Data Cleaning Capabilities

Data cleaning is a crucial step in the data wrangling process. It involves identifying and fixing missing values, outlier data points, or unsupported data formats. You should select a data wrangling tool that can effectively perform all these tasks to clean your data.
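The three cleaning tasks above can be sketched in a few lines of Python. This minimal example is tool-neutral and makes several assumptions, labeled in the comments: the sensor readings are hypothetical, missing values are imputed with the median, outliers are flagged when they sit more than five median absolute deviations from the median, and ambiguous slash dates are read day-first.

```python
from datetime import datetime
from statistics import median

# Hypothetical sensor readings with a gap, an outlier, and mixed date formats.
readings = [
    {"date": "2024-01-05", "value": 10.2},
    {"date": "05/01/2024", "value": None},
    {"date": "2024-01-07", "value": 9.8},
    {"date": "2024-01-08", "value": 250.0},
    {"date": "2024-01-09", "value": 10.5},
]

# Assumed formats in the source; "%d/%m/%Y" reads slash dates day-first.
DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y")

def parse_date(text):
    """Try each known format; return None for unsupported formats."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(text, fmt).date()
        except ValueError:
            continue
    return None

values = [r["value"] for r in readings if r["value"] is not None]
mid = median(values)
# Median absolute deviation: a robust estimate of spread.
mad = median(abs(v - mid) for v in values)

cleaned = []
for r in readings:
    value = mid if r["value"] is None else r["value"]    # impute missing with median
    is_outlier = mad > 0 and abs(value - mid) / mad > 5  # assumed threshold of 5
    cleaned.append({"date": parse_date(r["date"]), "value": value, "outlier": is_outlier})

for row in cleaned:
    print(row)
```

Median-based statistics are used because a single extreme value barely shifts them. With a mean and standard deviation, the 250.0 reading would inflate the very spread it is measured against and could escape detection.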

Data Transformation

Your data wrangling tool should also work as an advanced data transformation tool. It should support basic transformations like filtering, sorting, joining, and pivoting. You should also check whether the chosen tool supports complex data manipulation and enrichment functions.
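Joining and enrichment are often the least obvious of these transformations. Here is a minimal Python sketch of a left join that enriches hypothetical orders with customer attributes and then sorts the result; the table contents and the `left_join` helper are illustrative, not any tool's API.

```python
# Hypothetical orders and a customer lookup table to enrich them with.
orders = [
    {"order_id": 1, "customer_id": "c2", "total": 40.0},
    {"order_id": 2, "customer_id": "c1", "total": 15.5},
    {"order_id": 3, "customer_id": "c9", "total": 99.0},  # no matching customer
]
customers = {
    "c1": {"name": "Acme Ltd", "segment": "SMB"},
    "c2": {"name": "Globex", "segment": "Enterprise"},
}

def left_join(rows, lookup, key):
    """Enrich each row with lookup fields; unmatched rows get None values.

    Assumes lookup is non-empty and every entry has the same fields.
    """
    empty = {field: None for field in next(iter(lookup.values()))}
    return [{**row, **lookup.get(row[key], empty)} for row in rows]

# Join, then sort by order total, largest first.
enriched = sorted(left_join(orders, customers, "customer_id"),
                  key=lambda r: r["total"], reverse=True)
for row in enriched:
    print(row)
```

A left join keeps every order even when no customer matches, so unmatched rows surface as `None` values instead of silently disappearing, which is usually what you want when auditing data quality.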

Handling Various Data Formats

Data wrangling involves processing data from different sources, which may not share a uniform format. For seamless data wrangling, your tool should be able to handle structured, semi-structured, and unstructured data. Most tools handle common data formats, such as CSV, Excel, JSON, or XML. Some data wrangling tools can also handle unstructured files such as PDFs, images, audio, or video.
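Handling multiple formats usually means parsing each one into a shared record shape before any cleaning or transformation happens. A minimal Python sketch with the standard library, using hypothetical data that arrives as CSV, JSON, and XML:

```python
import csv
import io
import json
import xml.etree.ElementTree as ET

# The same kind of hypothetical records arriving in three formats.
CSV_TEXT = "id,city\n1,Oslo\n2,Lima\n"
JSON_TEXT = '[{"id": 3, "city": "Pune"}]'
XML_TEXT = "<rows><row id='4' city='Accra'/></rows>"

def from_csv(text):
    """CSV values arrive as strings, so cast id to int."""
    return [{"id": int(r["id"]), "city": r["city"]} for r in csv.DictReader(io.StringIO(text))]

def from_json(text):
    return [{"id": int(r["id"]), "city": r["city"]} for r in json.loads(text)]

def from_xml(text):
    """Read fields from the attributes of each <row> element."""
    return [{"id": int(e.get("id")), "city": e.get("city")}
            for e in ET.fromstring(text).iter("row")]

combined = from_csv(CSV_TEXT) + from_json(JSON_TEXT) + from_xml(XML_TEXT)
print(combined)
```

Casting `id` to `int` in every parser matters: CSV and XML attributes yield strings while JSON yields numbers, and mixed types would break later joins and sorts.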

Scalability and Performance

You should opt for tools that scale well and maintain performance as your data workload grows. Many tools offer cloud-based services and distributed processing capabilities to deal with massive datasets. For optimized performance, look for tools with a parallel processing architecture and caching mechanisms that reduce query execution latency.
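Caching avoids re-running an identical, expensive query. Here is a minimal sketch of the idea with Python's `functools.lru_cache`; the hypothetical `run_query` function and its simulated delay stand in for real query execution.

```python
import time
from functools import lru_cache

CALLS = {"count": 0}  # counts how often the "query" actually executes

@lru_cache(maxsize=128)
def run_query(sql):
    """Stand-in for an expensive query; the sleep simulates execution time."""
    CALLS["count"] += 1
    time.sleep(0.05)
    return f"results for {sql!r}"

run_query("SELECT region, SUM(amount) FROM sales GROUP BY region")
run_query("SELECT region, SUM(amount) FROM sales GROUP BY region")  # served from cache
print(CALLS["count"], run_query.cache_info().hits)  # 1 1
```

A production cache also needs an invalidation strategy, because cached results go stale as soon as the underlying data changes.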

Integration

Data integration is a primary step in the data wrangling process. It involves collecting data from multiple sources and consolidating it in a unified destination. Before you can wrangle your data into a usable format, you should first integrate it. You may opt for a data integration tool that connects directly to your data sources or runs integrations periodically on a schedule.

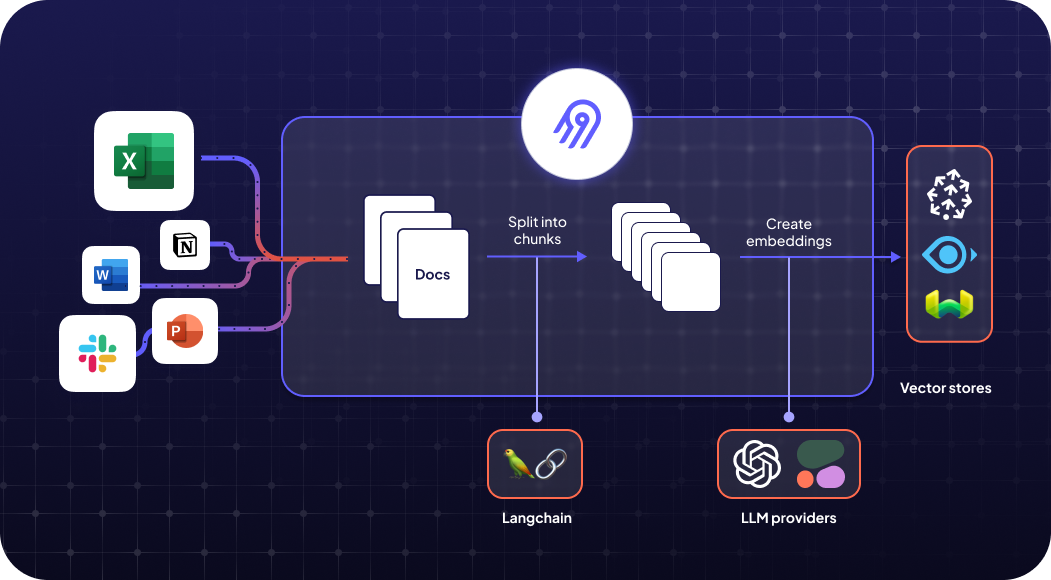

Using cloud-based data integration tools is another option for successful data wrangling. You can use tools like Airbyte to extract data from various sources and load it into the data wrangling tool of your choice. Airbyte offers an extensive library of 550+ pre-built connectors that can be used as data sources and destinations. If the connector you need is not available, you can build your own using Airbyte’s Connector Development Kit (CDK).

In addition, you can use Airbyte’s AI Assistant, which automatically configures several fields in the Connector Builder and speeds up connector development. The assistant scans the API documentation you provide, pre-fills configuration fields, and offers intelligent suggestions to fine-tune your connector’s configuration.

Some key features of Airbyte are as follows:

- Open-source: In addition to cloud services, Airbyte provides an open-source version for data integration. You can leverage it to integrate data without paying licensing costs. It also has an active community that regularly contributes to its development.

- Change Data Capture: The change data capture (CDC) feature allows you to continuously update the target dataset to reflect the changes made at the source. This helps you keep your source and destination data systems in sync.

- PyAirbyte: PyAirbyte is an open-source Python library offered by Airbyte. It enables you to import data from multiple sources into your Python ecosystem via Airbyte-supported connectors.

- Record Change History: This feature enhances sync reliability by automatically addressing problematic rows during transit. Airbyte ensures that data synchronization remains uninterrupted, even when issues such as oversized records or invalid entries occur.

- GenAI Workflows: Airbyte enables you to manage AI workflows by loading unstructured data into vector store destinations like Pinecone. You can also integrate the platform with RAG tools to ground LLM outputs in your own data.

- Data Security: The platform provides robust data security features like single sign-on (SSO), role-based access control, and encryption mechanisms. It also helps you comply with GDPR, HIPAA, and CCPA data regulations.

- Retrieval-Based LLM Applications: Airbyte empowers you to develop retrieval-based conversational interfaces on top of your synced data using frameworks like LangChain or LlamaIndex. This enables you to quickly access required data through user-friendly queries.

- Self-Managed Enterprise: Airbyte’s Self-Managed Enterprise offers a flexible and scalable data ingestion solution that easily accommodates your evolving business requirements. It provides you with enhanced data governance, multitenancy and access management, enterprise source connectors, and column hashing to safeguard your sensitive data.
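To see what the change data capture (CDC) feature described above does to a destination, here is a minimal Python sketch that replays insert, update, and delete events onto a target table held in a dictionary. The event shape is hypothetical and is not Airbyte's wire format; it only illustrates how replaying source changes in order keeps the destination in sync.

```python
# Hypothetical change events, delivered in commit order.
# This event shape is illustrative, not Airbyte's actual format.
events = [
    {"op": "insert", "id": 1, "data": {"name": "Ada", "plan": "free"}},
    {"op": "insert", "id": 2, "data": {"name": "Alan", "plan": "pro"}},
    {"op": "update", "id": 1, "data": {"plan": "pro"}},
    {"op": "delete", "id": 2},
]

def apply_changes(target, changes):
    """Replay insert/update/delete events so target mirrors the source table."""
    for event in changes:
        key = event["id"]
        if event["op"] == "insert":
            target[key] = dict(event["data"])
        elif event["op"] == "update":
            target.setdefault(key, {}).update(event["data"])
        elif event["op"] == "delete":
            target.pop(key, None)
        else:
            raise ValueError(f"unknown operation: {event['op']}")
    return target

replica = apply_changes({}, events)
print(replica)  # {1: {'name': 'Ada', 'plan': 'pro'}}
```

Because each event is applied in order, only the changes travel from source to destination instead of a full reload on every sync.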

Conclusion

Data wrangling is a critical process that improves data quality by standardizing data. This contributes to advanced and efficient data analytics, which can be used to make insightful data-driven decisions.

Data wrangling tools play an important role in highly data-dependent fields such as healthcare, finance, and marketing, helping you automate the tasks that standardize datasets. They save time and resources that would otherwise go into resolving data quality issues. By choosing from the top data wrangling tools, you can clean and transform your data according to your requirements.

FAQs

Is ETL part of data wrangling?

Yes, ETL is a part of data wrangling. Both processes involve data transformation but differ from each other in some aspects. Data wrangling involves processing structured, semi-structured, and unstructured data. It is exploratory and is performed to discover new trends in data.

ETL tools, on the other hand, deal mostly with structured data. They are used to convert data into a consistent format for analytics. Thus, ETL can be considered a subset of the data wrangling process.

Which is the best data wrangling tool?

There are several versatile, high-performing data wrangling tools. These include platforms such as Talend, Alteryx, Astera, and Microsoft Power Query. Depending on your technical expertise and budget, you can use any of these tools for data wrangling.

When to use a data wrangling tool?

You should use a data wrangling tool when you want to reduce the time spent in data preparation. This speeds up the data analysis process to generate faster data insights for your business growth.
