11 Best Web Scraping Tools for 2026


Web scraping has emerged as one of the most widely adopted methods organizations use to extract publicly available information in different data formats. Demand for robust web scraping tools has grown steadily as more businesses look to gather data to improve their products and services.

This article will take you through some of the best web scraping tools and help you choose a platform that aligns well with your data requirements. Let’s start by understanding what web scraping tools are.

What are Web Scraping Tools? Why are They Important?

Web scraping, also known as web data harvesting, is the automated process of extracting data from websites. It is especially useful for projects that require extracting data from numerous web pages.

Web scraping tools help you collect data from different websites efficiently. You can collate this information in a centralized repository, such as a local database or spreadsheet, until you are ready to use it.
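As a rough illustration of collating scraped records into a local database, here is a minimal sketch using Python's built-in `sqlite3` module with an in-memory database. The `products` table, its columns, and the sample records are hypothetical placeholders for whatever your scraper actually returns.

```python
import sqlite3


def store_records(conn: sqlite3.Connection, records: list[dict]) -> int:
    """Insert scraped records into a hypothetical `products` table; return the row count."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS products (name TEXT, price REAL, source_url TEXT)"
    )
    # Named placeholders map each record's dict keys onto the table columns
    conn.executemany(
        "INSERT INTO products (name, price, source_url) "
        "VALUES (:name, :price, :source_url)",
        records,
    )
    conn.commit()
    return conn.execute("SELECT COUNT(*) FROM products").fetchone()[0]


if __name__ == "__main__":
    # Hypothetical records standing in for real scraper output
    scraped = [
        {"name": "Widget", "price": 9.99, "source_url": "https://example.com/widget"},
        {"name": "Gadget", "price": 24.50, "source_url": "https://example.com/gadget"},
    ]
    with sqlite3.connect(":memory:") as conn:
        print(store_records(conn, scraped))
```

Swapping `":memory:"` for a file path keeps the data between runs; a spreadsheet-friendly CSV export is an equally valid staging format.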

With this data, you can conduct market research and competitor analysis, build machine learning models for predictive analysis, or use business intelligence tools to create interactive dashboards. These downstream steps turn raw data into visualizations and formats that all your stakeholders can understand.

While web scraping can be done manually, it is usually better to rely on tools that automate the process. They let you access large volumes of data quickly, saving valuable time. A typical tool sends HTTP requests to a website’s server, downloads the HTML or XML content, and parses it to extract the information you need.
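The request, download, and parse cycle described above can be sketched with Python's standard library alone. This is a simplified illustration rather than how any particular tool works internally: `fetch_html` performs the HTTP GET, and a small `HTMLParser` subclass pulls out the page title and link targets. The User-Agent string is a placeholder, and the demo parses an inline HTML string so it runs without network access.

```python
from html.parser import HTMLParser
from urllib.request import Request, urlopen


def fetch_html(url: str, timeout: float = 10.0) -> str:
    """Send an HTTP GET request and return the response body as text."""
    # Placeholder User-Agent; identify your scraper honestly in real use
    req = Request(url, headers={"User-Agent": "example-scraper/0.1"})
    with urlopen(req, timeout=timeout) as resp:
        charset = resp.headers.get_content_charset() or "utf-8"
        return resp.read().decode(charset, errors="replace")


class LinkAndTitleParser(HTMLParser):
    """Collects the <title> text and every <a href="..."> value in a page."""

    def __init__(self) -> None:
        super().__init__()
        self.title = ""
        self.links: list[str] = []
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        if tag == "title":
            self._in_title = True
        elif tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def parse_page(html: str) -> dict:
    """Parse raw HTML into a small structured record."""
    parser = LinkAndTitleParser()
    parser.feed(html)
    parser.close()
    return {"title": parser.title.strip(), "links": parser.links}


if __name__ == "__main__":
    sample = "<html><head><title>Demo</title></head><body><a href='/a'>A</a></body></html>"
    print(parse_page(sample))
    # With network access: print(parse_page(fetch_html("https://example.com/")))
```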

Take a look at some of the well-known web scraping tools in detail to make an informed decision for your business.

11 Best Web Scraping Tools

While web scraping tools offer a host of features that reduce the burden on your existing data processing capabilities, it is vital to assess your organization’s data requirements before choosing one. Here are some of the best web scraping tools available today!

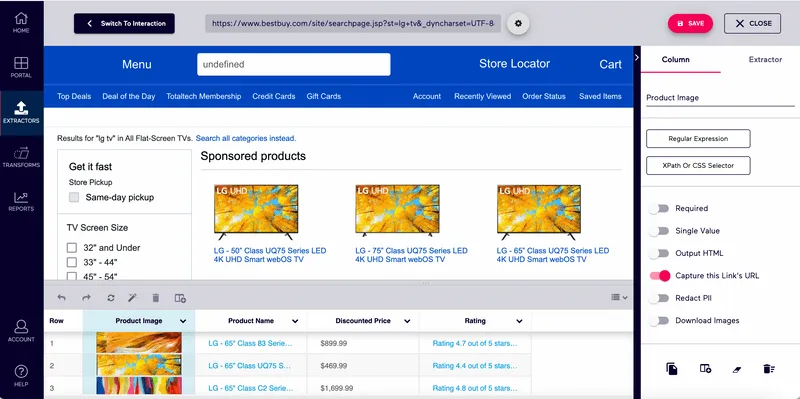

1. Octoparse

Octoparse is a flexible web scraping tool designed for a wide range of users. Even without extensive coding experience, you can use Octoparse to handle large amounts of data effectively. Because it is a cloud-based solution with built-in cloud storage, it can accommodate varying workloads.

Key Features

- Visual Point-and-Click Interface: With Octoparse’s easy-to-use visual interface, all you have to do is click and select the desired data to initiate the web scraping process.

- Handling Advanced Technologies: Octoparse is capable of scraping and fetching data from websites that use technologies like JavaScript and Ajax.

- Anti-Scraping Mechanism: This web scraping tool effectively deals with CAPTCHA and other anti-scraping mechanisms on websites.

- Scheduled Scraping: You can schedule scraping frequencies and duration with Octoparse and set alerts to receive updates on your data.

Pricing

Octoparse provides a free plan to get started, but it has certain limitations, such as a maximum of ten tasks. The Standard plan starts at $75 per month, and the Professional plan costs $208 per month. You can set up a customized Enterprise plan by contacting their sales team.

Pros

- Superior Scraping Capabilities: It can handle websites that use dynamic technologies such as Ajax and JavaScript, enabling thorough data extraction.

- Anti-Scraping Mechanism: To ensure continuous scraping sessions, Octoparse efficiently handles CAPTCHA and other anti-scraping mechanisms.

- Scheduled Scraping: To increase automation and efficiency, users can set up scraping activities in advance and receive alerts when there are modifications.

Cons

- Pricing Restrictions: Octoparse has a free plan, but it restricts the number of tasks and the features you can use.

2. ScraperAPI

ScraperAPI is a user-friendly web scraping tool designed to crawl through large data volumes. It exposes a single API endpoint to which you send GET requests (HTTP requests that retrieve data). Each request carries two query string parameters, your API key and the URL you want to scrape, and ScraperAPI returns the HTML response for that URL. Remember to append any additional query parameters to the end of the ScraperAPI URL.
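The request shape described above can be sketched with Python's standard library. This only builds the URL; the endpoint `https://api.scraperapi.com/` and the `api_key` and `url` parameter names reflect ScraperAPI's public documentation as we understand it, so verify them against the current docs. `YOUR_API_KEY` is a placeholder, and `extra_params` is a hypothetical hook for any optional parameters you append.

```python
from urllib.parse import urlencode

# Assumed endpoint based on ScraperAPI's public docs; verify before use
SCRAPERAPI_ENDPOINT = "https://api.scraperapi.com/"


def build_scraperapi_url(api_key: str, target_url: str, **extra_params: str) -> str:
    """Return the GET URL that asks ScraperAPI to fetch `target_url` for you."""
    params = {"api_key": api_key, "url": target_url}
    # Optional parameters are appended after the two required ones
    params.update(extra_params)
    # urlencode percent-encodes the target URL so its own ? and & are not
    # confused with ScraperAPI's query parameters
    return f"{SCRAPERAPI_ENDPOINT}?{urlencode(params)}"


if __name__ == "__main__":
    print(build_scraperapi_url("YOUR_API_KEY", "https://example.com/products?page=2"))
```

Sending the request is then an ordinary GET to the returned URL with any HTTP client.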

Key Features

- JavaScript Rendering: ScraperAPI can render pages that load their content with JavaScript, simplifying extraction from dynamic websites.

- Geo-located Proxy Rotation: Geo-located rotating proxies allow you to circumvent geographic restrictions on data. With ScraperAPI, you can obtain data even from websites where anti-scraping features are enabled.

- Specialized Proxy Pools: Proxies streamline the scraping process and reduce the chance of being blocked or censored by websites while you crawl their data. ScraperAPI gives you access to specialized proxy pools that handle CAPTCHAs and browsers for specific scraping types, such as search engine or social media scraping.

- Auto Parsing: ScraperAPI can automatically parse data from Amazon, Google Search, and Google Shopping and return it to you as structured JSON.

Pricing

You can choose between three monthly pricing plans: Hobby at $49, Startup at $149, and Business at $299. There is a fourth Enterprise plan that you can customize for your organization.

Pros

- JavaScript Rendering: ScraperAPI makes data extraction easier by rendering websites that rely on JavaScript.

- Geo-located rotating proxies: These are provided in order to get around geographical limitations and anti-scraping protocols.

- Auto Parsing: To speed up the scraping process, ScraperAPI automatically parses data from supported sources into JSON.

Cons

- Restricted API Queries: Pricing plans limit the number of API requests and concurrent requests, which may affect scalability.

3. ParseHub

ParseHub is another web scraping tool that lets you extract data from websites without coding. Its simple user interface helps you collect data from multiple sources. The platform offers ParseHub expressions, which work similarly to the regular expressions used in programming languages. You can also add a conditional (if) command to filter results during the scraping process, for example, to keep only the top-rated reviews of a product or to scrape specific values from certain web pages. This removes the need to refine the data further after collection, saving valuable time.
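ParseHub configures its conditional commands in its visual interface, so the following is not ParseHub code. It is a plain Python sketch of the same idea: apply an if-condition and a regular expression while records are extracted, so only top-rated reviews survive. The `rating_text` field, its "4.5 out of 5" format, and the 4-star threshold are all hypothetical.

```python
import re

# Hypothetical pattern for ratings written like "4.5 out of 5"
RATING_RE = re.compile(r"(\d+(?:\.\d+)?)\s*out of\s*5")


def top_rated(reviews: list[dict], min_rating: float = 4.0) -> list[dict]:
    """Keep only reviews whose rating text parses to at least `min_rating`."""
    kept = []
    for review in reviews:
        match = RATING_RE.search(review.get("rating_text", ""))
        # The condition is applied during extraction, so no cleanup pass is needed later
        if match and float(match.group(1)) >= min_rating:
            kept.append({**review, "rating": float(match.group(1))})
    return kept


if __name__ == "__main__":
    sample = [
        {"author": "A", "rating_text": "4.5 out of 5"},
        {"author": "B", "rating_text": "2 out of 5"},
    ]
    print(top_rated(sample))
```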

Key Features

- Infinite Scroll: On pages with infinite scroll, additional content loads automatically as you reach the bottom, so you can keep scrolling without navigating to a next page. This is a form of lazy loading, and ParseHub can scroll through such pages to extract the content for you.

- IP Rotation: ParseHub distributes web crawling requests across multiple IP addresses. Rotating IPs while fetching data helps you avoid hitting rate limits and reduces the chance that websites block your IPs, since each request can come from a different IP address.

- REST API: This web scraping tool’s API is designed with REST principles, utilizing HTTP verbs and predictable URLs whenever possible. It allows you to control and execute your scraping projects with ease.

- Image Scraping: Image scraping is a method that involves extracting image data from online sources in formats like JPEG, PNG, and GIF. ParseHub can assist you in scraping images from social media platforms and e-commerce websites by extracting the URL for each image on a webpage.
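ParseHub performs IP rotation internally, but the general round-robin technique is easy to sketch with Python's standard library. The proxy addresses below are hypothetical (203.0.113.0/24 is a range reserved for documentation), and `opener_for` is an illustrative helper, not part of any tool's API.

```python
import itertools
from urllib.request import ProxyHandler, build_opener

# Hypothetical proxy pool; replace with proxies you are authorized to use
PROXIES = [
    "http://203.0.113.10:8080",
    "http://203.0.113.11:8080",
    "http://203.0.113.12:8080",
]


def proxy_cycle(proxies: list[str]):
    """Yield proxies in round-robin order so consecutive requests use different IPs."""
    return itertools.cycle(proxies)


def opener_for(proxy: str):
    """Build a urllib opener that routes HTTP and HTTPS traffic through `proxy`."""
    return build_opener(ProxyHandler({"http": proxy, "https": proxy}))


if __name__ == "__main__":
    rotation = proxy_cycle(PROXIES)
    for n in range(1, 5):
        url = f"https://example.com/page/{n}"
        proxy = next(rotation)
        opener = opener_for(proxy)  # opener.open(url, timeout=10) would send the request
        print(url, "->", proxy)
```

Round-robin is the simplest policy; production tools typically also drop proxies that fail or get blocked.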

Pricing

ParseHub has a free plan that you can download easily. There are two paid monthly plans: Standard at $189 and Professional at $599. You can also choose a customized ParseHub Plus plan to deploy it across your entire enterprise.

Pros

- IP Rotation: In order to avoid IP blocking and guarantee continuous scraping sessions, IP rotation is utilized.

- REST API: Users can effectively manage and carry out scraping tasks with ParseHub's RESTful API.

- Image Scraping: ParseHub can extract image data, such as image URLs, from a wide range of websites.

Cons

- Expensive Plans: Although ParseHub has a free plan, its commercial plans, especially for enterprises, can be pricey.

4. ScrapingBee

ScrapingBee is one of the best-known web scraping APIs. It runs JavaScript on web pages and automatically rotates proxies with each request, helping you access HTML pages without getting blocked. ScrapingBee offers different APIs for a wide range of tasks, such as extracting customer reviews or comparing product prices. It also provides a specialized API for scraping Google search results.

Key Features

- SERP Scraping: Scraping a Search Engine Results Page (SERP) can be challenging due to the presence of rate limits. ScrapingBee simplifies this cumbersome process by offering you a Google search API.

- Result Export Options: ScrapingBee supports exporting results in JSON, CSV, and XML formats. It is also compatible with other web scraping platforms, such as ParseHub and Octoparse, and these integrations help you scrape more data from other sources. You can export your data in the format you need and use the file for further data operations.

- Capturing Screenshots: ScrapingBee has a dedicated Screenshots API that captures full-page or partial screenshots of a website, so you can capture just a portion of the page instead of scraping its HTML.
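Exporting is handled by ScrapingBee's own options; the sketch below is a generic, local illustration (not ScrapingBee's API) of turning scraped records into JSON and CSV with Python's standard library. The record fields are hypothetical, and XML is left out for brevity.

```python
import csv
import io
import json


def to_json(records: list[dict]) -> str:
    """Serialize scraped records as a pretty-printed JSON array."""
    return json.dumps(records, indent=2)


def to_csv(records: list[dict]) -> str:
    """Serialize scraped records as CSV, using the first record's keys as the header."""
    if not records:
        return ""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=list(records[0].keys()))
    writer.writeheader()
    writer.writerows(records)
    return buffer.getvalue()


if __name__ == "__main__":
    rows = [{"product": "Widget", "price": 9.99}, {"product": "Gadget", "price": 24.5}]
    print(to_json(rows))
    print(to_csv(rows))
```

Writing either string to a file gives you an artifact you can load into a spreadsheet, database, or data warehouse.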

Pricing

ScrapingBee offers four monthly pricing plans that have varying API credits and concurrent requests. The Freelance and Startup plans commence at $49 and $99, respectively. The Business plan charges you $249, while the Business + plan starts at $599.

Pros

- Options for Exporting Results: It supports exporting results in CSV, XML, and JSON formats, which makes data management more versatile.

- Screenshots API: ScrapingBee offers a dedicated Screenshots API for taking full-page and partial screenshots of websites.

- Seamless Proxy Management: To provide dependable access to HTML pages, the program automatically rotates proxies with each request.

Cons

- Price Variability: It can be difficult to select the best plan for a given set of requirements because different pricing plans may have differing API credits and concurrent requests.

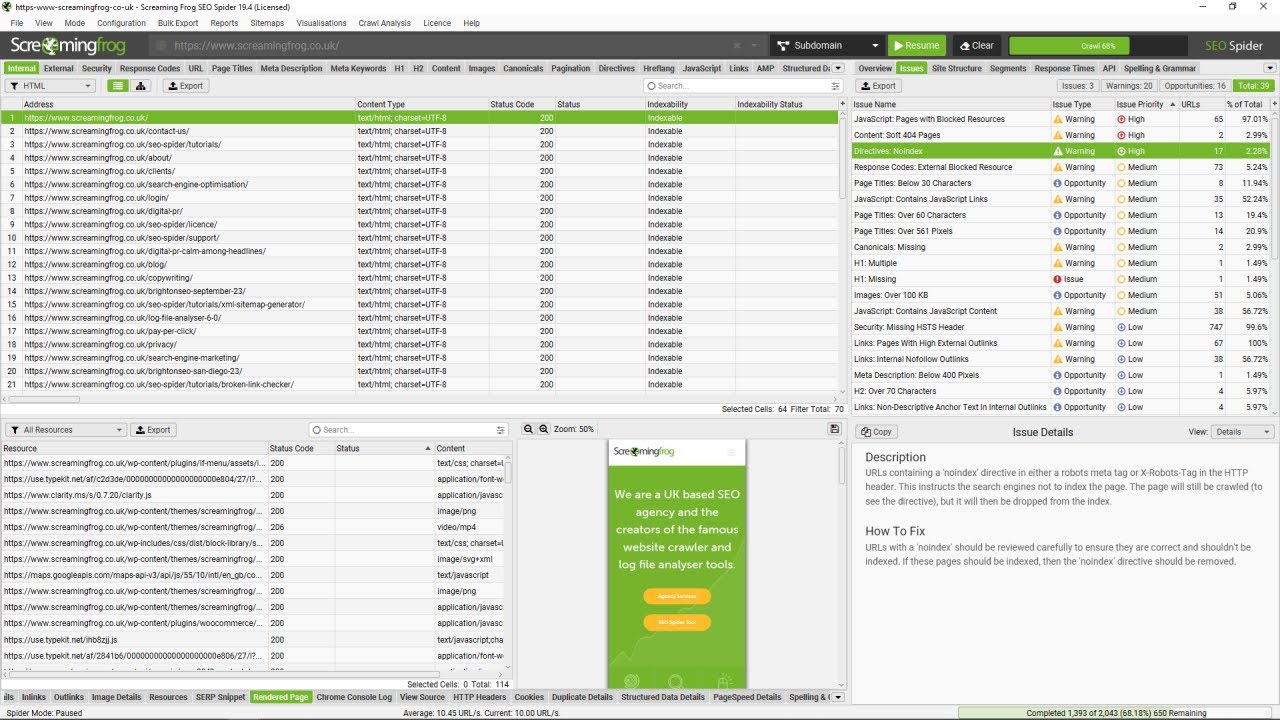

5. Screaming Frog

Screaming Frog is a feature-rich SEO tool that can be used for auditing and analyzing websites, as well as for web scraping.

Pros

- Beginners may easily use it thanks to its user-friendly UI.

- Gives thorough information about the links, structure, and SEO components of websites.

- Supports several operating systems, including Linux, macOS, and Windows.

Cons

- The free version has limited capabilities.

- Advanced features may require technical know-how.

6. Scrapy

Scrapy is an open-source Python framework for web crawling and scraping that is ideal for large-scale scraping tasks.

Pros

- Effective and scalable for managing large-scale scraping jobs.

- Provides a wealth of documentation along with robust community assistance.

- Provides support for asynchronous requests to improve speed.

Cons

- Initial installation and setup can be difficult.

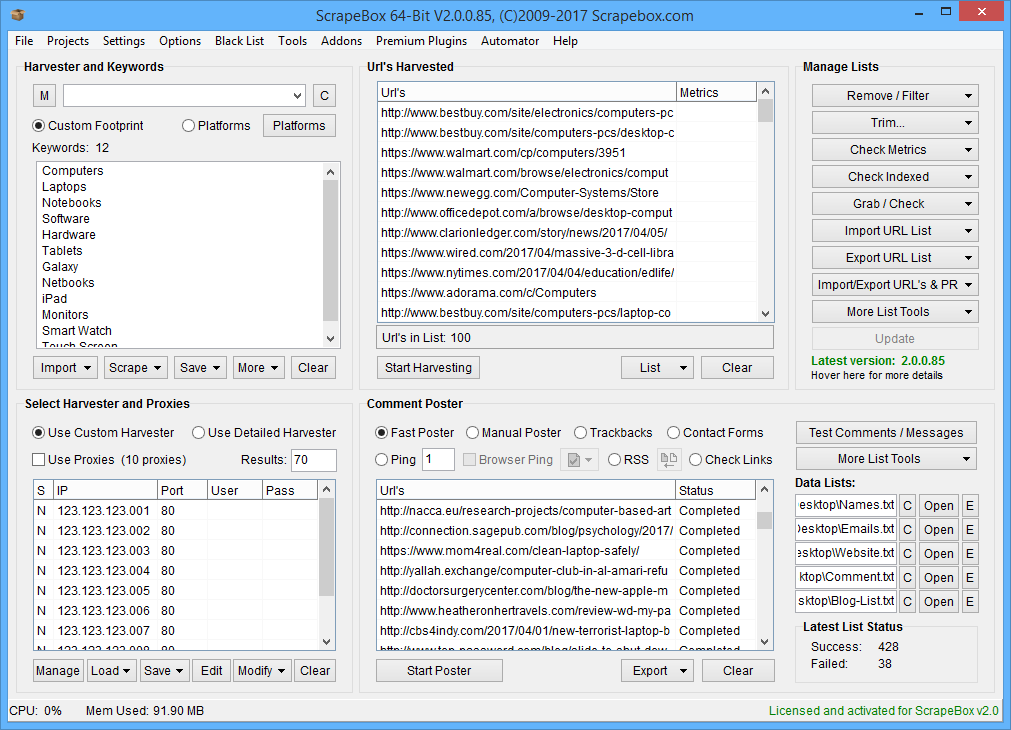

7. Scrapebox

Scrapebox is a flexible tool with a wide range of scraping functions that make it perfect for a variety of online scraping applications.

Pros

- Provides a variety of scraping functions, such as collecting proxies, URLs, and keywords.

- Facilitates CAPTCHA solving and proxy rotation.

- Reasonably priced compared with other web scraping tools.

Cons

- Only compatible with Windows operating systems.

- Its dated user interface may be less intuitive to use.

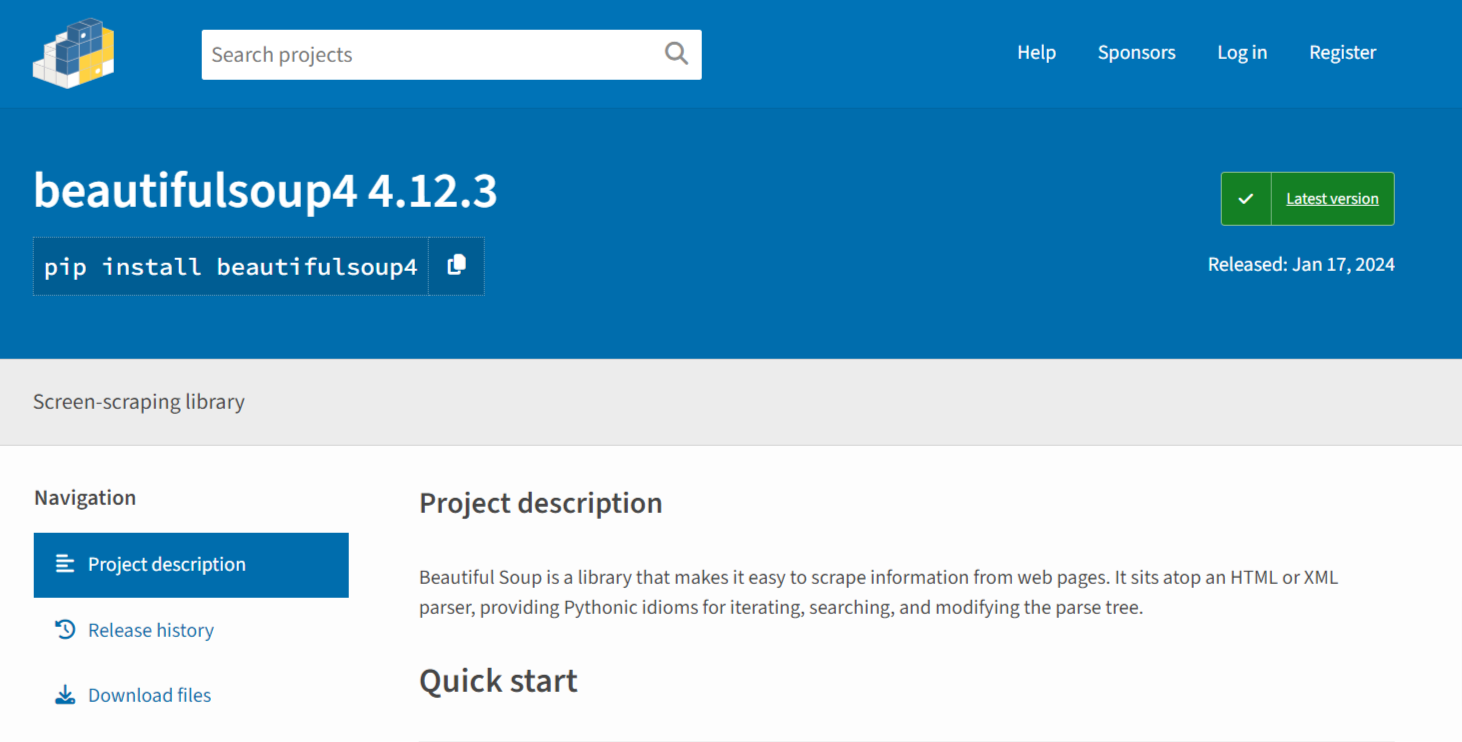

8. BeautifulSoup

BeautifulSoup is a popular Python library for extracting data from HTML and XML files, well known for its ease of use and wide range of parsing capabilities.

Pros

- Offers strong capabilities for exploring and parsing HTML content.

- Compatible with many different Python libraries, which expands its capabilities.

Cons

- In comparison to other scraping frameworks, it lacks sophisticated functionality.

- Limited support for websites with a lot of JavaScript.
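A minimal BeautifulSoup sketch follows. It parses an inline, hypothetical product listing so it runs offline; in practice you would first download the page with an HTTP client, since BeautifulSoup only parses markup and does not fetch pages or execute JavaScript. It uses Python's built-in `"html.parser"` backend; `"lxml"` is an optional, faster alternative if installed.

```python
from bs4 import BeautifulSoup

# Hypothetical markup standing in for a downloaded product page
HTML = """
<ul id="products">
  <li class="product"><h2>Widget</h2><span class="price">$9.99</span></li>
  <li class="product"><h2>Gadget</h2><span class="price">$24.50</span></li>
</ul>
"""


def extract_products(html: str) -> list[dict]:
    """Extract product names and prices using BeautifulSoup's CSS selector support."""
    soup = BeautifulSoup(html, "html.parser")
    products = []
    for item in soup.select("li.product"):
        name = item.find("h2").get_text(strip=True)
        price = item.select_one("span.price").get_text(strip=True)
        # Strip the currency symbol before converting to a number
        products.append({"name": name, "price": float(price.lstrip("$"))})
    return products


if __name__ == "__main__":
    print(extract_products(HTML))
```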

9. Import.io

Import.io is a cloud-based platform that provides web scraping and data extraction services without requiring any coding knowledge.

Pros

- Non-technical users can use it because no coding knowledge is needed.

- Provides an easy-to-use interface for building scraping agents.

- Offers tools for automating and scheduling scraping jobs.

Cons

- Fewer customization options than programming-based solutions.

- Pricing plans can become expensive for larger-scale scraping projects.

10. Nanonets

Nanonets is an AI-powered platform for extracting data from images and documents, with support for web scraping.

Pros

- Enables precise data extraction from documents and photos.

- Offers customizable models to meet unique data extraction requirements.

- The model can be trained quickly and seamlessly.

Cons

- Not suitable for many web scraping jobs, since it is limited to extracting data from images and documents.

- Pricing varies with usage and customization needs.

11. Common Crawl

Common Crawl is a nonprofit that publishes freely available datasets of web crawl data, so anybody can access and analyze a vast amount of online information.

Pros

- Provides free access to petabytes of web crawl data for analysis and study.

- Supports many use cases, such as machine learning, data analysis, and academic research.

Cons

- To use the dataset and APIs efficiently, technical competence is required.

- Limited control over the freshness and content of the crawl data.

Factors to Consider when Scraping the Web

1. Legality

Confirm whether web scraping is allowed in your jurisdiction and by the websites you target; it may be prohibited by a website’s terms of service or by data protection laws. Some jurisdictions forbid scraping without permission because of copyright or privacy concerns. To avoid legal repercussions, always abide by the terms of use and any applicable laws.

2. Rate Limiting

Implement rate limiting so that you do not send the target website’s server an excessive number of requests. This lowers the chance that your IP address will be blocked for suspicious activity and helps keep the scraping process running smoothly.
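One simple form of rate limiting is to enforce a minimum interval between consecutive requests. The sketch below is an illustrative class (not taken from any particular library); the clock and sleep functions are injectable so the timing logic can be tested without real delays, and the 0.2-second interval in the demo is an arbitrary example value.

```python
import time
from typing import Callable


class MinIntervalLimiter:
    """Enforces a minimum delay between consecutive requests to a site."""

    def __init__(
        self,
        min_interval: float,
        clock: Callable[[], float] = time.monotonic,
        sleep: Callable[[float], None] = time.sleep,
    ) -> None:
        self.min_interval = min_interval
        self._clock = clock
        self._sleep = sleep
        self._last = None  # timestamp of the previous request, if any

    def wait(self) -> float:
        """Block until the next request is allowed; return how long we slept."""
        now = self._clock()
        delay = 0.0
        if self._last is not None:
            delay = max(0.0, self.min_interval - (now - self._last))
            if delay > 0:
                self._sleep(delay)
        self._last = self._clock()  # record when this request is actually sent
        return delay


if __name__ == "__main__":
    limiter = MinIntervalLimiter(min_interval=0.2)  # at most about 5 requests per second
    for page in range(1, 4):
        slept = limiter.wait()
        # Fetch the page here, e.g. with urllib.request.urlopen(...)
        print(f"request {page} sent after waiting {slept:.2f}s")
```

A fixed interval is the most conservative policy; token-bucket limiters allow short bursts while keeping the same average rate.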

3. Website Structure

Before scraping a website, analyze its structure to ensure the reliability of your script. Changes in layout or coding can impact scraping accuracy, so understanding the website's architecture beforehand is crucial for successful data extraction.
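One way to notice layout changes early is to validate scraped records against the fields your selectors are expected to fill and fail loudly when too many come back empty. This is a minimal sketch under assumed conditions: the field names, the `LayoutChangedError` class, and the 10% tolerance are hypothetical choices.

```python
REQUIRED_FIELDS = ("name", "price", "url")  # hypothetical fields your selectors should fill


class LayoutChangedError(RuntimeError):
    """Raised when scraped records no longer match the expected page structure."""


def check_structure(
    records: list[dict],
    required: tuple = REQUIRED_FIELDS,
    max_missing_ratio: float = 0.1,
) -> None:
    """Raise if too many records lack required fields, a sign the markup changed."""
    if not records:
        raise LayoutChangedError("selectors matched no records")
    # Treat None or empty strings as missing; legitimate values like 0 still count
    broken = [r for r in records if any(r.get(f) in (None, "") for f in required)]
    if len(broken) / len(records) > max_missing_ratio:
        raise LayoutChangedError(
            f"{len(broken)} of {len(records)} records are missing required fields"
        )


if __name__ == "__main__":
    check_structure([{"name": "Widget", "price": 9.99, "url": "https://example.com/w"}])
    print("structure looks as expected")
```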

4. Data Privacy

Be mindful of data privacy and avoid scraping sensitive information, such as proprietary or personal data, without authorization. Respecting privacy laws keeps your scraping practices ethical and reduces the risk of legal repercussions or reputational harm.

5. Ethical Considerations

Respect the website's terms of use and consider the ethical implications of scraping, ensuring that your actions align with principles of integrity and respect for others' intellectual property.
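A site's robots.txt file is one machine-readable signal of which paths the owner does not want crawled. It does not replace the terms of use or legal review, but checking it is a cheap courtesy. Python's standard `urllib.robotparser` module can evaluate the rules; the robots.txt lines and user-agent name below are hypothetical.

```python
from urllib.robotparser import RobotFileParser


def allowed(robots_lines: list[str], user_agent: str, url: str) -> bool:
    """Return True if the given robots.txt rules permit `user_agent` to fetch `url`."""
    parser = RobotFileParser()
    parser.parse(robots_lines)
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    # Hypothetical rules; for a live site, call set_url(".../robots.txt") and read() instead
    rules = ["User-agent: *", "Disallow: /private/", "Crawl-delay: 5"]
    print(allowed(rules, "example-scraper", "https://example.com/products"))
    print(allowed(rules, "example-scraper", "https://example.com/private/data"))
```

`RobotFileParser` also exposes a `crawl_delay()` method (Python 3.6+), which can inform how long to wait between requests.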

How does Airbyte help you Leverage Web Scraping Data Further?

Web scraping tools are designed to gather large quantities of data in varying formats. However, once you have extracted the data, you need a way to analyze it holistically. An effective way to manage it is to load it into a cloud data warehouse or big data platform.

The quickest way to move your dataset into a data warehouse is through data integration and replication platforms like Airbyte. With this platform, you can extract data from multiple sources rather than just one. Airbyte offers a diverse array of 350+ pre-built connectors, so you can pick your preferred destination and set up a robust data pipeline in a few minutes.

Once the data from your web scraping tools is loaded into the data warehouse of your choice, you can perform further analysis. This lets you extract maximum value from your web scraping process and build data-driven strategies for your organization.

Final Takeaways

Web scraping is an effective way to gather relevant and trending information on customers, competitors, and reviews. It was once a manual, labor-intensive process, but automated web scraping tools now let you streamline and speed up complex data extraction.
