4 ways to optimize your storage

Before you even start writing your query, there are steps you can take when designing your Data Warehouse to ensure data is already stored in the most optimal way.

BigQuery does full table and column scans for any given query. In typical OLTP databases, this can be mitigated by indexing columns, which isn’t supported in BigQuery.

In a typical OLTP, you can limit the amount of data scanned by using the keyword LIMIT. Let’s see if that’s the case on BigQuery.

Notice that both these queries will scan 379.24GB when run.

Therefore, it’s very important that your query limits the amount of data being scanned in the first place. At the design stage, you can partition and cluster your tables.

Partition your tables

Partitioning splits your table into smaller logical sections based on a given field. More often than not, date and timestamp columns in the data are used for partitioning. The time of ingestion into BigQuery and integer columns can also be used.

BigQuery manages writing data into partitions by automatically writing new data into the specific partition based on data in the partition column.

When you run a query against a partitioned table, you pass a condition that filters on the partition field. BigQuery will then only scan data in the partition with that value, a process called pruning.

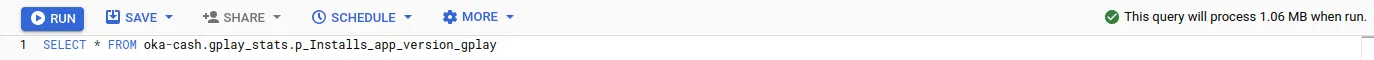

Here is an example of a table partitioned on the field _PARTITIONTIME:

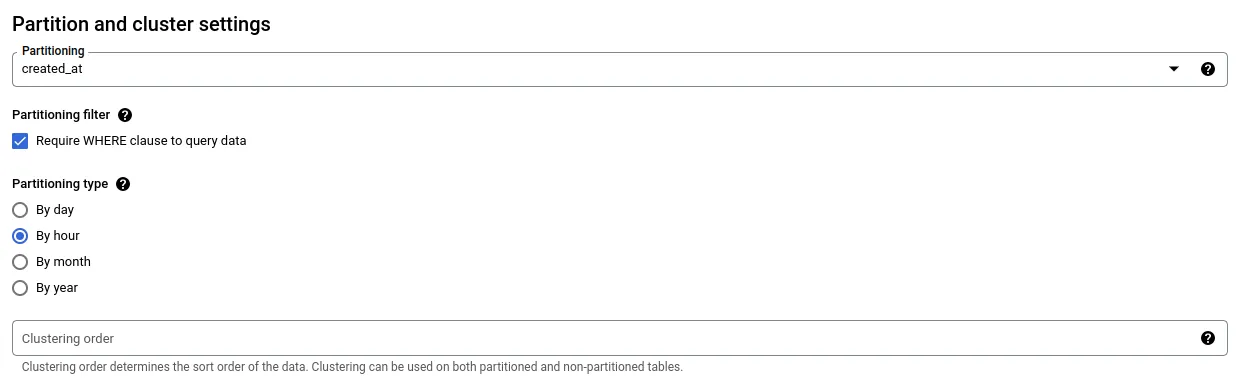

On the BigQuery console, in the table creation UI, you can specify how you want the table partitioned, and for extra safety, whether any queries on that table must have a WHERE clause.

You can tell a table is partitioned on the BigQuery console either by looking at the icon, a banner in the table information, or a section in the table details

Cluster your tables

Clustering orders the contents of your table or partition based on defined columns. The ordered contents are then stored in blocks of correlated values, with data in each block lying between a range of values.

Clustering is quite similar to indexing in OLTP databases. BigQuery keeps track of the start and end of each block similar to a key in an index. This is very useful, especially for columns that you will frequently filter on. When a query filters on a clustered column, BigQuery will only scan blocks matching the filter.

Clustering has a few of gotchas to note:

- Only Google Standard SQL can query clustered tables

- It’s useful for tables 1GB or more in size

- The cost estimator isn’t as accurate for clustered tables

- When filtering a table clustered on multiple columns you need to include at least the first cluster column in your filter

- When filtering a table clustered on multiple columns the filter order must match the cluster order

Pre-transform your data into materialized views

A view is not a table. It’s a query that’s run every time it’s referenced. Every time you run a query that references a view, the view reference is replaced by the SQL that forms the view.

If you have a view successful_transactions that returns a subset of transactions defined as <span class="text-style-code">SELECT * FROM transactions WHERE status = 'success'</span> then you write a query like <span class="text-style-code">SELECT * FROM successful_transactions sf LEFT JOIN users u ON sf.user_id = u.id</span> what actually runs is:

Above, you may have expected that the view would have a reduced amount of data, but what actually happens is that you scan the entire transactions table, filter it, then join data every time you run the query. Note that a query can reference a maximum of 16 views directly or indirectly.

Materialized views are a periodically updated cache of results of the view. These are queried similarly to a normal table which avoids the repeated complete scans and computation of the underlying tables. BigQuery fully manages these to stay up to date about the underlying tables.

You can take advantage of materialized views to store pre-transformed data to be read by other queries i.e. filtering, joining, sorting etc.

You can create materialized views as follows:

Denormalize your data

In a classic database schema, data is usually normalized with up to 6 normal forms existing. This is okay for OLTP use cases but leads to degraded performance for analytical queries, especially for tables 10GB and up. BigQuery doesn’t even have support for referential integrity via foreign keys!

Denormalization means that you only create a few wide tables with all the needed data, also known as OBT (One Big Table). BigQuery offers data types to make denormalization easier.

STRUCT/RECORD

A row of data (record) can be stored in a column of a table in its completeness and accessed very similarly to JSON data.

ARRAY

Multiple records can be stored in a single column and accessed using the common array indexing methods. To illustrate this, say we have users that can transact on our platform. Classically, we would have two tables: users and transactions with the transactions table having a foreign key referencing a primary key in the users table

If we want the total amount of transactions per user, we would most likely write a query like:

If we denormalise our data into one table with user information and a column with all transactions as an ARRAY of RECORDs, we can just do:

Conclusion

BigQuery is a petabyte-scale analytics DB utilizing parallel processing to achieve fast querying of massive datasets. To optimize your workloads on BigQuery, you can optimize your storage by:

- Partitioning your tables

- Clustering your tables

- Pre-aggregating your data into materialized views

- Denormalizing your data

You can also optimize your queries to run faster. We will cover strategies for query optimization in part 2 of this article.

Try the Agent Engine

We're building the future of agent data infrastructure. Be amongst the first to explore our new platform and get access to our latest features.

.avif)