Cloud Control Plane: Architecture Patterns for Hybrid Environments

✨ AI Generated Summary

Hybrid cloud architecture separates the control plane (orchestration, scheduling, monitoring) from the data plane (execution, data processing), enabling secure, compliant, and flexible data operations across on-premises, private, and public clouds. Key hybrid deployment patterns include cloud-controlled customer-hosted execution, regionally distributed execution planes, and tiered multi-cloud models to meet data sovereignty and compliance requirements.

- Airbyte Enterprise Flex supports hybrid orchestration with customer-controlled data planes, ensuring sensitive data stays within regulatory boundaries.

- Secure hybrid data planes rely on isolation, outbound-only connectivity, autoscaling, compliance-aware placement, and observable telemetry without exposing sensitive data.

- Unified architectures use a single codebase, portable connector catalog, and common control surface to maintain feature parity across cloud and on-premises deployments.

- Compliance demands (GDPR, HIPAA, SOC 2, DORA) are met by keeping execution local to data residency requirements while orchestrating centrally, with immutable audit logs stored in customer infrastructure.

Enterprises no longer run everything in a single data center. You process transactions on-premises, archive them in a private cloud, and train models in a public region, sometimes within hours of each other. This reality makes hybrid cloud architecture essential for modern data operations.

Each of those moves passes through the data plane, the execution layer that actually reads, writes, and transforms records. Because the data plane touches raw payloads, its design dictates encryption, latency, and the audit trails regulators demand. That makes it central to security and compliance in mixed environments where data sovereignty requirements vary by location.

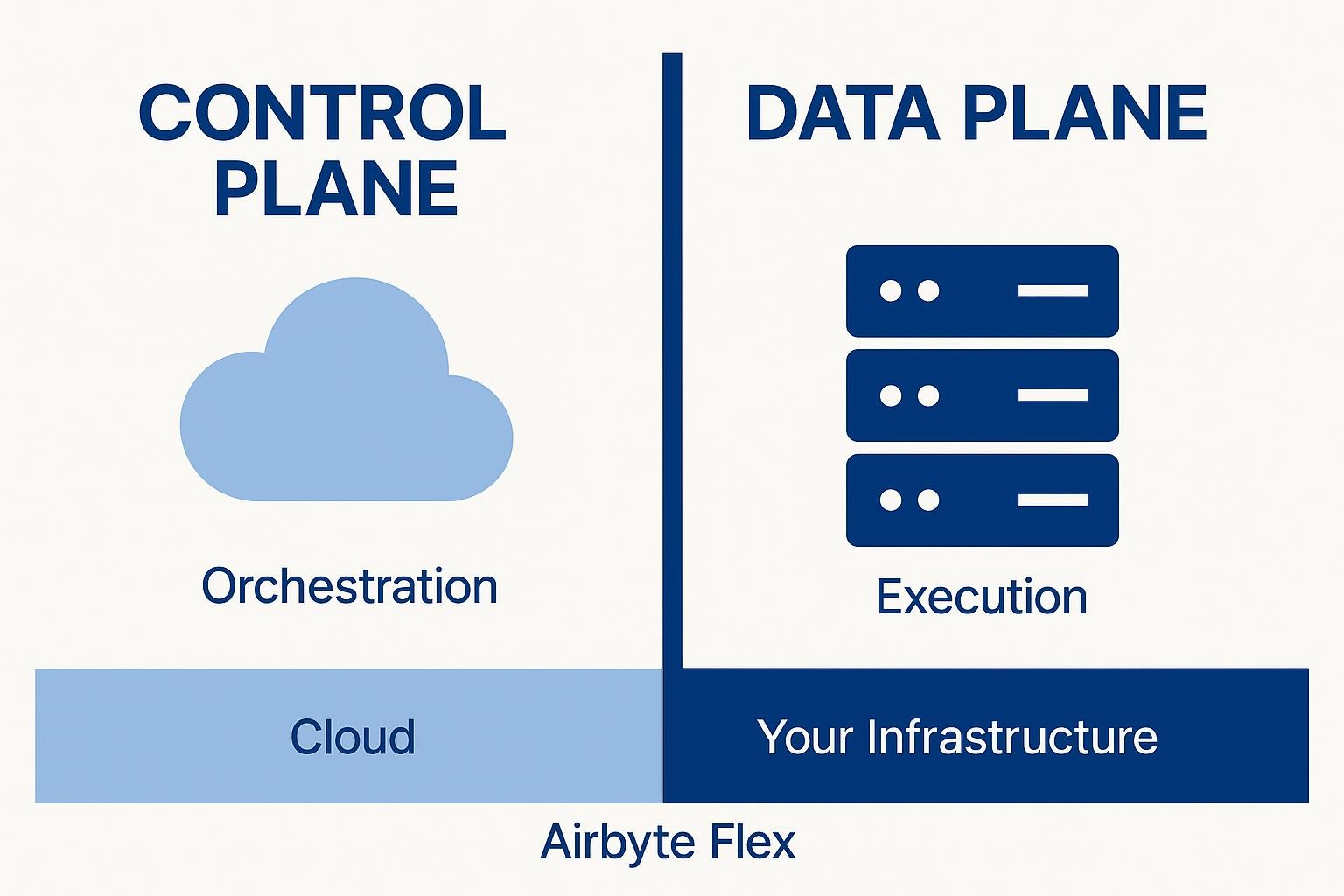

When you isolate the control and data planes, you gain operational flexibility. A cloud-hosted control plane can schedule jobs and provide monitoring, while data planes stay inside your VPC or on-premises infrastructure, never exposing sensitive data to vendors. Airbyte Enterprise Flex follows this model, providing hybrid orchestration with customer-controlled data planes.

What Is a Cloud Control Plane and How Does It Work?

Data teams spend too much time worrying about where their pipelines run instead of what they accomplish. The data plane solves this by handling the actual execution: running connectors that replicate records, applying transformations, and streaming results to targets like lakehouses, warehouses, or operational databases.

The control plane sits above it, managing orchestration by scheduling syncs, monitoring health, and storing configuration metadata. This separation lets you keep coordination logic in the cloud while running sensitive workloads anywhere you need them.

Two deployment models dominate the market. A managed data plane runs inside the vendor's cloud account, giving you elastic scale but routing data through third-party infrastructure. A customer-controlled data plane executes in your own VPC or data center, so nothing crosses your security perimeter.

True hybrid approaches support both simultaneously. The SaaS control plane coordinates while each workload runs in the region or network segment that satisfies compliance requirements.

You might schedule a nightly sync from Salesforce to Snowflake via the control plane while the data plane runs inside your EU-based Kubernetes cluster, meeting residency rules without giving up centralized management. This separation is increasingly common in regulated enterprises.

What Architecture Patterns Enable Hybrid Data Plane Deployment?

When you need to meet strict regulations without slowing down business operations, how you deploy the execution layer determines both control and compliance. Three patterns have emerged across enterprises that refuse to choose between security and agility.

1. Cloud-Controlled, Customer-Hosted Execution

A vendor-managed control plane handles scheduling, retries, and monitoring while every byte of data moves inside infrastructure you operate. Sensitive tables never cross your perimeter, satisfying laws that demand local processing while giving you unified visibility.

2. Regionally Distributed Execution Planes

Instead of one monolithic data plane, you run multiple isolated planes, one per jurisdiction. French records stay in Paris; Brazilian events stay in São Paulo. This design makes compliance audits straightforward because geographic residency becomes an architectural guarantee, not a policy promise.
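One way to turn residency into an architectural guarantee is to make routing fail closed. The sketch below assumes a hypothetical registry mapping jurisdiction codes to data plane names; if no plane exists inside the required jurisdiction, the job is rejected rather than quietly sent elsewhere.

```python
# Hypothetical registry: one isolated data plane per jurisdiction.
DATA_PLANES = {
    "FR": "dataplane-paris",
    "BR": "dataplane-sao-paulo",
    "US": "dataplane-virginia",
}


class ResidencyError(Exception):
    """Raised when no data plane exists inside the required jurisdiction."""


def route(dataset: str, jurisdiction: str) -> str:
    """Return the data plane that must process `dataset`.

    Fails closed: there is no fallback region, so a missing plane
    blocks the job instead of moving data across a border.
    """
    try:
        return DATA_PLANES[jurisdiction]
    except KeyError:
        raise ResidencyError(
            f"{dataset}: no data plane registered in {jurisdiction}"
        ) from None


print(route("fr_customers", "FR"))  # dataplane-paris
```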

3. Tiered Multi-Cloud Models

High-risk PII stays on-premises, moderately sensitive data lands in private cloud, and anonymized aggregates flow to public cloud for heavy analytics. This graduated approach balances cost and control without forcing everything into the most restrictive tier.
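The tiering decision can be expressed as a small placement function. This is a sketch under stated assumptions: the label sets are hypothetical stand-ins for classifications a data catalog would supply, and the rule simply picks the least restrictive tier a dataset's contents allow.

```python
from enum import Enum


class Tier(Enum):
    ON_PREM = "on-premises"
    PRIVATE_CLOUD = "private cloud"
    PUBLIC_CLOUD = "public cloud"


# Hypothetical column labels; a real deployment would pull these from a catalog.
PII_LABELS = {"ssn", "email", "date_of_birth"}
INTERNAL_LABELS = {"order_total", "account_tier"}


def assign_tier(column_labels: set[str], anonymized: bool) -> Tier:
    """Place a dataset in the least restrictive tier its contents allow."""
    if column_labels & PII_LABELS and not anonymized:
        return Tier.ON_PREM          # raw PII never leaves your premises
    if column_labels & INTERNAL_LABELS:
        return Tier.PRIVATE_CLOUD    # moderately sensitive business data
    return Tier.PUBLIC_CLOUD         # anonymized aggregates


print(assign_tier({"email", "order_total"}, anonymized=False).value)  # on-premises
```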

These patterns can be combined depending on your requirements. Airbyte Enterprise Flex supports a hybrid multi-cloud architecture that brings all three together: outbound-only networking eliminates inbound firewall rules, external secrets managers keep credentials out of containers, and per-region Kubernetes clusters provide hard isolation where regulators demand it.
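Keeping credentials out of the control plane usually means the stored connector configuration holds only a reference, which the data plane resolves at run time. The sketch below assumes a hypothetical `secret://` reference scheme and uses environment variables as a stand-in for a real secrets manager client; neither reflects a specific product's API.

```python
import os

SECRET_PREFIX = "secret://"  # hypothetical reference scheme


def env_lookup(ref: str) -> str:
    """Stand-in for a secrets-manager client: maps 'a/b' to env var A_B."""
    name = ref.replace("/", "_").upper()
    value = os.environ.get(name)
    if value is None:
        raise KeyError(f"secret not found locally: {ref}")
    return value


def resolve_config(config: dict, lookup=env_lookup) -> dict:
    """Return a copy of `config` with secret references replaced by values.

    Intended to run only inside the data plane, so the control plane
    stores the reference string and never sees the credential itself.
    """
    resolved = {}
    for key, value in config.items():
        if isinstance(value, str) and value.startswith(SECRET_PREFIX):
            resolved[key] = lookup(value[len(SECRET_PREFIX):])
        else:
            resolved[key] = value
    return resolved


os.environ["SALESFORCE_CLIENT_SECRET"] = "s3cr3t"  # populated by the local secret store
stored = {"client_id": "abc", "client_secret": "secret://salesforce/client_secret"}
print(resolve_config(stored)["client_secret"])  # s3cr3t
```

Passing a different `lookup` callable is where a real secrets manager client would plug in.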

How Do You Design a Secure and Scalable Hybrid Data Plane?

Separating the control plane from the execution layer only works when your infrastructure can handle real security threats and traffic spikes. Five principles give you a checklist for building pipelines that scale safely, whether you run Kubernetes clusters or managed services: isolation, outbound-only connectivity, autoscaling, compliance-aware placement, and observable telemetry that does not expose sensitive data.

When you apply these principles together, your execution infrastructure becomes both safer and cheaper to run. Each sync runs in an isolated Kubernetes namespace, connects outbound to the control plane, auto-scales pods based on queue depth, and tags every job with its required jurisdiction. You can satisfy auditors while scaling from one gigabyte to one terabyte per hour.
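Scaling on queue depth can be reduced to a simple ratio, similar in spirit to the one Kubernetes' Horizontal Pod Autoscaler applies to external metrics. This sketch assumes a hypothetical `jobs_per_worker` capacity figure and min/max bounds; a production setup would feed the result to an autoscaler rather than call it directly.

```python
import math


def desired_workers(queue_depth: int, jobs_per_worker: int,
                    min_workers: int = 1, max_workers: int = 20) -> int:
    """Worker pods needed to drain the queue, clamped to the allowed range.

    ceil(pending jobs / jobs one worker handles), bounded by min and max
    so an empty queue keeps a warm worker and a spike cannot exhaust the cluster.
    """
    if jobs_per_worker <= 0:
        raise ValueError("jobs_per_worker must be positive")
    needed = math.ceil(queue_depth / jobs_per_worker)
    return max(min_workers, min(max_workers, needed))


print(desired_workers(37, jobs_per_worker=4))  # 10
```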

How Can You Unify Cloud and On-Premises Data Planes Under One Architecture?

Most data teams run some pipelines in SaaS services and others on infrastructure they maintain. The challenge isn't moving workloads; it's maintaining identical behavior so pipelines don't break when switching environments. Unified cloud and on-premises execution requires three core components that work consistently across both environments.

1. Single Codebase Across All Environments

You need a single codebase that executes identically wherever it is deployed. When both managed and self-hosted runtimes share the same binaries, you avoid code forks that drift over time and create maintenance headaches.

2. Portable Connector Catalog

Your connector catalog must travel with the code. A unified catalog packages every connector and its tests alongside the core runtime, making the same integrations available whether you deploy in the cloud or on-premises. Airbyte Enterprise Flex uses this approach, providing 600+ connectors with consistent quality across all deployment models.
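A practical way to confirm the catalog really travels with the code is to diff the connector versions each deployment reports. The sketch assumes each environment can export a simple `{connector name: version}` map; the connector versions in the example are illustrative, not real release numbers.

```python
def catalog_drift(cloud: dict[str, str], on_prem: dict[str, str]) -> dict[str, tuple]:
    """Compare connector name -> version maps from two deployments.

    Returns every connector whose version differs or that exists on only
    one side (None marks the missing side). An empty result means parity.
    """
    drift = {}
    for name in sorted(cloud.keys() | on_prem.keys()):
        versions = (cloud.get(name), on_prem.get(name))
        if versions[0] != versions[1]:
            drift[name] = versions
    return drift


cloud = {"source-postgres": "1.4.0", "destination-snowflake": "2.1.0"}
on_prem = {"source-postgres": "1.4.0", "destination-snowflake": "2.0.3"}
print(catalog_drift(cloud, on_prem))  # {'destination-snowflake': ('2.1.0', '2.0.3')}
```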

3. Common Control Surface

The orchestration layer should expose scheduling and monitoring through consistent APIs, whether the underlying execution runs on your Kubernetes cluster or on a vendor-managed instance. Because only the control surface is shared, credentials stay local to each data plane while management stays central.
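In code, a common control surface amounts to the orchestrator depending on one interface rather than on a specific runtime. This sketch uses a `typing.Protocol`; `ManagedDataPlane`, `SelfHostedDataPlane`, and `run_sync` are hypothetical names, and the returned strings stand in for real job handles.

```python
from typing import Protocol


class DataPlane(Protocol):
    """The single interface the orchestrator depends on."""

    def run_sync(self, connection_id: str) -> str: ...


class ManagedDataPlane:
    """Hypothetical vendor-hosted runtime."""

    def run_sync(self, connection_id: str) -> str:
        return f"managed:{connection_id}"


class SelfHostedDataPlane:
    """Hypothetical runtime on a customer Kubernetes cluster."""

    def __init__(self, cluster: str) -> None:
        self.cluster = cluster

    def run_sync(self, connection_id: str) -> str:
        return f"{self.cluster}:{connection_id}"


def trigger(plane: DataPlane, connection_id: str) -> str:
    # Scheduling code calls the same method regardless of where execution happens.
    return plane.run_sync(connection_id)


print(trigger(SelfHostedDataPlane("eu-k8s"), "conn-1"))  # eu-k8s:conn-1
```

Moving a workload on-premises then changes which object is passed to `trigger`, not the scheduling code itself.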

A unified approach uses one open-source codebase, an identical connector catalog, and a hybrid orchestration layer that manages both cloud and customer-hosted execution through consistent YAML or Terraform configurations. You get feature parity without rewriting pipelines when compliance requirements push workloads back on-premises.

What Are the Compliance and Governance Considerations?

Compliance teams at healthcare companies spend weeks documenting data flows just to prove PII never leaves their data center. Financial services companies face similar pressure from cross-border residency rules that can trigger massive fines. GDPR, HIPAA, SOC 2 audits, and the EU's Digital Operational Resilience Act (DORA), which has applied to financial entities since January 2025, all push you to design the execution layer first, not last.

In a distributed model, the orchestration layer can run in the cloud while the execution layer stays wherever regulations dictate: on-premises, in a private region, or inside a sovereign cloud. That separation lets you satisfy geographic residency rules without giving up the elasticity of cloud services.

Sovereignty rules come down to physical location and jurisdiction. If a dataset must never cross a border, you can keep the execution layer inside that border and still orchestrate it remotely. Clear residency boundaries matter for audit readiness and liability protection.

Distributed execution planes give you room to choose the right control for each dataset:

- Keep regulated data on-premises so personally identifiable information never leaves your environment

- Send anonymized or aggregated records to public cloud analytics to unlock modern tooling without exposing raw PII

- Maintain immutable, customer-owned logs that document every read and write for simplified, tamper-evident audits
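Tamper evidence is often achieved by hash-chaining log entries so each one commits to its predecessor. The sketch below is a minimal illustration using SHA-256 from Python's standard library; the event fields are hypothetical, and it is not a description of how any particular product stores audit logs.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(prev_hash: str, event: dict) -> str:
    payload = json.dumps(event, sort_keys=True)  # stable serialization
    return hashlib.sha256((prev_hash + payload).encode()).hexdigest()


def append_entry(log: list[dict], event: dict) -> None:
    """Append an event whose hash covers the previous entry's hash."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    log.append({"event": event, "prev": prev_hash, "hash": _digest(prev_hash, event)})


def verify(log: list[dict]) -> bool:
    """Recompute the chain; an edited, reordered, or removed interior entry breaks it."""
    prev_hash = GENESIS
    for entry in log:
        if entry["prev"] != prev_hash or entry["hash"] != _digest(prev_hash, entry["event"]):
            return False
        prev_hash = entry["hash"]
    return True


log: list[dict] = []
append_entry(log, {"job": "sync-1", "action": "read", "table": "customers"})
append_entry(log, {"job": "sync-1", "action": "write", "table": "customers"})
print(verify(log))  # True
log[0]["event"]["table"] = "orders"
print(verify(log))  # False
```

A chain alone cannot detect entries cut off the end, so in practice the latest hash would also be recorded somewhere separate from the log.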

Because every workload follows the same orchestration APIs, you can apply one set of RBAC policies, encryption standards, and retention schedules everywhere. Hybrid architectures can extend that uniformity by allowing logs to be stored in your infrastructure, so auditors see a single, trustworthy trail regardless of where each execution instance runs.

How Do You Operate and Scale a Hybrid Cloud Efficiently?

A distributed execution infrastructure runs smoothly when the orchestration layer manages scheduling and configuration through stable APIs. This approach centralizes daily operations while leaving the execution layer free to focus on replication and transformation.

The separation keeps the attack surface small. Only the control plane needs inbound network access, yet you can still monitor every job in real time, restart failed tasks, and push connector updates or schema changes without maintenance windows.

Scalability comes from packaging each sync as an isolated container. Kubernetes becomes the execution engine:

- Launches fresh task pods for each workload

- Routes logs back to the orchestration layer for centralized monitoring

- Terminates pods when workloads finish to free resources
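The launch-and-terminate lifecycle above maps naturally onto a Kubernetes `batch/v1` Job per sync. The sketch builds such a manifest as a Python dict; the image, namespace, and the `jurisdiction` and `sync-id` label keys are hypothetical. `restartPolicy: Never` with `backoffLimit: 0` leaves retries to the orchestration layer, and `ttlSecondsAfterFinished` lets Kubernetes delete the finished Job and its pod. Log shipping back to the orchestration layer is not shown.

```python
import json


def sync_job_manifest(sync_id: str, image: str, namespace: str,
                      jurisdiction: str) -> dict:
    """Build a batch/v1 Job that runs one sync in a fresh pod."""
    return {
        "apiVersion": "batch/v1",
        "kind": "Job",
        "metadata": {
            "name": f"sync-{sync_id}",
            "namespace": namespace,                 # isolation per tenant or region
            "labels": {"jurisdiction": jurisdiction},  # hypothetical placement tag
        },
        "spec": {
            "backoffLimit": 0,                # the orchestrator decides on retries
            "ttlSecondsAfterFinished": 300,   # clean up the Job and pod afterwards
            "template": {
                "metadata": {"labels": {"sync-id": sync_id}},
                "spec": {
                    "restartPolicy": "Never",
                    "containers": [{"name": "connector", "image": image}],
                },
            },
        },
    }


manifest = sync_job_manifest("42", "example.com/source-postgres:1.0", "eu-syncs", "EU")
print(json.dumps(manifest, indent=2))  # could be piped to `kubectl apply -f -`
```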

Clusters can add or remove workers automatically under load without manual node tuning. Horizontal scaling paired with container isolation keeps throughput predictable without compromising compliance.

What's the Bottom Line for Hybrid Cloud Architecture?

Execution layer design determines whether your distributed infrastructure stays secure, performant, and audit-ready. Airbyte Enterprise Flex delivers hybrid control plane architecture with customer-controlled data planes, keeping sensitive data in your VPC while orchestration stays centralized. Talk to Sales to discuss your hybrid deployment and compliance requirements.

Frequently Asked Questions

What is the difference between a control plane and a data plane?

The control plane handles orchestration, scheduling, monitoring, and metadata management. The data plane executes the actual work by reading from sources, transforming records, and writing to destinations. Separating these layers lets you run sensitive data processing in your own infrastructure while using cloud-based orchestration.

Can I run multiple data planes in different regions?

Yes. Regional data planes enforce data sovereignty by keeping workloads within specific jurisdictions. You can deploy one data plane per region while using a single control plane to coordinate all of them, ensuring French data stays in Paris and Brazilian data stays in São Paulo.

How do hybrid architectures maintain feature parity across deployment models?

Hybrid architectures use a single codebase and unified connector catalog across all deployments. Whether you run in the cloud, customer VPC, or fully on-premises, the same integrations and functionality remain available. No features are locked to specific deployment tiers.

What compliance certifications should I look for in a hybrid data plane solution?

Look for a SOC 2 report, documented support for GDPR and HIPAA requirements, and alignment with newer frameworks like EU DORA. The hybrid architecture should let you meet data residency rules without giving up modern orchestration capabilities, and audit logs should be stored in your own infrastructure for compliance verification.
