What Is Data Validation Testing: Tools, Techniques, Examples

Organizations handling massive data volumes face escalating challenges in maintaining accuracy and reliability across their systems. Recent surveys indicate that data quality issues cost enterprises an average of $12.9 million annually, with validation failures contributing to 40% of strategic initiative delays. The complexity intensifies as businesses adopt AI-driven analytics, real-time processing, and multi-cloud architectures, where traditional validation approaches prove insufficient for modern data ecosystems.

Data validation testing is the critical defense against these problems. It has evolved beyond simple rule-checking to encompass AI-powered anomaly detection, streaming validation protocols, and predictive quality management, all aimed at ensuring that only accurate, complete, and reliable data flows through organizational systems to support informed decision-making and operational excellence.

This article explores the complete spectrum of data validation testing, from foundational techniques to cutting-edge methodologies including AI-driven frameworks and real-time validation architectures.

TL;DR: Data Validation Testing at a Glance

- Data validation testing verifies accuracy, completeness, and reliability of data across enterprise systems to prevent costly quality issues

- Modern approaches include AI-powered anomaly detection, real-time streaming validation, and predictive quality management beyond traditional rule-checking

- Data validation techniques range from manual inspection to automated scripts, statistical analysis, and cross-field validation

- Leading tools like Informatica and Talend offer comprehensive validation capabilities with cloud and on-premises deployment options, while Datameer specializes in cloud-based validation

- Best practices emphasize understanding your data ecosystem, implementing continuous monitoring, and building cross-functional validation teams

What Is Data Validation Testing?

Data validation testing is a pivotal aspect of software testing that focuses on verifying data accuracy, completeness, and reliability within a system. It involves validating data inputs, outputs, and storage mechanisms to meet predefined criteria and adhere to expected standards.

The primary goal of data validation testing is to identify and rectify any errors, inconsistencies, or anomalies in the data being processed by a software system. This validation process typically involves comparing the input data against specified rules, constraints, or patterns to determine its validity.

Data validation testing can encompass various techniques, including manual inspection, automated validation scripts, and integration with validation tools and frameworks. It is essential for ensuring data integrity, minimizing the risk of data corruption or loss, and maintaining the overall quality of software systems.

What Are the Key Data Validation Techniques?

Some standard data-validation techniques include:

Manual Inspection

Human review and verification to identify errors, inconsistencies, or anomalies. Recommended for small datasets or data that require subjective judgment.

Automated Validation

Scripts, algorithms, or software tools perform systematic checks on data inputs, outputs, and storage. Ideal for large datasets and repetitive tasks.
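As a minimal illustration of automated validation, the Python sketch below applies a small list of named rules to every record and reports each violation. The field names (`id`, `amount`, `currency`) and the rules themselves are hypothetical; real pipelines typically load rules from configuration or a validation framework.

```python
from typing import Any, Callable

# A rule pairs a readable name with a predicate over one record (hypothetical rules).
Rule = tuple[str, Callable[[dict[str, Any]], bool]]

RULES: list[Rule] = [
    ("id is present", lambda r: r.get("id") is not None),
    ("amount is a number", lambda r: isinstance(r.get("amount"), (int, float))),
    ("currency is a 3-letter code",
     lambda r: isinstance(r.get("currency"), str) and len(r["currency"]) == 3),
]

def validate(records: list[dict[str, Any]], rules: list[Rule]) -> list[tuple[int, str]]:
    """Return (record index, failed rule name) for every rule a record violates."""
    failures = []
    for i, record in enumerate(records):
        for name, check in rules:
            if not check(record):
                failures.append((i, name))
    return failures

records = [
    {"id": 1, "amount": 9.5, "currency": "USD"},
    {"id": None, "amount": "9.5", "currency": "US"},
]
print(validate(records, RULES))
```

Keeping rules as named predicates makes failure reports readable and lets new checks be added without touching the validation loop.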

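Range and Constraint Checking

Verifies that values fall within acceptable bounds or satisfy simple constraints, such as an age between 18 and 100, a non-negative quantity, or a status drawn from an allowed list.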

Data Integrity Constraints

Rules within a database schema—primary keys, foreign keys, unique constraints, check constraints—to maintain consistency and prevent invalid entries.
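The sketch below uses Python's built-in `sqlite3` module to show these four constraint types rejecting bad rows at write time. The `customers` and `orders` tables are hypothetical; note that SQLite enforces foreign keys only after `PRAGMA foreign_keys = ON`, and other databases differ in the details.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite leaves FK enforcement off by default
conn.executescript("""
CREATE TABLE customers (
    id    INTEGER PRIMARY KEY,
    email TEXT NOT NULL UNIQUE
);
CREATE TABLE orders (
    id          INTEGER PRIMARY KEY,
    customer_id INTEGER NOT NULL REFERENCES customers(id),
    quantity    INTEGER NOT NULL CHECK (quantity > 0)
);
""")
conn.execute("INSERT INTO customers (id, email) VALUES (1, 'a@example.com')")

def try_insert(sql: str) -> str:
    """Run an INSERT and report whether the schema accepted or rejected it."""
    try:
        conn.execute(sql)
        return "accepted"
    except sqlite3.IntegrityError as exc:
        return f"rejected: {exc}"

print(try_insert("INSERT INTO orders VALUES (1, 1, 5)"))    # valid row
print(try_insert("INSERT INTO orders VALUES (2, 99, 5)"))   # foreign key: no customer 99
print(try_insert("INSERT INTO orders VALUES (3, 1, 0)"))    # check constraint: quantity > 0
print(try_insert("INSERT INTO customers VALUES (2, 'a@example.com')"))  # unique email
```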

Cross-Field Validation

Validates relationships between multiple fields (e.g., start-date < end-date, or column totals meeting a predefined value).
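A minimal sketch of cross-field rules, assuming hypothetical `start_date`, `end_date`, `line_items`, and `total` fields on each row. Each rule compares fields against each other rather than against a fixed constant.

```python
from datetime import date

def cross_field_errors(row: dict) -> list[str]:
    """Check rules that depend on more than one field of the same row."""
    errors = []
    # Rule 1: the period must start before it ends.
    if row["start_date"] >= row["end_date"]:
        errors.append("start_date must be earlier than end_date")
    # Rule 2: line-item amounts must add up to the stated total (small rounding tolerance).
    if abs(sum(row["line_items"]) - row["total"]) > 0.005:
        errors.append("line_items do not sum to total")
    return errors

row = {"start_date": date(2024, 2, 1), "end_date": date(2024, 1, 5),
       "line_items": [10.0, 5.5], "total": 20.0}
print(cross_field_errors(row))
```

The tolerance on the total check avoids false failures from floating-point rounding; monetary values are often better stored as `Decimal` or integer cents.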

Data Profiling

Analyzes structure, quality, and content to identify patterns and anomalies, informing the design of validation rules.
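A very small profiling pass can be written with the standard library alone. This sketch assumes rows arrive as dictionaries and that each column holds values of a single comparable type; dedicated profiling tools compute far richer statistics.

```python
from collections import Counter

def profile(rows: list[dict]) -> dict[str, dict]:
    """Summarize each column: null count, distinct values, min/max, most common value."""
    columns = {key for row in rows for key in row}
    report = {}
    for col in sorted(columns):
        values = [row.get(col) for row in rows]
        present = [v for v in values if v is not None]
        report[col] = {
            "nulls": len(values) - len(present),
            "distinct": len(set(present)),
            "min": min(present) if present else None,
            "max": max(present) if present else None,
            "most_common": Counter(present).most_common(1)[0][0] if present else None,
        }
    return report

rows = [{"country": "US", "age": 34}, {"country": "US", "age": None}, {"country": "DE", "age": 210}]
print(profile(rows))
```

An unexpectedly high maximum, such as an age of 210, is exactly the kind of finding that suggests a new range rule.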

Statistical Analysis

Techniques such as regression analysis, hypothesis testing, and outlier detection to assess distribution, variability, and relationships within datasets.
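Outlier detection is the simplest of these techniques to sketch briefly. The example uses the interquartile-range rule (Tukey's fences) via Python's `statistics.quantiles`; the multiplier `k = 1.5` is the conventional default, not a universal threshold. Regression and hypothesis testing are not shown.

```python
import statistics

def iqr_outliers(values: list[float], k: float = 1.5) -> list[float]:
    """Flag values outside [Q1 - k*IQR, Q3 + k*IQR] (Tukey's fences)."""
    q1, _, q3 = statistics.quantiles(values, n=4)  # three quartile cut points
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if v < low or v > high]

print(iqr_outliers([10, 12, 11, 13, 12, 11, 95]))
```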

How Do AI-Powered Data Validation Frameworks Transform Quality Management?

Organizations increasingly apply artificial intelligence to data quality management. AI-powered validation frameworks shift the emphasis from reactive error detection to proactive quality assurance, helping systems predict, prevent, and in some cases automatically resolve data quality issues before they affect business operations.

Autonomous Data Quality Management

AI systems can now identify data anomalies, enforce validation rules, and cleanse datasets with minimal manual intervention. Machine learning algorithms detect inconsistencies such as mismatched date formats and standardize them in real time, while pattern recognition merges duplicate records with variant spellings to keep databases consistent. These systems can reduce manual validation effort by over 60% while improving accuracy through continuous learning from historical patterns and user feedback.
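Production systems may rely on trained models, but the idea of spotting duplicate records with variant spellings can be sketched with a simple string-similarity heuristic from Python's `difflib`. This is a stand-in, not a machine-learning model, and the `0.85` threshold is an arbitrary assumption that would need tuning on real data.

```python
from difflib import SequenceMatcher
from itertools import combinations

def likely_duplicates(names: list[str], threshold: float = 0.85) -> list[tuple[str, str]]:
    """Return pairs of names whose normalized similarity ratio meets the threshold."""
    def normalize(s: str) -> str:
        # Lowercase, drop periods, and collapse whitespace before comparing.
        return " ".join(s.lower().replace(".", "").split())

    pairs = []
    for a, b in combinations(names, 2):
        if SequenceMatcher(None, normalize(a), normalize(b)).ratio() >= threshold:
            pairs.append((a, b))
    return pairs

print(likely_duplicates(["Jon Smith", "John Smith", "Mary Jones"]))
```

Flagged pairs are usually better routed to review or to a merge step with explicit survivorship rules than merged blindly.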

Predictive Quality Assurance

Predictive analytics transforms validation from corrective to anticipatory by analyzing historical data patterns to forecast potential integrity breaches before they occur. Machine learning models trained on past failures can predict quality issues with 85% accuracy, enabling preemptive corrections before data pipelines execute. Retail and healthcare sectors deploy these systems to anticipate inventory discrepancies or patient record inconsistencies, reducing downstream errors by 45-70% through early intervention and automated remediation workflows.

Generative AI for Test Data Synthesis

Generative adversarial networks and large language models create high-fidelity synthetic datasets that mirror production data distributions without exposing sensitive information. These systems generate tailored test scenarios based on natural language descriptions, allowing organizations to validate edge cases and stress-test systems using realistic data that maintains statistical properties while ensuring privacy compliance. Financial institutions use synthetic transaction records to validate fraud detection systems, broadening test coverage while limiting regulatory exposure.

Natural Language Processing for Unstructured Data

NLP-driven validation engines process textual data like emails, contracts, and social media content to extract semantic meaning and identify inconsistencies. Combined with multimodal AI capabilities, these systems validate relationships between different data types, ensuring product descriptions match visual attributes in e-commerce catalogs or verifying timestamp correspondence in meeting recordings. This approach addresses the growing challenge of unstructured data validation in modern data ecosystems.

What Are the Most Effective Data Validation Tools?

Data validation tools are applications or frameworks that automate verification of data against predefined criteria, ranging from traditional enterprise platforms to modern open-source solutions.

1. Datameer

Native Snowflake integration and automatic ML encoding accelerate data preparation and modeling.

2. Informatica

Provides deduplication, standardization, enrichment, and validation across cloud and on-prem environments, with parallel-processing performance gains.

3. Talend

Offers end-to-end data integration with robust transformation, security, and compliance features.

4. Alteryx

Features data-quality recommendations, customizable processing, profiling, and AI-powered validation.

5. Data Ladder

A no-code, interactive interface for profiling, cleansing, and deduplication across varied data sources.

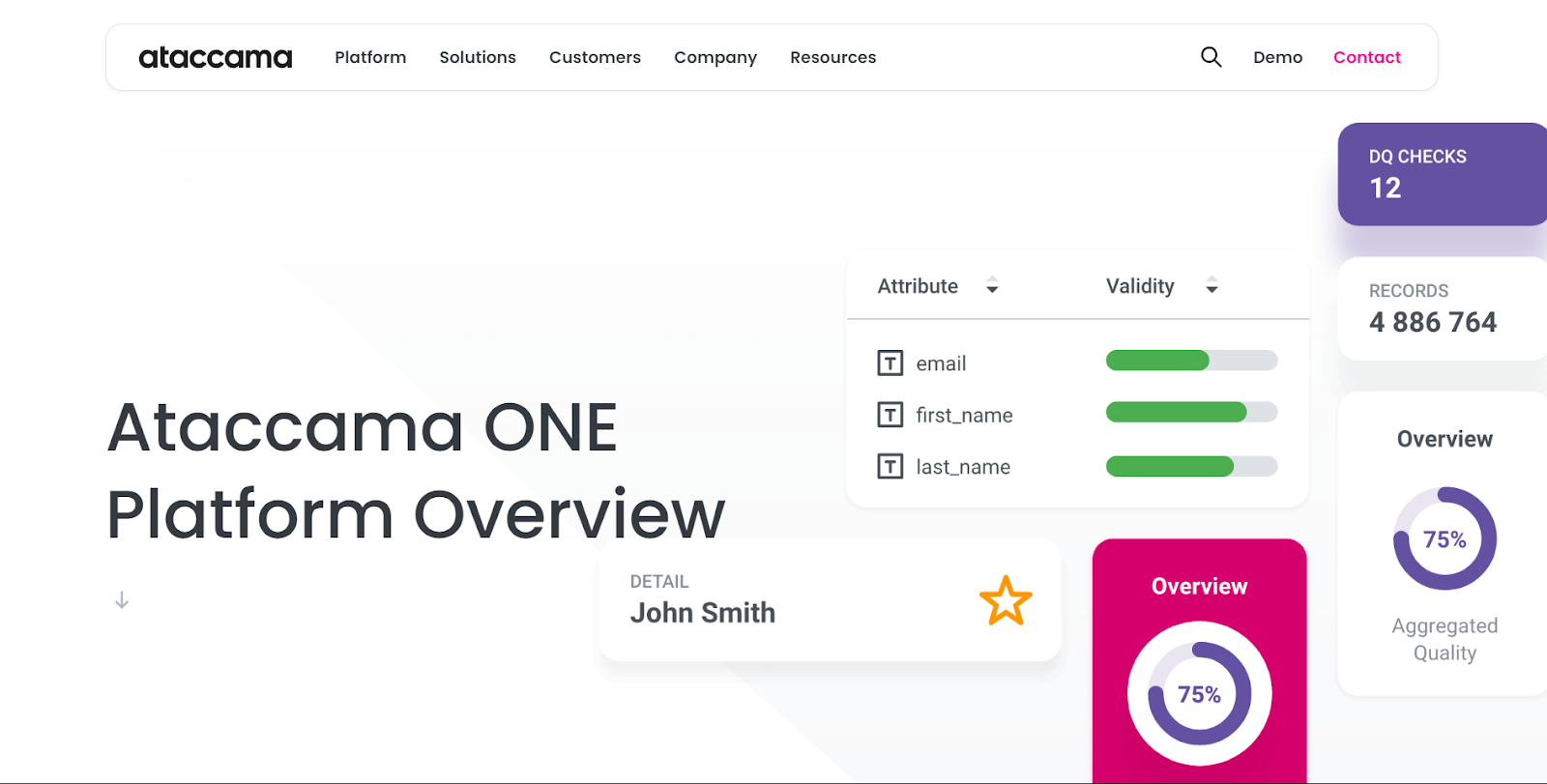

6. Ataccama One

Combines profiling, cleansing, enrichment, and cataloging with integrated AI/ML for anomaly detection and rule automation.

How Does Real-Time and Streaming Data Validation Address Modern Requirements?

Real-time and streaming data validation represents a paradigm shift from traditional batch processing approaches, addressing the critical need for immediate data quality assurance in modern, high-velocity data environments. As organizations increasingly rely on real-time analytics and streaming architectures, validation systems must operate with minimal latency while maintaining comprehensive quality checks.

Streaming Validation Architectures

Modern streaming validation layers embed directly into data ingestion pipelines, enforcing rules concurrently with data flow. Synchronous validation rejects non-compliant records instantaneously, preventing corrupted data from entering downstream systems, while asynchronous modes quarantine discrepancies for later analysis without interrupting primary data streams. Microbatching techniques enable stateless validation of sequential data chunks, balancing latency requirements with completeness checks by processing small groups of records in rapid succession.
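The sketch below illustrates the asynchronous, quarantine-style mode combined with micro-batching: records are grouped into small batches, valid ones continue downstream, and invalid ones are set aside without stopping the stream. The `is_valid` predicate, the batch size, and the in-memory quarantine list are assumptions; a real deployment would write quarantined records to a durable store such as a dead-letter queue.

```python
from itertools import islice
from typing import Callable, Iterable, Iterator

def micro_batches(stream: Iterable[dict], size: int) -> Iterator[list[dict]]:
    """Group a (possibly unbounded) stream into small fixed-size batches."""
    it = iter(stream)
    while batch := list(islice(it, size)):
        yield batch

def validate_stream(stream: Iterable[dict], is_valid: Callable[[dict], bool],
                    quarantine: list[dict], size: int = 100) -> Iterator[dict]:
    """Pass valid records downstream; divert invalid ones to the quarantine list
    so the main flow is never blocked."""
    for batch in micro_batches(stream, size):
        for record in batch:
            if is_valid(record):
                yield record
            else:
                quarantine.append(record)

quarantined: list[dict] = []
events = [{"id": i, "temp": t} for i, t in enumerate([21.5, None, 22.0, -999, 23.1])]
in_range = lambda r: r["temp"] is not None and -50 <= r["temp"] <= 60
for event in validate_stream(events, in_range, quarantined, size=2):
    print("downstream:", event)
print("quarantined:", quarantined)
```

A synchronous variant would instead reject the record back to the producer, for example by raising an error, so bad data never leaves the ingestion step.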

Data Observability and Monitoring Platforms

Contemporary data observability tools provide validation oversight across entire data ecosystems, tracking quality metrics in real time and sending immediate alerts when anomalies occur. These platforms correlate system performance with business outcomes, offer automated root-cause analysis for failed validations, and provide integrated dashboard views of data health across multiple sources. Organizations achieve end-to-end pipeline visibility through tools that automatically detect changes in data freshness, volume, distribution patterns, and schema evolution.
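Two of the most common observability checks, freshness and volume, can be sketched in a few lines. The two-hour staleness limit and the 50% volume tolerance are hypothetical defaults, and the baseline here is simply the mean of recent row counts; observability platforms typically learn such thresholds from history.

```python
from datetime import datetime, timedelta, timezone
from statistics import mean
from typing import Optional

def health_alerts(last_loaded_at: datetime, row_count: int, recent_counts: list[int],
                  max_staleness: timedelta = timedelta(hours=2), tolerance: float = 0.5,
                  now: Optional[datetime] = None) -> list[str]:
    """Return alert messages for stale data or unusual load volume."""
    now = now or datetime.now(timezone.utc)
    alerts = []
    # Freshness: the table should have been loaded recently.
    if now - last_loaded_at > max_staleness:
        alerts.append(f"stale: last load {now - last_loaded_at} ago")
    # Volume: the latest row count should be within +/- tolerance of the recent average.
    baseline = mean(recent_counts)
    if abs(row_count - baseline) > tolerance * baseline:
        alerts.append(f"volume: {row_count} rows vs. baseline {baseline:.0f}")
    return alerts

now = datetime(2024, 5, 1, 12, tzinfo=timezone.utc)
print(health_alerts(datetime(2024, 5, 1, 6, tzinfo=timezone.utc), 200, [950, 1000, 1050], now=now))
```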

Statistical Drift Detection and Monitoring

Advanced statistical techniques continuously monitor data distributions for drift patterns that indicate quality degradation over time. Kolmogorov-Smirnov tests detect changes in numerical feature distributions, while chi-squared tests measure drift in categorical data, with automated alerts triggering when a test's p-value falls below a predefined significance threshold. These methods enable proactive quality management by identifying gradual changes that traditional rule-based validation might miss, which is particularly important for machine learning applications where data drift can significantly degrade model performance.
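This sketch implements the two-sample Kolmogorov-Smirnov statistic directly in Python and compares it with the standard large-sample critical value at a chosen significance level `alpha`. In practice, `scipy.stats.ks_2samp` returns a p-value directly, and `scipy.stats.chi2_contingency` covers the categorical case, which is not shown here.

```python
import math
from bisect import bisect_right

def ks_statistic(reference: list[float], current: list[float]) -> float:
    """Two-sample Kolmogorov-Smirnov statistic D: the largest vertical gap
    between the empirical CDFs of the two samples."""
    a, b = sorted(reference), sorted(current)
    d = 0.0
    # Both empirical CDFs are step functions, so the largest gap occurs at an observed value.
    for x in a + b:
        gap = abs(bisect_right(a, x) / len(a) - bisect_right(b, x) / len(b))
        d = max(d, gap)
    return d

def has_drifted(reference: list[float], current: list[float], alpha: float = 0.05) -> bool:
    """Flag drift when D exceeds the asymptotic critical value
    c(alpha) * sqrt((n + m) / (n * m)), where c(alpha) = sqrt(-ln(alpha / 2) / 2)."""
    n, m = len(reference), len(current)
    c_alpha = math.sqrt(-math.log(alpha / 2) / 2)
    return ks_statistic(reference, current) > c_alpha * math.sqrt((n + m) / (n * m))

reference = [float(i) for i in range(100)]
shifted = [x + 50 for x in reference]
print(ks_statistic(reference, shifted), has_drifted(reference, shifted))
```

With `alpha = 0.05`, `c(alpha)` is about 1.358, the familiar tabulated value for this test.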

Schema Evolution and Compatibility Management

Dynamic schema evolution tracking systems automatically test backward and forward compatibility when data structures change, preventing validation failures caused by schema modifications. Version-aware validation maintains compatibility layers that handle multiple schema versions simultaneously, enabling gradual transitions without service interruption. These systems integrate with centralized schema registries to enforce compatibility rules during updates and provide automated rollback mechanisms when changes cause data corruption or validation errors.
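The sketch below checks one direction of compatibility, backward compatibility (readers on the new schema can read data written with the old one), using a simplified rule set in the spirit of Avro-style schema resolution: fields added in the new schema need defaults, and shared fields must keep their type. The dictionary schema format is a hypothetical simplification; schema registries apply richer rules, such as allowed type promotions.

```python
# Hypothetical schema format: field name -> {"type": ..., optional "default": ...}
Schema = dict[str, dict]

def backward_compatible(old: Schema, new: Schema) -> list[str]:
    """Return problems that would stop readers on `new` from reading data written with `old`."""
    problems = []
    for name, spec in new.items():
        if name not in old:
            # Old records lack this field, so the reader needs a default to fill it.
            if "default" not in spec:
                problems.append(f"added field '{name}' has no default")
        elif old[name]["type"] != spec["type"]:
            problems.append(f"field '{name}' changed type {old[name]['type']} -> {spec['type']}")
    # Fields removed in `new` are simply ignored when reading old data.
    return problems

v1 = {"id": {"type": "long"}, "email": {"type": "string"}}
v2 = {**v1, "plan": {"type": "string", "default": "free"}}
print(backward_compatible(v1, v2))
```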

What Are Common Data Validation Testing Examples?

Missing-Value Validation

Applied during ETL transformation to flag or fill rows with null values.
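A minimal sketch of both strategies, assuming rows are dictionaries where `None` marks a missing value: rows missing a required field are flagged for review, while gaps in optional fields are filled from a hypothetical defaults mapping.

```python
def handle_missing(rows: list[dict], required: list[str],
                   defaults: dict) -> tuple[list[dict], list[dict]]:
    """Flag rows missing any required field; fill optional gaps from defaults."""
    clean, flagged = [], []
    for row in rows:
        if any(row.get(col) is None for col in required):
            flagged.append(row)
            continue
        # Copy the row, replacing only the values that are missing.
        filled = {k: v for k, v in defaults.items() if row.get(k) is None}
        clean.append({**row, **filled})
    return clean, flagged

rows = [{"id": 1, "country": None}, {"id": None, "country": "FR"}, {"id": 3, "country": "DE"}]
clean, flagged = handle_missing(rows, required=["id"], defaults={"country": "unknown"})
print(clean, flagged)
```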

Email Address Validation

Ensures addresses follow a valid format (e.g., "user@example.com") before storage or communication. Format checks are often complemented by verification services such as Clearout or DeBounce, which help confirm that addresses are legitimate, reduce bounce rates, and improve deliverability.
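Before calling an external verification service, a cheap local format check catches obvious errors. The pattern below is a deliberately simple assumption, not the full RFC 5322 grammar, and it cannot confirm that a mailbox exists.

```python
import re

# Deliberately simple: text@text.text with exactly one "@" and no whitespace.
EMAIL_PATTERN = re.compile(r"[^@\s]+@[^@\s]+\.[^@\s]+")

def has_valid_email_format(address: str) -> bool:
    """Format check only: it says nothing about whether the mailbox exists."""
    return EMAIL_PATTERN.fullmatch(address.strip()) is not None

for candidate in ["user@example.com", "user@example", "a@b@c.com"]:
    print(candidate, has_valid_email_format(candidate))
```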

Numeric Range Validation

Verifies that numeric inputs fall within allowed bounds (e.g., age between 18 and 100).
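A minimal range check, assuming records are dictionaries and using the 18-to-100 age bounds from the example above. Missing and non-numeric values are reported too, and booleans are excluded because Python treats `True` and `False` as integers.

```python
def out_of_range(rows: list[dict], field: str, low: float, high: float) -> list[dict]:
    """Return rows whose field is missing, non-numeric, or outside [low, high]."""
    bad = []
    for row in rows:
        value = row.get(field)
        is_number = isinstance(value, (int, float)) and not isinstance(value, bool)
        # The comparison only runs for real numbers thanks to short-circuiting.
        if not is_number or not (low <= value <= high):
            bad.append(row)
    return bad

rows = [{"age": 17}, {"age": 18}, {"age": 100}, {"age": 101}, {"age": "42"}, {}]
print(out_of_range(rows, "age", 18, 100))
```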

Date-Format Validation

Checks compliance with required formats (MM/DD/YYYY, YYYY-MM-DD) for consistency and downstream processing.
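This sketch accepts the two formats named above and normalizes both to ISO 8601 (`YYYY-MM-DD`) using `datetime.strptime`, which also rejects impossible dates such as February 29 in a non-leap year. The accepted-format list is an assumption to adapt per source.

```python
from datetime import datetime
from typing import Optional

ACCEPTED_FORMATS = ("%m/%d/%Y", "%Y-%m-%d")  # MM/DD/YYYY and YYYY-MM-DD

def to_iso_date(text: str) -> Optional[str]:
    """Parse a date in any accepted format and return it as YYYY-MM-DD, or None if invalid."""
    for fmt in ACCEPTED_FORMATS:
        try:
            return datetime.strptime(text, fmt).date().isoformat()
        except ValueError:
            continue  # try the next accepted format
    return None

for value in ["12/31/2024", "2024-02-29", "2023-02-29", "31/12/2024"]:
    print(value, to_iso_date(value))
```

A value such as `03/04/2024` is ambiguous between month-first and day-first conventions, so it is safer to tie each source to one expected format than to try many formats on every value.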

Centralizing data in a modern stack simplifies these checks. Solutions like Airbyte help consolidate heterogeneous sources so that validation is applied uniformly.

What Are the Best Practices for Data Validation Testing?

1. Understand Your Data

Map your data ecosystem, formats, and quality expectations before designing tests.

2. Perform Data Profiling and Sampling

Use profiling tools to discover anomalies; sample subsets for quick feedback.

3. Implement Data Validation Testing

Automate type, format, and integrity checks; remove or correct anomalies; and validate domain-specific metrics, such as PPC statistics for marketing data.

4. Continuous Monitoring

Adopt real-time validation and schedule regular audits to catch recurring issues early.

5. Build a Cross-Functional Team

Engage analysts, engineers, and IT specialists to own and refine validation workflows.

How Can Airbyte Simplify Your Data Integration and Validation Needs?

Airbyte addresses data validation challenges with an open-source data integration platform that embeds quality assurance throughout the data movement process, reducing the traditional trade-off between validation comprehensiveness and operational efficiency.

Comprehensive Connector Validation Framework

Airbyte's library of 600+ pre-built connectors is backed by Connector Acceptance Tests that check each connector against quality specifications before deployment. The Connector Builder's AI Assistant helps validate API configurations and data structures, which can cut connector development time from weeks to minutes while maintaining enterprise-grade reliability. The Connector Development Kit lets organizations build validated custom integrations for specialized data sources without compromising quality standards.

Advanced Data Movement with Embedded Validation

The platform's Change Data Capture capabilities support real-time validation during incremental data synchronization, so that only verified changes propagate through data pipelines. Capacity-based pricing removes concerns about validation overhead by charging for pipeline capacity rather than data volume, enabling comprehensive quality checks without cost penalties. Direct loading to platforms like BigQuery includes automatic schema validation and type checking, preventing format inconsistencies that traditionally cause downstream processing failures.

Flexible Deployment for Data Sovereignty

Airbyte's deployment flexibility supports validation requirements across cloud, hybrid, and on-premises environments while maintaining consistent quality standards. Self-managed enterprise editions provide granular control over sensitive data validation through features like role-based access control, data masking, and comprehensive audit logging. This approach lets organizations implement rigorous validation protocols while meeting compliance requirements such as GDPR, HIPAA, and SOC 2.

Programmatic Validation with PyAirbyte

The PyAirbyte SDK enables developers to build sophisticated validation workflows programmatically, integrating data quality checks directly into Python-based data science and machine learning pipelines. This capability supports advanced validation scenarios including metadata preservation for AI workloads, cross-source data consistency checks, and automated data profiling for anomaly detection.

Enterprise-Grade Monitoring and Observability

Airbyte's integration with modern observability platforms provides comprehensive validation monitoring across entire data ecosystems, with real-time alerts for quality threshold breaches and automated root-cause analysis for validation failures. The platform's support for orchestration tools like Dagster and Airflow enables sophisticated validation workflows that combine data movement with quality assurance in unified pipeline architectures.

Conclusion

Effective data validation is crucial for ensuring the accuracy, completeness, and reliability of your business data. Modern validation approaches extend far beyond traditional rule-checking to encompass AI-powered anomaly detection, real-time streaming validation, and predictive quality management systems that prevent issues before they impact operations.

By implementing robust validation processes that combine automated techniques with intelligent monitoring, organizations can mitigate the risk of errors, inconsistencies, and data-quality issues while supporting the demanding requirements of AI-driven analytics and real-time processing. Focusing on validating your data inputs, outputs, and storage mechanisms through comprehensive frameworks will help you maintain data integrity and trustworthiness, facilitating informed decision-making and enhancing operational efficiency in increasingly complex data environments.

FAQs About Data Validation Testing

What is the main difference between data validation and data verification?

Data validation ensures that data is accurate, consistent, and compliant with rules before being processed or stored. Data verification, on the other hand, checks that data was transferred or entered correctly without corruption or loss. Validation is about quality, while verification is about correctness of transfer.
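The distinction is easy to see in code. Verification can be as simple as comparing SHA-256 checksums of the source and destination copies with Python's `hashlib`; a faithfully copied file still needs validation, because a negative quantity copied byte-for-byte is still a negative quantity.

```python
import hashlib

def sha256_of(data: bytes) -> str:
    """Hex digest used as a fingerprint of the exact bytes."""
    return hashlib.sha256(data).hexdigest()

def verify_transfer(source_bytes: bytes, destination_bytes: bytes) -> bool:
    """Verification: is the destination copy bit-for-bit identical to the source?"""
    return sha256_of(source_bytes) == sha256_of(destination_bytes)

source = b"id,qty\n1,-5\n"
print(verify_transfer(source, source))              # identical copy passes verification
print(verify_transfer(source, b"id,qty\n1,-6\n"))   # altered copy fails verification
```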

How does real-time validation differ from batch validation?

Batch validation checks data after it has been collected, usually at scheduled intervals. Real-time validation occurs during data ingestion or processing, identifying and addressing errors instantly. Real-time approaches are critical for streaming analytics, fraud detection, and any use case where data accuracy directly impacts decisions.

Can AI fully replace manual data validation?

AI significantly reduces manual effort by detecting anomalies, predicting errors, and automating corrections. However, human oversight is still needed for edge cases, subjective judgments, and governance. The best results come from combining AI-driven automation with human domain expertise.

What industries benefit most from advanced data validation?

Any industry dependent on accurate, high-volume data benefits, including finance (fraud detection), healthcare (patient record accuracy), retail (inventory and sales data consistency), and manufacturing (IoT sensor data integrity). AI-powered validation frameworks are especially valuable where errors carry high compliance or financial risks.