How Do I Manage Dependencies and Retries in Data Pipelines?

✨ AI Generated Summary

Data pipeline failures mostly stem from dependency issues like task ordering, schema changes, and external system delays rather than SQL bugs. Effective management requires:

- Explicit dependency definitions using DAGs and readiness signals to ensure correct task sequencing.

- Robust retry strategies employing exponential backoff with jitter, error differentiation, and idempotency to prevent retry storms and data corruption.

- Modular pipeline design and monitoring tools to isolate failures, reduce downtime, and improve debugging speed.

Airbyte supports these best practices by integrating with orchestrators, enforcing dependency order, providing automatic intelligent retries, and isolating pipeline stages to enhance reliability and reduce firefighting.

Even with flawless SQL, pipelines break when downstream tasks start before upstream data arrives or when blind retries hammer external APIs. Dependency failures, including missing tables, permission changes, schema drifts, and poorly configured retry logic, cause more production outages than actual code bugs.

You see the impact immediately: dashboards refresh with partial data, SLA clocks keep ticking, and engineers spend nights replaying jobs instead of shipping features. One misordered task ripples through dozens of jobs, while retry storms triggered by rate-limit errors lock you out of critical SaaS endpoints. Managing dependencies and smart retries is what separates reliable insights from constant firefighting.

What Are the Key Dependency Challenges in Data Pipelines?

Broken pipelines rarely fail because of bad SQL; they fail because of hidden dependencies that fired at the wrong moment. A dependency is the contract that one task must finish successfully before another begins, yet that simple rule is fragile in practice.

1. Upstream and Downstream Ordering Issues

If a transformation kicks off before yesterday's ingestion job finishes, you end up reading partial tables and pushing stale metrics. This shows up most often in nightly batch workflows where scheduler drift lets tasks start "on time" even though the data isn't ready.

2. External System Availability Problems

Your pipeline might wait on a SaaS API that suddenly rate-limits requests or a partner SFTP server that lags by an hour. Because those services live outside your control, delays cascade through every downstream step, creating unpredictable latencies the business sees as missed SLAs.

3. Schema Change Disruptions

A source team renames a column, and the transformation layer crashes, or silently drops the column, because the code still expects the old name. Schema changes represent one of the leading triggers of unexpected downtime.

4. Additional Common Failure Modes

Beyond these three headline issues, day-to-day operations expose additional failure modes:

- Data quality problems: Null explosions, type mismatches, or duplicate rows cause perfectly ordered jobs to abort mid-run

- Resource constraints: CPU or memory starvation on a shared cluster delays dependent tasks and stretches execution windows

- Permission changes: Revoked credentials or altered IAM roles suddenly block access to critical inputs

The table below maps these challenges to concrete business impacts:

How Can You Manage Dependencies Effectively?

When a single late-arriving table can derail your entire workflow, explicit dependency management becomes your first defense. Instead of hoping tasks run in the right order, you tell the orchestrator exactly how data should flow.

1. Define Dependencies Explicitly

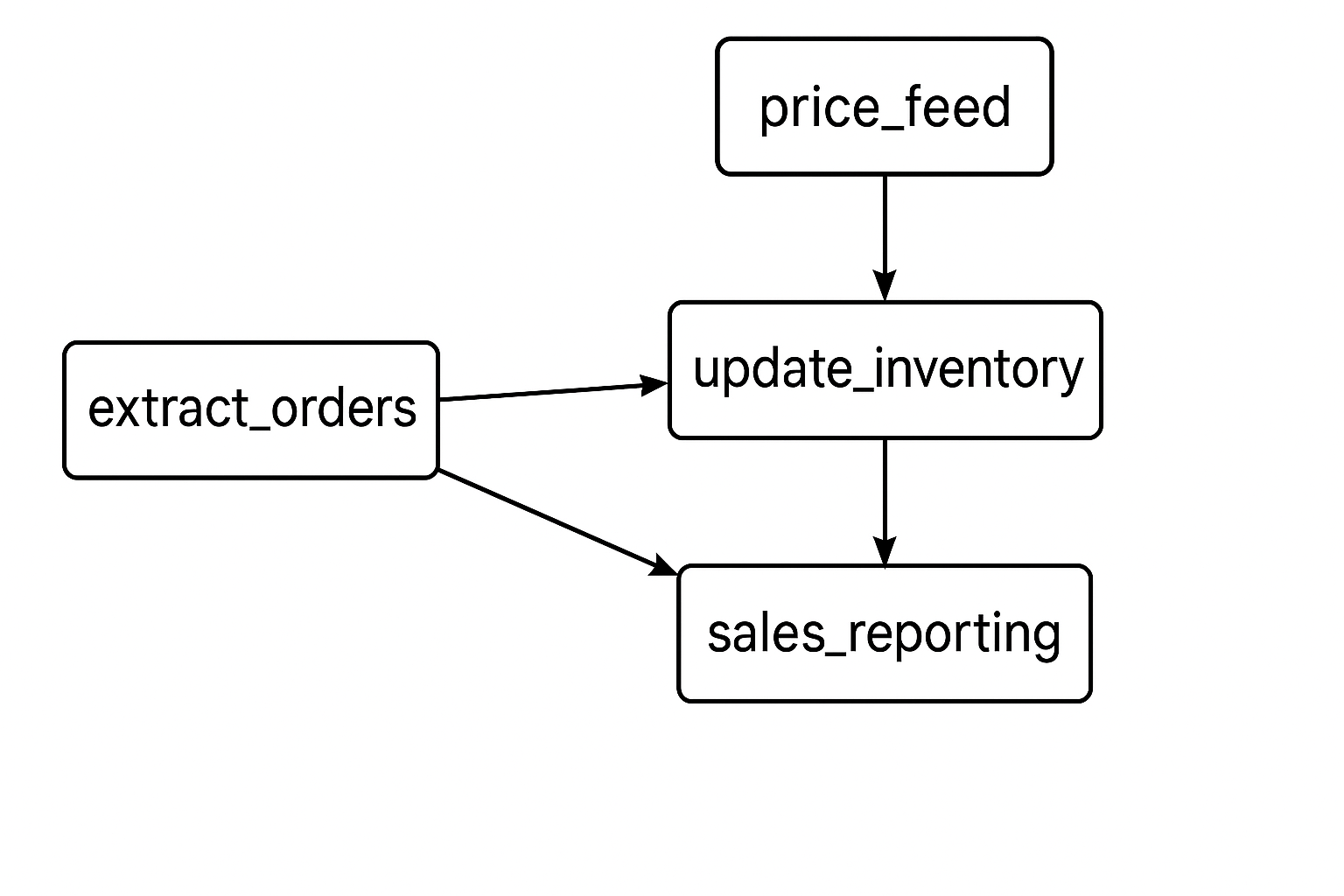

DAG-based orchestrators give you several ways to define clear dependencies:

- Apache Airflow: Write a Python DAG and set update_inventory.set_upstream(load_orders) so the inventory job never starts until orders finish

- Dagster: Declare an orders asset and an inventory asset, and Dagster infers the edge automatically, giving you searchable lineage

- Prefect: Use an imperative style where dependencies are inferred at runtime from how task results flow between calls, and methods like .map() fan work out dynamically
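To make the idea of an explicit dependency graph concrete without tying it to any one orchestrator, here is a minimal sketch using Python's standard-library graphlib module. The task names (load_orders, update_inventory, refresh_dashboard) and the execution_order helper are hypothetical, chosen only for illustration; a real orchestrator adds scheduling, state, and retries on top of this ordering step.

```python
from graphlib import TopologicalSorter

# Hypothetical task names. Each key maps to the set of tasks it depends on.
dependencies = {
    "load_orders": set(),
    "update_inventory": {"load_orders"},
    "refresh_dashboard": {"update_inventory"},
}


def execution_order(graph):
    """Return a run order in which every task comes after its upstream tasks."""
    # static_order() raises graphlib.CycleError if the graph contains a cycle,
    # so circular dependencies are rejected before anything runs.
    return list(TopologicalSorter(graph).static_order())


order = execution_order(dependencies)
print(order)
```

Because the graph is declared as data, a misordered or circular dependency is caught when the order is computed rather than halfway through a nightly run.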

2. Implement Isolation and Modular Design

Break ingestion, transformation, and serving into separate, loosely coupled modules. If an upstream API changes its schema, only the ingestion layer takes the hit; transformations stay green because they depend on a contract-controlled staging table, not the volatile raw feed.

3. Use Readiness Signals

You need positive confirmation that prerequisites are actually ready. Readiness signals can be as light as a row-count check or as strict as full schema validation against a stored contract. Data teams combine health probes with schema diffing tools that block execution if a column disappears.
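A readiness signal of this kind could look roughly like the sketch below, which combines a schema check with a row-count check against an in-memory SQLite table. The table name stg_orders, the expected columns, and the is_ready helper are assumptions made up for this example, not part of any particular tool.

```python
import sqlite3

# Hypothetical contract for a staging table: expected columns and a minimum row count.
EXPECTED_COLUMNS = {"order_id", "amount", "created_at"}
MIN_ROWS = 1


def is_ready(conn, table, expected_columns, min_rows):
    """Return (ready, reason) for a table in an SQLite database."""
    # PRAGMA table_info yields one row per column; index 1 holds the column name.
    # The table name is interpolated directly, so it must come from trusted config.
    columns = {row[1] for row in conn.execute(f"PRAGMA table_info({table})")}
    if not columns:
        return False, f"table {table} does not exist"
    missing = expected_columns - columns
    if missing:
        return False, f"missing columns: {sorted(missing)}"
    (count,) = conn.execute(f"SELECT COUNT(*) FROM {table}").fetchone()
    if count < min_rows:
        return False, f"only {count} rows, expected at least {min_rows}"
    return True, "ok"


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE stg_orders (order_id INTEGER, amount REAL, created_at TEXT)")
empty_result = is_ready(conn, "stg_orders", EXPECTED_COLUMNS, MIN_ROWS)
conn.execute("INSERT INTO stg_orders VALUES (1, 9.99, '2024-01-01')")
loaded_result = is_ready(conn, "stg_orders", EXPECTED_COLUMNS, MIN_ROWS)
print(empty_result, loaded_result)
```

A downstream task would call a check like this first and refuse to start, or wait and re-check, when the result is not ready.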

What Are the Common Retry Pitfalls?

Retries exist because networks drop packets, SaaS APIs time out, and transient glitches are inevitable. Yet the same technique meant to keep pipelines resilient can silently create bigger outages when it's misconfigured.

1. Blind Retries Trigger Provider Limits

A wall of blind retries can trigger HTTP 429 responses that lock your account. Cloud providers warn that synchronized retries from many clients create a "thundering herd" that overwhelms services.

2. Fixed Intervals Create Bottlenecks

Without exponential backoff, every attempt fails at exactly the same bottleneck, whether that's a flaky endpoint or a slow database, so you waste compute and extend outages. Static delays rarely give systems enough time to recover.

3. Non-Idempotent Operations Risk Corruption

If a pipeline resends a POST that inserts rows or charges cards, you risk duplicate transactions and data corruption. Missing idempotency keys turn innocent retries into costly duplicates.

4. Aggressive Policies Overwhelm Infrastructure

Fast, unlimited retries can saturate thread pools and hide the root cause, forcing engineers into marathon debugging sessions instead of quick recovery.

Watch for these warning signs in your systems:

- Spikes in 429 or 503 errors after failures

- Duplicate records appearing downstream

- CPU or thread pools maxed out during incidents

- Manual pauses you add to "let the system cool off"

How Do You Design Robust Retry Strategies?

You can salvage most failures without overwhelming the systems you depend on by pairing disciplined retry logic with clear failure semantics.

1. Use Exponential Backoff with Jitter

Straight-line or immediate retries turn a single hiccup into a request storm. Stretch the delay between attempts exponentially and add randomness so your retries don't land in lockstep with everyone else's.
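As a rough illustration, the sketch below implements one common variant, often called "full jitter", where each delay is drawn uniformly between zero and an exponentially growing ceiling. The function names, the base and cap values, and the simulated flaky operation are all assumptions for this example; real limits should come from the provider's documentation.

```python
import random
import time


def backoff_delay(attempt, base=1.0, cap=60.0):
    """Full-jitter delay for a 0-based attempt: uniform in [0, min(cap, base * 2**attempt)]."""
    return random.uniform(0.0, min(cap, base * (2 ** attempt)))


def retry(operation, max_attempts=5, base=1.0, cap=60.0, sleep=time.sleep):
    """Call operation() until it succeeds or max_attempts is exhausted."""
    for attempt in range(max_attempts):
        try:
            return operation()
        except Exception:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the last error to the caller
            sleep(backoff_delay(attempt, base, cap))


# Simulated operation that fails twice before succeeding.
calls = {"count": 0}


def flaky():
    calls["count"] += 1
    if calls["count"] < 3:
        raise ConnectionError("simulated transient failure")
    return "ok"


delays = []
result = retry(flaky, sleep=delays.append)  # record delays instead of actually sleeping
print(result, delays)
```

The bounded max_attempts matters as much as the jitter: without a ceiling on attempts, a persistent failure turns into an endless loop that hides the root cause.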

2. Differentiate Transient vs. Permanent Failures

Not every error deserves another shot. A 500 or network timeout is often fleeting, whereas a 401 or malformed payload will fail forever. Before retrying, inspect the error surface and only loop when the fault is truly transient.
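One way to encode that decision is a small classifier that the retry loop consults before looping. The sketch below is an assumption-laden example: HttpError is a hypothetical exception type, and the set of retryable status codes is a common starting point rather than a rule, since each API documents its own behavior.

```python
# Assumed set of status codes worth retrying; adjust to each API's documented behavior.
TRANSIENT_STATUS = {408, 429, 500, 502, 503, 504}


class HttpError(Exception):
    """Hypothetical exception carrying an HTTP status code."""

    def __init__(self, status):
        super().__init__(f"HTTP {status}")
        self.status = status


def is_transient(error):
    """Return True only for failures that may succeed on a later attempt."""
    if isinstance(error, (TimeoutError, ConnectionError)):
        return True  # network-level faults are usually temporary
    if isinstance(error, HttpError):
        return error.status in TRANSIENT_STATUS
    return False  # unknown errors (bad payloads, bugs) are treated as permanent


for err in (HttpError(503), HttpError(401), TimeoutError(), ValueError("bad payload")):
    print(type(err).__name__, err, "retry" if is_transient(err) else "fail fast")
```

Defaulting unknown errors to "permanent" is a deliberate choice here: failing fast on something unexpected is usually cheaper than retrying a request that can never succeed.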

3. Implement Idempotency and Deduplication

Even perfect backoff logic can corrupt data if the same operation runs twice. Use idempotency keys, natural primary keys, or upsert semantics to ensure a second attempt rewrites the exact record instead of inserting a duplicate.
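The upsert approach can be sketched with SQLite's ON CONFLICT clause, assuming a SQLite build that supports it (3.24 or later). The orders table, its columns, and the load_batch helper are hypothetical names for this example; the same pattern applies to MERGE or upsert statements in other databases.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (order_id TEXT PRIMARY KEY, amount REAL)")


def load_batch(conn, rows):
    """Upsert rows keyed on order_id so that replaying a batch is harmless."""
    with conn:  # commits on success, rolls back if any statement fails
        conn.executemany(
            "INSERT INTO orders (order_id, amount) VALUES (?, ?) "
            "ON CONFLICT(order_id) DO UPDATE SET amount = excluded.amount",
            rows,
        )


batch = [("A-1", 10.0), ("A-2", 25.5)]
load_batch(conn, batch)
load_batch(conn, batch)  # simulated retry of the same batch

row_count = conn.execute("SELECT COUNT(*) FROM orders").fetchone()[0]
print(row_count)
```

Here the primary key plays the role of the idempotency key: the second load overwrites the same two rows instead of adding two more.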

How Do Orchestration and Monitoring Tools Help?

Modern orchestrators turn your scripts into dependable workflows by enforcing order, retries, and visibility. Instead of hoping a downstream task waits for its input, you define that dependency once and let the scheduler enforce it every run.

1. Apache Airflow

Treats a pipeline as a static DAG defined in Python. You spell out task_b >> task_c, and under the default trigger rule the scheduler never lets task_c fire until task_b succeeds. Set retries=3 and enable exponential backoff (the retry_exponential_backoff option), and Airflow automatically reschedules the task when an attempt fails.

2. Dagster

Takes a more declarative, asset-centric stance. You focus on the data artifact and Dagster derives the dependency graph for you. Because each asset carries metadata, the UI renders end-to-end lineage out of the box.

3. Prefect

Favors an imperative, code-as-flow style. Tasks link at runtime, and methods like .map() spawn dynamic parallel branches. Retry policies attach to tasks through options such as retries, retry_delay_seconds, and retry_jitter_factor, which cover backoff and jitter.

4. Monitoring Benefits

A health dashboard shows retry storms forming before they saturate an API. When last night's currency-rates fetch hits a 429, the orchestrator delays the retry, logs the 429, and surfaces an alert, protecting the finance dashboard your CFO checks every morning.
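The "delay the retry and log the 429" behavior can be approximated in plain Python as shown below. This is a sketch under assumptions: the delay_after_429 helper, the logger name, and the fallback and maximum values are invented for illustration, and headers is assumed to be a plain dict keyed by the canonical Retry-After header name. Only the integer-seconds form of Retry-After is parsed; the HTTP-date form falls back to the default.

```python
import logging

logger = logging.getLogger("pipeline.retries")  # hypothetical logger name


def delay_after_429(headers, fallback_seconds=30, max_seconds=300):
    """Pick a wait time after an HTTP 429, preferring the server's Retry-After hint."""
    raw = headers.get("Retry-After", "")
    try:
        delay = int(raw)  # Retry-After given as a whole number of seconds
    except ValueError:
        delay = fallback_seconds  # header missing or in HTTP-date form
    delay = max(0, min(delay, max_seconds))  # never wait a negative or unbounded time
    # The log line is what a dashboard or alert rule would key on.
    logger.warning("HTTP 429 received; next attempt in %d seconds", delay)
    return delay


print(delay_after_429({"Retry-After": "120"}))
print(delay_after_429({}))
```

Counting these warnings over a time window gives a simple signal for spotting a retry storm while it is still forming.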

What Outcomes Can You Expect With Proper Management?

Get dependency chains and retry logic right, and you spend far less time firefighting. Teams that declare task order explicitly, validate readiness signals, and adopt exponential backoff with jitter tend to see fewer on-call pages because upstream hiccups no longer ripple through the stack.

Reliability Improvements

Clear DAG definitions and proactive validation prevent many dependency-related failures, while retries that respect rate limits and differentiate transient from permanent errors avoid the "retry storms" that lock you out of APIs or silently corrupt data.

Quality Benefits

Clean hand-offs between jobs prevent corrupt or partial datasets from making their way into downstream transformations. With validation gates guarding every merge point, schema drifts and permission changes are caught early.

Debugging Advantages

Clear lineage and structured retries cut mean time to recovery; root causes surface in minutes instead of hours. Modular, isolated stages let you scale individual components without risking cascade failures.

You'll know it's working when metrics like failure rate, MTTR, and duplicate-load incidents trend down while on-time delivery and SLA conformance trend up.

How Does Airbyte Support Dependency and Retry Management?

Airbyte's design aligns with the dependency and retry patterns that keep workflows resilient, so you spend less time firefighting fragile connections and more time shipping trusted data.

Built-in Orchestration Integration

Airbyte connectors expose upstream and downstream ordering through the same DAG semantics used by orchestrators such as Airflow, Dagster, and Prefect. When you register a connection, the platform publishes explicit edges: "source extraction ➜ normalization ➜ loading", making it impossible for a downstream load to start before its source data is materialized.

Automatic Retry Logic

Each connector ships with automatic retries that follow exponential-backoff-with-jitter algorithms. You configure only the upper limits (maximum attempts or total timeout), and Airbyte spreads subsequent calls over progressively longer, randomized intervals. This prevents thundering-herd storms that would trigger API rate-limit lockouts.

Isolation by Design

Extraction, normalization, and loading run in separate containers within the same pod, so a schema drift detected during normalization halts that step without polluting raw ingest files. This mirrors the proactive validation approach for preventing cascade failures.

Flexible Deployment Options

Airbyte's deployment options (cloud, self-managed, or hybrid) maintain identical retry policies and dependency graphs across environments. This removes the "works in staging, fails in prod" drift that often haunts data teams.

What's the Bottom Line?

Explicit dependency management and intelligent retry logic separate reliable systems from constant firefighting. When you pair clear dependency definitions with jitter-based backoff strategies, your team shifts from reactive troubleshooting to proactive data value creation.

Try Airbyte for free today, and explore how it handles dependencies and retries at enterprise scale with 600+ connectors.

Frequently Asked Questions

Why do most pipeline failures come from dependencies, not SQL bugs?

Because dependencies control task timing and order, a late upstream job or missing schema can break the entire chain even if the SQL itself is perfect. Misordered tasks, missing readiness checks, or revoked permissions are more common causes of outages than syntax errors.

What are the biggest retry pitfalls to avoid?

Common mistakes include blind retries that trigger API rate limits, fixed-interval retries that overwhelm bottlenecks, and non-idempotent operations that cause duplicates. Without exponential backoff and idempotency keys, retries can create larger outages instead of solving them.

How can I design robust retry logic?

Pair exponential backoff with jitter to avoid synchronized retry storms, differentiate between transient (timeouts, 500 errors) and permanent (401s, invalid payloads) failures, and enforce idempotency with unique keys or upserts so replays don’t corrupt data.

How does Airbyte help manage dependencies and retries?

Airbyte enforces dependency order between extraction, normalization, and loading, so jobs can’t run out of sequence. Its connectors include automatic exponential-backoff-with-jitter retries, while container isolation prevents upstream schema changes from corrupting downstream data.
