How to Train an LLM on Your Own Data in 8 Easy Steps


✨ AI Generated Summary

Generative AI, powered by large language models (LLMs), is increasingly adopted across industries but requires fine-tuning on domain-specific data for improved accuracy and compliance. Modern training emphasizes efficient methods like LoRA/QLoRA, systematic data governance, and privacy-preserving techniques such as differential privacy and federated learning.

- Fine-tuning on proprietary data is reported to yield 20–30% accuracy improvements in specialized fields.

- Training requires high-quality, rights-cleared data, advanced GPU/TPU infrastructure, and robust evaluation frameworks.

- Efficient parameter tuning and data governance (deduplication, FAIR principles) reduce costs and enhance model reliability.

- Privacy and security can be maintained through homomorphic encryption and confidential computing, while bias mitigation tools help address fairness.

- Following an 8-step training process enables organizations to deploy secure, high-performing, domain-specific LLMs.

Generative AI applications are gaining significant popularity in finance, healthcare, law, e-commerce, and beyond. Large language models (LLMs) are a core component of these applications because they understand and produce human-readable content. Pre-trained LLMs, however, can fall short in specialized domains such as finance or law. The solution is to train—or fine-tune—LLMs on your own data.

Recent developments in LLM training have changed how organizations approach custom model development. Enterprise adoption has accelerated, and a majority of organizations now report regularly using generative AI powered by LLMs. Modern training methods emphasize systematic data curation, careful preprocessing, and parameter-efficient approaches that cut computational requirements while preserving performance. Organizations that adopt these practices report significant accuracy improvements on domain-specific tasks compared with general-purpose models.

Below is a step-by-step guide that explains why and how to do exactly that.

What Is LLM Training and How Does It Work?

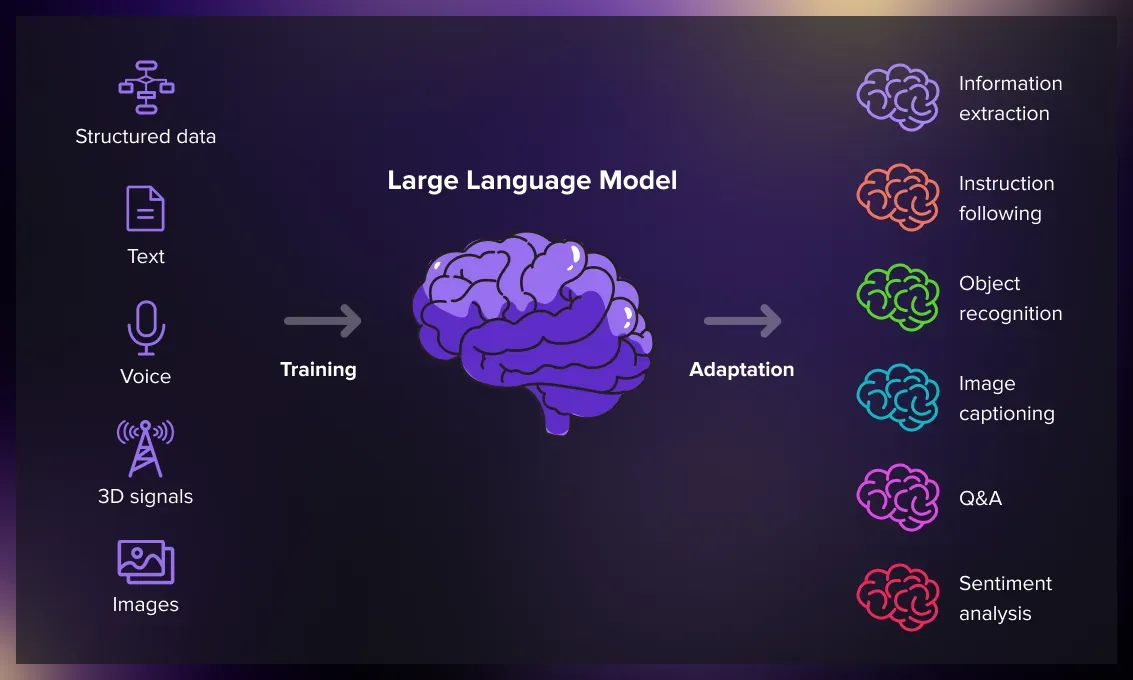

Large language models learn through a structured process called training. During pre-training, the model reads billions of text samples, identifies patterns, and repeatedly tries to predict the next token (roughly, the next word or word fragment) in a sequence, adjusting its parameters each time its prediction is wrong. After pre-training, models can be fine-tuned for specific tasks or for behaviors such as helpfulness and safety. Training is computationally intensive, often requiring thousands of specialized processors running for months, which is one reason state-of-the-art models are so costly to build.
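To make "predict the next token and correct itself" concrete, here is a minimal sketch of the next-token objective. The model assigns a probability to every possible next token, and the loss is the average negative log-probability of the tokens that actually came next (cross-entropy); training adjusts the model's parameters to push this number down. The four-token vocabulary and both toy "models" are hypothetical stand-ins for a real network.

```python
import math

def next_token_loss(token_ids, predict_probs):
    """Average cross-entropy of predicting token_ids[i + 1] from token_ids[: i + 1]."""
    losses = []
    for i in range(len(token_ids) - 1):
        context = token_ids[: i + 1]
        probs = predict_probs(context)            # distribution over the vocabulary
        target = token_ids[i + 1]                 # the token that actually came next
        losses.append(-math.log(probs[target]))   # low probability on the truth = high loss
    return sum(losses) / len(losses)

VOCAB_SIZE = 4  # hypothetical tiny vocabulary: token ids 0..3

def uniform_model(context):
    # An untrained "model": every next token is equally likely.
    return [1.0 / VOCAB_SIZE] * VOCAB_SIZE

def better_model(context):
    # A hypothetical "trained" model that has learned token t is usually followed by t + 1.
    guess = (context[-1] + 1) % VOCAB_SIZE
    return [0.7 if tok == guess else 0.1 for tok in range(VOCAB_SIZE)]

sequence = [0, 1, 2, 3]
print(round(next_token_loss(sequence, uniform_model), 4))  # 1.3863, i.e. ln(4)
print(round(next_token_loss(sequence, better_model), 4))   # 0.3567, i.e. -ln(0.7)
```

The lower loss of the second model is exactly what "learning patterns" means in this setting: the model has shifted probability toward the tokens that really follow.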

The large language model market has grown rapidly, with valuations rising substantially year over year. LLM architectures have evolved as well: sparse attention mechanisms reduce computational load, and extended context windows improve contextual understanding. Contemporary approaches also incorporate multimodal integration, allowing models to process text, images, and audio during training. Efficiency has become a priority too, and model compression through quantization and knowledge distillation can substantially reduce model size while largely maintaining performance.
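Quantization, one of the compression techniques mentioned above, stores weights with fewer bits. The sketch below shows symmetric per-tensor int8 quantization in plain Python: each float is mapped to an integer in [-127, 127] plus one shared scale, cutting storage from 4 bytes (fp32) to 1 byte per weight. Real toolkits use per-channel or block-wise scales and formats such as 4-bit NormalFloat, so treat this as an illustration of the idea only; the weight values are hypothetical.

```python
def quantize_int8(weights):
    """Symmetric per-tensor int8 quantization: w is approximated by scale * q, q in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale

def dequantize(q, scale):
    """Recover approximate float weights from int8 values and the shared scale."""
    return [scale * v for v in q]

weights = [0.42, -1.27, 0.003, 0.9, -0.5]   # hypothetical fp32 weights
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(q, scale)
print(max_error)   # rounding error is at most scale / 2 per weight
```

The trade-off is visible in `max_error`: the larger the largest weight, the coarser the grid for all the others, which is why production schemes use finer-grained scales.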

Training methodologies have also embraced systematic data governance. Modern frameworks emphasize semantic deduplication and FAIR-compliant dataset documentation to ensure training data integrity and reproducibility. Many organizations implement a three-tiered deduplication strategy: exact matching through MD5 hashing, fuzzy matching using MinHash, and semantic clustering to eliminate redundant content that could lead to overfitting.
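The first two deduplication tiers can be sketched with the standard library alone: an MD5 digest of normalized text catches exact duplicates, and MinHash signatures estimate the Jaccard similarity of word-shingle sets to flag near-duplicates. The shingle size, the 64 seeded hash functions, and the 0.5 threshold are illustrative assumptions. Production pipelines usually add locality-sensitive hashing (banding) to avoid comparing every pair, and the semantic-clustering tier needs an embedding model, which is omitted here.

```python
import hashlib
import re

def normalize(text):
    text = re.sub(r"[^\w\s]", "", text.lower())   # drop punctuation
    return re.sub(r"\s+", " ", text).strip()      # collapse whitespace

def exact_dedup(docs):
    """Tier 1: keep only the first document for each MD5 digest of normalized text."""
    seen, kept = set(), []
    for doc in docs:
        digest = hashlib.md5(normalize(doc).encode("utf-8")).hexdigest()
        if digest not in seen:
            seen.add(digest)
            kept.append(doc)
    return kept

def shingles(text, k=3):
    words = normalize(text).split()
    return {" ".join(words[i : i + k]) for i in range(max(1, len(words) - k + 1))}

def minhash_signature(shingle_set, num_hashes=64):
    """Tier 2: for each seeded hash function, record the minimum hash over all shingles."""
    signature = []
    for seed in range(num_hashes):
        hashes = (
            int.from_bytes(hashlib.md5(f"{seed}:{s}".encode("utf-8")).digest()[:8], "big")
            for s in shingle_set
        )
        signature.append(min(hashes))
    return signature

def estimated_jaccard(sig_a, sig_b):
    # The fraction of matching minima estimates the Jaccard similarity of the shingle sets.
    return sum(a == b for a, b in zip(sig_a, sig_b)) / len(sig_a)

def near_dedup(docs, threshold=0.5, k=3):
    kept, kept_sigs = [], []
    for doc in docs:
        sig = minhash_signature(shingles(doc, k))
        if all(estimated_jaccard(sig, other) < threshold for other in kept_sigs):
            kept.append(doc)
            kept_sigs.append(sig)
    return kept

docs = [
    "The quarterly report shows revenue grew in every region.",
    "the quarterly report   shows revenue grew in every region.",           # exact duplicate once normalized
    "The quarterly report shows revenue grew in every region this year.",  # near duplicate
    "Patients should fast for eight hours before the procedure.",
]
after_exact = exact_dedup(docs)
after_near = near_dedup(after_exact)
print(len(docs), len(after_exact), len(after_near))
```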

Why Should You Train an AI LLM on Your Own Data?

LLMs and LLM-powered assistants such as ChatGPT, Gemini, Llama, and Copilot (formerly Bing Chat) automate tasks like text generation, translation, summarization, and speech recognition. Yet they may produce inaccurate, biased, or insecure outputs, especially on niche topics. Training on your own domain data helps you:

- Achieve higher accuracy in specialized fields (finance, healthcare, law, etc.).

- Embed proprietary methodologies and reasoning frameworks.

- Meet compliance requirements with fine-grained control over outputs.

- Potentially realize 20–30% accuracy improvements over general-purpose models, as some organizations report.

Adoption varies significantly by industry: retail and e-commerce hold a strong share of the market, followed by financial services, while healthcare shows rapid uptake in patient-facing applications.

What Are the Prerequisites for Training an LLM on Your Own Data?

Data Requirements

Thousands to millions of high-quality, diverse, rights-cleared examples (prompt/response pairs for instruction tuning). Modern approaches emphasize relevance over volume.
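Instruction-tuning data is commonly stored as JSON Lines, one prompt/response pair per line. The sketch below validates such records before training. The field names `prompt` and `response`, the `source_license` field used to track rights clearance, and the length limit are hypothetical choices, not a format any particular framework requires.

```python
import json

REQUIRED_FIELDS = ("prompt", "response", "source_license")  # hypothetical schema
MAX_CHARS = 8000  # hypothetical per-field length limit

def validate_jsonl(lines):
    """Split JSON Lines strings into valid records and human-readable errors."""
    valid, errors = [], []
    for line_no, line in enumerate(lines, start=1):
        if not line.strip():
            continue  # tolerate blank lines
        try:
            record = json.loads(line)
        except json.JSONDecodeError as exc:
            errors.append(f"line {line_no}: invalid JSON ({exc.msg})")
            continue
        if not isinstance(record, dict):
            errors.append(f"line {line_no}: expected a JSON object")
            continue
        missing = [f for f in REQUIRED_FIELDS if not str(record.get(f) or "").strip()]
        if missing:
            errors.append(f"line {line_no}: missing {', '.join(missing)}")
            continue
        if any(len(str(record[f])) > MAX_CHARS for f in ("prompt", "response")):
            errors.append(f"line {line_no}: field exceeds {MAX_CHARS} characters")
            continue
        valid.append(record)
    return valid, errors

sample = [
    '{"prompt": "Define EBITDA.", "response": "Earnings before interest, taxes, depreciation, and amortization.", "source_license": "internal"}',
    '{"prompt": "Summarize clause 4.2.", "response": ""}',
    'not json',
]
records, problems = validate_jsonl(sample)
print(len(records), problems)
```

Rejecting records without a license field is one way to make "rights-cleared" an enforced property of the pipeline rather than a policy on paper.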

Technical Infrastructure

GPU/TPU clusters, adequate storage and RAM, and frameworks such as PyTorch or TensorFlow. Advanced GPUs are expensive at current market prices, and complete multi-GPU setups represent a substantial investment.
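A rough way to size infrastructure is to multiply parameter count by bytes per parameter. The figures below are common rules of thumb, not measurements: about 16 bytes per parameter for full fine-tuning with Adam in mixed precision (fp16 weights and gradients, fp32 master weights, and two fp32 Adam moments), 2 bytes per parameter for a frozen 16-bit base model under LoRA, and roughly 0.5 bytes per parameter for a 4-bit base model under QLoRA. Activations, adapter state, and framework overhead are deliberately ignored, so real requirements are higher.

```python
BYTES_PER_PARAM = {
    # Rules of thumb; excludes activations, adapter weights, and framework overhead.
    "full_finetune_adam_mixed_precision": 2 + 2 + 4 + 4 + 4,  # fp16 weights + fp16 grads + fp32 master + Adam m, v
    "lora_frozen_16bit_base": 2,
    "qlora_frozen_4bit_base": 0.5,
}

def estimate_gb(num_params, method):
    """Approximate memory for model state in decimal gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[method] / 1e9

for method in BYTES_PER_PARAM:
    print(f"7B model, {method}: ~{estimate_gb(7e9, method):.1f} GB")
```

For a 7B-parameter model this gives roughly 112 GB, 14 GB, and 3.5 GB respectively, which illustrates why parameter-efficient methods, and QLoRA in particular, bring fine-tuning within reach of a single GPU.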

Model Selection

Pick an open-source or licensed base model and choose between full fine-tuning and parameter-efficient methods such as LoRA.

Training Strategy

Hyperparameter tuning, clear metrics, testing pipelines, and version control. Bayesian optimization can often find good learning rates in far fewer trials than grid search.

Operational Considerations

Budgeting, timelines, staffing, and deployment planning. Training costs vary widely with scope and requirements, and frontier-scale models sit at the far upper end.

Evaluation

Use benchmarks and human feedback; iterate based on weaknesses.

Deployment

Optimize, serve, and monitor the model securely and efficiently.

Essential Data Governance and Quality-Assurance Frameworks

FAIR-Compliant Dataset Documentation

FAIR principles ensure dataset transparency and reusability.
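FAIR stands for Findable, Accessible, Interoperable, and Reusable. One lightweight way to apply it is to ship a machine-readable dataset card alongside each training set. The fields below are a hypothetical minimal schema that maps each principle to concrete metadata; established vocabularies such as DataCite or schema.org `Dataset` are richer and may suit you better.

```python
import json
from dataclasses import asdict, dataclass, field

@dataclass
class DatasetCard:
    # Findable: a persistent identifier and descriptive metadata.
    identifier: str
    title: str
    description: str
    keywords: list = field(default_factory=list)
    # Accessible: where and under what conditions the data can be retrieved.
    access_url: str = ""
    access_conditions: str = "internal use only"
    # Interoperable: open, documented formats and schemas.
    file_format: str = "application/jsonl"
    schema_fields: list = field(default_factory=list)
    # Reusable: license, provenance, and versioning.
    license: str = ""
    provenance: str = ""
    version: str = "1.0.0"

    def missing_fair_fields(self):
        """List required metadata fields that are still empty."""
        required = ("identifier", "title", "description", "access_url", "license", "provenance")
        return [name for name in required if not getattr(self, name)]

card = DatasetCard(
    identifier="finance-qa-v1",  # hypothetical identifier
    title="Finance instruction-tuning pairs",
    description="Prompt/response pairs drawn from internal analyst notes.",
    keywords=["finance", "instruction-tuning"],
    schema_fields=["prompt", "response", "source_license"],
    license="Proprietary, internal",
)
print(card.missing_fair_fields())
print(json.dumps(asdict(card), indent=2))
```

A check like `missing_fair_fields()` can gate a dataset from entering the training pipeline until its documentation is complete.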

Quality Control and Bias Mitigation

Human-in-the-loop annotation and tools like Snorkel provide weak supervision; bias audits with AI Fairness 360 help ensure fairness.
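Two ideas from this paragraph can be sketched in a few lines. Weak supervision combines several noisy labeling functions into one training label; Snorkel learns a label model that weights them, but the simpler majority vote below (a swapped-in baseline, not Snorkel's method) shows the idea. The disparate impact ratio, one of the fairness metrics AI Fairness 360 reports, divides the favorable-outcome rate of an unprivileged group by that of a privileged group; values well below 1.0 (0.8 is a common rule-of-thumb threshold) suggest bias. The labeling functions, groups, and decisions are all hypothetical.

```python
from collections import Counter

ABSTAIN = None

# Hypothetical labeling functions for flagging complaint messages (1 = complaint, 0 = not).
def lf_keyword_refund(text):
    return 1 if "refund" in text.lower() else ABSTAIN

def lf_keyword_thanks(text):
    return 0 if "thank" in text.lower() else ABSTAIN

def lf_exclamation(text):
    return 1 if text.count("!") >= 2 else ABSTAIN

LABELING_FUNCTIONS = [lf_keyword_refund, lf_keyword_thanks, lf_exclamation]

def majority_vote(text):
    """Combine non-abstaining votes; return ABSTAIN when there are no votes or a tie."""
    votes = [v for v in (lf(text) for lf in LABELING_FUNCTIONS) if v is not ABSTAIN]
    if not votes:
        return ABSTAIN
    counts = Counter(votes).most_common()
    if len(counts) > 1 and counts[0][1] == counts[1][1]:
        return ABSTAIN
    return counts[0][0]

def disparate_impact(outcomes, groups, unprivileged, privileged, favorable=1):
    """P(favorable | unprivileged) / P(favorable | privileged)."""
    def rate(target_group):
        selected = [o for o, g in zip(outcomes, groups) if g == target_group]
        return sum(o == favorable for o in selected) / len(selected)
    return rate(unprivileged) / rate(privileged)

print(majority_vote("I want a refund now!!"))       # 1
print(majority_vote("Thanks, all sorted."))          # 0
print(majority_vote("Thanks, but I need a refund"))  # None (tie)

# Hypothetical model decisions (1 = approved) for two applicant groups.
decisions = [1, 0, 1, 1, 0, 1, 0, 0]
applicant_groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
print(disparate_impact(decisions, applicant_groups, unprivileged="B", privileged="A"))  # 0.25 / 0.75, about 0.33
```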

Most Effective Parameter-Efficient Fine-Tuning Methods

Low-Rank Adaptation (LoRA) & Variants

- LoRA inserts trainable low-rank matrices while freezing base parameters.

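- QLoRA quantizes the frozen base model to 4 bits, enabling fine-tuning of models with tens of billions of parameters on a single GPU (the original QLoRA paper fine-tuned a 65B-parameter model on one 48 GB GPU).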

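- Variants refine the approach further: AdaLoRA allocates the rank budget adaptively across layers, and DoRA decomposes weights into magnitude and direction components.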

Parameter-efficient fine-tuning (PEFT) lets organizations train only a small percentage of a model's parameters while retaining most of the performance of full fine-tuning.
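The core LoRA idea fits in a few lines: the frozen weight matrix W (d × k) is never updated, and a trainable low-rank update B·A of rank r is added and scaled by alpha / r, so the layer computes h = W·x + (alpha / r)·B·A·x. As in the LoRA paper, B starts at zero, so training begins from the base model's exact behavior. This plain-Python sketch uses tiny hypothetical sizes and no framework; it shows the forward pass and why the trainable share of parameters is so small.

```python
import random

def matvec(matrix, vector):
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]

class LoRALinear:
    """Frozen weight W (d x k) plus a trainable low-rank update B (d x r) @ A (r x k)."""

    def __init__(self, weight, rank, alpha, seed=0):
        rng = random.Random(seed)
        d, k = len(weight), len(weight[0])
        self.weight = weight                                                     # frozen
        self.A = [[rng.gauss(0.0, 0.01) for _ in range(k)] for _ in range(rank)]  # trainable, random init
        self.B = [[0.0] * rank for _ in range(d)]                                # trainable, zero init
        self.scaling = alpha / rank

    def forward(self, x):
        base = matvec(self.weight, x)
        update = matvec(self.B, matvec(self.A, x))  # B @ (A @ x), never materializing B @ A
        return [b + self.scaling * u for b, u in zip(base, update)]

    def trainable_params(self):
        return len(self.A) * len(self.A[0]) + len(self.B) * len(self.B[0])

def trainable_fraction(d, k, rank):
    """Share of one d x k matrix's parameters that LoRA trains: r * (d + k) / (d * k)."""
    return rank * (d + k) / (d * k)

W = [[1.0, 0.0, 2.0], [0.0, 1.0, -1.0]]   # tiny hypothetical 2 x 3 weight
layer = LoRALinear(W, rank=1, alpha=2)
print(layer.forward([1.0, 2.0, 3.0]))      # [7.0, -1.0], equal to W @ x because B starts at zero
print(f"{trainable_fraction(4096, 4096, 16):.2%}")  # 0.78% for rank 16 on a 4096 x 4096 matrix
```

After training, B·A can be merged into W, so the adapted model adds no inference latency.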

Implementation Best Practices

- Ranks between 8 and 64 are typical.

- Alpha values of 16–32 balance stability and flexibility.

- Extending LoRA beyond attention layers to feed-forward (FFN) layers and embeddings often gives better results.
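Extending LoRA to more modules enlarges the adapter, and the increase is easy to estimate: each adapted d_in × d_out matrix adds r·(d_in + d_out) trainable parameters. The sketch below assumes Llama-2-7B-like shapes (32 blocks, hidden size 4096, feed-forward size 11008) and ignores embeddings; the module names follow common Hugging Face Llama naming but serve here only as labels.

```python
HIDDEN = 4096   # assumed model width
FFN = 11008     # assumed feed-forward width
LAYERS = 32     # assumed number of transformer blocks

# (input_dim, output_dim) of each projection in one transformer block.
ATTENTION = {"q_proj": (HIDDEN, HIDDEN), "k_proj": (HIDDEN, HIDDEN),
             "v_proj": (HIDDEN, HIDDEN), "o_proj": (HIDDEN, HIDDEN)}
FEED_FORWARD = {"gate_proj": (HIDDEN, FFN), "up_proj": (HIDDEN, FFN),
                "down_proj": (FFN, HIDDEN)}

def lora_params(modules, rank, layers=LAYERS):
    """Total adapter parameters: rank * (d_in + d_out) per adapted matrix, per layer."""
    per_layer = sum(rank * (d_in + d_out) for d_in, d_out in modules.values())
    return per_layer * layers

for rank in (8, 16, 64):
    attn_only = lora_params(ATTENTION, rank)
    attn_ffn = lora_params({**ATTENTION, **FEED_FORWARD}, rank)
    print(f"r={rank}: attention only {attn_only:,} | attention + FFN {attn_ffn:,}")
```

At rank 16 this gives about 16.8M adapter parameters for attention only versus about 40.0M once the feed-forward projections are included, still well under 1% of a 7B-parameter model.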

How Modern Data-Integration Platforms Streamline LLM Pipelines

- Open-source ETL has evolved toward containerized, cloud-native connectors (e.g., Airbyte).

- Vector databases such as Weaviate, Qdrant, and Milvus provide scalable embedding storage.

- Orchestration tools (Dagster, Airflow) and data-versioning systems maintain reproducibility.
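Vector databases store embeddings and answer nearest-neighbor queries. The toy in-memory store below shows only that core operation, cosine-similarity top-k search, with hand-written three-dimensional vectors standing in for real embeddings. It is not the API of Weaviate, Qdrant, or Milvus, which add approximate indexes (such as HNSW), metadata filtering, and persistence; it also assumes no zero vectors.

```python
import math

class InMemoryVectorStore:
    """Brute-force cosine-similarity search; a stand-in for a real vector database."""

    def __init__(self):
        self.ids, self.vectors = [], []

    @staticmethod
    def _unit(vector):
        norm = math.sqrt(sum(v * v for v in vector))
        return [v / norm for v in vector]  # normalize so cosine similarity is a dot product

    def upsert(self, doc_id, vector):
        unit = self._unit(vector)
        if doc_id in self.ids:
            self.vectors[self.ids.index(doc_id)] = unit
        else:
            self.ids.append(doc_id)
            self.vectors.append(unit)

    def search(self, query, k=2):
        q = self._unit(query)
        scored = [(sum(a * b for a, b in zip(q, vec)), doc_id)
                  for doc_id, vec in zip(self.ids, self.vectors)]
        return [doc_id for _, doc_id in sorted(scored, reverse=True)[:k]]

store = InMemoryVectorStore()
store.upsert("refund-policy", [0.9, 0.1, 0.0])   # hypothetical embeddings
store.upsert("shipping-times", [0.1, 0.9, 0.1])
store.upsert("return-window", [0.8, 0.3, 0.1])
print(store.search([1.0, 0.2, 0.0], k=2))        # ['refund-policy', 'return-window']
```

The brute-force scan is linear in the number of stored vectors, which is precisely the cost that the approximate indexes in dedicated vector databases are designed to avoid.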

Cloud GPU pricing varies significantly across providers, and advanced GPU instances call for careful cost optimization to keep training sustainable.
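Because pricing differs by provider, a simple cost model helps compare options: GPU-hours × hourly rate, adjusted for each GPU's relative speed and padded for failed runs and experiments. The provider names, hourly rates, speed factors, and 25% contingency below are placeholders, not quoted prices; substitute current figures from your own providers.

```python
from dataclasses import dataclass

@dataclass
class GpuOffer:
    provider: str            # placeholder name
    hourly_rate_usd: float   # placeholder per-GPU price, not a real quote
    relative_speed: float    # assumed throughput relative to a baseline GPU

def training_cost(offer, baseline_gpu_hours, num_gpus, contingency=0.25):
    """Estimate (cost in USD, wall-clock hours) for a job needing baseline_gpu_hours on the baseline GPU."""
    gpu_hours = baseline_gpu_hours / offer.relative_speed
    wall_clock = gpu_hours / num_gpus
    cost = gpu_hours * offer.hourly_rate_usd * (1 + contingency)
    return round(cost, 2), round(wall_clock, 1)

offers = [
    GpuOffer("provider-a", hourly_rate_usd=2.00, relative_speed=1.0),
    GpuOffer("provider-b", hourly_rate_usd=3.50, relative_speed=2.0),
]
for offer in offers:
    cost, hours = training_cost(offer, baseline_gpu_hours=400, num_gpus=8)
    print(f"{offer.provider}: ~${cost:,.2f}, ~{hours} h wall clock")
```

With these placeholder numbers the pricier but faster option is cheaper overall ($875 versus $1,000) and finishes in half the time, which is why the hourly rate alone can mislead.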

Privacy-Preserving Architectures for Proprietary Data

- Homomorphic encryption allows computation on encrypted data.

- Federated learning with differential privacy enables cross-institution collaboration without sharing raw data.

- Confidential-computing hardware (Intel SGX, AMD SEV) isolates training processes.
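Federated learning keeps raw data at each institution and shares only model updates. The standard aggregation step, federated averaging (FedAvg), weights each client's parameters by its number of training examples. This sketch assumes each client has already trained locally and sends back a flat list of parameters; the client values are hypothetical, and a real deployment would pair this with secure aggregation and differential privacy.

```python
def federated_average(client_updates):
    """FedAvg aggregation: example-weighted mean of client parameter vectors.

    client_updates: list of (num_examples, parameters) tuples with equal-length parameters.
    """
    total = sum(n for n, _ in client_updates)
    global_params = [0.0] * len(client_updates[0][1])
    for n, params in client_updates:
        weight = n / total  # clients with more data pull the average further
        for i, p in enumerate(params):
            global_params[i] += weight * p
    return global_params

# Hypothetical locally trained parameters from three hospitals; raw records never leave each site.
updates = [
    (100, [0.2, 1.0]),
    (300, [0.6, 0.0]),
    (100, [0.2, 1.0]),
]
print(federated_average(updates))   # approximately [0.44, 0.4]
```

The server then broadcasts the averaged parameters back to the clients for the next round of local training.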

Differential privacy offers a mathematical bound on how much any single training example can influence the trained model, which limits what an attacker can reliably infer or extract about that example.
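During training, differential privacy is usually applied through DP-SGD: each example's gradient is clipped to a maximum L2 norm C, the clipped gradients are summed, Gaussian noise with standard deviation σ·C is added, and the result is averaged. Clipping bounds any single example's influence, and the noise masks what remains. The sketch below shows one such aggregation step on plain lists; C, σ, and the gradients are hypothetical, and the privacy accounting that turns σ and the number of steps into an (ε, δ) guarantee (handled by libraries such as Opacus or TensorFlow Privacy) is omitted.

```python
import math
import random

def clip(gradient, max_norm):
    """Scale a per-example gradient down so its L2 norm is at most max_norm."""
    norm = math.sqrt(sum(g * g for g in gradient))
    factor = min(1.0, max_norm / norm) if norm > 0 else 1.0
    return [g * factor for g in gradient]

def dp_sgd_gradient(per_example_grads, max_norm, noise_multiplier, rng):
    """Clip each example, sum, add N(0, (noise_multiplier * max_norm)^2) noise, then average."""
    summed = [0.0] * len(per_example_grads[0])
    for grad in per_example_grads:
        for i, g in enumerate(clip(grad, max_norm)):
            summed[i] += g
    sigma = noise_multiplier * max_norm
    noisy = [s + rng.gauss(0.0, sigma) for s in summed]
    return [v / len(per_example_grads) for v in noisy]

grads = [[0.3, 0.4], [3.0, 4.0], [-0.6, 0.8]]   # hypothetical; the second is an outlier
print(clip(grads[1], max_norm=1.0))             # outlier rescaled to L2 norm 1.0
update = dp_sgd_gradient(grads, max_norm=1.0, noise_multiplier=1.1, rng=random.Random(0))
print(update)                                   # noisy, so values depend on the random seed
```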

How to Train an AI LLM in 8 Easy Steps


- Define Your Goals – establish KPIs, compliance needs, and success metrics.

- Collect & Prepare Data – platforms such as Airbyte, with its 600+ connectors, simplify ingestion.

- Set Up the Environment – provision GPUs/TPUs, install frameworks, configure monitoring.

- Choose Model Architecture – GPT, BERT, T5, etc.; consider LoRA/QLoRA.

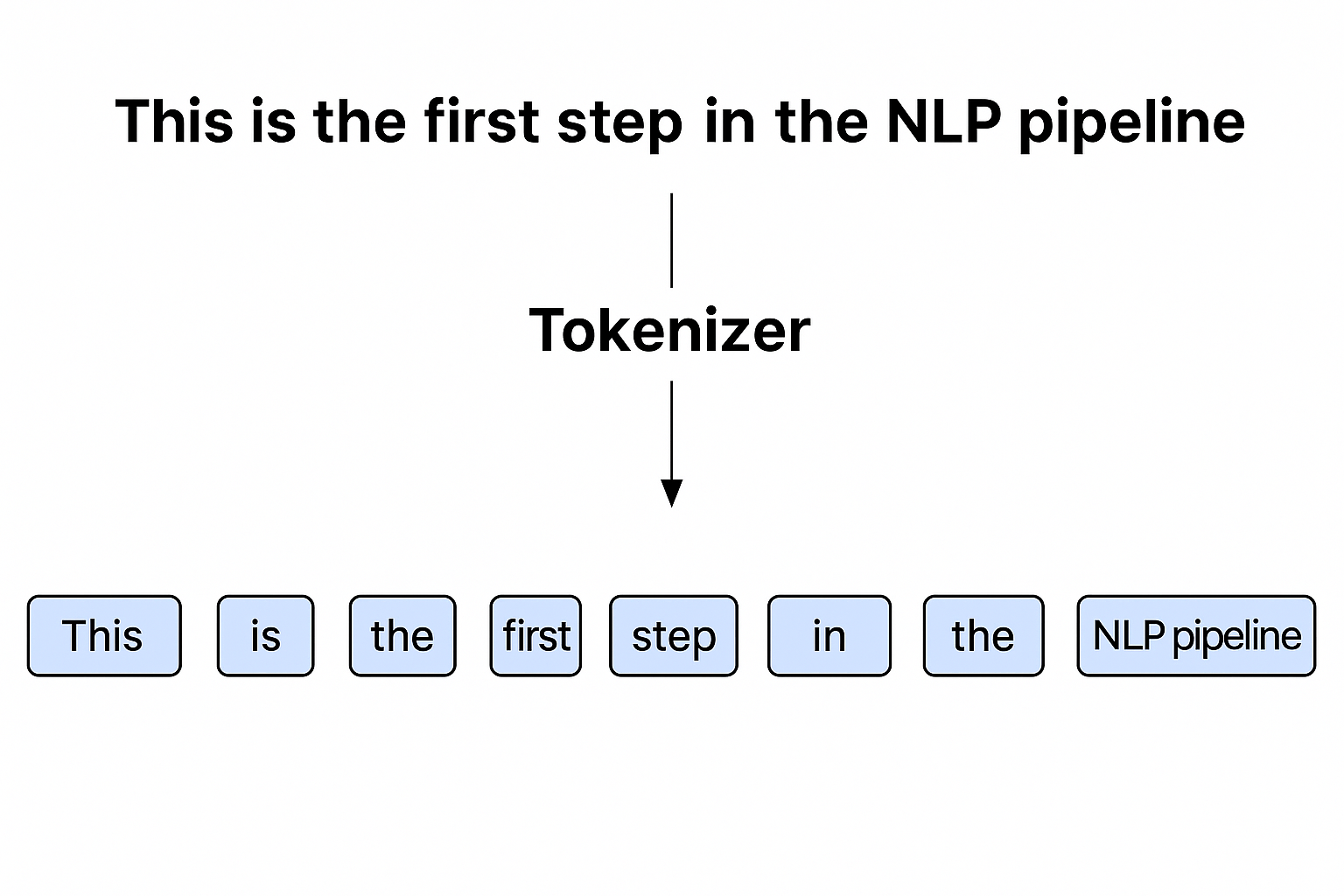

- Tokenize Your Data – convert text into token IDs with your base model's tokenizer.

- Train the Model – leverage mixed precision, gradient checkpointing, Bayesian hyperparameter search.

- Evaluate & Fine-Tune – iterate using benchmarks, human feedback, and PEFT methods.

- Implement the LLM – deploy via API, monitor, retrain as data drifts.
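Tokenization converts text into the integer IDs a model consumes, and most LLM tokenizers are subword tokenizers such as byte-pair encoding (BPE). In practice you reuse the tokenizer that ships with your base model rather than training a new one; the toy sketch below only illustrates how BPE learns merges by repeatedly fusing the most frequent adjacent symbol pair. The corpus, the number of merges, and the alphabetical tie-breaking rule are hypothetical choices, and real implementations differ in details such as byte-level alphabets and pre-tokenization.

```python
from collections import Counter

def apply_merge(symbols, pair):
    """Replace every adjacent occurrence of pair in symbols with the fused symbol."""
    merged, i = [], 0
    while i < len(symbols):
        if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
            merged.append(symbols[i] + symbols[i + 1])
            i += 2
        else:
            merged.append(symbols[i])
            i += 1
    return tuple(merged)

def learn_bpe(words, num_merges):
    """Learn BPE merges; ties are broken by the alphabetically smallest pair."""
    vocab = Counter(tuple(word) for word in words)  # each word as a tuple of characters
    merges = []
    for _ in range(num_merges):
        pairs = Counter()
        for symbols, freq in vocab.items():
            for pair in zip(symbols, symbols[1:]):
                pairs[pair] += freq
        if not pairs:
            break
        best = min(pairs, key=lambda p: (-pairs[p], p))  # most frequent, then alphabetical
        merges.append(best)
        new_vocab = Counter()
        for symbols, freq in vocab.items():
            new_vocab[apply_merge(symbols, best)] += freq
        vocab = new_vocab
    return merges

def tokenize(word, merges):
    symbols = tuple(word)
    for pair in merges:  # apply merges in the order they were learned
        symbols = apply_merge(symbols, pair)
    return list(symbols)

corpus = ["lower", "lowest", "low", "low", "newer", "wider"]
merges = learn_bpe(corpus, num_merges=3)
print(merges)                      # [('l', 'o'), ('lo', 'w'), ('e', 'r')]
print(tokenize("lowers", merges))  # ['low', 'er', 's']
```

Note that "lowers" never appears in the corpus, yet it still tokenizes into known subwords; this is why subword tokenizers handle rare domain terms better than whole-word vocabularies.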

How Should You Evaluate an LLM After Training?

- Benchmark Testing – MMLU, GSM8K, HumanEval, etc.

- Task-Specific Evaluation – domain-relevant scenarios (finance, healthcare, legal…).

- Safety & Robustness – adversarial testing, bias assessment, red-teaming.

- Human Evaluation – domain experts review outputs.

- Performance Metrics – latency, throughput, memory, cost.

- Continuous Monitoring – detect drift, schedule retraining.
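Several of these checks reduce to small, repeatable computations. The sketch below scores a hypothetical task-specific evaluation set with normalized exact match and reports latency percentiles, two of the metrics listed above. `fake_model` is a placeholder for your deployed model's inference call, and exact match is only one possible scorer; free-form answers often need human or model-based grading instead.

```python
import re
import statistics
import time

def normalize_answer(text):
    return re.sub(r"[^\w\s]", "", text.lower()).strip()

def evaluate(model_fn, eval_set):
    """Return exact-match accuracy and latency percentiles (ms) over (prompt, reference) pairs."""
    correct, latencies_ms = 0, []
    for prompt, reference in eval_set:
        start = time.perf_counter()
        prediction = model_fn(prompt)
        latencies_ms.append((time.perf_counter() - start) * 1000)
        correct += normalize_answer(prediction) == normalize_answer(reference)
    # 99 cut points: cuts[49] is the 50th percentile, cuts[94] the 95th.
    cuts = statistics.quantiles(latencies_ms, n=100, method="inclusive")
    return {"exact_match": correct / len(eval_set), "p50_ms": cuts[49], "p95_ms": cuts[94]}

def fake_model(prompt):
    # Placeholder for a real inference call.
    answers = {"What does APR stand for?": "Annual percentage rate.",
               "Is a 10-K filed annually?": "Yes"}
    return answers.get(prompt, "I don't know")

eval_set = [
    ("What does APR stand for?", "annual percentage rate"),
    ("Is a 10-K filed annually?", "yes"),
    ("What does EPS stand for?", "earnings per share"),
]
print(evaluate(fake_model, eval_set))  # exact_match is 2/3; latencies vary by machine
```

Running the same script on every retrained model version, and comparing results over time, is one simple way to support the continuous-monitoring step.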

Key Challenges & Solutions in Proprietary-Data Training

Data preparation costs can vary significantly depending on the scope of training, ranging from moderate investments for fine-tuning to substantial costs for pre-training from scratch.

Conclusion

Training an LLM on your own data enables targeted usage, higher accuracy, bias reduction, and greater data control. By following the eight-step process outlined here—and by leveraging parameter-efficient fine-tuning, homomorphic encryption, and federated learning—you can build powerful, domain-specific AI solutions while maintaining security, compliance, and operational efficiency.

With the rapid growth in enterprise AI adoption and the increasing number of production use cases, organizations that master custom LLM training workflows will gain significant competitive advantages. The key lies in establishing robust data pipelines that can reliably deliver high-quality, domain-specific training data while maintaining security and governance standards.

FAQs

Why train an LLM on proprietary data instead of using a general-purpose model?

General-purpose models struggle with domain-specific nuance. Custom training can yield 20–30% accuracy gains, according to reported results.

How has the training process evolved recently?

Advances like LoRA/QLoRA, multimodal learning, and longer context windows make fine-tuning faster, cheaper, and more powerful.

What data and infrastructure are required?

Large volumes of high-quality, rights-cleared domain data plus GPU/TPU clusters and ML frameworks (PyTorch, TensorFlow, etc.).

How can you ensure data is high-quality, secure, and compliant?

FAIR documentation, multi-level deduplication, bias audits, differential privacy, and confidential computing.

What are the most efficient ways to fine-tune?

Parameter-efficient methods (LoRA, QLoRA) freeze most parameters and train lightweight adapters, enabling single-GPU fine-tuning of very large models.
