Best Hybrid Deployment for Database Solutions

✨ AI Generated Summary

Hybrid database deployments separate the cloud-hosted control plane (orchestration, metadata, APIs) from the customer-controlled data plane (data processing, connectors), enabling local data control with cloud-level automation. This architecture improves latency by keeping data processing near source systems, ensures compliance with data residency laws, reduces total cost of ownership, and simplifies maintenance through centralized upgrades.

- Control plane runs in the cloud, managing orchestration without accessing raw data.

- Data plane executes locally within customer networks, preserving data sovereignty and reducing latency.

- Hybrid models address shortcomings of pure cloud (compliance, latency, cost) and on-premises (scalability, agility) deployments.

- Common patterns include tiered hybrid (regulated data), edge-optimized (low latency), and multi-region (jurisdictional compliance).

- Successful adoption requires assessing compliance, network topology, tooling, observability, and piloting before scaling.

Managing distributed databases often feels like an impossible trade-off. Move everything to the cloud and you gain elasticity, but you also hand over credentials and data to third-party infrastructure, risking residency violations and increased latency. Keep workloads on-premises and you retain control, yet every schema change or capacity spike demands days of infrastructure management and capital spend.

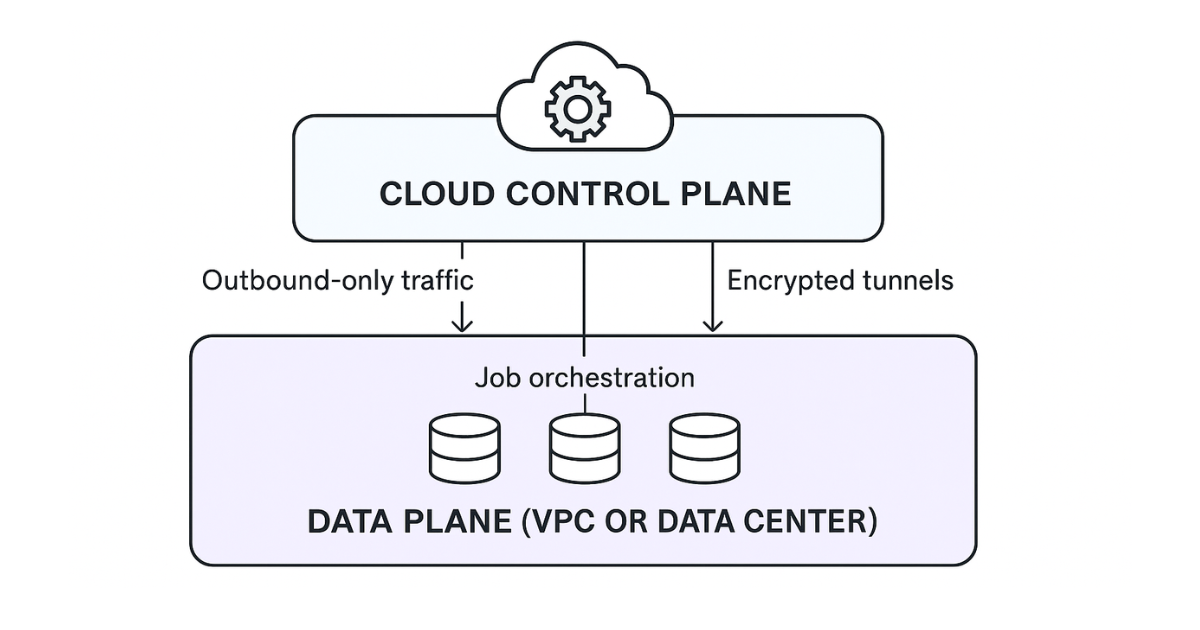

A hybrid deployment breaks that stalemate by splitting architecture into a cloud-hosted control plane and a customer-controlled data plane. Orchestration and upgrades stay in the cloud, while credentials, compute, and raw data remain in your network. The result is lower latency, simpler audits, and maintenance you barely notice.

What Is a Hybrid Database Deployment?

A hybrid database deployment separates the "brain" of your data platform from its "muscle." You run the control plane (scheduling, metadata, APIs, and orchestration) in the cloud, while the data plane (connectors and processing jobs) stays inside your VPC or on-prem network. This split lets you keep credentials and raw data under your control without giving up cloud-level automation.

Hybrid deployments have a precise meaning: centralized management with local execution. This isn't multi-cloud, and it isn't half-finished SaaS. By keeping data movement local and sending only encrypted logs or metrics outbound, you satisfy data sovereignty mandates while avoiding the latency of a round-trip to the cloud.

Why Do Pure Cloud or On-Prem Database Models Fall Short?

Running everything in one place sounds simpler, but it quickly turns into a trade-off you can't win. This fundamental tension explains why organizations increasingly turn to hybrid models that combine the best of both approaches.

Pure cloud deployments create control and compliance challenges:

- You lose direct control over credentials and runtime environments by storing secrets on third-party infrastructure, expanding your attack surface

- Cross-region round-trips add milliseconds that compound into minutes for high-volume CDC streams, especially when traffic traverses shared networks instead of dedicated local links

- Regulations like GDPR and HIPAA, along with data sovereignty mandates, often restrict where sensitive data can be stored and processed, which can rule out external infrastructure

- Egress fees accumulate every time data exits the provider's boundary, creating unpredictable costs as your data volume grows

- Changing providers later involves costly re-platforming (classic vendor lock-in)

Pure on-premises deployments sacrifice agility and efficiency:

- Hardware refresh cycles demand large capital outlays and months of lead time, while cloud peers spin up new instances in minutes

- Scaling for peak loads leaves idle servers during off-hours, driving up the total cost of ownership

- Upgrades require maintenance windows and manual patching, which slow feature adoption

- Capacity planning becomes a guessing game, forcing you to over-provision to handle spikes

You see these problems everywhere. Regional banks struggle to push Oracle transaction data to cloud analytics without overnight lags. Hospitals can't move PHI off-site yet still need sub-minute replication for care dashboards. Telecom operators watch legacy ETL jobs drown under billions of call-detail records generated daily.

Each scenario exposes why a single deployment mode can't deliver both agility and control, and why a hybrid approach picks up where pure cloud and pure on-prem stop short.

What Does the Ideal Hybrid Deployment Look Like for Databases?

The best hybrid database architecture balances cloud agility with on-premises control through five design principles that create a clear separation of concerns:

- Cloud-managed control plane orchestrates scheduling, metadata, and APIs while never touching raw data

- Customer-controlled data planes run every query, extract, and transform inside your VPC or data center

- Outbound-only connectivity ensures data planes never accept inbound traffic, closing a common attack path

- External secrets management keeps credentials local and invisible to the SaaS layer

- Per-region scalability deploys additional data planes wherever residency laws require

With these guardrails, architecture becomes clear. The control plane (your management brain) lives in the cloud, directing jobs and collecting logs but never touching raw records. The data plane sits beside your databases, executing workloads close to the source to satisfy latency and compliance demands.
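
One way to picture the external-secrets principle: the control plane stores only a reference to a credential, and the data plane resolves that reference locally at run time. The sketch below is illustrative only. The `env:` reference scheme and the names `ConnectionSpec` and `resolve_secret` are assumptions made for this example, and a real deployment would more likely point at a dedicated secrets manager.

```python
import os
from dataclasses import dataclass


@dataclass(frozen=True)
class ConnectionSpec:
    """What the control plane stores: a reference, never the secret itself."""
    host: str
    user: str
    password_ref: str  # hypothetical scheme, e.g. "env:ORDERS_DB_PASSWORD"


def resolve_secret(ref: str, environ=os.environ) -> str:
    """Resolve a secret reference inside the data plane."""
    scheme, _, name = ref.partition(":")
    if scheme != "env" or not name:
        raise ValueError(f"unsupported secret reference: {ref!r}")
    try:
        return environ[name]
    except KeyError:
        raise LookupError(f"secret {name!r} is not set in this data plane") from None


# The spec can be synced to the cloud safely; it contains no credential.
spec = ConnectionSpec(host="db.internal", user="replicator",
                      password_ref="env:ORDERS_DB_PASSWORD")
```

Because only `password_ref` leaves your network, rotating the credential is a local operation that the SaaS layer never observes.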

Lightweight agents or connectors act as runners, polling the control plane for instructions through encrypted, outbound tunnels. A shared security and identity layer spans both zones, enforcing the same RBAC and audit policies everywhere code executes.
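
The polling model described above can be sketched in a few lines. This is a minimal illustration, not any vendor's agent: `FakeControlPlane` is an in-memory stand-in, and its `next_job` and `report` methods are hypothetical names for what would be outbound HTTPS requests in practice.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class FakeControlPlane:
    """In-memory stand-in for the cloud control plane (hypothetical API)."""
    pending: deque = field(default_factory=deque)
    statuses: list = field(default_factory=list)

    def next_job(self, plane_id: str):
        # In a real agent this would be an outbound HTTPS request.
        return self.pending.popleft() if self.pending else None

    def report(self, plane_id: str, job_id: str, status: str) -> None:
        self.statuses.append((plane_id, job_id, status))


def poll_once(control_plane, plane_id: str, run_job) -> bool:
    """Ask for work, run it locally, report status. Returns True if a job ran."""
    job = control_plane.next_job(plane_id)
    if job is None:
        return False
    try:
        run_job(job)  # executes inside your network
        status = "succeeded"
    except Exception:
        status = "failed"
    control_plane.report(plane_id, job["id"], status)
    return True
```

Every call in `poll_once` is initiated by the data plane, which is why no inbound firewall rule is needed: the control plane never opens a connection toward your network.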

When you draw the boundary correctly, upgrades become painless: patch the control plane once, let data planes inherit changes on their own schedule. Clear lines of responsibility keep regulators satisfied and your pipelines fast.

How Do Hybrid Deployments Improve Database Performance and Compliance?

Separating the cloud-based control plane from a local data plane changes the physics of your database operations. You keep data next to the systems that generate it, while orchestration lives in the cloud. This architectural shift delivers measurable improvements across five key dimensions.

1. Local Processing, Global Coordination

When the data plane runs inside your VPC, replication jobs never cross the public internet, eliminating the "cloud hop" that slows down Change Data Capture (CDC). The data plane fetches its instructions from the control plane over outbound connections, raw records stay local, and only metadata flows back for monitoring. Moving extract jobs next to the source can cut end-to-end CDC lag from minutes to seconds by avoiding cross-region round-trips.
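
To make "only metadata flows back" concrete, here is a small sketch of a job wrapper that processes records locally and returns a summary containing counts and timing, never record contents. The function name and the report fields are assumptions chosen for illustration.

```python
import time


def run_and_summarize(job_id: str, records, process) -> dict:
    """Process records locally and return metadata only (no record contents)."""
    started = time.monotonic()
    rows = 0
    failures = 0
    for record in records:
        try:
            process(record)  # raw data is handled here and goes no further
            rows += 1
        except Exception:
            failures += 1
    return {
        "job_id": job_id,
        "rows_synced": rows,
        "rows_failed": failures,
        "duration_seconds": round(time.monotonic() - started, 3),
    }
```

The returned dictionary is the only thing that would be sent to the control plane, so reviewing its keys is a quick way to confirm what leaves your network.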

2. Compliance With Residency Regulations

GDPR fines can reach up to 4% of global annual turnover, and HIPAA penalties can reach $50,000 per violation. Hybrid infrastructure helps you meet these rules by letting you fence sensitive tables geographically while still managing pipelines centrally. Data never leaves its legal jurisdiction; only logs and job status reach the cloud. Banks keep EU transactions on-prem for DORA and hospitals store PHI locally for HIPAA audits, yet both teams view deployments through a single UI.
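
A geographic fence like this can be enforced at scheduling time. The sketch below assumes each dataset carries a jurisdiction label and each data plane a region label; `Dataset`, `DataPlane`, and `assign_data_plane` are hypothetical names, and real residency rules are usually more nuanced than a string match.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Dataset:
    name: str
    jurisdiction: str  # e.g. "EU"; where the data must stay


@dataclass(frozen=True)
class DataPlane:
    plane_id: str
    region: str


def assign_data_plane(dataset: Dataset, planes: list[DataPlane]) -> DataPlane:
    """Pick a data plane inside the dataset's jurisdiction, or refuse."""
    for plane in planes:
        if plane.region == dataset.jurisdiction:
            return plane
    # Failing closed: no fallback to a plane in another region.
    raise PermissionError(
        f"no data plane in {dataset.jurisdiction} for dataset {dataset.name!r}"
    )
```

Raising an error instead of falling back to another region is the important design choice here: a job that cannot run locally should not run at all.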

3. Lower Total Cost of Ownership

You pay for compute only where it runs. This model replaces idle on-prem hardware with burst capacity in the cloud for spikes, while steady workloads remain on existing servers. Reusing licenses with programs like Azure Hybrid Benefit trims software spend even further.

4. Simplified Maintenance and Upgrades

Because the control plane is cloud-hosted, you upgrade orchestration once, then let every data plane pick up the new logic automatically. Local processing engines remain untouched, so production databases stay online. The result can be near-zero downtime during version rollouts, since connectors update independently over outbound TLS connections. For multi-database estates, this removes the weekend patch marathons you suffer today.

5. Unified Architecture Across Environments

A single codebase drives every deployment, preventing feature drift between SaaS and self-hosted instances. Your team learns one API, your governance team writes one set of policies, and audits cover every region with identical logs (no exceptions, no special cases). This consistency eliminates the operational complexity that typically plagues mixed deployment environments.

What Are Common Hybrid Database Deployment Patterns?

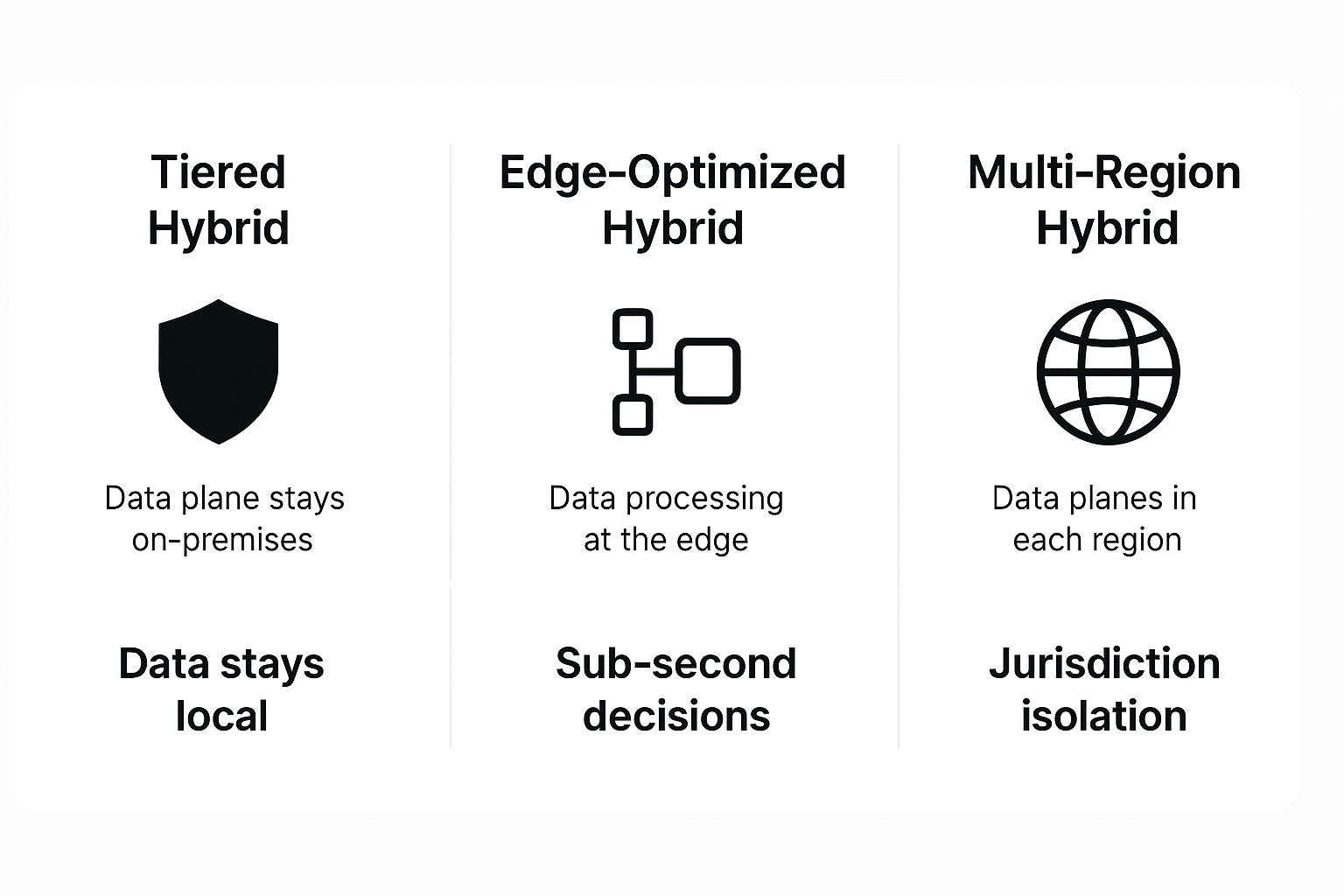

Hybrid architectures aren't one-size-fits-all. Organizations typically implement one of three core deployment patterns, often mixing them for different workloads based on specific compliance, latency, and operational requirements.

1. Tiered Hybrid for Regulated Data

The data plane stays on-premises (or inside a locked-down VPC) so raw records never leave your network. The control plane runs in the cloud, orchestrating jobs and receiving only anonymized or aggregated outputs.

This setup aligns with key sovereignty requirements for HIPAA or PCI datasets and helps enable safe cloud analytics, provided additional compliance measures like strong encryption, access controls, and audit logging are also implemented. You'll need clear data-classification policies and strong transformation steps before anything crosses the boundary.

2. Edge-Optimized Hybrid

When milliseconds matter, you push the data plane to the edge (close to factories, point-of-sale systems, or cell towers). The control plane remains centralized.

Local processing slashes round-trip latency, and outbound-only channels keep the attack surface tight. Implementation hinges on resilient networking and lightweight compute that can run unattended in small footprints. Think containers on retail gateways or ruggedized servers at telecom hubs.

3. Multi-Region Hybrid

Global enterprises often deploy separate data planes in each jurisdiction (EU, US, APAC) so data never crosses borders. They manage everything through a single cloud control plane.

This design streamlines audits by regional regulators while preserving a unified management layer for scheduling and policy updates, all without duplicating orchestration stacks in every country. Key considerations include consistent RBAC across regions and efficient cross-region reporting pipelines that merge only non-sensitive aggregates.
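
A cross-region report that merges only non-sensitive aggregates might look like the following sketch. It assumes each regional data plane reduces its rows to per-group counts before anything is shared; the function names are illustrative. Note that very small group counts can still be identifying, so real pipelines often add a minimum group size.

```python
from collections import Counter


def regional_aggregate(rows, group_key: str) -> Counter:
    """Runs inside a regional data plane: reduce raw rows to counts per group."""
    return Counter(row[group_key] for row in rows)


def merge_aggregates(per_region: dict) -> Counter:
    """Runs centrally: combines counts without seeing any raw rows."""
    total = Counter()
    for counts in per_region.values():
        total.update(counts)
    return total


# Each region computes its own aggregate; only the Counters cross borders.
eu = regional_aggregate(
    [{"customer": "a", "product": "loan"}, {"customer": "b", "product": "card"}],
    "product",
)
us = regional_aggregate([{"customer": "c", "product": "card"}], "product")
merged = merge_aggregates({"EU": eu, "US": us})
```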

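The following table summarizes how each pattern addresses different organizational needs:

| Pattern | Data plane location | Primary need addressed | Key implementation considerations |
|---|---|---|---|
| Tiered hybrid | On-premises or a locked-down VPC | Keeping regulated data (HIPAA, PCI) inside your network | Data-classification policies; transformation before anything crosses the boundary |
| Edge-optimized hybrid | At the edge, near factories, point-of-sale systems, or cell towers | Low latency | Resilient networking; lightweight compute that runs unattended |
| Multi-region hybrid | One data plane per jurisdiction (EU, US, APAC) | Jurisdictional compliance | Consistent RBAC across regions; cross-region reporting on non-sensitive aggregates only |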

Organizations rarely choose just one pattern. A bank might run tiered hybrid for card transactions, edge-optimized nodes at ATMs, and multi-region deployments for global analytics. The key is mapping each workload's compliance boundary and latency budget, then layering patterns accordingly to keep complexity (rather than data) under control.
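
The mapping exercise can be expressed as a simple rule set. The sketch below is a rough illustration, not a sizing tool: the `Workload` fields and the 50 ms edge threshold are assumptions invented for this example, and your own cut-offs will differ.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Workload:
    name: str
    regulated: bool            # raw records must stay in your network
    latency_budget_ms: float   # end-to-end budget for the workload
    jurisdictions: frozenset   # regions whose data must not cross borders


def choose_patterns(w: Workload, edge_threshold_ms: float = 50.0) -> list:
    """Return the hybrid patterns that apply; the threshold is illustrative."""
    patterns = []
    if w.regulated:
        patterns.append("tiered")
    if w.latency_budget_ms < edge_threshold_ms:
        patterns.append("edge-optimized")
    if len(w.jurisdictions) > 1:
        patterns.append("multi-region")
    return patterns


atm = Workload("atm-auth", True, 20.0, frozenset({"EU"}))
analytics = Workload("global-analytics", False, 5000.0,
                     frozenset({"EU", "US", "APAC"}))
```

A workload can match more than one rule, which mirrors the layering described above: the hypothetical `atm` workload lands in both the tiered and edge-optimized patterns.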

How to Choose the Best Hybrid Deployment for Your Database Environment?

Picking the right hybrid approach requires a systematic evaluation that ties technical choices to your compliance, latency, and staffing realities. This five-step framework helps you make informed decisions while avoiding expensive rework later.

1. Assess Your Compliance Boundaries

Catalog regulated datasets, map them to GDPR or HIPAA rules, and note jurisdictional limits on storage or processing. Translating legal requirements into architectural constraints will guide your deployment choices.

2. Map Your Network Topology

Decide where control and data planes will live, then verify outbound-only connectivity works from every site. Test common firewall and routing configurations early in your planning process.
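
A quick reachability probe run from each site can catch firewall surprises early. The sketch below uses only Python's standard `socket` module and checks TCP reachability; it does not validate TLS certificates or proxy settings, and the hostname you pass in is whatever endpoint your control plane actually uses.

```python
import socket


def can_connect_outbound(host: str, port: int = 443, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port can be opened from here."""
    try:
        # create_connection resolves the host and opens the socket;
        # the `with` block closes it again immediately.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and DNS failures.
        return False
```

Running this from every candidate data plane location, against the control plane's hostname on port 443, gives you a simple pass/fail matrix to review with your network team.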

3. Select Your Connector and Integration Tooling

Pick platforms that ship the same codebase across cloud and self-hosted deployments so you don't sacrifice features in regulated regions. A hybrid deployment model that keeps processing local while orchestration stays in the cloud prevents you from having to maintain separate pipelines for different environments.

4. Plan Observability Up Front

Centralize logs, metrics, and lineage so auditors see one consistent story regardless of where jobs run. Designs that layer monitoring into the control plane prevent blind spots when incidents cross boundaries.

5. Pilot Before You Scale

Spin up a small data plane, run real workloads, and validate latency, credential isolation, and audit trails. Quick feedback loops uncover edge-case failure modes without jeopardizing production SLAs.
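
For the latency part of a pilot, a percentile check is usually more telling than an average. The sketch below assumes you have collected replication-lag samples in seconds and have agreed on a p95 target; both function names and the target value are hypothetical.

```python
import statistics


def p95(samples: list) -> float:
    """95th percentile of the samples (needs at least two data points)."""
    # quantiles(n=100) returns 99 cut points; index 94 is the 95th percentile.
    return statistics.quantiles(samples, n=100)[94]


def pilot_passes(lag_seconds: list, target_p95_seconds: float) -> bool:
    """True when the pilot's p95 replication lag is within the target."""
    return p95(lag_seconds) <= target_p95_seconds
```

Checking the tail rather than the mean surfaces the occasional slow sync that would otherwise hide behind many fast ones.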

Common missteps include underestimating cross-region egress fees, assuming feature parity that doesn't exist, and migrating mission-critical databases first. Start with a low-risk workload, document every manual fix, and iterate. You'll build confidence (and a library of automation scripts) before touching systems that make or break the business.

What Are Key Outcomes of a Well-Designed Hybrid Database Architecture?

When you separate orchestration in the cloud from data processing in your network, measurable improvements emerge that pure cloud or on-premises models simply cannot deliver.

Latency improvements:

- Your data plane runs next to source systems, keeping large CDC jobs on local networks and eliminating the "cloud hop"

- Replication lag drops from minutes to seconds

- Pipelines continue running even when the control plane is temporarily unreachable, preventing critical workloads from stalling during network hiccups
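
One way a data plane could ride out a control plane outage is to keep running jobs and buffer its status reports until connectivity returns. The sketch below illustrates that idea under stated assumptions: `StatusBuffer` is a hypothetical class, the `send` callable stands in for the outbound request, and a real agent would likely persist the buffer to disk.

```python
from collections import deque


class StatusBuffer:
    """Holds status reports locally while the control plane is unreachable."""

    def __init__(self, max_items: int = 10_000):
        # Oldest reports are dropped first if the outage outlasts the buffer.
        self._items = deque(maxlen=max_items)

    def add(self, report: dict) -> None:
        self._items.append(report)

    def flush(self, send) -> int:
        """Send buffered reports in order; stop at the first failure."""
        sent = 0
        while self._items:
            try:
                send(self._items[0])
            except ConnectionError:
                break  # still unreachable; keep the rest for the next attempt
            self._items.popleft()
            sent += 1
        return sent

    def __len__(self) -> int:
        return len(self._items)
```

A report is removed only after `send` succeeds, so a failed flush loses nothing and the next attempt resumes from the same report.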

Cost reductions:

- You avoid the expense of maintaining separate infrastructure for control functions while keeping compute resources local where they're needed most

- Teams often report lower staff time for migrations and reduced overall costs compared to full cloud transitions

- Operational overhead decreases through reduced manual intervention and better resource utilization

Compliance simplification:

- Data stays within your jurisdiction, giving auditors a smaller scope to review

- Ready-made controls for GDPR, HIPAA, and DORA requirements streamline certification processes

- Local key custody meets even stricter sovereignty mandates that traditional cloud deployments cannot satisfy

Maintenance transformation:

- You patch the control plane once while stable data-plane nodes keep serving traffic

- Weekend maintenance windows become standard rollouts with minimal business impact

- Platforms that maintain identical APIs and connector catalogs across deployment modes let you reuse existing pipelines instead of rebuilding them for each environment

The cumulative result: faster data movement, reduced operational costs, streamlined audits, and consistent performance across all environments. These benefits compound over time as your data architecture scales.

Why Is Hybrid Now the Standard for Enterprise Databases?

The enterprise database landscape has shifted toward hybrid architectures, driven by regulatory requirements and economic advantages. Data sovereignty mandates force local control, while hybrid models can lower TCO relative to pure cloud deployments through optimized resource allocation and reduced vendor dependencies.

The winning formula combines cloud-managed control planes with customer-owned data planes, delivering agility without vendor lock-in and compliance without performance trade-offs. Organizations achieve the operational efficiency of cloud services while maintaining complete control over their most sensitive data assets.

Airbyte Enterprise Flex exemplifies this approach, combining cloud orchestration with customer-controlled data planes for complete database sovereignty and performance optimization. The platform's hybrid architecture with 600+ connectors ensures your data never leaves your network while providing the same management experience as fully cloud-hosted solutions.

Talk to Sales to discuss your hybrid deployment requirements and see how Airbyte Flex delivers data sovereignty without compromising capability.

Frequently Asked Questions

What is the difference between hybrid deployment and multi-cloud deployment?

Hybrid deployment splits control and data planes between cloud and on-premises environments, with orchestration in the cloud and data processing staying local. Multi-cloud deployment runs workloads across multiple cloud providers but typically doesn't include on-premises infrastructure. Hybrid focuses on data sovereignty and local processing, while multi-cloud focuses on avoiding vendor lock-in across cloud providers.

How does a hybrid database deployment handle disaster recovery?

Hybrid deployments separate control plane availability from data plane resilience. The cloud control plane typically has built-in redundancy and automated failover. For data planes, you implement standard disaster recovery practices in your environment (backups, replication, failover nodes). Because data planes only initiate outbound connections, recovery procedures don't require reconfiguring inbound network rules.

Can I migrate from pure cloud to hybrid deployment without downtime?

Yes, with proper planning. You can deploy data planes in your infrastructure while keeping the existing cloud control plane operational. Move connectors and pipelines incrementally, testing each migration before proceeding. The control plane continues orchestrating jobs during the transition. Most organizations complete migrations with zero user-facing downtime by following a phased approach that validates each step.

What network requirements are needed for hybrid database deployments?

Hybrid deployments require reliable outbound connectivity from your data plane to the cloud control plane. Data planes initiate all connections through encrypted TLS channels (typically HTTPS), so you don't need to open inbound firewall rules. Bandwidth requirements depend on your metadata volume (job status, logs, metrics), not your data volume, since raw data stays local. Most organizations find existing network capacity sufficient without upgrades.
