
Learn how to build a connector development support bot for Slack that knows all your APIs, open feature requests and previous Slack conversations by heart


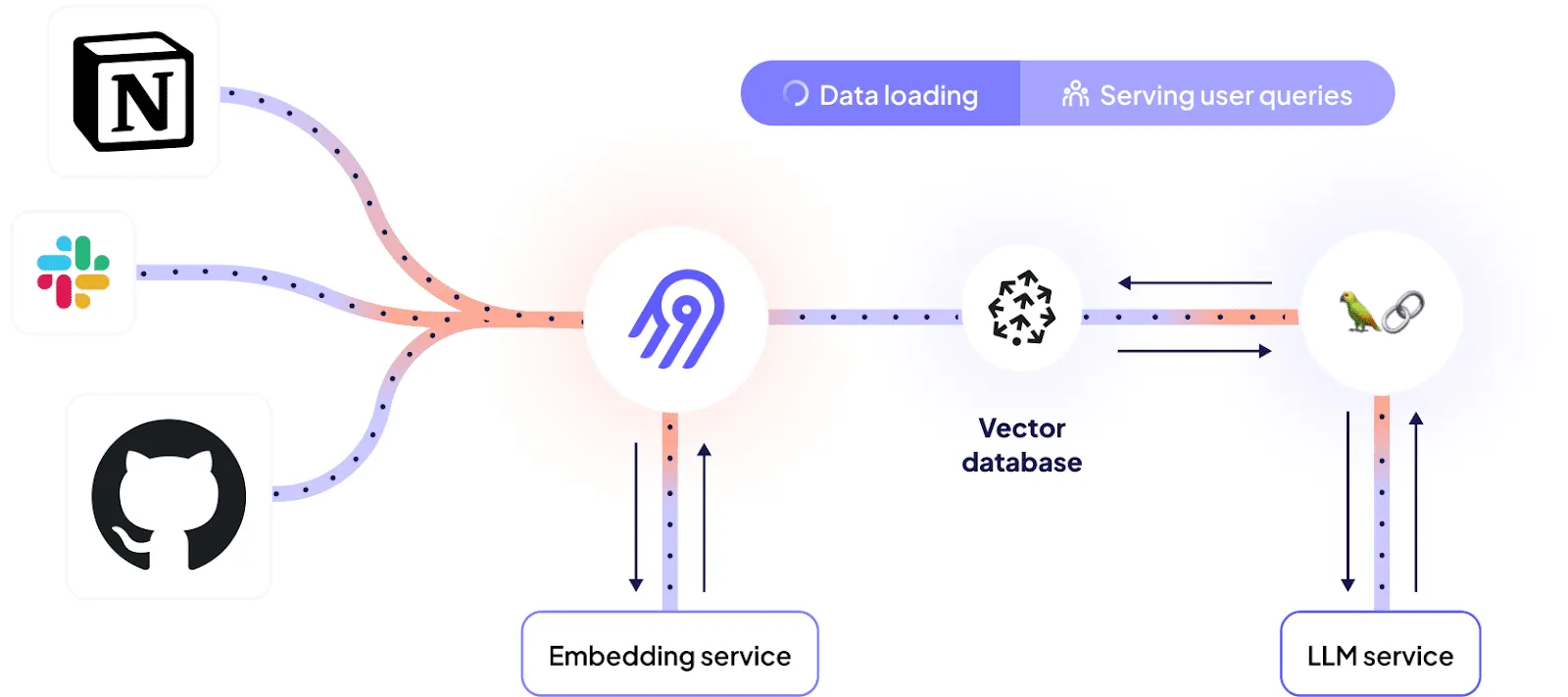

In a previous article, we explained how Dagster and Airbyte can be leveraged to power LLM-supported use cases. Our newly introduced vector database destination makes this even easier, as it removes the need to orchestrate chunking and embedding manually. Instead, the sources can be connected directly to the vector database through an Airbyte connection.

This tutorial walks you through a real-world use case of how to leverage vector databases and LLMs to make sense out of your unstructured data. By the end of this, you will:

To better illustrate how this can look in practice, let’s use something that’s relevant for Airbyte itself.

Airbyte is a highly extensible system that allows users to develop their own connectors to extract data from any API or internal system. Helpful information for connector developers can be found in different places:

This article describes how to tie together all of these diverse sources to offer a single chat interface for connector development questions. The result is a Slackbot that answers questions in plain English about the codebase and documentation, and references previous conversations:

In these examples, information from the documentation website and existing Github issues is combined in a single answer.

A Slack chatbot is an automated assistant that integrates with Slack workspaces to provide interactive responses to user messages. In this project, we're building a specialized knowledge base Slackbot that can access and respond to questions about data connector development by combining data from multiple sources.

To follow the whole process, you will need the following accounts. Alternatively, you can work with your own custom sources and a local vector store, in which case only the OpenAI account is required:

1. Source-specific accounts

2. Destination-specific accounts

3. Airbyte instance (local or cloud)

Airbyte’s feature and bug tracking is handled by the Github issue tracker of the Airbyte open source repository. These issues contain important information people need to look up regularly.

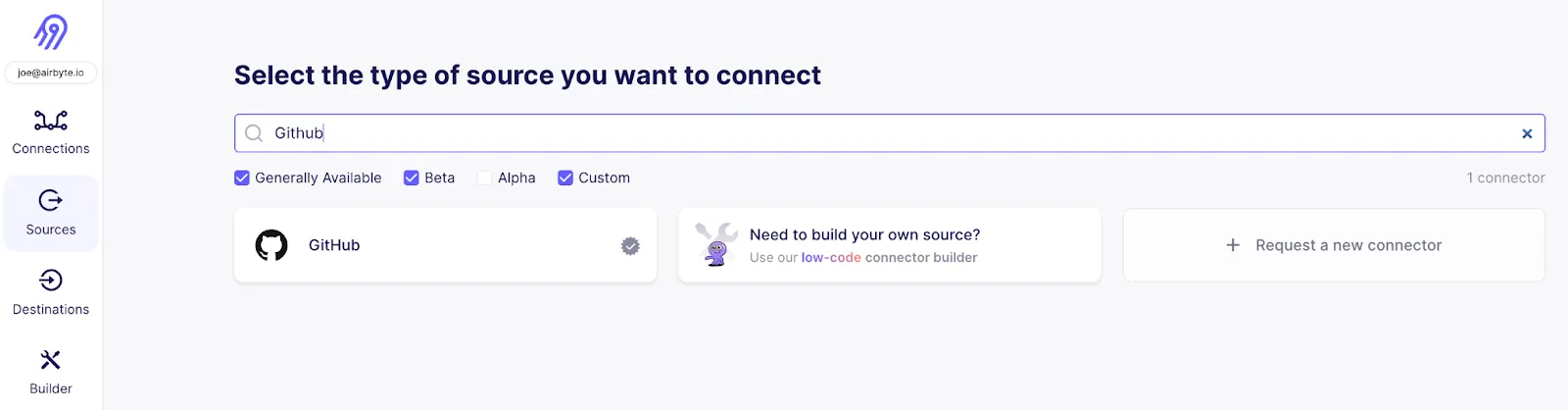

To fetch Github issues, create a new source using the Github connector.

If you are using Airbyte Cloud, you can easily authenticate using the “Authenticate your GitHub account” button; otherwise, follow the instructions in the documentation on the right side on how to set up a personal access token in the Github UI.

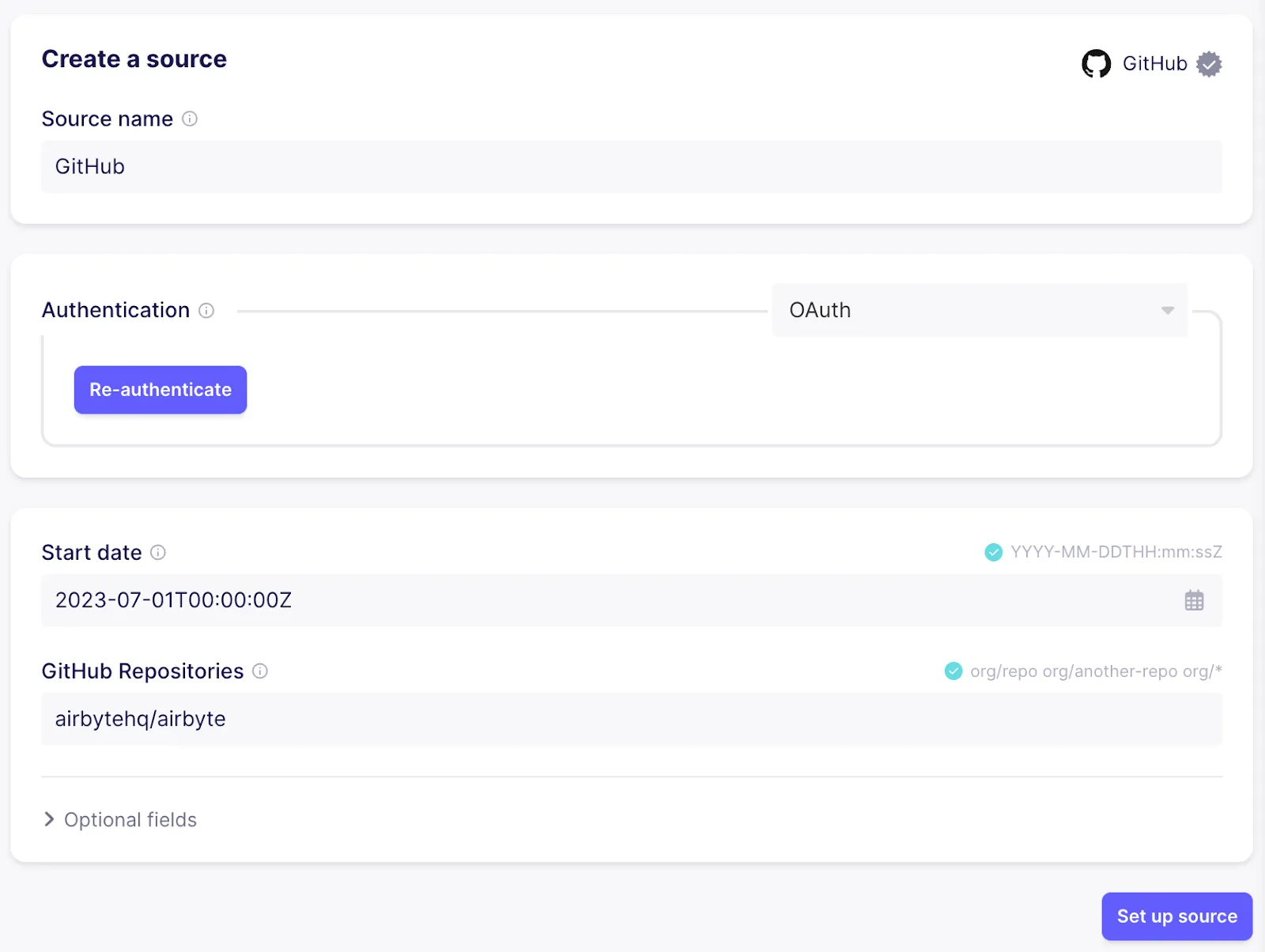

Next, configure a cutoff date for issues and specify the repositories that should be synced. In this case I’m going with “2023-07-01T00:00:00Z” and “airbytehq/airbyte” to sync recent issues from the main Airbyte repository:

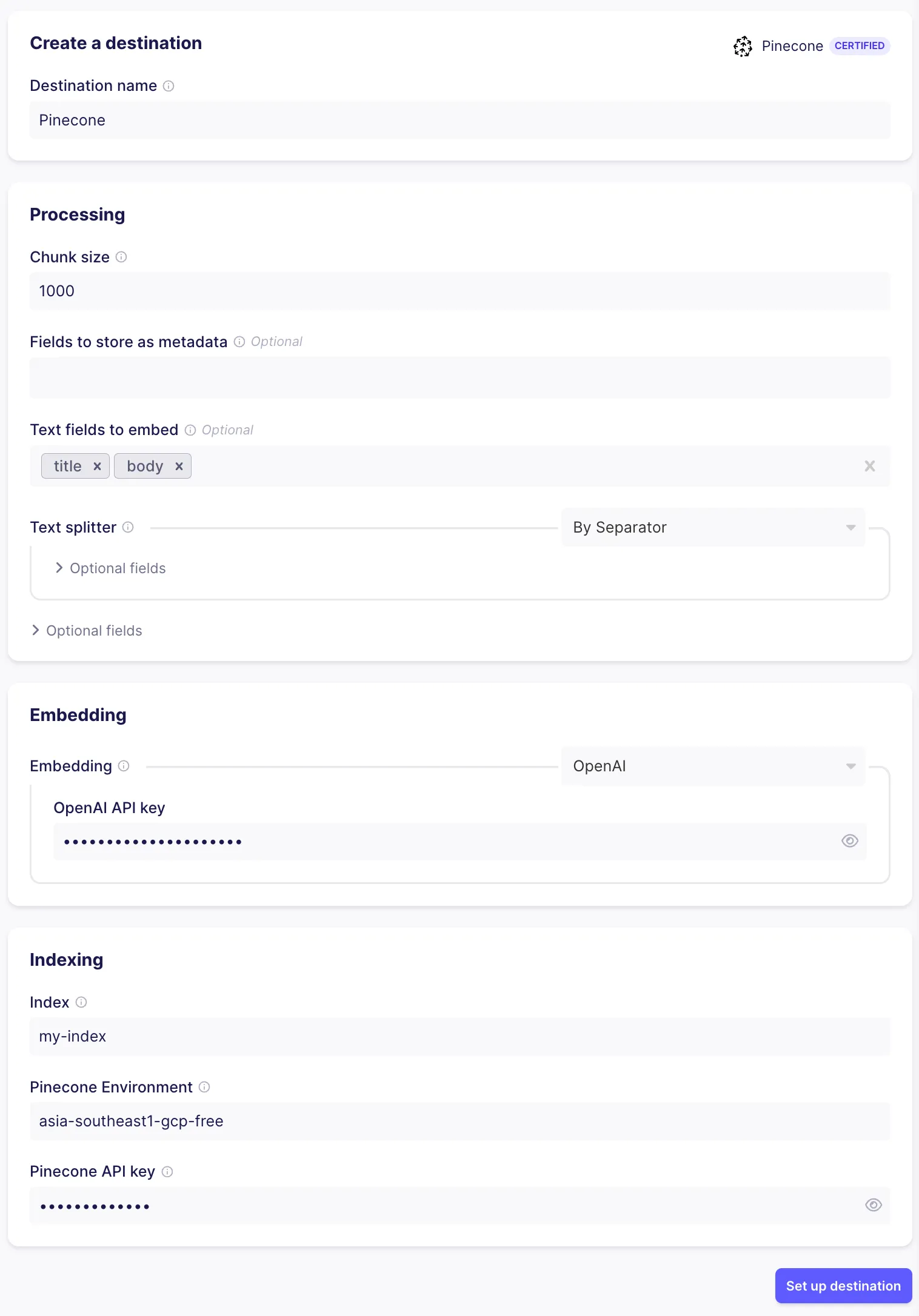

Our first source is ready, but Airbyte doesn’t know yet where to put the data. The next step is to configure the destination: pick the “Pinecone” connector. Airbyte does some preprocessing for you so that the data is vector-ready:

Besides Pinecone, Airbyte supports loading data into Weaviate, Milvus, Qdrant and Chroma.
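Airbyte performs the chunking inside the destination, so you never have to write it yourself. To make the idea concrete, here is a minimal sketch of splitting a long text field into overlapping chunks before embedding. It assumes a simple character-based splitter; `chunk_text`, `chunk_size` and `overlap` are illustrative names, not Airbyte’s implementation, and the destination’s own splitter (configured in its processing settings) may measure chunk size differently.

```python
def chunk_text(text: str, chunk_size: int = 1000, overlap: int = 100) -> list[str]:
    """Split text into fixed-size character windows (illustrative, not Airbyte's splitter).

    Consecutive chunks share `overlap` characters, which keeps some
    context around each boundary instead of cutting it cleanly in half.
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be at least 0 and smaller than chunk_size")
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # this window already reaches the end of the text
    return chunks


# Each chunk would then be embedded and stored as its own vector.
print(chunk_text("abcdefghij", chunk_size=4, overlap=1))  # ['abcd', 'defg', 'ghij']
```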

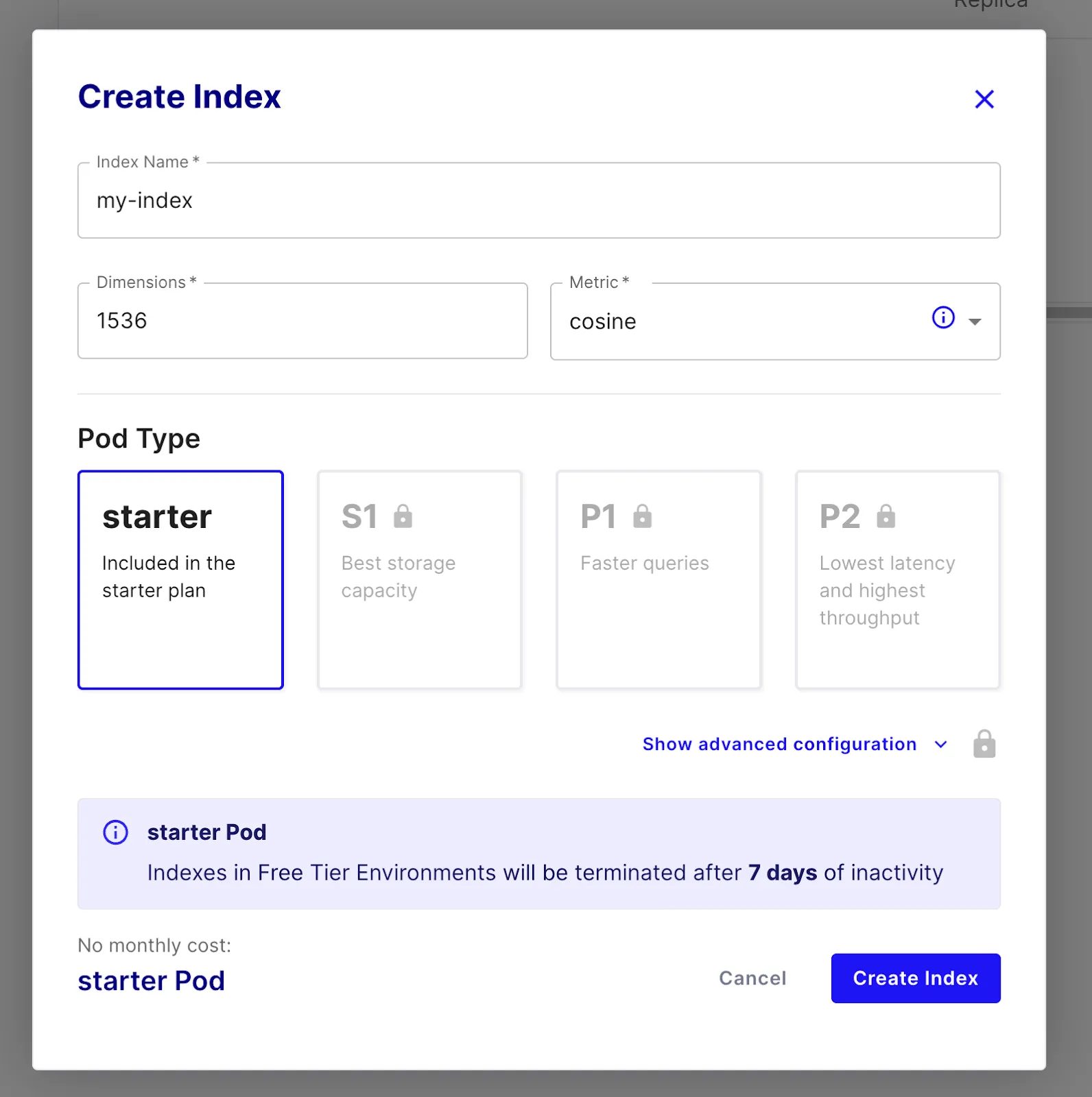

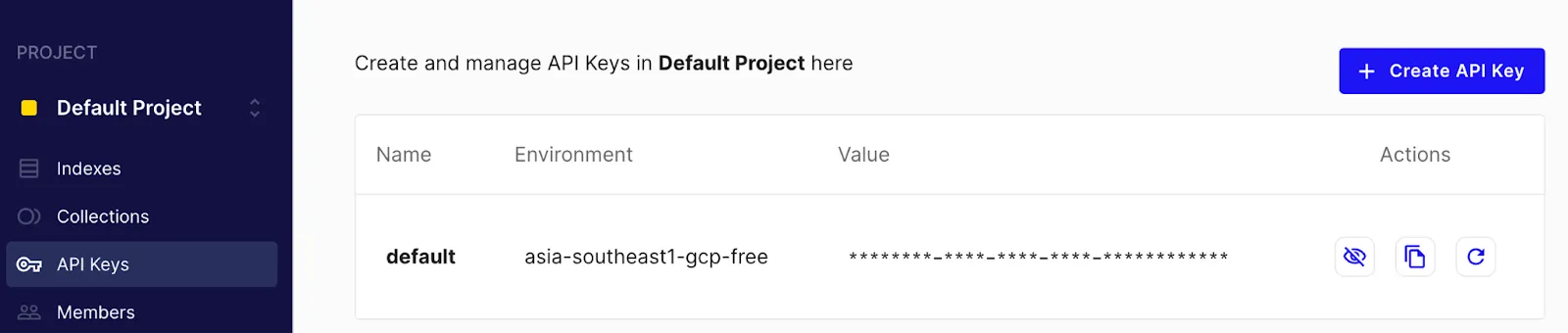

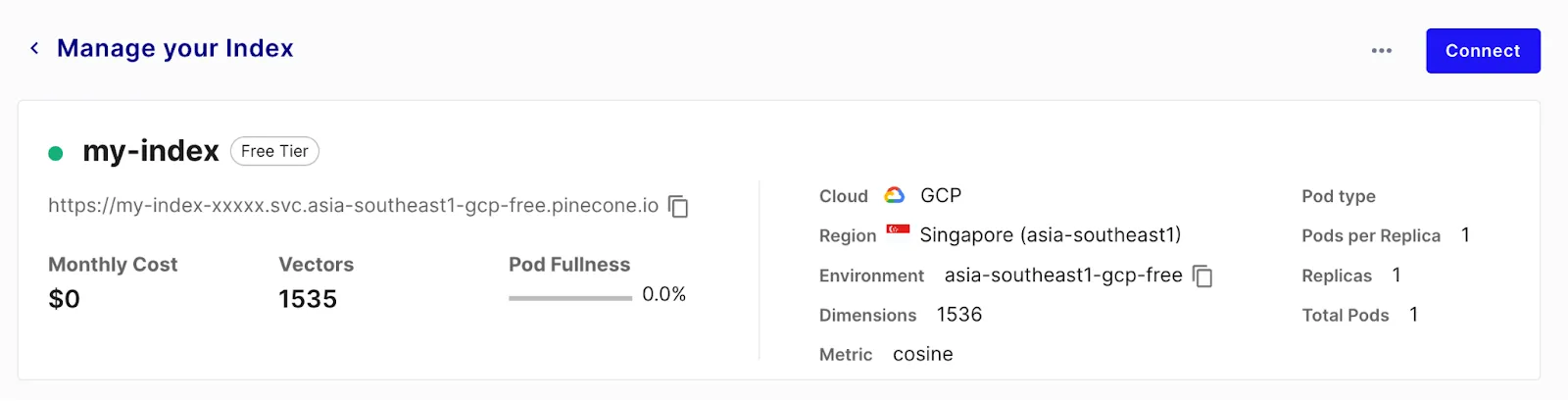

To use Pinecone, sign up for a free trial account and create an index using a starter pod. Set the dimensions to 1536, as that’s the size of the OpenAI embeddings we will be using.

Once the index is ready, configure the vector database destination in Airbyte:

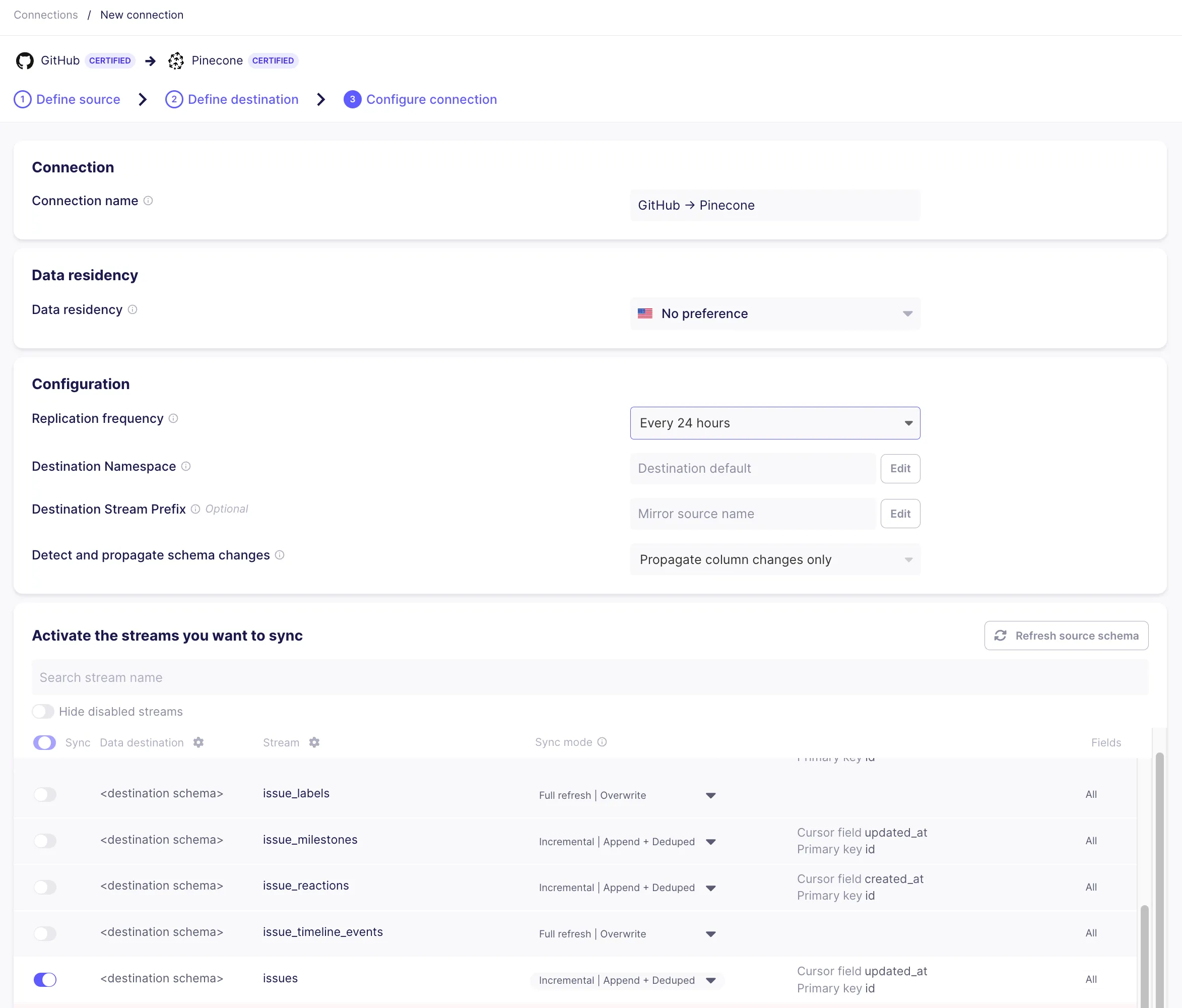

Once the destination is set up successfully, set up a connection from the Github source to the vector database destination. In the configuration flow, pick the existing source and destination. When configuring the connection, make sure to enable only the “issues” stream, as this is the one we are interested in.

Side note: Airbyte offers ways to make this sync more efficient in a production environment:

If everything went well, there should be a connection now syncing data from Github to Pinecone via the Pinecone destination. Give the sync a few minutes to run. Once the first run has completed, you can check the Pinecone index management page to see a bunch of indexed vectors ready to be queried.

Each vector is associated with a metadata object that’s filled with the fields that were not listed as “text fields” in the destination configuration. These fields are retrieved along with the embedded text and can be leveraged by our chatbot in later sections. This is what a vector with metadata looks like when retrieved from Pinecone:
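The split between text fields and metadata can be illustrated with a minimal sketch. It assumes flat records, treats title and body as the configured text fields, and renders each text field as a `name: value` line; `split_record`, the field choice and that formatting are illustrative assumptions, not necessarily what the destination does internally. The issue record is made up.

```python
from typing import Any


def split_record(record: dict[str, Any], text_fields: list[str]) -> tuple[str, dict[str, Any]]:
    """Return (text to embed, metadata) for one flat record.

    Configured text fields are rendered as "name: value" lines and embedded;
    every other field travels along as metadata next to the vector.
    """
    text = "\n".join(f"{name}: {record[name]}" for name in text_fields if record.get(name))
    metadata = {key: value for key, value in record.items() if key not in text_fields}
    return text, metadata


# Made-up Github issue record, with title and body configured as text fields.
issue = {"number": 123, "title": "Add OAuth support", "body": "Please support OAuth.", "state": "open"}
text, metadata = split_record(issue, ["title", "body"])
```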

On subsequent runs, Airbyte only re-embeds and updates the vectors for the issues that changed since the last sync. This speeds up later runs while making sure your data is always up to date and available.
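Conceptually, an incremental sync keeps a cursor (for Github issues, a timestamp such as updated_at) and only processes records newer than it, while deduplication on a primary key replaces the old version of a record instead of adding a second copy. The sketch below illustrates both ideas with an in-memory dict standing in for the vector index. It is a simplified model, not how Airbyte implements incremental syncs; `select_changed` and `upsert` are hypothetical names and the records are made up.

```python
def select_changed(records: list[dict], last_cursor: str) -> tuple[list[dict], str]:
    """Return the records updated after last_cursor and the new cursor value.

    Timestamps are ISO-8601 strings in the same fixed UTC format, so plain
    string comparison orders them chronologically.
    """
    changed = [r for r in records if r["updated_at"] > last_cursor]
    new_cursor = max((r["updated_at"] for r in changed), default=last_cursor)
    return changed, new_cursor


def upsert(index: dict, changed: list[dict]) -> None:
    """Overwrite entries by primary key, so an edited issue replaces its old version."""
    for record in changed:
        index[record["id"]] = record


# Index state after the previous sync, plus the records the source reports now.
index = {
    1: {"id": 1, "title": "Old title", "updated_at": "2023-07-02T10:00:00Z"},
    2: {"id": 2, "title": "Unchanged issue", "updated_at": "2023-07-03T08:00:00Z"},
}
records = [
    {"id": 1, "title": "New title", "updated_at": "2023-08-01T09:00:00Z"},
    {"id": 2, "title": "Unchanged issue", "updated_at": "2023-07-03T08:00:00Z"},
]
changed, cursor = select_changed(records, "2023-07-15T00:00:00Z")
upsert(index, changed)  # only issue 1 would need to be re-embedded
```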

The data is ready; now let’s wire it up with our LLM to answer questions in natural language. As we already used OpenAI for the embeddings, the easiest approach is to use it for question answering as well.

We will use Langchain as an orchestration framework to tie all the bits together.

First, install a few pip packages locally:

The basic functionality here works the following way:

This flow is often referred to as retrieval-augmented generation (RAG). The RetrievalQA class from the Langchain framework already implements the basic interaction. The simplest version of our question-answering bot only has to provide the vector store and the LLM to use:
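The retrieve-then-generate flow can be sketched without any external services. In this minimal sketch, three-dimensional toy vectors stand in for the 1536-dimensional OpenAI embeddings and a plain list stands in for the Pinecone index; `cosine`, `top_k` and `build_prompt` are hypothetical helpers, not Langchain APIs. In the real setup, Pinecone performs the similarity search and RetrievalQA sends the assembled prompt to the LLM.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity: 1.0 for vectors pointing the same way, 0.0 for orthogonal ones."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(query_vec: list[float], docs: list[tuple[str, list[float]]], k: int = 2) -> list[str]:
    """Return the texts of the k documents most similar to the query vector."""
    ranked = sorted(docs, key=lambda doc: cosine(query_vec, doc[1]), reverse=True)
    return [text for text, _ in ranked[:k]]


def build_prompt(question: str, context_docs: list[str]) -> str:
    """'Stuff' the retrieved texts into a single prompt for the LLM."""
    context = "\n\n".join(context_docs)
    return (
        "Use the following pieces of context to answer the question.\n\n"
        f"{context}\n\nQuestion: {question}\nAnswer:"
    )


# Toy corpus of (text, embedding) pairs; the vectors are made up.
docs = [
    ("Docs: authentication options", [1.0, 0.0, 0.0]),
    ("Docs: pagination", [0.0, 1.0, 0.0]),
    ("Issue: OAuth support request", [0.9, 0.1, 0.0]),
]
question_vec = [1.0, 0.0, 0.0]  # pretend embedding of the question
prompt = build_prompt("Which auth methods are supported?", top_k(question_vec, docs))
```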

To run this script, you need to set OpenAI and Pinecone credentials as environment variables:
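A small helper that fails fast with a clear message saves some head-scratching when a variable is missing. OPENAI_API_KEY is the variable the OpenAI client libraries read by default; PINECONE_API_KEY and PINECONE_ENV are assumed names in this sketch and should match whatever your script actually reads. `load_settings` is a hypothetical helper.

```python
import os
from collections.abc import Mapping

# OPENAI_API_KEY is read by the OpenAI libraries by default; the Pinecone
# variable names are assumptions and must match what your script reads.
REQUIRED_VARS = ("OPENAI_API_KEY", "PINECONE_API_KEY", "PINECONE_ENV")


def load_settings(env: Mapping[str, str] = os.environ, required=REQUIRED_VARS) -> dict[str, str]:
    """Return the required settings, or fail listing every missing variable at once."""
    missing = [name for name in required if not env.get(name)]
    if missing:
        raise RuntimeError("Missing environment variables: " + ", ".join(missing))
    return {name: env[name] for name in required}
```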

This works in general, but it has some limitations. By default, only the text fields are passed into the LLM’s prompt, so the model doesn’t know where a piece of text comes from, and it can’t give a reference back to where it found its information:

From here, there’s lots of tweaking we can do to optimize our chatbot. For example, we can improve the prompt to include more information from the metadata fields and make it more specific to our use case:

The full script can also be found on Github.

This revised version of the RetrievalQA chain customizes the prompts that are sent to the LLM after the context has been retrieved:
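As a minimal sketch of what such a customized prompt can look like, assume each retrieved document carries the Github issue `number` in its metadata. The field name and the prompt wording are assumptions, not the exact prompt from the full script. In Langchain, a template like this would be wrapped in a PromptTemplate and passed to RetrievalQA.from_chain_type via chain_type_kwargs when using the "stuff" chain type; here it is plain Python string formatting so the idea runs on its own. The example question and issue number are made up.

```python
PROMPT_TEMPLATE = """You are a question-answering bot for connector development.
Use the following pieces of context to answer the question at the end.
If you don't know the answer, just say that you don't know; don't make one up.
State where you got the information from (including the Github issue number, if applicable).

{context}

Question: {question}
Helpful Answer:"""


def format_document(page_content: str, metadata: dict) -> str:
    """Prefix a retrieved text with its source so the LLM can cite it."""
    number = metadata.get("number")
    source = f"Github issue #{number}" if number is not None else "unknown source"
    return f"[{source}]\n{page_content}"


def build_prompt(question: str, docs: list[tuple[str, dict]]) -> str:
    """Combine the formatted documents and the question into the final prompt."""
    context = "\n\n".join(format_document(text, meta) for text, meta in docs)
    return PROMPT_TEMPLATE.format(context=context, question=question)


prompt = build_prompt(
    "Does the connector builder support OAuth?",
    [("OAuth support is planned for a later release.", {"number": 123})],
)
```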

So far this helper can only be used locally. However, using the Python Slack SDK, it’s easy to turn it into a Slack bot.

To do so, we need to set up a Slack “App” first. Go to https://api.slack.com/apps and create a new app based on the manifest here (this saves you some work configuring permissions by hand). After you set up your app, install it to the workspace you want to integrate with. This will generate a “Bot User OAuth Access Token” you need to note down. Afterwards, go to the “Basic information” page of your app, scroll down to “App-Level Tokens” and create a new token. Note down this “app level token” as well.

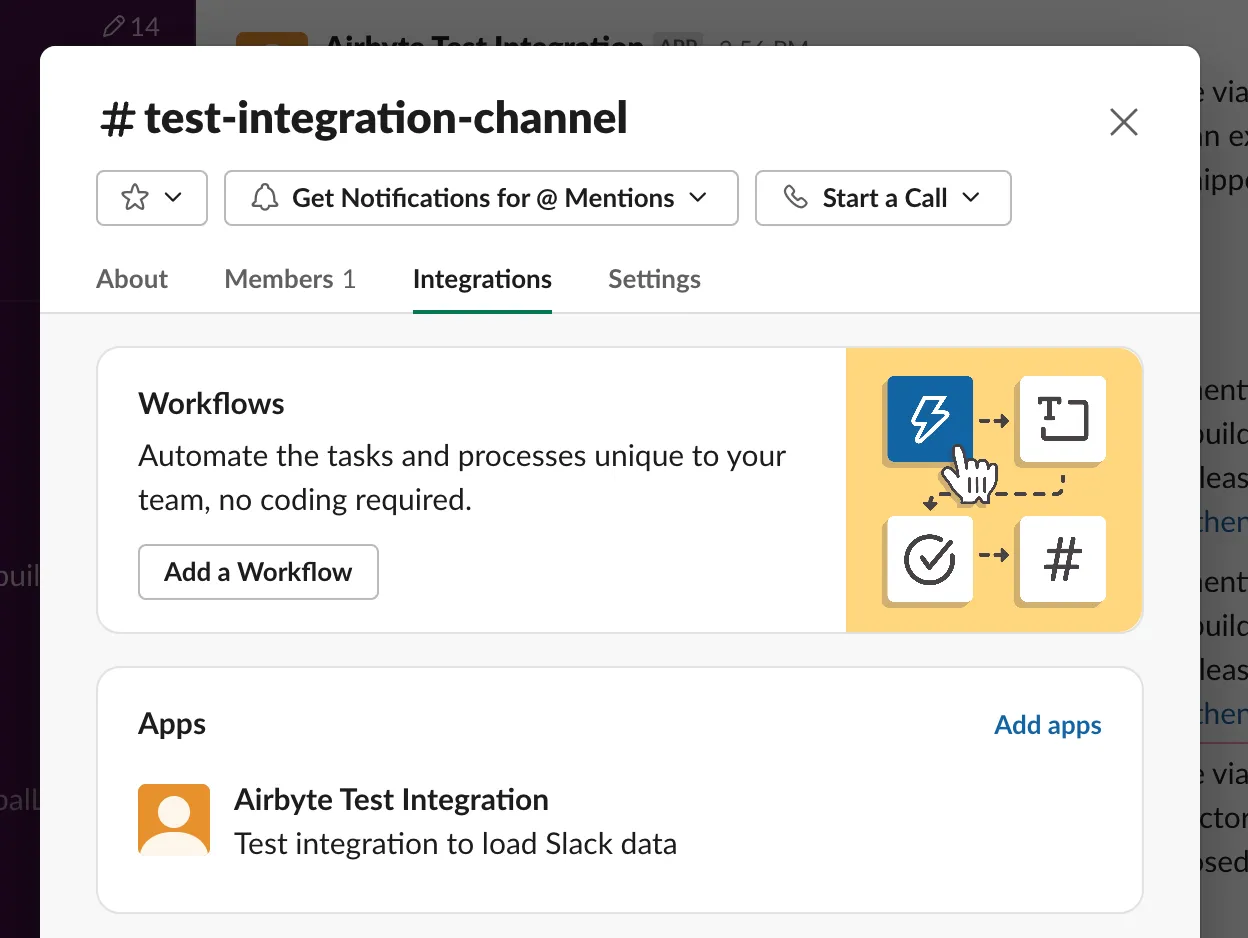

Within the regular Slack client, your app can be added to a Slack channel by clicking the channel name and going to the “Integrations” tab:

After this, your Slack app is ready to receive pings from users and answer their questions. The next step is to call Slack from within Python code, so we need to install the Python client library:

Afterwards, we can extend our existing chatbot script with a Slack integration:

The full script can also be found on Github.
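The Slack-specific part of the handler mostly deals with the event payload. The sketch below assumes the bot listens for app_mention events over Socket Mode. With slack_sdk, a listener registered on SocketModeClient.socket_mode_request_listeners receives a SocketModeRequest, acknowledges it with a SocketModeResponse, and can then pass req.payload["event"] to these helpers and post the answer with client.web_client.chat_postMessage(**build_reply(event, answer)). The helper names are hypothetical, the event values are made up, and the Slack calls are left out so the sketch runs without a workspace.

```python
import re

# Matches user mentions such as <@U0123ABCD> (optionally with a |label suffix).
MENTION = re.compile(r"<@[A-Z0-9]+(?:\|[^>]*)?>")


def extract_question(event: dict) -> str:
    """Remove mentions from an app_mention event's text, leaving the question."""
    return MENTION.sub("", event.get("text", "")).strip()


def build_reply(event: dict, answer: str) -> dict:
    """Keyword arguments for WebClient.chat_postMessage that answer in a thread."""
    return {
        "channel": event["channel"],
        "text": answer,
        # Reply inside an existing thread, or start one under the mention.
        "thread_ts": event.get("thread_ts", event["ts"]),
    }


event = {
    "type": "app_mention",
    "text": "<@U01ABCDEF> how do I add pagination to my connector?",
    "channel": "C0123456",
    "ts": "1690000000.000100",
}
question = extract_question(event)  # this is what would go into the RetrievalQA chain
reply = build_reply(event, "(answer from the RetrievalQA chain)")
```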

To run the script, the Slack bot token and the app-level token need to be set as environment variables as well:
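Because the two tokens are easy to mix up, a small startup check can catch a swapped configuration early. This sketch assumes the variables are named SLACK_BOT_TOKEN and SLACK_APP_TOKEN (a common convention, not a requirement) and relies on Slack’s token prefixes: Bot User OAuth tokens start with `xoxb-` and app-level tokens with `xapp-`. `load_slack_tokens` is a hypothetical helper.

```python
import os
from collections.abc import Mapping


def load_slack_tokens(env: Mapping[str, str] = os.environ) -> tuple[str, str]:
    """Read the bot and app-level tokens and check that they aren't swapped."""
    bot_token = env.get("SLACK_BOT_TOKEN", "")
    app_token = env.get("SLACK_APP_TOKEN", "")
    if not bot_token.startswith("xoxb-"):
        raise ValueError("SLACK_BOT_TOKEN should hold the Bot User OAuth token (xoxb-...)")
    if not app_token.startswith("xapp-"):
        raise ValueError("SLACK_APP_TOKEN should hold the app-level token (xapp-...)")
    return bot_token, app_token
```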

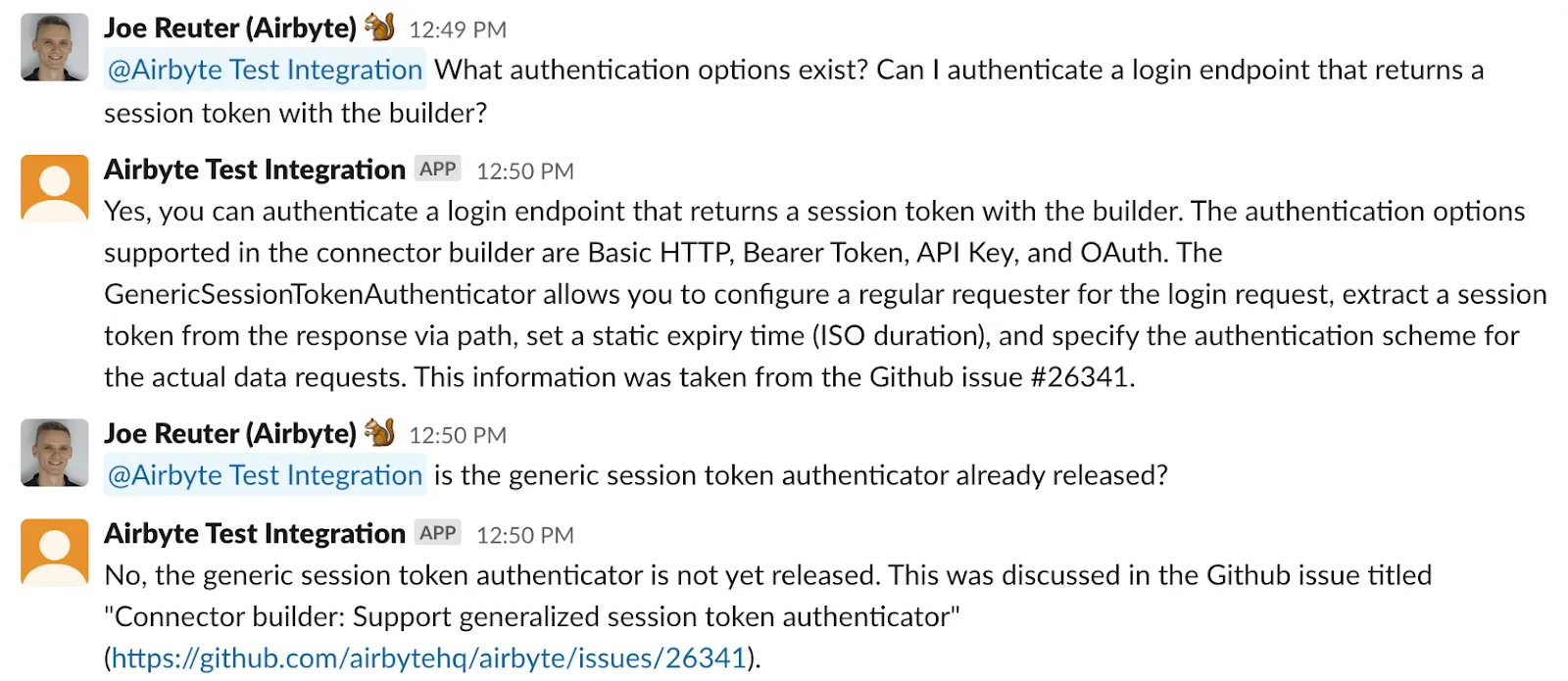

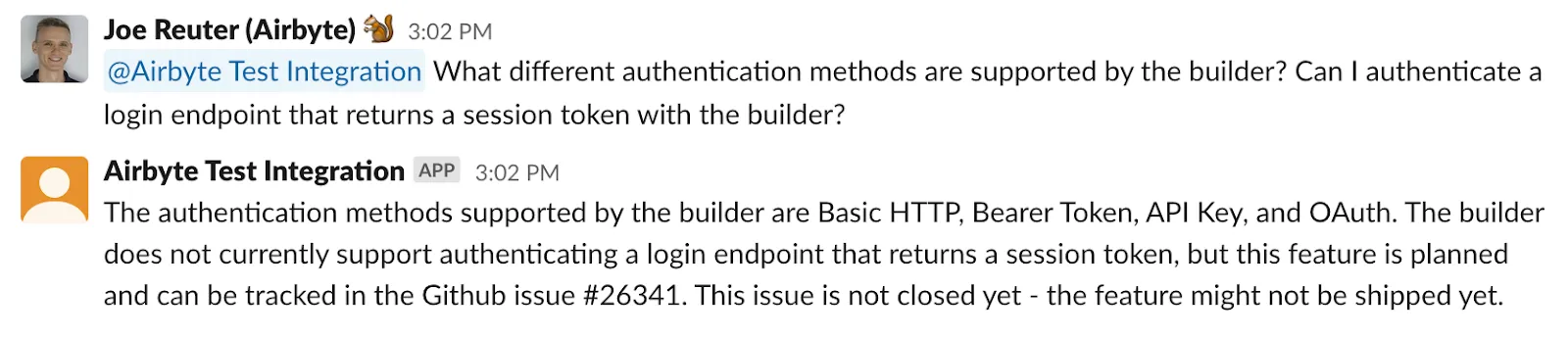

Once this is running, you can mention the development bot in the channel you added it to, just like a user, and it will respond to questions by running the RetrievalQA chain, which loads relevant context from the vector database and uses an LLM to formulate a nice answer:

All the code can also be found on Github.

Github issues are helpful, but there is more information we want our development bot to know.

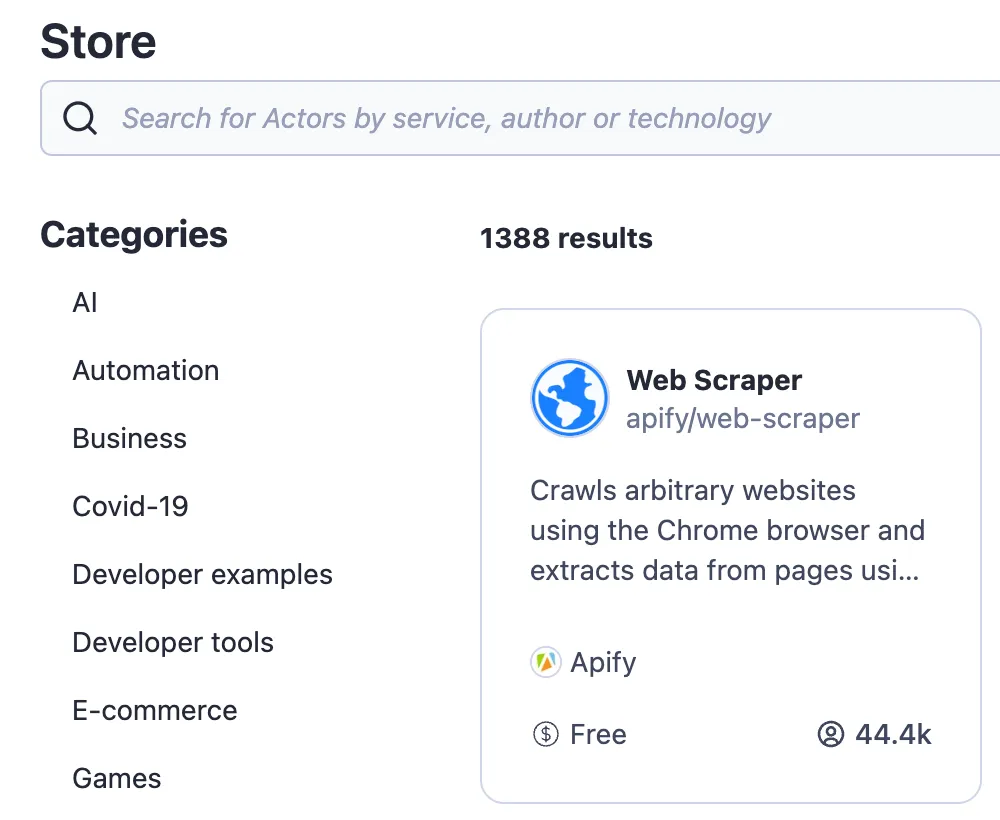

The documentation page for connector development is a very important source of information to answer questions, so it definitely needs to be included. The easiest way to make sure the bot has the same information as what’s published is to scrape the website. For this case, we are going to use the Apify service to take care of the scraping and turn the website into a nicely structured dataset. This dataset can be extracted using the Airbyte Apify Dataset source connector.

First, log into Apify and navigate to the store. Choose the “Web Scraper” actor as a basis; it already implements most of the functionality we need.

Next, create a new task and configure it to scrape all pages of the documentation, extracting the page title and all of the content:

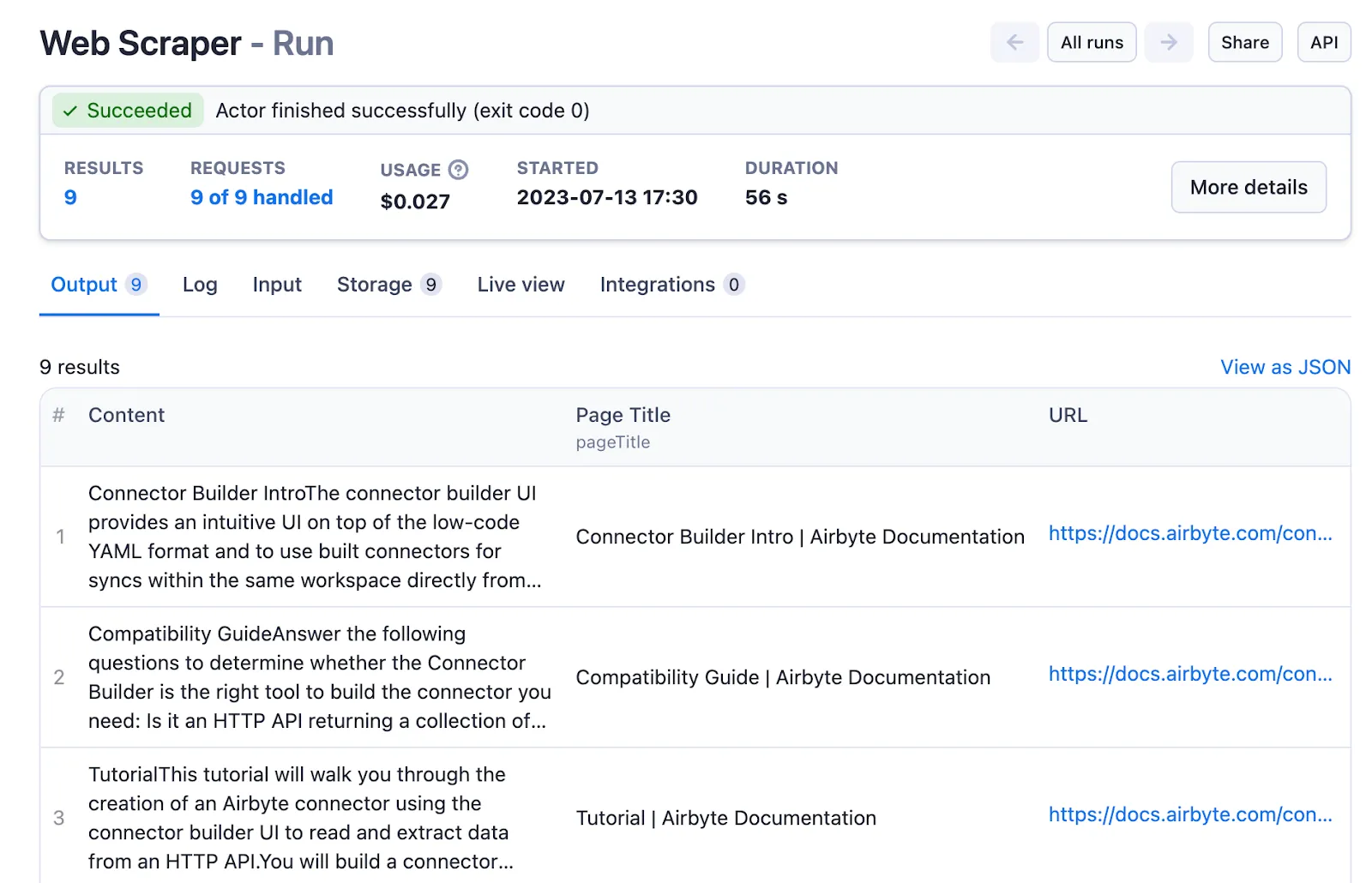

Running this actor will complete quickly and give us a nicely consumable dataset with a column for the page title and the content:

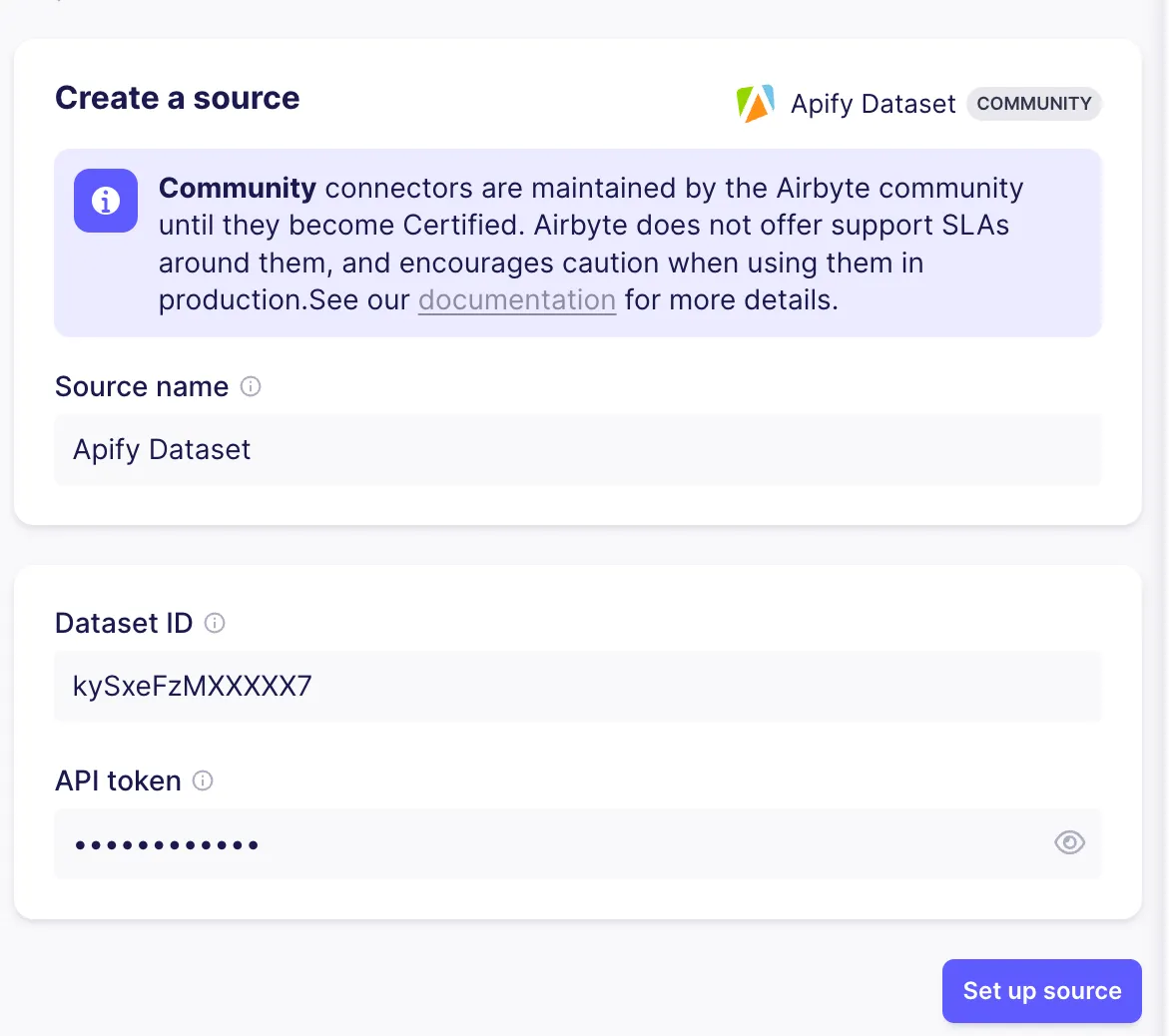

Now it’s time to connect Airbyte to the Apify dataset: go to the Airbyte web UI, add your second source and pick “Apify Dataset”.

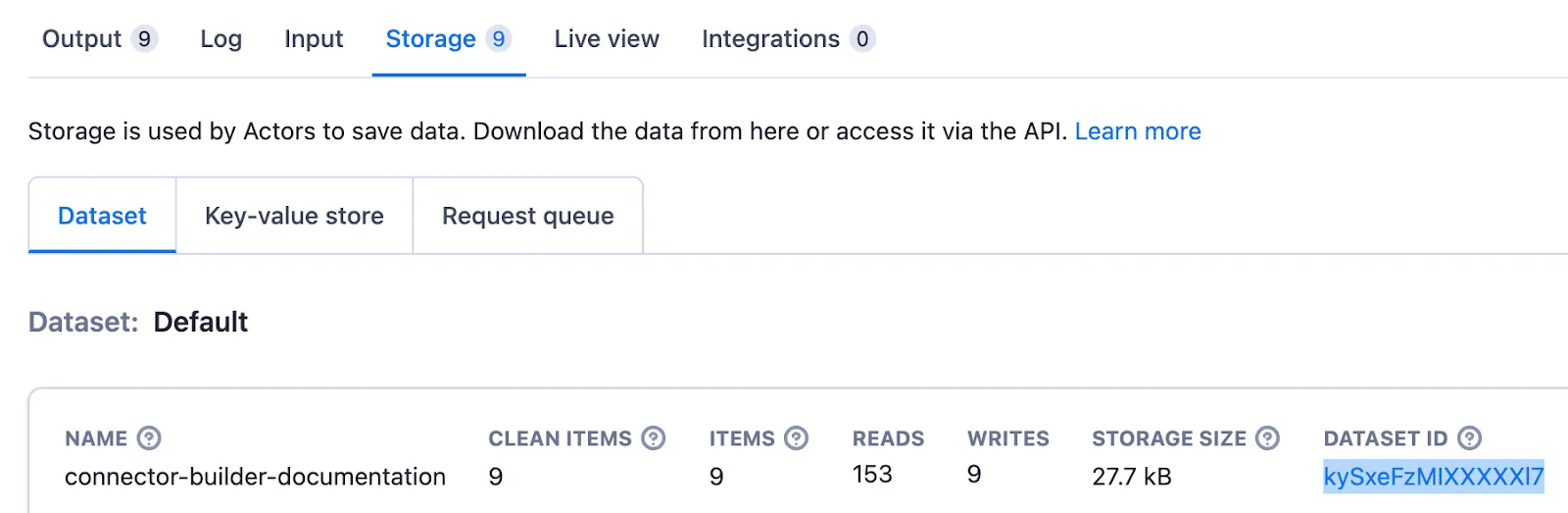

To set up the source, you need to copy the dataset ID that’s shown in the “Storage” tab of the “Run” in the Apify UI.

The API token can be found on the Settings->Integrations page in the Apify Console.

Once the source is set up, follow the same steps as for the Github source to set up a connection moving data from the Apify dataset to the vector store. As the relevant text content is sitting in different fields, you also need to update the vector store destination - add data.pageTitle and data.content to the “text fields” of the destination and save. Only the item_collection stream should be enabled for the sync.
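Text fields such as data.pageTitle use dot notation to reach into the nested data object of each Apify record. The following minimal sketch shows how such paths can be resolved and combined into the text that gets embedded. `get_path` and `text_for_embedding` are hypothetical helpers, title and body are assumed text fields for Github issues, and skipping fields a record doesn’t have (a Github issue has no data.pageTitle, for example) is an assumption about how shared text fields behave, not documented Airbyte behavior. The records are made up.

```python
from typing import Any


def get_path(record: dict[str, Any], path: str) -> Any:
    """Follow a dot-separated path such as 'data.pageTitle' into nested dicts."""
    value: Any = record
    for key in path.split("."):
        if not isinstance(value, dict) or key not in value:
            return None  # the record doesn't have this field
        value = value[key]
    return value


def text_for_embedding(record: dict[str, Any], text_fields: list[str]) -> str:
    """Join the values of all configured text fields present in the record."""
    parts = []
    for field in text_fields:
        value = get_path(record, field)
        if value:
            parts.append(f"{field}: {value}")
    return "\n".join(parts)


# One text-field list shared by all connections writing to the same destination.
TEXT_FIELDS = ["title", "body", "data.pageTitle", "data.content", "text"]

page = {"data": {"pageTitle": "Authentication", "content": "Supported methods: Basic HTTP, Bearer Token, API Key, OAuth"}}
issue = {"title": "Add OAuth to the builder", "body": "Please support OAuth in the connector builder.", "number": 123}
```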

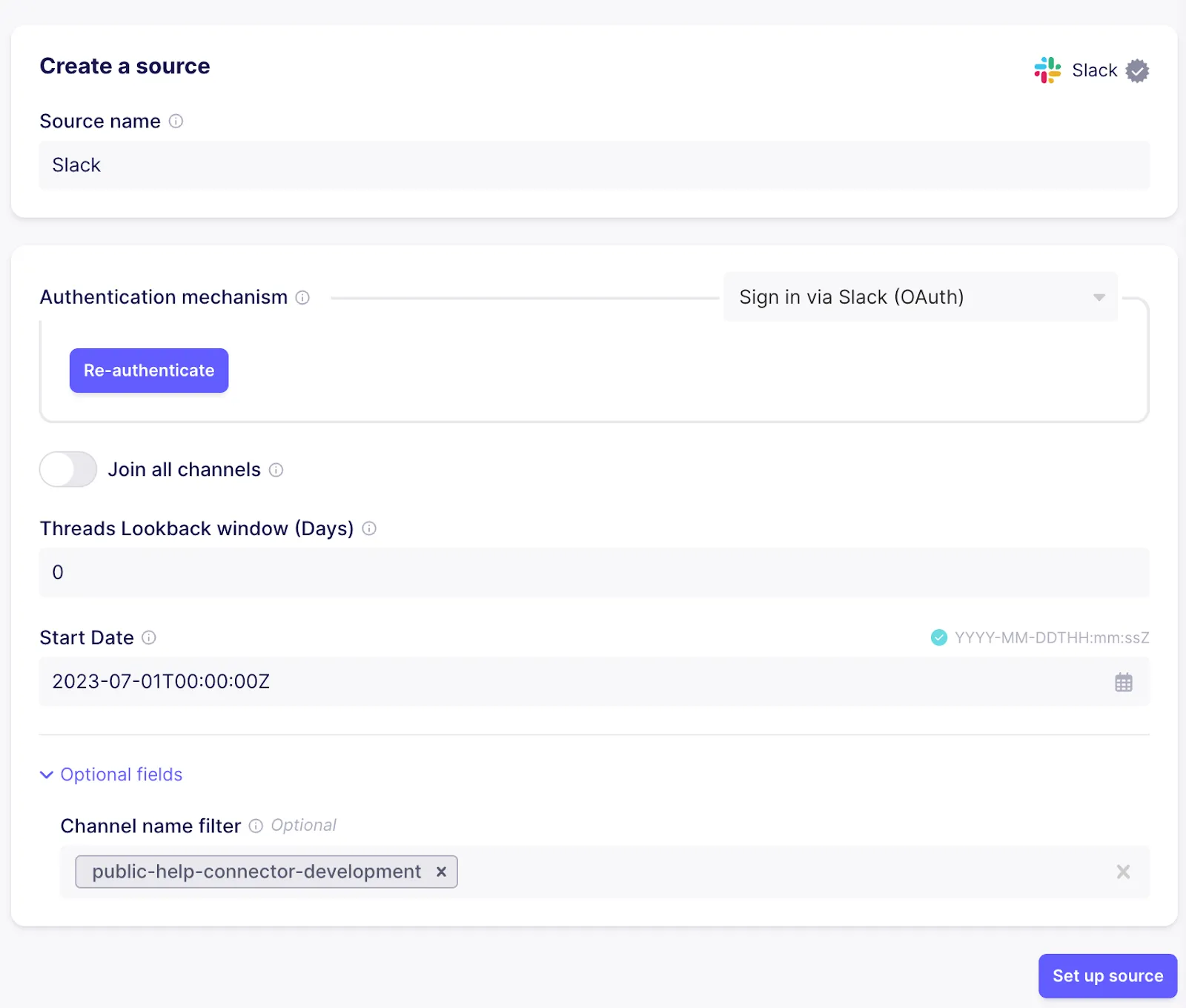

Another valuable source of information for connector development is the public help channel on Slack, and its messages can be loaded in a very similar fashion. Create a new source using the Slack connector. When using Airbyte Cloud, you can authenticate using the “Authenticate your Slack account” button for a simple setup; otherwise, follow the instructions in the documentation on the right-hand side on how to create a Slack “App” with the required permissions and add it to your workspace. To avoid fetching messages from all channels, set the channel name filter to the correct channel.

As with Apify and Github, a new connection needs to be created to move data from Slack to Pinecone. Also add text (the field holding the message content) to the “text fields” of the destination so that the relevant data gets embedded properly and similarity searches yield the right results.

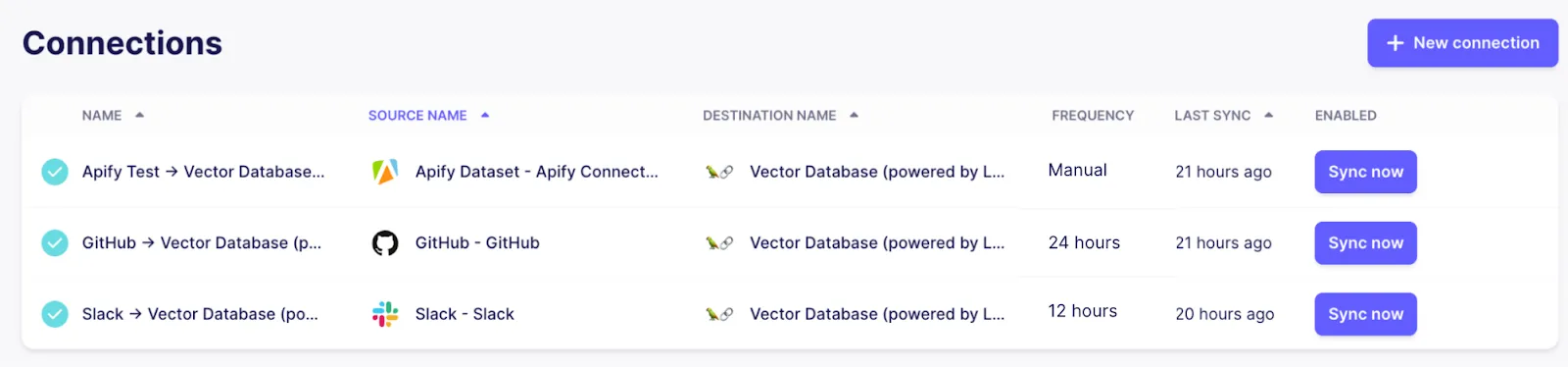

If everything went well, there should be three connections now, all syncing data from their respective sources to the centralized vector store destination using a Pinecone index.

By adjusting the frequency of the connections, you can control how often Airbyte reruns them to keep the knowledge base of our chatbot up to date. As Github and Slack are frequently updated and support efficient incremental updates, it makes sense to sync them daily or even more often. The documentation pages don’t change as often, so they can be synced less frequently or even triggered on demand when there are changes.
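Airbyte schedules the syncs itself once a frequency is set on each connection. Purely as an illustration of the policy described above, this sketch encodes it as data. The connection names are hypothetical, `is_due` is not an Airbyte API, and None stands for a connection that is only triggered manually.

```python
from datetime import datetime, timedelta
from typing import Optional

# Hypothetical connection names; None means "only run when triggered manually".
SYNC_FREQUENCY: dict[str, Optional[timedelta]] = {
    "github-issues": timedelta(days=1),
    "slack-help-channel": timedelta(days=1),
    "apify-docs": None,
}


def is_due(connection: str, last_sync: datetime, now: datetime) -> bool:
    """True if the connection's scheduled interval has elapsed since its last sync."""
    frequency = SYNC_FREQUENCY[connection]
    if frequency is None:
        return False
    return now - last_sync >= frequency
```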

As we have more sources now, let’s improve our prompt to make sure the LLM has all necessary information to formulate a good answer:
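One way to give the LLM that information is to label each retrieved chunk with the kind of source it came from. This sketch assumes the destination stores the originating stream name in an `_ab_stream` metadata field and that the streams are called issues (Github), item_collection (Apify) and channel_messages (Slack); matching on the suffix allows for a namespace prefix. Both the field name and the stream names should be checked against the metadata actually stored in your index, and `format_document` is a hypothetical helper.

```python
def format_document(page_content: str, metadata: dict) -> str:
    """Label a retrieved chunk with the kind of source it came from."""
    stream = metadata.get("_ab_stream", "")
    # Suffix matching tolerates a namespace prefix on the stream name.
    if stream.endswith("issues"):
        source = f"Github issue #{metadata.get('number', '?')}"
    elif stream.endswith("item_collection"):
        source = "Documentation page"
    elif stream.endswith("channel_messages"):
        source = "Slack message in the help channel"
    else:
        source = "Unknown source"
    return f"Source: {source}\n{page_content}"
```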

The RetrievalQA chain retrieves several matching documents (Langchain’s vector store retriever returns the top 4 by default), so where applicable the answer will be based on multiple sources at the same time:

The first sentence about Basic HTTP, Bearer Token, API Key and OAuth is retrieved from the documentation page about authentication, while the second sentence is referring to the same Github issue as before.

We covered a lot of ground here - stepping back a bit, we accomplished the following parts:

With data flowing through this system, Airbyte makes sure the data in your vector database is always up to date while only syncing records that changed in the connected sources. This minimizes the load on the embedding and vector database services and also provides an overview of the current state of running pipelines.

This setup doesn’t rely on a single black-box service that encapsulates all the details and leaves us with limited options for tweaking behavior and controlling data processing. Instead, it is composed of multiple components that can be easily extended in various places:

Survey

If you are interested in leveraging Airbyte to ship data to your LLM-based applications, take a moment to fill out our survey so we can make sure to prioritize the most important features.

To build a Slackbot in Python, use Langchain's RetrievalQA chain with OpenAI to process questions and generate contextual answers from data stored in a vector database. Integrate with Slack by creating a Slack App with necessary permissions, using slack_sdk's WebClient and SocketModeClient to listen for app mentions in channels, and implementing an event handler that processes messages and responds with AI-generated answers based on the stored knowledge.

Yes, you can create your own chatbot using tools like OpenAI for natural language processing, Pinecone for vector storage, and Python with the Slack SDK. Your chatbot can be customized to access and respond based on your specific data sources and use cases.

Creating a basic chatbot isn't hard if you use modern tools and frameworks. With services like OpenAI for language processing and frameworks like Langchain for orchestration, the main complexity lies in setting up the data pipeline and integrating different services rather than in the core chatbot logic itself.
