Learn how to build a robust Large Language Model application using ChromaDB for vector storage and Airbyte for data integration, simplifying your AI development workflow.

Incorporating an LLM application into your workflow has become crucial for automating modern organizational tasks. It can help improve customer service, build a knowledge base, and assist with code generation, text summarization, and data analytics. However, engineering an LLM solution from scratch can be a challenging task. To simplify this complexity, you can leverage existing models with vector databases, like ChromaDB.

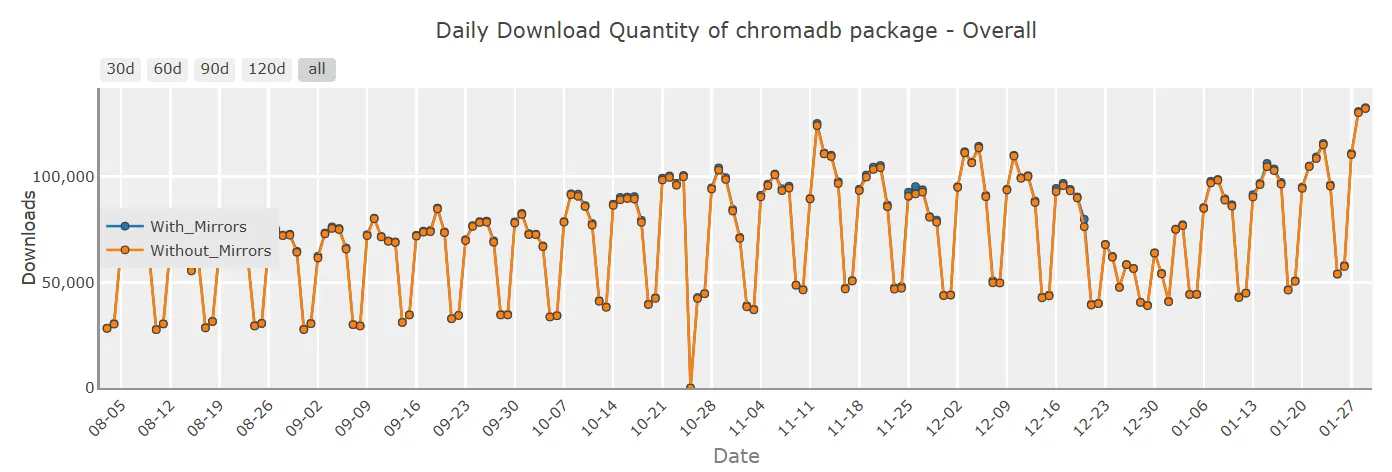

ChromaDB is a prominent vector store that is widely used by the developer community to build robust AI systems. The graph above shows the download trend of the chromadb Python package over the past 30 days, according to PyPI Stats.

This article will discuss the steps you can take to create a ChromaDB LLM application that can aid you in optimizing data-driven workflows.

ChromaDB is an open-source vector database that allows you to store vector embeddings along with metadata. These embeddings give the LLM contextual information that it can refer to when producing relevant results, so the model’s responses can be tailored to your specific needs depending on the stored vector data.
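To make the idea of storing embeddings alongside metadata concrete, here is a minimal pure-Python sketch of what a vector store does conceptually. It is not ChromaDB's implementation or API: the `TinyVectorStore` class, its methods, and the three-dimensional example vectors are hypothetical and chosen only for illustration.

```python
import math
from dataclasses import dataclass, field


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


@dataclass
class TinyVectorStore:
    """Toy in-memory store: each entry is (embedding, text, metadata)."""

    entries: list = field(default_factory=list)

    def add(self, embedding, text, metadata):
        self.entries.append((embedding, text, metadata))

    def query(self, embedding, n_results=1):
        """Return the n_results entries most similar to `embedding`."""
        ranked = sorted(
            self.entries,
            key=lambda entry: cosine_similarity(embedding, entry[0]),
            reverse=True,
        )
        return [(text, metadata) for _, text, metadata in ranked[:n_results]]


store = TinyVectorStore()
store.add([0.9, 0.1, 0.0], "Docs page is missing an example", {"state": "open"})
store.add([0.0, 0.2, 0.9], "Connector fails on large syncs", {"state": "closed"})

# A query vector close to the first entry retrieves its text and metadata.
print(store.query([1.0, 0.0, 0.0]))
```

A real vector database adds persistence, indexing for fast approximate search, and metadata filtering on top of this basic idea.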

Airbyte is a no-code data integration platform that allows you to replicate data from multiple sources to the destination of your preference. It offers over 550 pre-built connectors, enabling you to move structured, semi-structured, and unstructured data into a vector database like ChromaDB. If the source you seek is unavailable, Airbyte provides Connector Builder and a suite of Connector Development Kits (CDKs) for generating custom connectors.

LLMs, or Large Language Models, are extensive machine learning models that are built explicitly for language-related tasks. Some of the everyday use cases of LLMs include text summarization, code generation, interaction with APIs, and sentiment analysis.

In contrast, RAG (Retrieval-Augmented Generation) is an AI framework that retrieves domain-specific factual information from an external data store and supplies it to the model. Instead of relying solely on the LLM’s training data, RAG grounds the generated content in your specified data source. For instance, storing your GitHub issues data in a vector database lets the LLM generate responses based on your GitHub repository.

Let’s use ChromaDB with Airbyte to build a chatbot that responds to your queries based on GitHub issues:

Open Google Colab and add a virtual environment to isolate dependencies by running:

Install all the required libraries that will be essential for this tutorial.
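One way to script the installation from Python is sketched below. The package list is an assumption based on the libraries this tutorial mentions (PyAirbyte is published on PyPI as `airbyte`); adjust it and pin versions to suit your environment. The helper names are hypothetical.

```python
import subprocess
import sys

# Assumed package list for this tutorial; adjust or pin versions as needed.
PACKAGES = [
    "airbyte",              # PyAirbyte
    "chromadb",
    "langchain",
    "langchain-community",
    "langchain-openai",
    "langchainhub",
    "openai",
    "tiktoken",
    "rich",
]


def pip_install_command(packages):
    """Build a pip command that targets the current Python interpreter."""
    return [sys.executable, "-m", "pip", "install", "--quiet", *packages]


def install_packages(packages=PACKAGES):
    """Run pip; raises CalledProcessError if the installation fails."""
    subprocess.check_call(pip_install_command(packages))


# Call install_packages() once per environment to perform the installation.
```

In a notebook, the equivalent is usually a `%pip install` cell with the same package names.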

After installing all the required libraries, you can import them by executing the code below:

Import PyAirbyte:

To display the response generated by the LLM application in a structured way, import textwrap and rich.console.Console.
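As a rough sketch of how these two pieces can work together: `textwrap` (standard library) wraps long answers to a fixed width, and `rich` prints them. The helper names `wrap_answer` and `print_answer` are hypothetical, and `rich` is imported lazily so the wrapping logic runs even where it is not installed.

```python
import textwrap


def wrap_answer(text, width=80):
    """Wrap each paragraph of an LLM answer to `width` columns."""
    paragraphs = text.split("\n\n")
    return "\n\n".join(textwrap.fill(p, width=width) for p in paragraphs)


def print_answer(text, width=80):
    """Print a wrapped answer using rich's Console."""
    # rich is a third-party package, so it is imported only when needed.
    from rich.console import Console

    Console().print(wrap_answer(text, width))


print(wrap_answer("Issue 12 asks for clearer setup docs. " * 3, width=40))
```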

Import the os module to access and manage environment variables.

Using this os library, get the OpenAI API key, which will be crucial in generating embeddings.
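A minimal sketch of reading the key from the environment, assuming it is stored in a variable named `OPENAI_API_KEY` (the name the OpenAI client libraries look for by default). The `require_env` helper is hypothetical; it fails early with a clear message instead of letting a missing key surface later as an authentication error.

```python
import os


def require_env(name):
    """Return the value of an environment variable, or fail with a clear error."""
    value = os.environ.get(name)
    if not value:
        raise RuntimeError(f"Set the {name} environment variable before continuing.")
    return value


# Example usage (requires the variable to be set):
# openai_api_key = require_env("OPENAI_API_KEY")
```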

Now, import the LangChain-specific modules to simplify LLM application development. Run:

In the above code:

Let’s now configure GitHub as the data source. To achieve this, use the get_source method. Replace the GITHUB_PERSONAL_ACCESS_TOKEN with your personal credentials and execute this code:
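A sketch of this configuration step, assuming PyAirbyte's `get_source` function and the GitHub connector's `repositories` and `credentials.personal_access_token` settings. The repository name `airbytehq/airbyte` and the helper functions are assumptions for illustration; `airbyte` is imported lazily so the config builder can be checked without it.

```python
def build_github_config(token, repositories):
    """Build the configuration dict for the GitHub source connector."""
    return {
        "repositories": list(repositories),
        "credentials": {"personal_access_token": token},
    }


def get_github_source(token, repositories=("airbytehq/airbyte",)):
    """Create a PyAirbyte GitHub source (installs the connector if missing)."""
    import airbyte as ab  # PyAirbyte; imported lazily

    return ab.get_source(
        "source-github",
        config=build_github_config(token, repositories),
        install_if_missing=True,
    )


# Example usage, with your own token in place of the placeholder:
# source = get_github_source("GITHUB_PERSONAL_ACCESS_TOKEN")
```

Avoid hard-coding the token in a shared notebook; reading it from an environment variable or a secret manager is safer.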

As an example, the above code includes Airbyte’s GitHub repository.

To verify if the connection works properly, run the following command:

If the source is configured correctly, this command should report that the connection check succeeded.

Check all the streams available from the GitHub source by executing the following code:

For this tutorial, let’s select the issues stream. Run the following:

To temporarily store the data in PyAirbyte default DuckDB cache, run:

Examine the available fields by printing a single record from the stream.
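The connection check, stream selection, cached read, and record inspection can be sketched as one helper. This assumes PyAirbyte's `check`, `get_available_streams`, `select_streams`, and `read` methods on the source, and `get_default_cache` for the local DuckDB cache; the `read_stream` function itself is hypothetical.

```python
def read_stream(source, stream_name="issues", cache=None):
    """Verify the source, select one stream, read it, and peek at a record."""
    source.check()  # raises if the connection or credentials are invalid

    available = source.get_available_streams()
    if stream_name not in available:
        raise ValueError(f"Stream {stream_name!r} not found in {available}")

    source.select_streams([stream_name])

    if cache is None:
        import airbyte as ab  # PyAirbyte; imported lazily

        cache = ab.get_default_cache()  # local DuckDB-backed cache

    result = source.read(cache=cache)
    dataset = result[stream_name]

    # Look at one record to see which fields the stream provides.
    first_record = next(iter(dataset), None)
    return dataset, first_record


# Example usage, given a configured PyAirbyte source:
# issues, sample = read_stream(source)
# print(sample)
```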

To perform data transformations, convert the records into a list of documents. This step allows you to arrange the records into well-structured documents. The produced documents can further be split into smaller chunks and then converted into embeddings for efficient processing. Execute the code below:

In this code, the render_metadata parameter includes the metadata properties in the rendered Markdown content of each document. This is useful for comparatively small documents, as it gives the model additional information about the data.
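A sketch of the conversion step, assuming the `to_documents` method that PyAirbyte datasets provide. The field names (`title`, `body`, `state`, `url`, `number`) are assumptions about the GitHub issues stream, so confirm them against the record you printed earlier; the wrapper function is hypothetical.

```python
def issues_to_documents(dataset):
    """Convert issue records into a list of documents with rendered metadata."""
    return list(
        dataset.to_documents(
            title_property="title",            # used as the document title
            content_properties=["body"],       # main text of each document
            metadata_properties=["state", "url", "number"],
            render_metadata=True,              # include metadata in the text
        )
    )


# Example usage, given the dataset returned for the issues stream:
# docs = issues_to_documents(result["issues"])
# print(str(docs[0]))
```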

To store data in the vector database, you must split the documents into smaller, more manageable chunks. This can be done using the RecursiveCharacterTextSplitter as follows:

The above code splits the documents into chunks of 512 characters, and the chunk_overlap outlines how many characters can overlap in consecutive chunks.
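To illustrate what `chunk_size` and `chunk_overlap` mean, here is a simplified fixed-window splitter in plain Python. It is not LangChain's algorithm: `RecursiveCharacterTextSplitter` additionally tries to break on separators such as paragraphs and sentences before falling back to raw character counts. The overlap value of 50 is an assumed example.

```python
def split_with_overlap(text, chunk_size=512, chunk_overlap=50):
    """Split text into fixed-size windows that share `chunk_overlap` characters."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= chunk_overlap < chunk_size:
        raise ValueError("chunk_overlap must be >= 0 and smaller than chunk_size")

    step = chunk_size - chunk_overlap  # how far each window advances
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # this window already reached the end of the text
    return chunks


# With chunk_size=4 and chunk_overlap=1, neighbouring chunks share one character.
print(split_with_overlap("abcdefghij", chunk_size=4, chunk_overlap=1))
```

The overlap keeps a little shared context between neighbouring chunks, so a sentence cut at a boundary is still partly visible in both.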

Define a vectorstore variable that uses OpenAI embeddings to convert the chunked documents into vector embeddings and uses ChromaDB to store them.

When defining the vectorstore variable, you can also use the persist_directory parameter to save the embeddings to a specific directory.
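A sketch of this step, assuming LangChain's `Chroma.from_documents` and `OpenAIEmbeddings` classes and an `OPENAI_API_KEY` set in the environment. Chroma expects simple scalar metadata values, so the sketch first converts every metadata value to a string; that conversion and the helper names are assumptions. Third-party imports are lazy.

```python
def stringify_metadata(metadata):
    """Convert every metadata value to a string so the store accepts it."""
    return {key: str(value) for key, value in metadata.items()}


def build_vectorstore(chunked_docs, persist_directory=None):
    """Embed the chunks with OpenAI and store them in ChromaDB."""
    # Third-party imports are lazy; they need the LangChain packages installed.
    from langchain_community.vectorstores import Chroma
    from langchain_openai import OpenAIEmbeddings

    for doc in chunked_docs:
        doc.metadata = stringify_metadata(doc.metadata)

    return Chroma.from_documents(
        documents=chunked_docs,
        embedding=OpenAIEmbeddings(),
        persist_directory=persist_directory,  # None keeps the data in memory
    )


# Example usage (the directory name is a placeholder):
# vectorstore = build_vectorstore(chunked_docs, persist_directory="./chroma_db")
```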

Now that the data is stored in ChromaDB in the form of embeddings, define a model to interact with your data. In this way, you can create an LLM application, such as a responsive chatbot. The model can summarize the content of the GitHub issues, give relevant content to specific products, and aid you in identifying unresolved issues.

For example, to create a RAG solution, first use ChromaDB as a retriever for the application.

Define a model and a prompt that instructs the model to behave in a certain way. For instance, use the LangChain Hub to pull the existing rlm prompt.

Run the following script:

Instead of directly responding to the user queries, you can also define a format in which you want the RAG to respond.

Use all this information to build a RAG chain that can reply to your questions. Execute:
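The retriever, prompt, formatter, and chain described above can be sketched together as follows. This assumes the LangChain expression-language components (`RunnablePassthrough`, `StrOutputParser`), `ChatOpenAI`, and that the rlm prompt is `rlm/rag-prompt` on the LangChain Hub; the model name `gpt-3.5-turbo` is also an assumption. Third-party imports are lazy, so `format_docs` can be tried on its own.

```python
from types import SimpleNamespace  # used only for the small demo below


def format_docs(docs):
    """Join the retrieved documents into one context string."""
    return "\n\n".join(doc.page_content for doc in docs)


def build_rag_chain(vectorstore, model_name="gpt-3.5-turbo"):
    """Wire retriever -> prompt -> model -> string output into one chain."""
    from langchain import hub
    from langchain_core.output_parsers import StrOutputParser
    from langchain_core.runnables import RunnablePassthrough
    from langchain_openai import ChatOpenAI

    retriever = vectorstore.as_retriever()
    prompt = hub.pull("rlm/rag-prompt")  # assumed identifier of the rlm prompt
    llm = ChatOpenAI(model=model_name, temperature=0)

    return (
        {"context": retriever | format_docs, "question": RunnablePassthrough()}
        | prompt
        | llm
        | StrOutputParser()
    )


# Demo of the formatter with stand-in documents:
demo_docs = [SimpleNamespace(page_content="Issue 1"), SimpleNamespace(page_content="Issue 2")]
print(format_docs(demo_docs))

# Example usage, given a populated vector store:
# rag_chain = build_rag_chain(vectorstore)
# print(rag_chain.invoke("Which issues are about documentation?"))
```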

Finally, create a console instance to output the data and ask questions to your ChromaDB LLM application.

This code returns the documentation-related issues with their issue numbers, in the format specified in the format_docs function. Check our PyAirbyte GitHub repository demo for detailed steps.

Here are a few real-world use cases of the ChromaDB LLM application:

By storing customer support documentation in ChromaDB, you can boost customer experience. The customer support chatbot can automate the customer handling process by providing replies to user queries, saving time and resources. Based on official documentation and past conversations, the model can respond to your customer’s specific requirements.

You can build a knowledge management system (KMS) by integrating official documents into a single source of truth, like ChromaDB. The KMS can improve data quality, boost internal communication, and enhance collaboration within your organization.

ChromaDB and Airbyte integration can be used to develop code assistance applications. The code assistant is beneficial in improving productivity by detecting errors, suggesting code completion, and enhancing code quality.

Storing multilingual data in ChromaDB enables the development of LLM applications that can translate one language to another. In this way, you can create your own Google Translate to learn or interpret different languages without a tutor.

The ChromaDB LLM application can function as a junior data analyst. For example, the RAG model built using this tutorial can analyze recent GitHub issues, outline resolved ones, and highlight the issue resolution rate. You can customize the application by defining a prompt that allows the RAG to operate as a data analyst.

Through this tutorial, you get a comprehensive overview of how to create a ChromaDB LLM application.

By extracting data from different sources into a vector data store, like ChromaDB, you can create a centralized knowledge base for developing AI-driven applications. This stored data can help you provide quick and efficient responses from your LLM.

Although this tutorial emphasizes ChromaDB, you can also use other vector databases, like Qdrant, which is supported by Airbyte. Learn more about the key differences between ChromaDB and Qdrant.
