Agent Engine Public Beta is now live!

Learn how to create a dbt package to analyze Github data extracted with Airbyte.

Published on May 04, 2022

Summarize this article with:

Today, more companies are built on top of a combination of SaaS applications to optimize every department of their business. These applications are collecting vast amounts of data that data teams are trying to extract.

Cerebrium is a data and AI platform for your business that doesn't require an entire data team. We are migrating our SaaS connectors over to Airbyte to consolidate our client's data across the applications our clients use. Airbyte is an open-source ETL platform that enables you to centralize SaaS application data into your data warehouse. While Airbyte takes care of moving data, to use this data effectively a data team needs to clean and transform it into a format that can be used by downstream applications and dbt is a tool that enables Cerebrium to do this.

Recently we started open-sourcing dbt packages for popular Airbyte sources like GitHub, Google Ads, and more with the aim of creating a community-driven approach to analytics engineering, allowing organizations to realize value from their data quickly.

In this article, we guide you through the process we went through to open-source a dbt package for the Airbyte GitHub connector. We cover best practices, style guidelines, making your packages compatible across different SQL databases, and how to make your package publicly available.

Prerequisites:

Use the dbt init command to create a new dbt project.

dbt init [package_name]

If you would like to submit this package to the dbt package registry, you need to create a public GitHub repository and link it to the package you just created. We recommend you keep the name of your package and your GitHub repository identical.

dbt has written articles on their recommended best practices for dbt, project structure as well as a style guide. Airyte also has written this style guide for your dbt projects. It is a good idea to go follow these guides to keep your package clean and to make it easy for the community to contribute. You can find a summary of these articles below.

The first layer of transformations in a dbt project should set up base models to be used throughout the project. We did the following in the GitHub repository:

All subsequent data models should be built on top of these models, reducing the amount of duplicated code across your project. If you check our dbt models in Github, you might notice we create duplicate columns. We chose to do this to ease codebase maintenance and to allow us to be resilient to changes in Airbyte schemas.

Your dbt project will most likely depend on raw data stored in your database. This can be data from your SaaS applications as well as your database, so there is always the possibility that data changes over time. To avoid breaking changes in your models and reduce duplication, it is easier to update models if the raw data is only referenced in one place. Typically we store this in tmp/ in our models folder and build these as views as you can see from the code in stg_github_stargazers_user_tmp.sql.

select

id as user_id,

site_admin as is_site_admin,

type as user_type,

login as username,

*

from {{ var('stargazers_user') }}

This leads to a faster build time, but it should be noted downstream tools should not query these views as query times are slow. You can see an example of how we achieved in our dbt_project.yml.

models:

airbyte_github:

materialized: table

tmp:

materialized: view

Using the ref function allows dbt to infer dependencies, ensuring that models are built in the correct order. If you aren’t using ref functions in dbt it would make the two previous points irrelevant. You should only be using ref functions in your final models. You can see an example here.

In any programming language, it is easier to split complex, verbose functions into smaller chunks of code to ensure reusability across a project as well as increase readability - dbt is no different. In dbt, you can separate complex models into CTEs (Common Table Expressions). It is often a good idea to break up complex models when:

You can see how we use CTEs with the WITH clause in our github__issues.sql model.

Download our free guide and discover the best approach for your needs, whether it's building your ELT solution in-house or opting for Airbyte Open Source or Airbyte Cloud.

You have built your package and are ready to start testing that it works as intended. It is a good idea to create a new dbt project and have both code editors open side by side to quickly correct any issues. To test your package usage in another dbt project add the location of your package locally and run the command ‘dbt deps’. If you update your package, there is no need to re-run this command, it automatically pulls the latest version when packages.yml has the local setting.

packages:

- local: /Users/Cerebrium/dbt_packages/dbt_github

Remember to define the location of your schema in the project.yml file or via the command line of your new project if your raw data is not in the default location the package expects.

Now that we have created our package and tested it is working, we need to get it ready to serve a wide variety of customers with different data stack setups, such as the data warehouses they are using, the location of their data, etc.

When syncing data via Airbyte, you specify the location of where you would like your data to be stored. Unfortunately, not every user of our package is going to store their raw GitHub data in an Airbyte namespace named github. As such, we need to make the location where dbt finds the raw data configurable. We do this using dbt sources. In the model/ directory, we need to edit the schema.yml file to specify the database location of each source and tables we use in building our model.

Below we are defining the source airbyte_github and telling dbt to look for the raw GitHub data from Airbyte in the github_schema variable. You can specify this variable via the command line or in the project.yml file. If no variable is defined, dbt will use the default github. We do the same for the database name - if it is not defined, dbt uses the target database defined in your profiles.yml file. Lastly, all the tables we define in our source are the tables that we can refer to.

sources:

- name: airbyte_github

schema: "{{ var('github_schema', 'github') }}"

database: "{% if target.type != 'spark'%}{{ var('github_database', target.database) }}{% endif %}"

tables:

- name: pull_requests

- name: issues_pull_request

- name: issues

- name: issues_user

- name: labels

- name: issue_assignees

- name: pull_request_stats

- name: reviews

- name: commits

- name: commits_author

- name: commits_committer

- name: commits_commit

- name: commit_comments_user

- name: commit_comment_reactions_user

- name: issue_comment_reactions_user

- name: issue_events_issue_user

- name: issue_reactions_user

- name: pull_request_comment_reactions_user

- name: pull_request_commits

- name: pull_requests_user

- name: review_comments_user

- name: reviews_user

- name: stargazers

- name: stargazers_user

- name: issues_assignees

- name: issues_labels

Many SQL functions are specific to a particular database. For example, the function name and order of arguments to calculate the difference between two dates varies between Redshift, Snowflake, and BigQuery, and no similar function exists on Postgres! dbt has created a package, dbt_utils, to handle common use cases. Here is a code snippet of use using the datediff macro in the github__pull_requests.sql model.

({{ dbt_utils.datediff('pull_requests.created_at_timestamp', 'pull_requests.closed_at_timestamp', 'minute') }}/1440) as days_pull_request_open,

({{ dbt_utils.datediff('pull_requests.created_at_timestamp', 'pull_request_reviews.first_review', 'minute') }}/1440) as days_until_first_review

You can read more examples here of how you can handle database edge cases with dbt here.

dbt provides a framework to test assumptions about the results generated by a model as well as the ability to add documentation to your models in your schema.yml file. Some data warehouses, such as Snowflake, pull this meta-information directly through your tables so analysts better understand table structures and columns. We add our tests in our schema.yml file.

models:

- name: github__pull_requests

description: "A table storing all pull request information of your repositories in GitHub. Use this to extract information regarding pull requests."

columns:

- name: issue_id

description: "The unique identifier for the issue being resolved"

tests:

- unique

- not_null

- name: author_user_id

description: "The unique identifier for the user who created the pull request"

tests:

- not_null

Adding tests to a project helps provide various guarantees:

A README is crucial in any open-source project and allows the community to understand the core project aspects and easily get started with development. To write an effective README, you should ensure the document addresses the following:

Here is a link to an open-source project that can help you format your README easily. However, you can see how we wrote ours here and use it as a base.

By defining metrics in dbt projects, you encode crucial business logic in tested, version-controlled code that supports zero or more dimensions. Some examples of the metrics we included are:

We define metrics in the schema.yml file which requires a few key values for each metric you define. This includes the source model, the aggregate function, name, description, etc. Currently, dbt metrics are in beta and are subject to change.

metrics:

- name: number_of_pull_requests

label: Number of pull requests

model: ref('github__pull_requests')

description: "The number of pull requests"

type: count

sql: issue_id

timestamp: created_at_timestamp

time_grains: [day, week, month]

dimensions:

- state

- name: average_commits_per_pull_request

label: Average number of commits per pull request

model: ref('github__pull_requests')

description: "The average number of commits per pull request"

type: average

sql: commits

timestamp: created_at_timestamp

time_grains: [day, week, month]

You can expose these metrics definitions to downstream tooling, which drives consistency and precision in metric reporting. Check out this article on how you can use the headless BI Platform, Cube, to get up and running with dashboards using dbt metrics in 5 minutes.

Create a new release once you are ready for others to use your work! Be sure to use semantic versioning when naming your release. In particular, if new changes will cause errors for users of earlier versions of the package, be sure to use at least a minor release (e.g. go from 0.1.1 to 0.2.0).

The release notes should contain an overview of the changes introduced in the new version. You can see our release here.

Upload your package to dbt Hub (the package registry of dbt) to make your package available to the wider dbt community. To add your package, fork the dbt hub repository, alter the hub.json file, create a PR against main in your fork and then create a PR from your fork to the original repo. dbt usually takes 1 business day to give any commentary and approve your PR.

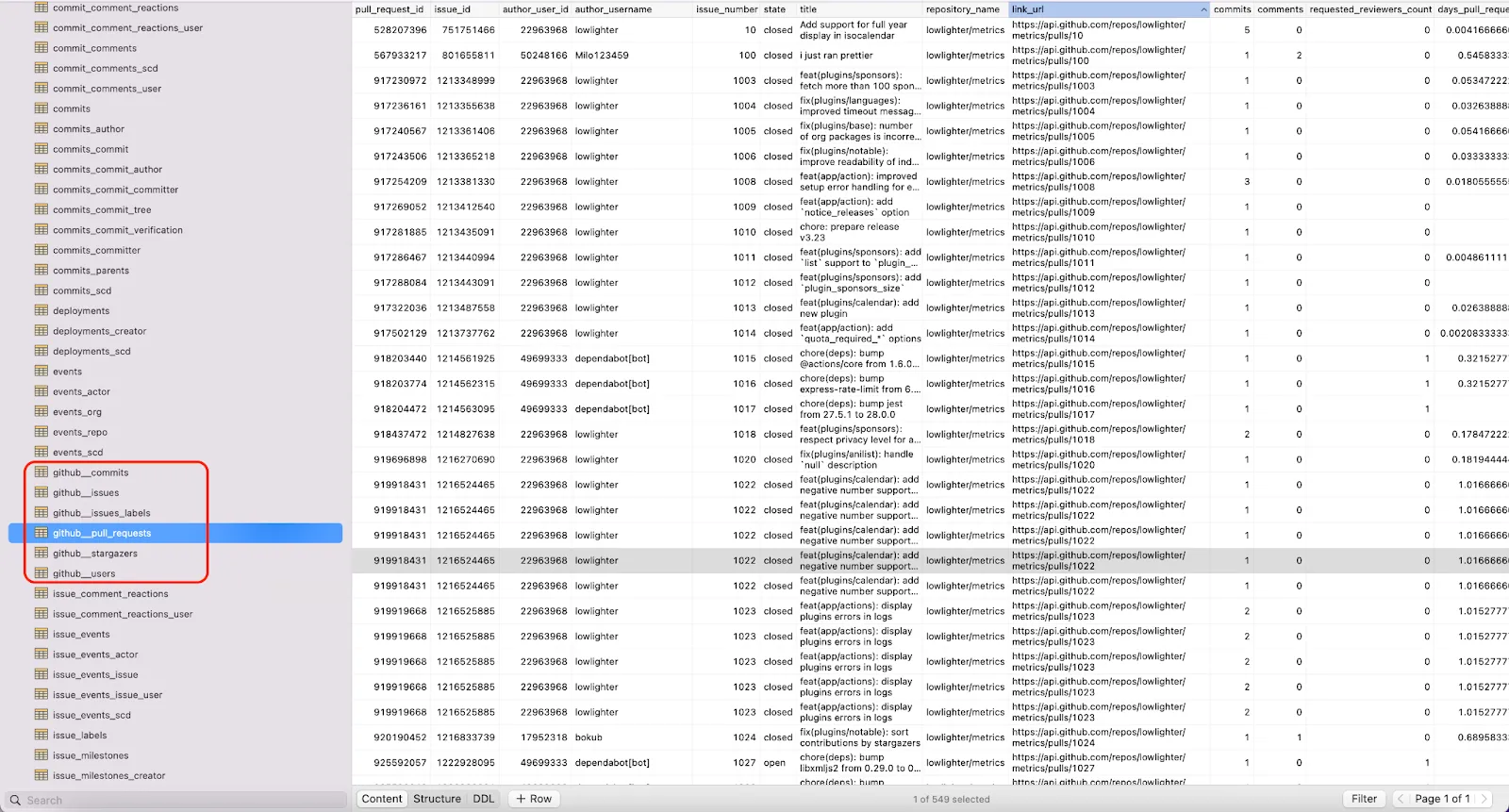

Below is the finished outcome of our package on an open-source Github repository. You can check out our package on dbt hub here.

Currently, our team is working with Airbyte on releasing packages for the most popular data sources. These include Hubspot, Salesforce, and Facebook Ads. We will also continue to update our existing models based on feedback from the community. You can find all the dbt packages for Airbyte connectors made by Cerebrium on our Github account.

If you would like to give us feedback or recommend other connectors, please let us know here. We look forward to seeing what you create and hope you will continue to build this community of open-source analytics engineering with us! If you have any questions you can join Cerebrium and Airbyte Slack communities.

Michael Louis is the Co-founder and CEO of Cerebrium, a modern data and AI platform for businesses that doesn’t require a single line of code or an entire data team.

Download our free guide and discover the best approach for your needs, whether it's building your ELT solution in-house or opting for Airbyte Open Source or Airbyte Cloud.

Learn how to build robust ETL pipelines using Python, Docker, and Airbyte. A guide for data engineers covering setup, implementation, & best practices.

Using the Airbyte GitHub connector and Metabase, we can create insightful dashboards for GitHub projects.

Learn how to build an ELT pipeline to discover GitHub users that have contributed to the Prefect, Airbyte, and dbt repositories.