Build vs Buy: Data Pipeline Costs & Considerations

Your business relies on data from many sources. To consolidate it, you need to deploy data pipelines that ingest data from these diverse sources and make it available to downstream applications.

When choosing a data pipeline solution, you can either build a custom solution or buy an existing one. Each path has distinct advantages and challenges, and your choice can significantly affect your resources, efficiency, and overall business success.

This article examines the key considerations and costs involved in the build vs. buy decision for data pipelines.

What Is the Build vs. Buy Framework?

The build vs. buy framework for data pipelines is a structured approach that helps you evaluate whether to build a pipeline yourself or buy a pre-built solution.

Build: This approach involves developing an in-house custom data pipeline. You may choose to build pipelines to achieve maximum control, customization, and alignment with specific business needs. However, this can be resource-intensive, requiring significant time, expertise, and ongoing maintenance.

Buy: Alternatively, you can opt for third-party solutions that provide pre-built connectors for building data pipelines. Buying can lead to faster implementation, a reduced maintenance burden, and access to advanced features without extensive in-house development. However, it may come with limitations in customization and flexibility.

The table below compares the key differences between building and buying a data pipeline.

What Is a Data Pipeline?

A data pipeline is a systematic approach to collecting, processing, and moving data from various sources to a destination where it can be analyzed and utilized effectively. Setting up a well-designed data pipeline allows you to streamline your data management processes, gain valuable insights from your data assets, and make informed decisions.

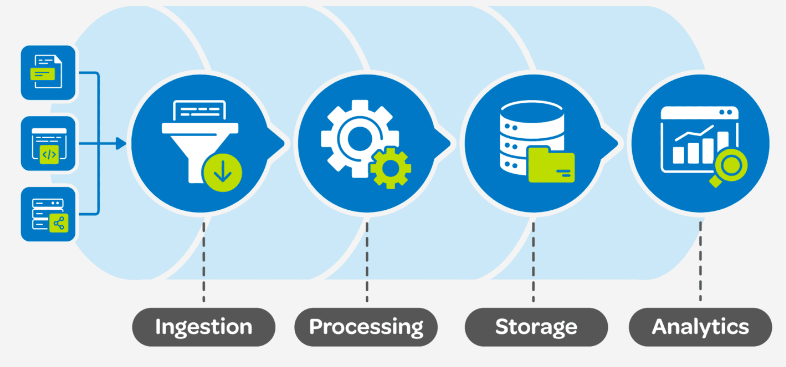

When setting up a data pipeline, you will typically go through several key phases:

Data Ingestion: The first phase in a data pipeline is data ingestion, where you bring data from multiple sources into the pipeline for processing. These sources could be databases, applications, social media platforms, or any other data repositories relevant to your organization.

Processing: Once you fetch the data from the source, you need to process it to clean, transform, and enrich the data. This phase may involve tasks such as data cleansing, normalization, aggregation, and the application of business rules to prepare the data for analysis.

Storage: After processing the data, you store it in a suitable data storage solution such as a data warehouse, data lake, or database. This allows you to efficiently access and retrieve the data for further analysis or reporting.

Analytics: In this phase, you analyze the stored data to extract insights, identify patterns, and make data-driven decisions. You may use tools like business intelligence software or data visualization platforms to derive valuable information from the data.
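To make the four phases concrete, here is a minimal Python sketch of a toy pipeline. The source records, field names, and the `orders` table are hypothetical, and an in-memory SQLite database stands in for a data warehouse; a production pipeline would read from real systems and load into your actual storage layer.

```python
import sqlite3


def ingest() -> list[dict]:
    """Ingestion: pull raw records from two hypothetical sources into one list."""
    app_db = [{"customer": " Alice ", "amount": "120.50"}, {"customer": "bob", "amount": "80"}]
    csv_export = [{"customer": "ALICE", "amount": "30"}, {"customer": "carol", "amount": None}]
    return app_db + csv_export


def process(records: list[dict]) -> list[tuple[str, float]]:
    """Processing: drop incomplete rows, normalize names, and cast amounts to numbers."""
    cleaned = []
    for r in records:
        if r["amount"] is None:
            continue  # data cleansing: skip rows with a missing amount
        cleaned.append((r["customer"].strip().title(), float(r["amount"])))
    return cleaned


def store(rows: list[tuple[str, float]], conn: sqlite3.Connection) -> None:
    """Storage: load processed rows into a table (SQLite stands in for a warehouse)."""
    conn.execute("CREATE TABLE IF NOT EXISTS orders (customer TEXT, amount REAL)")
    conn.executemany("INSERT INTO orders VALUES (?, ?)", rows)
    conn.commit()


def analyze(conn: sqlite3.Connection) -> dict[str, float]:
    """Analytics: aggregate total revenue per customer."""
    cur = conn.execute(
        "SELECT customer, SUM(amount) FROM orders GROUP BY customer ORDER BY customer"
    )
    return dict(cur.fetchall())


conn = sqlite3.connect(":memory:")
store(process(ingest()), conn)
print(analyze(conn))  # {'Alice': 150.5, 'Bob': 80.0}
```

Keeping each phase in its own function mirrors the phases above and makes it easier to test or replace one stage (for example, swapping SQLite for a real warehouse) without touching the others.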

Build vs. Buy Data Pipelines: Why Does the Debate Occur?

The main difference between building and buying a data pipeline is that building allows for complete customization and control but requires significant time, expertise, and resources, while buying offers a ready-made solution with faster deployment and ongoing support but may have limitations in flexibility.

In the past, many organizations built their data pipelines from scratch to ensure they met their unique requirements. However, as data volumes grow, the process of building and maintaining custom data pipelines can become time-consuming and resource-intensive. This has led to the emergence of various data pipeline solutions and services in the market that offer pre-built functionalities.

Consequently, the debate about building vs. buying data pipelines has gained prominence. This debate centers around several key considerations: cost, time to market, flexibility, and scalability. On the one hand, buying a pre-built solution allows you to leverage industry best practices, reduce development time, and benefit from continuous updates and support.

On the other hand, building a custom pipeline offers you complete control and the ability to design a data pipeline that perfectly aligns with your specific data workflows and long-term strategic goals. Therefore, the decision isn't straightforward and depends heavily on your organization's existing capabilities, budget constraints, and specific business objectives.

Should You Build Vs. Buy Data Pipelines?

The decision to build or buy data pipelines is a critical one that can have significant implications for your organization. There are pros and cons to both approaches, and the right choice will depend on your specific business needs.

Pros of Building Data Pipelines

Let’s explore the benefits of building data pipelines in detail:

Tailoring to Specific Business Needs

Building your own data pipeline allows you to customize every aspect to fit your organization’s unique requirements. You can design the pipeline to handle specific data types, processing workflows, and transformations that are critical to your operations. This level of customization ensures that the pipeline aligns well with your business processes, enabling you to optimize performance and achieve desired outcomes.

Integration with Existing Infrastructure

When you build a data pipeline, you can integrate it seamlessly with your existing systems and infrastructure. You can design the pipeline to work cohesively with your current databases and applications, which can improve data consistency and reliability. This capability is particularly beneficial if your organization relies on a complex ecosystem of tools and platforms that need to communicate effectively.

Full Control over the Technology Stack

One of the key advantages of building data pipelines is the ability to have full control over the technology stack used in the data processing workflows. You can choose the tools, frameworks, and technologies that best suit your requirements and align with your strategy. This control enables you to optimize performance, scalability, and security based on your organization's specific needs, ensuring that the data pipeline operates efficiently.

In-House Expertise and Knowledge Retention

Building data pipelines internally fosters the development of in-house expertise and knowledge retention. Your team gains valuable experience in designing, implementing, and maintaining data pipelines, enhancing their skills and capabilities in data management. This internal expertise not only supports the successful operation of the data pipeline but also enables ongoing improvement based on your organization's evolving data needs.

Cons of Building Data Pipelines

While building your data pipelines can offer several benefits, it's also important to consider the potential challenges. Let's explore some of the limitations associated with this process.

Development Time and Cost

Developing data pipelines from scratch can be time-consuming and resource-intensive, requiring significant investment in planning, design, and testing. This can result in delays in deploying the pipelines and increased development costs. You need to weigh these factors against the potential benefits to ensure that building a pipeline is the right choice for your organization.

Ongoing Maintenance

Once a data pipeline is built, it needs ongoing support to ensure its continued operation and performance. Maintenance tasks may include monitoring data flow, troubleshooting issues, optimizing processing workflows, and implementing updates. If your organization lacks sufficient resources or expertise, you may find it challenging to keep the pipeline running smoothly.
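As an illustration of what monitoring data flow can mean in practice, the sketch below checks a pipeline's run history for two common problems: stale data and a sudden drop in row volume. The `SyncRun` record, the 24-hour staleness limit, and the 50% volume threshold are assumptions chosen for this example, not recommended defaults.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass
class SyncRun:
    """One pipeline run as recorded in a hypothetical run log."""
    finished_at: datetime
    rows_loaded: int


def check_health(runs: list[SyncRun], now: datetime,
                 max_staleness: timedelta = timedelta(hours=24),
                 min_row_ratio: float = 0.5) -> list[str]:
    """Return alert messages for stale data or an unusual drop in row volume."""
    if not runs:
        return ["no runs recorded"]
    alerts = []
    latest = max(runs, key=lambda r: r.finished_at)
    # Freshness: has the pipeline delivered data recently enough?
    if now - latest.finished_at > max_staleness:
        alerts.append(f"stale: last run finished {now - latest.finished_at} ago")
    # Volume: compare the latest run against the average of earlier runs.
    previous = [r.rows_loaded for r in runs if r is not latest]
    if previous:
        baseline = sum(previous) / len(previous)
        if latest.rows_loaded < min_row_ratio * baseline:
            alerts.append(f"volume drop: {latest.rows_loaded} rows vs ~{baseline:.0f} average")
    return alerts


now = datetime(2024, 1, 10, 12, tzinfo=timezone.utc)
runs = [SyncRun(now - timedelta(days=d), 1000) for d in (3, 2)]
runs.append(SyncRun(now - timedelta(hours=30), 200))  # late and much smaller run
print(check_health(runs, now))  # both the staleness and the volume alerts fire
```

In a real deployment, the alerts would typically be routed to a paging or chat tool, and troubleshooting would start from whichever check fired.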

Need for Skilled Engineers

Building and maintaining data pipelines requires a team of skilled engineers with expertise in data engineering and related technologies. Demand for skilled engineers in the data field is high, which makes it challenging to attract and retain top talent. Without a capable team, your ability to build and maintain an effective data pipeline could suffer, leading to setbacks in your data strategy.

Potential Technical Debt

As with any custom development project, building your own data pipelines can accumulate technical debt if not managed properly, especially when quick or suboptimal decisions are made during development. Over time, this technical debt can manifest as increased complexity and higher maintenance costs, requiring additional effort to rectify and optimize the data pipeline in the future.

Pros of Buying Data Pipelines

Let’s explore the key benefits of buying data pipelines in detail.

Reduced Time to Implementation

Commercial data pipeline solutions are ready to deploy and typically require minimal configuration, so you can integrate them into your existing infrastructure and start processing data and deriving insights without lengthy development cycles.

Instant Access to Advanced Features

Buying a data pipeline provides instant access to various advanced features that may be difficult or time-consuming to develop. Many commercial solutions have enhanced functionalities, such as automated workflows, transformation capabilities, and improved monitoring and alerting. You can enhance your data management processes by leveraging these features without requiring extensive development efforts.

High Availability and Scalability

Purchased data pipelines typically have built-in features for high availability and scalability, ensuring reliable performance even under heavy data loads. This enables you to process data efficiently, maintain performance under varying workloads, and scale your operations as your business expands.

Regular Updates and Improvements

Another significant advantage of purchasing data pipelines is the continuous updates and improvements offered by the vendor. Software providers regularly release updates to enhance functionality, fix bugs, and improve security. This ensures your data pipeline remains robust and aligned with your evolving business requirements without the need for you to manage these updates internally.

Cons of Buying Data Pipelines

Let's explore the limitations of buying data pipelines that you should be aware of:

Licensing Fees

When you purchase a pre-built solution, you may need to pay upfront licensing fees or ongoing subscription costs to use the software. Additionally, these fees can add up quickly if you have a large-scale data infrastructure. The licensing model may be based on factors like the number of users, data volume, or processing power, so the costs can be difficult to predict long term.
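To see why volume-based pricing can be hard to forecast, consider a simple projection. The per-GB price, starting volume, and 60% annual growth rate below are hypothetical; real vendor pricing models differ and often include tiers, minimums, or discounts.

```python
def project_subscription_cost(monthly_gb: float, price_per_gb: float,
                              annual_growth: float, years: int) -> list[float]:
    """Yearly cost under a simple per-GB pricing model with compounding data growth."""
    costs = []
    for year in range(years):
        # Monthly data volume in this year, after compounding growth.
        volume = monthly_gb * (1 + annual_growth) ** year
        costs.append(round(volume * price_per_gb * 12, 2))
    return costs


# Hypothetical inputs: 500 GB/month at $2/GB, data volume growing 60% per year.
print(project_subscription_cost(500, 2.0, 0.60, 3))  # [12000.0, 19200.0, 30720.0]
```

Under these assumptions, the annual bill more than doubles within two years even though the unit price never changes, which is why it helps to model growth before committing to a pricing plan.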

Limited Control over Technology

When you buy a data pipeline, you have limited control over the solution's underlying technology. You are bound by the constraints and limitations of the vendor's platform, which may not always align perfectly with your specific requirements or workflows. Customizations might be restricted or incur additional costs, limiting your ability to tailor the solution to your unique business needs.

Risk of Vendor Lock-in

Purchasing a data pipeline can lead to vendor lock-in, a situation where you become heavily dependent on the vendor's solution and find it challenging to switch to an alternative provider. If the vendor's solution does not evolve in line with your requirements or industry trends, you may find yourself constrained by the limitations of the existing data pipeline.

Key Considerations for Build Vs. Buy Decision Making

When deciding whether to build or buy your data pipelines, there are several key factors to consider. Here are a few of them:

Business Needs

Your business needs should be the foremost consideration in this decision. Assess whether the data pipeline you require is unique to your organization or if existing solutions can meet your demands. Consider the nature of your data, the volume of data you need to process, the frequency of data updates, and the level of customization required.

Building a custom pipeline may be necessary if your organization has specific data processing requirements that off-the-shelf solutions cannot fulfill. Conversely, buying can save time and resources if your needs align with standard functionalities offered by commercial solutions.

Budget and Resources

Budget constraints and resource availability are crucial factors in the build vs. buy analysis. Building a custom solution may require a significant upfront investment in terms of time, expertise, and development costs.

On the other hand, purchasing a pre-built solution may involve licensing fees and ongoing maintenance costs. You should analyze the long-term financial implications of each option.
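One way to analyze the long-term financial implications is to compare cumulative costs year by year. The sketch below uses made-up figures (an upfront build cost plus annual maintenance versus a small onboarding cost plus an annual subscription) and reports the first year in which building becomes no more expensive than buying.

```python
from typing import Optional


def cumulative_costs(upfront: float, annual: float, years: int) -> list[float]:
    """Running total of spend at the end of each year."""
    return [upfront + annual * (y + 1) for y in range(years)]


def first_year_cheaper(build: list[float], buy: list[float]) -> Optional[int]:
    """First year (1-based) in which cumulative build cost is at or below buy cost."""
    for year, (b, p) in enumerate(zip(build, buy), start=1):
        if b <= p:
            return year
    return None  # building never catches up within the horizon


# Hypothetical figures: building costs $300k upfront plus $150k/yr to maintain;
# buying costs $20k to onboard plus $250k/yr in subscription fees.
build = cumulative_costs(300_000, 150_000, 5)
buy = cumulative_costs(20_000, 250_000, 5)
print(first_year_cheaper(build, buy))  # 3
```

With these assumed numbers, building breaks even in year 3. Changing any input, such as engineering salaries or subscription growth, can move or eliminate the break-even point, so it is worth rerunning the comparison with your own estimates.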

Complexity

Evaluate the complexity of your data processing requirements. Building a custom data pipeline gives you full control over the implementation, allowing you to address complex data processing scenarios specific to your business. However, this approach can be time-consuming and may require specialized expertise.

Buying a data pipeline offers a more straightforward and quick implementation but may lack the flexibility to handle complex data processing tasks.

Scalability

Scalability is crucial, especially as your organization grows and data volumes increase. A custom-built data pipeline can be designed to scale with your business as it expands, providing greater flexibility and long-term viability.

Conversely, a purchased solution may have limitations in terms of scalability, potentially leading to costly upgrades in the future.

Data Connectors

Evaluate the data connectors required for your data pipeline. Consider their compatibility with your existing data sources and systems. Building a custom data pipeline allows you to create tailored connectors to ensure seamless data integration, providing more flexibility in handling diverse data sources and formats.

In contrast, a pre-built solution may offer a range of connectors but may not fully align with your specific data sources and requirements.
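Building a tailored connector usually comes down to implementing a small, consistent interface for each source. The sketch below defines a hypothetical `SourceConnector` contract with `check()` and `read()` methods and one implementation for a paginated internal API. It is illustrative only and is not the interface of Airbyte's Connector Development Kit or any other specific framework.

```python
from abc import ABC, abstractmethod
from typing import Iterator


class SourceConnector(ABC):
    """Hypothetical minimal contract that every custom source connector implements."""

    @abstractmethod
    def check(self) -> bool:
        """Verify that the source is reachable with the given configuration."""

    @abstractmethod
    def read(self) -> Iterator[dict]:
        """Yield records one at a time so large sources need not fit in memory."""


class PagedApiConnector(SourceConnector):
    """Example connector for an internal API that returns results page by page."""

    def __init__(self, pages: list[list[dict]]):
        # Stands in for HTTP calls; a real connector would request each page.
        self._pages = pages

    def check(self) -> bool:
        return self._pages is not None

    def read(self) -> Iterator[dict]:
        for page in self._pages:
            for record in page:
                # Source-specific mapping: rename fields and normalize values.
                yield {"id": record["ID"], "name": record["Name"].lower()}


source = PagedApiConnector([[{"ID": 1, "Name": "Widget"}], [{"ID": 2, "Name": "Gadget"}]])
if source.check():
    print(list(source.read()))  # [{'id': 1, 'name': 'widget'}, {'id': 2, 'name': 'gadget'}]
```

Keeping the source-specific mapping inside `read()` is what lets a custom connector handle unusual field names or formats that a generic pre-built connector might not cover.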

How Can Airbyte Help with Your Data Pipeline Needs?

Manually building data pipelines involves significant custom coding and development effort, which can be time-consuming and challenging. To overcome this, you can leverage platforms like Airbyte to simplify the complexities of data pipeline creation.

Airbyte is a robust data integration and replication platform that provides a structured and automated approach to collecting and processing data, ensuring that sensitive information is handled securely.

It can help you meet your data pipeline needs, offering both an open-source solution and a cloud-based option tailored to your requirements.

Airbyte Open Source

With Airbyte's open-source platform, you gain access to a flexible and customizable data pipeline solution. This option allows you to easily connect to various data sources and destinations, enabling you to ingest, transform, and manage your data efficiently.

You can leverage a wide range of pre-built connectors, which saves you time and effort in the setup process. Additionally, being open-source means you can modify the platform to suit your specific needs. This ensures that your data pipeline can evolve alongside your business.

Airbyte Cloud

If you prefer a more managed approach, Airbyte Cloud offers a fully hosted solution that further simplifies the data pipeline process. With this option, you can focus on deriving insights from your data rather than managing infrastructure.

Airbyte Cloud offers automatic updates, scalability, and enhanced security features, allowing you to integrate data from multiple sources seamlessly without the overhead of managing infrastructure.

Here are the key features of Airbyte that simplify the process of building data pipelines:

Connectors: Airbyte provides an extensive library of more than 350 pre-built connectors, enabling you to connect and synchronize data from multiple sources seamlessly. These connectors help you integrate data from diverse sources, including databases, files, APIs, and more, into a centralized repository without extensive coding.

Seamless Integration with AI Frameworks: Airbyte allows you to integrate with popular AI and machine learning frameworks, such as LangChain and LlamaIndex. This enables you to build retrieval-based LLM (Large Language Model) applications on top of the data synced using Airbyte.

Change Data Capture: Airbyte's Change Data Capture (CDC) feature enables you to capture all data changes at the source and reflect them in the destination system. This helps maintain data consistency across platforms.

Development flexibility: Airbyte offers a user-friendly interface and intuitive workflows, making it accessible to a wide range of users. You can develop data pipelines through several options, such as the UI, API, Terraform Provider, and PyAirbyte, choosing whichever best fits your workflow.

Advanced Transformations: Airbyte supports integration with popular tools like dbt (data build tool). This enables you to leverage dbt's powerful features for customized data transformations within your Airbyte pipelines.

Alerts and Monitoring: Airbyte automates many processes involved in data integration, ensuring high-quality data flows. You can monitor the status of your sync jobs and receive notifications for any issues, which helps maintain data integrity.

Robust Security Measures: Airbyte incorporates various security features such as data encryption, audit trails, and role-based access control (RBAC). These help protect your sensitive data and support compliance with regulations such as GDPR and HIPAA.
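To illustrate the idea behind Change Data Capture, the following sketch replays an ordered stream of insert, update, and delete events against a destination keyed by primary key. The event format (`op`, `key`, `row`) is a simplified assumption for this example; it is not Airbyte's internal CDC format, and real CDC tools read changes from database logs rather than from a Python list.

```python
from typing import Any


def apply_cdc(destination: dict[Any, dict], events: list[dict]) -> dict[Any, dict]:
    """Replay change events in order so the destination mirrors the source."""
    for event in events:
        key = event["key"]
        if event["op"] in ("insert", "update"):
            destination[key] = event["row"]  # upsert the latest version of the row
        elif event["op"] == "delete":
            destination.pop(key, None)  # tolerate deletes for rows never synced
        else:
            raise ValueError(f"unknown op: {event['op']}")
    return destination


events = [
    {"op": "insert", "key": 1, "row": {"email": "a@example.com"}},
    {"op": "insert", "key": 2, "row": {"email": "b@example.com"}},
    {"op": "update", "key": 1, "row": {"email": "a@new.example.com"}},
    {"op": "delete", "key": 2},
]
print(apply_cdc({}, events))  # {1: {'email': 'a@new.example.com'}}
```

Applying events strictly in order matters: replaying the update before the insert, for example, would leave the destination with stale data.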

Summary

The decision to build or buy a data pipeline is a strategic one that requires consideration of your organization's specific needs, resources, and long-term goals. While building offers greater control and customization, it requires substantial investments and ongoing maintenance. Buying provides faster implementation and vendor support but may limit customization options.

However, opting for a ready-to-go solution like Airbyte Cloud can offer a streamlined and efficient approach to managing your data pipelines. It helps you enhance productivity, reduce implementation time, and drive better organizational outcomes.

FAQs

How much does it cost to build a data pipeline?

The cost of building a data pipeline can vary significantly based on its complexity and the specific requirements of your organization. According to a Wakefield Research survey, the typical cost for a team of data engineers to build and maintain data pipelines is roughly $520,000 per year.

How long does it take to build a data pipeline?

Building a data pipeline takes 3 to 4 weeks on average for a basic setup. However, more complex pipelines can take significantly longer, potentially extending to several months.

Build Vs. Buy: Which is better for data pipelines?

Building offers customization and control but requires significant time, expertise, and maintenance effort. Buying pre-built data pipelines can save time and effort but may bring limited customization and ongoing subscription costs. The best choice depends on your specific needs and resources.

Why is Airbyte the best data pipeline tool?

Airbyte is one of the best data pipeline tools due to its extensive features and flexibility. It offers over 350 pre-built connectors, enabling seamless data integration from various sources without extensive coding. Its low-code Connector Development Kit (CDK) further enhances this ease of use, allowing you to create custom connectors quickly.