Data Engineering Roadmap for Beginners in 2026

✨ AI Generated Summary

Modern data engineering in 2026 requires expertise beyond traditional ETL, including real-time streaming, AI automation, cloud-native platforms, and strong governance to avoid technical debt and burnout. Key skills include:

- Proficiency in Python and SQL for data manipulation, automation, and querying.

- Understanding of big data frameworks (Spark, Flink, Kafka) and cloud platforms (AWS, Azure, GCP).

- Familiarity with AI/ML integration, vector databases, and MLOps for scalable, real-time data workflows.

- Use of tools like Airbyte for efficient, compliant data integration and hands-on experience.

Building a solid foundation in programming, data structures, cloud services, and practical projects is essential for launching and advancing a data engineering career.

Modern data professionals face unprecedented pressures: managing explosive data growth, mastering rapidly evolving technologies, and building reliable systems. The data-engineering landscape of 2026 demands more than traditional ETL knowledge.

Today's engineers must navigate real-time streaming architectures, AI-powered automation, cloud-native platforms, and sophisticated governance frameworks. Poor infrastructure decisions create cascading problems: failed machine-learning deployments, unreliable analytics, and technical debt that consumes engineering resources without delivering business value.

This roadmap addresses the realities facing aspiring data engineers in 2026. Whether you're transitioning from another technical field, entering the workforce, or updating your skills for career advancement, this guide provides a framework for building expertise systematically while avoiding common pitfalls that derail technical careers.

What Is Data Engineering and Why Does It Matter in Today's Technology Landscape?

Data engineering is a dynamic field dedicated to designing, building, and maintaining robust data systems that store, process, and analyze vast amounts of data. It serves as the backbone of data science and analytics, enabling data scientists and analysts to extract meaningful insights from raw data.

Who Are Data Engineers and What Responsibilities Do They Handle Daily?

Data engineers are the architects of our data-driven world. They design, build, and maintain systems that move, store, and process data. Their primary responsibilities involve managing data pipelines, databases, and data warehouses.

Daily Tasks and Responsibilities

On any given day, a data engineer might design a cloud-based pipeline to ingest streaming data, automate ETL processes, or develop data models that make transformations faster and easier to maintain. They troubleshoot workflows, monitor job failures, and optimize queries across relational databases or data warehouses, keeping stored data intact and pipelines efficient.

Their role is crucial in the broader context of data science, ensuring data is prepared and available for analysis by data scientists. Real-time data streaming is particularly important in IoT applications, which use tools like Google Cloud Pub/Sub and Azure Event Hubs to manage the influx of live data.

What Are the Current Challenges Facing Data Engineers in 2026?

Data engineers face a complex landscape of technical and organizational challenges that significantly impact their effectiveness and job satisfaction:

- Burnout crisis: Many data engineers report burnout symptoms and plan to leave their current positions within the next year.

- Technical debt: One of the most persistent challenges. Organizations often skip fundamental processes like proper data modeling in favor of short-term ROI, which creates long-term maintenance burdens that fall disproportionately on data professionals.

- Data quality issues: These consistently rank as the most significant operational challenge, affecting the majority of data-engineering workflows. Poor data quality creates cascading problems throughout the entire data ecosystem, from inaccurate machine-learning models to unreliable business-intelligence reports.

- Manual processes: Manual work consumes an overwhelming portion of data engineers' time, and many cite it as a primary driver of burnout. Only a small fraction of data teams' time is spent on high-value activities.

- Access barriers: Restricted access and organizational silos limit engineers' effectiveness. Engineers are often assigned ambitious projects without access to the data they need.

- Technological change: The rapid pace of technological change creates continuous pressure for skills development, forcing engineers to balance staying current with delivering existing projects.

How Are AI and Machine Learning Reshaping Data Engineering Practices?

The integration of artificial intelligence and machine learning capabilities into data engineering workflows is transforming the field. These technologies introduce new requirements and opportunities that data engineers must understand and implement.

Vector databases like Chroma, Pinecone, and Milvus store and index high-dimensional vector data for similarity search at scale. MLOps integration obliges engineers to build pipelines that cover the entire ML lifecycle: feature stores, automated training, model versioning, and continuous deployment.
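
To make similarity search concrete, here is a minimal brute-force sketch in plain Python. It is not how Chroma, Pinecone, or Milvus work internally (those rely on approximate indexes to scale); the function names, the document IDs, and the tiny 3-dimensional vectors are all hypothetical and exist only for illustration.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def top_k(query, index, k=2):
    """Brute-force search: score every stored vector, keep the best k IDs."""
    scored = [(cosine_similarity(query, vec), doc_id) for doc_id, vec in index.items()]
    scored.sort(reverse=True)
    return [doc_id for _, doc_id in scored[:k]]

# Hypothetical 3-dimensional "embeddings"; real ones have hundreds of dimensions.
index = {
    "invoice_faq": [0.9, 0.1, 0.0],
    "refund_policy": [0.8, 0.2, 0.1],
    "office_map": [0.0, 0.1, 0.9],
}

print(top_k([1.0, 0.0, 0.0], index))  # → ['invoice_faq', 'refund_policy']
```

Scoring every vector is fine for a handful of documents; a vector database exists because this linear scan becomes too slow at millions of vectors.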

Real-time AI processing demands ultra-low-latency architectures for fraud detection, dynamic pricing, and other millisecond-level decisions. AI-powered automation optimizes pipelines, predicts failures, and improves data-quality monitoring automatically.

Knowledge graphs provide structured, contextual data representations to support advanced reasoning. Generative-AI workloads introduce new requirements for unstructured-data pipelines, vector storage, and high-performance compute.

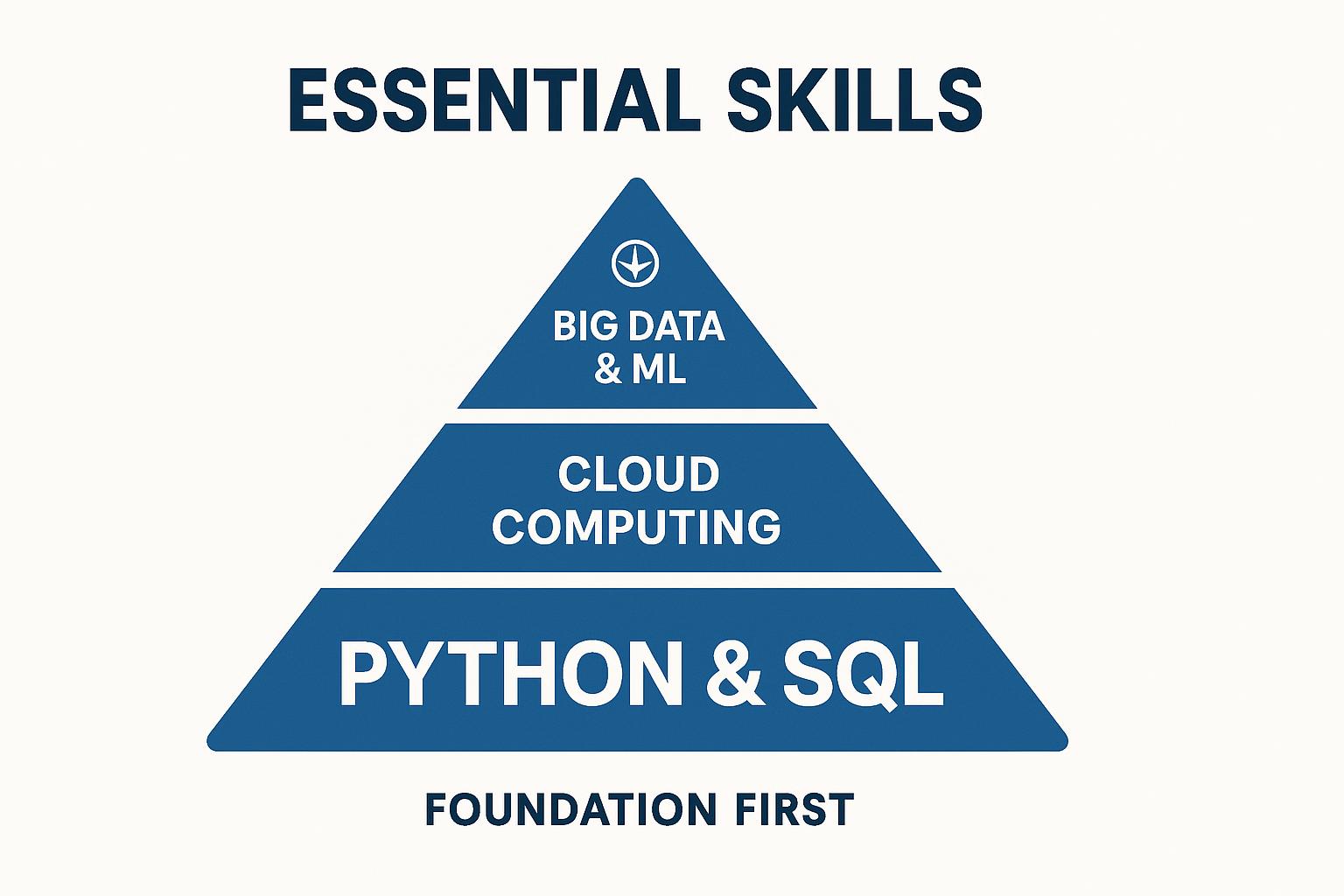

What Should You Know About Building Your Data Engineering Foundation?

Before diving into tools or projects, build a rock-solid base of fundamentals. This foundation will support all your future learning and professional growth in the field.

1. Learn Core Programming Languages

Python and SQL are non-negotiable. Start with functions, loops, and file manipulation in Python. Practice JOIN, WHERE, and GROUP BY in SQL.
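
As a starting exercise, the sketch below combines a small Python function and loop with a SQL query that uses JOIN, WHERE, and GROUP BY. It runs against an in-memory SQLite database from Python's standard library; the table names, columns, and rows are made up for illustration.

```python
import sqlite3

def load_rows(conn, table, rows):
    """Insert a list of tuples into `table` using a parameterized statement."""
    placeholders = ", ".join("?" for _ in rows[0])
    conn.executemany(f"INSERT INTO {table} VALUES ({placeholders})", rows)

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, country TEXT)")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, amount REAL)")

# Sample data (hypothetical).
load_rows(conn, "customers", [(1, "DE"), (2, "US"), (3, "US")])
load_rows(conn, "orders", [(1, 1, 20.0), (2, 2, 35.0), (3, 3, 15.0), (4, 2, 5.0)])

# JOIN links each order to its customer, WHERE filters out small orders,
# GROUP BY aggregates the remaining orders per country.
query = """
    SELECT c.country, COUNT(*) AS order_count, SUM(o.amount) AS revenue
    FROM orders AS o
    JOIN customers AS c ON c.id = o.customer_id
    WHERE o.amount >= 10
    GROUP BY c.country
    ORDER BY c.country
"""
results = conn.execute(query).fetchall()
for country, order_count, revenue in results:
    print(country, order_count, revenue)
```

Try changing the WHERE threshold or grouping by customer instead of country to see how the result set changes.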

2. Strengthen Your Understanding of Data Structures and Systems

Know arrays, hash maps, queues, and trees. Learn how distributed systems and cloud platforms like AWS and GCP handle data and memory.
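
Two of these structures show up constantly in pipeline code. The sketch below, with hypothetical event records, uses a hash map (a Python `dict`) to drop duplicate deliveries and a queue (`collections.deque`) as a first-in, first-out buffer between a producer and a consumer.

```python
from collections import deque

# Hypothetical events; the third is a duplicate delivery of the first.
events = [
    {"id": "a1", "user": "kim"},
    {"id": "b2", "user": "lee"},
    {"id": "a1", "user": "kim"},
]

# Hash map: constant-time average lookup by key, so deduplication is one pass.
latest_by_id = {}
for event in events:
    latest_by_id[event["id"]] = event
unique_events = list(latest_by_id.values())

# Queue: the producer appends on the right, the consumer pops from the left.
buffer = deque()
for event in unique_events:
    buffer.append(event)
processed = []
while buffer:
    processed.append(buffer.popleft()["id"])

print(processed)  # → ['a1', 'b2']
```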

3. Build Technical Discipline

Get comfortable with the command line, version control using Git, and scripting to automate everyday tasks. These skills will serve as the foundation for all your future data engineering work.

Why Should You Master Python and SQL for Data Engineering Success?

Why Python Matters for Data Engineers

Data cleaning, scripting, automation, and cloud-function integration all rely on Python. Libraries such as Pandas, PySpark, Matplotlib, and Seaborn extend Python's reach from ETL to visualization.

Python's versatility makes it essential for data engineers who need to work across different aspects of the data pipeline. From data extraction and transformation to automation and integration with cloud services, Python provides the flexibility and extensive library ecosystem needed for modern data engineering.
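
The extract, transform, and load steps can be sketched with nothing but the standard library. This is an illustrative toy rather than a production pattern: the CSV content, the function names, and the cleaning rules are all assumptions, and a real pipeline would typically use Pandas or PySpark and write to a warehouse instead of a string.

```python
import csv
import io

# Made-up raw input with messy whitespace, mixed case, and a missing email.
RAW = """user_id,email,signup_date
1, Ana@Example.com ,2026-01-05
2,,2026-01-06
3,bo@example.com,2026-01-07
"""

def extract(text):
    """Read CSV text into a list of dicts, one per row."""
    return list(csv.DictReader(io.StringIO(text)))

def transform(rows):
    """Trim and lowercase emails; drop rows that have no email."""
    cleaned = []
    for row in rows:
        email = row["email"].strip().lower()
        if not email:
            continue
        cleaned.append({
            "user_id": int(row["user_id"]),
            "email": email,
            "signup_date": row["signup_date"],
        })
    return cleaned

def load(rows):
    """Serialize cleaned rows to CSV text (a stand-in for a warehouse write)."""
    out = io.StringIO()
    writer = csv.DictWriter(out, fieldnames=["user_id", "email", "signup_date"])
    writer.writeheader()
    writer.writerows(rows)
    return out.getvalue()

cleaned = transform(extract(RAW))
print(load(cleaned))
```

Keeping each stage as its own function makes the pipeline easy to test and lets you swap the load step for a real destination later.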

SQL Is Non-Negotiable

SQL powers relational-database interactions, ETL queries, and troubleshooting in cloud databases like Snowflake, BigQuery, and Redshift. Every data engineer must be proficient in writing complex queries, optimizing database performance, and understanding relational data models.
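
One way to practice query optimization is to inspect how the database plans a query before and after adding an index. The sketch below uses SQLite's `EXPLAIN QUERY PLAN` because it ships with Python; the table, the index name, and the `query_plan` helper are hypothetical. Snowflake, BigQuery, and Redshift expose their own plan tools and rely on different mechanisms than classic indexes, so treat this as an illustration of the habit, not of those platforms.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL)")
conn.executemany(
    "INSERT INTO orders (customer_id, total) VALUES (?, ?)",
    [(i % 100, float(i)) for i in range(1000)],
)

def query_plan(conn, sql, params=()):
    """Return the human-readable plan lines SQLite reports for a query."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    return [row[-1] for row in rows]  # the last column holds the description

sql = "SELECT total FROM orders WHERE customer_id = ?"

plan_before = query_plan(conn, sql, (42,))   # no index yet: a full table scan
conn.execute("CREATE INDEX idx_orders_customer ON orders (customer_id)")
plan_after = query_plan(conn, sql, (42,))    # the planner can now use the index

print(plan_before)
print(plan_after)
```

The exact wording of the plan varies between SQLite versions, but after the index is created the plan should name `idx_orders_customer` instead of describing a scan of the whole table.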

What Do You Need to Know About Big Data and Streaming Technologies?

Big Data vs. Traditional Data

Traditional relational databases struggle with the scale and complexity of big data, which often includes unstructured or semi-structured data. Frameworks such as Apache Spark, Apache Flink, and Apache Kafka distribute work across many machines to provide the fault tolerance and speed these workloads require.

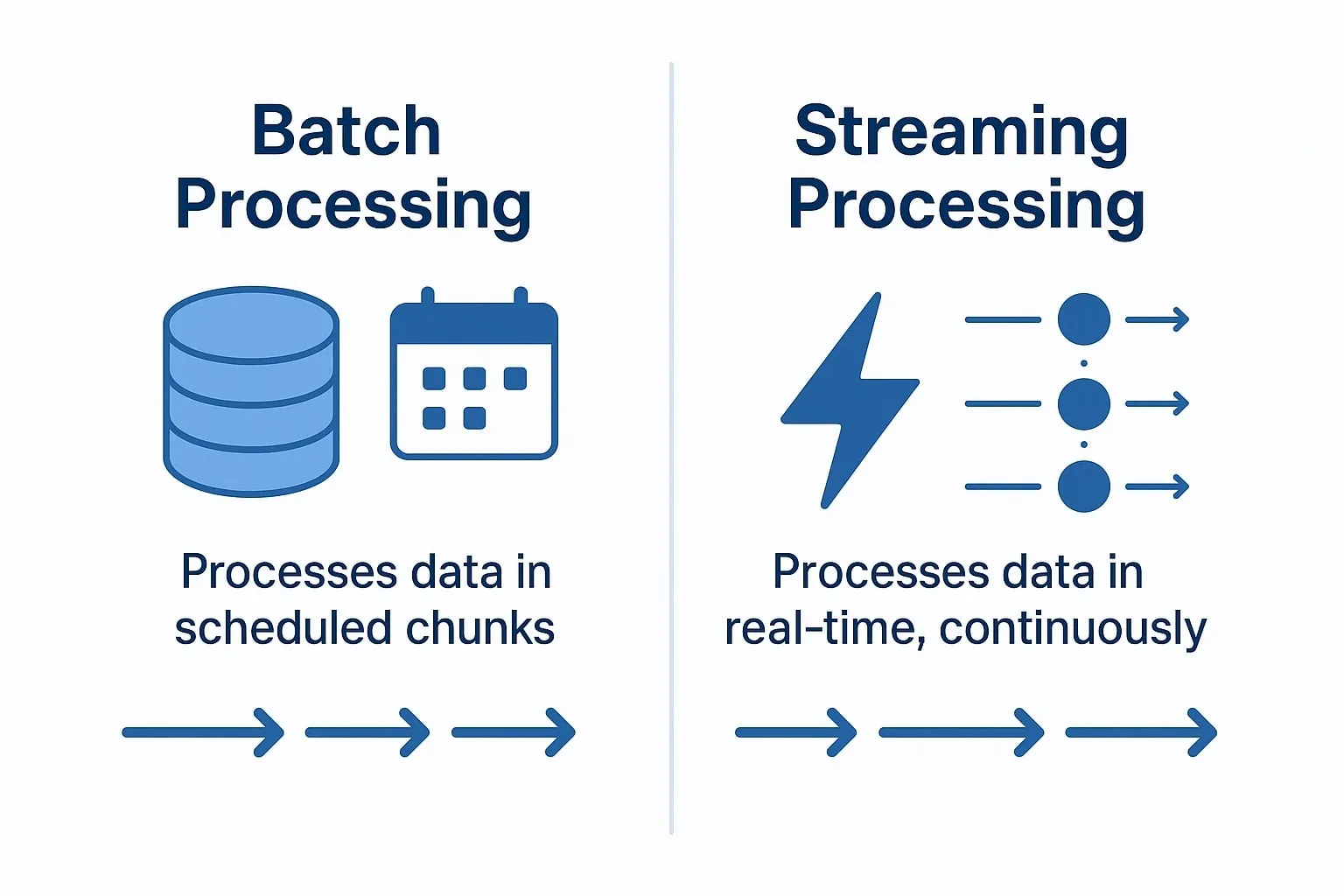

Batch vs. Streaming Processing

- Batch processing: collects data and processes it in chunks on a schedule, ideal for periodic reports.

- Stream processing: processes each event as it arrives, crucial for applications like fraud detection.
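
The difference between the two modes can be sketched in plain Python. This is a toy, not how Spark, Flink, or Kafka are implemented: the event feed, the function names, and the alert threshold are all hypothetical.

```python
from itertools import islice

def event_source():
    """Hypothetical feed of (card_id, amount) payment events."""
    yield from [("c1", 20.0), ("c2", 950.0), ("c1", 30.0), ("c3", 15.0), ("c2", 990.0)]

def batches(iterable, size):
    """Group an iterable into lists of up to `size` items."""
    iterator = iter(iterable)
    while chunk := list(islice(iterator, size)):
        yield chunk

def batch_totals(events, size):
    """Batch style: wait for a chunk to fill, then compute one total per chunk."""
    return [sum(amount for _, amount in chunk) for chunk in batches(events, size)]

def stream_alerts(events, threshold):
    """Streaming style: inspect each event as it arrives and react immediately."""
    for card_id, amount in events:
        if amount > threshold:
            yield card_id

print(batch_totals(event_source(), size=2))                   # → [970.0, 45.0, 990.0]
print(list(stream_alerts(event_source(), threshold=900.0)))   # → ['c2', 'c2']
```

The batch function produces nothing until a chunk is complete, while the streaming function can emit an alert the moment a suspicious event appears. That latency difference is the main reason to choose one over the other.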

How Can You Master Cloud Computing and Cloud Platforms?

Most modern data pipelines run in the cloud, so understanding cloud computing is foundational.

Platforms like AWS, Azure, and Google Cloud offer flexibility, speed, and scalability. You'll use them to run Spark clusters, manage storage solutions like S3, and operate managed services like Redshift, BigQuery, and Databricks.

- Core Services to Explore: Start with virtual machines (EC2), object storage (S3/Blob), and serverless compute tools (Lambda/Azure Functions). Then dive into managed services for data integration, transformation, and orchestration.

- Get Certified (Optional): Certifications like AWS Certified Data Engineer, Google's Professional Data Engineer, or Azure Data Engineer Associate can strengthen your résumé.

How Can You Accelerate Your Data Engineering Journey with Airbyte?

Airbyte is an open-source platform with 600+ pre-built connectors, designed to reduce integration costs, modernize infrastructure, and support data democratization while maintaining governance.

Comprehensive Connector Ecosystem

The connector ecosystem covers databases, APIs, files, and SaaS applications, expandable via community contributions. The No-code Connector Builder and AI Assistant help create new connectors quickly without extensive development overhead.

Flexible Deployment Options

Deployment flexibility includes cloud, hybrid, and on-premises options. Enterprise features provide end-to-end encryption, RBAC, audit logging, and SOC 2/GDPR/HIPAA compliance.

Learning Benefits for Data Engineers

PyAirbyte, Airbyte's Python library, offers hands-on experience with building pipelines in code, along with exposure to real-world governance practices and compatibility with modern-stack destinations such as Snowflake, Databricks, and BigQuery. Using Airbyte in your projects provides practical experience with enterprise-grade data integration tools.

The platform is designed for production-scale workloads, giving you exposure to large-scale data processing environments.

How Do You Launch Your Data Engineering Career with Confidence?

1. Master Essential Data Engineering Skills

Design scalable systems, master cloud computing, and lead complex workflows. Focus on building expertise in the technologies that matter most for modern data engineering.

2. Develop Collaboration and Business Impact

Partner with data scientists to optimize warehouses and drive innovation. Learn to translate technical solutions into business value and communicate effectively with stakeholders.

3. Apply Knowledge Through Practical Projects

Use tools like Airbyte, build real projects, and manage structured data securely. Create a portfolio that demonstrates your ability to handle real-world data engineering challenges.

4. Build Robust Technical Expertise

Hone abilities in data storage, processing, and infrastructure management. Stay current with emerging technologies while building deep expertise in fundamental concepts.

Moving Forward

Start small, iterate often, and stay curious. The world needs more engineers who can transform complexity into clarity through well-structured, validated, scalable data pipelines. Focus on continuous learning and practical application to build the skills that organizations desperately need in today's data-driven economy.

Frequently Asked Questions

What programming languages should I learn first for data engineering?

Python and SQL are essential. Python offers versatility for scripting and automation; SQL is fundamental for database operations and querying.

How long does it take to become job-ready as a data engineer?

With dedicated study and hands-on practice, 6–12 months is typical, depending on background and project depth.

Do I need a computer science degree?

Helpful but not required. Demonstrable skills, problem-solving ability, and project experience matter more.

What's the difference between data engineers and data scientists?

Engineers build and maintain the infrastructure; scientists use that infrastructure to extract insights and build models.

Should I focus on cloud platforms or on-premises technologies?

Cloud platforms like AWS, Azure, and GCP dominate modern data engineering. Start with one provider and its data services before branching out.
