What Are the Common Pitfalls to Avoid in ETL Pipeline Design?

Summarize this article with:

✨ AI Generated Summary

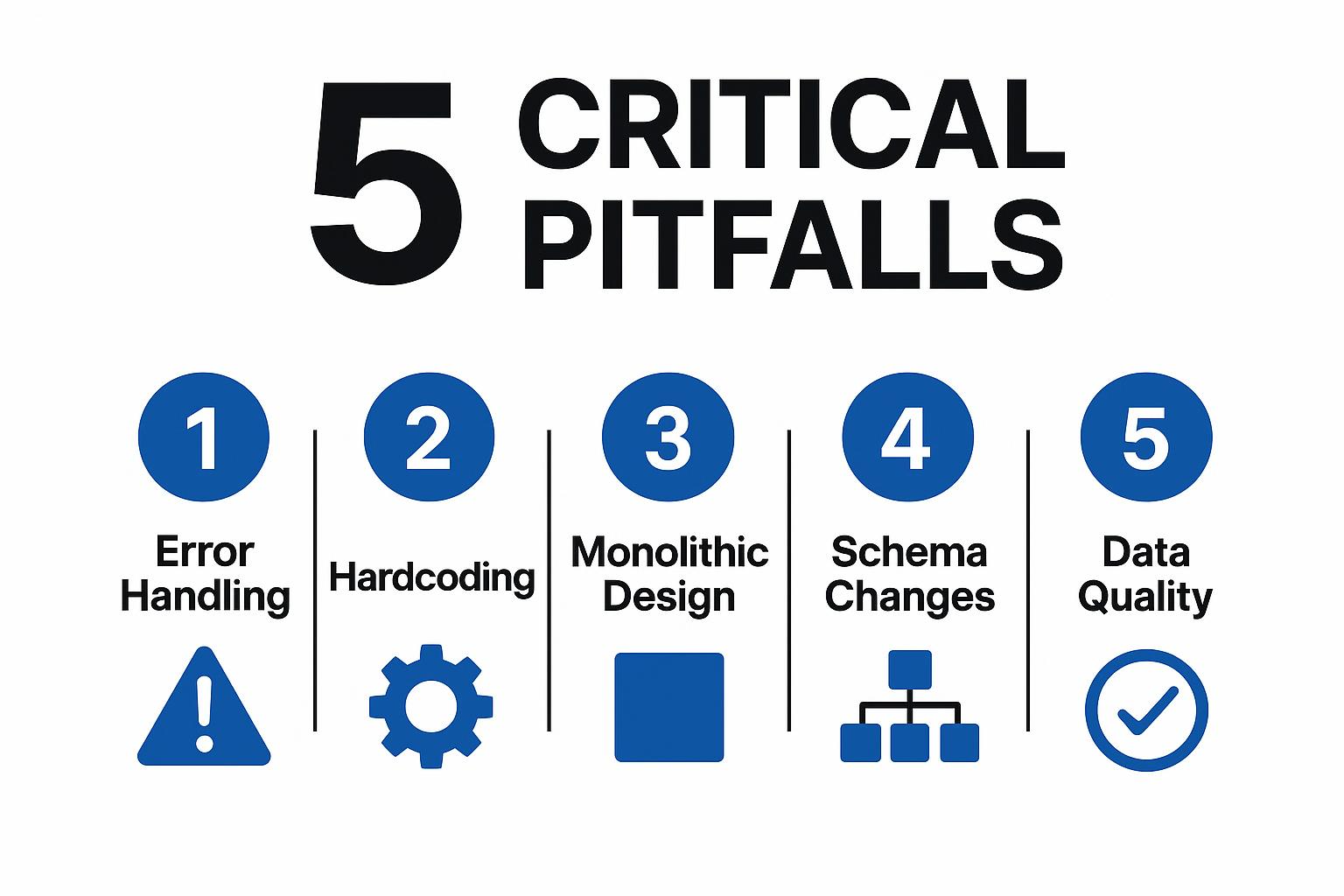

A rushed ETL pipeline can become critical infrastructure plagued by technical debt, causing data corruption and operational failures. The five key design pitfalls to avoid are:

- Poor error handling and monitoring, leading to silent failures and corrupted data.

- Hardcoded configuration values that hinder deployment flexibility and security.

- Monolithic pipeline designs causing single points of failure and difficult scaling.

- Neglecting schema change management, resulting in pipeline breaks or silent data corruption.

- Insufficient data quality validation, allowing bad data to propagate and erode trust.

Robust ETL architectures embrace failure tolerance, comprehensive observability, externalized configuration, modern integration platforms, infrastructure as code, and thorough testing to build resilient, maintainable data pipelines.

A "quick fix" ETL pipeline built for a quarterly report becomes critical business infrastructure supporting daily operations. Poor error handling masks data corruption for weeks while executives make decisions based on corrupted analytics. When the pipeline finally fails during a cloud migration, the team discovers months of garbage data processing.

This scenario illustrates how ETL design decisions made under pressure create technical debt that compounds exponentially. What starts as expedient shortcuts become maintenance nightmares that consume engineering resources and threaten business operations. Proper ETL architecture design prevents these expensive mistakes.

This guide examines the five most common and costly ETL pipeline design pitfalls that separate robust, maintainable systems from technical debt disasters. We'll explore why these mistakes happen, their business impact, and proven strategies to avoid them while building resilient data infrastructure.

Why ETL Design Mistakes Become Expensive

Poor design decisions create cascading maintenance problems that grow worse over time. Teams spend 60-80% of their time maintaining fragile systems instead of delivering business value. Data quality issues erode stakeholder confidence when dashboards show inconsistent numbers during critical reporting periods.

Design decisions that work with gigabytes fail catastrophically with terabytes. Monolithic architectures handling a few data sources become unmanageable with dozens of integrations. Performance problems compound when architectures can't scale horizontally or handle increasing data volumes efficiently.

The 5 Most Critical ETL Pipeline Design Pitfalls

1. Ignoring Error Handling and Monitoring

The most catastrophic ETL failures happen silently, corrupting data for weeks before discovery. Teams build pipelines that work perfectly during development but fail unpredictably in production without visibility into problems.

Common Error Handling Failures:

- No retry logic: Temporary network issues cause permanent pipeline failures

- Silent exception swallowing: Errors get caught but not logged, leading to incomplete processing

- All-or-nothing failure modes: Single record errors kill entire batch jobs

- Missing data validation: Pipelines continue processing malformed or empty datasets

Monitoring and Observability Gaps:

- No pipeline health metrics: Teams don't know if pipelines are running or processing incorrect volumes

- Missing business logic validation: Technical success doesn't guarantee data quality

- Inadequate alerting: Critical failures discovered through user complaints

- Poor logging practices: Insufficient detail for debugging production issues

Silent failures create the worst scenario: executives making strategic decisions based on corrupted data. Financial reporting shows incorrect revenue figures. Marketing campaigns target wrong customer segments. Inventory systems show phantom stock levels.

Design Solutions:

- Comprehensive retry mechanisms with exponential backoff for transient failures

- Dead letter queues for records that can't be processed after multiple attempts

- Circuit breaker patterns to prevent cascade failures across dependent systems

- Real-time monitoring of data volume, quality metrics, and processing latency

- Automated alerting for both technical failures and business rule violations

2. Hardcoding Configuration Values

Hardcoded configuration values create brittle pipelines that break during deployments and infrastructure changes. Database connections, file paths, and credentials embedded in code make pipelines impossible to promote across environments.

Common Hardcoding Mistakes:

- Database connection strings embedded in scripts with production credentials

- File system paths that only exist in specific environments

- API endpoints and tokens written directly into transformation logic

- Business logic parameters like thresholds coded as constants

- Environment-specific configurations requiring code changes for deployment

Hardcoded values turn simple deployments into complex code modification exercises. Infrastructure changes like server migrations break multiple pipelines simultaneously. Security becomes impossible when credentials are scattered throughout codebases.

Teams can't dynamically adjust pipeline behavior without code deployments. Business users can't modify processing parameters without developer intervention. New environments require extensive code modifications instead of configuration changes.

Configuration Management Solutions:

- Environment variables for system-level configurations like database connections

- Configuration files stored separately from code with environment-specific overrides

- Secret management systems for credentials and authentication information

- Parameter stores for business logic configurations needing runtime modification

- Feature flags for enabling/disabling pipeline components without code changes

3. Designing Monolithic, All-or-Nothing Pipelines

Monolithic pipeline designs create single points of failure that make debugging and scaling nearly impossible. When everything processes as one massive batch job, component failures kill entire workflows.

Monolithic Architecture Problems:

- Single failure point: One bad data source stops processing for all sources

- No restart capability: Failures require reprocessing everything from scratch

- Debugging complexity: Finding root causes requires analyzing entire workflows

- Resource contention: All processing competes for same compute resources

- Deployment risk: Updates require redeploying entire pipelines

Monolithic pipelines make incident response exponentially more difficult. Partial data recovery becomes impossible—teams must choose between incomplete datasets or waiting for full reprocessing. Performance optimization becomes guesswork when multiple sources share execution resources.

All-or-nothing processing means business users lose access to all data when any component fails. Critical reports become unavailable because one non-essential data source has connection issues.

Modular Design Solutions:

- Component isolation: Each data source runs as independent, loosely coupled processes

- Granular restart capability: Failed components restart from checkpoints without affecting others

- Resource allocation: Different components scale independently based on requirements

- Dependency management: Clear interfaces enable parallel processing and reduce cascade failures

- Incremental deployment: Individual components update without affecting entire pipelines

4. Neglecting Schema Change Management

Source system schema changes represent one of the most common pipeline failure modes. When upstream systems add columns or change data types, poorly designed pipelines break immediately or process corrupted data silently.

Schema Change Failure Modes:

- Hard-coded column mappings that break when systems add or remove fields

- Strict schema validation that rejects data when new columns appear

- Data type assumptions that fail when systems change field formats

- Missing column handling that creates null values or processing errors

- No change detection mechanisms to identify schema evolution

The most dangerous schema changes don't cause immediate failures. When source systems rename critical fields, pipelines may continue running while mapping wrong columns to destination tables. Financial systems might swap revenue and cost columns.

Schema changes typically happen without coordination between source system owners and data teams. Database upgrades and application deployments introduce changes that break multiple downstream pipelines simultaneously.

Schema Evolution Solutions:

- Automated schema detection that identifies changes before processing begins

- Flexible column mapping that adapts to new fields without code changes

- Schema versioning to track changes and maintain backward compatibility

- Data type coercion with fallback handling for incompatible format changes

- Change impact analysis to understand downstream effects before processing

Learn how to handle schema changes through automated detection and flexible mapping strategies.

5. Poor Data Quality Validation

Data quality validation represents the last defense against corrupted analytics and wrong business decisions. Pipelines that don't validate incoming data propagate garbage through entire analytics stacks.

Validation Gaps and Blind Spots:

- No data freshness checks: Processing stale data without detecting when sources stop updating

- Missing business rule validation: Allowing impossible values like negative quantities

- Incomplete record validation: Processing partial records that failed extraction

- No statistical anomaly detection: Missing dramatic changes in volume or patterns

- Insufficient referential integrity: Loading data with broken table relationships

Poor quality data compounds as it flows through transformation pipelines. Initial validation failures create cascading errors in calculated fields and derived metrics. Financial calculations become wrong when base data contains errors.

The cost of fixing data quality issues increases exponentially as bad data propagates. Correcting errors in source data requires simple updates. Fixing corruption in analytics requires rebuilding historical data and validating entire reporting chains.

Quality Validation Strategies:

- Data freshness monitoring to detect when source systems stop providing updates

- Business rule validation checking logical constraints and expected value ranges

- Statistical profiling to identify anomalies in volume, distribution, and patterns

- Cross-system consistency checks validating relationships between data sources

- Automated quality scoring providing continuous assessment of data reliability

How to Design Resilient ETL Architectures

Embrace Failure as Normal Operation

Robust data pipeline architecture assumes failures will happen and designs for graceful degradation. Networks become unreachable, databases become unavailable, and source systems provide corrupted data. Robust pipelines handle these scenarios without human intervention.

Design principles for failure tolerance:

- Idempotent processing: Pipeline reruns produce identical results without corrupting existing data

- Checkpoint-based recovery: Failed jobs restart from the last successful stage

- Circuit breaker patterns: Failing components are isolated to prevent cascade failures

- Graceful degradation: Critical processing continues even when non-essential components fail

Build Observability from Day One

Comprehensive monitoring enables proactive problem detection and rapid incident response. Teams need visibility into both technical metrics and business KPIs to understand pipeline health.

Essential observability components:

- Pipeline execution metrics: Processing times, success rates, and resource utilization

- Data quality indicators: Record counts, validation failures, and statistical anomalies

- Business rule compliance: Automated checking of critical business logic

- Dependency health: Monitoring of source systems, databases, and external services

- Real-time alerting: Immediate notification of failures and performance degradation

Design for Configuration and Evolution

Externalize all configuration to enable pipeline evolution without code changes. Business requirements change frequently, and architectures must adapt without requiring development cycles.

Configuration management strategies:

- Environment separation: Clean boundaries between development, staging, and production

- Parameter externalization: Business logic rules stored separately from code

- Feature flags: Runtime control over pipeline behavior and component activation

- Version compatibility: Backward-compatible changes that don't break existing deployments

Best Practices for Future-Proof Pipeline Design

Start with Modern Integration Platforms

Legacy ETL tools force teams to implement error handling and monitoring as custom development projects. Modern platforms provide these capabilities as built-in features, allowing teams to focus on business logic.

Platform selection criteria:

- Built-in error handling with retry mechanisms and dead letter queue support

- Configuration management through administrative interfaces rather than hardcoded values

- Modular architecture enabling independent component development and deployment

- Schema change detection with automated handling of source system evolution

- Integrated monitoring providing visibility into technical and business metrics

Plan for Integration Complexity

Modern data pipelines connect dozens of data sources, transformation tools, and destination systems. Poor integration planning creates brittle connections that break when any component evolves.

Integration complexity challenges:

- Point-to-point connections that create maintenance nightmares as systems multiply

- Custom connector development for every new data source instead of leveraging existing solutions

- Inconsistent data formats across different source systems requiring custom transformation logic

- Authentication sprawl with different credential management for each integration

- Version incompatibilities when source systems or destinations upgrade their APIs

Modern Integration Solutions:

- Standardized connector ecosystems that provide pre-built integrations for common data sources

- Unified authentication management across all data source connections

- Consistent data formatting and schema handling across different source types

- Version management with backward compatibility for API changes

- Integration monitoring with health checks and automatic retry mechanisms

Platforms like Airbyte provide 600+ connectors that eliminate custom development overhead while maintaining consistency across integrations. This reduces the maintenance burden and allows teams to focus on business logic rather than connection management.

Adopt Infrastructure as Code

Version control pipeline configurations alongside application code to enable reproducible deployments and easy rollback capabilities. Infrastructure as code prevents configuration drift and enables automated testing of pipeline changes.

Implement Comprehensive Testing

Test pipeline logic with realistic data volumes and failure scenarios before production deployment. Automated testing catches compatibility issues, performance problems, and data quality failures early in development cycles.

Following established data ingestion practices from the start prevents technical debt accumulation and operational nightmares. Performance testing with projected data volumes identifies bottlenecks before they impact production systems.

Conclusion

ETL pipeline design decisions made under pressure create technical debt that compounds exponentially. The five critical pitfalls: poor error handling, hardcoded configurations, monolithic architectures, inadequate schema management, and missing data validation, transform simple integration projects into operational nightmares.

Teams that invest in robust design patterns early save months of debugging and prevent data corruption incidents that erode stakeholder trust. Organizations that prioritize architectural quality gain competitive advantages through faster data delivery and higher system reliability. Ready to build resilient ETL pipelines? Explore Airbyte's capabilities for avoiding these expensive design mistakes

.webp)