What's the Best Way to Join On-Premise Data with Cloud Data?


✨ AI Generated Summary

Hybrid data integration between on-premise and cloud systems can be achieved via three main approaches:

- Live Federation: Queries data in place so results are always current, ideal for real-time, ad-hoc queries and strict compliance needs.

- Batch Replication: Scheduled data copying suitable for large volumes and analytics workloads with hour-level latency.

- Real-time CDC: Streams data changes continuously for second-level freshness, supporting operational dashboards and event-driven apps.

Security best practices include encrypted connections, least-privilege access, and compliance with data residency laws. Airbyte offers flexible deployment options and 600+ connectors to simplify hybrid integration, with batch replication recommended as a low-risk starting point.

Picture a data team that manually exports CSV files from on-prem SQL Server every night, uploads them to S3, then imports them into Snowflake. The process takes 3 hours, breaks monthly, and blocks morning reports until someone fixes the failed pipeline.

Three proven approaches exist for joining on-premise and cloud data: live federation, batch replication, and real-time CDC. Your choice depends on latency tolerance, data volume, and security requirements. Federation queries data in place, so results are always current but response time depends on the source system and the network. Batch replication copies data on a schedule with hour-level latency. CDC streams changes continuously with second-level freshness.

What Are Your Three Options for Hybrid Data Integration?

When Should You Use Live Federation?

Live federation queries on-premise data directly from cloud tools without copying anything. You always see the latest values while avoiding storage costs and data governance complexity. Every query depends on your source system's performance and network connectivity.

This approach suits workloads that need current data from multiple sources at query time, however often the source is updated. Ad-hoc BI queries where freshness matters more than heavy transformation also benefit from direct access. When regulations forbid moving data off-premises but you still need unified analytics, federation becomes the natural choice.

Example: Power BI Gateway connecting to on-prem SQL Server for real-time dashboard queries.

Pros: Zero data duplication, lowest storage cost, always current data

Cons: Network-dependent performance, complex security surface, limited transformation capabilities
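To make the idea of querying in place concrete, here is a minimal sketch that uses two in-memory SQLite databases as stand-ins for an on-premise system and a cloud system. The table names, columns, and the `revenue_by_region` helper are assumptions made for illustration. A real federation layer would sit in front of separate servers, but the shape of the query is similar: one statement joins both sources and nothing is copied ahead of time.

```python
import sqlite3

# The main database stands in for the on-premise system (hypothetical schema).
conn = sqlite3.connect(":memory:")
# A second, separate in-memory database stands in for the cloud system.
conn.execute("ATTACH DATABASE ':memory:' AS cloud")

conn.execute("CREATE TABLE main.orders (order_id INTEGER, customer_id INTEGER, amount REAL)")
conn.executemany(
    "INSERT INTO main.orders VALUES (?, ?, ?)",
    [(1, 10, 25.0), (2, 11, 40.0), (3, 10, 15.5)],
)

conn.execute("CREATE TABLE cloud.customers (customer_id INTEGER, region TEXT)")
conn.executemany(
    "INSERT INTO cloud.customers VALUES (?, ?)",
    [(10, "EU"), (11, "US")],
)


def revenue_by_region(connection):
    """Join both sources at query time; no rows are replicated beforehand."""
    return connection.execute(
        """
        SELECT c.region, SUM(o.amount)
        FROM main.orders AS o
        JOIN cloud.customers AS c ON c.customer_id = o.customer_id
        GROUP BY c.region
        ORDER BY c.region
        """
    ).fetchall()


result = revenue_by_region(conn)
print(result)  # [('EU', 40.5), ('US', 40.0)]
```

Because the join runs against the live tables, any new order shows up in the next query, and every query pays the cost of reaching both systems.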

When Is Batch Replication the Right Choice?

Batch replication moves data in scheduled chunks during off-peak hours. You extract full or incremental datasets, load them into cloud storage, and run transformation jobs to prepare analytics-ready tables. The cloud copy stays as fresh as your last sync.

This method handles large datasets exceeding 100GB where real-time streaming would be cost-prohibitive. Analytics workloads that tolerate hours of latency but demand consistent, query-ready data are ideal candidates. You get predictable resource usage and mature tooling ecosystems.

Example: Using Airbyte to sync nightly from Oracle to BigQuery with automatic schema detection and 600+ pre-built connectors.

Pros: Handles massive volumes, cost-effective for analytics, predictable performance

Cons: Data latency, potential for failed batches, requires scheduling coordination
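The incremental variant of batch replication can be sketched as follows. This is an illustrative example, not how Airbyte or any other tool implements it: the `customers` table, the `updated_at` cursor column, and the `sync_incremental` function are all assumptions, and two in-memory SQLite databases stand in for the on-premise source and the cloud target.

```python
import sqlite3

SCHEMA = "CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, updated_at TEXT)"


def sync_incremental(source, target, last_cursor):
    """Copy rows changed since last_cursor; return the new cursor value."""
    rows = source.execute(
        "SELECT id, name, updated_at FROM customers "
        "WHERE updated_at > ? ORDER BY updated_at",
        (last_cursor,),
    ).fetchall()
    # INSERT OR REPLACE keyed on the primary key, so re-running a failed
    # batch overwrites rows instead of duplicating them.
    target.executemany(
        "INSERT OR REPLACE INTO customers (id, name, updated_at) VALUES (?, ?, ?)",
        rows,
    )
    target.commit()
    return rows[-1][2] if rows else last_cursor


source = sqlite3.connect(":memory:")
target = sqlite3.connect(":memory:")
source.execute(SCHEMA)
target.execute(SCHEMA)
source.executemany(
    "INSERT INTO customers VALUES (?, ?, ?)",
    [(1, "Ada", "2024-01-01T00:00:00"), (2, "Grace", "2024-01-02T00:00:00")],
)

# First run: an empty cursor copies everything.
cursor = sync_incremental(source, target, "")

# A row changes on-premise; the next scheduled run picks up only that row.
source.execute(
    "UPDATE customers SET name = 'Ada L.', updated_at = '2024-01-03T00:00:00' WHERE id = 1"
)
cursor = sync_incremental(source, target, cursor)
replicated = target.execute("SELECT id, name FROM customers ORDER BY id").fetchall()
```

Two limits of this sketch are worth noting: a strictly-greater cursor comparison can miss rows that share the last seen timestamp, and deleted rows never appear in the query, which is one reason log-based CDC exists.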

How Do You Implement Real-Time CDC?

Change Data Capture tracks every database change by reading transaction logs and streaming those events to downstream systems. Only the deltas flow over the network, keeping cloud systems synchronized within seconds of on-premise updates.

You need CDC when operational dashboards require fresh metrics or when microservices must react to data changes immediately. Event-driven applications that span on-premise and cloud infrastructure depend on this continuous synchronization.

Example: Streaming order updates from on-prem PostgreSQL to a cloud data warehouse for real-time inventory management.

Pros: Second-level latency, minimal network impact, enables real-time analytics

Cons: Complex infrastructure requirements, potential data loss risks, requires specialized monitoring
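The consuming side of CDC can be sketched in a few lines. The example below assumes a simplified event shape (`ChangeEvent` with `lsn`, `op`, `key`, and `row` fields) that is hypothetical and does not match the wire format of any particular CDC tool. It shows the two properties that matter most: changes are applied in log order, and events that were already applied are skipped so a redelivery after a failure does no harm.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ChangeEvent:
    lsn: int                    # position in the source log (hypothetical field)
    op: str                     # "insert", "update", or "delete"
    key: int                    # primary key of the changed row
    row: Optional[dict] = None  # new row image; None for deletes


def apply_changes(replica, events, last_applied_lsn=0):
    """Apply events to the replica in log order; return the new checkpoint."""
    for event in sorted(events, key=lambda e: e.lsn):
        if event.lsn <= last_applied_lsn:
            continue  # already applied, so redelivered events are ignored
        if event.op == "delete":
            replica.pop(event.key, None)
        elif event.op in ("insert", "update"):
            replica[event.key] = dict(event.row)
        else:
            raise ValueError(f"unknown operation: {event.op}")
        last_applied_lsn = event.lsn
    return last_applied_lsn


replica = {}
events = [
    ChangeEvent(1, "insert", 100, {"status": "new", "qty": 2}),
    ChangeEvent(2, "update", 100, {"status": "shipped", "qty": 2}),
    ChangeEvent(3, "insert", 101, {"status": "new", "qty": 1}),
    ChangeEvent(4, "delete", 101),
]
checkpoint = apply_changes(replica, events)

# Redelivering old events after a restart leaves the replica unchanged.
checkpoint = apply_changes(replica, events[:2], checkpoint)
```

Persisting the checkpoint alongside the replica is what turns at-least-once delivery into a consistent copy; losing it is one source of the data loss risk listed above.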

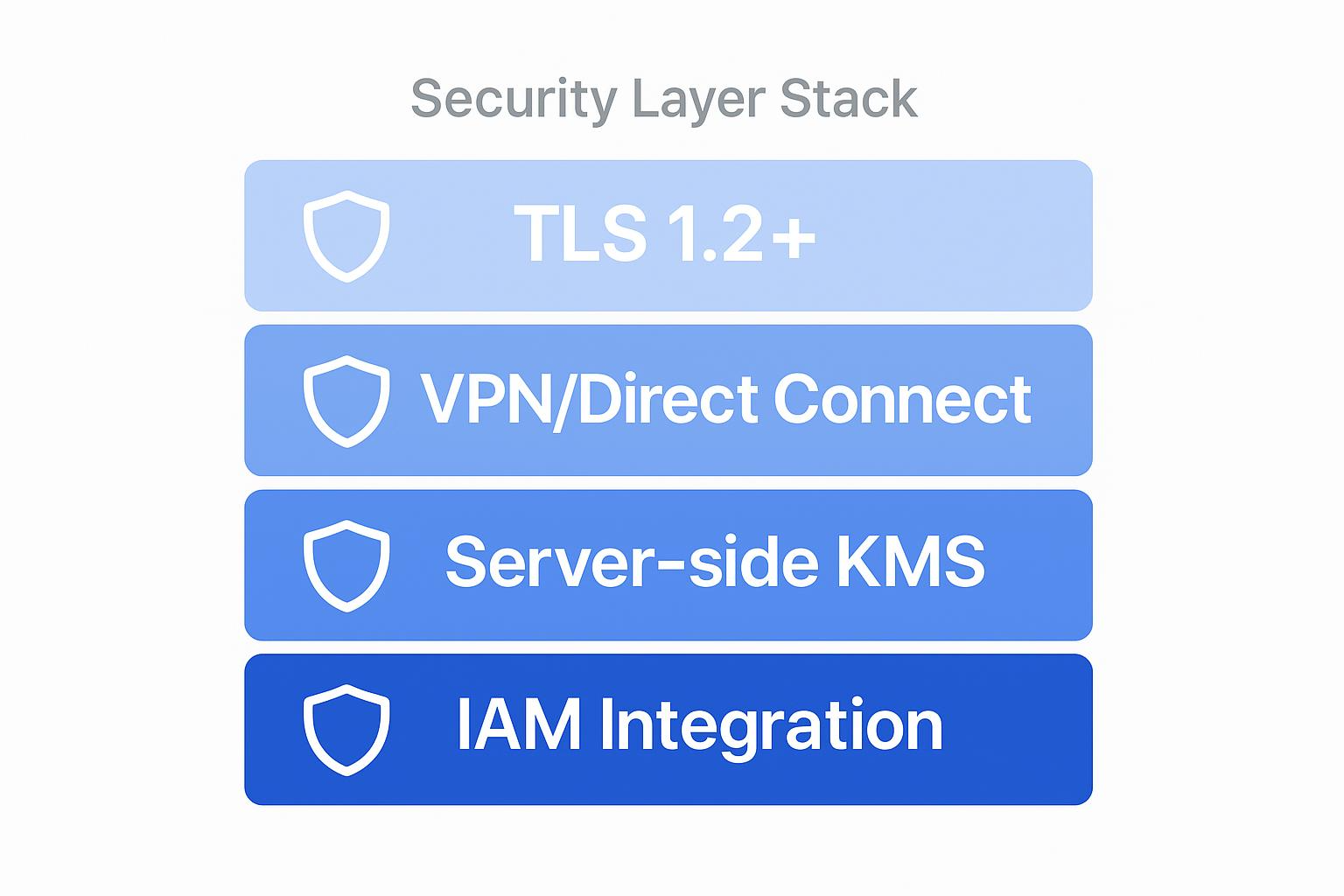

What Security Considerations Matter Most?

Network connectivity forms the foundation of secure hybrid integration. Private VPN connections shield data from internet exposure, while direct connect services reduce latency for high-volume transfers. Traffic routed over the public internet requires additional encryption and access controls.

Encrypt data in transit using TLS 1.2 or higher for all connections. Protect data at rest with server-side encryption using cloud KMS or customer-managed keys.

Automate certificate rotation to prevent pipeline failures from expired credentials.
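As a rough illustration of both points, the sketch below uses Python's standard `ssl` module to build a client context that refuses anything older than TLS 1.2, plus a small helper for checking how close a certificate is to expiry. The function names and the idea of passing the current time as a parameter are assumptions for the example. The `not_after` string follows the format that `SSLSocket.getpeercert()` reports in its `notAfter` field.

```python
import ssl


def make_tls_context():
    """Client context that verifies certificates and requires TLS 1.2+."""
    ctx = ssl.create_default_context()  # enables hostname and certificate checks
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    return ctx


def days_until_expiry(not_after, now_epoch):
    """Days between now_epoch (seconds since epoch) and the certificate's end date."""
    expires_epoch = ssl.cert_time_to_seconds(not_after)
    return (expires_epoch - now_epoch) / 86400


context = make_tls_context()
```

A scheduled job could call `days_until_expiry` with `time.time()` for each pipeline endpoint and trigger rotation once the result drops below a threshold your team chooses, for example 30 days.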

Implement least-privilege access using service accounts with specific database permissions. Integrate cloud IAM with on-premise directory services to maintain consistent access policies. Enable comprehensive audit logging to track all data movement and access patterns.

Consider data residency requirements that may restrict where information can be stored or processed. GDPR and HIPAA regulations often influence whether federation, replication, or CDC approaches are permissible for your use case.

How Do You Choose the Right Approach?

Your integration strategy should match your specific constraints and requirements. Work through these decision criteria to identify the best approach:

- Can data leave on-premises? Compliance restrictions may require federation to keep data stationary

- What latency can you tolerate? Less than 1 minute requires CDC, around an hour or more allows batch replication, and federation returns current data at query time as long as the source and network can keep up

- How much data moves daily? Under 10GB enables federation, over 100GB favors batch replication

- What's your complexity tolerance? Low complexity suggests batch replication, high complexity enables CDC

Most organizations start with batch replication for predictable analytics workloads, then add federation for ad-hoc queries or CDC for real-time requirements as needs evolve.
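One possible reading of these criteria, written as a small function. The thresholds come from the list above, but the order in which the checks run and the fallback for volumes between 10GB and 100GB are assumptions of this sketch. Treat it as a conversation starter rather than a rule.

```python
def choose_approach(data_can_leave_premises, max_latency_seconds, daily_volume_gb):
    """Return a starting-point recommendation: federation, cdc, or batch replication."""
    if not data_can_leave_premises:
        return "federation"          # compliance keeps the data stationary
    if max_latency_seconds < 60:
        return "cdc"                 # freshness under one minute
    if daily_volume_gb > 100:
        return "batch replication"   # large volumes favor scheduled copies
    if daily_volume_gb < 10:
        return "federation"          # small volumes can be queried in place
    # Assumption: the middle range defaults to batch, the lowest-risk start.
    return "batch replication"


print(choose_approach(True, 3600, 500))  # batch replication
print(choose_approach(True, 30, 50))     # cdc
print(choose_approach(False, 5, 500))    # federation
```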

How Do You Use Airbyte to Join On-Prem and Cloud Data?

Airbyte simplifies hybrid data integration through flexible deployment options that work within your existing security infrastructure. The two deployment patterns below address different on-premise to cloud connectivity requirements.

Self-hosted Airbyte deployment runs entirely within your on-premise environment, connecting directly to local databases while pushing data to cloud warehouses through secure, encrypted connections. This approach keeps processing power and temporary data within your controlled infrastructure while leveraging cloud storage and analytics capabilities.

Hybrid configuration places Airbyte workers on-premise with cloud-based orchestration and monitoring. Source connectors access local systems directly while the control plane manages scheduling and observability from the cloud. This pattern reduces on-premise infrastructure overhead while maintaining data sovereignty during extraction.

Key capabilities for hybrid scenarios:

- Streamline data operations and automation

- 600+ pre-built connectors including enterprise databases like Oracle, SQL Server, and PostgreSQL

- Incremental sync and CDC capabilities to minimize data transfer volumes

- Automatic schema evolution handling for changing on-premise systems

- Built-in retry logic and error handling for network interruptions

What Should You Do Next?

Begin by auditing your current manual processes to identify time lost and failure points. Document every CSV export, file transfer, and import job that could be automated through proper integration using modern ETL tools.

Start with a batch replication proof of concept as the lowest-risk approach. This method provides immediate value while you learn integration patterns and validate security controls.

Plan your migration roadmap based on business priority. Identify which datasets need real-time access, which can tolerate batch processing, and which should remain federated due to compliance requirements.

Ready to test batch replication? Get started with Airbyte Cloud's 14-day free trial.
