PostgreSQL vs MySQL: A Detailed Comparison for Data Engineers

✨ AI Generated Summary

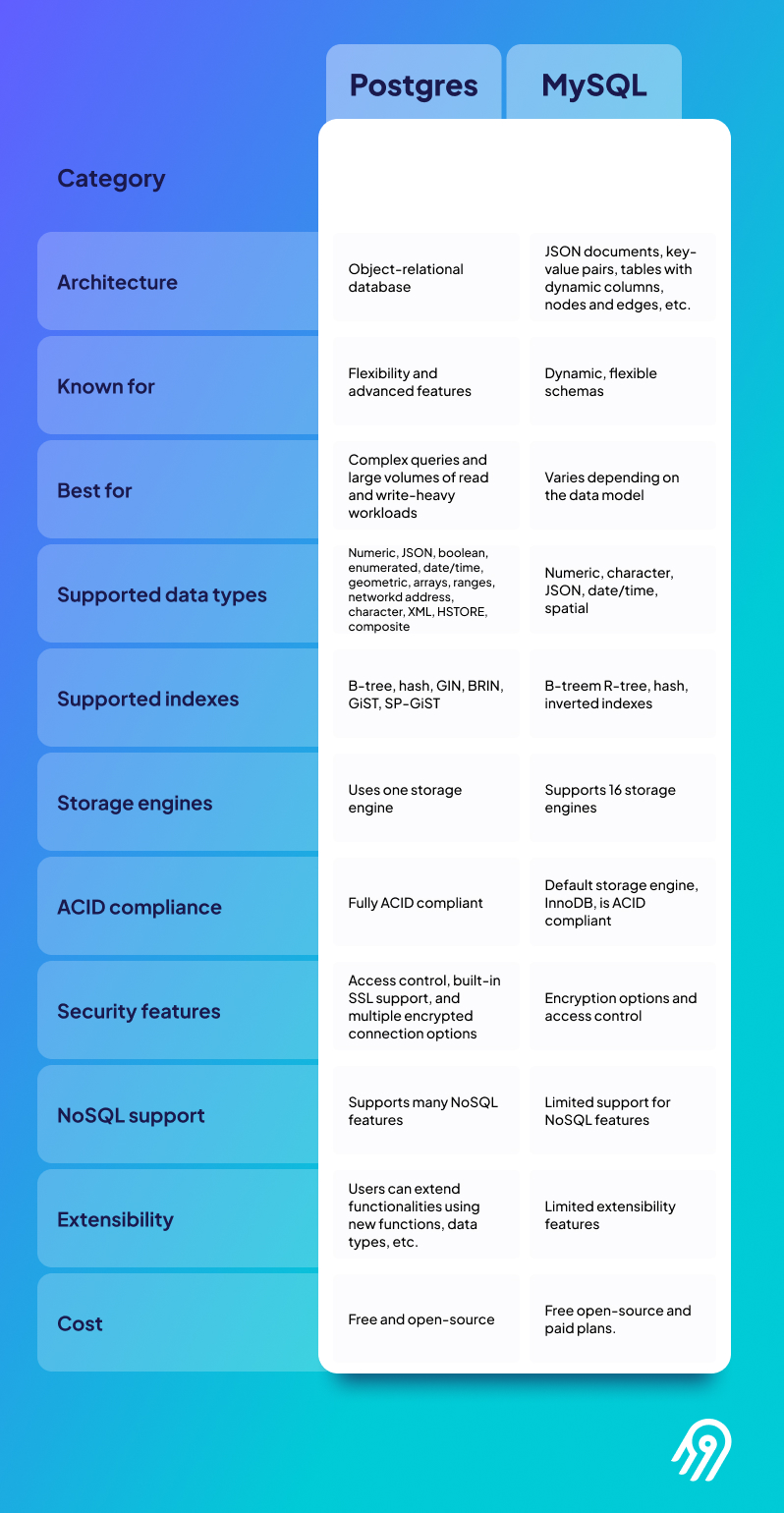

PostgreSQL and MySQL are leading open-source relational databases with distinct strengths: PostgreSQL excels in extensibility, complex queries, advanced data types, and write-intensive workloads, while MySQL is favored for speed, ease of use, and read-heavy web applications. Key differences include:

- PostgreSQL offers superior concurrency with native MVCC, advanced indexing, and strong SQL compliance.

- MySQL emphasizes performance optimization, replication, and AI-ready features like native VECTOR data types.

- Both support Change Data Capture (CDC) for real-time synchronization, with tools like Airbyte enhancing integration and security.

- PostgreSQL leads in developer adoption (45% vs. 41% in 2023) and is preferred for enterprise, AI, and analytical workloads, while MySQL suits simpler, high-availability web projects.

PostgreSQL and MySQL are the top two open-source relational databases, used in countless commercial, enterprise, and open-source applications. With the rapidly evolving landscape of data integration and cloud-native architectures, choosing between these database systems has become increasingly complex as organizations seek solutions that balance performance, scalability, and modern features.

PostgreSQL and MySQL have been around for decades and are well-established data-management systems with features for replication, clustering, fault tolerance, and integration with many third-party tools. Recent releases of both databases have introduced capabilities like asynchronous I/O, OAuth 2.0 authentication, atomic DDL operations, and enhanced cloud integration that change how data professionals approach database selection.

Understanding the distinctions between these systems is essential for picking the right solution for your project. This comprehensive comparison explores the key differences between PostgreSQL and MySQL, examines their evolving capabilities, and provides guidance for modern data integration scenarios.

What Is PostgreSQL and What Are Its Core Capabilities?

PostgreSQL, or Postgres, is an open-source object-relational database management system (ORDBMS) that has evolved considerably since its first production release in 1997. It is now a mature, robust system with features like transactions, triggers, views, and stored procedures.

PostgreSQL combines the principles of a traditional relational database with the data model used in object-oriented databases. This makes it highly scalable and suitable for diverse environments, from small-scale applications to large-scale enterprise deployments. Recent versions have introduced substantial performance improvements, with PostgreSQL 17 featuring streaming I/O for sequential scans that reduces read latency by 40% in benchmarks.

One of the key features of PostgreSQL is its extensibility and support for NoSQL-style and advanced data types, such as arrays, Hstore (key-value pairs), and JSON. It also handles high concurrency well, supporting simple transaction processing and frequent writes in OLTP workloads as well as complex analytical queries in OLAP workloads.

PostgreSQL has achieved remarkable growth in developer adoption, with the 2023 Stack Overflow Developer Survey showing PostgreSQL at 45% usage compared to MySQL's 41%, marking a significant shift in developer preferences toward PostgreSQL's advanced capabilities.

Key features and strengths

- Extensibility: PostgreSQL enables users to add custom functionality to the database using various extension mechanisms, including User-defined Functions (UDFs), custom data types, procedural languages, and Foreign Data Wrappers (FDWs).

- Conformance to SQL standards: PostgreSQL actively adheres to SQL standards. SQL transactions are fully ACID-compliant.

- Advanced data types: Arrays, JSON, range, Boolean, geometric, Hstore, and network address types.

- Robust indexing: B-Tree, hash, GiST, GIN, and BRIN indexes, plus partial indexes.

- Enhanced security: OAuth 2.0 authentication via extensions, integrating with enterprise SSO systems like Okta and Azure AD.

- Vacuum improvements: PostgreSQL 17 introduces the TidStore data structure that reduces memory consumption during vacuum operations by up to 20x while improving vacuum speed.

What Is MySQL and How Does It Compare in the Current Database Landscape?

MySQL is a leading relational database used by developers worldwide. Released in 1995, it is a purely relational DBMS that uses structured query language (SQL) to access and manage data.

Businesses of all sizes use MySQL for efficient data management. It powers well-known web applications like WordPress and Joomla and is used in mission-critical operations at companies including Facebook, Netflix, and Google. MySQL is known for its speed and reliability, especially for highly concurrent, read-heavy workloads.

MySQL 8.0 introduced atomic DDL operations, combining dictionary updates, engine operations, and binary log writes into single transactions to prevent partial failures. The transactional data dictionary replaced the older file-based and MyISAM metadata, enabling crash-safe schema changes. MySQL has also moved to a release model with separate Innovation and long-term support tracks; MySQL 9.0, an Innovation release from July 2024, introduced a native VECTOR data type for AI applications alongside JavaScript stored procedures.

MySQL can be used for OLTP transaction processing and applications operating on the LAMP (Linux, Apache, MySQL, and PHP/Python/Perl) stack. A MySQL database can be scaled horizontally and has built-in replication and clustering capabilities to improve availability and fault tolerance.

MySQL has strong security features and supports multiple storage engines, including InnoDB and MyISAM, which provide different trade-offs between performance and data integrity. Recent versions have deprecated mysql_native_password in favor of caching_sha2_password for FIPS-compliant encryption.

Key features and strengths

- Speed and performance: MySQL's built-in query optimizer and in-memory caching keep simple queries fast.

- Wide adoption and large community: A huge, active community offers support, code contributions, and shared knowledge.

- Replication and high availability: Asynchronous and semi-synchronous replication, custom filters, and various topologies.

- Ease of use: Consistently ranked as one of the easiest databases to install and configure, with both GUI and CLI tools.

- Invisible indexes: Allow DBAs to test index removal without production impact.

- AI-ready capabilities: Native VECTOR data types for machine learning applications and JavaScript stored procedures through the Multilingual Engine component.

What Are the Key Technical Differences Between PostgreSQL and MySQL?

The main difference between PostgreSQL and MySQL is that PostgreSQL is an advanced, open-source relational database known for its extensibility and support for complex queries, while MySQL is a simpler, widely used relational database optimized for speed and ease of use.

The debate between these two leading database management systems continues to evolve as both platforms introduce new capabilities and optimizations.

Performance Characteristics

The speed and performance of PostgreSQL and MySQL depend on software and hardware configurations. However, each database serves different use cases with distinct performance profiles.

PostgreSQL is designed for complex operations on large datasets containing different data types. Recent benchmarks show PostgreSQL demonstrating superior performance across most categories, with PostgreSQL completing read operations approximately 1.6 times faster than MySQL on average. For write operations, the performance difference is even more pronounced, with MySQL operating 6.6 times slower than PostgreSQL on average across various write-intensive benchmarks.

MySQL is built to process simple transactions in near real-time. While MySQL maintains competitive performance in specific read-heavy scenarios, recent performance analysis indicates concerning CPU overhead increases in MySQL 8.0 compared to earlier versions, particularly affecting high-concurrency workloads.

Both systems offer performance-tuning features, mainly related to query optimization and indexing.

Query optimization

- PostgreSQL: sophisticated query planner, materialized views, full-text search, parallel query execution.

- MySQL: EXPLAIN for execution plans, stored procedures, and caching mechanisms (InnoDB buffer pool, MyISAM key cache, and, in versions before 8.0, the query cache).

Indexing

Indexing speeds up queries on large datasets.

- MySQL: B-tree, full-text, spatial (R-tree), and hash indexes.

- PostgreSQL: All of the above plus GIN, SP-GiST, GiST, BRIN, partial, and expression indexes.

ACID Compliance and Concurrency Control

Both databases support ACID properties, but implementation details differ significantly.

PostgreSQL is ACID compliant by default with MVCC (Multi-Version Concurrency Control). This native implementation provides better performance for concurrent write operations and eliminates many locking conflicts.

MySQL achieves ACID compliance through the InnoDB engine, while concurrency control varies by storage engine. InnoDB uses gap locking for REPEATABLE READ isolation, which can cause contention in high-concurrency environments.

Both provide:

- Transaction isolation levels (Read Committed, Repeatable Read, Serializable)

- Locking mechanisms (row, page, table; PostgreSQL also supports advisory locks)

- MVCC (native in PostgreSQL; engine-dependent in MySQL)
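To make the MVCC idea above more concrete, here is a deliberately simplified sketch in Python. It is a toy model, not how PostgreSQL or InnoDB actually store row versions: the class names are hypothetical, and it assumes every write commits immediately, so a snapshot is just "the highest transaction id I am allowed to see". The point it illustrates is that a reader holding an older snapshot keeps seeing the old row version while a writer creates a new one, so neither has to wait for the other.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class RowVersion:
    value: str
    created_by: int                   # id of the transaction that wrote this version
    deleted_by: Optional[int] = None  # id of the transaction that superseded it


@dataclass
class ToyMVCCStore:
    """Toy multi-version store (assumption: every write commits instantly)."""
    next_txid: int = 1
    versions: dict = field(default_factory=dict)  # key -> list of RowVersion

    def write(self, key: str, value: str) -> int:
        txid = self.next_txid
        self.next_txid += 1
        chain = self.versions.setdefault(key, [])
        if chain:
            # The old version is not overwritten; it stays for older snapshots.
            chain[-1].deleted_by = txid
        chain.append(RowVersion(value, created_by=txid))
        return txid

    def snapshot(self) -> int:
        """All transactions with an id <= the returned value are visible."""
        return self.next_txid - 1

    def read(self, key: str, snapshot: int) -> Optional[str]:
        for version in self.versions.get(key, []):
            created_visible = version.created_by <= snapshot
            still_alive = version.deleted_by is None or version.deleted_by > snapshot
            if created_visible and still_alive:
                return version.value
        return None


store = ToyMVCCStore()
store.write("balance", "100")
reader_snapshot = store.snapshot()   # a long-running reader starts here
store.write("balance", "250")        # a writer proceeds without blocking it

old_view = store.read("balance", reader_snapshot)   # reader still sees its snapshot
new_view = store.read("balance", store.snapshot())  # a new reader sees the update
```

Real engines also have to track in-flight and aborted transactions and clean up dead versions (which is what PostgreSQL's vacuum does); the sketch leaves all of that out.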

Extensibility

PostgreSQL

- User-defined functions (UDFs) in multiple languages

- Procedural languages (PL/pgSQL, PL/Python, etc.)

- Custom data types

- Extensions (e.g., PostGIS, pgvector)

- Foreign Data Wrappers (FDWs)

MySQL

- UDFs, stored procedures, triggers

- Pluggable storage engines

- MySQL Connectors for many languages

- MySQL Enterprise Edition (commercial add-ons)

Verdict: PostgreSQL offers broader, deeper customization capabilities.

Data Types

Both support numeric, character, date/time, Boolean, binary, and JSON (MySQL ≥ 5.7) data types.

PostgreSQL adds:

- Arrays

- Hstore

- Advanced JSON support

- Range types

- Geospatial types and functions

Data Functions

Shared functions include mathematical, string, date/time, aggregate, control-flow, and full-text search.

Additional in PostgreSQL:

- Window functions (MySQL gained these only in version 8.0)

- Geospatial functions (ST_Distance, ST_Contains, etc.)

- Advanced full-text search (to_tsvector, to_tsquery)

Licensing and Costs

- PostgreSQL: operates under the PostgreSQL License, free for all uses including commercial applications.

- MySQL: dual licensing—GPL (free) or commercial license from Oracle for proprietary use and paid support. MySQL 5.7 entered Sustaining Support in October 2023, ending patches for all but critical vulnerabilities.

Community and Ecosystem

- PostgreSQL: overseen by the PostgreSQL Global Development Group with extensive third-party extensions and rich documentation.

- MySQL: large, diverse community with a vast ecosystem of third-party tools; however, Oracle's ownership has led to some community fragmentation.

How Do Change Data Capture and Real-Time Synchronization Work With PostgreSQL and MySQL?

Change Data Capture (CDC) has become fundamental for real-time synchronization between PostgreSQL and MySQL, enabling organizations to maintain consistent data across multiple systems while minimizing performance impact on production databases.

Log-Based CDC Implementation

For MySQL, enabling binlog_format=ROW is critical for capturing granular row-level changes. Configuration requires server ID assignment and binary log expiration policies to balance storage and retention needs.
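As a rough illustration of the MySQL prerequisites just described, the following Python sketch checks a dictionary of server variables (for example, the result of SHOW VARIABLES loaded into a dict) before a CDC pipeline is pointed at the server. The function name is hypothetical, and the retention check assumes the MySQL 8.0 variable binlog_expire_logs_seconds; older versions use a different setting, so adjust accordingly.

```python
def check_mysql_cdc_settings(variables: dict) -> list:
    """Return a list of problems; an empty list means the basics look fine.

    `variables` is assumed to map MySQL system variable names to their
    values as strings, the way SHOW VARIABLES reports them.
    """
    problems = []

    # Row-based logging is needed to capture individual row changes.
    if str(variables.get("binlog_format", "")).upper() != "ROW":
        problems.append("binlog_format must be ROW for row-level change capture")

    # Each server in a replication topology needs its own non-zero id.
    if int(variables.get("server_id", 0)) == 0:
        problems.append("server_id must be set to a non-zero value")

    # 0 means no automatic expiration based on this setting (MySQL 8.0 naming).
    if int(variables.get("binlog_expire_logs_seconds", 0)) == 0:
        problems.append("binlog_expire_logs_seconds is 0; set a retention period")

    return problems


healthy = {
    "binlog_format": "ROW",
    "server_id": "223344",
    "binlog_expire_logs_seconds": "259200",  # 3 days, an arbitrary example value
}
misconfigured = {
    "binlog_format": "STATEMENT",
    "server_id": "0",
    "binlog_expire_logs_seconds": "0",
}

healthy_report = check_mysql_cdc_settings(healthy)
broken_report = check_mysql_cdc_settings(misconfigured)
```

The retention value that makes sense depends on how long a CDC consumer may be offline: it has to be able to resume from the binary log before the files it needs are purged.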

PostgreSQL exposes changes from its Write-Ahead Log (WAL) through logical decoding, using output plugins such as the built-in pgoutput or test_decoding. PostgreSQL 17 introduced enhanced logical replication capabilities with improved failover control and support for preserving logical replication slots during major version upgrades, significantly reducing upgrade complexity.

Best practices include binary log encryption for GDPR compliance, proper replication slot management to prevent WAL accumulation, and standardized Debezium connector deployment requiring appropriate database privileges.
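One way to keep an eye on WAL accumulation is to compare each replication slot's restart position with the server's current WAL position. The sketch below assumes you have already fetched those values yourself (for instance, restart_lsn from the pg_replication_slots view and the output of pg_current_wal_lsn()); the slot names and the 1 GiB threshold are made up for illustration. It relies on the fact that PostgreSQL prints an LSN as two hexadecimal numbers separated by a slash, representing the high and low 32 bits of a 64-bit byte position.

```python
def lsn_to_int(lsn: str) -> int:
    """Convert an LSN string such as '16/B374D848' to a byte position."""
    high, low = lsn.split("/")
    return (int(high, 16) << 32) + int(low, 16)


def retained_wal_bytes(current_lsn: str, restart_lsn: str) -> int:
    """Bytes of WAL the server must keep because of a slot's restart point."""
    return lsn_to_int(current_lsn) - lsn_to_int(restart_lsn)


def slots_over_limit(slots: dict, current_lsn: str, limit_bytes: int) -> list:
    """`slots` maps slot name -> restart_lsn; returns names exceeding the limit."""
    return sorted(
        name
        for name, restart_lsn in slots.items()
        if retained_wal_bytes(current_lsn, restart_lsn) > limit_bytes
    )


# Hypothetical values for illustration only.
current = "1/00000000"
slots = {
    "cdc_slot": "0/FFFFFF00",    # an active consumer, only slightly behind
    "stale_slot": "0/10000000",  # an abandoned consumer holding back WAL
}
ONE_GIB = 1024 ** 3

lagging = slots_over_limit(slots, current, ONE_GIB)
```

A slot that shows up here repeatedly usually belongs to a consumer that has stopped reading; dropping or repairing it lets PostgreSQL recycle the retained WAL files.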

Trigger-Based and Query-Based Alternatives

Trigger-based CDC creates audit tables but can impose 15–25% write performance penalties. Query-based CDC uses batch-oriented polling and is suitable only for small datasets where real-time requirements are less stringent.
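The trigger-based pattern can be sketched with Python's built-in sqlite3 module. SQLite is used here only because it runs without a server; PostgreSQL and MySQL trigger syntax differs (PostgreSQL, for example, requires a separate trigger function), and the table and trigger names are invented for the example. What carries over is the shape of the approach: every write to the source table causes an extra write to an audit table, which is where the performance penalty comes from.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE customers (id INTEGER PRIMARY KEY, email TEXT NOT NULL);

-- Audit table that accumulates one row per captured change.
CREATE TABLE customers_audit (
    audit_id    INTEGER PRIMARY KEY AUTOINCREMENT,
    operation   TEXT NOT NULL,
    customer_id INTEGER NOT NULL,
    old_email   TEXT,
    new_email   TEXT
);

CREATE TRIGGER customers_ins AFTER INSERT ON customers
BEGIN
    INSERT INTO customers_audit (operation, customer_id, old_email, new_email)
    VALUES ('INSERT', NEW.id, NULL, NEW.email);
END;

CREATE TRIGGER customers_upd AFTER UPDATE ON customers
BEGIN
    INSERT INTO customers_audit (operation, customer_id, old_email, new_email)
    VALUES ('UPDATE', NEW.id, OLD.email, NEW.email);
END;

CREATE TRIGGER customers_del AFTER DELETE ON customers
BEGIN
    INSERT INTO customers_audit (operation, customer_id, old_email, new_email)
    VALUES ('DELETE', OLD.id, OLD.email, NULL);
END;
""")

# Three ordinary writes; each one also fires a trigger.
conn.execute("INSERT INTO customers (id, email) VALUES (1, 'a@example.com')")
conn.execute("UPDATE customers SET email = 'b@example.com' WHERE id = 1")
conn.execute("DELETE FROM customers WHERE id = 1")

# A downstream consumer would read the audit table in order.
changes = conn.execute(
    "SELECT operation, customer_id, old_email, new_email "
    "FROM customers_audit ORDER BY audit_id"
).fetchall()
```

A downstream process would typically remember the last audit_id it consumed and poll for newer rows, which also means the audit table needs its own cleanup policy.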

Schema Conversion and Type Mapping

Automated tools like pgloader handle type transformations such as TINYINT(1) ➜ BOOLEAN and DATETIME ➜ TIMESTAMP WITH TIME ZONE. Complex scenarios require manual overrides for ENUMs, AUTO_INCREMENT fields, and spatial indexes.
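A small sketch of what such a mapping step might look like is shown below. It is not pgloader's implementation or configuration format; the function and dictionary names are hypothetical. It encodes only the two conversions mentioned above, passes other types through unchanged, and flags ENUM columns and AUTO_INCREMENT fields for manual review instead of guessing a target type.

```python
# Only the two conversions named in the text are encoded here.
DIRECT_MAPPINGS = {
    "TINYINT(1)": "BOOLEAN",
    "DATETIME": "TIMESTAMP WITH TIME ZONE",
}


def map_mysql_column(mysql_type: str, extra: str = "") -> dict:
    """Suggest a PostgreSQL type for a MySQL column definition.

    `extra` is assumed to hold column attributes such as 'auto_increment'.
    Returns {'pg_type': str or None, 'manual_review': bool}.
    """
    normalized = " ".join(mysql_type.upper().split())

    # ENUMs and AUTO_INCREMENT fields need a human decision
    # (for example, which enum type or identity strategy to use).
    if normalized.startswith("ENUM") or "AUTO_INCREMENT" in extra.upper():
        return {"pg_type": None, "manual_review": True}

    # Unknown types are passed through unchanged (an assumption of this sketch).
    return {
        "pg_type": DIRECT_MAPPINGS.get(normalized, normalized),
        "manual_review": False,
    }


flag_column = map_mysql_column("tinyint(1)")
created_at = map_mysql_column("datetime")
status = map_mysql_column("enum('new','paid')")
row_id = map_mysql_column("int", extra="auto_increment")
```

Note that mapping DATETIME to TIMESTAMP WITH TIME ZONE only works cleanly if you know which time zone the stored MySQL values were written in, since DATETIME itself carries no zone information.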

What Are the Current Cloud-Native Database Trends and Deployment Considerations?

Multi-Cloud and Hybrid Architecture Patterns

Open-source databases now form the backbone of enterprise data strategies. Managed services like Amazon Aurora Serverless (PostgreSQL/MySQL) and Azure SQL Serverless offer auto-scaling and auto-pausing capabilities.

Recent benchmarking of Amazon RDS for PostgreSQL with Dedicated Log Volumes demonstrates nearly 50% runtime reduction for transaction-heavy workloads, with 91.83% improvements in transactions per second and 95.56% latency decreases for optimized configurations.

AI-Integrated Database Capabilities

Vector databases like Milvus and Weaviate enable semantic search. PostgreSQL's pgvector extension and MySQL's native VECTOR data type position both databases for AI-driven applications, though PostgreSQL maintains advantages in extensibility and community support for AI workloads.

Specialized Database Evolution

Time-series databases (InfluxDB, TimescaleDB) dominate monitoring use cases, while NewSQL systems (CockroachDB, Google Spanner) combine ACID compliance with horizontal scalability.

Enterprise Deployment Considerations

Data mesh architectures encourage domain-oriented ownership. Kubernetes deployment patterns show strong momentum for PostgreSQL, with the CloudNativePG operator capturing 27.6% market share and demonstrating enterprise-grade capabilities including volume snapshot backup and recovery for databases up to 4.5 TB.

When Should You Choose PostgreSQL vs MySQL for Your Project?

When to choose PostgreSQL

- Complex applications requiring custom functions or operators

- Projects demanding strict adherence to SQL standards

- Applications benefiting from advanced data types

- AI and machine-learning workloads requiring vector processing capabilities

When to choose MySQL

- Web applications prioritizing performance and ease of use

- Applications requiring high availability through replication

- Projects with smaller budgets or limited resources

- Legacy system integration with existing LAMP stack tooling

What Are the Migration Strategies and Best Practices Between PostgreSQL and MySQL?

Migrating from MySQL to PostgreSQL

- Address syntax differences, data-type conversions, stored procedure translations.

- Use tools like Airbyte, AWS DMS, or pgloader for automated synchronization.

- Organizations typically migrate from MySQL to PostgreSQL to access advanced features, stricter SQL compliance, and complex data modeling capabilities.

Migrating from PostgreSQL to MySQL

- Handle incompatible data types and reduced functionality (e.g., advanced indexing).

- Use tools like Airbyte or Full Convert, supplemented by custom scripts.

- Migration from PostgreSQL to MySQL is less common and typically occurs for specific circumstances like ecosystem compatibility or operational familiarity.

Modern Migration Considerations

CDC-based synchronization, automated validation, and cloud-native migration services with schema-drift detection reduce risk and downtime.
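Automated validation can be as simple as comparing a row count and an order-independent checksum for each table on both sides of the migration. The sketch below is one possible approach, not the method any particular migration service uses. It assumes rows have already been fetched as tuples and that values render to the same text on both systems, which in practice requires normalizing things like timestamps and decimals first.

```python
import hashlib


def row_hash(row) -> int:
    """Hash one row to a 64-bit integer after normalizing values to text."""
    # NULL gets its own marker so it stays distinct from an empty string.
    encoded = "\x1f".join("\\N" if value is None else str(value) for value in row)
    digest = hashlib.sha256(encoded.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big")


def table_fingerprint(rows) -> tuple:
    """Return (row_count, checksum); the result does not depend on row order."""
    count = 0
    total = 0
    for row in rows:
        count += 1
        total = (total + row_hash(row)) % (1 << 64)
    return count, total


# Hypothetical query results from the source and target databases.
source_rows = [(1, "a@example.com", None), (2, "b@example.com", "x")]
target_rows = [(2, "b@example.com", "x"), (1, "a@example.com", None)]
drifted_rows = [(1, "a@example.com", None), (2, "b@example.com", "y")]

in_sync = table_fingerprint(source_rows) == table_fingerprint(target_rows)
has_drift = table_fingerprint(source_rows) != table_fingerprint(drifted_rows)
```

Summing the per-row hashes means the two databases do not have to return rows in the same order, which avoids an expensive sort on large tables.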

How Does Airbyte Enhance PostgreSQL and MySQL Data Integration?

Advanced Change Data Capture Architecture

Airbyte's redesigned CDC implementation changes how organizations integrate PostgreSQL and MySQL databases into their data ecosystems, making replication more resilient to schema modifications and large data volumes.

Enterprise-Grade Security and Compliance

- SOC 2 Type II-certified protocols with TLS encryption, SSH tunneling, and audit logging with extended retention.

- Field-level hashing that anonymizes sensitive database columns during synchronization while maintaining referential integrity for GDPR and HIPAA compliance.
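To show why hashing can preserve referential integrity, here is a minimal sketch using a keyed hash (HMAC-SHA256) from Python's standard library. This is an illustration of the general technique, not Airbyte's implementation; the function names, field names, and key are all hypothetical. Because the same input and key always produce the same output, a hashed value in one table still matches the hashed value in another, so joins keep working on the masked data.

```python
import hashlib
import hmac


def hash_field(value: str, secret_key: bytes) -> str:
    """Deterministically mask a value with HMAC-SHA256 (hex encoded)."""
    return hmac.new(secret_key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def mask_records(records, sensitive_fields, secret_key: bytes) -> list:
    """Return copies of the records with the named fields hashed."""
    masked = []
    for record in records:
        copy = dict(record)  # leave the caller's data untouched
        for name in sensitive_fields:
            if copy.get(name) is not None:
                copy[name] = hash_field(str(copy[name]), secret_key)
        masked.append(copy)
    return masked


# Hypothetical key and data; a real key would come from a secrets manager.
SECRET_KEY = b"demo-key-not-for-production"
customers = [{"email": "ada@example.com", "plan": "pro"}]
orders = [{"order_id": 10, "customer_email": "ada@example.com"}]

masked_customers = mask_records(customers, ["email"], SECRET_KEY)
masked_orders = mask_records(orders, ["customer_email"], SECRET_KEY)

# The masked values still line up, so the two tables remain joinable.
still_joinable = masked_customers[0]["email"] == masked_orders[0]["customer_email"]
```

Using a keyed hash rather than a plain one makes it harder to recover values by hashing a list of likely inputs, as long as the key itself is kept out of the destination system.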

Platform Performance Optimization

- Automatic management of WAL retention configurations to prevent full refresh triggers during high-volume operations.

- Support for replica source configurations through logical replication slots, enabling read scaling while reducing production impact.

Operational Management and Monitoring

- Connection dashboard with latency histograms, volume trends, and error hotspot visualizations.

Conclusion

PostgreSQL and MySQL remain two highly efficient relational database management systems, each serving distinct use cases in the modern data landscape. PostgreSQL excels in complex analytical workloads, AI-driven applications, and scenarios requiring advanced extensibility, while MySQL continues to shine in high-performance web applications prioritizing simplicity and speed.

The market trends clearly favor PostgreSQL, with developer adoption reaching 45% compared to MySQL's 41% in 2023, reflecting the growing demand for advanced database features and sophisticated data processing capabilities. Performance benchmarking consistently shows PostgreSQL's advantages in mixed workloads and write-intensive scenarios, while MySQL maintains competitiveness in specific read-heavy applications.

Ongoing innovations—such as PostgreSQL 17's revolutionary vacuum improvements and streaming I/O capabilities, alongside MySQL's AI-ready features including native VECTOR data types—combined with modern data integration platforms like Airbyte, enable organizations to leverage the strengths of both systems while overcoming traditional challenges in data synchronization and schema management.

Every new release narrows the gap between these systems, making the final choice increasingly dependent on specific project requirements, existing infrastructure, and the broader data ecosystem rather than on fundamental technical limitations.

Frequently Asked Questions (FAQ)

Is PostgreSQL better than MySQL for enterprise applications?

PostgreSQL is often preferred for enterprise-grade applications because of its advanced SQL compliance, extensibility, and support for complex data types. MySQL is still widely used at scale, but enterprises handling analytical, AI, or highly concurrent workloads often lean toward PostgreSQL.

Which database is faster: PostgreSQL or MySQL?

It depends on the workload. MySQL generally performs better for simple, read-heavy queries, especially in web applications. PostgreSQL tends to outperform MySQL in write-intensive and analytical workloads where advanced query optimization and concurrency matter.

Can PostgreSQL and MySQL be used together?

Yes. Many organizations run both databases depending on use cases. PostgreSQL might handle analytics and complex modeling, while MySQL powers high-traffic websites. With modern CDC tools like Airbyte, it's possible to keep both synchronized in real time.

Is PostgreSQL harder to learn than MySQL?

MySQL is often considered easier to get started with due to its simplicity and widespread community resources. PostgreSQL has a steeper learning curve but offers greater flexibility and long-term scalability.
