What’s the Best Way to Handle Data Deduplication in ETL?

✨ AI Generated Summary

Data deduplication in ETL pipelines is essential to maintain data quality by removing duplicate records that cause inflated storage, inaccurate analytics, and slower processing. Common causes include multiple data sources, improper joins, faulty extraction logic, and inconsistent data entry.

- Deduplication should be applied at extraction, transformation, and loading stages using unique identifiers, SQL techniques, database constraints, or third-party ETL tools like Airbyte.

- Best practices include ensuring data consistency, using incremental loads, automating deduplication, and regularly monitoring data quality.

- Tools such as Airbyte, SQL queries, data cleansing platforms (Talend, Informatica), and data quality monitors (Great Expectations) help streamline and automate deduplication processes.

In any data pipeline, data quality is paramount, and one of the biggest hurdles in ensuring data quality is deduplication. Duplicate data can cause significant issues, including inaccurate reporting, inflated storage costs, and delays in decision-making.

Whether they come from multiple data sources, system glitches, or improper data extraction logic, duplicates in your ETL (Extract, Transform, Load) pipeline have to be dealt with.

But how do you handle data deduplication effectively? The process of removing duplicate records might seem straightforward at first, but it’s full of potential pitfalls, especially as the complexity of your data pipeline grows.

In this post, we’ll explore the best ways to handle data deduplication in ETL, from the causes of duplicate data to the most efficient strategies for eliminating it.

By the end, you’ll understand how to implement robust deduplication processes in your ETL pipeline, ensuring cleaner data and more reliable insights.

What is Data Deduplication in ETL?

Data deduplication in ETL refers to the process of identifying and removing duplicate records from your datasets at various stages of the pipeline, whether during extraction, transformation, or loading.

It’s about ensuring that the data flowing through your pipeline is unique and accurate, which is critical for high-quality analytics and decision-making.

In an ideal world, data would come in perfectly clean, but we all know that’s rarely the case. Whether you’re aggregating data from multiple sources or transforming it along the way, duplicates often creep in, leading to:

- Inflated storage: Duplicates take up more space than necessary, driving up costs.

- Inaccurate analytics: Duplicates distort metrics and insights, making reports unreliable.

- Slower processing times: Redundant data can bog down the entire pipeline, increasing processing time and reducing efficiency.

By implementing a solid deduplication strategy, you can address these issues, ensure more accurate results, and improve the overall performance of your ETL pipeline.

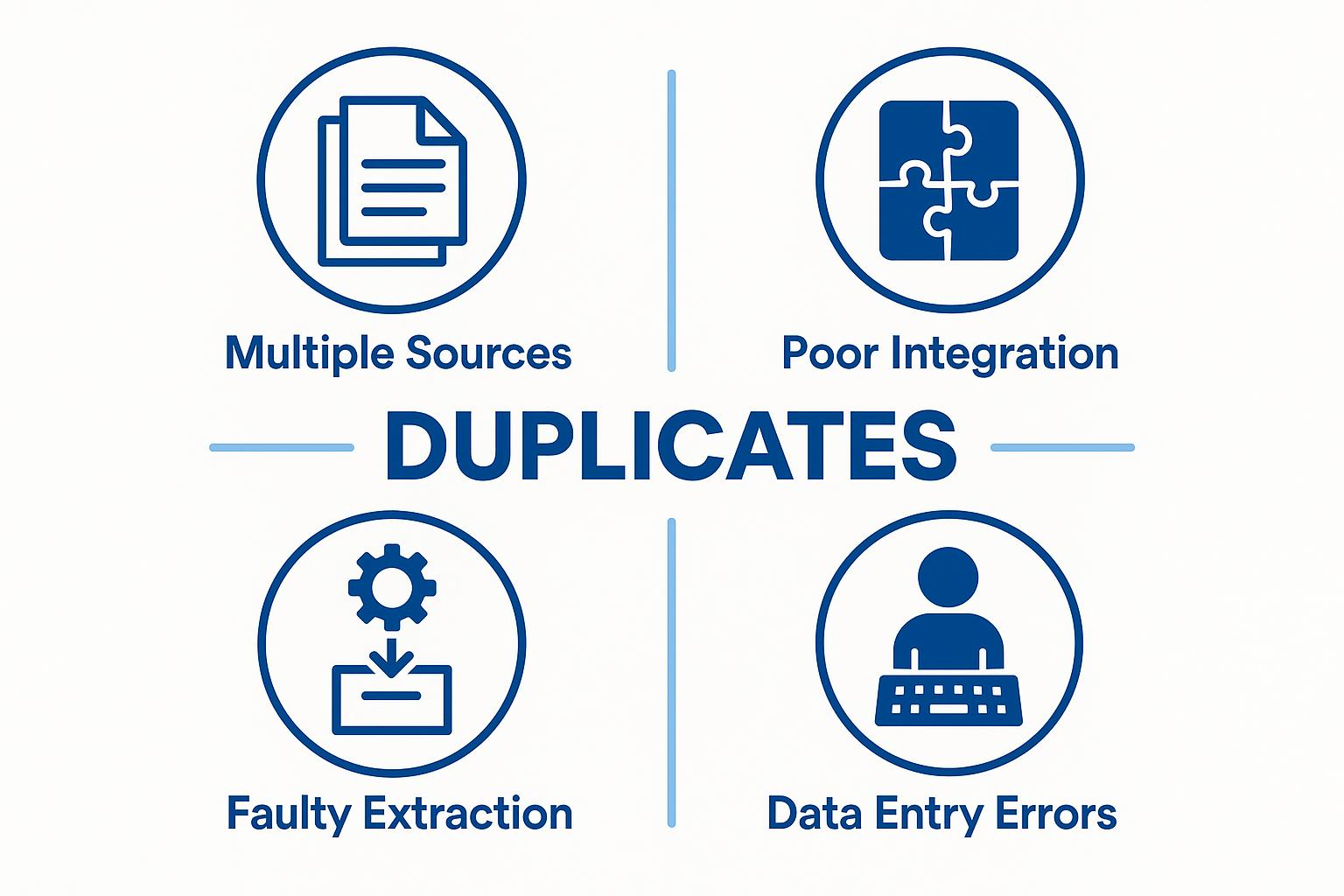

Common Causes of Duplicate Data in ETL Pipelines

Before we dive into the best methods of deduplication, it’s important to understand where duplicates come from. Identifying the root cause of duplicate data is the first step in creating a solution. Here are some common causes of duplicate data in ETL pipelines:

1. Data Source Redundancies

Cause: Multiple sources providing the same data.

In many cases, data comes from multiple systems or sources that contain similar or even identical records. For example, customer data may exist in both a CRM system and a marketing platform, and without proper management, both could feed into your ETL pipeline.

Tip: Make sure to track unique identifiers across your data sources, and establish data integration processes that identify overlaps before they enter the pipeline.

2. Improper Data Integration

Cause: Joining data from different sources without proper handling.

When merging data from different platforms, improper joins or aggregations can cause duplication. This is especially common in cases where datasets don’t align perfectly, leading to multiple records for the same entity.

Tip: Always verify your joins in transformation logic and test them with sample data to ensure no duplicates are introduced during integration.
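As a rough illustration of that kind of join test, the sketch below uses Python's built-in sqlite3 module to compare row counts before and after a join. The `customers` and `contacts` tables and their columns are hypothetical sample data, not part of any real schema.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (customer_id INTEGER, name TEXT);
    CREATE TABLE contacts (customer_id INTEGER, email TEXT);
    INSERT INTO customers VALUES (1, 'Ada'), (2, 'Grace');
    INSERT INTO contacts VALUES
        (1, 'ada@example.com'),
        (1, 'ada@work.example'),
        (2, 'grace@example.com');
""")

# Customer 1 has two contact rows, so the join repeats that customer.
JOIN_SQL = """
    FROM customers AS c
    JOIN contacts AS k ON k.customer_id = c.customer_id
"""

source_rows = conn.execute("SELECT COUNT(*) FROM customers").fetchone()[0]
joined_rows = conn.execute("SELECT COUNT(*)" + JOIN_SQL).fetchone()[0]
joined_customers = conn.execute(
    "SELECT COUNT(DISTINCT c.customer_id)" + JOIN_SQL
).fetchone()[0]

# More joined rows than distinct customers means the join multiplied entities.
join_fans_out = joined_rows > joined_customers
print(source_rows, joined_rows, joined_customers, join_fans_out)
```

A check like this can run against sample data in a test suite, so that a change in join logic that starts multiplying rows is noticed before it reaches production.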

3. Faulty Data Extraction Logic

Cause: Re-running extraction jobs or improper filtering.

In some cases, a faulty extraction process can result in the same data being pulled more than once. For example, if you rerun extraction jobs without resetting the state or properly filtering out previously extracted records, you can easily end up with duplicates.

Tip: Ensure proper state management in your extraction jobs, and if possible, implement incremental extraction where only new or updated records are pulled.
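Here is a minimal sketch of what state management for an extraction job might look like, assuming the source exposes an `updated_at` field in a sortable ISO-8601 format. The `SOURCE` list, the state file name, and both helper functions are hypothetical; a real job would read from an API or database and store its state somewhere durable.

```python
import json
import tempfile
from pathlib import Path

# Hypothetical source rows standing in for an API or database table.
SOURCE = [
    {"id": 1, "updated_at": "2024-01-01T00:00:00"},
    {"id": 2, "updated_at": "2024-01-02T00:00:00"},
    {"id": 3, "updated_at": "2024-01-03T00:00:00"},
]

def load_cursor(state_file):
    """Return the last saved cursor, or None on the first run."""
    if state_file.exists():
        return json.loads(state_file.read_text())["cursor"]
    return None

def extract_incremental(rows, state_file):
    """Return only rows newer than the saved cursor, then advance the cursor."""
    cursor = load_cursor(state_file)
    # ISO-8601 strings in one consistent format compare correctly as strings.
    new_rows = [r for r in rows if cursor is None or r["updated_at"] > cursor]
    if new_rows:
        latest = max(r["updated_at"] for r in new_rows)
        state_file.write_text(json.dumps({"cursor": latest}))
    return new_rows

state_file = Path(tempfile.mkdtemp()) / "extract_state.json"
first_run = extract_incremental(SOURCE, state_file)
rerun = extract_incremental(SOURCE, state_file)  # same data, nothing new
SOURCE.append({"id": 4, "updated_at": "2024-01-04T00:00:00"})
third_run = extract_incremental(SOURCE, state_file)
```

Because the cursor is persisted, rerunning the job returns nothing new instead of pulling the same rows again. One trade-off of the strict `>` comparison is that a record sharing the cursor's exact timestamp but arriving later would be missed; using `>=` avoids that at the cost of re-pulling some rows, which then need deduplicating downstream.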

4. Inconsistent Data Entry or Updates

Cause: Human error or system failures.

Sometimes, data entry errors or system bugs can create duplicate records. For example, a user might mistakenly submit the same data multiple times, or an integration may fail to recognize that a record already exists.

Tip: Implement validation rules in both the source systems and the ETL pipeline to catch duplicate entries before they reach your database.

Key Methods of Data Deduplication in ETL

Once you’ve identified the sources of duplicate data, the next step is to implement strategies to eliminate them.

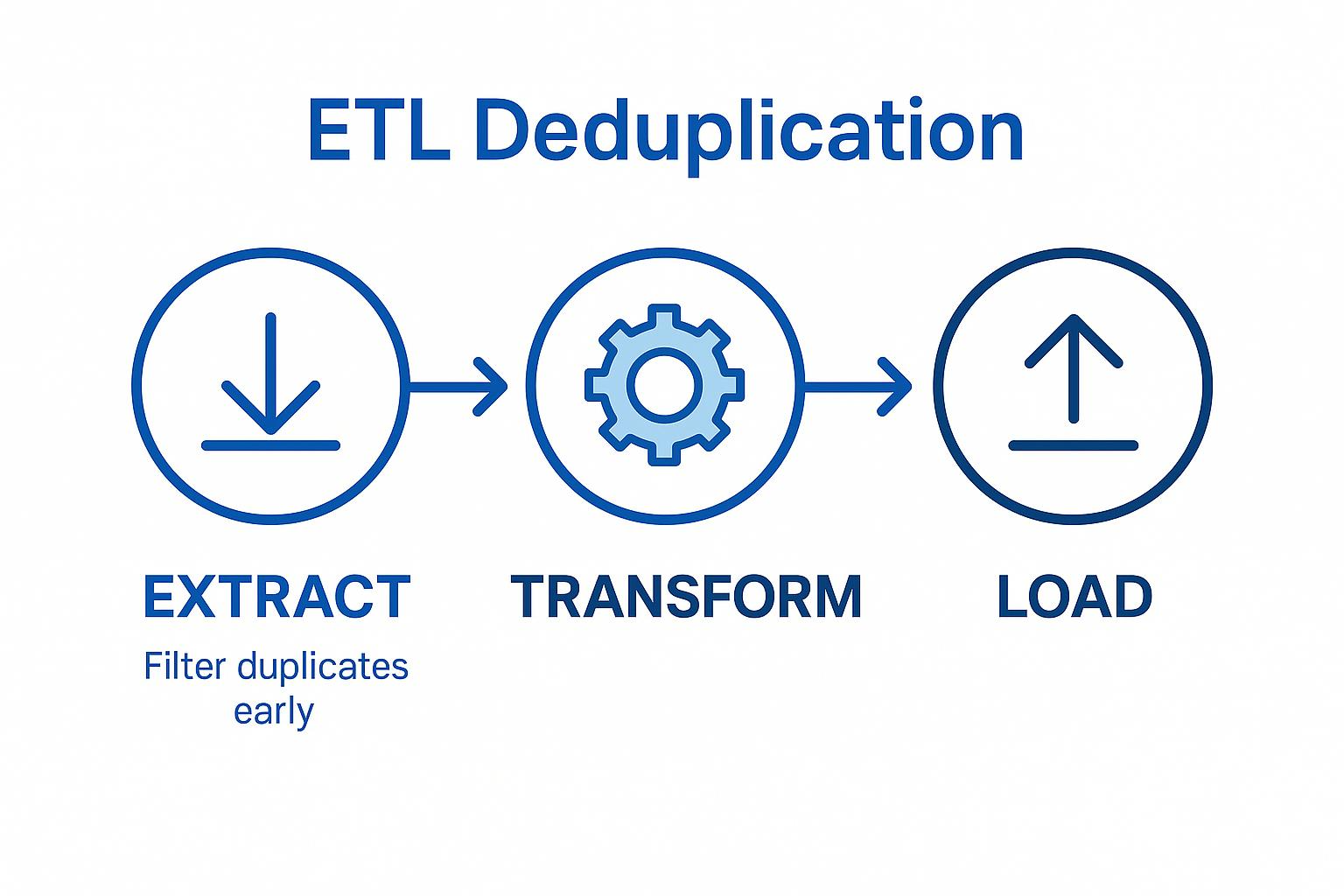

There are several methods for handling data deduplication during different stages of the ETL pipeline: extraction, transformation, and loading. Here’s a breakdown of the most effective techniques for each stage.

1. During Extraction

Method: Filter and identify duplicates at the source.

The ideal time to handle deduplication is as early as possible. By filtering out duplicate data during the extraction phase, you can prevent redundant records from entering the pipeline. This can be done by identifying unique identifiers in the source data or checking for duplicates within the extraction logic itself.

How to Check:

- Use unique identifiers (e.g., customer IDs or transaction IDs) in the source data to filter out duplicates before pulling data.

- Run sample queries on the source data to identify common duplicate patterns.

Tip: Whenever possible, leverage incremental extraction to only pull new or changed records, reducing the chance of pulling duplicate data.
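The sample-query idea can be sketched as follows, again with Python's sqlite3 standing in for the source system. The `transactions` table and its `transaction_id` column are hypothetical names chosen for illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE transactions (transaction_id TEXT, amount REAL);
    INSERT INTO transactions VALUES
        ('t-1', 10.0), ('t-2', 25.0), ('t-1', 10.0), ('t-3', 5.0), ('t-1', 10.0);
""")

# Profile the source: which identifiers appear more than once, and how often?
duplicate_keys = conn.execute("""
    SELECT transaction_id, COUNT(*) AS copies
    FROM transactions
    GROUP BY transaction_id
    HAVING COUNT(*) > 1
    ORDER BY copies DESC
""").fetchall()

# Pull each identifier only once when extracting.
unique_ids = [
    row[0]
    for row in conn.execute(
        "SELECT DISTINCT transaction_id FROM transactions ORDER BY transaction_id"
    )
]
```

Running the profiling query first shows whether duplicates are a handful of outliers or a systematic pattern, which helps decide how much filtering the extraction query needs.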

2. During Transformation

Method: Remove duplicates using transformation logic (SQL, scripts, etc.).

If duplicates make it through the extraction phase, the next line of defense is the transformation phase. In this stage, you can use SQL queries or custom scripts to identify and remove duplicates based on unique fields.

Common techniques include using DISTINCT, GROUP BY, or window functions like ROW_NUMBER() to filter out redundant data.

How to Check:

- Use SQL queries such as GROUP BY or DISTINCT to aggregate and filter duplicate records.

- In programming languages like Python, use data processing libraries (e.g., pandas) to identify duplicates based on a key column.

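Tip: Consider using hashing or window functions to detect and remove duplicates more efficiently, especially in large datasets. Hashing reduces a multi-column comparison to a single fixed-size value per row, and window functions let you rank the rows within each key and keep only one, which is particularly useful after complex transformations involving joins or aggregations.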
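One way to apply the hashing idea in a custom Python transformation is sketched below. The helper names, the normalization rules (trim and lowercase), and the `|` separator are all assumptions made for this example; choose rules that match how your own data should be compared.

```python
import hashlib

def row_fingerprint(row, key_fields):
    """Hash the normalized values of the chosen fields into one fixed-size key."""
    # Assumes values never contain "|"; pick a safer separator if they might.
    normalized = "|".join(str(row[f]).strip().lower() for f in key_fields)
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()

def dedupe_by_hash(rows, key_fields):
    """Keep the first row seen for each fingerprint, preserving input order."""
    seen = set()
    unique = []
    for row in rows:
        fingerprint = row_fingerprint(row, key_fields)
        if fingerprint not in seen:
            seen.add(fingerprint)
            unique.append(row)
    return unique

rows = [
    {"email": "ada@example.com", "name": "Ada"},
    {"email": " ADA@example.com ", "name": "ada"},
    {"email": "grace@example.com", "name": "Grace"},
]
deduped = dedupe_by_hash(rows, ["email", "name"])
```

The second row differs from the first only in case and whitespace, so it produces the same fingerprint and is dropped. Storing the fingerprint as a column also lets later runs compare one value per row instead of every field.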

3. During Loading

Method: Prevent duplicates by using constraints and validation.

Once your data is transformed and ready to be loaded, ensuring that duplicates don’t make their way into the final destination is key. You can use primary keys, unique constraints, or upsert operations (insert or update) in your database to prevent duplicate entries from being inserted into the destination system.

How to Check:

- Ensure that the destination table or database enforces primary keys or unique constraints on key fields to avoid duplicates.

- Use upsert operations to either insert new records or update existing ones based on unique identifiers.

Tip: In addition to using database constraints, implement deduplication checks just before loading data. For instance, use batch processing to validate whether incoming records already exist in the target system before inserting them.
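To make the constraint-plus-upsert approach concrete, here is a small sketch using sqlite3. The `customers` table is hypothetical, and the `ON CONFLICT ... DO UPDATE` syntax shown is SQLite's (available in SQLite 3.24 and later); other databases offer equivalents such as `MERGE` with different syntax.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("""
    CREATE TABLE customers (
        customer_id INTEGER PRIMARY KEY,
        email TEXT NOT NULL
    )
""")

def upsert_customers(rows):
    """Insert new customers, or update the email when the ID already exists."""
    conn.executemany("""
        INSERT INTO customers (customer_id, email) VALUES (?, ?)
        ON CONFLICT(customer_id) DO UPDATE SET email = excluded.email
    """, rows)
    conn.commit()

upsert_customers([(1, "ada@example.com"), (2, "grace@example.com")])
# The second batch overlaps the first on customer_id 1.
upsert_customers([(1, "ada@new.example"), (3, "linus@example.com")])

final_rows = conn.execute(
    "SELECT customer_id, email FROM customers ORDER BY customer_id"
).fetchall()

# A plain INSERT of an existing key is rejected by the primary key constraint.
try:
    conn.execute("INSERT INTO customers VALUES (1, 'dup@example.com')")
    constraint_blocked = False
except sqlite3.IntegrityError:
    constraint_blocked = True
```

The constraint acts as a safety net and the upsert makes the load idempotent: running the same batch twice leaves the table in the same state.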

4. Using Third-Party Deduplication Tools

Method: Leverage ETL tools with built-in deduplication features.

Many modern ETL tools, including Airbyte, offer features that automate deduplication during data ingestion and transformation.

These tools can handle common data quality issues, including deduplication, without requiring complex custom logic. For instance, some tools have built-in change data capture (CDC) features, which can prevent the reloading of already ingested data.

How to Check:

- Use the tool's native deduplication capabilities and verify that it’s configured correctly (e.g., setting up CDC, defining unique keys).

- Test the data flow to ensure that duplicate records are being identified and removed during the process.

Tip: If using an ETL platform like Airbyte, take advantage of its pre-built connectors that handle deduplication automatically for certain data sources. This can save you time and effort, especially with complex integrations.

Best Practices for Efficient Data Deduplication

Handling deduplication effectively in your ETL pipeline requires more than just implementing the right techniques—it’s about creating a process that’s scalable, efficient, and automated.

Here are some best practices to ensure your data deduplication process is as effective as possible:

1. Ensure Data Consistency Across Sources

Best Practice: Maintain unique identifiers for all records.

When dealing with data from multiple sources, consistency is key. Use unique identifiers (such as customer IDs, transaction IDs, or product SKUs) across your systems to track records reliably and prevent duplication. This will make it easier to identify duplicates during the extraction and transformation phases.

Tip: Ensure that every data source has a consistent structure for unique identifiers. If this is not possible, consider creating a canonical ID system that maps records from multiple systems to a single, unified identifier.
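A canonical ID system can be as simple as a lookup table keyed by source system and source ID. The sketch below is only an illustration: the `CROSSWALK` mapping, the system names, and the record layout are hypothetical, and in practice the mapping would usually live in a database table maintained by a matching process.

```python
# Hypothetical crosswalk from (source system, source ID) to a canonical ID.
CROSSWALK = {
    ("crm", "C-100"): "cust-1",
    ("marketing", "mk_9f2"): "cust-1",
    ("crm", "C-101"): "cust-2",
}

def to_canonical(records):
    """Merge records from several systems under one canonical ID each."""
    merged = {}
    for rec in records:
        canonical_id = CROSSWALK.get((rec["source"], rec["source_id"]))
        if canonical_id is None:
            # Unmapped records keep a source-qualified key so they are not lost.
            canonical_id = f'{rec["source"]}:{rec["source_id"]}'
        # Later records overwrite earlier ones on fields they share.
        merged.setdefault(canonical_id, {}).update(rec["fields"])
    return merged

records = [
    {"source": "crm", "source_id": "C-100", "fields": {"name": "Ada Lovelace"}},
    {"source": "marketing", "source_id": "mk_9f2", "fields": {"email": "ada@example.com"}},
    {"source": "crm", "source_id": "C-101", "fields": {"name": "Grace Hopper"}},
    {"source": "billing", "source_id": "B-7", "fields": {"name": "Linus"}},
]
customers = to_canonical(records)
```

Here the CRM and marketing records for the same person collapse into a single `cust-1` entry, while the unmapped billing record is kept under its own key until someone maps it.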

2. Use Incremental Loads

Best Practice: Avoid full data refreshes when possible.

Full data reloads can reintroduce duplicates when the reloaded rows are appended to a destination that already holds them, and they get slower as datasets grow. Instead, use incremental loads to only pull new or updated records since the last load. This reduces the chance of pulling duplicate records and optimizes processing time.

Tip: Set up timestamp-based or change-tracking mechanisms in your source systems to easily identify new or modified records, making incremental loads more efficient.

3. Automate Data Deduplication Processes

Best Practice: Implement automated deduplication in your ETL pipeline.

Manual deduplication is error-prone and time-consuming. Automating the deduplication process ensures that it’s done consistently every time the pipeline runs. Use built-in features of ETL tools like Airbyte, or set up scheduled deduplication checks using scripts or SQL.

Tip: Automate deduplication at multiple points in your ETL pipeline—during extraction, transformation, and loading—so that duplicates are caught early and don’t make it through to the final destination.

4. Monitor and Audit Data for Duplicates Regularly

Best Practice: Conduct routine data audits to ensure no duplicates slip through.

Even with automated processes in place, it’s important to regularly monitor and audit the data to ensure the deduplication system is working effectively. This will help you catch any edge cases or gaps in the deduplication process before they become significant issues.

Tip: Set up automated alerts that notify your team whenever a deduplication failure occurs, so that it can be addressed immediately. Regular audits will also help improve the deduplication process over time.
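An automated duplicate check does not need much machinery. The following sketch counts surplus rows per key and logs a warning when they exceed a threshold; the function name, the `max_duplicate_ratio` parameter, and the logger name are assumptions for this example, and the warning could be wired to whatever alerting channel your team uses.

```python
import logging
from collections import Counter

logger = logging.getLogger("dedup_audit")

def audit_duplicates(rows, key, max_duplicate_ratio=0.0):
    """Count surplus rows per key and warn when the ratio exceeds the threshold."""
    counts = Counter(row[key] for row in rows)
    # For a key seen n times, n - 1 rows are surplus copies.
    duplicate_rows = sum(n - 1 for n in counts.values())
    ratio = duplicate_rows / len(rows) if rows else 0.0
    passed = ratio <= max_duplicate_ratio
    if not passed:
        logger.warning(
            "Duplicate check failed on %r: %d surplus rows (%.1f%%)",
            key, duplicate_rows, ratio * 100,
        )
    return {"duplicate_rows": duplicate_rows, "ratio": ratio, "passed": passed}

loaded = [{"order_id": 1}, {"order_id": 2}, {"order_id": 2}, {"order_id": 3}]
failing_report = audit_duplicates(loaded, "order_id")
clean_report = audit_duplicates(loaded[:2], "order_id")
```

Returning a report instead of raising lets the caller decide whether a failed check should stop the pipeline or only notify someone.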

5. Combine Deduplication with Data Quality Monitoring

Best Practice: Use deduplication as part of a broader data quality strategy.

Deduplication is just one aspect of ensuring high-quality data. Make it part of an ongoing data quality monitoring system that also checks for other issues like missing values, incorrect formats, and outliers. By adopting a comprehensive approach to data quality, you can avoid duplicates as well as other data integrity issues.

Tip: Consider integrating data quality tools like Great Expectations or Talend into your pipeline to automatically check for issues like duplicates, inconsistencies, and missing values, and to fix them where the tool supports it.

Tools and Technologies to Help with Deduplication in ETL

While manual methods and custom scripts can help with deduplication, using the right tools and technologies can simplify the process and enhance its efficiency.

Here are some of the best tools and platforms that can assist you with data deduplication during ETL:

1. ETL Platforms (e.g., Airbyte)

Why Use Them: Many modern ETL platforms, like Airbyte, offer built-in features that handle data deduplication automatically. These platforms come with pre-built connectors, change data capture (CDC), and data integration tools that automatically detect and remove duplicates as data is ingested and processed.

Tip: When choosing an ETL platform, look for one with built-in data quality and deduplication features that suit your data volume and integration needs. Airbyte’s open-source connectors can help you streamline data flows and eliminate duplicates with minimal setup.

2. SQL-based Deduplication Techniques

Why Use Them: SQL queries are a powerful tool for detecting and removing duplicates. Common techniques such as DISTINCT, GROUP BY, ROW_NUMBER(), and self-joins can be used to identify duplicate records and ensure that only unique data gets loaded into your data warehouse.

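Tip: In SQL, use a window function such as ROW_NUMBER() to number the rows in each partition and keep only the first row per key, based on your chosen ordering. RANK() works similarly but gives tied rows the same rank, so it can keep more than one row when duplicates are identical on the ordering column. This is especially useful for large datasets with multiple identical records.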
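A minimal sketch of the ROW_NUMBER() pattern, run through Python's sqlite3 module, might look like this. The `customer_updates` table is hypothetical, and window functions require SQLite 3.25 or later, which recent Python builds typically bundle.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customer_updates (customer_id INTEGER, email TEXT, updated_at TEXT);
    INSERT INTO customer_updates VALUES
        (1, 'ada@old.example',   '2024-01-01'),
        (1, 'ada@new.example',   '2024-03-01'),
        (2, 'grace@example.com', '2024-02-01'),
        (2, 'grace@example.com', '2024-02-01');
""")

# Number the rows per customer, newest first, and keep only row 1 of each group.
latest_rows = conn.execute("""
    SELECT customer_id, email, updated_at
    FROM (
        SELECT *,
               ROW_NUMBER() OVER (
                   PARTITION BY customer_id
                   ORDER BY updated_at DESC
               ) AS rn
        FROM customer_updates
    )
    WHERE rn = 1
    ORDER BY customer_id
""").fetchall()
```

Customer 2 has two fully identical rows. ROW_NUMBER() still numbers them 1 and 2, so exactly one survives; filtering on RANK() = 1 would have kept both.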

3. Data Cleansing Tools (e.g., Talend, Informatica)

Why Use Them: Dedicated data cleansing tools are designed specifically to improve data quality. They often include features for detecting and removing duplicates, normalizing data, and ensuring consistency across datasets. These tools can be easily integrated into your ETL pipeline to automate the deduplication process.

Tip: Look for tools like Talend or Informatica that offer deduplication components as part of their ETL or data integration functionality. These platforms can integrate with databases and other sources to clean up data before it even enters the pipeline.

4. Data Integration Libraries (e.g., pandas, PySpark)

Why Use Them: If you're handling deduplication within custom ETL pipelines using programming languages like Python, pandas or PySpark are great libraries for data manipulation. They provide functions to identify duplicates based on specific conditions and help you automate data cleansing in your transformations.

Tip: With pandas in Python, use the .drop_duplicates() method to easily remove duplicates from your DataFrame. For larger datasets, consider using PySpark for distributed processing to handle deduplication efficiently.
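As a short illustration of the pandas approach, the sketch below flags duplicates with `duplicated()` and then keeps the most recent row per key with `drop_duplicates()`. The column names and sample values are made up for the example.

```python
import pandas as pd

df = pd.DataFrame({
    "customer_id": [1, 1, 2, 2],
    "email": [
        "ada@old.example",
        "ada@new.example",
        "grace@example.com",
        "grace@example.com",
    ],
    "updated_at": pd.to_datetime(
        ["2024-01-01", "2024-03-01", "2024-02-01", "2024-02-01"]
    ),
})

# Flag every row whose customer_id appears more than once (useful for review).
duplicate_mask = df.duplicated(subset=["customer_id"], keep=False)

# Sort so the newest row per customer comes last, then keep only that row.
latest = (
    df.sort_values("updated_at")
      .drop_duplicates(subset=["customer_id"], keep="last")
      .sort_values("customer_id")
      .reset_index(drop=True)
)
```

Without `subset`, `drop_duplicates()` only removes rows that match on every column, which would leave both versions of customer 1 in place. PySpark DataFrames offer a comparable `dropDuplicates()` method for datasets too large for a single machine.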

5. Data Quality Monitoring Tools

Why Use Them: These tools go beyond simple deduplication—they monitor your data pipeline in real-time, checking for data quality issues, including duplicates. Tools like Great Expectations and Datafold allow you to define expectations and rules to ensure data is clean before it moves through the pipeline.

Tip: Implement Great Expectations in your ETL pipeline to automatically check for duplicates, missing values, or incorrect formats as part of your data quality framework.

Conclusion

Data deduplication is a critical component of maintaining high-quality ETL pipelines. Duplicates in your data can lead to inefficiencies, inaccurate insights, and higher storage costs.

By understanding the common causes of duplicates and applying effective strategies—whether during extraction, transformation, or loading—you can ensure that your data pipeline remains clean and reliable.

The methods and best practices shared in this post will help you streamline the deduplication process, and the right tools and technologies can automate and optimize these tasks, saving you time and reducing errors.

Regular audits and automated monitoring are essential to keeping your pipeline free of duplicate data.

If you’re looking for an ETL platform that simplifies data deduplication and improves your pipeline’s efficiency, Airbyte is the solution you need. With over 600 pre-built connectors, robust support for change data capture (CDC), and features that automate data ingestion and transformation, Airbyte ensures your data is accurate and up to date, with no duplicates allowed.

Start optimizing your ETL pipeline with Airbyte today and experience seamless, deduplicated data integration. Learn more about Airbyte.

Frequently Asked Questions

What is the difference between data deduplication and data cleansing?

Data deduplication focuses specifically on removing duplicate records from your datasets, while data cleansing is a broader process that also addresses issues like missing values, formatting inconsistencies, and invalid data. Deduplication is often considered a subset of data cleansing.

At which stage of the ETL pipeline should deduplication happen?

Ideally, deduplication should occur as early as possible—during the extraction phase. This prevents duplicates from flowing downstream. However, effective pipelines include multiple safeguards, applying deduplication checks during extraction, transformation, and loading to catch anything that slips through.

What are common causes of duplicate data in ETL pipelines?

Duplicates often arise from multiple overlapping data sources, improper joins during data integration, faulty extraction logic (such as rerunning jobs without state management), or inconsistent data entry. Identifying the root cause helps you design more reliable deduplication strategies.

How can SQL help with deduplication?

SQL offers several techniques for deduplication, including DISTINCT, GROUP BY, and window functions like ROW_NUMBER() or RANK(). These queries help identify and filter out duplicate records based on defined key columns or unique identifiers.

Why is incremental loading important for avoiding duplicates?

Incremental loading ensures that only new or updated records are extracted from the source system, instead of reloading entire datasets. This reduces the likelihood of introducing duplicates and improves pipeline efficiency.
