Agent Engine Public Beta is now live!

Learn how to easily export Postgres data to CSV, JSON, Parquet, and Avro file formats stored in AWS S3.

Summarize this article with:

Transactional databases like Postgres are an excellent choice for OLTP workloads. Still, sometimes you need to serialize your business data into a different format, like an apache Parquet column-oriented format that is better suited for analytical workloads.

Then you can easily run analytics jobs on your S3 data lake. Exporting your Postgres data to file formats like Parquet, Avro, JSON, CSV, etc., can empower Data Analysts and Data Scientists to work on your operational data without impacting production workloads. It’s common to export data from Postgres to S3 to efficiently store big data, convert data to “self-describing” file formats and run analytical workloads.

Store big data efficiently

Big data file formats can reduce file sizes significantly using data compression techniques, making it possible to store more data using less space. In some cases, you may want to offload historical data that is not queries anymore from your production database to a data lake built on S3.

Run fast analytics workloads

You can run analytical workloads faster on column-based file formats like Parquet.

Airbyte is a data integration platform with open-source connectors for the most popular databases, data warehouses and data lake storages. In this tutorial, you will learn easily set up a data pipeline from Postgres to AWS S3 using different file formats like Avro, CSV, JSON and Parquet with Airbyte.

You can skip this step if you already have a PostgreSQL. For this tutorial, you will use Heroku's managed PostgreSQL add-on to create a database host.

You first need to create a new Heroku app to host a new database on Heroku. Login to your Heroku dashboard and choose to create a new app. Also, you can change the Region to have the app's servers closer to your location.

To add a PostgreSQL database to your app, install Heroku’s Postgres Add-on. Go to Resource and look for the Heroku PostgreSQL add-on.

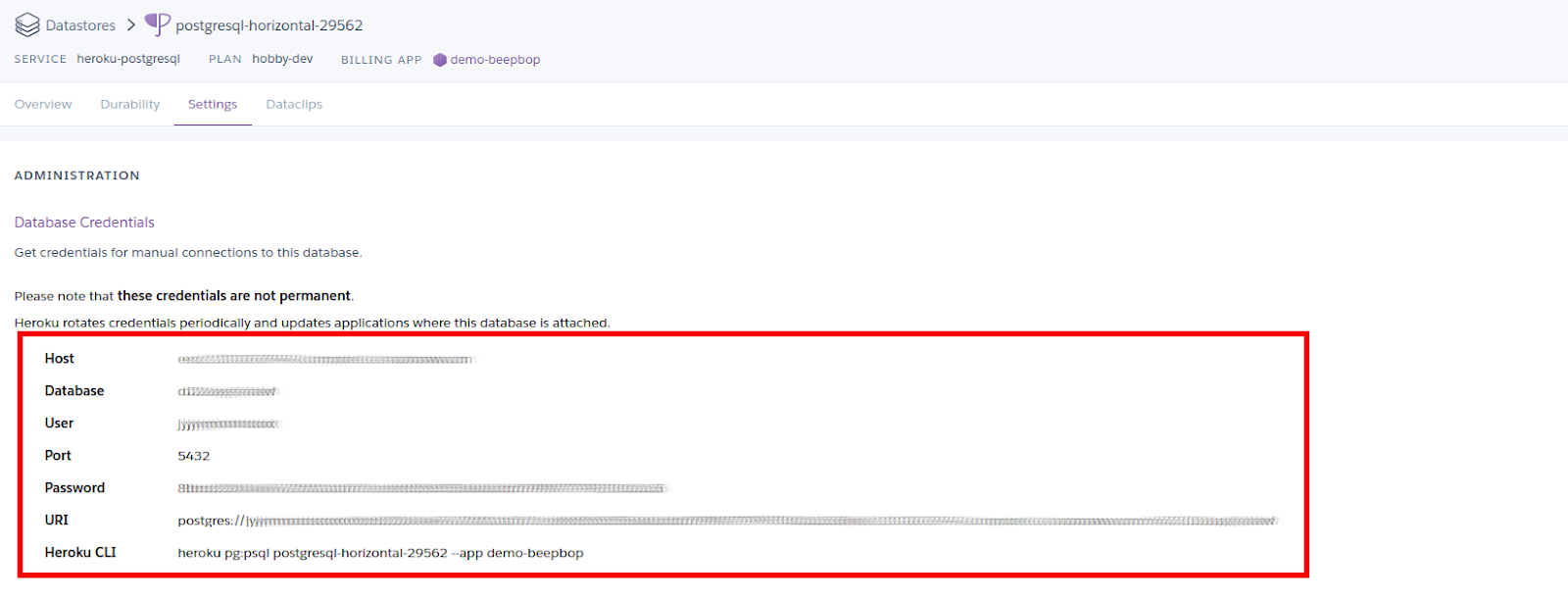

The next step asks you to pick up a pricing plan for this resource. To find the credentials and the connection URL for the PostgreSQL database, you need to navigate to the Resources tab in your app's dashboard again and select the Heroku Postgres resource.

This will bring you to the configuration screen for your database. Click on the Settings tab and View Credentials to find the database credentials. Take a note of the Host, Database, User, Post, and Password. You will need these details while setting up the Airbyte source.

To test out if you can set up a connection to the newly created database. Copy the URI provided by Heroku inside Credentials and run the following command.

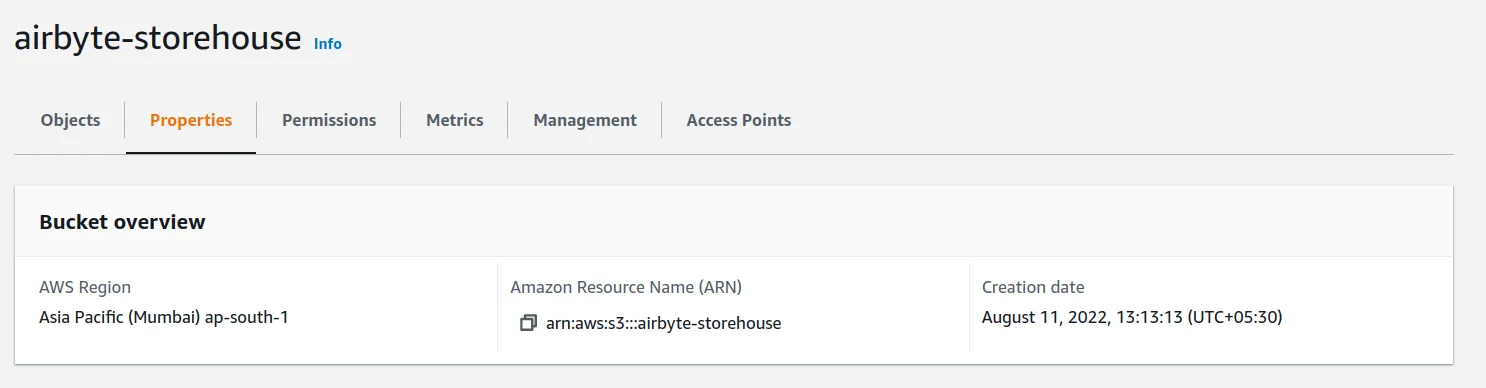

You will need to create an S3 bucket to store the files exported from Postgres. Here are some simple steps to set up an S3 bucket if you don’t already have one.

Once you create the bucket, take a note of the region, you will need this later on to set up the S3 destination on Airbyte.

Head over to IAM > Policies > Create Policy. Give the group a name and attach a policy with write access to S3.

Before you can start using your bucket, you need to have an appropriate set up for who can access it. For this, you need to create a new user group.

To set up a new Postgres source, go to Sources in your Airbyte cloud account and click on New Source. Enter the Host, Port, DB Name, Username, and Password obtained from Heroku like this.

For the database replication method, go with the Standard option; you also choose to go with Postgres logical replication (CDC) if you want to capture all changes on the source database, but that requires more configuration.

Once satisfied with your settings, click on Set up source and wait while Airbyte tests the connection to the database.

Next, create a new S3 destination to copy your Postgres data.

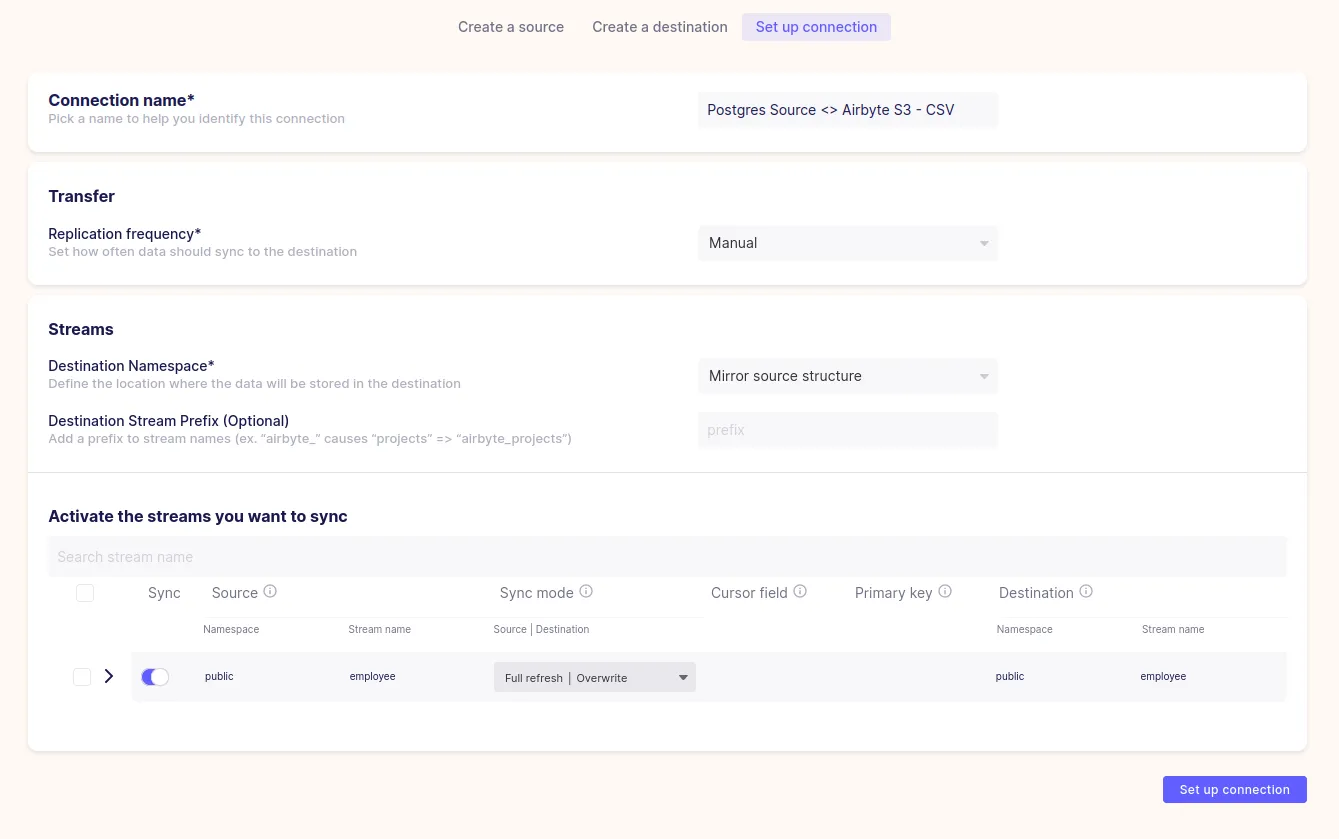

Next, you will set up a connection from your Postgres database to the S3 bucket. Head over to Connections and pick New Connection. Select the Postgres source from the existing sources and the S3 destination you just created.

Then you need to set up the connection settings. Airbyte has correctly found the sample table while setting up Postgres. Choose the Full refresh, overwrite sync mode. This will delete any existing file before importing again.

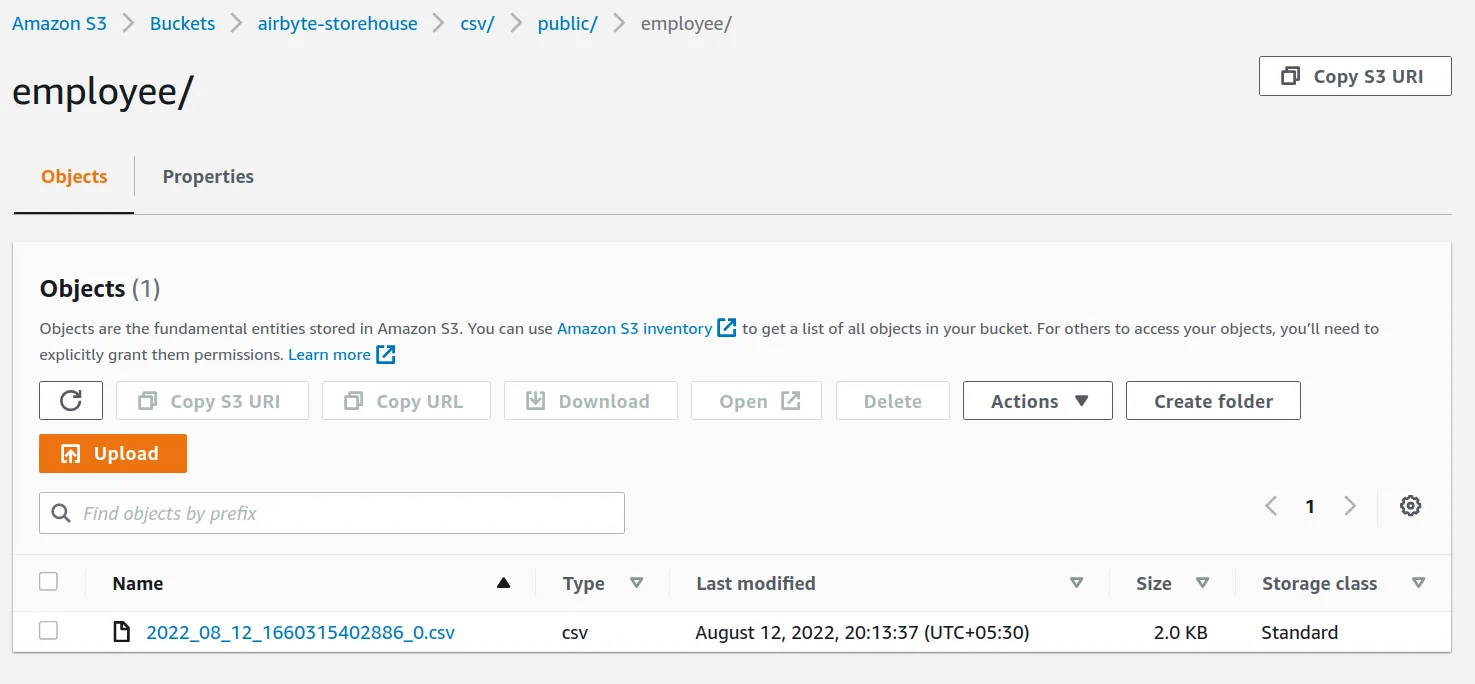

Once satisfied with the configuration, choose Set up connection and wait for Airbyte to import data from your Postgres table to the S3 bucket. Once the import is done, go back to your AWS console and select the S3 bucket where this data is exported. The path of the imported CSV should have the following format:

bucket_name/path/schema/table_name/YYYY_MM_DD_Millseconds_since_epoch.csv.

If you download the CSV file, you will see that the data was correctly imported.

Apache Avro is a row-based file format widely used for data serialization. Avro stores data and schema on the same file or message, so you can use it to stream your data. If you want to export your whole data from Postgres, and read it from elwsewhere, Avro is a very efficient data serialization format. To export Postgres data to Avro, update the Output Format in the Airbyte S3 destination settings or create a completely new connection to convert the data in Avro format.

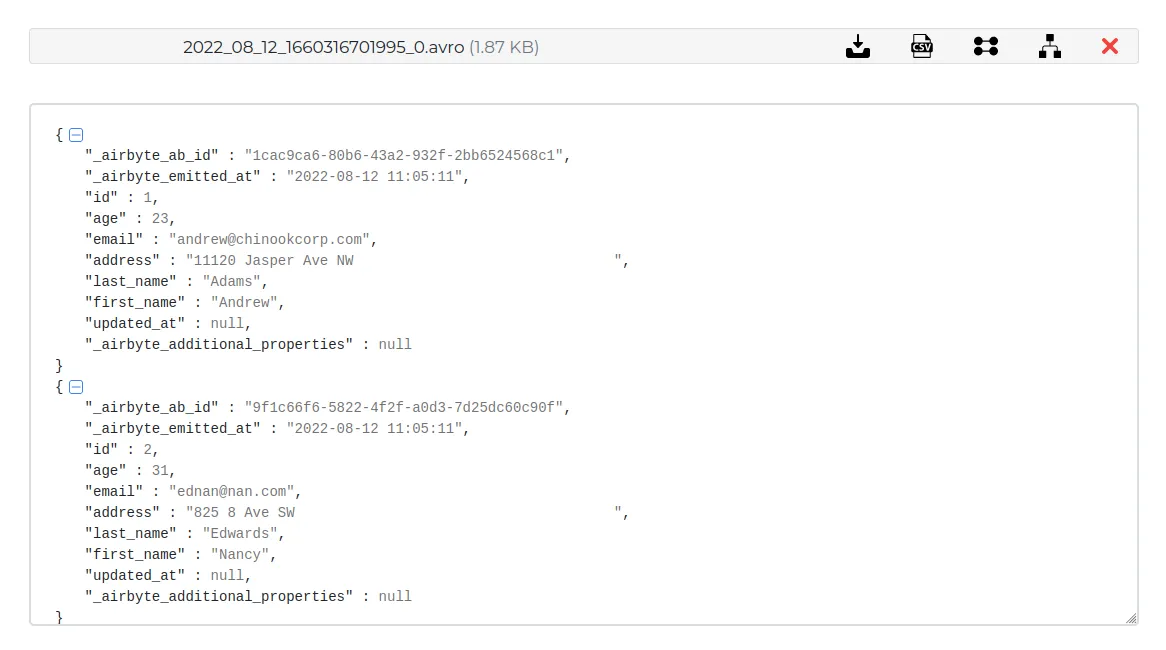

Here is a sample output of Airbyte Avro output. You can read more about how Airbyte serializes data to Avro in the S3 destination docs.

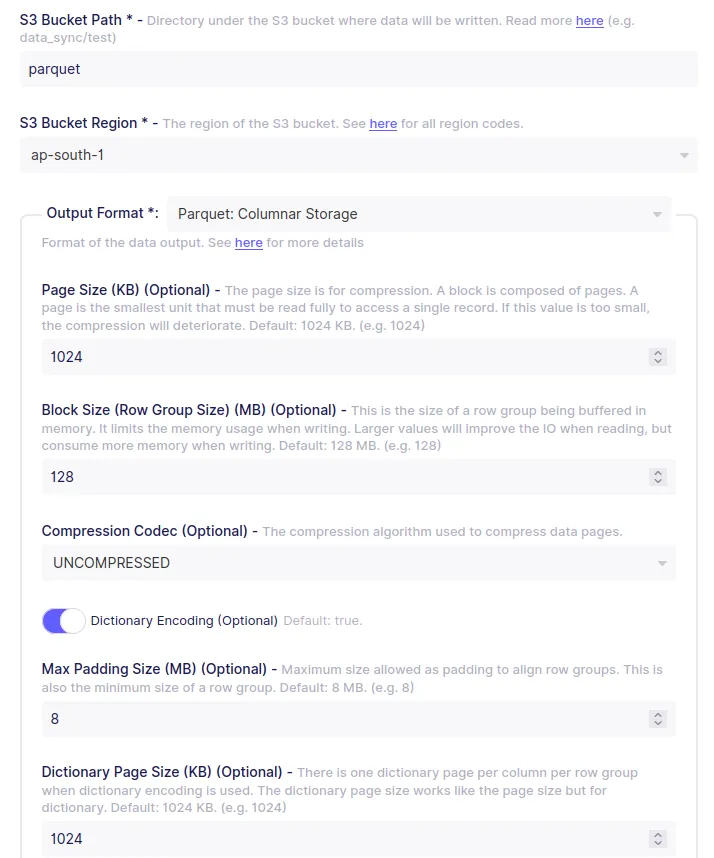

Apache Parquet is an open source big data file format built to handle flat columnar storage data formats. It is built to support very efficient compression and encoding schemes, making it an ideal choice for building analytical pipelines. Airbyte gives out-of-the-box support for converting data from a transactional database like Postgres to Parquet file format.

Here is the sample Aibyte Parquet conversion for our data in the Employee table. You can read more about how Airbyte serializes data to Parquet in the S3 destination docs.

Airbyte supports converting data to JSONL while the destination is S3. You can also export the JSON file in the GZIP compression format for faster uploads/downloads. The output file will have the .jsonl.gz extension if you enable compression.

Airbyte exports the JSONL data in the following format.

Here is how the Employees data looks like after the JSONL conversion. Notice the actual data is inside the _airbyte_data key.

To summarize, this tutorial has shown you how to:

With Airbyte, the data integration possibilities are endless! We invite you to join the conversation with on our community Slack with thousands of data engineers or sign up for our data engineering newsletter to stay in touch.

Suggested Read:

Download our free guide and discover the best approach for your needs, whether it's building your ELT solution in-house or opting for Airbyte Open Source or Airbyte Cloud.