Data Warehouse Testing: Strategies for Quality & Performance

✨ AI Generated Summary

Data warehouse testing is essential to ensure data accuracy, reliability, and integrity, directly impacting business decisions and revenue. Key testing types include unit, integration, system, and user acceptance testing, supported by strategies like test case design, test data management, and automation.

- Data warehouses centralize and transform data from multiple sources for BI and reporting.

- Quality assurance focuses on metrics such as accuracy, consistency, completeness, and integrity.

- Performance testing involves load testing, bottleneck identification, and optimization tools.

- Challenges include handling large data volumes, complex transformations, and security during testing.

- Tools like Airbyte facilitate scalable data pipelines, transformation, and secure data integration.

- Future trends emphasize AI-driven automation, big data analytics, and compliance with evolving regulations.

Data is a crucial asset of your business, driving decision-making and strategic initiatives. However, studies suggest that bad data can affect 15% to 25% of overall business revenue. On a larger scale, the U.S. economy loses around $3 trillion each year due to poor data quality. These figures underscore the importance of robust data quality assurance measures.

One of the most effective ways to ensure high data quality is to thoroughly test your data warehouse, which is the central hub of all your data assets. In this article, you'll learn how to test a data warehouse and which data warehouse testing strategies help ensure the reliability of your data.

What is a Data Warehouse?

A data warehouse is a centralized repository for storing and analyzing large volumes of data. Your business integrates data from multiple sources, such as databases, transactional systems, and applications, transforms it, and loads it into the warehouse. Unlike transactional databases, which typically store data by rows and are optimized for frequent small reads and writes, many data warehouses use a predefined schema and columnar storage optimized for analytical queries.

Data warehouses support business intelligence (BI) and reporting activities by providing a consistent, reliable, and historical view of data. They serve as the foundation for generating meaningful insights and making informed decisions. Therefore, testing data warehouses is necessary to ensure data accuracy, reliability, and integrity.

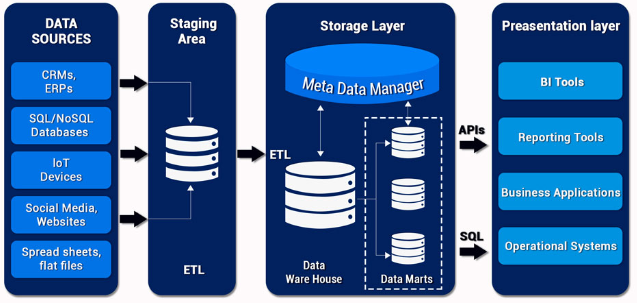

Overview of Data Warehouse Components

Let’s understand each of the components of a data warehouse in detail:

Data Sources: The data sources component represents the various systems and databases from which data is collected for the data warehouse. This can include CRMs, ERPs, IoT devices, spreadsheets, and more.

Staging Area: The staging area is temporary storage where raw data from different sources lands before it is processed and loaded into the warehouse's storage layer. Data in the staging area may undergo cleansing, transformation, and validation to ensure quality and consistency.

Storage Layer: The storage layer is where the actual data is stored within the data warehouse. It stores the cleansed and transformed data from the staging area in a structured format suitable for analytical queries and reporting.

Metadata Manager: The metadata manager is responsible for managing the metadata within the data warehouse. Metadata is data about the data, such as definitions, data lineage, relationships, and transformations.

Data Marts: These are subsets of the data warehouse that focus on specific business areas or departments, allowing for targeted analysis and reporting. For example, you can have separate data marts for the marketing and finance departments.

Presentation Layer: The presentation layer is the interface between the data warehouse and the end users. It includes reporting tools, dashboards, query interfaces, and business intelligence (BI) applications that enable you to retrieve and explore data meaningfully.

What is Data Warehouse Testing?

Data warehouse testing is the process of validating the accuracy and reliability of the data stored in a data warehouse. It involves designing and executing various test cases to assess the quality of data and validate the data warehouse's functionality and performance. The primary objective is to ensure that the data warehouse meets your specific business requirements and provides trustworthy data for analysis and reporting.

Types of Data Warehouse Testing

There are various types of testing to ensure the data warehouse performs well and provides you with high-quality data and insights. Here is an overview:

Unit Testing

Unit testing focuses on testing individual components or modules of the data warehouse in isolation. It verifies the correctness and functionality of each unit, such as data types, data mappings, transformation rules, etc. Unit testing ensures that each component performs as intended and produces accurate results when tested independently.
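
As a minimal sketch of what a unit test for a single transformation rule might look like, the example below tests one function in isolation. The function `normalize_customer`, its field names, and the date format are hypothetical and only serve as an illustration.

```python
from datetime import date, datetime


def normalize_customer(raw: dict) -> dict:
    """Hypothetical transformation rule: clean one source record before loading."""
    return {
        "customer_id": int(raw["id"]),              # data type: string -> integer
        "email": raw["email"].strip().lower(),      # mapping rule: trim and lowercase
        "signup_date": datetime.strptime(raw["signup"], "%d/%m/%Y").date(),
    }


def test_normalize_customer() -> None:
    """Check data types and transformation rules for a single record."""
    result = normalize_customer(
        {"id": "42", "email": "  Ada@Example.COM ", "signup": "05/01/2024"}
    )
    assert result["customer_id"] == 42
    assert result["email"] == "ada@example.com"
    assert result["signup_date"] == date(2024, 1, 5)


test_normalize_customer()
```

Because the function takes a plain dictionary and returns one, the test needs no database or pipeline run, which keeps it fast enough to execute on every change.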

Integration Testing

Integration testing involves verifying the interaction and data flow between various components of the data warehouse environment. This helps ensure that the data warehouse operates as intended and integrates effectively with other modules. It helps provide reliable and accurate data for downstream applications such as OLAP (Online Analytical Processing) tools, reporting systems, or business intelligence platforms.
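
One common integration check is to reconcile what left one component with what arrived in the next. The sketch below assumes a staging table and a target table, with the hypothetical names `stg_orders` and `fact_orders`, and uses an in-memory SQLite database as a stand-in for the warehouse.

```python
import sqlite3

# In-memory SQLite stands in for the warehouse; table names are hypothetical.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE stg_orders (order_id INTEGER, amount REAL);
    CREATE TABLE fact_orders (order_id INTEGER, amount REAL);
    INSERT INTO stg_orders VALUES (1, 10.0), (2, 25.5), (3, 7.25);
    INSERT INTO fact_orders SELECT order_id, amount FROM stg_orders;
""")


def reconcile(conn, source, target):
    """Compare row counts and amount totals between two tables.

    Table names are interpolated directly, so they must come from
    trusted test configuration, never from user input.
    """
    query = "SELECT COUNT(*), ROUND(COALESCE(SUM(amount), 0), 2) FROM {}"
    src = conn.execute(query.format(source)).fetchone()
    tgt = conn.execute(query.format(target)).fetchone()
    return {"source": src, "target": tgt, "match": src == tgt}


report = reconcile(conn, "stg_orders", "fact_orders")
print(report)
```

Matching counts and totals do not prove that every row is correct, but a mismatch is a cheap and reliable signal that data was dropped or duplicated between components.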

System Testing

System testing evaluates the overall functionality, performance, and behavior of the data warehouse system. It verifies whether the system meets the specified requirements and performs as expected. System testing includes end-to-end data flows, data transformations, data loading processes, query performance, and system scalability.

User Acceptance Testing

User acceptance testing (UAT) involves validating the data warehouse from the end user's perspective to ensure it aligns with their requirements. UAT typically involves real-world scenarios and data sets to assess the system's usability, functionality, and performance.

Strategies for Effective Data Warehouse Testing

Data warehouse testing requires the implementation of appropriate strategies. Let's explore them in detail:

Designing Test Cases

It's essential to consider various factors when designing test cases to ensure thorough coverage and validation of the data warehouse. Here are some steps to help you design effective test cases:

- Clearly define the objectives of each test case, specifying what aspect of the data warehouse is being tested.

- Thoroughly analyze the data warehouse requirements, including data sources, transformations, target systems, and expected functionality.

- Break down complex data warehouse scenarios into smaller, more manageable test cases. This allows for focused testing and easier identification of issues.

- Test cases should include variations in data types, formats, and volumes to assess the system's ability to handle different data scenarios.

- Continuously review and refine the test cases based on feedback, evolving requirements, and lessons learned during testing.
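
The points above can be illustrated with a small table-driven test, where each case has a stated objective and the cases vary in type and format. The loader rule `parse_amount` and the case list are hypothetical examples, not part of any specific tool.

```python
def parse_amount(value):
    """Hypothetical loader rule: return a float, or None for missing or invalid input."""
    if value is None:
        return None
    try:
        return float(str(value).replace(",", "").strip())
    except ValueError:
        return None


# Each case: (objective, raw input, expected output).
TEST_CASES = [
    ("plain number", "19.99", 19.99),
    ("thousands separator", "1,250.00", 1250.0),
    ("integer type", 7, 7.0),
    ("padded string", "  3.5 ", 3.5),
    ("null value", None, None),
    ("empty string", "", None),
    ("non-numeric text", "N/A", None),
]


def run_cases(cases):
    """Run every case and collect failures instead of stopping at the first one."""
    failures = []
    for name, raw, expected in cases:
        actual = parse_amount(raw)
        if actual != expected:
            failures.append((name, raw, expected, actual))
    return failures


failures = run_cases(TEST_CASES)
print(f"{len(TEST_CASES) - len(failures)} passed, {len(failures)} failed")
```

Keeping the cases in a list makes it easy to add a new variation whenever a defect or a changed requirement reveals a gap.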

Managing Test Data

Here are some strategies for effectively managing test data:

- Instead of using the entire production dataset for testing, create a subset of data that includes representative sample records from different scenarios. This reduces the size of the test data and speeds up the testing process.

- Ensure sensitive information is masked or anonymized in the test data. This protects data privacy and ensures compliance with data protection regulations.

- Include variations of test data to cover different scenarios and edge cases. This involves considering different combinations of values, null values, outliers, and negative test cases.

- Regularly refresh and reset the test data to maintain data integrity and consistency between test cycles.
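
As a rough sketch of the first two strategies, the example below draws a reproducible sample and masks an email column. The salt, field names, and sample rows are assumptions for illustration; a simple random sample is used here, whereas a real subset would likely be stratified by scenario.

```python
import hashlib
import random


def mask_email(email: str, salt: str = "test-env-salt") -> str:
    """Replace an email with a deterministic pseudonym.

    The same input always yields the same output, so joins on the
    masked column still work across tables.
    """
    digest = hashlib.sha256((salt + email.lower()).encode("utf-8")).hexdigest()
    return f"user_{digest[:12]}@example.com"


def build_test_subset(rows, sample_size, seed=0):
    """Draw a reproducible random sample and mask the sensitive column."""
    rng = random.Random(seed)
    sample = rng.sample(rows, min(sample_size, len(rows)))
    return [{**row, "email": mask_email(row["email"])} for row in sample]


# Hypothetical production rows.
production_rows = [
    {"customer_id": i, "email": f"person{i}@corp.example", "country": c}
    for i, c in enumerate(["US", "DE", "IN", "BR", "JP", "US", "DE", "IN"], start=1)
]

test_rows = build_test_subset(production_rows, sample_size=4)
for row in test_rows:
    print(row)
```

Using a fixed seed means each refresh of the test data produces the same subset, which helps keep results comparable between test cycles.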

Automation in Testing

Automation in data warehouse testing helps improve efficiency, reduce errors, and accelerate testing. Select appropriate test automation tools based on the data warehouse's architecture, technology stack, and testing requirements.

Various tools, such as QuerySurge, iceDQ, and Datagaps, are widely used. These tools provide comprehensive features for data validation, performance testing, and more, enabling you to achieve greater efficiency and reliability in your data warehouse testing efforts.

Quality Assurance in Data Warehouse Testing

Quality assurance involves implementing strategies to ensure that the data stored, processed, and delivered by the data warehouse meets predefined quality standards. This guarantees that the data collected from various sources is accurate and useful for making business decisions.

Quality Metrics and Benchmarks

Quality metrics help you measure and evaluate the performance and effectiveness of the testing process. They provide a standardized way to assess the quality of the data warehouse and identify any potential issues or areas for improvement. Here are a few key aspects to consider:

Data Accuracy: Accuracy refers to the correctness of the data stored in the data warehouse. Techniques such as data profiling, data cleansing, and data validation are used to ensure the accuracy of data.

Data Consistency: This involves ensuring uniformity and coherence of data across different datasets within the data warehouse. It ensures that data is presented consistently and can be relied upon for analysis.

Completeness: Completeness refers to whether all the required data is present in the data warehouse. It ensures that there are no missing or incomplete records that could affect the analysis or decision-making process.

Data Integrity: This evaluates the reliability and validity of data by examining its conformity to predefined rules, constraints, and business logic. It helps identify any data inconsistencies or anomalies that might affect the accuracy of analysis.
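
The four aspects above can each be expressed as a query that counts violations. The sketch below is one possible way to do that; the schema, the sample rows, and the rule that amounts must not be negative are all assumptions made for the example, with in-memory SQLite standing in for the warehouse.

```python
import sqlite3

# Hypothetical schema and sample data, loaded into in-memory SQLite.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE dim_customer (customer_id INTEGER, email TEXT);
    CREATE TABLE fact_orders (order_id INTEGER, customer_id INTEGER, amount REAL);
    INSERT INTO dim_customer VALUES (1, 'a@example.com'), (2, NULL), (3, 'c@example.com');
    INSERT INTO fact_orders VALUES
        (10, 1, 20.0), (11, 2, -5.0), (12, 9, 15.0), (12, 9, 15.0);
""")

# Each check returns the number of violations; zero means the check passes.
CHECKS = {
    "completeness: customers missing email":
        "SELECT COUNT(*) FROM dim_customer WHERE email IS NULL",
    "consistency: duplicated order_id values":
        "SELECT COUNT(*) FROM (SELECT order_id FROM fact_orders "
        "GROUP BY order_id HAVING COUNT(*) > 1)",
    "accuracy: negative order amounts":
        "SELECT COUNT(*) FROM fact_orders WHERE amount < 0",
    "integrity: orders without a matching customer":
        "SELECT COUNT(*) FROM fact_orders f LEFT JOIN dim_customer d "
        "ON f.customer_id = d.customer_id WHERE d.customer_id IS NULL",
}

results = {name: conn.execute(sql).fetchone()[0] for name, sql in CHECKS.items()}
for name, violations in results.items():
    print(f"{name}: {violations}")
```

Tracking these counts over time turns them into benchmarks: a check that usually reports zero and suddenly reports hundreds points to a recent change worth investigating.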

Role of Data Quality in Warehouse Testing

Data quality plays a crucial role in warehouse testing as it directly impacts the credibility of the insights derived from the data warehouse. Here's how data quality is important:

Trustworthy Insights: High data quality ensures that the insights derived from the data warehouse are accurate and reliable. You can confidently rely on the data to make informed decisions and drive strategic initiatives.

Cost Efficiency: Maintaining high data quality helps reduce the cost associated with data errors, compliance penalties, and business losses due to incorrect decisions. Effective warehouse testing identifies and rectifies data quality issues early in the process, preventing downstream impacts on your business operations.

Performance Testing Strategies

Performance testing of a data warehouse involves evaluating its ability to handle large volumes of data, complex queries, and concurrent user loads while maintaining acceptable response times. Here are some strategies to consider:

Identify Performance Goals: Define specific performance goals important for your data warehouse. This could include response time thresholds for different types of queries, data loading times, etc. These goals will guide your performance testing efforts.

Design Realistic Test Scenarios: Create test scenarios that resemble real-world usage patterns and workloads. Assess factors such as the number of concurrent users, types of transactions, and data volumes. This ensures that your performance tests accurately reflect the system's behavior under typical usage conditions.

Load Testing: Perform load testing to check the system's performance under varying levels of user load. Simulate multiple users executing transactions concurrently and measure response times, throughput, and resource utilization. This helps identify performance bottlenecks and assess how the system scales under different loads.

Identifying and Resolving Performance Bottlenecks

Here are some key techniques and tools to help identify and address performance bottlenecks:

- Use query profiling tools to analyze query execution plans and identify inefficient queries. Tools like SQL Server Profiler and Oracle SQL Tuning Advisor provide insights into query performance and optimization recommendations.

- Use performance testing tools such as Apache JMeter and LoadRunner to help simulate heavy workloads and evaluate the performance of the data warehouse under stress conditions.

- Utilize caching frameworks like Redis or Apache Ignite to store frequently accessed data in memory, reducing the need for expensive operations.

- Use tools like ER/Studio to optimize logical data models for improved performance and efficiency.
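
The first technique, inspecting query execution plans, can be sketched with SQLite's `EXPLAIN QUERY PLAN` statement. Other databases expose plans differently, and the table and index names below are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE fact_orders (order_id INTEGER, customer_id INTEGER, amount REAL)"
)
conn.executemany(
    "INSERT INTO fact_orders VALUES (?, ?, ?)",
    [(i, i % 100, float(i)) for i in range(1_000)],
)

QUERY = "SELECT * FROM fact_orders WHERE customer_id = 42"


def query_plan(conn, sql):
    """Return SQLite's plan description for a query as one string."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql).fetchall()
    return " | ".join(row[-1] for row in rows)  # last column holds the detail text


plan_before = query_plan(conn, QUERY)   # expected to be a full table scan
conn.execute("CREATE INDEX idx_orders_customer ON fact_orders (customer_id)")
plan_after = query_plan(conn, QUERY)    # expected to use the new index

print("before:", plan_before)
print("after: ", plan_after)
```

A plan that scans the whole table for a selective filter is a typical bottleneck candidate; rerunning the plan after a change confirms whether the optimizer actually picks up the fix.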

Best Practices for Data Warehouse Testing

Let’s explore some best practices to ensure the effectiveness and integrity of data warehouse testing processes.

Planning and Scheduling Tests

Effective planning and scheduling of tests are crucial for ensuring comprehensive coverage and timely execution of testing activities. This involves the following:

Define Test Objectives: Clearly identify the goals and objectives of the testing phase. This includes determining what aspects of the data warehouse will be tested, such as data accuracy, performance, usability, and security.

Establish a Testing Environment: Set up a dedicated test environment that resembles the production environment. This includes configuring the necessary hardware, network settings, and security measures required for testing.

Test Schedule and Milestones: Establish a schedule for test execution, defining milestones and deadlines. This helps in managing the testing process efficiently and ensures that testing activities are completed within the allocated time frame.

Collaborative Approaches Between Teams

Effective collaboration between teams plays a crucial role in ensuring comprehensive testing coverage and quality assurance for data warehouse systems. Consider the following practices:

Cross-Functional Collaboration: Form cross-functional testing teams composed of members from data engineering, data analytics, business intelligence, and end-user domains. This ensures that the system is collectively verified from diverse perspectives, enhancing the thoroughness of the testing process.

Clear Roles and Responsibilities: Clearly define the responsibilities of each team member involved in testing. This helps ensure accountability and avoids any confusion or overlap in tasks.

Knowledge Sharing: Encourage knowledge-sharing sessions to enhance understanding of the data warehouse and its testing process. This helps build a collective knowledge base and strengthens the overall expertise of the teams involved.

Documentation and Reporting

Comprehensive documentation and reporting are essential for capturing test outcomes, identifying defects, and communicating findings effectively. Key practices include the following:

Test Plan: Create a detailed test plan that outlines the testing approach, objectives, scope, and test coverage. The test plan should serve as a reference document for all testing activities and provide a clear roadmap for the testing process.

Test Reports: Generate comprehensive test reports summarizing the testing activities, findings, and recommendations. These reports should be easily understandable for all stakeholders and provide insights into the data warehouse's quality and usability.

Challenges in Data Warehouse Testing

Here are some key challenges often encountered in data warehouse testing:

Handling Large Volumes of Data

Data warehouses store vast amounts of data from diverse sources, which can make it challenging to efficiently handle and validate the data during testing. Testing such massive datasets requires robust strategies and tools to ensure thorough validation and accuracy.

Complex Transformations

Data warehouses often involve complex transformations that are performed on the data before it is loaded into the warehouse. These can include data cleansing and data aggregation. Testing such transformations can be challenging as it requires a deep understanding of the business logic and the data flow.
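
One way to test an aggregation is to recompute the expected result independently and compare it with what the transformation produced. The sketch below assumes a hypothetical daily sales aggregate in which missing amounts are ignored, again using in-memory SQLite as a stand-in.

```python
import sqlite3
from collections import defaultdict

# Hypothetical source rows: (sale_date, region, amount).
source_rows = [
    ("2024-01-01", "EU", 100.0),
    ("2024-01-01", "EU", 50.0),
    ("2024-01-01", "US", 75.0),
    ("2024-01-02", "US", 25.0),
    ("2024-01-02", "US", None),   # assumed business rule: missing amounts are ignored
]

# The transformation under test, written in SQL.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE stg_sales (sale_date TEXT, region TEXT, amount REAL)")
conn.executemany("INSERT INTO stg_sales VALUES (?, ?, ?)", source_rows)
conn.execute("""
    CREATE TABLE agg_daily_sales AS
    SELECT sale_date, region,
           SUM(amount) AS total_amount,
           COUNT(amount) AS sale_count
    FROM stg_sales
    GROUP BY sale_date, region
""")

# Independent recomputation of the same business logic in Python.
expected = defaultdict(lambda: [0.0, 0])
for sale_date, region, amount in source_rows:
    if amount is not None:
        expected[(sale_date, region)][0] += amount
        expected[(sale_date, region)][1] += 1

actual = {
    (sale_date, region): [total, count]
    for sale_date, region, total, count in conn.execute("SELECT * FROM agg_daily_sales")
}

# Any key present on one side only, or with different values, is a mismatch.
mismatches = {
    key: (expected.get(key), actual.get(key))
    for key in set(expected) | set(actual)
    if expected.get(key) != actual.get(key)
}
print("mismatches:", mismatches)
```

Writing the expected logic separately from the SQL forces the business rule to be stated twice, so a misunderstanding in either version shows up as a mismatch.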

Ensuring Security During Testing

Data warehouses contain sensitive and confidential information, making security a critical aspect of data warehouse testing. Ensuring the confidentiality and integrity of data during testing involves implementing robust access controls, encryption, and data masking techniques to protect information from unauthorized access.

Streamline Data Warehouse Testing with Airbyte

To overcome the above challenges and perform successful testing, you need a reliable data pipeline that ensures seamless data flow. This is where Airbyte, a data integration and replication platform, can help.

With Airbyte, you can build and manage scalable data pipelines that can handle large data volumes efficiently. It offers over 350 pre-built connectors, allowing you to seamlessly connect to various data sources, including databases, APIs, and files. This makes it easy to extract and load data into your data warehouse.

Here are some of the key features of Airbyte:

CDK: If you can't find the desired connector from the pre-existing list, you can leverage Airbyte's Connector Development Kit (CDK) to create a custom one within 30 minutes. This enables you to effortlessly build a pipeline with your desired sources.

Change Data Capture: Airbyte's CDC capabilities enable you to capture and replicate incremental changes in your data systems. You don't need to perform full refreshes, resulting in faster and more efficient data pipelines.

Flexibility: It offers multiple data pipeline development options, making it easily accessible to everyone. These options include UI, API, Terraform Provider, and PyAirbyte, ensuring simplicity and ease of use.

Support for Vector Store Destinations: Airbyte simplifies AI workflows by allowing you to load unstructured data directly into popular vector store databases like Pinecone, Weaviate, and Milvus.

Complex Transformations: Airbyte enables you to seamlessly integrate with data transformation tools like dbt (data build tool). This empowers you to perform customized and advanced transformations within Airbyte pipelines.

Integrated Support for RAG-Specific Transformations: Airbyte supports Retrieval-Augmented Generation (RAG) transformations, including chunking and embeddings powered by providers like OpenAI and Cohere. This allows you to load, transform, and store data efficiently in a single operation, streamlining your AI workflows.

Chat with Your Data: Airbyte's vector database search capabilities and integration with LLMs allow you to build a conversational interface that can answer questions about your organization's data.

Data Security: It prioritizes data security by adhering to industry-standard practices and utilizes encryption methods to ensure your data is safe both during transit and at rest. Airbyte incorporates role-based access controls and authentication mechanisms to protect your data from potential breaches.

Case Study

In a case study conducted by Codoid, a multinational e-learning company faced data quality (DQ) issues in its data warehouse. The company offers educational content, technology, and services for various markets globally. It has multiple e-learning platforms and uses Snowflake as its data warehouse for analytics. However, the data collected had several issues, including duplicates in the source and target, null values, data integrity problems, and invalid patterns.

To address the data quality issues encountered during testing, the team decided to implement a customized automation testing framework in Python using Behave. This approach helped overcome the challenges associated with data quality and enabled the team to identify and resolve any issues early in the testing process.
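
The case study does not publish the framework itself, so the following is only an illustrative sketch of the kinds of checks it describes (duplicates, null values, and invalid patterns), written as plain Python rather than Behave steps. The field names, sample rows, and email pattern are assumptions.

```python
import re
from collections import Counter

# Deliberately simple pattern for illustration; not a full email validator.
EMAIL_PATTERN = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


def profile_rows(rows, key, pattern_field, pattern):
    """Report duplicate keys, null counts per field, and pattern violations."""
    key_counts = Counter(row[key] for row in rows)
    return {
        "duplicate_keys": sorted(k for k, n in key_counts.items() if n > 1),
        "null_counts": {
            field: sum(1 for row in rows if row.get(field) is None)
            for field in rows[0]
        },
        "invalid_pattern_keys": [
            row[key]
            for row in rows
            if row[pattern_field] is not None
            and not pattern.match(row[pattern_field])
        ],
    }


# Hypothetical learner records containing one of each problem.
learners = [
    {"learner_id": 1, "email": "ana@example.com", "course": "SQL"},
    {"learner_id": 2, "email": "not-an-email", "course": "Python"},
    {"learner_id": 2, "email": "bo@example.com", "course": None},
    {"learner_id": 3, "email": None, "course": "dbt"},
]

report = profile_rows(learners, "learner_id", "email", EMAIL_PATTERN)
print(report)
```

Running the same function against both source and target extracts makes it possible to tell whether an issue originated upstream or was introduced during loading.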

Future Trends in Data Warehouse Testing

Here are some key trends that are expected to shape the future of data warehouse testing:

Advances in Automation Tools

Automation will play a significant role in streamlining and enhancing data warehouse testing. Advanced automation tools will leverage artificial intelligence (AI) and machine learning (ML) algorithms to automate test case generation, data validation, and performance testing. This will accelerate testing cycles, improve efficiency, and reduce the manual effort needed to ensure data accuracy and quality.

The Impact of Big Data and Analytics

Big data and analytics pose unique challenges for data warehouse testing. Testing frameworks must adapt to handle large volumes of diverse data sources and complex analytical processes. Advanced analytics capabilities will be integrated into testing processes to identify patterns, anomalies, and performance bottlenecks within large-scale data warehouses.

Evolving Standards and Regulations

As data privacy and security concerns continue to increase, the need for compliance with evolving standards and regulations will significantly impact data warehouse testing. You must ensure that the testing processes align with regulations such as GDPR, CCPA, and industry-specific guidelines.

Wrapping Up

Data warehouse testing is a crucial aspect of data management, as it helps ensure the reliability and accuracy of data for decision-making. It is essential to implement a comprehensive testing strategy encompassing data integration, quality, performance, and security to maintain the credibility of the data warehouse.

By adhering to best practices, utilizing automation, and conducting regular testing, you can build robust data warehousing solutions that empower data-driven insights and facilitate better business decisions.

FAQs

How is data mapping tested in a data warehouse?

Data mapping is tested by verifying that the data from source systems is correctly mapped to the corresponding fields in the data warehouse. This involves reviewing the data mapping document and tracing the data flow from source to target to ensure accurate transformation.
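
As a small illustration, a mapping document can be expressed as data and checked row by row. The column names and transformation rules below are hypothetical and stand in for whatever the real mapping document specifies.

```python
# Hypothetical mapping document: source column, target column, transformation.
MAPPING = [
    {"source": "cust_id", "target": "customer_id", "transform": int},
    {"source": "cust_nm", "target": "customer_name", "transform": str.strip},
    {"source": "ctry", "target": "country_code", "transform": str.upper},
]


def check_mapping(source_row, target_row, mapping):
    """Apply each mapping rule to the source row and compare with the target row."""
    errors = []
    for rule in mapping:
        expected = rule["transform"](source_row[rule["source"]])
        actual = target_row.get(rule["target"])
        if actual != expected:
            errors.append(
                f"{rule['source']} -> {rule['target']}: "
                f"expected {expected!r}, got {actual!r}"
            )
    return errors


source_row = {"cust_id": "101", "cust_nm": " Ada Lovelace ", "ctry": "gb"}
good_target = {"customer_id": 101, "customer_name": "Ada Lovelace", "country_code": "GB"}
bad_target = {"customer_id": 101, "customer_name": "Ada Lovelace", "country_code": "gb"}

print(check_mapping(source_row, good_target, MAPPING))
print(check_mapping(source_row, bad_target, MAPPING))
```

Keeping the rules in one structure means the test and the mapping document can be reviewed side by side when a mapping changes.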

How is data quality ensured in a data warehouse?

Data quality in a data warehouse is ensured through a combination of techniques, including data validation, profiling, data governance, and continuous monitoring and improvement. Implementing robust data quality processes and fostering a culture of data stewardship is crucial for maintaining high-quality data in the data warehouse.

What is the role of a data warehouse test plan?

A data warehouse test plan outlines the overall testing strategy, approach, and schedule for the data warehouse. It defines the test objectives, test scenarios, test data requirements, and the roles and responsibilities of the testing team. The test plan ensures that testing is conducted in a structured and comprehensive manner.