What Is Database Replication: Tools, Types, & Uses

Ensuring your data assets are always accessible and reliable is critical for your organization's continued operation. However, storing data in a single location can make you vulnerable, as a disruption at that site can significantly impact your business.

Data breaches and system failures can cost organizations heavily in lost revenue and recovery effort, so the stakes for data availability are high. Many data teams feel caught between accepting the risk of single points of failure and investing in complex replication strategies that consume valuable engineering resources.

This challenge becomes even more acute as organizations scale their data operations across cloud environments, handle real-time analytics demands, and navigate increasingly complex compliance requirements that make data protection essential for business survival.

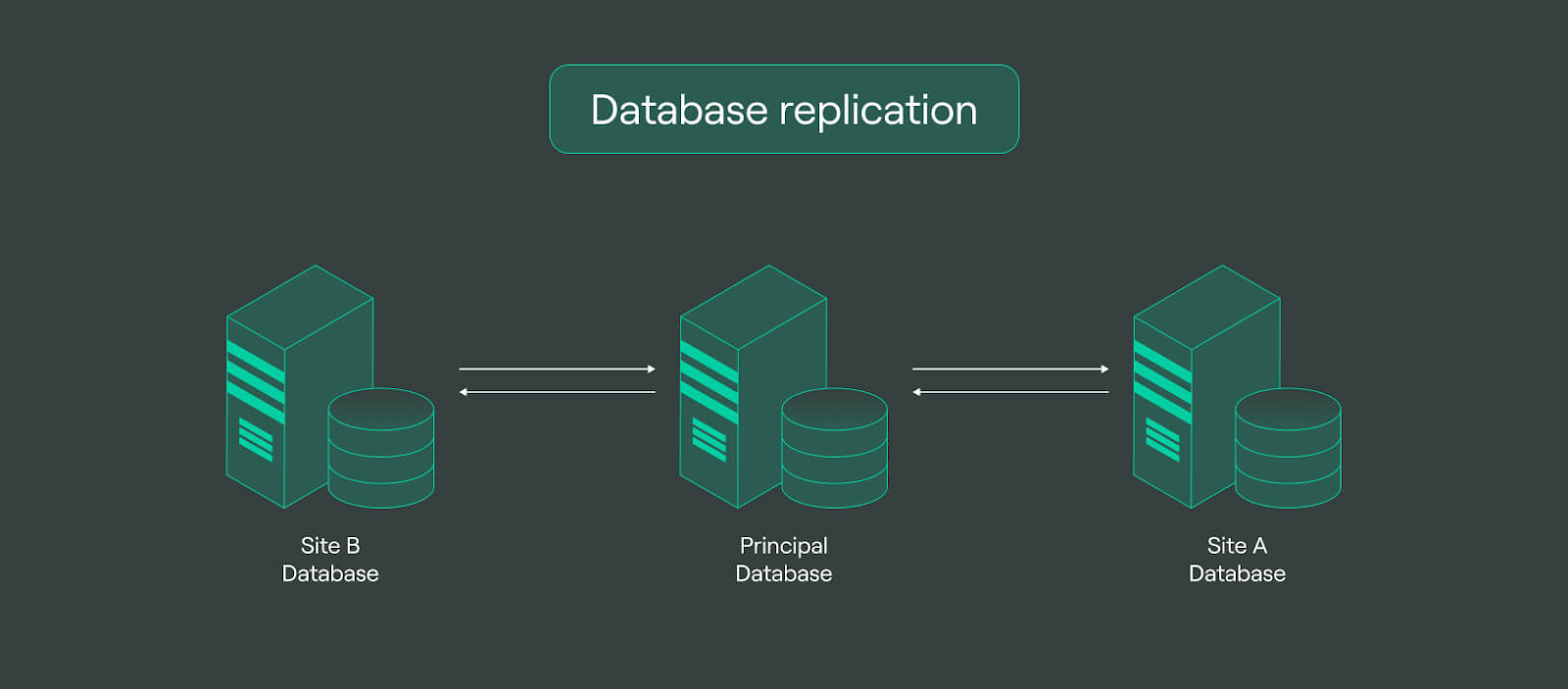

Database replication solves this fundamental challenge by creating multiple copies of your databases across different locations, but the traditional approaches that worked for previous generations of data infrastructure are struggling to meet the demands of modern distributed systems.

This article covers the main types, techniques, and benefits of database replication, along with newer approaches to contemporary challenges such as replication lag, conflict resolution, and cloud-native deployment. It also examines several tools that can simplify the process and help your organization keep functioning during technical disruptions.

What Is Database Replication and Why Does It Matter?

Database replication is the process of continuously or periodically copying data from a primary database to one or more other servers or locations to ensure data accessibility, fault tolerance, and reliability. These replicas can live in the same data center or in other geographic regions, forming a distributed database system that provides redundancy and improved performance for critical business operations.

With database replication, you can facilitate continued data availability by providing multiple access points to the same information, even during hardware failures or disasters. This process typically occurs in real time as you create, update, or delete data in the primary database, but you can also execute it in scheduled batch operations depending on your performance requirements and consistency needs.

Modern database replication goes beyond simple data copying to include conflict resolution mechanisms, automatic failover, and routing that can adapt to changing network conditions and business requirements. Replication technology now also supports heterogeneous database environments, cloud-native architectures, and real-time streaming scenarios that were difficult to achieve with traditional approaches.

What Are the Different Types of Database Replication?

You can categorize database replication along two axes: when the primary considers a change complete (asynchronous versus synchronous replication) and how much data each run copies (full, partial, or incremental replication). Understanding these variations is crucial for selecting the optimal approach for your organization's specific use cases, performance requirements, and consistency needs.

Asynchronous Replication

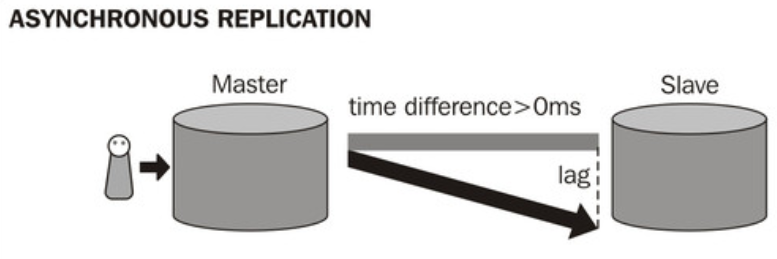

With asynchronous replication, the primary commits a transaction without waiting for replicas and ships the change to secondary databases afterward. This approach offers higher write performance and scalability, but replicas lag behind the primary: they can briefly serve stale data, and if the primary fails before a change is shipped, recently committed transactions can be lost.

You can use this type of replication in analytics and reporting scenarios where eventual consistency is acceptable. The delayed nature of asynchronous replication makes it particularly suitable for use cases where immediate consistency is not critical but performance optimization is essential.

Synchronous Replication

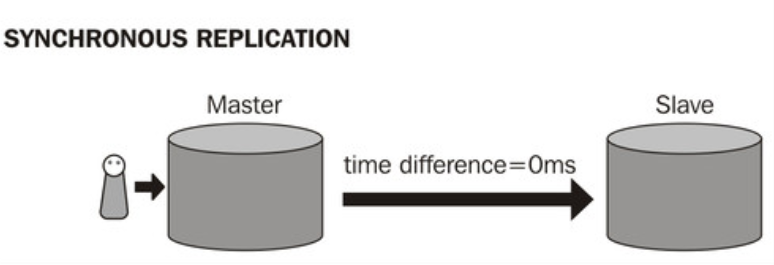

With synchronous replication, the primary does not report a transaction as committed until the replicas it waits on have confirmed the change. Depending on the system and its configuration, that can mean every replica, a quorum, or at least one replica. This guarantees that every acknowledged transaction already exists on more than one node, but each commit pays at least one network round trip, so write latency rises, especially across distant regions.

This approach is essential for mission-critical applications where data consistency across all systems is paramount. Financial systems and healthcare applications often require synchronous replication to maintain regulatory compliance and ensure accurate data across all locations.

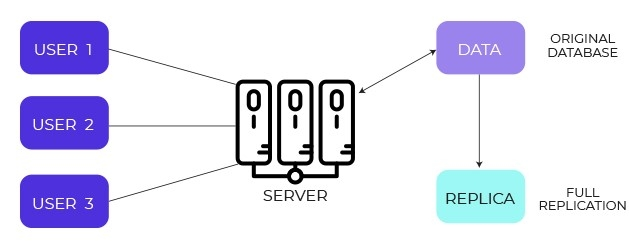
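To make the trade-off concrete, here is a toy in-memory model (hypothetical classes, not any database's API) in which the primary either ships each write to its replicas before acknowledging it or queues it for later. It then simulates the primary crashing before the asynchronous queue is drained. Real systems differ in detail, for example by waiting for only one replica or a quorum, but the failure mode is the same: acknowledged writes that were never shipped are gone after failover.

```python
class Replica:
    def __init__(self):
        self.data = {}

    def apply(self, key, value):
        self.data[key] = value


class Primary:
    """Toy primary that ships writes synchronously or asynchronously (hypothetical)."""

    def __init__(self, replicas, synchronous):
        self.data = {}
        self.replicas = replicas
        self.synchronous = synchronous
        self.pending = []  # async mode: acknowledged but not yet shipped

    def write(self, key, value):
        self.data[key] = value
        if self.synchronous:
            # Acknowledge only after every replica has applied the change.
            for replica in self.replicas:
                replica.apply(key, value)
        else:
            # Acknowledge immediately; a background step ships the change later.
            self.pending.append((key, value))
        return "ack"

    def ship_pending(self):
        """Background step in async mode: drain the queue to all replicas."""
        for key, value in self.pending:
            for replica in self.replicas:
                replica.apply(key, value)
        self.pending.clear()


def surviving_data_after_crash(synchronous):
    replica = Replica()
    primary = Primary([replica], synchronous=synchronous)
    primary.write("order:1", "paid")
    primary.write("order:2", "paid")
    # The primary crashes before ship_pending() runs; failover promotes the replica.
    return replica.data


print(surviving_data_after_crash(synchronous=True))   # {'order:1': 'paid', 'order:2': 'paid'}
print(surviving_data_after_crash(synchronous=False))  # {}
```

Both writes returned "ack" in each case; only the synchronous run kept them through the failover.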

Full Replication

Full replication copies the entire contents of a table (or database) from a source to one or more target databases. Because everything is copied, the replicas reflect every source change, including inserts, updates, and deletions.

While full replication offers high consistency and complete data coverage, it can be computationally expensive due to high-volume data transfers and storage requirements. Organizations typically use this method for critical data sets where complete historical data availability is essential for business operations.

Partial Replication

Partial replication copies only selected portions of your database, such as specific tables, rows, or columns, to each server. This reduces storage and transfer costs, because each replica holds only the data it actually needs, while still keeping critical data available where it is used.

You can implement filtering based on geographical regions, customer segments, or data classification levels. This selective approach allows organizations to balance data availability with cost efficiency while maintaining compliance requirements.
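As a minimal sketch, assuming a hypothetical `customers` table with a `region` column, the function below replicates only one region's rows and only the listed columns, so a regional replica never receives other regions' data or sensitive fields. It uses in-memory SQLite databases to stand in for source and replica.

```python
import sqlite3


def replicate_partial(source, target, region, columns):
    """Copy only one region's rows, and only the listed columns, into the target."""
    # Column names are interpolated into SQL, so they must come from trusted config.
    col_list = ", ".join(columns)
    rows = source.execute(
        f"SELECT {col_list} FROM customers WHERE region = ? ORDER BY id", (region,)
    ).fetchall()
    target.execute(f"CREATE TABLE IF NOT EXISTS customers ({col_list})")
    placeholders = ", ".join("?" for _ in columns)
    target.executemany(
        f"INSERT INTO customers ({col_list}) VALUES ({placeholders})", rows
    )
    target.commit()
    return len(rows)


# Hypothetical source table: the EU replica should get neither US rows nor card data.
source = sqlite3.connect(":memory:")
source.execute(
    "CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, region TEXT, card TEXT)"
)
source.executemany(
    "INSERT INTO customers VALUES (?, ?, ?, ?)",
    [(1, "Ana", "EU", "card-1"), (2, "Bo", "US", "card-2"), (3, "Cy", "EU", "card-3")],
)

eu_replica = sqlite3.connect(":memory:")
print(replicate_partial(source, eu_replica, "EU", ["id", "name"]))  # 2
print(eu_replica.execute("SELECT * FROM customers ORDER BY id").fetchall())
# [(1, 'Ana'), (3, 'Cy')]
```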

Incremental Replication

With incremental replication, you can efficiently transfer only new or updated data from a source database to a target system. This approach minimizes data movement, processing, and storage requirements, making it ideal for large datasets with relatively few changes.

Incremental replication is particularly valuable for organizations dealing with high-volume transactional systems where full synchronization would be prohibitively expensive. The method tracks changes through timestamps, version numbers, or change flags to identify what data needs replication.
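A minimal sketch of timestamp-based (cursor) incremental replication follows, assuming a hypothetical `products` table whose `updated_at` column is set on every change. Each run copies only rows newer than the saved cursor and upserts them, so re-running a batch is harmless. Two known limits of this simple form: hard deletes are invisible to it, and rows that commit late with an already-seen timestamp can be skipped, which is one reason log-based CDC is often preferred.

```python
import sqlite3


def incremental_sync(source, target, last_cursor):
    """Copy rows changed since last_cursor and return the new cursor value."""
    rows = source.execute(
        "SELECT id, name, updated_at FROM products "
        "WHERE updated_at > ? ORDER BY updated_at",
        (last_cursor,),
    ).fetchall()
    # Upsert keeps the sync idempotent: re-running a batch does not duplicate rows.
    target.executemany(
        "INSERT INTO products (id, name, updated_at) VALUES (?, ?, ?) "
        "ON CONFLICT(id) DO UPDATE SET name = excluded.name, "
        "updated_at = excluded.updated_at",
        rows,
    )
    target.commit()
    # The largest updated_at seen becomes the starting point for the next run.
    return rows[-1][2] if rows else last_cursor


schema = "CREATE TABLE products (id INTEGER PRIMARY KEY, name TEXT, updated_at INTEGER)"
source = sqlite3.connect(":memory:")
target = sqlite3.connect(":memory:")
source.execute(schema)
target.execute(schema)

source.executemany(
    "INSERT INTO products VALUES (?, ?, ?)", [(1, "lamp", 100), (2, "desk", 105)]
)
checkpoint = incremental_sync(source, target, last_cursor=0)  # copies both rows

source.execute("UPDATE products SET name = 'oak desk', updated_at = 110 WHERE id = 2")
checkpoint = incremental_sync(source, target, last_cursor=checkpoint)  # copies only row 2

print(checkpoint)  # 110
print(target.execute("SELECT * FROM products ORDER BY id").fetchall())
# [(1, 'lamp', 100), (2, 'oak desk', 110)]
```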

What Are the Key Techniques Used to Replicate Databases?

Several techniques can capture data changes and propagate them to replica databases, each with specific advantages and use cases.

Log-Based Replication

Log-based replication captures changes by reading the database's transaction log, such as PostgreSQL's write-ahead log (WAL) or MySQL's binary log. Because the database already writes this log for durability, the method adds no triggers or extra queries against the source tables, making it faster and less resource-intensive than alternative approaches.

The technique provides near real-time data synchronization while minimizing impact on source system performance. Because the log records every committed insert, update, and delete, it captures changes that query-based polling can miss, such as deleted rows.
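The core loop of a log reader can be sketched in a few lines. In this hypothetical model, a Python list of `LogEntry` records stands in for the WAL or binlog (whose real formats are far richer), and the replica remembers the log sequence number (LSN) it last applied. That checkpoint lets it resume after a restart and skip entries it has already seen.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LogEntry:
    lsn: int      # monotonically increasing log sequence number
    op: str       # "insert", "update" or "delete"
    key: str
    value: object = None


class LogApplier:
    """Replays a transaction log onto a replica, remembering how far it has read."""

    def __init__(self):
        self.state = {}
        self.applied_lsn = 0  # checkpoint: last LSN applied to the replica

    def catch_up(self, log):
        for entry in log:
            if entry.lsn <= self.applied_lsn:
                continue  # already applied; skipping keeps replay idempotent
            if entry.op == "delete":
                self.state.pop(entry.key, None)
            else:
                self.state[entry.key] = entry.value
            self.applied_lsn = entry.lsn


log = [
    LogEntry(1, "insert", "user:1", "alice"),
    LogEntry(2, "insert", "user:2", "bob"),
]
replica = LogApplier()
replica.catch_up(log)

log += [LogEntry(3, "update", "user:1", "alice@new"), LogEntry(4, "delete", "user:2")]
replica.catch_up(log)  # replays only LSNs 3 and 4

print(replica.applied_lsn, replica.state)  # 4 {'user:1': 'alice@new'}
```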

Trigger-Based Replication

Trigger-based replication uses database triggers on the source tables to record each change, typically in a separate change table that a replication process then reads. Because a trigger fires inside the same transaction as the write, every committed change is captured, and you have fine-grained control over what gets recorded.

Triggers execute automatically on specific events, such as insert, update, or delete operations, which allows transformation logic and conditional replication based on business rules. The trade-off is extra write work on every transaction against the source tables.
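Here is a minimal SQLite sketch of the pattern, assuming a hypothetical `accounts` table. Three triggers append every insert, update, and delete to an `accounts_changes` table, which a separate replication process would read in `change_id` order and then clear. Other databases use different trigger syntax, but the structure is the same.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE accounts (id INTEGER PRIMARY KEY, balance INTEGER);

-- Change table that a replication process would read and then clear.
CREATE TABLE accounts_changes (
    change_id INTEGER PRIMARY KEY AUTOINCREMENT,
    op TEXT, account_id INTEGER, balance INTEGER
);

CREATE TRIGGER accounts_ins AFTER INSERT ON accounts BEGIN
    INSERT INTO accounts_changes (op, account_id, balance)
    VALUES ('I', NEW.id, NEW.balance);
END;

CREATE TRIGGER accounts_upd AFTER UPDATE ON accounts BEGIN
    INSERT INTO accounts_changes (op, account_id, balance)
    VALUES ('U', NEW.id, NEW.balance);
END;

CREATE TRIGGER accounts_del AFTER DELETE ON accounts BEGIN
    INSERT INTO accounts_changes (op, account_id, balance)
    VALUES ('D', OLD.id, NULL);
END;
""")

conn.execute("INSERT INTO accounts VALUES (1, 100)")
conn.execute("UPDATE accounts SET balance = 80 WHERE id = 1")
conn.execute("DELETE FROM accounts WHERE id = 1")
conn.commit()

changes = conn.execute(
    "SELECT op, account_id, balance FROM accounts_changes ORDER BY change_id"
).fetchall()
print(changes)  # [('I', 1, 100), ('U', 1, 80), ('D', 1, None)]
```

For conditional replication, SQLite triggers also accept a `WHEN` clause (for example, recording an update only when the balance actually changed).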

Row-Based Replication

Row-based replication writes a replication log that identifies each changed row and records its new values; MySQL's binary log in ROW format is a common example. Because the log describes the data itself rather than the SQL that produced it, CDC tools can decode it and apply the changes to other database versions and platforms while maintaining data integrity.

Capturing actual row changes rather than SQL statements also avoids a weakness of statement-based replication: when a replica re-executes a statement that calls a non-deterministic function, such as MySQL's UUID() or SYSDATE(), it can compute a different result than the primary did. This is why row-based replication handles such functions, and the stored routines that call them, more reliably than statement-based alternatives.
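The divergence can be simulated without a real database. In this hypothetical sketch, each `Server` has its own clock standing in for what SYSDATE() returns at the moment it runs the statement. The statement-based replica re-executes the statement 2.5 seconds later and records a different timestamp, while the row-based replica simply stores the row image the primary produced.

```python
from dataclasses import dataclass, field


@dataclass
class Server:
    name: str
    clock: float  # what SYSDATE() would return when this server executes the statement
    rows: dict = field(default_factory=dict)


def execute_statement(server, order_id):
    """Simulates running: UPDATE orders SET shipped_at = SYSDATE() WHERE id = :order_id."""
    server.rows[order_id] = {"shipped_at": server.clock}
    return server.rows[order_id]


primary = Server("primary", clock=1_700_000_000.0)
# Both replicas apply the change 2.5 seconds after the primary did.
statement_replica = Server("statement-based", clock=1_700_000_002.5)
row_replica = Server("row-based", clock=1_700_000_002.5)

row_image = execute_statement(primary, 42)

# Statement-based: ship the SQL text; the replica re-evaluates SYSDATE() itself.
execute_statement(statement_replica, 42)

# Row-based: ship the resulting row values; the replica stores them unchanged.
row_replica.rows[42] = dict(row_image)

print(primary.rows[42] == statement_replica.rows[42])  # False: the replica diverged
print(primary.rows[42] == row_replica.rows[42])        # True
```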

What Are the Modern Challenges in Database Replication?

Contemporary database replication faces several sophisticated challenges that traditional approaches struggle to address effectively. Understanding these obstacles is crucial for implementing successful replication strategies in modern data environments.

Replication Lag and Performance Bottlenecks

Replication lag represents delays between when changes occur on the primary database and when they become visible on replica systems. This latency can impact business operations that depend on real-time data consistency across multiple locations.

Network latency, processing overhead, and resource contention contribute to replication lag. Organizations must balance consistency requirements with performance expectations, particularly in geographically distributed systems where network delays are unavoidable.
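One common way to quantify lag is the amount of log the replica has not yet replayed. The sketch below assumes PostgreSQL, where the primary reports its position with `pg_current_wal_lsn()` and the `pg_stat_replication` view exposes each standby's `replay_lsn` (plus a time-based `replay_lag`). PostgreSQL can compute the byte difference itself with `pg_wal_lsn_diff()`; these hypothetical Python helpers only show the arithmetic on the textual `high/low` hexadecimal LSN format.

```python
def lsn_to_int(lsn: str) -> int:
    """Convert a PostgreSQL-style LSN such as '0/16B3748' to a 64-bit integer."""
    high, low = lsn.split("/")
    # The two hex halves are the upper and lower 32 bits of the WAL position.
    return (int(high, 16) << 32) | int(low, 16)


def lag_bytes(primary_lsn: str, replica_replay_lsn: str) -> int:
    """Bytes of WAL the replica still has to replay."""
    return lsn_to_int(primary_lsn) - lsn_to_int(replica_replay_lsn)


print(lag_bytes("0/3000060", "0/3000000"))  # 96
print(lag_bytes("1/0", "0/FFFFFF00"))       # 256
```

Byte lag shows how much work is queued; time-based lag shows how stale reads are. Monitoring both helps distinguish a burst of writes from a replica that has stopped applying changes.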

Conflict Resolution Complexity

Multi-leader replication scenarios introduce sophisticated conflict resolution challenges when multiple nodes modify the same data concurrently. These conflicts require intelligent resolution mechanisms that preserve data integrity while maintaining system availability.

Last-write-wins, which keeps whichever update carries the latest timestamp, is simple but silently discards the other update and relies on reasonably synchronized clocks, so it often proves inadequate for complex business scenarios. Modern systems need conflict resolution strategies that consider business logic, data relationships, and organizational priorities when resolving concurrent modifications.
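The difference is easy to see with a stock counter. In this hypothetical sketch, two regions concurrently sell units from the same starting stock of 10. Last-write-wins (with the node id as a deterministic tiebreaker) keeps only one region's result, while a business-aware merge applies both regions' deltas relative to the last agreed state. The merge assumes that common base version is available, which real multi-leader systems must track explicitly.

```python
from dataclasses import dataclass


@dataclass
class Version:
    value: dict
    timestamp: float  # wall-clock time of the write on its origin node
    node_id: str      # origin node; only used to break timestamp ties


def last_write_wins(a, b):
    """Keep the later write; ties go to the higher node id so all nodes agree."""
    return max(a, b, key=lambda v: (v.timestamp, v.node_id))


def merge_stock(base, a, b):
    """Hypothetical business-aware merge: apply both nodes' stock deltas."""
    delta_a = a.value["stock"] - base["stock"]
    delta_b = b.value["stock"] - base["stock"]
    return {"stock": base["stock"] + delta_a + delta_b}


base = {"stock": 10}                                       # last state both nodes agreed on
eu = Version({"stock": 7}, timestamp=100.0, node_id="eu")  # EU sold 3 units
us = Version({"stock": 8}, timestamp=100.5, node_id="us")  # US sold 2 units concurrently

print(last_write_wins(eu, us).value)  # {'stock': 8}: the EU sale is lost
print(merge_stock(base, eu, us))      # {'stock': 5}: both sales are kept
```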

Scalability and Infrastructure Management

Modern organizations face scalability challenges that extend beyond simple data volume growth to encompass the number of replicas, transformation logic, and diversity of target systems. Managing replication across dozens or hundreds of endpoints requires automation and intelligent orchestration.

Infrastructure complexity grows quickly with the number of replication targets and the variety of database technologies involved. Organizations need solutions that can handle heterogeneous environments while maintaining consistent performance and reliability standards.

How Do Advanced Replication Technologies Address Contemporary Needs?

Modern replication technologies incorporate cutting-edge capabilities designed to address the limitations of traditional database replication approaches. These advancements focus on automation, intelligence, and scalability to meet contemporary business requirements.

AI-Powered Intelligence and Automation

Some platforms apply machine learning to replication operations. In principle, models trained on operational history can help predict replication failures, optimize resource allocation, and adjust replication strategies as data patterns change.

Capabilities in this area include predictive scaling, assisted conflict resolution, and automated performance tuning, all aimed at reducing administrative overhead while improving system reliability.

Cloud-Native and Serverless Architectures

Cloud-native replication services reduce operational overhead and provide automatic scaling, often with pay-per-use or other flexible pricing models. The provider handles infrastructure management, monitoring, and maintenance while offering enterprise-grade reliability and security.

Serverless replication solutions scale dynamically with actual usage, reducing the need for up-front capacity planning and resource provisioning. Organizations benefit from lower operational complexity and better cost efficiency while maintaining high availability and performance.

Real-Time Streaming and Advanced CDC

Modern CDC tools use log-based capture and can handle many common schema changes without manual intervention. End-to-end latency is often sub-second, fast enough for most business-critical applications, while replicas stay consistent with the source.

Streaming architectures enable real-time analytics and decision-making that are impractical with batch-based replication. Organizations can respond to business events as they happen rather than waiting for scheduled synchronization cycles.

What Are the Main Advantages of Database Replication?

Database replication delivers comprehensive benefits that extend beyond simple data backup to encompass performance optimization, business continuity, and operational efficiency. Understanding these advantages helps organizations justify replication investments and design optimal strategies.

Improved Performance and Reduced Downtime

Replication distributes query load across multiple database instances, significantly improving response times for read-intensive applications. Users can access data from geographically closer replicas, reducing network latency and improving user experience.

Automatic failover capabilities ensure business continuity during system failures or maintenance windows. Organizations can achieve near-zero downtime by seamlessly redirecting traffic to healthy replicas when primary systems become unavailable.
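Automatic failover needs a rule for choosing which replica to promote. A common heuristic is to pick the healthy replica that has applied the most of the primary's log, which minimizes lost transactions under asynchronous replication. The sketch below is a hypothetical, in-memory illustration of that selection step only. Real orchestrators also fence the old primary so it cannot keep accepting writes (avoiding split-brain) and repoint clients to the new primary.

```python
from dataclasses import dataclass


@dataclass
class ReplicaStatus:
    name: str
    healthy: bool
    replayed_position: int  # how far this replica has applied the primary's log


def choose_failover_target(replicas):
    """Promote the healthy replica that has applied the most of the primary's log."""
    candidates = [r for r in replicas if r.healthy]
    if not candidates:
        raise RuntimeError("no healthy replica available for promotion")
    return max(candidates, key=lambda r: r.replayed_position)


replicas = [
    ReplicaStatus("replica-a", healthy=True, replayed_position=5_120),
    ReplicaStatus("replica-b", healthy=False, replayed_position=6_000),
    ReplicaStatus("replica-c", healthy=True, replayed_position=5_900),
]
print(choose_failover_target(replicas).name)  # replica-c
```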

Reduced Server Load and Optimized Resource Utilization

Load distribution across multiple replicas prevents any single database instance from becoming a performance bottleneck. This approach enables organizations to handle increased user demand without expensive hardware upgrades or complex scaling solutions.

Resource optimization extends beyond computational efficiency to include network bandwidth and storage utilization. Intelligent routing can direct queries to the most appropriate replica based on current load conditions and geographic proximity.
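A minimal read/write-splitting router might look like the sketch below. The class, the replica metadata (`region`, `lag`, `load`), and the 5-second staleness limit are all hypothetical. Writes always go to the primary; reads go to a sufficiently fresh replica, preferring the client's region and then the least-loaded node, and fall back to the primary if every replica is too far behind. The `SELECT` prefix check is deliberately naive: statements such as `SELECT ... FOR UPDATE`, or reads that must see the caller's own recent writes, need the primary.

```python
class ReadWriteRouter:
    """Sends writes to the primary and spreads reads across fresh replicas."""

    def __init__(self, primary, replicas, max_lag_seconds=5.0):
        self.primary = primary
        self.replicas = replicas  # name -> {"region": str, "lag": float, "load": float}
        self.max_lag_seconds = max_lag_seconds

    def route(self, sql, client_region):
        if not sql.lstrip().upper().startswith("SELECT"):
            return self.primary  # anything that may write goes to the primary
        fresh = {
            name: info
            for name, info in self.replicas.items()
            if info["lag"] <= self.max_lag_seconds
        }
        if not fresh:
            return self.primary  # every replica is too stale: fall back to the primary
        # Prefer replicas in the client's region, then the least-loaded one.
        return min(
            fresh,
            key=lambda name: (fresh[name]["region"] != client_region, fresh[name]["load"]),
        )


router = ReadWriteRouter("primary", {
    "eu-1": {"region": "eu", "lag": 0.4, "load": 0.7},
    "eu-2": {"region": "eu", "lag": 0.2, "load": 0.3},
    "us-1": {"region": "us", "lag": 9.0, "load": 0.1},
})
print(router.route("UPDATE users SET name = 'x' WHERE id = 1", "eu"))  # primary
print(router.route("SELECT * FROM users", "eu"))  # eu-2
print(router.route("SELECT * FROM users", "us"))  # eu-2 (us-1 is too far behind)
```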

Enhanced Disaster Recovery and Business Continuity

Geographically distributed replicas provide comprehensive protection against natural disasters, cyber attacks, and infrastructure failures that could impact business operations. Organizations can maintain operations even when entire data centers become unavailable.

Recovery time objectives decrease dramatically when current replicas are immediately available rather than requiring restoration from backup systems. This capability is particularly critical for industries with strict regulatory requirements or high-availability expectations.

Data Integrity and Consistency Assurance

Many replication tools include validation mechanisms that check data accuracy across replicas. Automated consistency checks can detect discrepancies, and in some tools repair them, before they impact business operations.

Transactional integrity across replicas depends on the replication mode. With synchronous replication, a committed transaction is already present on the confirming replicas; with asynchronous replication, a replica can briefly return stale data, so operations that must read their own writes should go to the primary or wait for the replica to catch up.
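One way such a check can work, similar in spirit to tools like pt-table-checksum, is to hash rows in primary-key ranges on both sides and compare the digests, so only the mismatched ranges need a closer look or a resync. This sketch assumes a hypothetical `orders` table whose first column is an integer `id`, and that the replica has caught up to the same point as the primary when the check runs.

```python
import hashlib
import sqlite3


def chunk_checksums(conn, table, chunk_size):
    """Hash rows grouped by id range (id // chunk_size); assumes id is the first column."""
    digests = {}
    for row in conn.execute(f"SELECT * FROM {table} ORDER BY id"):
        chunk = row[0] // chunk_size
        if chunk not in digests:
            digests[chunk] = hashlib.sha256()
        digests[chunk].update(repr(row).encode())
    return {chunk: d.hexdigest() for chunk, d in digests.items()}


def mismatched_chunks(primary, replica, table, chunk_size=1000):
    """Return the id ranges whose contents differ between primary and replica."""
    a = chunk_checksums(primary, table, chunk_size)
    b = chunk_checksums(replica, table, chunk_size)
    return sorted(c for c in a.keys() | b.keys() if a.get(c) != b.get(c))


primary = sqlite3.connect(":memory:")
replica = sqlite3.connect(":memory:")
for db in (primary, replica):
    db.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, total REAL)")
    db.executemany("INSERT INTO orders VALUES (?, ?)", [(i, i * 1.5) for i in range(1, 3001)])

replica.execute("UPDATE orders SET total = 0 WHERE id = 2500")  # simulate drift
print(mismatched_chunks(primary, replica, "orders"))  # [2]
```

Only the digests need to cross the network during the comparison; rows are fetched again only for the chunk that disagrees (ids 2000 to 2999 here).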

Scalability and Flexibility for Growing Organizations

Horizontal scaling through replication provides more cost-effective growth paths than vertical scaling approaches that require expensive hardware upgrades. Organizations can add replicas incrementally as demand increases without disrupting existing operations.

Flexible deployment options support hybrid cloud strategies and regulatory requirements that may restrict data location or processing. Organizations can adapt their replication topology as business needs evolve without major architectural changes.

How Does Database Backup Differ from Replication?

In database backup, you create point-in-time copies of data at specific intervals for recovery purposes. These backups typically represent static snapshots taken during scheduled maintenance windows or based on predetermined schedules.

Database replication, in contrast, creates near-real-time copies across multiple locations to maintain operational continuity. Replicas remain synchronized with the primary database and can quickly be promoted to serve production traffic when needed, typically after a brief failover process.

Backup systems focus on data recovery after failures, while replication systems enable continuous operations during failures. The fundamental difference lies in their operational purpose: backups restore past states, while replicas maintain current operations.

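Recovery from backups often requires significant time for restoration and verification, while replication enables fast failover with minimal service interruption, making it essential for high-availability applications. The two are complementary rather than interchangeable: replication faithfully copies accidental deletes and corrupted writes to every replica, so point-in-time backups are still needed to recover from them.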

How Is Change Data Capture Used in Database Replication?

Change Data Capture (CDC) identifies and tracks data modifications in real time, providing a more efficient and scalable approach to database replication that minimizes system impact. CDC captures only the actual changes rather than scanning entire tables for modifications.

This approach greatly reduces network bandwidth and processing overhead compared to polling methods that repeatedly query tables for changes. Log-based CDC can keep up with high-volume transactional environments with minimal impact on source system performance.

Modern CDC implementations support complex scenarios including schema evolution, large object handling, and heterogeneous database environments. These capabilities enable organizations to implement sophisticated replication strategies across diverse technology stacks.
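On the consuming side, a CDC pipeline applies a stream of change events to the target. The sketch below uses a simplified envelope loosely modeled on Debezium's change events, which carry an `op` code (`c` create, `u` update, `d` delete, `r` snapshot read) plus `before` and `after` row images. Real events also include schema, source metadata, and log positions; the dict-based replica keyed by `id` is a hypothetical stand-in for a target table.

```python
import json


def apply_change_event(replica, event):
    """Apply one change event (op/before/after envelope) to a dict keyed by id."""
    op = event["op"]
    if op in ("c", "r", "u"):  # create, snapshot read, update: upsert the new row image
        row = event["after"]
        replica[row["id"]] = row
    elif op == "d":            # delete: only the old row image is present
        replica.pop(event["before"]["id"], None)
    else:
        raise ValueError(f"unknown op {op!r}")


stream = [
    '{"op": "c", "before": null, "after": {"id": 1, "email": "a@example.com"}}',
    '{"op": "u", "before": {"id": 1, "email": "a@example.com"}, '
    '"after": {"id": 1, "email": "a@example.org"}}',
    '{"op": "c", "before": null, "after": {"id": 2, "email": "b@example.com"}}',
    '{"op": "d", "before": {"id": 2, "email": "b@example.com"}, "after": null}',
]

replica = {}
for message in stream:
    apply_change_event(replica, json.loads(message))

print(replica)  # {1: {'id': 1, 'email': 'a@example.org'}}
```

Only the four changed rows travel through the pipeline; the target never rescans the source table.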

What Tools Can Simplify Database Replication Processes?

Organizations can choose from various categories of replication tools, each designed to address specific use cases, technical requirements, and organizational preferences. The selection depends on factors like budget, technical expertise, and integration requirements.

Database Native Tools

Native database replication features provided by systems like MySQL, PostgreSQL, and SQL Server offer tight integration and optimized performance for homogeneous environments. These tools provide reliable replication with minimal additional infrastructure requirements.

Native solutions typically offer the best performance and reliability for single-vendor environments but may lack advanced features needed for complex scenarios. Organizations benefit from simplified management and reduced licensing costs when using built-in capabilities.

ETL and Data Integration Platforms

Modern data integration platforms like Airbyte, Microsoft SSIS, and Informatica provide comprehensive replication capabilities alongside broader data integration features. These solutions support heterogeneous environments and complex transformation requirements.

Integration platforms excel at handling diverse source systems and complex business logic requirements. They provide unified management interfaces and sophisticated monitoring capabilities that simplify operations across multiple replication streams.

Specialized Replication Solutions

Dedicated replication tools like Oracle GoldenGate and Qlik Replicate focus specifically on database replication scenarios with advanced features for complex environments. These solutions provide sophisticated conflict resolution, performance optimization, and monitoring capabilities.

Specialized tools often provide the most advanced replication features but may require significant expertise to implement and maintain effectively. Organizations benefit from comprehensive replication capabilities at the cost of increased complexity.

Cloud-Based Managed Services

Cloud providers like AWS, Azure, and Google Cloud offer managed replication services that eliminate infrastructure management overhead while providing enterprise-grade reliability and security. These services scale automatically and integrate seamlessly with other cloud services.

Managed services reduce operational burden and typically use usage-based pricing. Organizations benefit from reduced complexity and improved reliability while focusing resources on business value rather than infrastructure management.

Next-Generation Platforms

Newer platforms built on Kafka-native engines and machine-learning-based optimization aim to combine real-time streaming with automation and predictive tuning.

These platforms focus on reducing manual configuration and ongoing maintenance through automation, so organizations can run enterprise-grade replication without dedicating as many technical resources to system management.

What Are the Essential Best Practices for Database Replication?

Successful database replication implementation requires careful planning, appropriate technology selection, and ongoing optimization to meet evolving business requirements. Following established best practices ensures reliable, scalable, and cost-effective replication strategies.

1. Define Your Replication Scope and Strategy

Clearly identify which data requires replication based on business criticality, regulatory requirements, and operational needs. Not all data needs the same level of replication, and strategic prioritization optimizes resource utilization.

Document recovery time objectives (RTO, how long a system can be unavailable) and recovery point objectives (RPO, how much recent data you can afford to lose) for different data categories. These requirements guide technology selection and architectural decisions while establishing clear success criteria.

2. Choose Appropriate Replication Methods

Select replication techniques that align with your consistency requirements, performance expectations, and technical constraints. Synchronous replication provides stronger consistency but may impact performance, while asynchronous approaches offer better performance with eventual consistency.

Consider hybrid approaches that use different replication methods for different data sets based on their specific requirements. Critical transactional data may require synchronous replication while analytical data can use asynchronous methods.

3. Implement Comprehensive Disaster Recovery Planning

Develop and regularly test failover procedures to ensure smooth transitions during system failures. Automated failover capabilities reduce recovery time but require careful configuration and ongoing validation.

Document rollback procedures and data reconciliation processes for scenarios where failover events create data discrepancies. Regular disaster recovery drills identify potential issues before they impact production operations.

4. Monitor and Optimize Replication Performance

Implement comprehensive monitoring that tracks replication lag, error rates, and resource utilization across all replica systems. Early detection of performance degradation prevents business impact and enables proactive optimization.

Establish performance baselines and alerting thresholds that trigger investigation when replication systems deviate from expected behavior. Regular performance analysis identifies optimization opportunities and capacity planning requirements.
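As a minimal sketch of baseline-driven alerting, the function below sets a lag threshold at the baseline mean plus `k` standard deviations, with a fixed floor so that a very quiet baseline does not produce a hair-trigger alert. The sample values, `k=3.0`, and the 1-second floor are hypothetical; production alerting often uses percentiles and requires the condition to persist for several minutes before paging anyone.

```python
import statistics


def lag_alert_threshold(baseline_lag_seconds, k=3.0, floor_seconds=1.0):
    """Alert threshold = baseline mean + k standard deviations, never below a floor."""
    mean = statistics.fmean(baseline_lag_seconds)
    stdev = statistics.pstdev(baseline_lag_seconds)
    return max(mean + k * stdev, floor_seconds)


baseline = [0.2, 0.3, 0.25, 0.4, 0.35, 0.3]  # hypothetical lag samples from a normal week
threshold = lag_alert_threshold(baseline)

for observed in (0.45, 1.2, 6.0):
    status = "ALERT" if observed > threshold else "ok"
    print(f"lag={observed:.2f}s threshold={threshold:.2f}s -> {status}")
```

Here the statistical threshold (about 0.49 s) falls below the floor, so the 1-second floor applies and only the 1.2 s and 6.0 s samples alert.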

5. Implement Robust Security Measures

Encrypt replication traffic in transit and replica storage at rest. Access controls should ensure that only authorized systems and personnel can access replica data, maintaining the same security standards as primary systems.

Regular security audits verify that replication processes maintain compliance with organizational policies and regulatory requirements. Security configurations should be tested as part of disaster recovery exercises.

6. Plan for Schema Evolution

Design replication systems that handle schema changes gracefully without requiring manual intervention or system downtime. Modern applications frequently modify database schemas, and replication systems must adapt automatically.

Implement version control and testing procedures for schema changes that affect replication processes. Coordinated deployment procedures ensure that schema modifications don't disrupt replication operations.
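A minimal sketch of automated handling for additive schema changes follows, using SQLite's `PRAGMA table_info` to compare source and target columns. The helper names are hypothetical. New source columns are added to the target automatically, while dropped columns are only reported, because destructive or ambiguous changes (drops, renames, type changes) are usually safer to coordinate through the review and deployment procedures described above.

```python
import sqlite3


def columns(conn, table):
    """Return {column_name: declared_type} using SQLite's PRAGMA table_info."""
    return {row[1]: row[2] for row in conn.execute(f"PRAGMA table_info({table})")}


def sync_added_columns(source, target, table):
    """Add columns that exist on the source but not the target; report dropped ones."""
    src, dst = columns(source, table), columns(target, table)
    added = [name for name in src if name not in dst]
    for name in added:
        target.execute(f"ALTER TABLE {table} ADD COLUMN {name} {src[name]}")
    dropped = [name for name in dst if name not in src]
    return added, dropped


source = sqlite3.connect(":memory:")
target = sqlite3.connect(":memory:")
for db in (source, target):
    db.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, email TEXT)")

source.execute("ALTER TABLE users ADD COLUMN signup_source TEXT")  # schema change upstream
print(sync_added_columns(source, target, "users"))  # (['signup_source'], [])
print(list(columns(target, "users")))               # ['id', 'email', 'signup_source']
```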

What Makes Airbyte the Easiest Way to Replicate Databases?

Airbyte is an AI-powered, open-source data integration and replication platform that transforms complex database replication challenges into streamlined, automated processes. The platform addresses the fundamental limitations of traditional replication approaches through innovative technology and comprehensive feature sets.

Comprehensive Connector Ecosystem

Airbyte provides 600+ pre-built connectors that eliminate custom development overhead for most replication scenarios. These connectors support popular databases, cloud platforms, and SaaS applications while maintaining enterprise-grade reliability and performance standards.

The connector ecosystem includes sophisticated CDC functionality for real-time replication with minimal source system impact. Automated schema detection and evolution handling reduce ongoing maintenance requirements while ensuring data consistency across all replicas.

Advanced Schema Change Management

Automated schema evolution capabilities handle database structure changes without system downtime. Airbyte detects schema modifications and, depending on the policy configured for each connection, propagates them to the destination automatically or flags them for review, while maintaining data integrity and consistency.

This capability is particularly valuable for organizations with rapid development cycles or complex application environments where schema changes occur frequently. Traditional replication systems often require manual reconfiguration for schema changes, creating operational overhead and potential service disruptions.

Sophisticated CDC Functionality

Native Change Data Capture support provides near real-time replication for critical business applications. Log-based CDC minimizes source system impact while ensuring comprehensive change capture across all database operations.

The CDC implementation handles complex scenarios including large transactions, schema evolution, and heterogeneous database environments. Organizations can achieve near real-time analytics capabilities without investing in complex streaming infrastructure.

Flexible Pipeline Management

Airbyte supports multiple ways to manage pipelines: an intuitive UI, programmatic API access, Infrastructure as Code through Terraform, and Python integration via PyAirbyte. This flexibility accommodates different organizational preferences and technical requirements.

Development teams can integrate replication processes into existing workflows and automation frameworks. The variety of management options ensures that Airbyte fits seamlessly into existing operational processes rather than requiring workflow changes.

Enterprise-Grade Security and Governance

Comprehensive security capabilities include end-to-end encryption, role-based access control, and compliance support for SOC 2 and GDPR requirements. These features ensure that replication processes maintain the same security standards as primary data systems.

Advanced governance capabilities provide detailed audit trails, data lineage tracking, and compliance reporting that meet enterprise and regulatory requirements. Organizations can implement sophisticated data governance policies without sacrificing operational efficiency.

Key Takeaways

Database replication empowers you to protect your data while maintaining high accessibility, availability, and resilience against various failure scenarios. The evolution from traditional backup-based approaches to real-time replication represents a fundamental shift in how organizations approach data protection and business continuity.

Modern replication strategies now include AI-assisted connector building, near-real-time data movement via Change Data Capture, and cloud-native deployment options that reduce complexity while improving reliability. These advances enable organizations to achieve enterprise-grade data protection without the traditional resource investments and operational overhead.

The choice of replication technology should align with your organization's specific requirements for consistency, performance, scalability, and operational simplicity. Platforms like Airbyte demonstrate how modern approaches can eliminate the traditional trade-offs between functionality and ease of use, enabling organizations to implement sophisticated replication strategies without extensive technical expertise.

FAQs

What is the data replication process?

Data replication is the process of creating multiple copies of your data across different locations to enhance availability and reliability.

Why do we need database replication?

Replication keeps standby copies ready for disaster recovery and failover, improves performance through load distribution, and lets users read from geographically closer copies.

When should you replicate a database?

Replicate a database when priorities include load balancing, low-latency access, geographic distribution, disaster recovery preparation, and high availability.

What is an example of database replication?

Creating copies of a banking database across multiple data centers so customers can continue transactions even if the primary site fails.

Is database replication always real-time?

No. Replication can be real-time or scheduled, depending on the chosen method and requirements.

How do data types impact database replication performance?

Efficient data types improve replication speed, while complex types (e.g., large objects, JSON) may require additional processing that can impact throughput and latency.
