What Is a Serverless Data Pipeline: A Comprehensive Guide

✨ AI Generated Summary

Serverless data pipelines enable organizations to build scalable, cost-efficient, and flexible data processing workflows without managing infrastructure, using cloud-managed functions triggered by events or schedules. Key components include event triggers, serverless functions, data ingestion, transformation, storage, orchestration, analytics, and security, supported by tools like AWS Lambda, Azure Functions, and Airbyte.

- Advantages: pay-as-you-go cost model, faster development, automatic scaling, minimal operational overhead, high availability, and future-proofing.

- Use cases: IoT data processing, automated ETL, real-time sentiment analysis, backend processing, batch reporting, data integration, and log analysis.

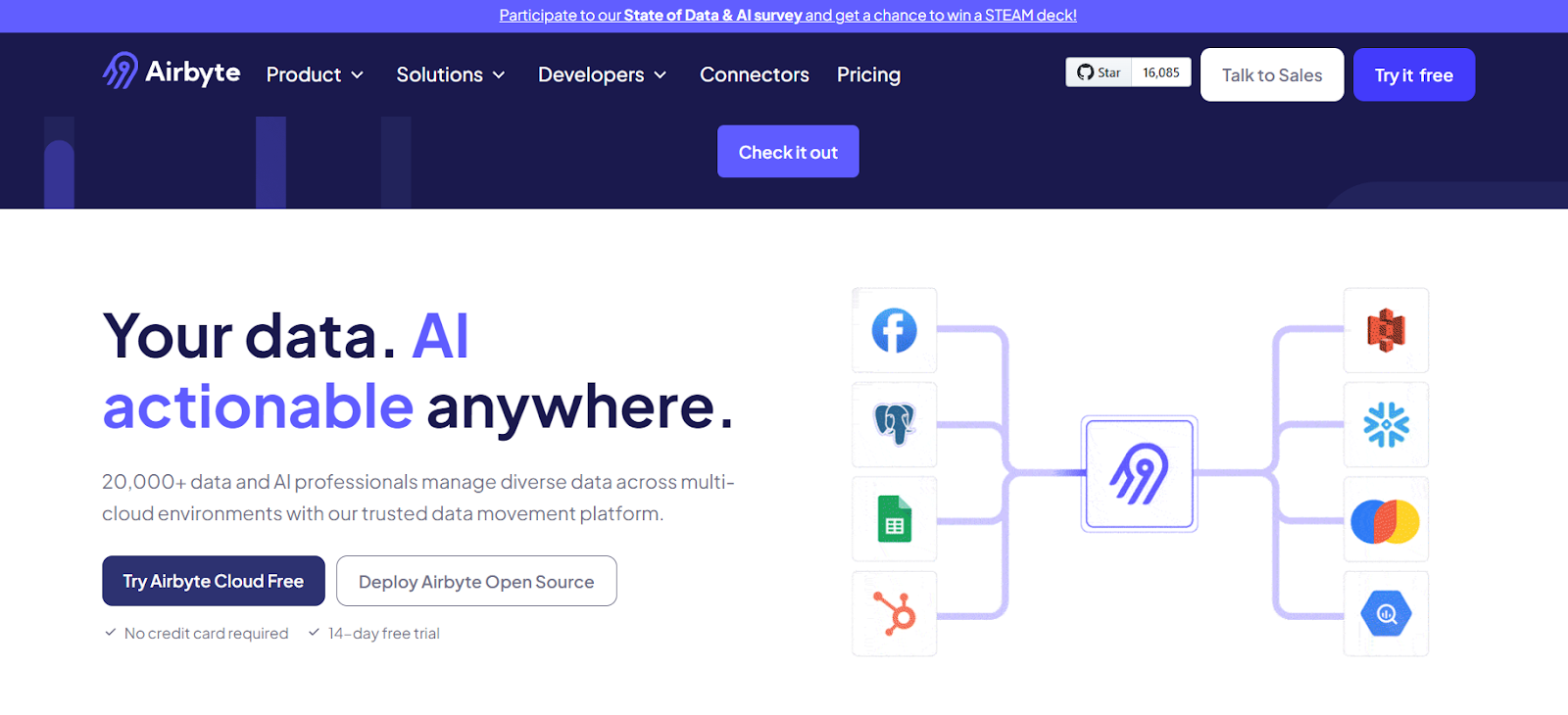

- Airbyte simplifies serverless pipeline creation with pre-built connectors, AI-assisted custom connectors, and integration with orchestration tools for streamlined, scalable workflows.

Effective data processing helps organizations gain timely insights and monetize them by responding quickly to changing market conditions. However, conventional data pipelines often involve managing and maintaining complex infrastructure, and scaling that infrastructure to match your needs can be costly. This is where serverless data pipelines can help.

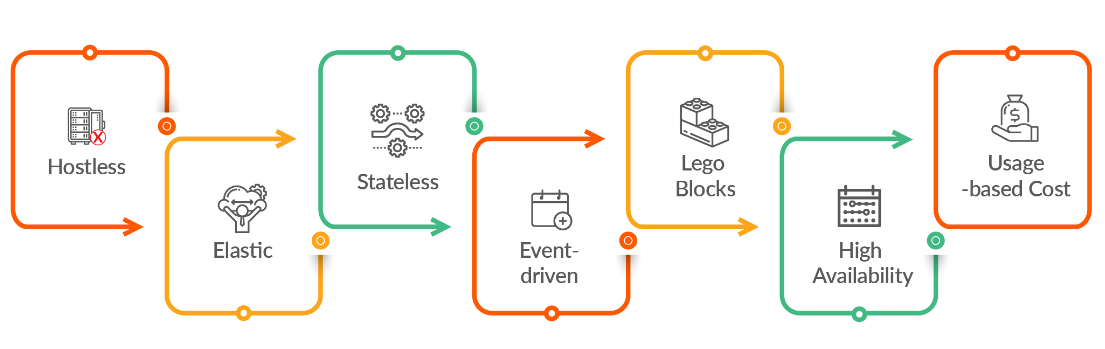

Before discussing serverless data pipelines, let’s explore serverless computing and its role in facilitating these pipelines. Serverless computing is a model in which the cloud provider manages the underlying servers and software. You can rent these resources on demand, pay for what you use, and build and deploy data pipelines without worrying about the overhead of managing servers.

This article will provide you with a detailed overview of serverless data pipelines, how they work, their advantages, and use cases.

What are Serverless Data Pipelines?

Serverless data pipelines are a modern approach to building and managing data processing workflows. They are a data processing architecture in which cloud providers like AWS, Azure, or Google Cloud handle the scaling, provisioning, and maintenance of the required infrastructure.

You can build serverless data pipelines to perform ETL (extract, transform, load) tasks while easily adding or removing resources. They are flexible, fault-tolerant, and cost-efficient solutions that enable you to work efficiently with fluctuating data, batch processing, and real-time data streams.

Serverless data pipelines utilize small, independent code snippets known as functions that can be triggered by events or scheduled to run periodically. You can develop and deploy these functions using technologies like Google Cloud Functions, AWS Lambda, Apache OpenWhisk, and Azure Functions and create resilient data pipelines.

Key Components of Serverless Data Pipelines

Serverless data pipeline architecture has several key components that work together to help you efficiently process and analyze data. By understanding these components, you can build data pipelines that suit your requirements. Here is a brief rundown:

- Event Triggers: Serverless pipelines are often initiated by events, such as file uploads, database changes, or scheduled tasks. These events act as triggers that automatically start the data processing workflow. For example, AWS Lambda or Azure Functions can run a function whenever new data is uploaded, and a scheduler such as Amazon EventBridge Scheduler or Amazon CloudWatch Events can start one at set times.

- Serverless Functions: These small, stateless functions, typically managed by cloud providers, execute computational tasks such as data transformation, enrichment, or validation. You can execute them through triggers, and because they are stateless, they rely on external storage to maintain state or pass data between stages of the pipeline.

- Data Ingestion: This is the first step in building pipelines. You can use tools like Airbyte, Amazon Kinesis, Google Cloud Pub/Sub, and Azure Event Hubs to collect raw data from relevant sources. These scalable services allow you to capture large volumes of data from databases, APIs, IoT devices, and streaming platforms without needing dedicated servers.

- Data Transformation and Processing: This step involves transforming or manipulating raw data into a suitable format for analysis or storage using filtering, aggregation, or normalization. You can use tools like AWS Glue and Google Cloud Dataflow to automate data-heavy operations within your serverless ETL pipelines, with a workflow tool like Apache Airflow scheduling the jobs.

- Data Storage: To store your data for further processing or archiving, you can use cloud solutions such as Amazon S3, Google Cloud Storage, or Azure Blob Storage. These solutions help you store both structured and unstructured data while ensuring data availability and durability.

- Data Orchestration: Data orchestration involves managing the flow and dependencies between several tasks within the pipeline. By using managed orchestration services like AWS Step Functions or Google Cloud Composer, you can define workflows and coordinate the different stages of a data pipeline.

- Data Analytics and Visualization: Once your data is analysis-ready, you can leverage Google Data Studio (now Looker Studio), Amazon Athena, Microsoft Power BI, or Amazon QuickSight to derive insights and visualize results. These tools help you run queries directly on the data stored in serverless databases or storage solutions and create charts, real-time dashboards, and reports.

- Security and Compliance: Your data pipelines should have robust security measures like encryption, monitoring, and access control to protect sensitive information and meet regulatory standards (GDPR or HIPAA). You can also rely on AWS, Azure, or Google Cloud, which offer built-in features such as Identity and Access Management, logging, and auditing, to implement data governance.
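To make the event-trigger and serverless-function components concrete, here is a minimal sketch of a Lambda-style handler that reacts to a file upload. It assumes the event follows the shape of an Amazon S3 "object created" notification (a `Records` list with `s3.bucket.name` and `s3.object.key`); the function names are illustrative, and the handler only reports what it found rather than doing real processing.

```python
from urllib.parse import unquote_plus


def extract_uploaded_objects(event: dict) -> list[tuple[str, str]]:
    """Return (bucket, key) pairs found in an S3-style upload notification."""
    uploaded = []
    for record in event.get("Records", []):
        s3_info = record.get("s3", {})
        bucket = s3_info.get("bucket", {}).get("name")
        key = s3_info.get("object", {}).get("key")
        if bucket and key:
            # Object keys arrive URL-encoded in notifications (a space becomes "+").
            uploaded.append((bucket, unquote_plus(key)))
    return uploaded


def handler(event: dict, context: object = None) -> dict:
    """Stateless entry point: everything it needs comes from the event itself."""
    objects = extract_uploaded_objects(event)
    # A real function would hand each object to the next stage or write results
    # to external storage, because nothing persists between invocations.
    return {"processed": len(objects), "objects": objects}
```

Because the function keeps no state of its own, any progress it needs to remember (for example, which files were already handled) would have to live in external storage.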

How do Serverless Data Pipelines Work?

In serverless data pipelines, every task, such as ingestion, transformation, and orchestration, can be executed as a function triggered by events or schedules. Cloud providers supply the resources, services, and workflow engines you need to implement the functions that define and manage the pipeline.

The arrival of new data acts as a trigger, and tools like Azure Event Hubs or Amazon Kinesis begin ingesting it. These tools collect data from various sources, such as web applications, marketing platforms, and sensors. After ingestion, serverless computing services like AWS Lambda or Azure Functions can clean, filter, or reformat the data for further processing.

In the next step, this transformed data is loaded into serverless storage solutions. Additionally, serverless orchestration tools can help ensure that data moves through the pipeline in the correct order and without interruption. The last step involves using this enriched data to gain insights into your business operations, perform root-cause analysis, or create visually interactive dashboards.
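The ingest-then-transform flow described above can be sketched as a single stateless function. This example assumes a Kinesis-style event in which each record carries a base64-encoded JSON payload under `kinesis.data`; the field names (`user_id`, `amount`, `currency`) and the cleaning rules, including the `USD` default, are hypothetical and stand in for whatever your data actually needs.

```python
import base64
import json
from typing import Optional


def clean_record(raw: dict) -> Optional[dict]:
    """Filter out incomplete rows and normalize the remaining fields."""
    if "user_id" not in raw or raw.get("amount") is None:
        return None  # filtering: skip rows missing required fields
    return {
        "user_id": str(raw["user_id"]).strip(),                # cleaning
        "amount": round(float(raw["amount"]), 2),              # reformatting
        "currency": str(raw.get("currency", "USD")).upper(),   # hypothetical default
    }


def handler(event: dict, context: object = None) -> list[dict]:
    """Decode each streamed record, clean it, and return the rows ready to load."""
    cleaned = []
    for record in event.get("Records", []):
        payload = base64.b64decode(record["kinesis"]["data"])
        row = clean_record(json.loads(payload))
        if row is not None:
            cleaned.append(row)
    return cleaned
```

In a full pipeline, the returned rows would be written to a storage service in the next step rather than returned to the caller.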

While there is no one way to develop serverless data pipelines, you can depend on Airbyte, an AI-powered data movement platform, to offer you a streamlined approach. This tool can easily be deployed on your existing infrastructure and integrated with serverless architectures using AWS Lambda triggers. These serverless services help you initiate your data syncs effortlessly.

Below are some key features of this platform that help you simplify the pipeline development process:

- Intuitive User Interface: The UI is easy to use and enables even people with no coding experience to build and deploy data pipelines. It helps them explore data independently, generate reports, or perform other downstream tasks.

- Pre-Built Connectors: Airbyte provides a library of over 400 pre-built connectors, which allow you to integrate with platforms that support serverless implementations in minutes. Some examples include Amazon SQS, Azure Blob Storage, Amazon S3, Google Pub/Sub, and Amazon Kinesis.

- Custom Connector Development: You can also build connectors tailored to your project’s requirements using the low-code Connector Development Kit (CDK), Java-based CDK, Python-based CDK, and Connector Builder. When you use Connector Builder, Airbyte’s AI assistant speeds up development by pre-filling and configuring several fields, and it offers intelligent suggestions to fine-tune your configurations.

- Support for Vector Databases: With Airbyte, you can simplify your GenAI workflows by directly storing your semi-structured and unstructured data in vector databases like Pinecone, Chroma, Qdrant, and Milvus. It also allows you to integrate with LLM frameworks like LangChain and LlamaIndex to perform RAG transformations like automatic chunking, embedding, and indexing.

To future-proof your data workflows, Airbyte has also announced the general availability of the self-managed enterprise edition. This version offers you scalable data ingestion capabilities and supports multi-tenant data mesh or data fabric architectures. You can also operate the self-managed enterprise solution in air-gapped environments and ensure no data leaves your environment.

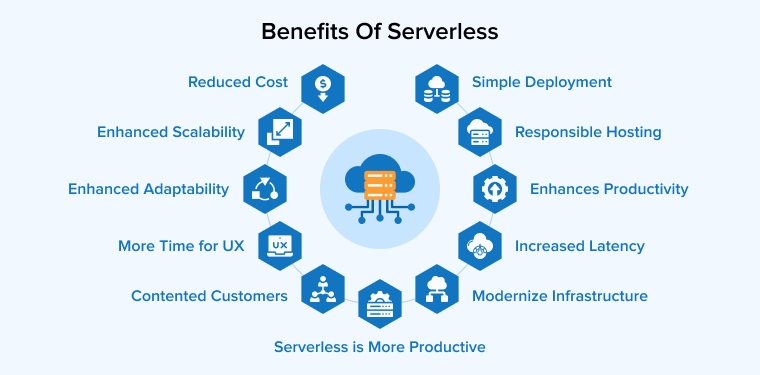

Advantages of Serverless Data Pipelines

Serverless pipelines remove the burden of managing expensive hardware and suit modern data processing needs. Below are some reasons to adopt serverless data pipelines:

- Cost-Efficiency: Unlike conventional data pipeline services that require an upfront investment in hardware and continuous maintenance charges, serverless pipelines operate on a “pay-as-you-go” model. This implies that you only have to pay for the resources you use during processing and will not be charged for idle servers. As your data load changes, the serverless pipelines automatically scale up or down so that your expenses align with your usage and budget.

- Faster Development Cycles: Serverless pipelines speed up the development process by offering built-in components and automation capabilities to implement your everyday tasks. This allows you to dedicate more time to creating and refining the data logic rather than dealing with complex configurations. These serverless solutions usually have easy-to-use APIs and pre-configured environments, enabling your teams to rapidly prototype, test, and deploy data solutions.

- Scalability and Flexibility: Manually managing the sudden ups and downs of the data traffic is challenging and time-consuming. However, with the flexible, automatic scaling feature of serverless pipelines, you can expect consistent performance, especially if your data workload is unpredictable or continuously increasing.

- Minimal Operational Overhead: With serverless data pipelines, instead of continuously looking after your infrastructure’s maintenance needs, tracking software updates, and performing capacity planning, you can prioritize strategic activities. This includes optimizing data migrations, analyzing data, and generating reports. The cloud providers take care of all the operational tasks, saving time and effort.

- High Availability and Resilience: Serverless data pipelines are designed for resilience and high availability. The cloud providers usually distribute workloads across multiple data centers so that there is no single point of failure, which keeps your data pipelines operational even during hardware failures or outages.

- Future-Proof Solution: Cloud service providers automatically update your infrastructures and offer additional services. This allows your data pipelines to stay relevant to the modern tech stack and makes it easier to integrate with advanced tools and capabilities over time.
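The pay-as-you-go point in the list above comes down to simple arithmetic: you are billed for compute actually consumed plus a per-request fee, so zero invocations cost nothing. The sketch below assumes a common billing shape (GB-seconds of compute plus a price per million requests); the rates in the usage note are placeholders, not any provider's real prices.

```python
def estimate_monthly_cost(
    invocations: int,
    avg_duration_s: float,
    memory_gb: float,
    price_per_gb_second: float,
    price_per_million_requests: float,
) -> float:
    """Estimate a month's bill for a function under a pay-per-use model."""
    # Compute is billed as memory size multiplied by total running time.
    compute_cost = invocations * avg_duration_s * memory_gb * price_per_gb_second
    # Requests are billed per million invocations.
    request_cost = invocations / 1_000_000 * price_per_million_requests
    return compute_cost + request_cost
```

For example, with placeholder rates of 0.00002 per GB-second and 0.20 per million requests, one million half-second invocations at 1 GB come to about 10.20, while an idle month comes to 0. Check your provider's current pricing page before relying on any estimate.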

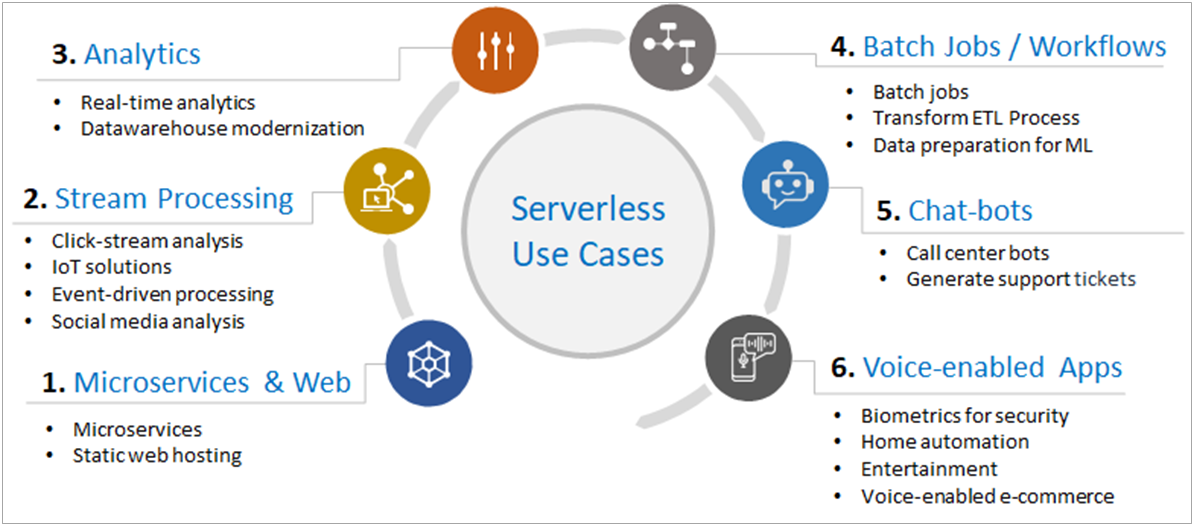

Use Cases of Serverless Data Pipelines

Serverless data pipelines have many use cases. Some of them are mentioned below:

IoT Data Processing for Smart Homes

Smart home systems depend on IoT sensors to track energy use, security systems, and weather conditions. With serverless pipelines, you can ingest data from multiple sensors, analyze it in real-time, and trigger specific actions like adjusting thermostats or sending security alerts.
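As a rough illustration of the "analyze and trigger actions" step, the sketch below maps sensor readings to actions. The `Reading` type, the sensor kinds, the 26 °C threshold, and the action names are all hypothetical; a real system would call a thermostat or notification service instead of returning strings.

```python
from dataclasses import dataclass


@dataclass
class Reading:
    sensor_id: str
    kind: str      # hypothetical kinds: "temperature" or "motion"
    value: float


def decide_actions(readings: list[Reading], max_temp_c: float = 26.0) -> list[str]:
    """Turn a batch of sensor readings into the actions they should trigger."""
    actions = []
    for reading in readings:
        if reading.kind == "temperature" and reading.value > max_temp_c:
            actions.append(f"lower_thermostat:{reading.sensor_id}")
        elif reading.kind == "motion" and reading.value > 0:
            actions.append(f"send_security_alert:{reading.sensor_id}")
    return actions
```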

Automated ETL Workflows for Data Warehousing

Serverless pipelines help you gather raw data from various sources, clean and transform it based on business needs, and load it into cloud-based data warehouses. This end-to-end automation of ETL workflows reduces the chances of bottlenecks or errors and keeps data consistently ready for downstream analysis.

Real-Time Sentiment Analysis of Social Media Posts

Serverless data pipelines are well suited to real-time sentiment analysis. Brands can scrape data from social media platforms like Twitter or Instagram to analyze how people feel about their products or events. By using serverless pipelines, they can process high-volume data continuously and surface insights on customer reactions and trends, enabling faster business decisions.

Backend Processing for Web and Mobile Applications

You can use pipelines with serverless functions to implement backend processes like managing user authentication, processing orders, and searching products for web and mobile apps. For example, an e-commerce platform can use serverless functions to trigger tasks like updating inventory or sending order confirmations.

Batch Processing for Monthly Reports

Financial or marketing teams that deal with massive datasets can leverage serverless pipelines to automate batch data processing at the end of each month. The pipeline can process data based on predefined rules and generate detailed reports.
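A scheduled function for this kind of month-end job could apply a predefined rule such as "total the amounts per month and category". The sketch below assumes each transaction is a dict with `date`, `category`, and `amount` keys; those names are illustrative.

```python
from collections import defaultdict
from datetime import date


def monthly_totals(transactions: list[dict]) -> dict[tuple[str, str], float]:
    """Sum transaction amounts per (YYYY-MM, category) pair."""
    totals: dict[tuple[str, str], float] = defaultdict(float)
    for tx in transactions:
        month = tx["date"].strftime("%Y-%m")
        totals[(month, tx["category"])] += tx["amount"]
    return dict(totals)
```

The resulting dictionary can then be rendered into a report or loaded into a warehouse table by a later stage.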

Data Integration Across Multiple Systems

When making crucial decisions, you require a unified view of your data residing in disparate systems; serverless pipelines can help you with this. These pipelines can collect data from CRM systems, ERP platforms, and third-party APIs into a centralized repository, enhancing business intelligence and data visualization efforts.
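The idea of a unified view can be sketched as a merge keyed on a shared identifier. This example assumes both systems expose a `customer_id` field and prefixes every other field with its system of origin so that values never overwrite each other; the field names and the two-source setup are hypothetical.

```python
def unify(crm_rows: list[dict], erp_rows: list[dict], key: str = "customer_id") -> list[dict]:
    """Merge rows from two systems into one record per shared key."""
    merged: dict = {}
    for source_name, rows in (("crm", crm_rows), ("erp", erp_rows)):
        for row in rows:
            record = merged.setdefault(row[key], {key: row[key]})
            for field, value in row.items():
                if field != key:
                    # Prefix with the source so the origin of each value stays visible.
                    record[f"{source_name}_{field}"] = value
    return list(merged.values())
```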

Log Data Analysis for Application Performance Monitoring

Serverless pipelines can process logs in real time. They allow you to combine performance metrics from different systems and quickly identify potential risks or failures. This gives you the chance to fix issues quickly and improve the application’s reliability and overall user experience.
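One simple way to spot failing components is to compute an error rate per service from incoming log lines. The sketch below assumes a hypothetical log format of `service LEVEL message` and an arbitrary alert threshold; real logs would need a parser matched to their actual format.

```python
from collections import Counter


def error_rates(log_lines: list[str]) -> dict[str, float]:
    """Return the share of ERROR lines for each service seen in the logs."""
    total: Counter = Counter()
    errors: Counter = Counter()
    for line in log_lines:
        parts = line.split(" ", 2)
        if len(parts) < 3:
            continue  # skip lines that do not match the assumed format
        service, level, _message = parts
        total[service] += 1
        if level == "ERROR":
            errors[service] += 1
    return {service: errors[service] / total[service] for service in total}


def services_over_threshold(log_lines: list[str], threshold: float = 0.5) -> list[str]:
    """List services whose error rate is strictly above the threshold."""
    rates = error_rates(log_lines)
    return sorted(service for service, rate in rates.items() if rate > threshold)
```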

Serverless Data Pipelines with Airbyte

Creating a serverless data pipeline with Airbyte involves integrating Airbyte with serverless cloud services. This approach allows you to leverage Airbyte’s robust AI-powered data integration capabilities while utilizing serverless resources for scalability and reduced management overhead.

Here is a step-by-step guide for you to get started with building a serverless pipeline using Airbyte:

Step 1: Choose Your Deployment Option

You can deploy Airbyte locally on your computer, on a single node such as an EC2 virtual machine, or on a Kubernetes cluster hosted with a cloud provider like AWS, GCP, or Azure. Self-managed deployments on a cloud provider or Kubernetes can back a serverless data pipeline, but they leave more of the management work to you. For a more streamlined experience, consider Airbyte Cloud.

Airbyte Cloud is a fully managed version that supports automatic scaling and performance optimization, lowering the complexity of managing pipelines. It also provides advanced features like automated schema propagation and rolling connector upgrades. Airbyte Cloud handles all the updates and maintenance tasks of the underlying infrastructure, allowing you to focus on your data flows.

Additionally, you can control data synchronization settings and reset a connection's jobs by configuring Airbyte to trigger these tasks based on events or schedules. This feature of the platform aligns with the event-driven nature of serverless architectures.

Step 2: Triggering Airbyte Syncs

Airbyte offers several ways to trigger data synchronizations. Its built-in scheduler supports three schedule types: scheduled, which runs syncs at a fixed interval; cron, which runs them on a cron expression; and manual, which runs them only when you start them.
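For the cron option, the small helper below builds a "run once a day" expression. It assumes the Quartz cron format (seconds, minutes, hours, day of month, month, day of week), which to the best of our knowledge is what Airbyte's cron scheduling expects; confirm this against the scheduling documentation for your Airbyte version. The helper name is illustrative.

```python
def daily_quartz_cron(hour: int, minute: int = 0) -> str:
    """Build a Quartz-style cron expression that fires once a day at hour:minute."""
    if not (0 <= hour <= 23 and 0 <= minute <= 59):
        raise ValueError("hour must be 0-23 and minute must be 0-59")
    # Field order: seconds minutes hours day-of-month month day-of-week.
    # "?" means "no specific value" for day-of-week in Quartz syntax.
    return f"0 {minute} {hour} * * ?"
```

For instance, `daily_quartz_cron(2, 30)` produces an expression for 02:30 every day.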

To synchronize your data programmatically, you can also use PyAirbyte, Airbyte’s open-source Python library.
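Another programmatic route is for a serverless function to start a sync over HTTP when an event arrives. The sketch below assumes the Airbyte API exposes a job-creation endpoint at `https://api.airbyte.com/v1/jobs` that accepts a `connectionId` and a `jobType` with a bearer token; treat the URL, payload fields, and helper names as assumptions to verify against the API reference for your deployment.

```python
import json
import urllib.request

# Assumed endpoint; self-managed deployments will use a different base URL.
AIRBYTE_JOBS_URL = "https://api.airbyte.com/v1/jobs"


def build_sync_request(
    connection_id: str, api_token: str, job_type: str = "sync"
) -> urllib.request.Request:
    """Prepare (but do not send) the HTTP request that asks Airbyte to run a job."""
    body = json.dumps({"connectionId": connection_id, "jobType": job_type})
    return urllib.request.Request(
        AIRBYTE_JOBS_URL,
        data=body.encode("utf-8"),
        method="POST",
        headers={
            "Authorization": f"Bearer {api_token}",
            "Content-Type": "application/json",
            "Accept": "application/json",
        },
    )


def trigger_sync(connection_id: str, api_token: str) -> dict:
    """Send the request and return the parsed JSON response."""
    request = build_sync_request(connection_id, api_token)
    with urllib.request.urlopen(request, timeout=30) as response:
        return json.loads(response.read())
```

Keeping request construction separate from sending makes the function easy to test without network access; a Lambda handler would simply call `trigger_sync` with a connection ID and a token read from a secret store.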

Step 3: Set Up Your Data Source

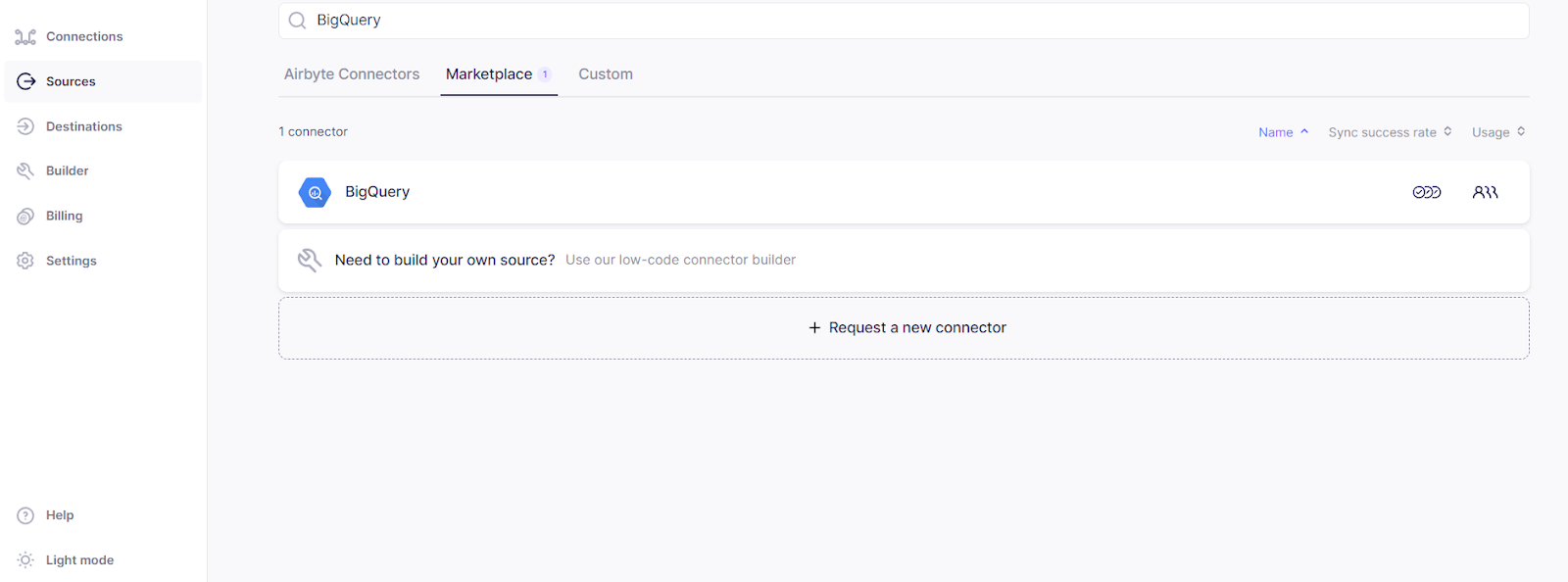

By following the steps below, you will be able to configure the serverless data source part of the pipeline:

- Go to Airbyte Cloud and sign up for an account.

- Click on the Sources tab on the left panel.

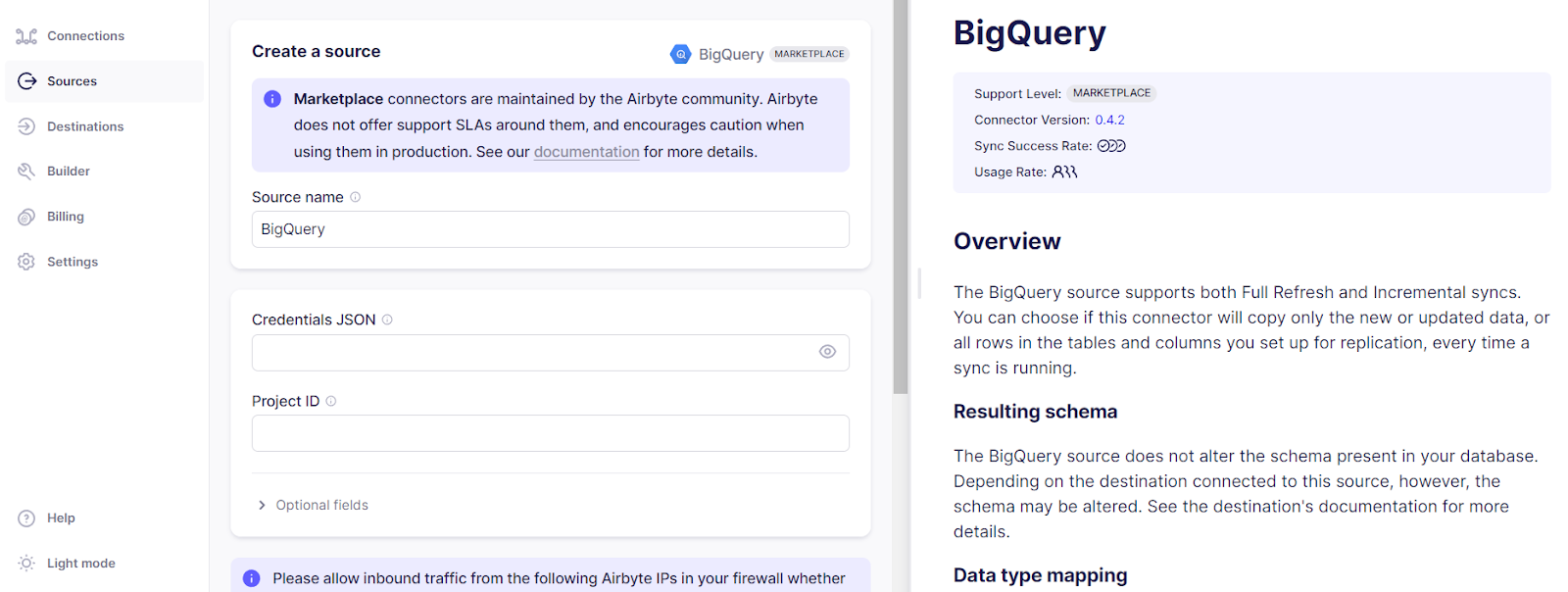

- Enter the serverless data source of your choice and click on the respective connector. For this tutorial, let’s consider Google BigQuery as the data source.

- Once you click on the connector, you will be redirected to the source configuration page, where you will enter all the mandatory details. These include the Source name, Credentials JSON, and Project ID.

- After this, click on the Set up Source button. Airbyte will run a quick test to verify the connection to the data source.

Step 4: Set Up Your Destination

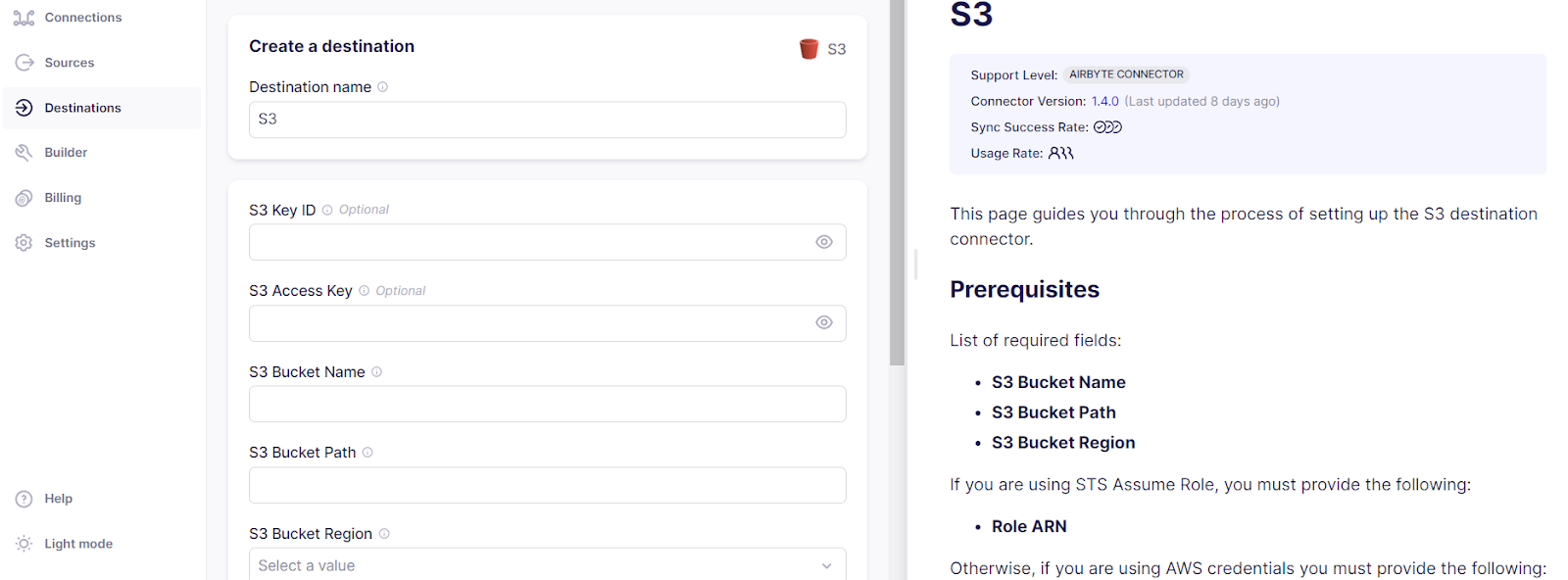

- Similarly, click on the Destinations tab on the left panel and enter your preferred destination.

- Click on the respective connector and enter all the mandatory details related to the destination. For convenience, let’s opt for Amazon S3 as the destination.

- Input all the necessary information, such as Destination Name, S3 Key ID, S3 Bucket Name, and S3 Bucket Region. Then, click the Set up Destination button.

- Airbyte will run a verification check to ensure a successful connection.
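Before filling in the forms, it can help to check that you have every mandatory value at hand. The sketch below lists the fields named in this tutorial for the BigQuery source and the S3 destination; the snake_case keys are hypothetical labels for the form fields, not Airbyte's actual configuration schema.

```python
# Hypothetical keys mirroring the mandatory form fields named in this tutorial.
REQUIRED_FIELDS = {
    "bigquery_source": ("source_name", "credentials_json", "project_id"),
    "s3_destination": (
        "destination_name",
        "s3_key_id",
        "s3_bucket_name",
        "s3_bucket_region",
    ),
}


def missing_fields(kind: str, config: dict) -> list[str]:
    """Return the mandatory fields that are absent or empty in the config."""
    return [name for name in REQUIRED_FIELDS[kind] if not config.get(name)]
```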

Alternatively, you can use Connector Builder and AI assistant to configure the source and destination quickly.

Step 5: Set Up a Connection

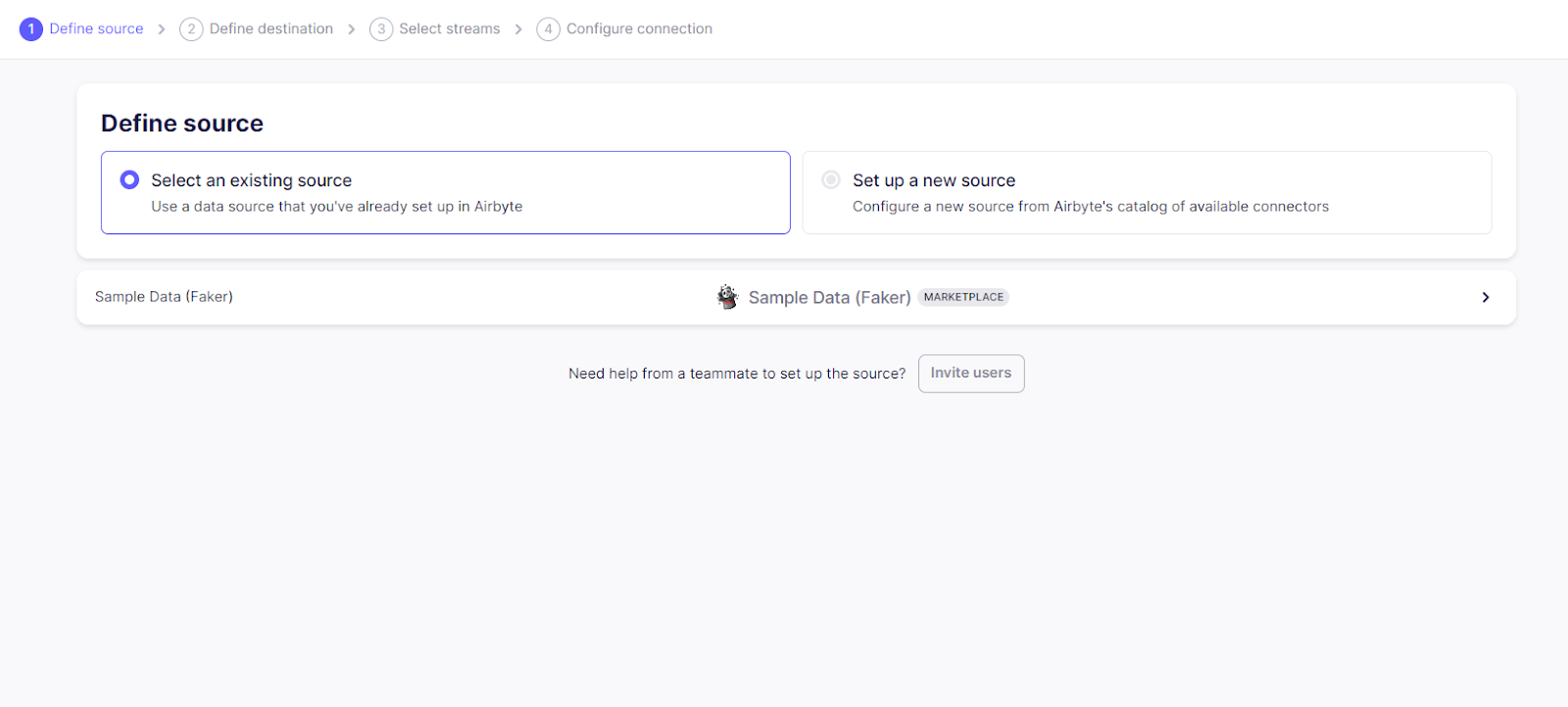

- Click on the Connections tab present on the left side of the dashboard. You will see a four-step process to complete the connection.

- The first two steps involve selecting the source and destination that you previously configured.

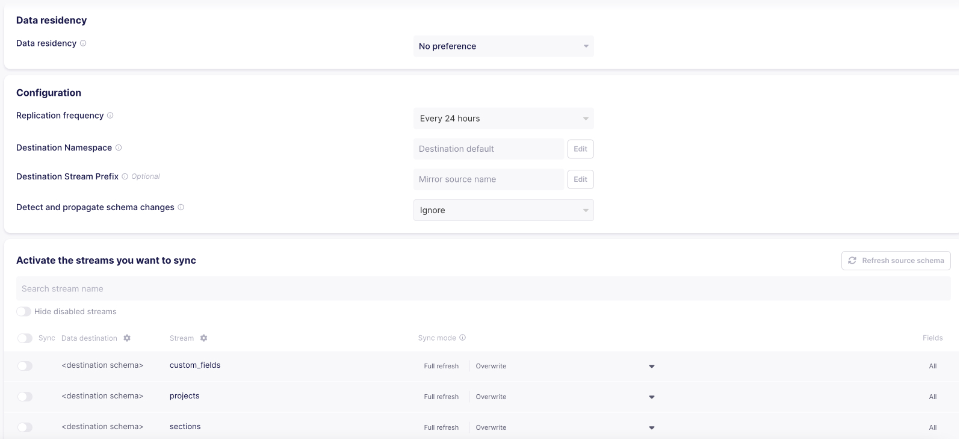

- The next two steps involve defining the frequency of your data syncs and the mode of synchronization (full refreshes or incremental).

- Specifying the replication frequency (for example, hourly, every 24 hours, or weekly) sets the schedule for your data syncs. Once the schedule is set, Airbyte takes over and triggers the sync at the specified intervals.

- Click on Test Connection and save your configuration. When your pipeline setup passes the test, click on Set up Connection.
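The difference between the two synchronization modes mentioned in the steps above can be illustrated with a conceptual sketch. This is not Airbyte's implementation: it simply assumes each row has a monotonically increasing cursor field (here a hypothetical `updated_at`) and shows that a full refresh copies everything while an incremental sync copies only rows newer than the last saved cursor.

```python
from typing import Any, Optional


def full_refresh(source_rows: list[dict]) -> list[dict]:
    """Copy every row on every run, regardless of what was synced before."""
    return list(source_rows)


def incremental(
    source_rows: list[dict], cursor_field: str, last_cursor: Optional[Any]
) -> tuple[list[dict], Optional[Any]]:
    """Copy only rows past the saved cursor and return the updated cursor."""
    new_rows = [
        row
        for row in source_rows
        if last_cursor is None or row[cursor_field] > last_cursor
    ]
    # If nothing new arrived, the cursor stays where it was.
    new_cursor = max((row[cursor_field] for row in new_rows), default=last_cursor)
    return new_rows, new_cursor
```

Incremental syncs usually move less data per run, which fits the pay-per-use model, whereas a full refresh is simpler when the source has no reliable cursor field.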

Step 6: Orchestrate the Pipeline with Serverless Orchestration Tools

To manage and automate serverless data pipelines, you can integrate Airbyte with data orchestrators like Apache Airflow, Dagster, Prefect, and Kestra. Additionally, the Kestra plugin, available for open-source and enterprise versions, allows you to schedule data synchronizations.

By combining Airbyte with cloud-native serverless services, you can build a scalable serverless data pipeline.
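At its core, an orchestrator runs each stage only after the stages it depends on have finished. The sketch below shows that idea with Python's standard-library `graphlib` (Python 3.9+); it is a simplified stand-in for tools like Airflow, Dagster, Prefect, or Kestra, and the task names in the usage note are hypothetical.

```python
from graphlib import TopologicalSorter
from typing import Callable


def run_in_dependency_order(
    tasks: dict[str, Callable[[], None]],
    depends_on: dict[str, set[str]],
) -> list[str]:
    """Run every task after its prerequisites and return the execution order."""
    # depends_on maps a task name to the set of tasks that must finish first.
    order = list(TopologicalSorter(depends_on).static_order())
    for name in order:
        tasks[name]()
    return order
```

For example, with `depends_on = {"transform": {"sync"}, "report": {"transform"}}`, the sync task runs first, then the transformation, then the report. Real orchestrators add retries, scheduling, and monitoring on top of this ordering.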

Wrapping It Up

Serverless data pipelines offer a flexible, cost-effective, and scalable approach to building and managing data processing workflows. By leveraging serverless functions, event-driven architectures, and cloud-based services, you can streamline data ingestion, transformation, and analysis without the complexities of managing infrastructure.

The advantages of this approach include reduced operational overhead along with improved scalability, flexibility, availability, and reliability. By integrating serverless platforms with easy-to-use tools like Airbyte, you can simplify the process of developing serverless data pipelines. Adopting these methods for faster, more reliable data workflows can give you a competitive edge and help you succeed in the long run.