Agent Engine Public Beta is now live!

Learn to build efficient data pipelines using Airbyte, dbt, and DuckDB. A comprehensive guide for data engineers with practical implementation steps.

Summarize this article with:

Your organization’s data might be available in dispersed locations and varied formats. Integrating this data into a single repository, like a data warehouse, is often considered beneficial for facilitating high accessibility within your organization.

However, ensuring data compatibility with the destination schema can be challenging. For this reason, you must first apply transformation logic to the data to enhance its quality.

One prevalent approach to handling this data workflow is using tools like dbt and DuckDB. DuckDB is a lightweight SQL database system for temporary and persistent data storage in the development environment. dbt, conversely, is a transformation tool that offers adapters to connect to prominent databases like DuckDB to orchestrate the transformation logic.

This article will walk you through the process of integrating dbt DuckDB with Airbyte, one of the best data pipeline tools.

DuckDB is an in-process relational database management system (RDBMS) that offers online analytical processing (OLAP) functionality. This makes it a prominent option for handling analytical query workloads. Using DuckDB, you can perform operations like aggregation and joins on large-scale data tables.

dbt, or data build tool, is an open-source transformation solution that allows you to prepare data for analysis. Using dbt, you can modify your source data into a format compatible with the downstream applications. To accomplish this, dbt supports data modeling capabilities, allowing you to create custom transformation models using SQL. Eventually, you can analyze the transformed data and generate actionable insights that can enhance business performance.

dbt offers two flexible products, including the Cloud and Core versions. dbt Cloud is a fully managed service, while dbt Core is a self-managed, open-source option.

Airbyte is an AI-powered, no-code data replication tool that enables you to move data from various sources to your preferred destination. It offers an extensive library of 550+ pre-built connectors, allowing you to develop data pipelines to extract and load structured, semi-structured, and unstructured data. In case a connector is unavailable, Airbyte provides a suite of Connector Development Kits (CDKs) and a Connector Builder for custom connector development.

You can choose to use the Airbyte Cloud version for building the dbt DuckDB pipeline. Its intuitive user interface and pre-built connectors simplify your journey of extracting the data from your preferred sources into DuckDB or Motherduck. The Airbyte DuckDB destination connector is compatible with Motherduck—an analytics database—for persistent storage.

To perform custom transformations on the consolidated data, you can integrate Airbyte with dbt. All you have to do is navigate to the Setting tab and configure dbt Cloud integration by providing the Service Token.

However, if you intend to use DuckDB local storage, you can leverage PyAirbyte to develop a custom data pipeline.

When integrating dbt DuckDB using PyAirbyte, it is essential to follow a structured procedure. This involves establishing a data pipeline architecture. In this architecture, PyAirbyte aids you in extracting data to your local machine as a DuckDB cache. Following this, you can create a dbt DuckDB connection that allows data transformation. Eventually, you can store the data in a data warehouse or a database based on your requirements.

Before getting into the steps, you must ensure that all the prerequisites are satisfied. You must have:

Let’s explore how PyAirbyte enables you to build data pipelines using dbt and DuckDB.

Create a virtual environment to isolate all your dependencies in Jupyter Notebook. For a Unix-based system, you can use:

Install all the necessary libraries, including PyAirbyte, DuckDB, dbt core, and dbt DuckDB adapter plugin, on your system by executing:

After installing the required libraries, import them into the editor to begin the data movement.

To check the number of available connectors provided by Airbyte, run the following:

In this tutorial, we will use Polygon.io—a stock market API—as a source. To extract data from this API, you must first log in to the dashboard and access the API key. After accessing the key, execute:

Output:

Provide the API key in the terminal. This step will retrieve the past year’s records for the company Apple's stock, starting from 1st January 2024 to 31st December 2024. You can now verify the connection by running the check method:

Output:

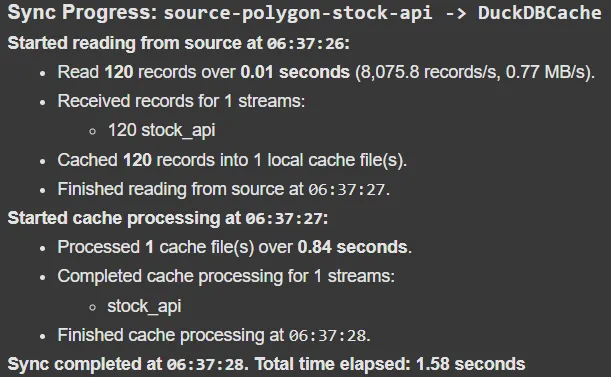

After successfully establishing a connection, select all of the source's streams and load data into the internal DuckDB cache:

Output:

The .cache folder relative to the current working directory will store this cache data.

To effectively work with the DuckDB adapter for dbt, create a connection to the dataset. Replace the file_location placeholder with the exact location where the default_cache.duckdb file is available:

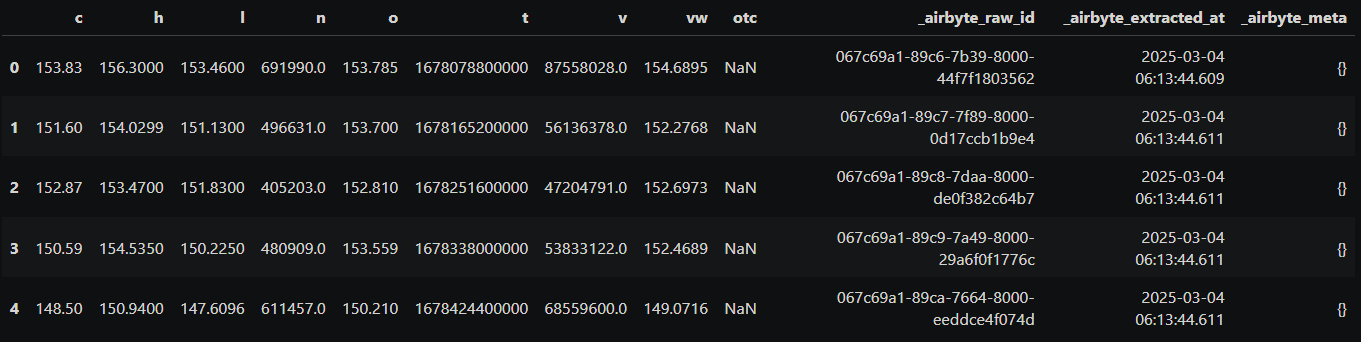

Verify the successful connection to the dataset by executing:

Using this df variable, you can print the Apple stock prices to check the format of the dataset. For example, to print the first five rows in the dataset, use:

Output:

To initialize a new dbt Project, you can execute the following code:

The terminal will prompt you to select the adapter database. Here, you can choose the available duckdb option. This step will create a new folder called dbt_duckdb_project. You can either navigate to the project folder or use the command:

In the .dbt folder, locate the profiles.yml file. This file contains all the dbt connections to the database.

Ensure the profiles.yml file has the DuckDB connection with the correct dataset directory location. If not, you edit the file with this format:

Replace the path with the actual location of the dataset.

You can add custom SQL statements to define models that modify the Polygon stock market data stored in DuckDB, removing inconsistencies and unnecessary values. The models folder lets you specify how you intend to modify the dataset. If you wish to load this dataset in a target system, transform it according to the destination schema. Another option is to use the same Python environment to analyze and visualize the dataset based on the business requirements.

Finally, you can test and execute the dbt project.

To validate your dbt project configuration, execute:

You will see a success message as if everything works as expected. After this, run the dbt project using:

Here are some of the use cases of integrating dbt with DuckDB:

DuckDB assists you in building applications that provide faster data access to end users. dbt can play an essential role in supporting data transformation for this system without requiring an external data storage solution. Integrating DuckDB and dbt pipeline with a binary instruction format for virtual machines, like WebAssembly (WASM), is considered beneficial. This enables the execution of high-performance analytics engines within a browser.

Directly storing data available from various sources in a warehouse can increase storage and computation costs. With dbt DuckDB integration, you can clean the data, remove unnecessary information, and enrich the dataset before loading it into the target system. This can help you cut down additional costs occurring due to redundancy.

Working with dbt and DuckDB in your local environment enables you to implement strategies to improve data quality in the development stage. This can help ensure the generation of high-quality data. After aligning the strategy with the incoming data stream, you can deploy them in the production environment. This is especially helpful for scenarios like schema change management and performance optimization of existing data infrastructures.

This article comprehensively demonstrates how to develop a dbt DuckDB data pipeline that enables you to transform, manage, and analyze data using SQL-based modeling and automation.

Airbyte Cloud simplifies this process by offering pre-built connectors, along with seamless dbt integration. This approach allows you to develop pipelines using a no-code user interface.

On the other hand, PyAirbyte provides a flexible approach. It enables you to extract data from various platforms and load it into a DuckDB cache. The cached data can then be used with the dbt DuckDB adapter, allowing you to create dbt models and apply business logic. Depending on your use case, you can either analyze this transformed data or load it into a database or a data warehouse.

To automate data migration workflows within your organization, sign up for Airbyte.

Download our free guide and discover the best approach for your needs, whether it's building your ELT solution in-house or opting for Airbyte Open Source or Airbyte Cloud.