
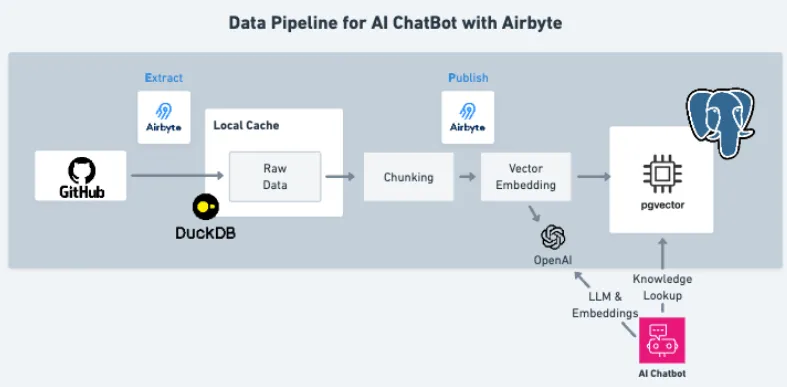

Learn how to build a GitHub documentation chatbot with PyAirbyte and pgvector for seamless data retrieval and an enhanced user experience.


A custom GitHub chatbot can help streamline business operations. With an internal chatbot, you can manage pull requests more effectively and quickly identify newly added issues. This helps reduce delays in addressing critical tasks and keeps the project transparent.

There are multiple ways to create a custom chatbot; this tutorial uses PyAirbyte and pgvector. By following it, you will build a GitHub chatbot that answers your questions using the issues created in your repository.

pgvector is an extension for PostgreSQL that lets you store, query, and index vector embeddings. Postgres is designed as a relational database and does not natively support vector similarity search.

pgvector bridges this gap, letting Postgres support specialized vector operations alongside regular Online Transaction Processing (OLTP) workloads. This is especially useful if your application's backend already uses PostgreSQL as its primary database: by querying the vector embeddings stored there, you can add similarity search without introducing a separate vector store.
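To make the similarity search idea concrete, the following minimal pure-Python sketch ranks stored embeddings by cosine distance, which is the quantity pgvector's `<=>` operator returns. The function names and the toy three-dimensional vectors are illustrative assumptions; in practice, Postgres performs this ranking for you, optionally accelerated by an index.

```python
import math
from typing import Dict, List, Tuple


def cosine_distance(a: List[float], b: List[float]) -> float:
    """Return 1 - cosine similarity (assumes non-zero vectors), like pgvector's <=>."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / (norm_a * norm_b)


def nearest(query: List[float], rows: Dict[str, List[float]], k: int = 2) -> List[Tuple[str, float]]:
    """Rank stored embeddings by cosine distance to the query, closest first."""
    scored = [(doc_id, cosine_distance(query, vec)) for doc_id, vec in rows.items()]
    return sorted(scored, key=lambda pair: pair[1])[:k]


# Toy "embeddings" standing in for real model output.
stored = {
    "issue-1": [1.0, 0.0, 0.0],
    "issue-2": [0.7, 0.7, 0.0],
    "issue-3": [0.0, 0.0, 1.0],
}
print(nearest([0.9, 0.1, 0.0], stored))
```

In SQL, the same ranking is roughly `ORDER BY embedding <=> '[0.9,0.1,0]' LIMIT 2`. pgvector also provides `<->` (Euclidean distance) and `<#>` (negative inner product).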

Airbyte is a no-code data integration platform that lets you move data from various sources to a destination of your choice. With over 550 pre-built connectors, it supports structured, semi-structured, and unstructured data.

If the connector you need is unavailable, you can use the Airbyte Connector Development Kits (CDKs) or the Connector Builder to create custom connectors.

Here are the key features offered by Airbyte:

One of the data pipeline development methods Airbyte offers is PyAirbyte, a Python library that lets you use Airbyte connectors in your Python development environment. With this library, you can extract data from dispersed sources and load it into SQL caches such as DuckDB, Postgres, or Snowflake. These caches are compatible with AI frameworks like LangChain and LlamaIndex.

Let’s see how you can use PyAirbyte to build a custom chatbot by migrating data from a GitHub repository to pgvector.

Now that you have an overview of the tools used in this tutorial, let's walk through the steps to build a GitHub chatbot. Before you begin, make sure the necessary prerequisites are in place.

As a first step, create a virtual environment in your notebook (for example, Google Colab) to isolate dependencies. To do this, execute the following code:
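If you prefer to create the environment from Python, the standard library's `venv` module is one option. This is a sketch under assumptions, not the tutorial's original command: `create_isolated_env` is a hypothetical helper, and the demo passes `with_pip=False` only because some Python builds ship without `ensurepip`.

```python
import tempfile
import venv
from pathlib import Path


def create_isolated_env(env_dir: Path, with_pip: bool = True) -> Path:
    """Create (or recreate) a virtual environment at env_dir and return its path."""
    builder = venv.EnvBuilder(with_pip=with_pip, clear=True)  # clear=True wipes an existing env
    builder.create(env_dir)
    return env_dir


# Demo in a throwaway directory; in a notebook you might pass Path(".venv") instead.
with tempfile.TemporaryDirectory() as tmp:
    env = create_isolated_env(Path(tmp) / "env", with_pip=False)
    print("created:", (env / "pyvenv.cfg").exists())
```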

Next, install the necessary libraries. This tutorial uses uv, a fast Python package installer, to speed up the installation process.

Install PyAirbyte and OpenAI:

Install JupySQL to work with SQL in the Colab notebook:

After installing the dependencies, it is essential to import the libraries that will be used throughout the project.

Import PyAirbyte and specify the Google Drive cache that will store the data read from the source, replacing the drive_name and sub_dir values with your own Drive name and folder path:

To allow standard inputs, run:

For accessing Google Drive:

Configure the rich library to style and format terminal output:

Import OpenAI and set up the connection. Replace the OPENAI_API_KEY with your credential in the code below:

To work with SQL, import the required SQLAlchemy functions:

Before connecting to Postgres, set up the JupySQL library so you can run SQL in the same Colab notebook. Load the JupySQL extension in Colab and configure the maximum row limit:

To connect to the Postgres instance with pgvector installed, get the SQLAlchemy 'engine' object for the cache:

Pass the engine to JupySQL:

Use JupySQL to execute SQL statements. You can verify PostgreSQL query execution in Google Colab using the following:

This code outputs the available schema names. Next, install the pgvector extension:
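The same two steps can also be run from Python rather than through JupySQL. In this sketch, `prepare_pgvector` is a hypothetical helper that assumes `engine` is the SQLAlchemy engine for your Postgres instance; it enables the extension with `CREATE EXTENSION IF NOT EXISTS vector` (which may require elevated database privileges) and returns the schema names, mirroring the verification query.

```python
from typing import List


def prepare_pgvector(engine) -> List[str]:
    """Enable pgvector and return the schema names visible to this connection.

    `engine` is assumed to be a SQLAlchemy Engine for the Postgres instance.
    """
    with engine.begin() as conn:  # commits on success, rolls back on error
        conn.exec_driver_sql("CREATE EXTENSION IF NOT EXISTS vector")
        result = conn.exec_driver_sql(
            "SELECT schema_name FROM information_schema.schemata ORDER BY schema_name"
        )
        return [row[0] for row in result.fetchall()]


# Usage (requires a live Postgres connection; variable name is hypothetical):
# engine = postgres_cache.get_sql_engine()
# print(prepare_pgvector(engine))
```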

To configure GitHub as a source connector, you need the required parameters, including the repository name and a personal access token (PAT). Once you have these credentials, provide the repositories and credentials in the config parameter and execute the code below:
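The sketch below shows one way to build the GitHub source configuration. It assumes a recent `source-github` connector that accepts a list of `owner/name` repositories and PAT credentials under the key names shown; verify them against the spec of the version you install. `github_source_config` and `get_github_source` are hypothetical helpers, while `airbyte.get_source` is PyAirbyte's factory for sources.

```python
from typing import Any, Dict, List


def github_source_config(repositories: List[str], token: str) -> Dict[str, Any]:
    """Build a source-github config that authenticates with a personal access token.

    Key names are assumptions based on recent source-github specs.
    """
    if not repositories:
        raise ValueError("provide at least one repository as 'owner/name'")
    return {
        "repositories": list(repositories),
        "credentials": {
            "option_title": "PAT Credentials",
            "personal_access_token": token,
        },
    }


def get_github_source(repositories: List[str], token: str):
    """Create a PyAirbyte source for GitHub (the connection is not checked here)."""
    import airbyte as ab  # lazy import so the config helper runs without PyAirbyte

    return ab.get_source(
        "source-github",
        config=github_source_config(repositories, token),
        install_if_missing=True,
    )


# Usage (placeholders; keep the token out of source control):
# source = get_github_source(["your-org/your-repo"], GITHUB_PAT)
```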

The code above configures Airbyte's GitHub connector as the source. To check whether the connection to the GitHub API succeeded, run the following code:

If the connection is configured correctly, this code outputs a success message.

After configuring the data source, check which data streams are available for building your chatbot's knowledge base.

This code outputs the list of data streams available for your selected repository. To use only a subset of these streams for your chatbot, execute the code below:

You can add or remove stream names from this list depending on your requirements. The code above selects the issues stream. Next, read the data from the selected stream into colab_cache:
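The check, stream-discovery, selection, and read steps can be chained as in this sketch. `read_selected_streams` is a hypothetical helper; it relies only on the PyAirbyte `Source` methods `check()`, `get_available_streams()`, `select_streams()`, and `read(cache=...)`, and it fails early if a requested stream is not offered by the source.

```python
from typing import List, Sequence


def read_selected_streams(source, cache, wanted: Sequence[str] = ("issues",)):
    """Check the connection, select the wanted streams, and read them into cache.

    `source` is a PyAirbyte Source and `cache` a PyAirbyte cache such as colab_cache.
    """
    source.check()  # raises if the connection to the GitHub API fails
    available: List[str] = source.get_available_streams()
    missing = [name for name in wanted if name not in available]
    if missing:
        raise ValueError(f"streams not offered by this source: {missing}")
    source.select_streams(list(wanted))
    return source.read(cache=cache)  # a ReadResult for the selected streams
```

For example, `result = read_selected_streams(source, colab_cache, ["issues"])` reads only the issues stream.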

When you configure pgvector as the destination, the connector handles the RAG preparation steps (chunking, embedding, and indexing) automatically. Define the configuration by specifying the indexing, embedding, and processing parameters. To set up the destination connector, provide your account credentials and execute this code:

Splitting the text into smaller, manageable chunks is a processing step that improves search performance. To tune search results, adjust the chunk_size and chunk_overlap parameters: chunk_size sets the maximum number of tokens in a single chunk, and chunk_overlap sets how many tokens consecutive chunks share.
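To see how `chunk_size` and `chunk_overlap` interact, here is a minimal sliding-window chunker. It is an illustration only: whitespace-separated words stand in for real tokenizer tokens, and the connector's own text splitter may also respect separators such as paragraphs, so its chunk boundaries can differ.

```python
from typing import List


def chunk_tokens(tokens: List[str], chunk_size: int, chunk_overlap: int) -> List[List[str]]:
    """Split tokens into windows of at most chunk_size that share chunk_overlap tokens."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= chunk_overlap < chunk_size:
        raise ValueError("chunk_overlap must be in [0, chunk_size)")
    step = chunk_size - chunk_overlap  # how far each new chunk advances
    chunks = []
    for start in range(0, len(tokens), step):
        chunks.append(tokens[start:start + chunk_size])
        if start + chunk_size >= len(tokens):
            break  # this window already reaches the end of the text
    return chunks


words = "the hubspot connector now supports custom objects".split()
for chunk in chunk_tokens(words, chunk_size=4, chunk_overlap=1):
    print(chunk)
# ['the', 'hubspot', 'connector', 'now']
# ['now', 'supports', 'custom', 'objects']
```

Each new chunk starts `chunk_size - chunk_overlap` tokens after the previous one, so the word "now" appears in both chunks. A larger overlap preserves more context across chunk boundaries at the cost of storing more partly duplicated text.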

Write data to pgvector:
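The destination setup and the write step might look like the following sketch. The processing, embedding, and indexing layout and its key names reflect our understanding of the `destination-pgvector` spec and are assumptions to verify; the default chunk values and the `title`/`body` text fields are illustrative choices. `ab.get_destination` and `Destination.write` are PyAirbyte APIs, while both helper functions are hypothetical.

```python
from typing import Any, Dict, List, Optional


def pgvector_destination_config(
    openai_key: str,
    host: str,
    port: int,
    database: str,
    username: str,
    password: str,
    schema: str = "public",
    chunk_size: int = 512,
    chunk_overlap: int = 50,
    text_fields: Optional[List[str]] = None,
) -> Dict[str, Any]:
    """Assemble the processing, embedding, and indexing sections (assumed key names)."""
    return {
        "processing": {
            "chunk_size": chunk_size,        # max tokens per chunk
            "chunk_overlap": chunk_overlap,  # tokens shared by consecutive chunks
            # Record fields whose text gets chunked and embedded (illustrative choice).
            "text_fields": text_fields if text_fields is not None else ["title", "body"],
        },
        "embedding": {"mode": "openai", "openai_key": openai_key},
        "indexing": {
            "host": host,
            "port": port,
            "database": database,
            "default_schema": schema,
            "username": username,
            "credentials": {"password": password},
        },
    }


def write_to_pgvector(read_result, config: Dict[str, Any]):
    """Write records read earlier (a PyAirbyte ReadResult) into pgvector."""
    import airbyte as ab  # lazy import so the config helper runs without PyAirbyte

    destination = ab.get_destination("destination-pgvector", config=config)
    return destination.write(read_result)
```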

The data is available in pgvector and can be leveraged to build your GitHub chatbot. To create the chatbot, you must define three functions.

In the following sections, you will learn how to generate vector embeddings for input questions, run relevance searches against the knowledge base, and retrieve the data needed to answer the questions.

To create a function that calculates a vector representing the original question, run the code below:

Test this function by passing it a sample question.

This code prints the vector embedding of the question you provided.
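A minimal sketch of the embedding function, assuming the OpenAI Python SDK v1 client interface (`client.embeddings.create`). The function name and the `text-embedding-ada-002` default are assumptions; whichever model you choose should match the one the destination used to embed your issues, otherwise the distances are not meaningful.

```python
from typing import List


def get_embedding_from_question(
    question: str, client, model: str = "text-embedding-ada-002"
) -> List[float]:
    """Return the embedding vector for a question via an OpenAI v1 client.

    The default model is an assumption; match it to the model used for the documents.
    """
    response = client.embeddings.create(model=model, input=question)
    return response.data[0].embedding


# Usage (requires the `openai` package and an API key):
# from openai import OpenAI
# client = OpenAI(api_key=OPENAI_API_KEY)
# vector = get_embedding_from_question("Which issues mention HubSpot?", client)
# print(len(vector))
```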

Next, define the SQL statement used for retrieval. The RAG_SQL_QUERY variable holds the SQL statement that queries the relevant data from pgvector.
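Here is a hedged example of what the retrieval query could look like. It assumes the destination stored the issues stream in a table named `issues`, with a `document_content` column, a JSON `metadata` column containing the issue `number`, and an `embedding` column; inspect your database for the actual names. The helper `to_pgvector_literal` is hypothetical and formats a Python list in pgvector's `'[x,y,z]'` text input form so it can be passed as a bound parameter.

```python
from typing import List

# Hypothetical retrieval query; table and column names are assumptions.
# Rows are ordered by cosine distance (<=>) to the question vector, closest first.
RAG_SQL_QUERY = """
SELECT
    metadata->>'number' AS issue_number,
    document_content
FROM issues
ORDER BY embedding <=> CAST(:question_vector AS vector)
LIMIT :top_k
"""


def to_pgvector_literal(vector: List[float]) -> str:
    """Format a list of numbers in pgvector's text input form, e.g. '[0.1,2.0]'."""
    return "[" + ",".join(repr(float(x)) for x in vector) + "]"
```

Using `CAST(... AS vector)` instead of the `::vector` shorthand keeps the statement easy to read when it is wrapped in SQLAlchemy's `text()`, which also uses colons for bound parameters.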

To make the output easier to read, create a horizontal line separator that includes a newline character (\n).

Next, create a search relevance function that, for a given question, returns the numbers and contents of the related GitHub issues, using the code below:
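Below is a sketch of `get_related_context` that assumes the retrieval query returns `(issue_number, content)` rows. It takes a SQLAlchemy engine, an embedding function, and a query string such as RAG_SQL_QUERY with `:question_vector` and `:top_k` parameters, then joins the matching issues with a horizontal-line separator. The extra parameters and the `format_context` helper are assumptions of this sketch; the tutorial's own function may read these values from notebook globals instead.

```python
from typing import Callable, Iterable, List, Tuple

# Separator between retrieved issues: a horizontal line wrapped in newlines.
HORIZONTAL_LINE = "\n" + "-" * 40 + "\n"


def format_context(rows: Iterable[Tuple[str, str]]) -> str:
    """Render (issue_number, content) rows as blocks separated by a horizontal line."""
    return HORIZONTAL_LINE.join(f"Issue #{number}:\n{content}" for number, content in rows)


def get_related_context(
    question: str,
    engine,
    embed: Callable[[str], List[float]],
    query_sql: str,
    top_k: int = 3,
) -> str:
    """Embed the question, run the retrieval query, and format the matching issues."""
    from sqlalchemy import text  # lazy import keeps format_context usable without SQLAlchemy

    # pgvector accepts vectors in the text form '[x,y,z]'.
    vector_literal = "[" + ",".join(str(float(x)) for x in embed(question)) + "]"
    with engine.connect() as conn:
        rows = conn.execute(
            text(query_sql), {"question_vector": vector_literal, "top_k": top_k}
        ).fetchall()
    return format_context((row[0], row[1]) for row in rows)
```

For the HubSpot example, you could call `get_related_context("What features were recently added to HubSpot?", engine, embed, RAG_SQL_QUERY)`, where `embed` is your question-embedding function.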

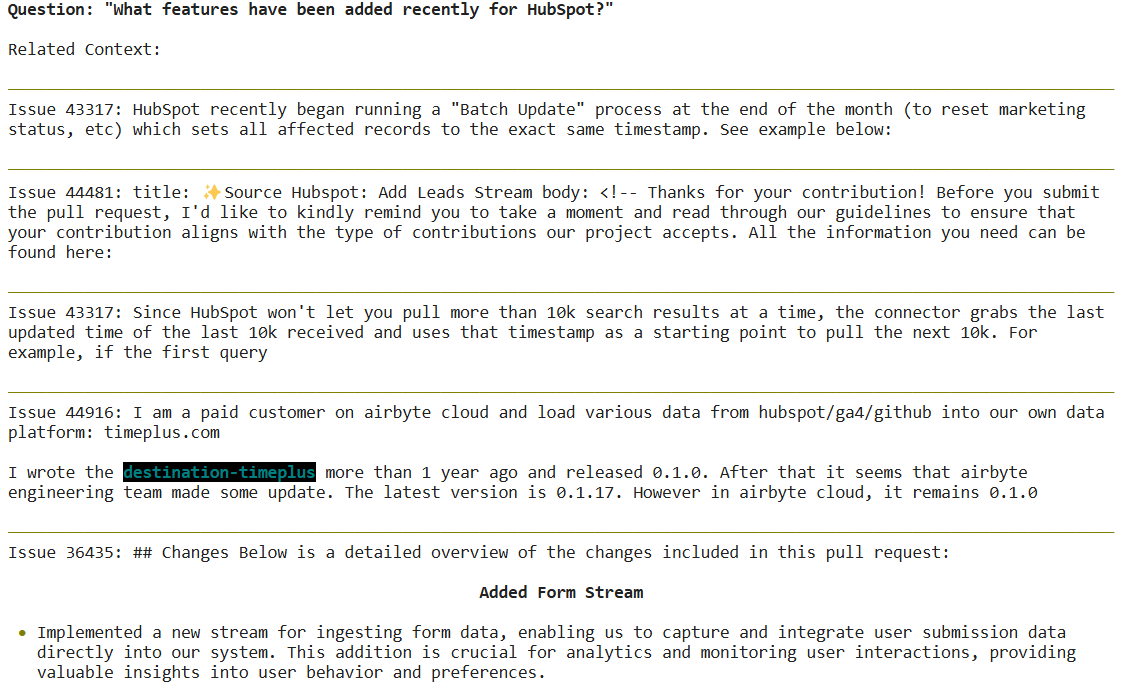

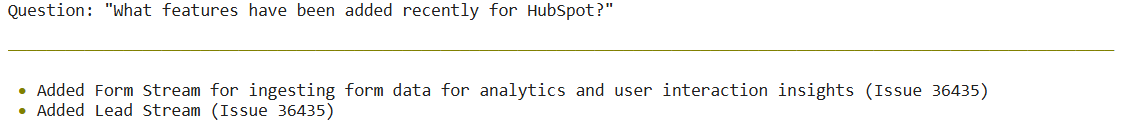

To test the get_related_context function, pass it a question and store the result in a variable. For example, to ask about recently added HubSpot features, run:

Output:

A prompt template lets you define how the large language model should behave, so that it produces responses in a consistent way.

You can get the answers based on the defined prompt by populating it with the questions and the context generated by the get_related_context function.
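A minimal sketch of the prompt template and `get_answer`, assuming the OpenAI Python SDK v1 chat interface (`client.chat.completions.create`). The template wording, the `gpt-4o-mini` default model, and the `related_context` parameter (any function that returns context text for a question, such as `get_related_context` with its other arguments bound) are assumptions.

```python
from typing import Callable

# Hypothetical prompt template; adjust the instructions to the behavior you want.
PROMPT_TEMPLATE = """You are a helpful assistant that answers questions about a GitHub repository.
Answer using only the GitHub issues in the context below. If the context does not
contain the answer, say that you don't know.

Context:
{context}

Question: {question}
Answer:"""


def build_prompt(question: str, context: str) -> str:
    """Fill the template with the question and the retrieved issues."""
    return PROMPT_TEMPLATE.format(context=context, question=question)


def get_answer(
    question: str,
    client,
    related_context: Callable[[str], str],
    model: str = "gpt-4o-mini",
) -> str:
    """Retrieve context for the question and ask a chat model to answer it."""
    prompt = build_prompt(question, related_context(question))
    response = client.chat.completions.create(
        model=model,
        messages=[{"role": "user", "content": prompt}],
    )
    return response.choices[0].message.content
```

`str.format` only substitutes the placeholders in the template itself, so braces inside retrieved issue text pass through unchanged.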

To verify the response generated by the chatbot, provide a sample question to the get_answer function.

Output:

The output shows the chatbot answering the question based on the issues in your repository. By following the steps in this tutorial, you can build a chatbot that answers questions about your GitHub issues. To learn more about this process, follow this GitHub tutorial. You can also use PyAirbyte to build chatbots or other AI applications with diverse sources; for example, you can build a chatbot with LangChain and Pinecone.

This article demonstrated how to use PyAirbyte with pgvector to develop a custom GitHub chatbot. PyAirbyte simplifies the ETL process by providing pre-built connectors that move data into vector stores without custom transformations. Automated chunking, embedding, and indexing let you turn raw GitHub data into embeddings stored in the vector database of your choice.
