AWS S3 Replication: Step-by-Step Guide From Data Engineers


✨ AI Generated Summary

Amazon S3 replication automatically copies objects between buckets within the same or different AWS regions, enhancing data durability, availability, and compliance. Key replication types include Same-Region Replication (SRR) for intra-region redundancy and Cross-Region Replication (CRR) for disaster recovery and regulatory compliance.

- Advanced features: Replication Time Control (RTC) is designed to replicate most objects within 15 minutes, backed by a service-level agreement; Batch Replication handles existing data; multi-destination and bidirectional replication support complex architectures.

- Best practices emphasize enabling versioning, managing permissions, monitoring with CloudWatch, and optimizing costs and performance.

- Limitations include complex permissions, lack of data transformation, asynchronous replication delays, and cost considerations.

- Airbyte offers an alternative with enhanced transformation, monitoring, and integration capabilities for sophisticated data workflows beyond native S3 replication.

Many businesses rely on cloud storage platforms like Amazon Web Services (AWS) S3 for scalable, secure data management. However, configuring S3 replication can be challenging: the setup involves versioning, IAM roles, and bucket policies, and native replication cannot transform data while copying it.

S3 replication automatically copies new and updated objects from a source bucket to a destination bucket, enhancing data durability and availability. Whether you need Cross-Region Replication for compliance and lower-latency access in another Region, or Same-Region Replication to keep a separate copy in another bucket or account, understanding the setup process is essential for effective data management.

This guide provides a step-by-step approach to AWS S3 replication, helping you confidently replicate data while exploring advanced features that enhance your storage strategy.

What Is Amazon S3 and Why Does It Matter for Data Storage?

Amazon S3 (Simple Storage Service) is AWS's object storage service that lets users store and retrieve any amount of data from anywhere on the web. It automatically stores objects redundantly across multiple devices and, for most storage classes, across at least three Availability Zones within an AWS Region.

S3 matters because it is designed for 99.999999999% (11 nines) of data durability and scales seamlessly from gigabytes to petabytes without manual intervention. Its security features, including encryption, access control policies, and integration with AWS Identity and Access Management (IAM), protect data from unauthorized access. This combination makes S3 ideal for backup, disaster recovery, content distribution, and big data analytics.

What Is AWS S3 Replication and How Does It Work?

AWS S3 replication is a fully managed feature that automatically and asynchronously copies objects from a source bucket to one or more destination buckets. This can occur within the same AWS Region (Same-Region Replication, SRR) or across different Regions (Cross-Region Replication, CRR).

Replication matters because it enhances data durability and availability without interfering with normal bucket operations. Replication rules can be scoped to a key prefix, to object tags, or to both, so you control exactly which objects are copied, and replicas keep the source object's metadata and tags.
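To make prefix and tag scoping concrete, here is a minimal Python sketch that builds the `Filter` element of one replication rule in the shape the S3 `PutBucketReplication` API (boto3's `put_bucket_replication`) expects. The helper name `build_rule_filter` and the example prefix and tag are hypothetical.

```python
from typing import Dict, Optional


def build_rule_filter(prefix: Optional[str] = None,
                      tags: Optional[Dict[str, str]] = None) -> dict:
    """Build the Filter element of one S3 replication rule (hypothetical helper).

    S3 accepts a single Prefix, a single Tag, or an And block that combines
    a prefix with tags (or several tags with each other).
    """
    tag_list = [{"Key": k, "Value": v} for k, v in (tags or {}).items()]
    needs_and = len(tag_list) > 1 or (prefix is not None and len(tag_list) == 1)
    if needs_and:
        and_block: dict = {"Tags": tag_list}
        if prefix is not None:
            and_block["Prefix"] = prefix
        return {"And": and_block}
    if tag_list:
        return {"Tag": tag_list[0]}
    # An empty prefix matches every object in the bucket.
    return {"Prefix": prefix or ""}


# Replicate only objects under logs/ that carry the tag replicate=true.
print(build_rule_filter(prefix="logs/", tags={"replicate": "true"}))
```

The result goes into a rule's `Filter` key; rules that use `Filter` must also set `DeleteMarkerReplication`.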

Understanding S3 Replication Architecture

The replication architecture relies on versioning-enabled buckets. When a new object version is written to a source bucket, S3 evaluates the bucket's replication rules and asynchronously copies matching objects to the designated destination buckets.

This architecture supports both same-Region and cross-Region data distribution, letting organizations keep multiple synchronized copies of their data across AWS infrastructure. Replicas keep the source object's metadata, tags, access control lists (ACLs), and version ID.

What Are the Different Types of S3 Replication Available?

S3 replication offers two types based on destination bucket location: Same-Region Replication (SRR) for buckets within the same AWS Region, and Cross-Region Replication (CRR) for buckets across different Regions.

Replication operates asynchronously, copying objects after the original write completes. Because the copy happens in the background, replication adds no latency to writes on the source bucket; the trade-off is that a destination can briefly lag behind its source.

Cross-Region Replication (CRR)

S3 Cross-Region Replication (CRR) copies objects to buckets in different AWS Regions, which are geographically separate areas, each made up of multiple isolated Availability Zones. It is a key feature for disaster recovery and data protection, because it keeps a copy of your data in a distant location in case of a Regional failure.

Cross-Region Replication addresses critical business requirements including disaster recovery preparedness, regulatory compliance mandates that require data residency in specific geographical locations, and performance optimization through data locality improvements that reduce latency for geographically distributed user bases.

CRR works at the object level and preserves associated metadata, including user-defined metadata, system metadata, and access control permissions, so replicas are functionally equivalent to their source objects. Live replication copies new and updated objects to the destination bucket as they are written, but not immediately: replication is asynchronous and, unless you enable S3 Replication Time Control, comes with no time commitment.
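Because live replication is not immediate, it helps to check where an object stands. S3 reports this in the `x-amz-replication-status` header, which boto3's `head_object` returns as `ReplicationStatus`. The sketch below interprets that field; the helper name `describe_replication` and the message wording are hypothetical, and the example uses a hand-written response rather than a real API call.

```python
from typing import Mapping

# Meanings of the ReplicationStatus field from a HeadObject response.
# The API enum lists both "COMPLETE" and "COMPLETED", so both are accepted.
_STATUS_MEANINGS = {
    "PENDING": "source object is waiting to be replicated",
    "COMPLETE": "source object has been replicated",
    "COMPLETED": "source object has been replicated",
    "FAILED": "replication failed; retry with S3 Batch Replication",
    "REPLICA": "this object is a replica in a destination bucket",
}


def describe_replication(head_response: Mapping) -> str:
    """Explain the replication state reported by a HeadObject response."""
    status = head_response.get("ReplicationStatus")
    if status is None:
        # S3 omits the header for objects that are not subject to replication.
        return "object is not covered by a replication rule"
    return _STATUS_MEANINGS.get(status, f"unrecognized status {status!r}")


# A real response would come from boto3.client("s3").head_object(Bucket=..., Key=...).
print(describe_replication({"ReplicationStatus": "PENDING"}))
```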

Same-Region Replication (SRR)

SRR copies objects to a different bucket in the same AWS Region. Because S3 already stores each object redundantly across Availability Zones, the value of SRR lies in keeping a second, independently managed copy. That copy also helps guard against accidental deletions, because deleting a specific object version in the source bucket is not replicated to the destination.

Same-Region Replication complements CRR when data must stay in one Region. It is particularly useful for sharing data between AWS accounts in the same Region and for creating separate copies for development and testing without impacting production systems.

SRR also supports compliance strategies that require multiple data copies while keeping data within one Region for data-sovereignty reasons. Replicating objects between test and production accounts with their metadata intact keeps those environments consistent.
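For the cross-account case, a rule's `Destination` names the other account and can hand ownership of replicas to it. The Python sketch below builds that element; the helper name, bucket name, and account ID are hypothetical. It assumes the destination bucket's policy already lets the replication role perform `s3:ReplicateObject` (and `s3:ReplicateDelete` if delete markers replicate), plus `s3:ObjectOwnerOverrideToBucketOwner` for the ownership change.

```python
import re

_ACCOUNT_ID = re.compile(r"\d{12}")


def cross_account_destination(dest_bucket: str, dest_account_id: str,
                              storage_class: str = "STANDARD") -> dict:
    """Destination element for replicating into a bucket in another account."""
    if not _ACCOUNT_ID.fullmatch(dest_account_id):
        raise ValueError("AWS account IDs are 12 digits")
    return {
        "Bucket": f"arn:aws:s3:::{dest_bucket}",
        "Account": dest_account_id,
        # Make the destination account the owner of the replicas.
        "AccessControlTranslation": {"Owner": "Destination"},
        "StorageClass": storage_class,
    }


print(cross_account_destination("test-env-data", "111122223333"))
```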

What Are the Advanced S3 Replication Features and Technologies Available?

Amazon S3 replication has grown beyond basic data copying. Its advanced features support predictable replication times, batch processing of existing objects, and distribution to multiple destinations, which together address more demanding business requirements.

S3 Replication Time Control (RTC)

S3 Replication Time Control (RTC) adds a service-level agreement that commits to replicating 99.9% of objects within 15 minutes of their creation or modification, subject to certain conditions. Without RTC, S3 makes no commitment about replication time, which makes it harder to design reliable disaster recovery and business continuity strategies. RTC also comes with S3 Replication metrics and event notifications, so you can see when objects miss the 15-minute window.

RTC provides the predictability necessary for meeting strict recovery point objectives and compliance requirements. Organizations can now design data architectures with confidence in replication timing, enabling more sophisticated disaster recovery and business continuity planning.
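The arithmetic behind the 99.9%-in-15-minutes commitment is simple to sketch. The Python example below computes the share of objects whose replication latency fits in the window; the latency samples are hypothetical, and this is an illustration of the ratio, not how AWS measures SLA compliance.

```python
from typing import Sequence

RTC_WINDOW_SECONDS = 15 * 60   # the 15-minute RTC window
SLA_FRACTION = 0.999           # 99.9% of objects, per the commitment above


def fraction_on_time(latencies_seconds: Sequence[float],
                     window: float = RTC_WINDOW_SECONDS) -> float:
    """Share of objects whose replication latency fits in the window."""
    if not latencies_seconds:
        raise ValueError("need at least one latency sample")
    on_time = sum(1 for s in latencies_seconds if s <= window)
    return on_time / len(latencies_seconds)


# Hypothetical samples: 998 fast objects and two that took over 20 minutes.
samples = [12.0] * 998 + [1250.0, 1400.0]
share = fraction_on_time(samples)
print(f"{share:.3%} on time; meets 99.9%: {share >= SLA_FRACTION}")
```

Two late objects out of 1,000 already drop the share below 99.9%, which shows how tight the target is.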

S3 Batch Replication and Multi-Destination Features

S3 Batch Replication provides a systematic, scalable approach for replicating existing objects, objects that previously failed replication attempts, and objects that require replication to additional destination buckets. This feature addresses the common challenge of implementing replication for buckets with existing data.
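Batch Replication runs as an S3 Batch Operations job. The Python sketch below assembles keyword arguments for boto3's `s3control` `create_job`, with a generated manifest that selects objects never replicated (`NONE`) or previously `FAILED`. The helper name, bucket names, report prefix, and priority are hypothetical, and the field names reflect my reading of the S3 Control `CreateJob` API; check them against current AWS documentation, and note the job's IAM role needs Batch Operations permissions of its own.

```python
import uuid


def batch_replication_job_request(account_id: str, source_bucket: str,
                                  report_bucket: str, role_arn: str) -> dict:
    """Keyword arguments for an S3 Batch Replication job (sketch)."""
    return {
        "AccountId": account_id,
        "ConfirmationRequired": False,
        "Operation": {"S3ReplicateObject": {}},
        "Priority": 10,
        "RoleArn": role_arn,
        "ClientRequestToken": str(uuid.uuid4()),  # idempotency token
        "Report": {
            "Enabled": True,
            "Bucket": f"arn:aws:s3:::{report_bucket}",
            "Format": "Report_CSV_20180820",
            "Prefix": "batch-replication-reports",
            "ReportScope": "AllTasks",
        },
        "ManifestGenerator": {
            "S3JobManifestGenerator": {
                "SourceBucket": f"arn:aws:s3:::{source_bucket}",
                "EnableManifestOutput": False,
                "Filter": {
                    "EligibleForReplication": True,
                    # NONE = never replicated (existing data); FAILED = earlier failures.
                    "ObjectReplicationStatuses": ["NONE", "FAILED"],
                },
            }
        },
    }
```

With credentials configured, the request would be submitted as `boto3.client("s3control").create_job(**request)`.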

Multi-destination replication copies data from a single source bucket to multiple destination buckets, in the same or different Regions, without custom tooling. Two-way (bidirectional) replication sets up rules in both directions between two buckets, so objects written to either one are copied to the other; enabling replica modification sync also keeps metadata changes on replicas in step. This supports active-active architectures.
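Both patterns come down to how rules are laid out in a replication configuration. This Python sketch builds one rule per destination for multi-destination replication, and a pair of configurations, one per bucket, for two-way replication with `ReplicaModifications` enabled. All helper and bucket names are hypothetical, and reusing one IAM role for both directions is a simplifying assumption (that role would need permissions on both buckets).

```python
from typing import Dict, List


def _rule(rule_id: str, priority: int, dest_bucket: str) -> dict:
    """One rule that replicates the whole bucket to dest_bucket."""
    return {
        "ID": rule_id,
        "Priority": priority,
        "Filter": {"Prefix": ""},
        "Status": "Enabled",
        "DeleteMarkerReplication": {"Status": "Disabled"},
        "Destination": {"Bucket": f"arn:aws:s3:::{dest_bucket}"},
    }


def multi_destination_config(role_arn: str, dest_buckets: List[str]) -> dict:
    """One rule per destination; distinct priorities keep precedence unambiguous."""
    rules = [_rule(f"to-{b}", i, b) for i, b in enumerate(dest_buckets, start=1)]
    return {"Role": role_arn, "Rules": rules}


def bidirectional_configs(role_arn: str, bucket_a: str, bucket_b: str) -> Dict[str, dict]:
    """A replication configuration for each bucket, pointing at the other."""
    configs = {}
    for src, dst in ((bucket_a, bucket_b), (bucket_b, bucket_a)):
        cfg = multi_destination_config(role_arn, [dst])
        # Replica modification sync: metadata changes on replicas flow back too.
        cfg["Rules"][0]["SourceSelectionCriteria"] = {
            "ReplicaModifications": {"Status": "Enabled"}
        }
        configs[src] = cfg
    return configs
```

Each configuration would be applied to the bucket it is keyed under with `put_bucket_replication`.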

How Do You Set Up AWS S3 Replication Using the Management Console?

Prerequisites

Amazon S3 needs an IAM role with permission to read objects from the source bucket and replicate them to the destination buckets. See Setting up permissions. Versioning must be enabled on both source and destination buckets. See Using Versioning in S3 buckets.

If the source bucket owner doesn't own the objects, the object owner must grant READ and READ_ACP permissions via ACL. See ACL overview. If the source bucket has S3 Object Lock enabled, destination buckets must also have it enabled. See Using S3 Object Lock.
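Two of these prerequisites can be checked from API responses before you create a rule. The Python sketch below takes the dictionaries returned by boto3's `get_bucket_versioning` for each bucket, plus Object Lock flags you determine separately, and lists what is missing. The function name is hypothetical, and it does not verify IAM permissions or object ACLs.

```python
from typing import List, Mapping


def replication_prerequisite_problems(source_versioning: Mapping,
                                      dest_versioning: Mapping,
                                      source_object_lock: bool = False,
                                      dest_object_lock: bool = False) -> List[str]:
    """List unmet prerequisites; an empty list means these checks pass.

    S3 only includes "Status" in a get_bucket_versioning response once
    versioning has been configured on the bucket.
    """
    problems = []
    if source_versioning.get("Status") != "Enabled":
        problems.append("enable versioning on the source bucket")
    if dest_versioning.get("Status") != "Enabled":
        problems.append("enable versioning on the destination bucket")
    if source_object_lock and not dest_object_lock:
        problems.append("enable S3 Object Lock on the destination bucket")
    return problems


print(replication_prerequisite_problems({"Status": "Enabled"}, {"Status": "Suspended"}))
```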

Step-by-Step Implementation Process

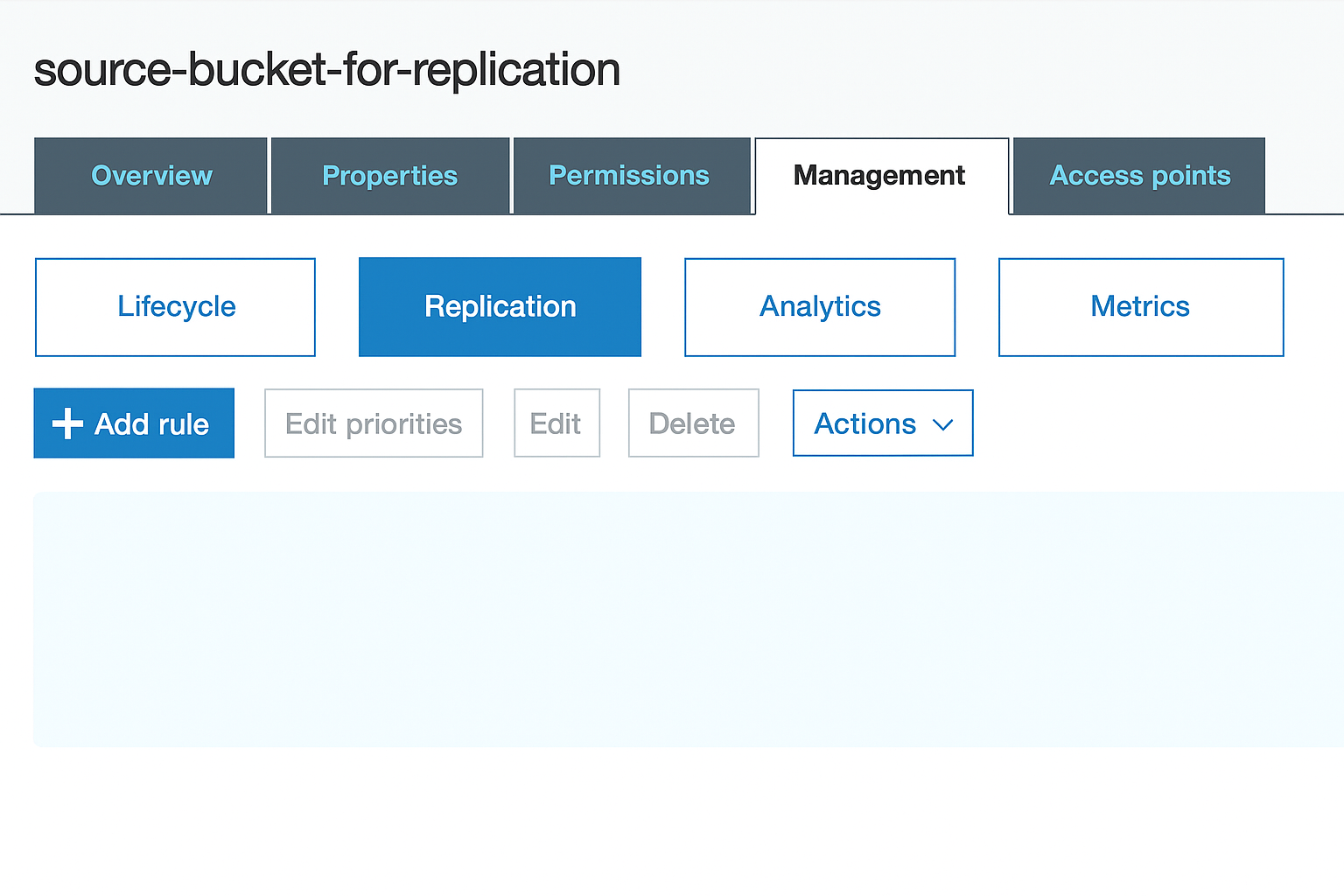

- Navigate to the AWS S3 management console, authenticate, and select the source bucket.

- Go to Management → Replication → Add rule.

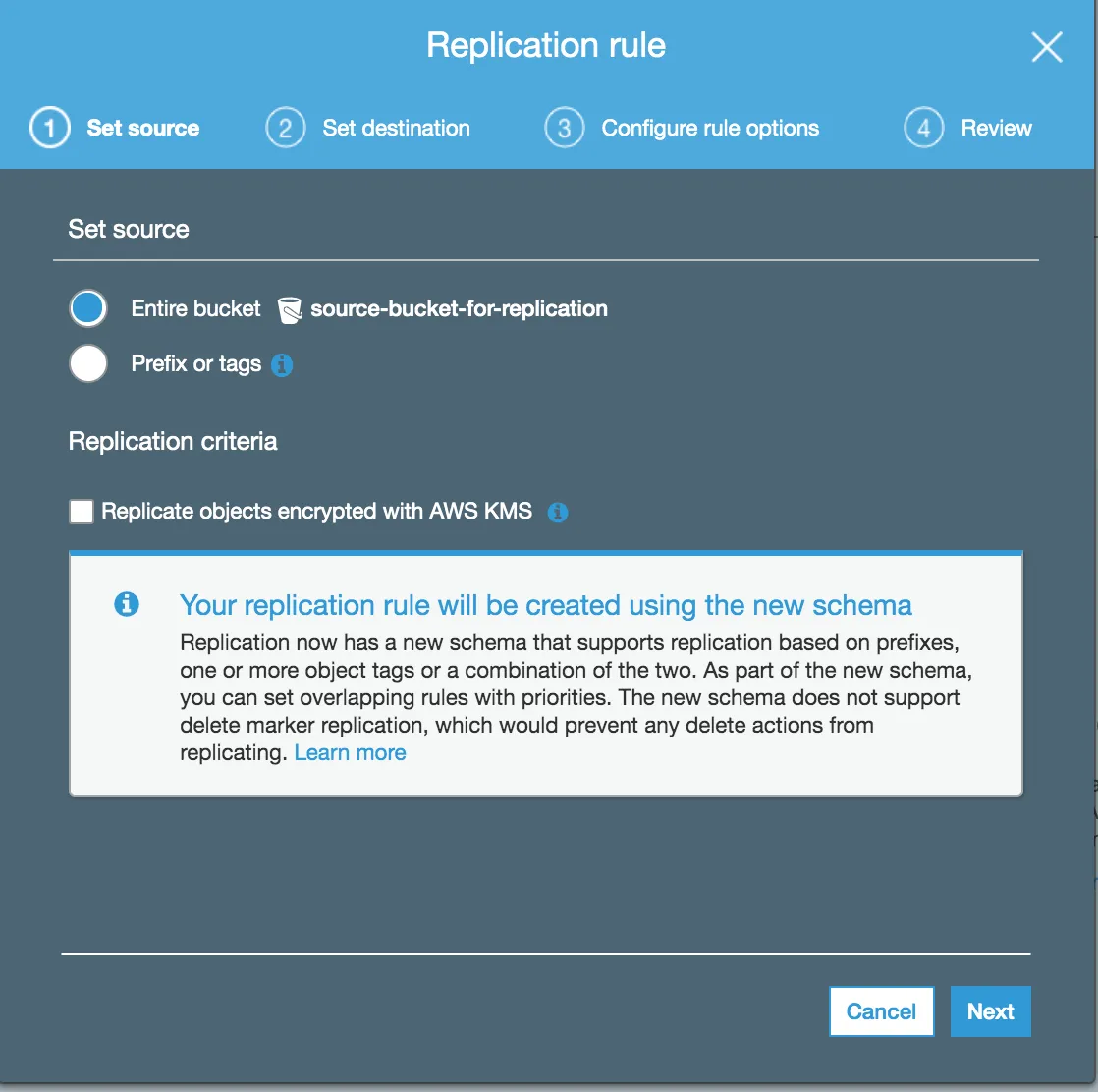

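- In Replication Rule, choose Entire Bucket → Next, or limit the rule's scope with a prefix or tag filter. If objects are encrypted with AWS KMS, enable replication of KMS-encrypted objects and select the KMS key to use in the destination.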

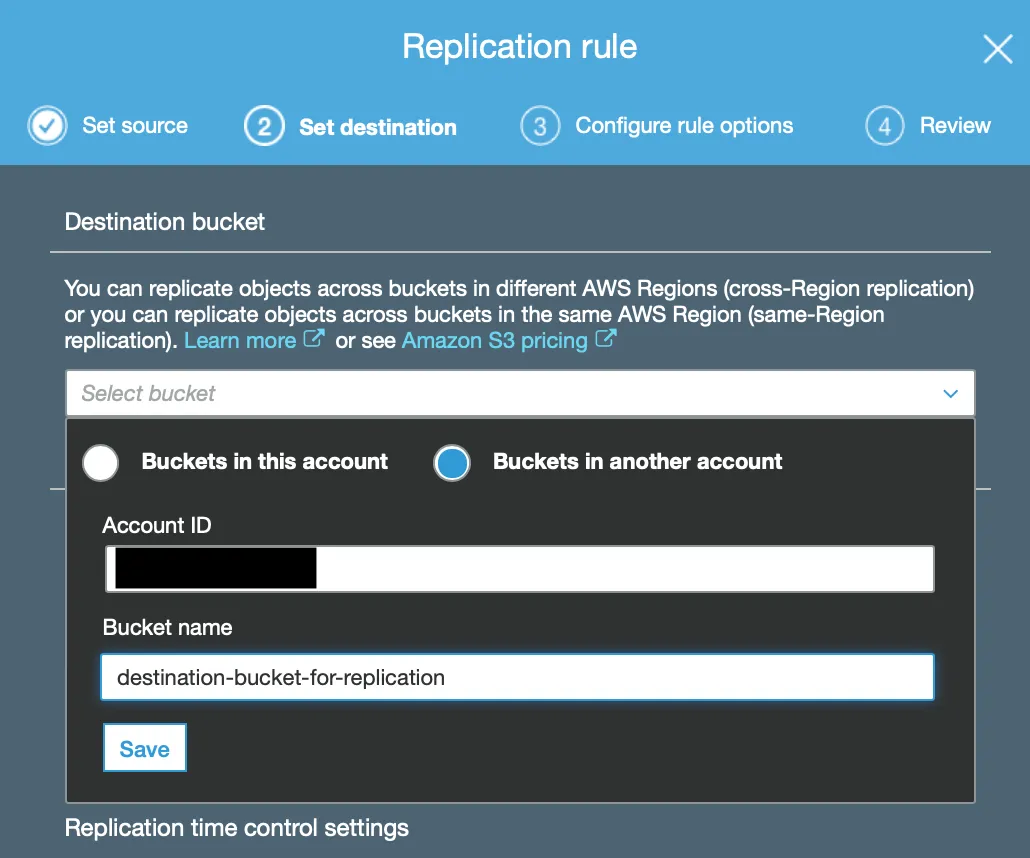

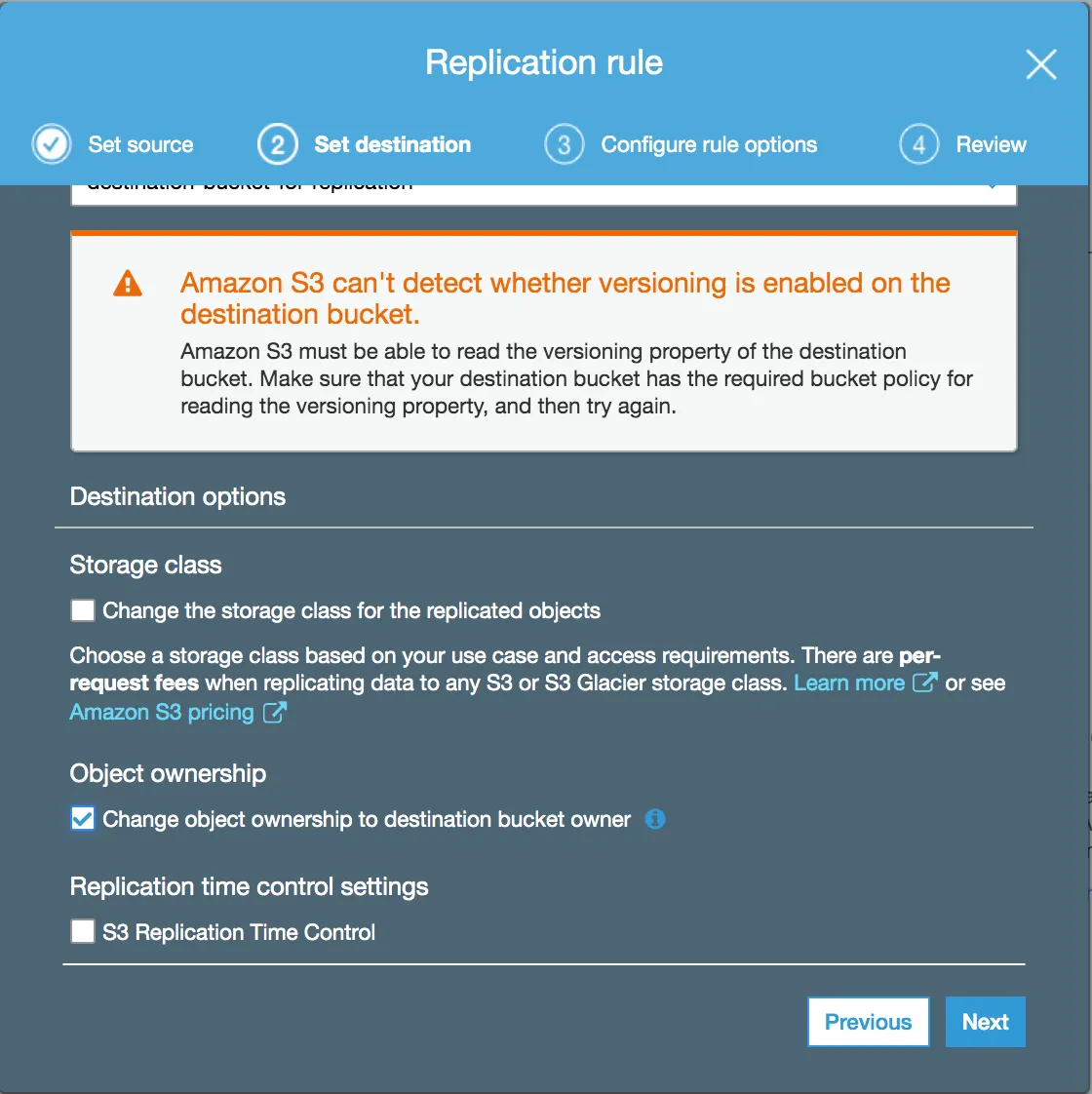

- Under Set Destination, choose Bucket in this account for same-account replication, or choose another account and enter its account ID and bucket name; that bucket's policy must allow your replication role to write to it.

- In Destination options, optionally change the storage class for replicated objects.

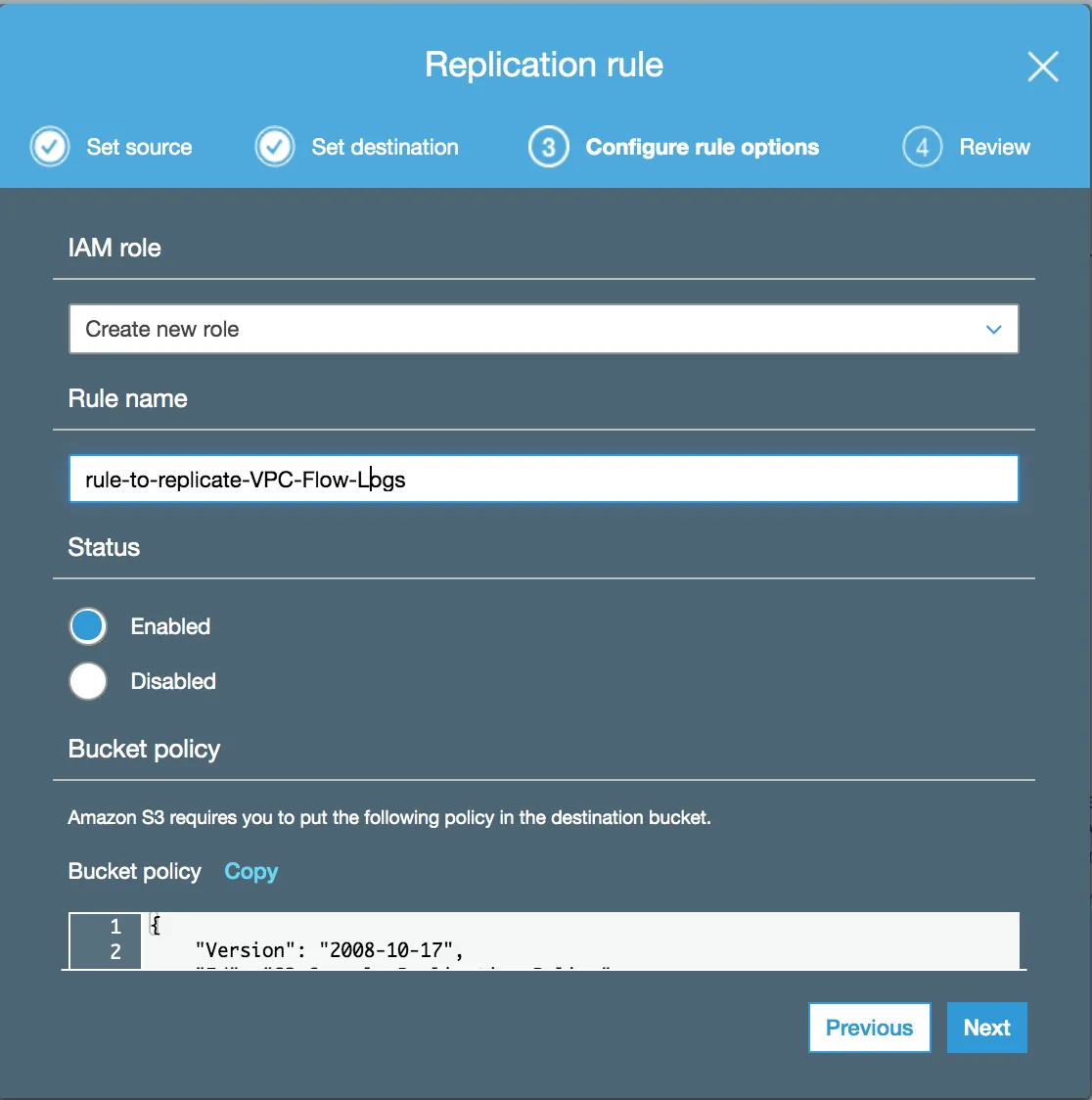

- In Configure options, choose an existing IAM role with replication permissions or create a new one.

- Under Replication time control, optionally enable S3 RTC, which is designed to replicate most objects within 15 minutes (additional cost).

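- Set Status to Enabled, click Next, and save the rule. Only objects written after the rule is in place are replicated, so upload a test object and check the destination bucket after a few minutes; use Batch Replication for existing objects.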
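The console steps above map onto a single replication configuration. As a minimal sketch, assuming you want to script the same setup, this Python function builds a `ReplicationConfiguration` for boto3's `put_bucket_replication` that mirrors those choices: whole-bucket scope, destination storage class, optional KMS re-encryption, and optional RTC. The function name, rule ID, and example ARNs are hypothetical.

```python
from typing import Optional


def console_equivalent_config(role_arn: str, dest_bucket: str,
                              storage_class: str = "STANDARD",
                              replica_kms_key_arn: Optional[str] = None,
                              enable_rtc: bool = False) -> dict:
    """ReplicationConfiguration mirroring the console choices (sketch)."""
    destination: dict = {
        "Bucket": f"arn:aws:s3:::{dest_bucket}",
        "StorageClass": storage_class,          # "Destination options" step
    }
    rule: dict = {
        "ID": "replicate-entire-bucket",
        "Priority": 1,
        "Filter": {"Prefix": ""},               # "Entire bucket" scope
        "Status": "Enabled",                    # "Set Status to Enabled" step
        "DeleteMarkerReplication": {"Status": "Disabled"},
        "Destination": destination,
    }
    if replica_kms_key_arn:
        # Opt in to copying SSE-KMS objects and re-encrypt them with this key.
        rule["SourceSelectionCriteria"] = {
            "SseKmsEncryptedObjects": {"Status": "Enabled"}
        }
        destination["EncryptionConfiguration"] = {
            "ReplicaKmsKeyID": replica_kms_key_arn
        }
    if enable_rtc:
        # RTC needs both the 15-minute time target and replication metrics.
        destination["ReplicationTime"] = {"Status": "Enabled", "Time": {"Minutes": 15}}
        destination["Metrics"] = {"Status": "Enabled", "EventThreshold": {"Minutes": 15}}
    return {"Role": role_arn, "Rules": [rule]}
```

The result would be applied with `boto3.client("s3").put_bucket_replication(Bucket="my-source-bucket", ReplicationConfiguration=config)`, where `role_arn` is the role chosen in the Configure options step.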

What Are the Edge Computing and Hybrid S3 Replication Strategies?

The integration of S3 replication with edge computing extends distributed data management beyond AWS Regions. Amazon S3 on Outposts supports S3 Replication on Outposts, which keeps data on premises to satisfy data-locality requirements that matter for regulatory compliance and performance.

S3 Replication on Outposts replicates objects between S3 on Outposts buckets, either on the same Outpost or across different Outposts, without compromising data-locality requirements. Replication traffic between Outposts travels over the customer's local gateway, so data never returns to an AWS Region during replication.

Implementing Tiered Edge Architectures

Edge computing integration enables tiered architectures where initial processing occurs at the edge for immediate decision-making, while aggregated data flows to centralized systems for comprehensive analysis and long-term storage. This hybrid approach optimizes both responsiveness and analytical capabilities.

Organizations can implement sophisticated data processing pipelines that begin with edge collection and preprocessing, continue with regional aggregation and analysis, and conclude with centralized data warehousing and historical analytics. This architecture maximizes both operational efficiency and analytical insights while maintaining strict data governance requirements.

What Are the Best Practices for Implementing AWS S3 Replication?

Implementing AWS S3 replication effectively requires careful consideration of performance, security, and cost. For request rates, AWS's S3 RTC guidance notes that each prefix in a bucket can support at least 3,500 PUT/COPY/POST/DELETE or 5,500 GET/HEAD requests per second, and replication traffic counts toward those rates.

When estimating replication request rates, note that for each replicated object, S3 replication issues up to five GET/HEAD requests and one PUT request against the source bucket, plus one PUT request to each destination bucket. If your S3 RTC data transfer rate will exceed the default quota, request an increase through Service Quotas or AWS Support.
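A quick capacity check helps here. This Python sketch assumes AWS's per-object figures (up to five GET/HEAD requests and one PUT against the source, one PUT per destination) and the per-prefix rates of 3,500 writes and 5,500 reads per second, and pessimistically puts all traffic in one prefix. The function name is hypothetical, and source PUTs include the application's own upload of each object.

```python
PUTS_PER_PREFIX = 3_500   # PUT/COPY/POST/DELETE per second per prefix
GETS_PER_PREFIX = 5_500   # GET/HEAD per second per prefix


def replication_request_estimate(objects_per_second: float, destinations: int) -> dict:
    """Rough peak request rates when every new object is replicated.

    Assumes all traffic lands in a single prefix (the worst case).
    """
    source_puts = 2 * objects_per_second        # the upload itself + replication's PUT
    source_get_heads = 5 * objects_per_second   # "up to five" per object: an upper bound
    return {
        "source_puts": source_puts,
        "source_get_heads_max": source_get_heads,
        "puts_per_destination": objects_per_second,
        "total_destination_puts": objects_per_second * destinations,
        "within_prefix_limits": source_puts <= PUTS_PER_PREFIX
        and source_get_heads <= GETS_PER_PREFIX,
    }


print(replication_request_estimate(100, destinations=2))
```

At 100 objects per second, the source sees 200 PUTs per second, matching AWS's own worked example; spreading keys across prefixes raises the effective ceiling.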

Security and Monitoring Best Practices

Replicating AWS KMS-encrypted objects consumes KMS requests, which count against your KMS request quotas, so plan for that load when replicating encrypted data. For monitoring, use S3 Replication metrics in CloudWatch (such as ReplicationLatency, BytesPendingReplication, and OperationsFailedReplication) and route replication events through EventBridge for near-real-time visibility into replication operations.
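As one example of alerting, the Python sketch below builds keyword arguments for boto3's CloudWatch `put_metric_alarm` that fire when the `OperationsFailedReplication` metric is above zero for a replication rule. It assumes replication metrics are enabled on the rule (RTC turns them on) and that an SNS topic already exists. The helper name, alarm naming scheme, and five-minute period are hypothetical choices.

```python
def failed_replication_alarm(source_bucket: str, dest_bucket: str,
                             rule_id: str, sns_topic_arn: str) -> dict:
    """Keyword arguments for a CloudWatch alarm on failed replications."""
    return {
        "AlarmName": f"s3-replication-failed-{source_bucket}-{rule_id}",
        "Namespace": "AWS/S3",
        "MetricName": "OperationsFailedReplication",
        "Dimensions": [
            {"Name": "SourceBucket", "Value": source_bucket},
            {"Name": "DestinationBucket", "Value": dest_bucket},
            {"Name": "RuleId", "Value": rule_id},
        ],
        "Statistic": "Sum",
        "Period": 300,                                 # five-minute periods
        "EvaluationPeriods": 1,
        "Threshold": 0,
        "ComparisonOperator": "GreaterThanThreshold",  # alarm on any failure
        "TreatMissingData": "notBreaching",            # periods without data count as healthy
        "AlarmActions": [sns_topic_arn],
    }
```

It would be used as `boto3.client("cloudwatch").put_metric_alarm(**failed_replication_alarm(...))`.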

Security and compliance require implementing encryption, least-privilege IAM roles, and cross-account policies carefully. Cost optimization involves analyzing data-transfer charges, storage class selection, and lifecycle policies to ensure replication strategies align with business objectives and budget constraints.

What Are the Key Limitations of AWS S3 Replication?

AWS S3 replication, while powerful, has several limitations that organizations should consider when planning their data architecture. Complex permission models in cross-account scenarios can create administrative overhead and potential security vulnerabilities if not properly managed.

The lack of built-in data transformation capabilities means organizations cannot modify or enrich data during the replication process, requiring separate transformation pipelines for data processing needs. Handling existing data requires additional configuration through Batch Replication, which adds complexity to implementation projects.

Cost and Performance Considerations

Pricing complexity across transfer, storage, PUT requests, and premium features can make cost prediction challenging for organizations with variable data loads. Potential performance bottlenecks in high-throughput environments may require careful architecture planning and testing.

The asynchronous nature of replication may not satisfy real-time use cases that require immediate data consistency across locations. Mandatory versioning retains every noncurrent object version, which increases storage costs unless lifecycle rules expire old versions, and maintaining those rules adds management complexity.
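To contain the versioning cost, a lifecycle rule can expire noncurrent versions. The Python sketch below builds a `LifecycleConfiguration` for boto3's `put_bucket_lifecycle_configuration`; the 30-day default and the helper name are hypothetical. Because lifecycle actions on a source bucket are not replicated, you would typically apply a matching rule to the destination bucket as well.

```python
def noncurrent_version_cleanup(days: int = 30, prefix: str = "") -> dict:
    """LifecycleConfiguration that expires noncurrent object versions."""
    if days < 1:
        raise ValueError("NoncurrentDays must be a positive integer")
    return {
        "Rules": [{
            "ID": f"expire-noncurrent-after-{days}-days",
            "Filter": {"Prefix": prefix},   # empty prefix = whole bucket
            "Status": "Enabled",
            "NoncurrentVersionExpiration": {"NoncurrentDays": days},
        }]
    }
```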

How Can You Replicate S3 Data Using Airbyte as an Alternative?

While AWS S3's native replication capabilities are powerful, organizations often require more sophisticated data integration features. Airbyte offers a comprehensive alternative that addresses many native limitations while providing transformation, monitoring, and flexibility.

Step 1: Configure AWS S3 as the Source

- Log in to or sign up for Airbyte.

- In Sources, select the S3 connector.

- Enter a Source name and Bucket name.

- Add a stream and choose its file format (CSV, Parquet, Avro, or JSONL).

- Click Set up source.
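The same source settings can be expressed as a configuration dictionary, for example when scripting with PyAirbyte. The Python sketch below builds one; its field names (`bucket`, `streams`, `globs`, `format.filetype`, `aws_access_key_id`, `aws_secret_access_key`) are my assumptions about the S3 source connector's spec, so confirm them against the connector's documentation before use. The helper name is hypothetical.

```python
from typing import Optional

SUPPORTED_FILETYPES = {"csv", "parquet", "avro", "jsonl"}


def s3_source_config(bucket: str, stream_name: str, filetype: str,
                     glob: str = "**",
                     access_key_id: Optional[str] = None,
                     secret_access_key: Optional[str] = None) -> dict:
    """Config for Airbyte's S3 source (field names are assumptions)."""
    filetype = filetype.lower()
    if filetype not in SUPPORTED_FILETYPES:
        raise ValueError(f"unsupported file type: {filetype}")
    config: dict = {
        "bucket": bucket,
        "streams": [{
            "name": stream_name,
            "globs": [glob],                 # which object keys belong to the stream
            "format": {"filetype": filetype},
        }],
    }
    if access_key_id and secret_access_key:
        # Static keys are optional in this sketch; omit them for other auth methods.
        config["aws_access_key_id"] = access_key_id
        config["aws_secret_access_key"] = secret_access_key
    return config
```

With PyAirbyte installed, `airbyte.get_source("source-s3", config=config)` followed by `.check()` would validate the settings before you set up a connection.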

Step 2: Configure the Destination

Select Destinations, choose a connector, fill in the required fields, and click Set up destination.

Step 3: Create a Connection

- Go to Connections.

- Provide a Connection Name, Replication Frequency, etc.

- Choose a sync mode (Full Refresh / Incremental).

- Click Set up connection.

Why Choose Airbyte for S3 Data Integration?

Airbyte provides a no-code interface for rapid deployment and log-based Change Data Capture capabilities. The PyAirbyte library enables automation and programmatic control of data pipelines.

The platform maintains SOC 2, GDPR, ISO, and HIPAA compliance while offering integrations with Datadog, Airflow, Prefect, and Dagster for comprehensive workflow management. Airbyte's transparent pricing model avoids volume-based markup that can create unpredictable costs.

With more than 600 pre-built connectors and a strong contributor community, Airbyte offers extensive integration capabilities. Deep dbt integration enables in-pipeline transformations, allowing organizations to process and enrich data during replication rather than requiring separate transformation steps.

AWS S3 replication provides essential data redundancy and distribution capabilities for modern data architectures. Understanding the different replication types, advanced features, and implementation best practices enables organizations to design robust data management strategies. While native S3 replication handles basic requirements effectively, platforms like Airbyte offer enhanced transformation and integration capabilities for complex data workflows. The choice between native and third-party solutions depends on specific business requirements, existing infrastructure, and long-term data strategy objectives.

FAQs

What is AWS S3 replication?

AWS S3 replication is a fully managed feature that enables automatic copying of objects between S3 buckets in the same or different regions, even across separate AWS accounts.

Does S3 replicate existing objects?

Not by default. Live replication copies only objects written after a replication rule is in place; use S3 Batch Replication to replicate existing objects and objects that previously failed to replicate.

Is replication better than backup?

They serve different purposes: backups are for long-term retention, whereas replication keeps data continuously synchronized, often across regions for higher availability and compliance.

What is S3 Replication Time Control and why is it important?

S3 RTC is designed to replicate most objects within 15 minutes and is backed by a service-level agreement, which makes it important for meeting stringent recovery point objectives.

How does multi-destination replication work in S3?

Multi-destination replication lets you copy data from a single source bucket to multiple destination buckets simultaneously, supporting multi-region DR, compliance, and performance optimization strategies.
