Best Practices for Deployments with Large Data Volumes

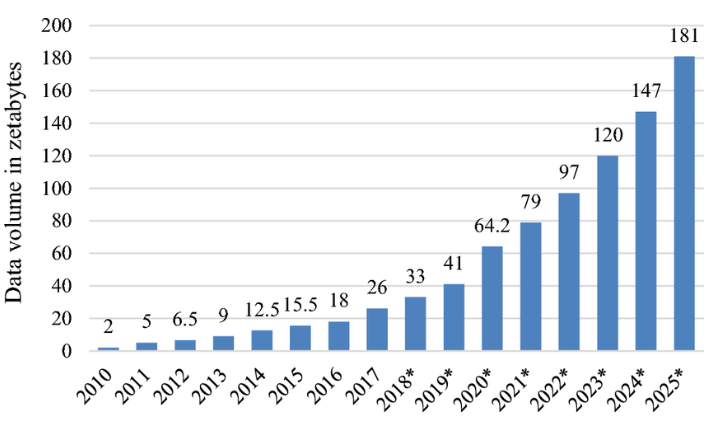

The amount of data generated worldwide is growing at an extraordinary rate. According to IDC Global DataSphere research, the total data created and consumed reached 64.2 zettabytes in 2020. By 2025, global data creation is expected to exceed 180 zettabytes.

Managing and deploying large datasets has become increasingly challenging as data expands so rapidly. Data silos, where information is stored in separate systems, further complicate data management. These silos lead to inefficiencies and incomplete analysis, which can hinder decision-making and overall performance.

To address these challenges, you can consider using data integration tools to consolidate data from diverse sources to your target system. This centralized data management enhances operational efficiency and promotes a data-driven culture within your organization.

In this article, you'll explore some of the best practices for deployments with large data volumes. However, before getting into those specifics, let's first look at what constitutes large data volumes.

Understanding Large Data Volume Scenarios

Large data volumes refer to the massive amounts of data generated and collected by organizations daily. This data can come from various sources, including social media interactions, online transactions, sensor data from IoT devices, and more.

Industries like healthcare, finance, e-commerce, and telecommunications often handle big data. For example, the healthcare sector generates extensive data through electronic health records and wearable devices. Analyzing this data can improve patient outcomes but requires advanced data processing solutions to manage its complexity and volume.

Traditionally, handling such large data sets required hiring ETL developers to build data pipelines manually. Although this approach works, it often proves to be time-consuming and resource-intensive. Developers must spend significant time coding, testing, and maintaining pipelines, which can delay insights and hamper productivity.

To overcome these challenges, modern data movement tools like Airbyte can boost productivity. With its user-friendly interface and an extensive library of more than 550 pre-built connectors, you can quickly set up data pipelines that handle complex workflows without coding expertise.

Key Considerations for Large-Scale Data Deployments

Deploying data at scale isn't just about moving large datasets from point A to point B—it's about ensuring that every layer of your system is designed to handle increasing data volumes without compromising performance, accuracy, or reliability.

As organizations generate more data, the challenges of maintaining data integrity, minimizing latency, and keeping infrastructure cost-effective continue to grow. Whether you're integrating massive amounts of data from multiple sources or refreshing pipelines across environments with millions of records, even small inefficiencies can quickly compound into major operational headaches.

The following considerations will help you build scalable, resilient data operations capable of managing large data volumes while maintaining speed, consistency, and control.

Scalability and Performance Requirements

Ensuring that your system can effectively scale to accommodate growing data volumes and user demands is essential. This involves choosing a database or processing framework that can expand as needed, whether through horizontal scaling (adding more servers) or vertical scaling (upgrading existing hardware). Additionally, performance optimization techniques, such as indexing and caching, should be implemented to maintain fast response times even as the workload increases.

Data Integrity and Consistency Challenges

Ensuring data integrity and consistency is critical when managing large data workloads. Implementing validation techniques, such as input checks and anomaly detection, can help prevent errors and data corruption. Furthermore, establishing a strong data governance framework is vital to uphold integrity standards.
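As a minimal sketch of what input checks and anomaly detection can look like, the Python below validates required fields and flags outliers with a median-based (modified z-score) test. The `validate_record` and `find_anomalies` helpers, the `amount` field, and the 3.5 threshold are illustrative assumptions, not part of any specific tool.

```python
from statistics import median
from typing import Any, Dict, List


def validate_record(record: Dict[str, Any], required: List[str]) -> List[str]:
    """Return a list of problems; an empty list means the record passed."""
    problems = [f"missing {f}" for f in required if record.get(f) in (None, "")]
    amount = record.get("amount")  # hypothetical numeric field
    if amount is not None and (not isinstance(amount, (int, float)) or amount < 0):
        problems.append("amount must be a non-negative number")
    return problems


def find_anomalies(values: List[float], threshold: float = 3.5) -> List[float]:
    """Flag values whose modified z-score exceeds the threshold."""
    if not values:
        return []
    mid = median(values)
    # Median absolute deviation is less distorted by outliers than stdev.
    mad = median(abs(v - mid) for v in values)
    if mad == 0:
        return []
    return [v for v in values if 0.6745 * abs(v - mid) / mad > threshold]


bad_record_problems = validate_record({"id": 1, "amount": -5}, ["id", "email"])
outliers = find_anomalies([98, 99, 100, 101, 102, 500])
```

A median-based test is used here because a single extreme value in a small sample inflates the standard deviation enough to hide itself from a plain z-score check.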

Cost-Effectiveness and Resource Management

You should evaluate storage and processing solutions to balance performance and cost. Cloud services can provide flexibility and scalability while optimizing resource usage. Regular monitoring of resource allocation and performance can help identify areas for improvement, ensuring that the system operates efficiently.

Essential Features for Handling Large Data Volumes with Airbyte

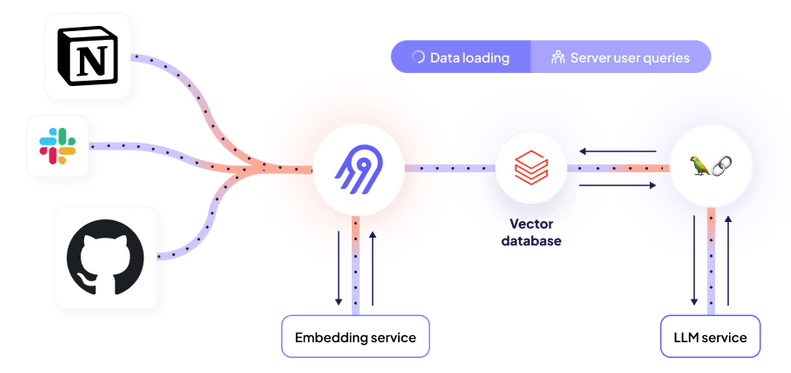

Airbyte simplifies the process of managing large datasets. Its powerful connectors streamline the replication of big datasets across various platforms, ensuring smooth data transfer. Airbyte’s architecture is fully compatible with Kubernetes, facilitating scalable and resilient deployments.

Here are the essential features of Airbyte for handling large data volumes effectively:

Incremental Synchronization Capabilities

Airbyte offers robust incremental synchronization options that let you replicate only the data that has changed since the last sync. This method is particularly beneficial for managing large datasets, reducing the amount of data transferred and processed during each sync.
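The idea behind incremental synchronization can be sketched in a few lines of Python. This is a simplified illustration of cursor-based change detection, not Airbyte's implementation; the `incremental_sync` function and the `updated_at` cursor field are assumptions made for the example.

```python
from typing import Any, Dict, List, Optional, Tuple

Record = Dict[str, Any]


def incremental_sync(
    source_rows: List[Record],
    cursor_field: str,
    last_cursor: Optional[str],
) -> Tuple[List[Record], Optional[str]]:
    """Return rows changed since last_cursor, plus the new cursor value."""
    if last_cursor is None:
        # First run: nothing has been synced yet, so take everything.
        changed = list(source_rows)
    else:
        changed = [r for r in source_rows if r[cursor_field] > last_cursor]
    if not changed:
        return [], last_cursor
    return changed, max(r[cursor_field] for r in changed)


# ISO 8601 timestamps in one fixed format compare correctly as strings.
rows = [
    {"id": 1, "updated_at": "2024-01-01T00:00:00Z"},
    {"id": 2, "updated_at": "2024-01-02T00:00:00Z"},
    {"id": 3, "updated_at": "2024-01-03T00:00:00Z"},
]
first_batch, cursor = incremental_sync(rows, "updated_at", None)

rows.append({"id": 4, "updated_at": "2024-01-04T00:00:00Z"})
second_batch, cursor = incremental_sync(rows, "updated_at", cursor)
```

The first call moves all three rows; the second moves only the row added afterward, which is where the savings on large tables come from.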

Parallel Processing and Multi-Threading

Airbyte's worker-based architecture facilitates efficient parallel processing of data synchronization tasks, enabling you to manage large volumes of data effectively. This architecture separates scheduling and orchestration from the core data movement processes for more flexible management of data jobs.
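To illustrate the general pattern of separating orchestration from data movement, the sketch below uses Python's standard thread pool to run several stream syncs side by side. It is a generic example rather than Airbyte's worker code; `sync_stream`, `run_parallel`, and the stream names are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Dict, List


def sync_stream(name: str, rows: List[dict]) -> int:
    """Stand-in for the data movement step; returns the rows 'copied'."""
    return len(rows)


def run_parallel(streams: Dict[str, List[dict]], max_workers: int = 4) -> Dict[str, int]:
    """Orchestration step: submit one job per stream and collect results."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = {
            name: pool.submit(sync_stream, name, rows)
            for name, rows in streams.items()
        }
        # result() blocks until that stream's job has finished.
        return {name: future.result() for name, future in futures.items()}


copied = run_parallel({
    "users": [{"id": 1}, {"id": 2}],
    "orders": [{"id": 10}, {"id": 11}, {"id": 12}],
})
```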

Data Normalization and Transformation Techniques

Airbyte provides the flexibility to perform custom transformations using SQL, dbt (data build tool), or Python scripts. Its normalization process leverages dbt to ensure that your data is loaded in a format that is most suitable for your destination. Airbyte Cloud enables you to integrate with dbt for post-sync transformations.

Flexible Scheduling Options

Airbyte provides flexible scheduling options for data syncs through three main methods: scheduled, cron, and manual. Scheduled syncs let you set intervals ranging from every 1 hour to every 24 hours. Cron syncs offer precise control using custom cron expressions for specific timing. Manual syncs run only when you start them through the UI or API.

Record Change History

This feature helps avoid sync failures caused by problematic rows. If a record is oversized or invalid and causes a sync failure, Airbyte modifies that record in transit, logging the changes and ensuring the sync completes. This significantly boosts the reliability of your data movement.
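The behavior described above can be approximated with a small Python sketch: an oversized field is nulled out and the change is logged so the rest of the record can still be delivered. The `sanitize_record` function, the size limit, and the shape of the change log are assumptions for illustration and do not reflect Airbyte's actual metadata format.

```python
from typing import Any, Dict, List, Tuple


def sanitize_record(
    record: Dict[str, Any], max_field_bytes: int
) -> Tuple[Dict[str, Any], List[Dict[str, str]]]:
    """Null out oversized string fields and report what was changed."""
    clean: Dict[str, Any] = {}
    changes: List[Dict[str, str]] = []
    for field, value in record.items():
        too_big = (
            isinstance(value, str)
            and len(value.encode("utf-8")) > max_field_bytes
        )
        if too_big:
            clean[field] = None
            # Hypothetical log entry; a real system defines its own schema.
            changes.append(
                {"field": field, "change": "nulled", "reason": "field_size_limit"}
            )
        else:
            clean[field] = value
    return clean, changes


clean, changes = sanitize_record({"id": 7, "note": "x" * 100}, max_field_bytes=32)
```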

Pipeline Orchestration

Airbyte integrates with popular data orchestration tools like Apache Airflow, Dagster, Prefect, and Kestra. This streamlines the management of large-scale data pipelines and helps keep data moving smoothly across systems.

Best Practices for Large-Scale Data Integration

Here are some of the best practices to consider for effective large-scale data integration:

Proper Infrastructure Sizing and Resource Allocation

When integrating large-scale data, it’s essential to size your infrastructure and allocate resources effectively. Determine which workloads are most critical to your operations. These applications should be your top priority when allocating resources.

Utilize automated tools that can monitor resource usage in real-time. This helps you scale resources dynamically based on current demand, ensuring your infrastructure remains responsive to changing workloads.

Network Configuration Optimization

Data transfer efficiency across different systems depends mainly on the network configuration. A well-tuned network delivers high data throughput and low latency, both of which are critical for real-time data processing.

Implementing Quality of Service (QoS) settings can prioritize critical data flows, ensuring that essential operations receive the necessary bandwidth. Regularly update your network architecture to accommodate growth and changes in data traffic patterns. This ensures performance and reliability over time.

Implementing Effective Data Partitioning Strategies

Data partitioning involves dividing large datasets into smaller, easier-to-manage partitions. It speeds up query execution by letting queries run against specific subsets instead of scanning the entire dataset.

There are different ways to partition data, such as splitting it by rows (horizontal), by columns (vertical), or based on operational needs (functional partitioning). You can choose the right one depending on factors like data size, access patterns, and processing requirements.
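The horizontal and vertical approaches can be sketched in plain Python as follows. The function names, column names, and the choice of a CRC32 hash are assumptions for the example; functional partitioning is omitted because it depends on how a given business separates its workloads.

```python
import zlib
from collections import defaultdict
from typing import Any, Dict, List, Tuple

Row = Dict[str, Any]


def horizontal_partition(rows: List[Row], key: str, num_partitions: int) -> Dict[int, List[Row]]:
    """Split by rows: each row lands in exactly one partition."""
    parts: Dict[int, List[Row]] = defaultdict(list)
    for row in rows:
        # crc32 is stable across runs, unlike Python's built-in hash() for str.
        idx = zlib.crc32(str(row[key]).encode("utf-8")) % num_partitions
        parts[idx].append(row)
    return dict(parts)


def vertical_partition(rows: List[Row], key: str, hot_columns: List[str]) -> Tuple[List[Row], List[Row]]:
    """Split by columns: frequently read fields go in one table, the rest in another."""
    hot = [{c: r[c] for c in [key, *hot_columns]} for r in rows]
    cold = [{c: v for c, v in r.items() if c == key or c not in hot_columns} for r in rows]
    return hot, cold


rows = [
    {"id": i, "region": f"region-{i % 3}", "name": f"acct-{i}", "notes": "..."}
    for i in range(12)
]
by_hash = horizontal_partition(rows, "id", num_partitions=4)
hot, cold = vertical_partition(rows, "id", hot_columns=["name"])
```

Both halves of the vertical split keep the `id` column so the original row can be reassembled with a join when needed.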

Efficient Load Balancing and Job Scheduling

Balancing the load and organizing tasks is crucial to keeping the system effective when handling extensive data integration tasks. Load balancing helps ensure that workloads are evenly distributed across all resources, preventing any one resource from becoming overwhelmed.

Additionally, effective job scheduling prioritizes tasks based on urgency and resource availability, minimizing idle time and maximizing throughput. You can significantly enhance operational efficiency by implementing intelligent load-balancing algorithms and dynamic scheduling techniques.
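One simple way to combine the two ideas is to order jobs by priority and hand each one to the least-loaded worker. The sketch below does this with a heap; the `assign_jobs` function, the job tuples, and the cost estimates are hypothetical, and production schedulers typically account for far more signals.

```python
import heapq
from typing import Dict, List, Tuple

Job = Tuple[int, str, int]  # (priority, name, estimated cost); lower priority = more urgent


def assign_jobs(jobs: List[Job], num_workers: int) -> Dict[int, List[str]]:
    """Greedy scheduling: most urgent job first, onto the least-loaded worker."""
    workers = [(0, w) for w in range(num_workers)]  # (current load, worker id)
    heapq.heapify(workers)
    assignment: Dict[int, List[str]] = {w: [] for w in range(num_workers)}
    for _priority, name, cost in sorted(jobs):
        load, worker = heapq.heappop(workers)  # worker with the smallest load
        assignment[worker].append(name)
        heapq.heappush(workers, (load + cost, worker))
    return assignment


plan = assign_jobs(
    [(2, "backfill", 8), (1, "orders", 3), (1, "payments", 2), (3, "logs", 1)],
    num_workers=2,
)
```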

Index Strategically to Improve Performance

Custom indexes can significantly enhance query performance on large datasets, both in databases and on platforms like Salesforce. Focus on fields used in filters, joins, or sorting operations, especially in SOQL queries.

Standard indexes typically exist on record IDs and master-detail relationships, but custom fields used in WHERE clauses should be indexed manually if they impact performance.
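The effect of indexing a filtered field can be observed with Python's built-in `sqlite3` module. SQLite stands in here for whatever database or platform you use (it is not SOQL), and the table, column, and index names are made up for the example.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE accounts (id INTEGER PRIMARY KEY, region TEXT, name TEXT)")
conn.executemany(
    "INSERT INTO accounts (region, name) VALUES (?, ?)",
    [(f"region-{i % 50}", f"acct-{i}") for i in range(5000)],
)


def query_plan(sql: str) -> str:
    """Return SQLite's description of how it would execute the query."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql)
    return " ".join(row[3] for row in rows)  # column 3 holds the plan detail


query = "SELECT name FROM accounts WHERE region = 'region-7'"
plan_before = query_plan(query)  # full table scan: no index on region yet

conn.execute("CREATE INDEX idx_accounts_region ON accounts (region)")
plan_after = query_plan(query)   # the planner can now use the new index
```

Comparing `plan_before` and `plan_after` shows the planner switching from scanning every row to searching through the index on `region`.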

Use Skinny Tables to Reduce Load

Skinny tables, a Salesforce feature, are optimized versions of standard tables that contain only the most frequently accessed fields. Storing a subset of fields in one physical table reduces query run time, especially in environments with very large record counts.

Skinny tables work well in high-read use cases but should be updated cautiously to avoid impacting data integrity.

Apply Batch Processing for Data Loads

When working with large volumes of source data in Salesforce, use Batch Apex or the Bulk API. These approaches break operations down into manageable jobs and reduce timeouts.

When using batch tools, track system performance and job completion rates to avoid overloading processing queues.
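The same batching idea applies outside Salesforce. Below is a minimal Python sketch that splits a load into fixed-size chunks and tracks completion counts; `chunked`, `load_in_batches`, and the `load_fn` callback are hypothetical names, and the batch size is arbitrary.

```python
from itertools import islice
from typing import Any, Callable, Dict, Iterable, Iterator, List


def chunked(items: Iterable[Any], batch_size: int) -> Iterator[List[Any]]:
    """Yield successive lists of at most batch_size items."""
    it = iter(items)
    while True:
        batch = list(islice(it, batch_size))
        if not batch:
            return
        yield batch


def load_in_batches(
    records: Iterable[Any], batch_size: int, load_fn: Callable[[List[Any]], None]
) -> Dict[str, int]:
    """Load records batch by batch and count successes and failures."""
    stats = {"batches": 0, "loaded": 0, "failed_batches": 0}
    for batch in chunked(records, batch_size):
        stats["batches"] += 1
        try:
            load_fn(batch)
            stats["loaded"] += len(batch)
        except Exception:
            # One bad batch is recorded without aborting the rest of the load.
            stats["failed_batches"] += 1
    return stats


destination: List[int] = []
stats = load_in_batches(range(10), batch_size=4, load_fn=destination.extend)
```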

Review Sharing Rules and Calculations

In Salesforce-like platforms, sharing rules and calculated fields, such as formula fields or sharing calculations, can significantly impact data load time and system speed. Limit complex formula fields on objects with large data volumes.

Document and simplify data access strategies to keep users on the same page, improve customer experience, and reduce inconsistencies.

Monitoring and Maintaining Large Data Deployments

Here are a few key practices for monitoring and maintaining large-scale data deployments:

1. Establish Clear Monitoring Metrics

Define key performance indicators (KPIs) critical for your data operations, such as latency, throughput, and error rates. Airbyte enables you to integrate with data monitoring tools like Datadog and OpenTelemetry to track and analyze your data pipelines.
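As a rough sketch of how these KPIs might be computed from sync history before being sent to a monitoring tool, consider the Python below. The `SyncRun` structure and `summarize` function are assumptions for illustration; the actual fields available depend on your platform.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class SyncRun:
    records: int
    duration_seconds: float
    succeeded: bool


def summarize(runs: List[SyncRun]) -> Dict[str, float]:
    """Compute error rate, average latency, and throughput for a set of runs."""
    ok = [r for r in runs if r.succeeded]
    ok_time = sum(r.duration_seconds for r in ok)
    return {
        "error_rate": (len(runs) - len(ok)) / len(runs) if runs else 0.0,
        # Latency and throughput are measured over successful runs only.
        "avg_latency_seconds": ok_time / len(ok) if ok else 0.0,
        "throughput_records_per_second": (
            sum(r.records for r in ok) / ok_time if ok_time else 0.0
        ),
    }


kpis = summarize([
    SyncRun(records=1000, duration_seconds=10.0, succeeded=True),
    SyncRun(records=3000, duration_seconds=30.0, succeeded=True),
    SyncRun(records=0, duration_seconds=5.0, succeeded=False),
    SyncRun(records=0, duration_seconds=5.0, succeeded=False),
])
```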

2. Leverage MPP Databases for Scalability

Massively Parallel Processing (MPP) databases, such as Amazon Redshift and Google BigQuery, facilitate efficient scaling for large datasets. They distribute queries across multiple nodes to improve performance.

3. Automate Data Replication

Schedule regular data replication and backups to ensure data availability and minimize loss. To support this, Airbyte offers several sync modes. Full Refresh Sync retrieves the entire dataset and either overwrites the destination or appends to it. Incremental Sync transfers only the records added or updated since the last sync, which minimizes system load.

4. Monitor Logs

Effective log monitoring is key to detecting connection failures or slow syncs. Airbyte provides extensive logs for each connector, giving detailed reports on the data synchronization process.

5. Conduct Data Quality Checks

Data quality checks are essential in large-scale deployments to maintain data accuracy, completeness, and consistency. Automating these checks before and after data transfers can significantly reduce the risk of data anomalies.
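A post-transfer check can be as simple as comparing source and destination for row counts, missing keys, duplicates, and nulls in required fields. The sketch below assumes in-memory lists of dictionaries and a hypothetical `quality_report` function; at real scale these checks would usually run as queries inside the warehouse.

```python
from collections import Counter
from typing import Any, Dict, List

Row = Dict[str, Any]


def quality_report(
    source_rows: List[Row], dest_rows: List[Row], key: str, required: List[str]
) -> Dict[str, Any]:
    """Compare destination against source for basic completeness and accuracy."""
    source_keys = {r[key] for r in source_rows}
    dest_counts = Counter(r[key] for r in dest_rows)
    return {
        "row_count_match": len(source_rows) == len(dest_rows),
        "missing_keys": sorted(source_keys - set(dest_counts)),
        "duplicate_keys": sorted(k for k, n in dest_counts.items() if n > 1),
        "null_violations": sorted(
            r[key] for r in dest_rows if any(r.get(f) is None for f in required)
        ),
    }


source = [{"id": 1, "email": "a@example.com"}, {"id": 2, "email": "b@example.com"},
          {"id": 3, "email": "c@example.com"}]
dest = [{"id": 1, "email": "a@example.com"}, {"id": 2, "email": None},
        {"id": 2, "email": "b@example.com"}]
report = quality_report(source, dest, key="id", required=["email"])
```

Note that the row counts match in this example even though one record is missing and another is duplicated, which is why a count check alone is not enough.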

6. Continuously Monitor Query and Sync Patterns

Track SOQL query run times and log database bottlenecks. Look for recurring queries on non-indexed fields, and flag sync operations that repeatedly fail or slow down due to data volume spikes.

Airbyte logs and observability integrations can pinpoint slow syncs, schema drift, and connector-specific failures.

7. Audit Data Relationships and Dependencies

Monitor foreign key relationships, especially in environments with multiple related objects. Without clear constraints or indexing, maintaining data integrity across linked records becomes more time-consuming as data volume grows.

Map out master-detail or lookup relationships that affect data load performance.

8. Flag Slow Sandbox Refreshes and Test Environments

Slower sandbox refreshing is typical when handling large data volumes in staging or test environments. Where possible, use synthetic data or smaller datasets for testing, and document data subsets used in test jobs to replicate production issues effectively.

9. Track Long-Running Jobs and Sync Failures

Monitor job duration, retry frequency, and connector-level metrics. Alert when syncs exceed threshold durations or when data sets being transferred fail to match schema expectations.

Set up alerts for when batch jobs, data replication tasks, or streaming syncs are delayed or stalled due to schema issues, permission changes, or infrastructure strain.
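Threshold-based alerting of this kind can be sketched as a small Python function. The job dictionary fields, the `find_alerts` name, and the example thresholds are assumptions; in practice the inputs would come from your scheduler or observability tool.

```python
from typing import Any, Dict, List, Tuple


def find_alerts(
    jobs: List[Dict[str, Any]], max_duration_seconds: float, max_retries: int
) -> List[Tuple[str, str]]:
    """Return (job name, reason) pairs for jobs that breach a threshold."""
    alerts: List[Tuple[str, str]] = []
    for job in jobs:
        if job["duration_seconds"] > max_duration_seconds:
            alerts.append((job["name"], "duration"))
        if job["retries"] > max_retries:
            alerts.append((job["name"], "retries"))
    return alerts


alerts = find_alerts(
    [
        {"name": "orders_sync", "duration_seconds": 120, "retries": 0},
        {"name": "events_sync", "duration_seconds": 5400, "retries": 1},
        {"name": "users_sync", "duration_seconds": 300, "retries": 4},
    ],
    max_duration_seconds=3600,
    max_retries=3,
)
```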

10. Use Big Objects or Archive Strategies for Infrequently Accessed Data

In Salesforce environments, Big Objects are used for storing historical or rarely queried large amounts of data. Consider a similar archival or tiered storage strategy for systems managing massive datasets.

Archived data should remain accessible via external queries or APIs but not actively involved in critical sync jobs.

Relationship of Data Governance and Compliance with Large Data Volumes

Data governance and compliance are crucial to managing large data volumes effectively. Data governance focuses on creating policies and processes that ensure data quality, security, and availability, while compliance involves adhering to regulations like GDPR and HIPAA.

As data volumes grow, protecting sensitive information and meeting compliance standards become increasingly complex.

To address these challenges, Airbyte offers robust security features. It records all platform changes to provide an audit trail for compliance and historical analysis. Airbyte encrypts data in transit with TLS and customer metadata at rest with AES-256. Additionally, it provides PII masking, which hashes personal data as it moves through pipelines. Together, these features help you meet the requirements of privacy regulations.
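To make the idea of hashing personal data in transit concrete, here is a minimal Python sketch using a keyed SHA-256 hash from the standard library. It illustrates the general technique only and is not Airbyte's implementation; the `mask_pii` function, the field names, and the secret key are hypothetical.

```python
import hashlib
import hmac
from typing import Any, Dict, List


def mask_pii(record: Dict[str, Any], pii_fields: List[str], secret: bytes) -> Dict[str, Any]:
    """Return a copy of the record with the listed fields replaced by keyed hashes."""
    masked = dict(record)
    for field in pii_fields:
        value = masked.get(field)
        if value is not None:
            # A keyed hash (HMAC) makes guessing inputs harder than a bare hash.
            digest = hmac.new(secret, str(value).encode("utf-8"), hashlib.sha256)
            masked[field] = digest.hexdigest()
    return masked


original = {"id": 42, "email": "jane@example.com", "plan": "pro"}
masked = mask_pii(original, pii_fields=["email"], secret=b"example-only-secret")
```

Because the same input and key always yield the same digest, masked values can still be used for joins and deduplication downstream without exposing the original data.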

Simplify Large Data Deployments with Airbyte

Managing and deploying large data volumes doesn’t have to mean building from scratch or constantly firefighting sync failures. With Airbyte, you get a scalable, open-source data integration platform designed to handle massive datasets with speed, reliability, and control.

With more than 550 pre-built connectors, support for incremental syncs, parallel processing, and integrations with tools like dbt, Kubernetes, and Airflow, Airbyte helps you scale your data operations without sacrificing performance.

Whether syncing billions of records across cloud platforms or orchestrating complex pipelines across teams, Airbyte gives you the flexibility to deploy, monitor, and manage your data with confidence.

Ready to simplify how you handle large data volumes? Explore Airbyte’s platform or get started for free today.