Agent Engine Public Beta is now live!

Learn the inner workings of Airbyte’s full refresh overwrite and full refresh append synchronization modes.

Published on Aug 02, 2022

Summarize this article with:

Your organization likely collects large amounts of data in various systems, and you are probably already aware that accessing and analyzing data that is spread across multiple systems can be a challenge. To address this challenge, data integration can be used to create a unified view of your organization's data. In order for your data integration strategy to provide the maximum value, you need to have a consistent and up-to-date unified view of your data, which means that data from various systems needs to be in sync.

Data synchronization involves replicating data from a source system into a destination system so that the destination always has an up-to-date copy of the source data. Data consistency is achieved by continually synchronizing modifications in the source system to the destination system. Data replication tools help in copying and distributing data to different locations, ensuring redundancy and availability. These tools play a crucial role in maintaining data integrity and facilitating efficient data management.

Data synchronization can be done in several ways including: full replication, incremental replication, or change data capture (CDC) replication.

This tutorial is the first in a three part series on exploring Airbyte synchronization modes. The purpose of this series is to provide you with step-by-step instructions so that you can see for yourself how Airbyte-replicated data looks for different synchronization modes, and to help you understand how each synchronization mode functions behind the scenes.

This tutorial focuses on Airbyte’s full refresh synchronization mode, and is followed-up with the following related tutorials:

In this tutorial you will:

ℹ️ If you are not sure which replication mode is the most appropriate for your use case, you may be interested in reading An overview of Airbyte's replication modes before continuing with this tutorial.

This tutorial was written in July 2022 and due to the fast-changing nature of Airbyte may become less relevant at some point in the future. The following tools/versions were used:

Airbyte is an ELT (extract, load, transform) tool as opposed to an ETL (extract, transform, load) tool. This means that raw data is first loaded into a destination and then after the data has been written, it goes through a transformation stage.

In Airbyte, data that is extracted from a single record on the source system is sent to the destination as a single JSON blob, where it remains unmodified. This conforms to a core tenet of the ELT philosophy, which is that data should be untouched as it moves through the extracting and loading stages so that raw data is always accessible – if an unmodified version of the data exists in the destination, it can be re-transformed in the future without the need for a resync of data from source systems.

As a more concrete example, if your source data is in a table that looks as follows:

id | name

----+--------

1 | Mary X

2 | John D

Then Airbyte stores the following JSON objects in the destination:

{"id": 1, "name": "Mary X"}

{"id": 2, "name": "John D"}

For destinations that support normalization, SQL is then executed to transform the raw JSON back into a relational table. Internally Airbyte leverages dbt to create the SQL used for normalizing and/or transforming data. The data synchronization and normalization process is shown below:

Full refresh synchronization always retrieves all available data requested from the source and writes it in the destination, regardless of whether the data has previously been synced or not. This contrasts with incremental sync modes, which only replicate data that has been inserted or updated since the previous sync. Full refresh synchronization has two variants, overwrite and append, and it may alternatively be referred to as full load data synchronization.

In the full refresh | overwrite data synchronization mode, new syncs will overwrite all data in the destination table with a new copy of the source data. One of the big advantages of this approach is that data that has been deleted from the source will also be deleted in the destination. This is in contrast to incremental sync modes, for which this is not always the case.

In the full refresh | append data synchronization variant mode, every new sync will append the entire data set onto the data from previous syncs. This may be useful for historical report generation or for regulatory compliance.

ℹ️ Because every full refresh sync operation copies the entire source data set, this approach may be infeasible for large amounts of data, and other replication modes (such as incremental sync) are likely to be more appropriate. For example, if the source data set contains 10MB of data, and the replication frequency is every 5 minutes, then in one year there will have been 105120 replication syncs. This means that there will be 105120 full loads of data from the source to the destination, which means transmitting 10MB * 105120 = 1051200 MB or approximately 1TB of data. Furthermore, if you are making use of full refresh | append synchronization, then you would also end up with 1TB of data stored in your destination, even if the source data only contains 10MB.

Now that I have discussed the theory, you are ready to see how full load ELT data synchronization looks in practice!

Download our free guide and discover the best approach for your needs, whether it's building your ELT solution in-house or opting for Airbyte Open Source or Airbyte Cloud.

To keep the focus of this article on the exploration and discussion of replication modes rather than on setup, Postgres is used as both the source and destination. Other supported databases or application APIs would follow a similar methodology.

The setup of the source and destination that I present below is similar to the setup presented in Replicate Data Between PostgreSQL Databases, with minor differences corresponding to loading of simple data that is used for this particular tutorial.

Start a source Postgres container running at port 2000 on localhost as follows:

docker run --rm --name airbyte-source -e POSTGRES_PASSWORD=password -p 2000:5432 -d debezium/postgres:13

Start a destination Postgres container running at port 3000 on localhost as follows:

docker run --rm --name airbyte-destination -e POSTGRES_PASSWORD=password -p 3000:5432 -d debezium/postgres:13

In this section I demonstrate the steps for logging into the source Postgres database and populating it with simple data that will be used for demonstrating the various replication modes.

ℹ️ Note that I create a table with just a few columns, which should be sufficient to understand the main points of this article. A real dataset would likely have many more columns.

You can open a shell on the source container and login to the source Postgres database with the following commands:

docker exec -it airbyte-source /bin/bash

psql --username=postgres

You should now see a posgres=# prompt, which indicates that you are inside the Postgres terminal.

From the Postgres terminal, create a simple table called full_refresh_demo as follow:

CREATE TABLE full_refresh_demo(

id integer PRIMARY KEY,

name VARCHAR(200)

);

You can populate the full_refresh_demo table with some data as follows:

INSERT INTO full_refresh_demo(id, name) VALUES(1, 'Mary X');

INSERT INTO full_refresh_demo(id, name) VALUES(2, 'John D');

Have a look at the new table by executing:

SELECT * FROM full_refresh_demo;

Which should respond with a table that looks as follows:

id | name

----+--------

1 | Mary X

2 | John D

(2 rows)

Now that you have data in your source database, you are ready to set up a source connector in Airbyte!

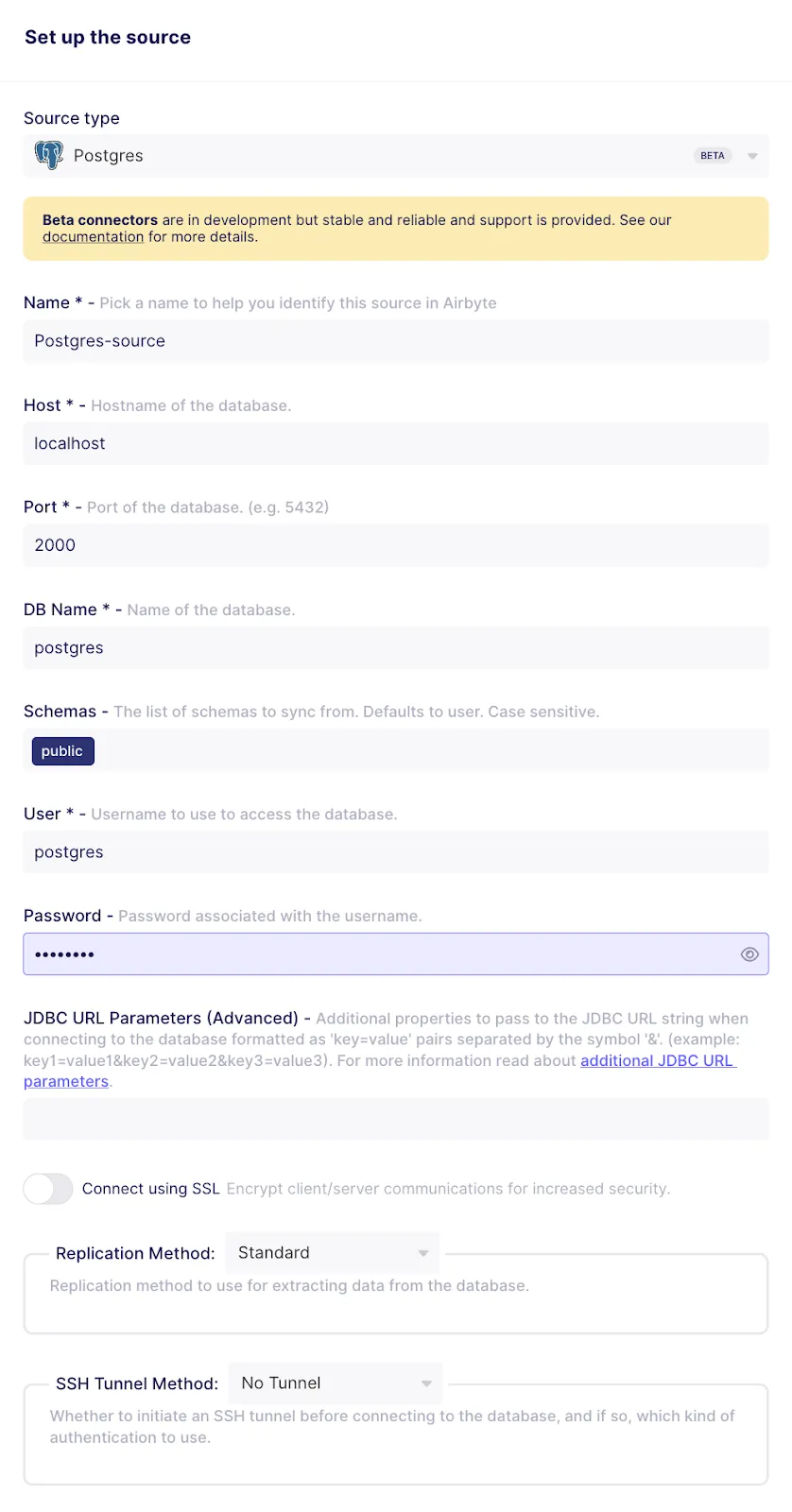

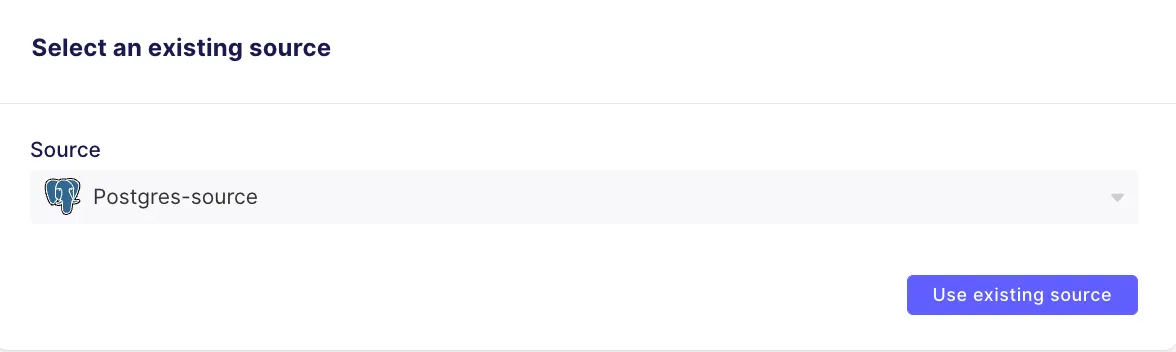

An Airbyte source will be used in your Airbyte connection(s) to retrieve data from the source database that you have just populated. Create a Postgres source called Postgres-source, as shown below:

In this section you will create an Airbyte connection that reads data from the Postgres source and drives it into the Postgres destination using the full refresh | overwrite replication mode. In this mode, all data in the source database is sent to the destination database, regardless of whether it has been synced before or not. You can see this for yourself by following along the steps presented below.

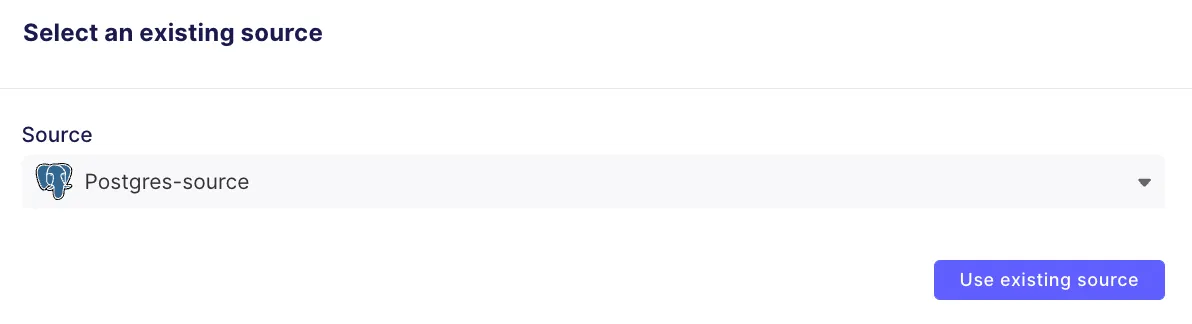

Create a new connection that will be used for verifying the functionality of full refresh | overwrite synchronization. Select the Postgres-source source, which you previously defined, as the source for this connection.

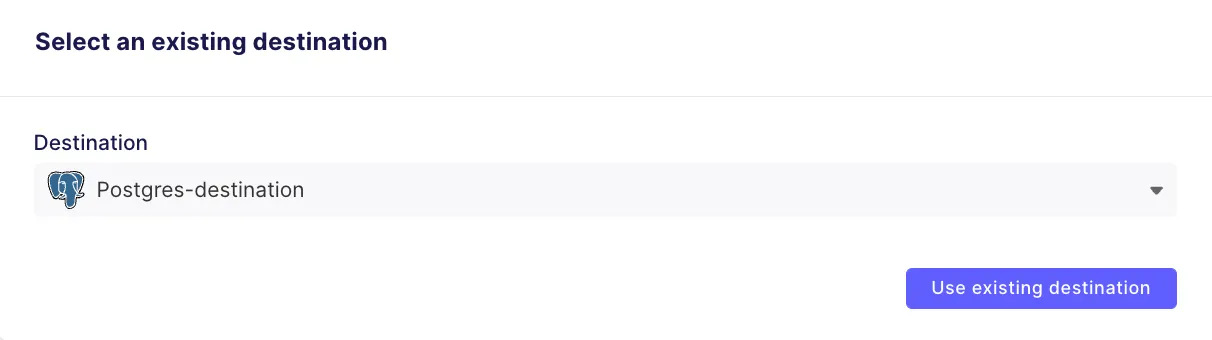

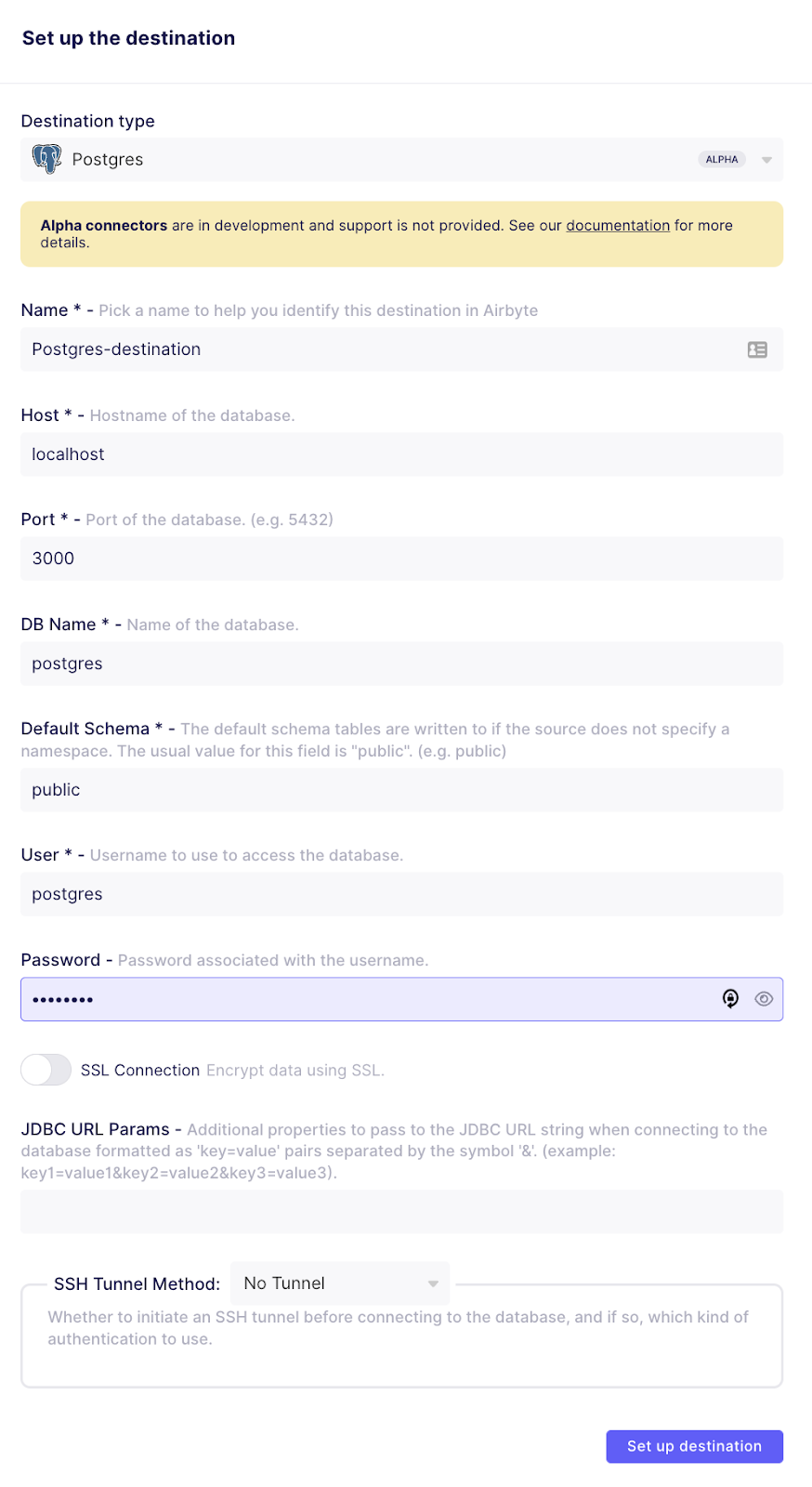

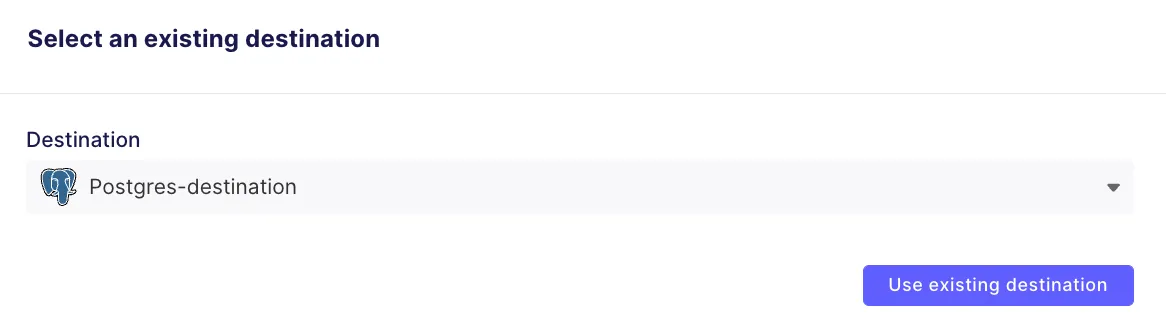

And select Postgres-destination, which you previously defined, as the destination for this connection.

You will see a set up page as shown below. Set the name of the connection to full-refresh-overwrite, and the destination stream prefix as overwrite_ as shown below.

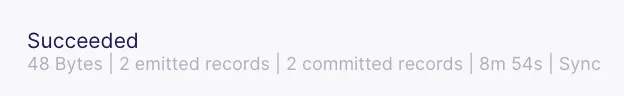

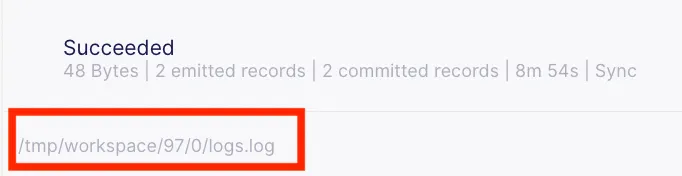

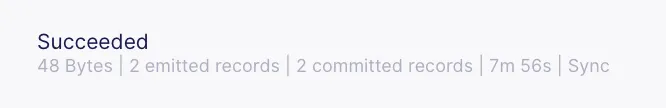

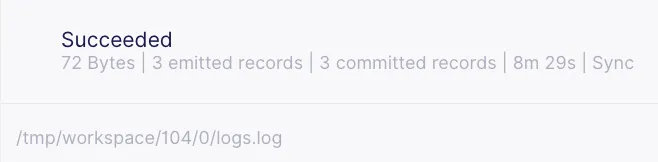

After you set up the connection, you should see that a first sync has automatically started. Once it completes, you should see a message indicating that two records have been emitted as follows:

.

Now that the first sync has completed you can take a look at the Postgres destination to see how the replicated data looks. Execute the following command to open a shell to the destination Postgres database:

docker exec -it airbyte-destination /bin/bashpsql --username=postgres

psql --username=postgres

You can view the tables in the destination Postgres database by executing the following command from the Postgres shell that you have just opened .

\dt;

Which should respond with the following:

List of relations

Schema | Name | Type | Owner

--------+------------------------------------------+-------+----------

public | _airbyte_raw_overwrite_full_refresh_demo | table | postgres

public | overwrite_full_refresh_demo | table | postgres

(2 rows)

ℹ️ Notice that there are two tables. As discussed earlier, Airbyte converts each source record into a JSON blob that contains all of your data, and writes it into the _airbyte_raw_overwrite_full_refresh_demo table. This is then normalized into a separate table.

Have a look at the _airbyte_raw_overwrite_full_refresh_demo table by executing the following command:

SELECT * FROM _airbyte_raw_overwrite_full_refresh_demo;

Which should respond with a table similar to the following:

_airbyte_ab_id | _airbyte_data | _airbyte_emitted_at

--------------------------------------+-----------------------------+----------------------------

44c96530-94ab-4117-8867-07b153a3b20c | {"id": 1, "name": "Mary X"} | 2022-07-27 13:51:03.182+00

e74f26d1-3695-494c-9a65-2b5765ea9356 | {"id": 2, "name": "John D"} | 2022-07-27 13:51:03.182+00

(2 rows)

In addition to the field containing the source data there are two additional fields:

If you have Basic Normalization enabled, Airbyte will have created a normalized table by extracting data from the JSON object. You can view the normalized data by executing the following command:

SELECT * FROM overwrite_full_refresh_demo;

Which should respond with a table similar to the following:

id | name | _airbyte_ab_id | _airbyte_emitted_at | _airbyte_normalized_at | _airbyte_overwrite_full_refresh_demo_hashid

----+--------+--------------------------------------+----------------------------+-------------------------------+---------------------------------------------

1 | Mary X | 44c96530-94ab-4117-8867-07b153a3b20c | 2022-07-27 13:51:03.182+00 | 2022-07-27 13:57:22.346497+00 | e20f46dc3a88fcf0ef2101b89d832709

2 | John D | e74f26d1-3695-494c-9a65-2b5765ea9356 | 2022-07-27 13:51:03.182+00 | 2022-07-27 13:57:22.346497+00 | 85aefb77a6bc2acc426eedf5a822b8b3

(2 rows)

You can see that this normalized table contains columns corresponding to each of the columns in the source table as expected (id and name). In addition to the Airbyte-created fields that appeared in the raw data table, the normalized table also has a few more airbyte-generated columns:

Let's go into detail about how this data has been normalized in the next section!

Airbyte executes SQL commands on the destination database to normalize your data. This is covered conceptually in the following pages in Airbyte’s documentation.

To help you to understand the differences between different synchronization modes you can look at the actual SQL that is used for normalizing your data. In order to find the SQL code that has been executed, you need to find the job ID and attempt ID for the sync that you are interested in. You can get this by looking in the Sync History, as shown below.

In this case the job ID is 97 and the attempt ID is 0, as annotated by the red square in the image above.

Create a bash shell into the airbyte-server docker container as follows:

docker exec -it airbyte-server /bin/bash

Using the job ID and the attempt ID, you can find the SQL that has been used for executing the normalization. Based on the job ID of 97 and attempt ID of 0 (extracted above), I execute the following:

cat /tmp/workspace/97/0/build/run/airbyte_utils/models/generated/airbyte_tables/public/overwrite_full_refresh_demo.sql

Which returns the SQL that has been used for converting the raw data into the normalized table. In order to explain what the SQL does to get your data normalized, I have broken it into four main sections below.

ℹ️ Airbyte leverages dbt to create the SQL for normalizing the data, and makes use of common table expressions (or CTEs) to break it into more manageable pieces.

The first section of SQL extracts the source fields from the JSON blob.

create table "postgres".public."overwrite_full_refresh_demo__dbt_tmp"

as (

with __dbt__cte__overwrite_full_refresh_demo_ab1 as (

-- SQL model to parse JSON blob stored in a single column and extract into separated field columns as described by the JSON Schema

-- depends_on: "postgres".public._airbyte_raw_overwrite_full_refresh_demo

select

jsonb_extract_path_text(_airbyte_data, 'id') as "id",

jsonb_extract_path_text(_airbyte_data, 'name') as "name",

_airbyte_ab_id,

_airbyte_emitted_at,

now() as _airbyte_normalized_at

from "postgres".public._airbyte_raw_overwrite_full_refresh_demo as table_alias

-- overwrite_full_refresh_demo

where 1 = 1

),

The next portion of the SQL is responsible for casting each of the fields that has been extracted from the JSON to the correct type.

__dbt__cte__overwrite_full_refresh_demo_ab2 as (

-- SQL model to cast each column to its adequate SQL type converted from the JSON schema type

-- depends_on: __dbt__cte__overwrite_full_refresh_demo_ab1

select

cast("id" as

float

) as "id",

cast("name" as text) as "name",

_airbyte_ab_id,

_airbyte_emitted_at,

now() as _airbyte_normalized_at

from __dbt__cte__overwrite_full_refresh_demo_ab1

-- overwrite_full_refresh_demo

where 1 = 1

),

The next section is responsible for adding in an md5 hash from the fields that have been passed in the source JSON. This article about md5 hashing on the dbt blog discusses the value of this.

__dbt__cte__overwrite_full_refresh_demo_ab3 as (

-- SQL model to build a hash column based on the values of this record

-- depends_on: __dbt__cte__overwrite_full_refresh_demo_ab2

select

md5(cast(coalesce(cast("id" as text), '') || '-' || coalesce(cast("name" as text), '') as text)) as _airbyte_overwrite_full_refresh_demo_hashid,

tmp.*

from __dbt__cte__overwrite_full_refresh_demo_ab2 tmp

-- overwrite_full_refresh_demo

where 1 = 1

)

Finally, the last part of the SQL selects all of the fields to be written into the overwrite_full_refresh_demo__dbt_tmp table.

-- Final base SQL model

-- depends_on: __dbt__cte__overwrite_full_refresh_demo_ab3

select

"id",

"name",

_airbyte_ab_id,

_airbyte_emitted_at,

now() as _airbyte_normalized_at,

_airbyte_overwrite_full_refresh_demo_hashid

from __dbt__cte__overwrite_full_refresh_demo_ab3

-- overwrite_full_refresh_demo from "postgres".public._airbyte_raw_overwrite_full_refresh_demo

where 1 = 1

);

ℹ️ New SQL is generated for each sync, but for the full refresh | overwrite mode the SQL is identical every time. If you want to see this for yourself, then in the subsequent synchronizations you can note the job ID and attempt ID and view the SQL as you have just done above.

The astute reader may wonder why this SQL creates a table called "postgres".public."overwrite_full_refresh_demo__dbt_tmp", while the table that you see in the Postgres destination is simply called overwrite_full_refresh_demo. To understand it better, take a look in the logs, which in my case (for this job ID and attempt ID) are located at /tmp/workspace/97/0/logs/dbt.log on the airbyte-server container. In the log file you should find an alter table/rename command that has renamed the table, similar to the following:

13:57:23.468838 [debug] [Thread-5 ]: On model.airbyte_utils.overwrite_full_refresh_demo: /* {"app": "dbt", "dbt_version": "1.0.0", "profile_name": "normalize", "target_name": "prod", "node_id": "model.airbyte_utils.overwrite_full_refresh_demo"} */

alter table "postgres".public."overwrite_full_refresh_demo__dbt_tmp" rename to "overwrite_full_refresh_demo"

You will now insert a new record in the source data, and then see how this is replicated to the destination. Go back to the terminal where you opened a shell to the source Postgres database, and add a new record and then view the source table as follows:

INSERT INTO full_refresh_demo(id, name) VALUES(3, 'Jane Y');

SELECT * FROM full_refresh_demo;

The source table should look as follows:

id | name

----+--------

1 | Mary X

2 | John D

3 | Jane Y

(3 rows)

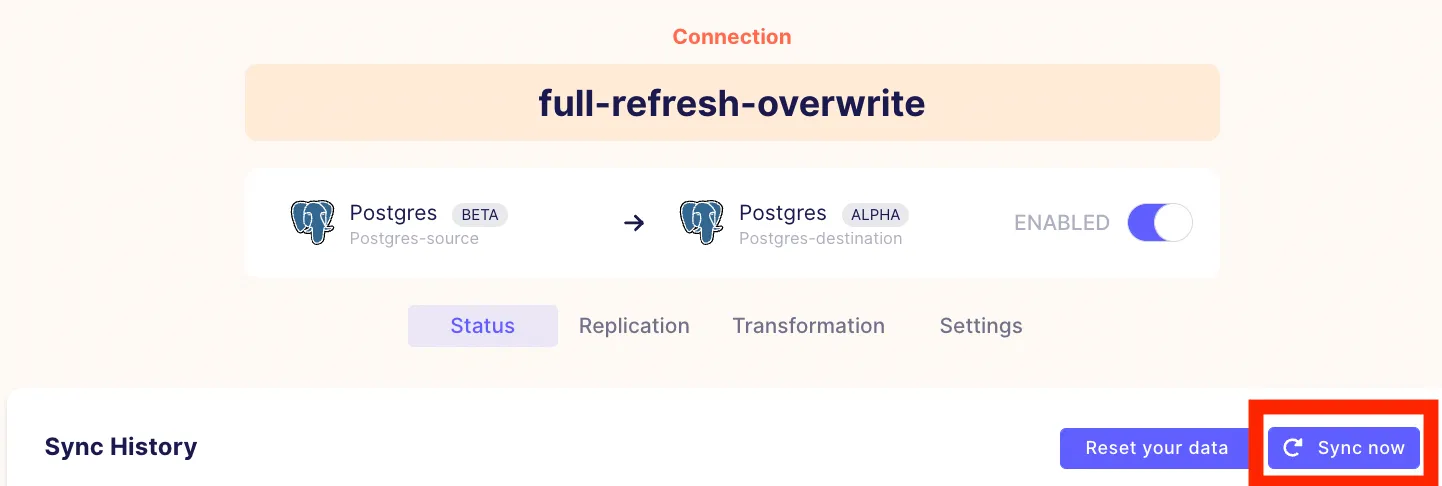

Execute a new sync by clicking on Sync Now in the connection page.

Once the sync has completed, you will see that 3 records have been emitted. This is because full refresh synchronization modes extract and emit all of the records in the source data set, regardless of whether they have previously been replicated to the destination or not.

Have a look at the _airbyte_raw_overwrite_full_refresh_demo table by running the following:

SELECT * FROM _airbyte_raw_overwrite_full_refresh_demo;

Which should look similar to the following:

_airbyte_ab_id | _airbyte_data | _airbyte_emitted_at

--------------------------------------+-----------------------------+----------------------------

87619a8e-7381-4f56-acda-d4f6c375ccc2 | {"id": 1, "name": "Mary X"} | 2022-07-27 14:24:39.743+00

a33a60fd-e948-47fa-b847-e9bfeb64cc84 | {"id": 2, "name": "John D"} | 2022-07-27 14:24:39.743+00

255e5402-8973-41fc-85b2-2ddaf6eabff9 | {"id": 3, "name": "Jane Y"} | 2022-07-27 14:24:39.743+00

(3 rows)

Notice that the _airbyte_emitted_at field for every record has been updated – this is further confirmation that all of the records in this table have been overwritten – not just the new record that you added to the source table. This is expected with the full refresh synchronization mode.

The normalized data in the overwrite_full_refresh_demo table can be seen by executing:

SELECT * FROM overwrite_full_refresh_demo;

Which should respond with a table that looks similar to the following:

id | name | _airbyte_ab_id | _airbyte_emitted_at | _airbyte_normalized_at | _airbyte_overwrite_full_refresh_demo_hashid

----+--------+--------------------------------------+----------------------------+------------------------------+---------------------------------------------

1 | Mary X | 87619a8e-7381-4f56-acda-d4f6c375ccc2 | 2022-07-27 14:24:39.743+00 | 2022-07-27 14:30:52.64373+00 | e20f46dc3a88fcf0ef2101b89d832709

2 | John D | a33a60fd-e948-47fa-b847-e9bfeb64cc84 | 2022-07-27 14:24:39.743+00 | 2022-07-27 14:30:52.64373+00 | 85aefb77a6bc2acc426eedf5a822b8b3

3 | Jane Y | 255e5402-8973-41fc-85b2-2ddaf6eabff9 | 2022-07-27 14:24:39.743+00 | 2022-07-27 14:30:52.64373+00 | ff431c3133aaae79477dc34b4cbba40b

(3 rows)

Every record in the normalized table has been overwritten, as can be seen by comparing the value of the _airbyte_normalized_at field to the value that each row had for that field prior to the sync, as shown earlier in this tutorial.

Update a record on the full_refresh_demo table on the source Postgres database, and view the source table by running the following commands:

UPDATE full_refresh_demo SET name='New Jane' WHERE id=3;

SELECT * FROM full_refresh_demo;

And the source full_refresh_demo table should now look as follows:

id | name

----+----------

1 | Mary X

2 | John D

3 | New Jane

(3 rows)

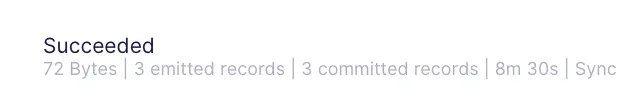

As before, execute a new sync by clicking on Sync Now in the connection pane and wait for it to complete. The UI will indicate that 3 records have been emitted as follows:

Have a look at the _airbyte_raw_overwrite_full_refresh_demo table in the destination Postgres database with the following command:

SELECT * FROM _airbyte_raw_overwrite_full_refresh_demo;

Which should respond with a table similar to the following

_airbyte_ab_id | _airbyte_data | _airbyte_emitted_at

--------------------------------------+-------------------------------+----------------------------

9d647452-21cd-4e6b-b9e6-1021b4eea06f | {"id": 1, "name": "Mary X"} | 2022-07-27 14:38:26.594+00

d0c7d8a6-ed0e-4280-a768-08dfc424abda | {"id": 2, "name": "John D"} | 2022-07-27 14:38:26.594+00

23472df3-9ac7-48fb-8675-84b240367dc4 | {"id": 3, "name": "New Jane"} | 2022-07-27 14:38:26.594+00

(3 rows)

Once again, by comparing the value of _airbyte_emitted_at to the prior values (shown earlier in this tutorial), you can see that every record has been overwritten, not just the record that was updated.

Have a look at the normalized data in the overwrite_full_refresh_demo table with the following command:

SELECT * FROM overwrite_full_refresh_demo;

Which should be similar to the following:

id | name | _airbyte_ab_id | _airbyte_emitted_at | _airbyte_normalized_at | _airbyte_overwrite_full_refresh_demo_hashid

----+----------+--------------------------------------+----------------------------+------------------------------+---------------------------------------------

1 | Mary X | 9d647452-21cd-4e6b-b9e6-1021b4eea06f | 2022-07-27 14:38:26.594+00 | 2022-07-27 14:44:07.34507+00 | e20f46dc3a88fcf0ef2101b89d832709

2 | John D | d0c7d8a6-ed0e-4280-a768-08dfc424abda | 2022-07-27 14:38:26.594+00 | 2022-07-27 14:44:07.34507+00 | 85aefb77a6bc2acc426eedf5a822b8b3

3 | New Jane | 23472df3-9ac7-48fb-8675-84b240367dc4 | 2022-07-27 14:38:26.594+00 | 2022-07-27 14:44:07.34507+00 | 7c134641d9dba0fd57f776a972abbcaf

(3 rows)

Once again the _airbyte_normalized_at field for every record has been updated – in other words all of the records in this table have been completely overwritten/re-normalized as expected.

See what happens if you delete a record on the source database by executing the following commands:

DELETE FROM full_refresh_demo where id=3;

SELECT * FROM full_refresh_demo;

The source full_refresh_demo table should look similar to:

id | name

----+--------

1 | Mary X

2 | John D

(2 rows)

Execute a Sync Now in the connection page, and wait for the sync to complete. Once it is complete, you should see that two records have been emitted, as shown below.

Have a look at the raw data by executing:

SELECT * FROM _airbyte_raw_overwrite_full_refresh_demo;

Which should respond with something similar to:

_airbyte_ab_id | _airbyte_data | _airbyte_emitted_at

--------------------------------------+-----------------------------+----------------------------

770a8f48-b871-4b67-b803-755d4d6521f5 | {"id": 1, "name": "Mary X"} | 2022-07-27 14:55:11.578+00

282ee38c-b909-465b-be0c-9ceecc10a7f8 | {"id": 2, "name": "John D"} | 2022-07-27 14:55:11.578+00

(2 rows)

And have a look at the normalized data by running:

SELECT * FROM overwrite_full_refresh_demo ;

Which should be similar to the following:

id | name | _airbyte_ab_id | _airbyte_emitted_at | _airbyte_normalized_at | _airbyte_overwrite_full_refresh_demo_hashid

----+--------+--------------------------------------+----------------------------+-------------------------------+---------------------------------------------

1 | Mary X | 770a8f48-b871-4b67-b803-755d4d6521f5 | 2022-07-27 14:55:11.578+00 | 2022-07-27 15:00:53.715733+00 | e20f46dc3a88fcf0ef2101b89d832709

2 | John D | 282ee38c-b909-465b-be0c-9ceecc10a7f8 | 2022-07-27 14:55:11.578+00 | 2022-07-27 15:00:53.715733+00 | 85aefb77a6bc2acc426eedf5a822b8b3

(2 rows)

You can see that the _airbyte_emitted_at timestamp has been updated for all of the documents in both the raw data and in the normalized data, which confirms that every record in both tables have been overwritten. Additionally the _airbyte_normalized_at timestamp confirms that every record has been re-normalized.

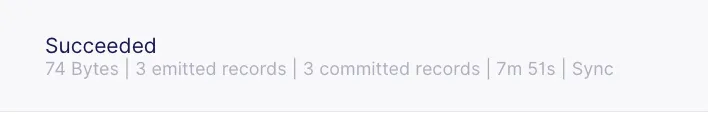

What will happen if you execute a sync on data that hasn’t been modified since the previous sync? Find out by clicking on the Sync now button! Once the sync has completed, you will see that two records have been emitted as shown below.

Have a look at the raw data by executing:

SELECT * FROM _airbyte_raw_overwrite_full_refresh_demo ;

Which should respond with something similar to:

_airbyte_ab_id | _airbyte_data | _airbyte_emitted_at

--------------------------------------+-----------------------------+----------------------------

7c220c2b-c844-469c-8d49-92b248fe5d2a | {"id": 1, "name": "Mary X"} | 2022-07-27 15:47:33.788+00

354bd111-c44c-4b51-9a16-0c3fa9fbca48 | {"id": 2, "name": "John D"} | 2022-07-27 15:47:33.788+00

(2 rows)

And have a look at the normalized data by running:

SELECT * FROM overwrite_full_refresh_demo ;

Which should be similar to the following:

id | name | _airbyte_ab_id | _airbyte_emitted_at | _airbyte_normalized_at | _airbyte_overwrite_full_refresh_demo_hashid

----+--------+--------------------------------------+----------------------------+-------------------------------+---------------------------------------------

1 | Mary X | 7c220c2b-c844-469c-8d49-92b248fe5d2a | 2022-07-27 15:47:33.788+00 | 2022-07-27 15:53:49.016524+00 | e20f46dc3a88fcf0ef2101b89d832709

2 | John D | 354bd111-c44c-4b51-9a16-0c3fa9fbca48 | 2022-07-27 15:47:33.788+00 | 2022-07-27 15:53:49.016524+00 | 85aefb77a6bc2acc426eedf5a822b8b3

(2 rows)

Looking at the _airbyte_emitted_at timestamp and the _airbyte_normalized_at timestamp confirms that every record has been overwritten and re-normalized. In other words, even if no data was modified in the Postgres source, the entire table is copied and overwritten in the destination.

To drive data into the Postgres database that you created earlier, you will first need to define a destination. Configure an Airbyte destination destination called Postgres-destination as follows:

In this section you will create an Airbyte connection that reads data from the Postgres source and drives it into the Postgres destination using the full refresh | append replication mode. In this mode, as with the full refresh | overwrite replication mode, all data in the source database is sent to the destination database, regardless of whether it has been synced before or not. However, in the append variant, new syncs will take all data from the most recent sync and append it to the raw destination table. If normalization is enabled, then records that have recently been appended to the raw table will be normalized and appended to the normalized table. This is easiest to see for yourself via a hands-on example, as presented below.

Set up a new connection that will demonstrate full refresh | append functionality, using the connectors that you created earlier in this tutorial.

First, select Postgres-source as the source for this connection.

Then select Postgres-destination as the destination for this connection.

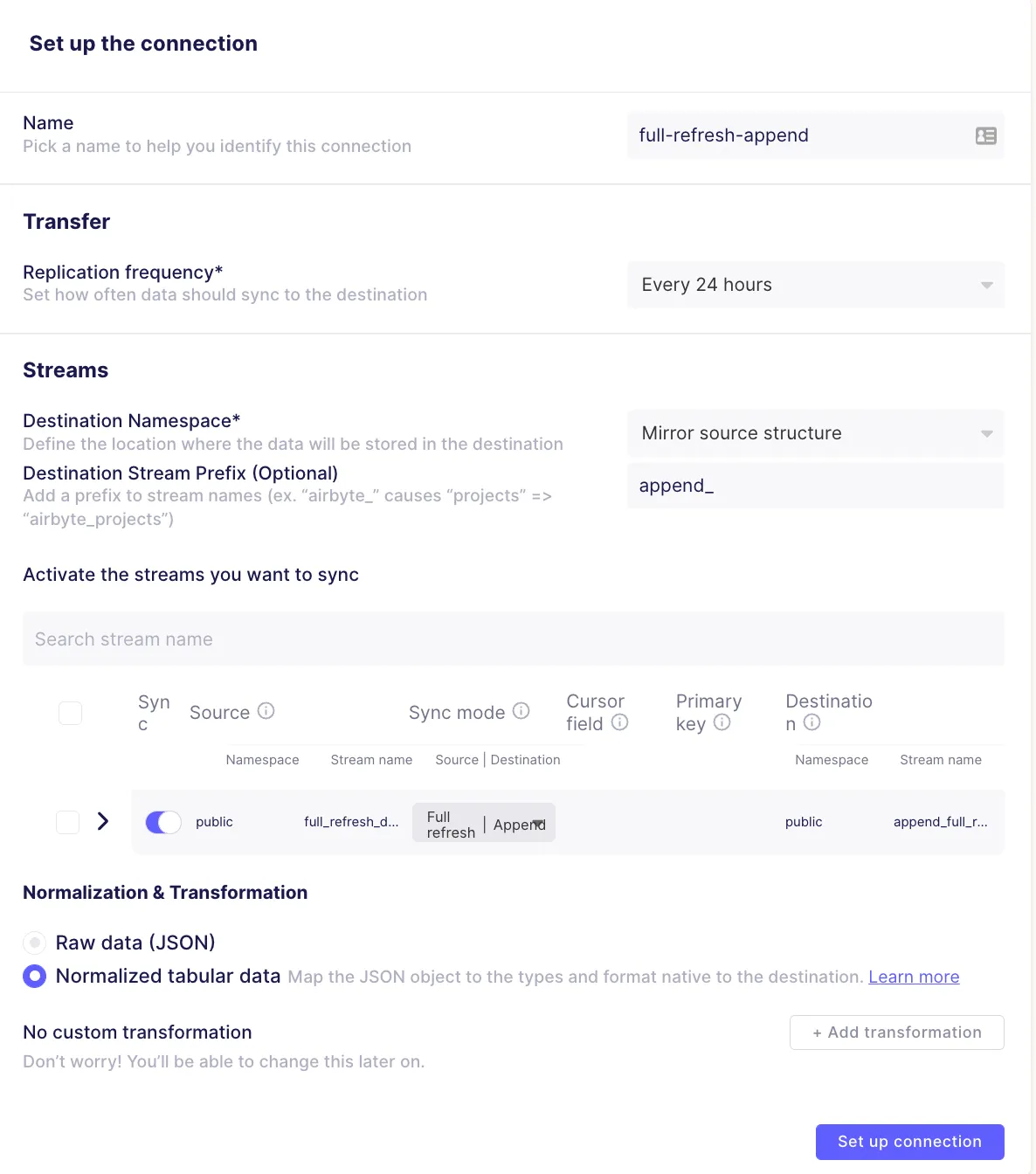

Then create a new connection and name it full-refresh-append, set the prefix to append_ , and select full refresh | append as the sync mode, as shown below.

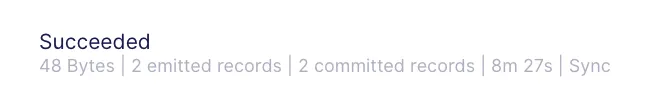

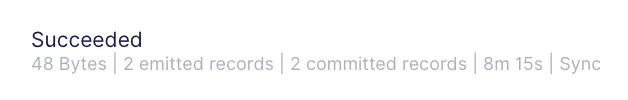

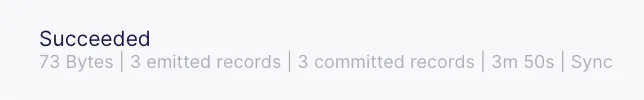

A sync should automatically start after you create the connection. Wait for the sync to complete, and you should see a message like the following:

If you don’t already have a shell open to your Postgres destination, execute the following commands:

docker exec -it airbyte-destination /bin/bash

psql --username=postgres

You can view the tables in the destination Postgres database by executing the following command from the Postgres shell that you have just opened .

\dt;

Which should respond with the following:

List of relations

Schema | Name | Type | Owner

--------+------------------------------------------+-------+----------

public | _airbyte_raw_append_full_refresh_demo | table | postgres

public | _airbyte_raw_overwrite_full_refresh_demo | table | postgres

public | append_full_refresh_demo | table | postgres

public | overwrite_full_refresh_demo | table | postgres

(4 rows)

Two new tables have been created, _airbyte_raw_append_full_refresh_demo and append_full_refresh_demo.

You can look at the raw data as follows:

SELECT * FROM _airbyte_raw_append_full_refresh_demo;

Which should respond with a table that looks very similar to the raw table that you saw created with full refresh | overwrite replication, as follows:

_airbyte_ab_id | _airbyte_data | _airbyte_emitted_at

--------------------------------------+-----------------------------+----------------------------

972a8d74-d840-4c43-826e-b0a1042c1681 | {"id": 1, "name": "Mary X"} | 2022-07-27 16:46:44.351+00

fc5bae3e-cbde-4790-921f-bab7a6dce51f | {"id": 2, "name": "John D"} | 2022-07-27 16:46:44.351+00

(2 rows)

You can look at the normalized data with the following command:

SELECT * FROM append_full_refresh_demo;

Which should return a table that looks similar to the following:

id | name | _airbyte_ab_id | _airbyte_emitted_at | _airbyte_normalized_at | _airbyte_append_full_refresh_demo_hashid

----+--------+--------------------------------------+----------------------------+------------------------------+------------------------------------------

1 | Mary X | 972a8d74-d840-4c43-826e-b0a1042c1681 | 2022-07-27 16:46:44.351+00 | 2022-07-27 16:52:21.69786+00 | e20f46dc3a88fcf0ef2101b89d832709

2 | John D | fc5bae3e-cbde-4790-921f-bab7a6dce51f | 2022-07-27 16:46:44.351+00 | 2022-07-27 16:52:21.69786+00 | 85aefb77a6bc2acc426eedf5a822b8b3

(2 rows)

So far, the replication functionality appears to be the same as what you saw in the section about full refresh | overwrite replication.

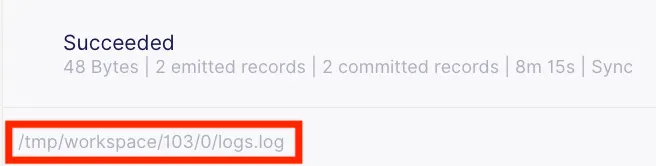

As with the previous tutorial, you can see the job ID and attempt ID, which you will use for finding and viewing the SQL, as shown below:

If you don’t already have bash shell into the airbyte-server docker container, create one as follows

docker exec -it airbyte-server /bin/bash

In my case the job ID of 103 and the attempt ID of 0 can be used to view the generated SQL that has been executed on the Postgres destination to normalize the raw data. Have a look at the generated SQL by replacing your job ID and attempt ID in the path below.

cat /tmp/workspace/103/0/build/run/airbyte_utils/models/generated/airbyte_incremental/public/append_full_refresh_demo.sql

This shows you the SQL that has been used for converting the raw data into the normalized table. In this initial synchronization run, the SQL that is generated is basically identical to the SQL that was demonstrated earlier for the full refresh | overwrite sync. One small difference is that the SQL directly creates the append_full_refresh_demo table, rather than creating a temporary table and renaming it. The top of the SQL file looks as follows:

create table "postgres".public."append_full_refresh_demo"

as (

... etc ..

However, you will see that follow-up synchronizations have different SQL generated. I will discuss this in more detail after the next sync.

If you don’t have a terminal open on the Postgres source database, open one as follows:

docker exec -it airbyte-source /bin/bash

psql --username=postgres

Add a new record to the source Postgres database as follows:

INSERT INTO full_refresh_demo(id, name) VALUES(3, 'Alex M');

And view the source table by executing:

SELECT * FROM full_refresh_demo;

The source table should look as follows:

id | name

----+--------

1 | Mary X

2 | John D

3 | Alex M

(3 rows)

Execute a new sync by clicking on Sync Now in the connection pane and wait for the sync to complete.

Once complete, you should see that three records have been emitted. Also take note of the job ID and attempt ID, which are 104 and 0 for this run.

Go back to the destination Postgres terminal, and have a look at _airbyte_raw_append_full_refresh_demo as follows:

SELECT * FROM _airbyte_raw_append_full_refresh_demo;

Which should look similar to the following:

_airbyte_ab_id | _airbyte_data | _airbyte_emitted_at

--------------------------------------+-----------------------------+----------------------------

972a8d74-d840-4c43-826e-b0a1042c1681 | {"id": 1, "name": "Mary X"} | 2022-07-27 16:46:44.351+00

fc5bae3e-cbde-4790-921f-bab7a6dce51f | {"id": 2, "name": "John D"} | 2022-07-27 16:46:44.351+00

15264229-8022-415e-8b5a-939f048dc15e | {"id": 1, "name": "Mary X"} | 2022-07-27 17:18:35.355+00

f33488c0-ac2b-4c01-b054-7d8534ea0199 | {"id": 2, "name": "John D"} | 2022-07-27 17:18:35.355+00

8708359b-bad9-4a1f-9172-073cc5469d32 | {"id": 3, "name": "Alex M"} | 2022-07-27 17:18:35.355+00

(5 rows)

This is quite different from the destination table that was generated after inserting an additional record into the source data table in the previous section on the full refresh | overwrite mode! It contains all of the data from the first sync, as well as all of the data from the second sync. You can see from the _airbyte_emitted_at timestamp which records were sent in the first sync versus the second sync.

The corresponding normalized data in the append_full_refresh_demo table can be seen by executing:

SELECT * FROM append_full_refresh_demo;

Which should respond with a table that looks similar to the following:

id | name | _airbyte_ab_id | _airbyte_emitted_at | _airbyte_normalized_at | _airbyte_append_full_refresh_demo_hashid

----+--------+--------------------------------------+----------------------------+-------------------------------+------------------------------------------

1 | Mary X | 972a8d74-d840-4c43-826e-b0a1042c1681 | 2022-07-27 16:46:44.351+00 | 2022-07-27 17:24:38.025622+00 | e20f46dc3a88fcf0ef2101b89d832709

2 | John D | fc5bae3e-cbde-4790-921f-bab7a6dce51f | 2022-07-27 16:46:44.351+00 | 2022-07-27 17:24:38.025622+00 | 85aefb77a6bc2acc426eedf5a822b8b3

1 | Mary X | 15264229-8022-415e-8b5a-939f048dc15e | 2022-07-27 17:18:35.355+00 | 2022-07-27 17:24:38.025622+00 | e20f46dc3a88fcf0ef2101b89d832709

2 | John D | f33488c0-ac2b-4c01-b054-7d8534ea0199 | 2022-07-27 17:18:35.355+00 | 2022-07-27 17:24:38.025622+00 | 85aefb77a6bc2acc426eedf5a822b8b3

3 | Alex M | 8708359b-bad9-4a1f-9172-073cc5469d32 | 2022-07-27 17:18:35.355+00 | 2022-07-27 17:24:38.025622+00 | fc1a25c4fe1949b371a42920320021ee

(5 rows)

The normalized destination table has copies of all of the records that existed from the first sync along with all of the records that have just been inserted in the second sync.

If you look at the _airbyte_normalized_at field, it appears that every row in the normalized table has been created by running the normalization SQL on the rows in the raw data table. Let’s look at this in more detail.

You can view the normalization code by executing the following command on the airbyte-server container (replacing the job ID and attempt ID with values you extracted from the UI). In my case I execute the following:

cat /tmp/workspace/104/0/build/run/airbyte_utils/models/generated/airbyte_incremental/public/append_full_refresh_demo.sql

Which responds with the following SQL:

delete from "postgres".public."append_full_refresh_demo"

where (_airbyte_ab_id) in (

select (_airbyte_ab_id)

from "append_full_refresh_demo__dbt_tmp172440183629"

);

insert into "postgres".public."append_full_refresh_demo" ("id", "name", "_airbyte_ab_id", "_airbyte_emitted_at", "_airbyte_normalized_at", "_airbyte_append_full_refresh_demo_hashid")

(

select "id", "name", "_airbyte_ab_id", "_airbyte_emitted_at", "_airbyte_normalized_at", "_airbyte_append_full_refresh_demo_hashid"

from "append_full_refresh_demo__dbt_tmp172440183629"

)

This is totally different from the SQL that was used in the first full refresh | append sync!

The first command finds the records that exist in a temporary table, and then deletes these records from the normalized table. The very next command inserts all of the records from that same temporary table into the normalized table.

To understand what has happened, you can see how that temporary table was created. Execute the following command on the airbyte-server container (replacing the job ID and attempt ID with values you extracted from the UI):

cat /tmp/workspace/104/0/logs/dbt.log

You should be able to find the SQL that was used to create the temporary table. The SQL is very similar to SQL from the previous normalizations, and the beginning of the SQL code block looks as follows:

create temporary table "append_full_refresh_demo__dbt_tmp172440183629"

as (

with __dbt__cte__append_full_refresh_demo_ab1 as (

...etc...

However at the very end of this SQL block there is a SELECT statement which limits the records that will be selected for normalization from the raw table to only those that have an _airbyte_emitted_at timestamp that is greater or equal to the highest _airbyte_emitted_at value in any of the records that are already in the normalized data.

where 1 =1

and coalesce(

cast(_airbyte_emitted_at as

timestamp with time zone

) >= (select max(cast(_airbyte_emitted_at as

timestamp with time zone

)) from "postgres".public."append_full_refresh_demo"),

true)

);

This reduces the number of records which will be selected for normalization to only the records that were sent in the most recent sync, along with records from the previous sync. This is much more efficient than re-normalizing all of the rows in the raw table.

ℹ️ Similar SQL is generated for each subsequent sync that is done during the full refresh | append mode. If you want to see this for yourself, then in the subsequent synchronizations you can note the job ID and attempt ID and view the SQL code and the dbt log file as you have just done.

Update a record on the source full_refresh_demo table and look at the resulting table with the following commands:

UPDATE full_refresh_demo SET name='Brett D' WHERE id=3;

SELECT * FROM full_refresh_demo;

And the source table should now look as follows:

id | name

----+---------

1 | Mary X

2 | John D

3 | Brett D

(3 rows)

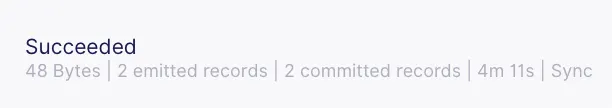

As before, execute a new sync by clicking on Sync Now in the connection panel, and wait for it to complete.

Once complete you should see that again, three records have been emitted. Have a look at the _airbyte_raw_append_full_refresh_demo table as follows.

SELECT * FROM _airbyte_raw_append_full_refresh_demo;

Which should look similar to the following:

_airbyte_ab_id | _airbyte_data | _airbyte_emitted_at

--------------------------------------+------------------------------+----------------------------

972a8d74-d840-4c43-826e-b0a1042c1681 | {"id": 1, "name": "Mary X"} | 2022-07-27 16:46:44.351+00

fc5bae3e-cbde-4790-921f-bab7a6dce51f | {"id": 2, "name": "John D"} | 2022-07-27 16:46:44.351+00

15264229-8022-415e-8b5a-939f048dc15e | {"id": 1, "name": "Mary X"} | 2022-07-27 17:18:35.355+00

f33488c0-ac2b-4c01-b054-7d8534ea0199 | {"id": 2, "name": "John D"} | 2022-07-27 17:18:35.355+00

8708359b-bad9-4a1f-9172-073cc5469d32 | {"id": 3, "name": "Alex M"} | 2022-07-27 17:18:35.355+00

282ea34c-3b69-4c0b-a3e0-f581c4f351a3 | {"id": 1, "name": "Mary X"} | 2022-07-28 12:17:01.493+00

6a0e706f-4e94-422f-abd0-e6635678840c | {"id": 2, "name": "John D"} | 2022-07-28 12:17:01.493+00

4b0eeaf6-499c-41f7-9aff-0c134c8e0ac4 | {"id": 3, "name": "Brett D"} | 2022-07-28 12:17:01.493+00

(8 rows)

The corresponding normalized data in the append_full_refresh_demo table can be seen by executing:

SELECT * FROM append_full_refresh_demo;

Which should respond with a table that looks similar to the following:

id | name | _airbyte_ab_id | _airbyte_emitted_at | _airbyte_normalized_at | _airbyte_append_full_refresh_demo_hashid

----+---------+--------------------------------------+----------------------------+-------------------------------+------------------------------------------

1 | Mary X | 972a8d74-d840-4c43-826e-b0a1042c1681 | 2022-07-27 16:46:44.351+00 | 2022-07-27 17:24:38.025622+00 | e20f46dc3a88fcf0ef2101b89d832709

2 | John D | fc5bae3e-cbde-4790-921f-bab7a6dce51f | 2022-07-27 16:46:44.351+00 | 2022-07-27 17:24:38.025622+00 | 85aefb77a6bc2acc426eedf5a822b8b3

1 | Mary X | 15264229-8022-415e-8b5a-939f048dc15e | 2022-07-27 17:18:35.355+00 | 2022-07-28 12:19:16.246736+00 | e20f46dc3a88fcf0ef2101b89d832709

2 | John D | f33488c0-ac2b-4c01-b054-7d8534ea0199 | 2022-07-27 17:18:35.355+00 | 2022-07-28 12:19:16.246736+00 | 85aefb77a6bc2acc426eedf5a822b8b3

3 | Alex M | 8708359b-bad9-4a1f-9172-073cc5469d32 | 2022-07-27 17:18:35.355+00 | 2022-07-28 12:19:16.246736+00 | fc1a25c4fe1949b371a42920320021ee

1 | Mary X | 282ea34c-3b69-4c0b-a3e0-f581c4f351a3 | 2022-07-28 12:17:01.493+00 | 2022-07-28 12:19:16.246736+00 | e20f46dc3a88fcf0ef2101b89d832709

2 | John D | 6a0e706f-4e94-422f-abd0-e6635678840c | 2022-07-28 12:17:01.493+00 | 2022-07-28 12:19:16.246736+00 | 85aefb77a6bc2acc426eedf5a822b8b3

3 | Brett D | 4b0eeaf6-499c-41f7-9aff-0c134c8e0ac4 | 2022-07-28 12:17:01.493+00 | 2022-07-28 12:19:16.246736+00 | 4e852075c9fff871c1a5186f09042a16

(8 rows)

Every time a sync is performed, the entire source full_refresh_demo table is appended to the destination table. Additionally, if you look at the _airbyte_normalized_at values, you will notice that only the documents from the current and previous sync have been normalized, as expected based on the previous analysis of the SQL normalization code.

See what happens if you delete a record on the source database by executing the following commands:

DELETE FROM full_refresh_demo where id=3;

SELECT * FROM full_refresh_demo;

The source full_refresh_demo table should look similar to:

id | name

----+--------

1 | Mary X

2 | John D

(2 rows)

Then perform a sync by clicking on the Sync Now button in the connection panel.

Once the sync has completed you will see that two records were emitted — and once again, you can look at the raw data in _airbyte_raw_append_full_refresh_demo with:

SELECT * FROM _airbyte_raw_append_full_refresh_demo;

Which now looks as follows:

_airbyte_ab_id | _airbyte_data | _airbyte_emitted_at

--------------------------------------+------------------------------+----------------------------

972a8d74-d840-4c43-826e-b0a1042c1681 | {"id": 1, "name": "Mary X"} | 2022-07-27 16:46:44.351+00

fc5bae3e-cbde-4790-921f-bab7a6dce51f | {"id": 2, "name": "John D"} | 2022-07-27 16:46:44.351+00

15264229-8022-415e-8b5a-939f048dc15e | {"id": 1, "name": "Mary X"} | 2022-07-27 17:18:35.355+00

f33488c0-ac2b-4c01-b054-7d8534ea0199 | {"id": 2, "name": "John D"} | 2022-07-27 17:18:35.355+00

8708359b-bad9-4a1f-9172-073cc5469d32 | {"id": 3, "name": "Alex M"} | 2022-07-27 17:18:35.355+00

282ea34c-3b69-4c0b-a3e0-f581c4f351a3 | {"id": 1, "name": "Mary X"} | 2022-07-28 12:17:01.493+00

6a0e706f-4e94-422f-abd0-e6635678840c | {"id": 2, "name": "John D"} | 2022-07-28 12:17:01.493+00

4b0eeaf6-499c-41f7-9aff-0c134c8e0ac4 | {"id": 3, "name": "Brett D"} | 2022-07-28 12:17:01.493+00

2145fa8f-c6e5-4b74-8ffb-a8323f77fa00 | {"id": 1, "name": "Mary X"} | 2022-07-28 12:34:29.472+00

27bdb85a-72ec-494d-9cc2-7a5fdd87ad3a | {"id": 2, "name": "John D"} | 2022-07-28 12:34:29.472+00

(10 rows)

And the then execute the following to see the normalized data:

SELECT * FROM append_full_refresh_demo;

The append_full_refresh_demo table should look similar to the following:

id | name | _airbyte_ab_id | _airbyte_emitted_at | _airbyte_normalized_at | _airbyte_append_full_refresh_demo_hashid

----+---------+--------------------------------------+----------------------------+-------------------------------+------------------------------------------

1 | Mary X | 972a8d74-d840-4c43-826e-b0a1042c1681 | 2022-07-27 16:46:44.351+00 | 2022-07-27 17:24:38.025622+00 | e20f46dc3a88fcf0ef2101b89d832709

2 | John D | fc5bae3e-cbde-4790-921f-bab7a6dce51f | 2022-07-27 16:46:44.351+00 | 2022-07-27 17:24:38.025622+00 | 85aefb77a6bc2acc426eedf5a822b8b3

1 | Mary X | 15264229-8022-415e-8b5a-939f048dc15e | 2022-07-27 17:18:35.355+00 | 2022-07-28 12:19:16.246736+00 | e20f46dc3a88fcf0ef2101b89d832709

2 | John D | f33488c0-ac2b-4c01-b054-7d8534ea0199 | 2022-07-27 17:18:35.355+00 | 2022-07-28 12:19:16.246736+00 | 85aefb77a6bc2acc426eedf5a822b8b3

3 | Alex M | 8708359b-bad9-4a1f-9172-073cc5469d32 | 2022-07-27 17:18:35.355+00 | 2022-07-28 12:19:16.246736+00 | fc1a25c4fe1949b371a42920320021ee

1 | Mary X | 282ea34c-3b69-4c0b-a3e0-f581c4f351a3 | 2022-07-28 12:17:01.493+00 | 2022-07-28 12:37:02.009314+00 | e20f46dc3a88fcf0ef2101b89d832709

2 | John D | 6a0e706f-4e94-422f-abd0-e6635678840c | 2022-07-28 12:17:01.493+00 | 2022-07-28 12:37:02.009314+00 | 85aefb77a6bc2acc426eedf5a822b8b3

3 | Brett D | 4b0eeaf6-499c-41f7-9aff-0c134c8e0ac4 | 2022-07-28 12:17:01.493+00 | 2022-07-28 12:37:02.009314+00 | 4e852075c9fff871c1a5186f09042a16

1 | Mary X | 2145fa8f-c6e5-4b74-8ffb-a8323f77fa00 | 2022-07-28 12:34:29.472+00 | 2022-07-28 12:37:02.009314+00 | e20f46dc3a88fcf0ef2101b89d832709

2 | John D | 27bdb85a-72ec-494d-9cc2-7a5fdd87ad3a | 2022-07-28 12:34:29.472+00 | 2022-07-28 12:37:02.009314+00 | 85aefb77a6bc2acc426eedf5a822b8b3

(10 rows)

Again, the entire source table has been appended into the destination. Additionally, you can see that only the data from the current and the previous sync have been normalized, as expected based on the previous analysis of the normalization code.

It should be clear by now that regardless of the action that is taken on the source table, whenever a sync is performed, the entire table is appended to the destination table. Let’s do one last sync, without modifying any data on the source, just to be sure.

SELECT * FROM _airbyte_raw_append_full_refresh_demo;

Which responds with the following

_airbyte_ab_id | _airbyte_data | _airbyte_emitted_at

--------------------------------------+------------------------------+----------------------------

972a8d74-d840-4c43-826e-b0a1042c1681 | {"id": 1, "name": "Mary X"} | 2022-07-27 16:46:44.351+00

fc5bae3e-cbde-4790-921f-bab7a6dce51f | {"id": 2, "name": "John D"} | 2022-07-27 16:46:44.351+00

15264229-8022-415e-8b5a-939f048dc15e | {"id": 1, "name": "Mary X"} | 2022-07-27 17:18:35.355+00

f33488c0-ac2b-4c01-b054-7d8534ea0199 | {"id": 2, "name": "John D"} | 2022-07-27 17:18:35.355+00

8708359b-bad9-4a1f-9172-073cc5469d32 | {"id": 3, "name": "Alex M"} | 2022-07-27 17:18:35.355+00

282ea34c-3b69-4c0b-a3e0-f581c4f351a3 | {"id": 1, "name": "Mary X"} | 2022-07-28 12:17:01.493+00

6a0e706f-4e94-422f-abd0-e6635678840c | {"id": 2, "name": "John D"} | 2022-07-28 12:17:01.493+00

4b0eeaf6-499c-41f7-9aff-0c134c8e0ac4 | {"id": 3, "name": "Brett D"} | 2022-07-28 12:17:01.493+00

2145fa8f-c6e5-4b74-8ffb-a8323f77fa00 | {"id": 1, "name": "Mary X"} | 2022-07-28 12:34:29.472+00

27bdb85a-72ec-494d-9cc2-7a5fdd87ad3a | {"id": 2, "name": "John D"} | 2022-07-28 12:34:29.472+00

53708d05-9fdf-4552-a8e6-d0e4171390de | {"id": 1, "name": "Mary X"} | 2022-07-28 12:56:35.261+00

68311abe-9fe4-4831-b529-42c018b00cfc | {"id": 2, "name": "John D"} | 2022-07-28 12:56:35.261+00

(12 rows)

And look at the normalized data with:

SELECT * FROM append_full_refresh_demo;

Which should respond with something similar to:

id | name | _airbyte_ab_id | _airbyte_emitted_at | _airbyte_normalized_at | _airbyte_append_full_refresh_demo_hashid

----+---------+--------------------------------------+----------------------------+-------------------------------+------------------------------------------

1 | Mary X | 972a8d74-d840-4c43-826e-b0a1042c1681 | 2022-07-27 16:46:44.351+00 | 2022-07-27 17:24:38.025622+00 | e20f46dc3a88fcf0ef2101b89d832709

2 | John D | fc5bae3e-cbde-4790-921f-bab7a6dce51f | 2022-07-27 16:46:44.351+00 | 2022-07-27 17:24:38.025622+00 | 85aefb77a6bc2acc426eedf5a822b8b3

1 | Mary X | 15264229-8022-415e-8b5a-939f048dc15e | 2022-07-27 17:18:35.355+00 | 2022-07-28 12:19:16.246736+00 | e20f46dc3a88fcf0ef2101b89d832709

2 | John D | f33488c0-ac2b-4c01-b054-7d8534ea0199 | 2022-07-27 17:18:35.355+00 | 2022-07-28 12:19:16.246736+00 | 85aefb77a6bc2acc426eedf5a822b8b3

3 | Alex M | 8708359b-bad9-4a1f-9172-073cc5469d32 | 2022-07-27 17:18:35.355+00 | 2022-07-28 12:19:16.246736+00 | fc1a25c4fe1949b371a42920320021ee

1 | Mary X | 282ea34c-3b69-4c0b-a3e0-f581c4f351a3 | 2022-07-28 12:17:01.493+00 | 2022-07-28 12:37:02.009314+00 | e20f46dc3a88fcf0ef2101b89d832709

2 | John D | 6a0e706f-4e94-422f-abd0-e6635678840c | 2022-07-28 12:17:01.493+00 | 2022-07-28 12:37:02.009314+00 | 85aefb77a6bc2acc426eedf5a822b8b3

3 | Brett D | 4b0eeaf6-499c-41f7-9aff-0c134c8e0ac4 | 2022-07-28 12:17:01.493+00 | 2022-07-28 12:37:02.009314+00 | 4e852075c9fff871c1a5186f09042a16

1 | Mary X | 2145fa8f-c6e5-4b74-8ffb-a8323f77fa00 | 2022-07-28 12:34:29.472+00 | 2022-07-28 12:59:53.570143+00 | e20f46dc3a88fcf0ef2101b89d832709

2 | John D | 27bdb85a-72ec-494d-9cc2-7a5fdd87ad3a | 2022-07-28 12:34:29.472+00 | 2022-07-28 12:59:53.570143+00 | 85aefb77a6bc2acc426eedf5a822b8b3

1 | Mary X | 53708d05-9fdf-4552-a8e6-d0e4171390de | 2022-07-28 12:56:35.261+00 | 2022-07-28 12:59:53.570143+00 | e20f46dc3a88fcf0ef2101b89d832709

2 | John D | 68311abe-9fe4-4831-b529-42c018b00cfc | 2022-07-28 12:56:35.261+00 | 2022-07-28 12:59:53.570143+00 | 85aefb77a6bc2acc426eedf5a822b8b3

(12 rows)

As expected, even if no source data is modified, a copy of the entire source table is appended onto the destination tables. Additionally, by looking at the value of _airbyte_normalized_at you can see that only the data from the current and previous syncs have been normalized.

In this tutorial I have guided you through a hands-on deep-dive into Airbyte’s full refresh synchronization modes. The main focus of this article has been to discover how replicated data will look, and to understand the SQL code that is executed to transform raw data into normalized data.

While it may be feasible to use Airbyte’s full refresh | overwrite or full refresh | append modes to repeatedly sync an entire source table that contains a small amount of data, this approach may become infeasible for large (100s of MB or TB of data) data sets or for data that is frequently synchronized. For these cases, other replication modes may be more appropriate.

This tutorial is followed-up with the following related tutorials:

You may be interested in other Airbyte tutorials, and in Airbyte’s blog. You can also join the conversation on our community Slack Channel, participate in discussions on Airbyte’s discourse, or sign up for our newsletter. Furthermore, if you are interested in Airbyte as a fully managed service, you can try Airbyte Cloud for free!

Alex is a Data Engineer and Technical writer at Airbyte, and was formerly employed as a Product Manager and as a Principal Consulting Architect at Elastic. Alex has an MBA from the IESE Business School in Barcelona, and a Masters degree in Electrical Engineering from the University of Toronto. Alex has a personal blog at https://alexmarquardt.com.

Alex is a Data Engineer and Technical writer at Airbyte, and was formerly employed as a Product Manager and as a Principal Consulting Architect at Elastic. Alex has an MBA from the IESE Business School in Barcelona, and a Masters degree in Electrical Engineering from the University of Toronto. Alex has a personal blog at https://alexmarquardt.com.

Download our free guide and discover the best approach for your needs, whether it's building your ELT solution in-house or opting for Airbyte Open Source or Airbyte Cloud.

Experience swift Postgres replication, effortlessly transferring data between databases in just 10 minutes.

Learn how to easily export Postgres data to CSV, JSON, Parquet, and Avro file formats stored in AWS S3.

Learn how to easily automate your LinkedIn Scraping with Airflow and Beautiful Soup.