What is ETL Data Modeling: Working, Techniques, Benefits and Best Practices

✨ AI Generated Summary

ETL data modeling combines the ETL process with data modeling to structure and organize data efficiently for analytics, involving steps like source identification, extraction, transformation, and loading. Modern paradigms such as ELT and Zero-ETL enhance flexibility and real-time capabilities, while AI integration automates quality checks and schema evolution.

- Key modeling techniques include Dimensional, Data Vault, and Anchor modeling, each suited for different business needs.

- Best practices emphasize strategic materialization, clear data grain, partitioning, documentation, and continuous testing.

- Platforms like Airbyte simplify ETL with extensive connectors, real-time change data capture, AI support, and enterprise-grade security.

Modern data teams face mounting pressure to extract value from rapidly growing datasets while complying with stringent regulations. Data preparation and pipeline maintenance consume much of their time, creating bottlenecks that slow innovation and erode competitive advantage.

Extract, Transform, and Load (ETL) is a core data management process for moving data efficiently from disparate sources into one place. It extracts data from operational and other source systems, transforms it into a consistent, usable form, and loads it into a centralized repository such as a data warehouse or data lake.

However, to get the most out of the process, you need a clear roadmap for every step involved. That's where data modeling comes into play.

In this article, we discuss ETL data modeling in detail: what it is, how it works, its benefits, and best practices.

What Is ETL Data Modeling and How Does It Work?

ETL data modeling combines two concepts: ETL and data modeling. In data management, ETL is a process for centralizing data from multiple sources, while data modeling is the abstract representation of data objects, their attributes, the relationships between them, and the rules that govern them.

Combined, ETL data modeling is the design of how data is structured and moved as the ETL process stores it in a data warehouse. Data modeling analyzes the structure of data objects and their relationships, while ETL applies the modeled rules to the data, checks it for anomalies, and loads it into the data warehouse.

Overall, it defines the relationships between the types of data used and how that data is grouped and organized, often in a visual format such as a diagram. This makes data actionable and easy to understand for stakeholders across the organization.

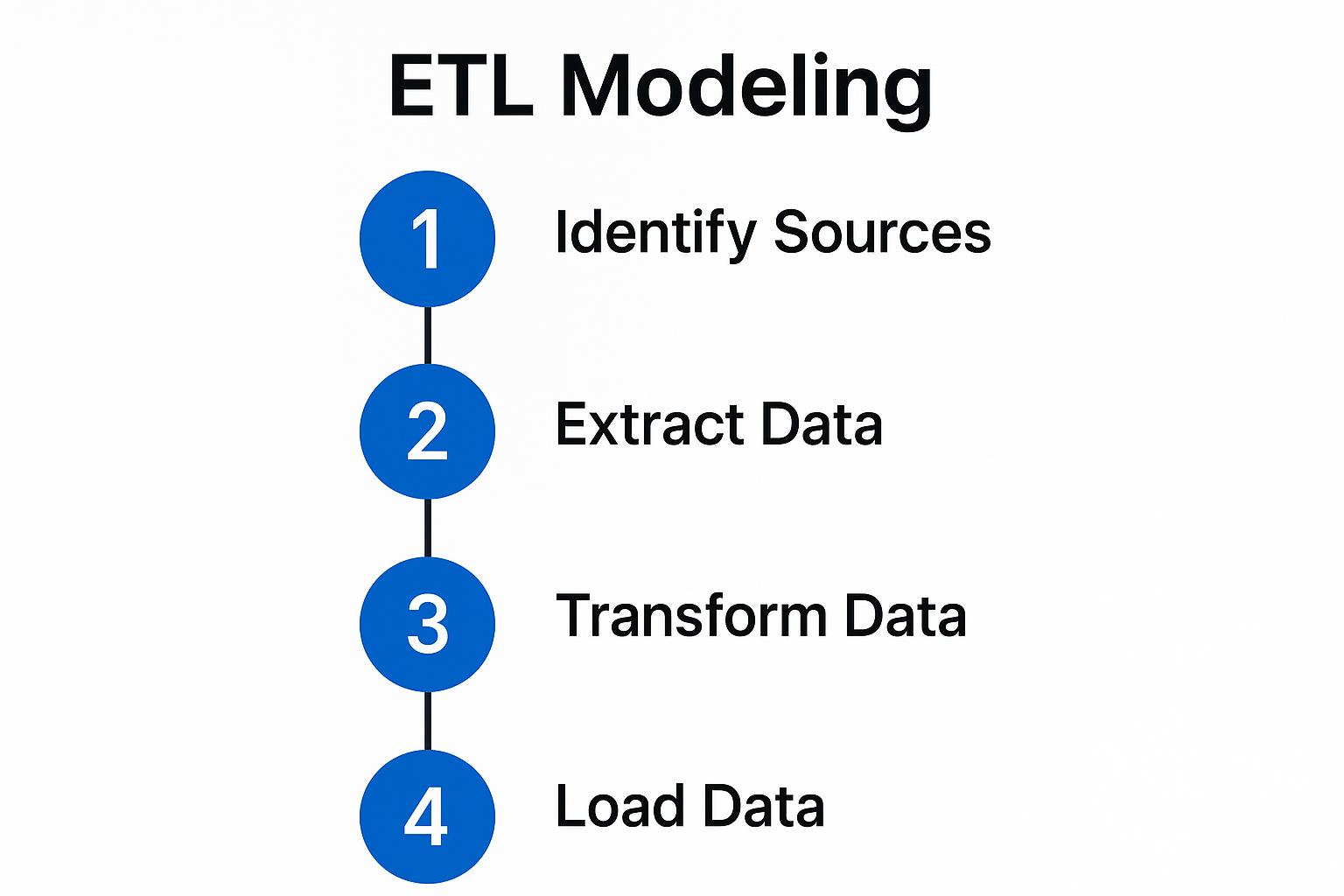

How Does the ETL Data Modeling Process Function?

The ETL data modeling process follows a systematic approach that ensures data flows efficiently from source to destination. Each step builds upon the previous one to create a comprehensive data integration strategy.

1. Identify Data Sources

Begin by cataloging all available data sources, including APIs, spreadsheets, databases, and other systems. Understand where data resides, what formats it's in, and any limitations or constraints. This foundational step determines the scope and complexity of your data modeling effort.

2. Data Extraction

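Pull data from each source using a method suited to that system, such as a full extract, an incremental extract of changed records, or change data capture. Choose the method and extraction frequency based on freshness requirements, and account for the performance impact on source systems as well as business requirements for data availability.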
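Incremental extraction is often implemented with a high-water mark: each run pulls only rows changed since the last recorded timestamp. The sketch below assumes a hypothetical `orders` table with an `updated_at` column and uses an in-memory SQLite database as a stand-in source; `extract_incremental` is an illustrative helper, not part of any library.

```python
import sqlite3

def extract_incremental(conn, watermark):
    """Return rows changed after `watermark`, plus the watermark to store for the next run."""
    rows = conn.execute(
        "SELECT id, amount, updated_at FROM orders WHERE updated_at > ? ORDER BY updated_at",
        (watermark,),
    ).fetchall()
    # Advance to the latest timestamp seen; keep the old value if nothing changed.
    new_watermark = rows[-1][2] if rows else watermark
    return rows, new_watermark

# In-memory stand-in for a source system (ISO-8601 strings sort chronologically).
source = sqlite3.connect(":memory:")
source.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, amount REAL, updated_at TEXT)")
source.executemany(
    "INSERT INTO orders VALUES (?, ?, ?)",
    [(1, 10.0, "2024-01-01T09:00"), (2, 25.5, "2024-01-02T12:30"), (3, 7.25, "2024-01-03T08:15")],
)

batch, watermark = extract_incremental(source, "2024-01-01T23:59")
```

A strict `>` comparison can miss rows that share the watermark timestamp but commit late; pipelines often use `>=` with deduplication, or a monotonically increasing log position of the kind change data capture provides.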

3. Data Transformation

Convert extracted data into a standard format that supports your analytical needs. Key tasks include data cleansing, validation, normalization, aggregation, and enrichment. This step often requires simulating ETL scenarios to test transformation logic before production deployment.
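To make the transformation tasks concrete, here is a minimal sketch of cleansing, validation, normalization, and aggregation on a few hand-made records. The field names and rejection rules are assumptions chosen for illustration; real pipelines would usually express the same logic in SQL or a dataframe library.

```python
from collections import defaultdict

raw_records = [
    {"email": " Alice@Example.com ", "country": "us", "amount": "19.99"},
    {"email": "bob@example.com", "country": "US", "amount": "5"},
    {"email": "", "country": "DE", "amount": "12.50"},                 # missing email
    {"email": "carol@example.com", "country": "de", "amount": "n/a"},  # unparseable amount
]

def transform(records):
    """Cleanse, validate, and normalize records; return (clean, rejected)."""
    clean, rejected = [], []
    for rec in records:
        email = rec["email"].strip().lower()           # cleansing and normalization
        if not email:
            rejected.append((rec, "missing email"))     # validation: required field
            continue
        try:
            amount = round(float(rec["amount"]), 2)    # validation: numeric type
        except ValueError:
            rejected.append((rec, "invalid amount"))
            continue
        clean.append({"email": email, "country": rec["country"].strip().upper(), "amount": amount})
    return clean, rejected

def revenue_by_country(records):
    """Aggregate cleaned records into total revenue per country."""
    totals = defaultdict(float)
    for rec in records:
        totals[rec["country"]] += rec["amount"]
    return {country: round(total, 2) for country, total in totals.items()}

clean, rejected = transform(raw_records)
totals = revenue_by_country(clean)
```

Keeping rejected rows with a reason, instead of silently dropping them, makes it easier to test transformation logic against simulated bad data before production.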

4. Data Loading

Insert the transformed data into the centralized repository. Plan the target schema design, table structures, indexing strategies, and relationships. Loading strategies, such as full reloads, appends, or upserts, must balance performance requirements with data consistency needs.
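One way to balance performance with consistency is an idempotent upsert inside a transaction, so re-running a load does not create duplicates. This sketch uses SQLite as a stand-in warehouse and assumes a hypothetical `dim_customer` table; the `ON CONFLICT ... DO UPDATE` syntax requires SQLite 3.24 or later.

```python
import sqlite3

def load(conn, rows):
    """Idempotently upsert (customer_id, name, country) rows in one transaction."""
    with conn:  # commits on success, rolls back if any statement fails
        conn.executemany(
            "INSERT INTO dim_customer (customer_id, name, country) VALUES (?, ?, ?) "
            "ON CONFLICT(customer_id) DO UPDATE SET name = excluded.name, country = excluded.country",
            rows,
        )

warehouse = sqlite3.connect(":memory:")
warehouse.execute(
    "CREATE TABLE dim_customer (customer_id INTEGER PRIMARY KEY, name TEXT NOT NULL, country TEXT)"
)
# Index chosen for an assumed common filter; pick indexes from real query patterns.
warehouse.execute("CREATE INDEX idx_customer_country ON dim_customer (country)")

load(warehouse, [(1, "Alice", "US"), (2, "Bob", "DE")])
load(warehouse, [(2, "Bob", "FR"), (3, "Carol", "US")])  # second run: one update, one new row
rows = warehouse.execute("SELECT * FROM dim_customer ORDER BY customer_id").fetchall()
```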

What Are the Most Effective ETL Data Modeling Techniques?

Different modeling techniques serve various business requirements and technical constraints. Selecting the right approach depends on your specific use case, data volume, and analytical needs.

Dimensional Modeling

Dimensional modeling organizes data into facts and dimensions, typically using a star or snowflake schema. This approach simplifies complex business data into understandable structures that support analytical queries and reporting requirements.

Facts contain measurable business events while dimensions provide descriptive context. This separation enables efficient query performance and intuitive data exploration for business users.
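A minimal star schema might look like the following: one fact table holding measures at a declared grain, surrounded by dimension tables that supply descriptive context. Table and column names are illustrative assumptions, and SQLite stands in for the warehouse.

```python
import sqlite3

db = sqlite3.connect(":memory:")
db.executescript("""
CREATE TABLE dim_product (product_key INTEGER PRIMARY KEY, name TEXT, category TEXT);
CREATE TABLE dim_date (date_key INTEGER PRIMARY KEY, full_date TEXT, month TEXT);
-- Fact table grain: one row per product per day.
CREATE TABLE fact_sales (
    date_key INTEGER REFERENCES dim_date(date_key),
    product_key INTEGER REFERENCES dim_product(product_key),
    units INTEGER,
    revenue REAL,
    PRIMARY KEY (date_key, product_key)
);
INSERT INTO dim_product VALUES (1, 'Laptop', 'Hardware'), (2, 'Antivirus', 'Software');
INSERT INTO dim_date VALUES (20240101, '2024-01-01', '2024-01'), (20240201, '2024-02-01', '2024-02');
INSERT INTO fact_sales VALUES
    (20240101, 1, 2, 2000.0),
    (20240101, 2, 5, 250.0),
    (20240201, 1, 1, 1000.0);
""")

# Measures come from the fact table; grouping attributes come from the dimensions.
revenue_by_category = db.execute("""
    SELECT p.category, d.month, SUM(f.revenue)
    FROM fact_sales f
    JOIN dim_product p ON p.product_key = f.product_key
    JOIN dim_date d ON d.date_key = f.date_key
    GROUP BY p.category, d.month
    ORDER BY p.category, d.month
""").fetchall()
```

A snowflake schema would further normalize a dimension, for example moving `category` into its own table referenced by `dim_product`.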

Data Vault Modeling

Data vault modeling tracks changes and history over time using hubs (unique business keys for core entities), links (relationships between hubs), and satellites (descriptive attributes and their history). This methodology excels at incremental loading, parallel processing, and comprehensive auditing.

The approach provides flexibility for evolving business requirements while maintaining complete data lineage. Organizations benefit from improved data governance and the ability to reconstruct historical states.
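The sketch below shows the three Data Vault building blocks and how an insert-only satellite lets you reconstruct a historical state. Hashing business keys into surrogate keys follows common Data Vault 2.0 practice; the MD5 choice, the table names, and the `customer_as_of` helper are assumptions for illustration.

```python
import hashlib
import sqlite3

def hash_key(*business_keys):
    """Deterministic surrogate key from normalized business keys (MD5 used here for brevity)."""
    return hashlib.md5("|".join(k.strip().upper() for k in business_keys).encode()).hexdigest()

dv = sqlite3.connect(":memory:")
dv.executescript("""
CREATE TABLE hub_customer (customer_hk TEXT PRIMARY KEY, customer_id TEXT, load_date TEXT, record_source TEXT);
CREATE TABLE hub_order (order_hk TEXT PRIMARY KEY, order_id TEXT, load_date TEXT, record_source TEXT);
CREATE TABLE link_customer_order (
    link_hk TEXT PRIMARY KEY, customer_hk TEXT, order_hk TEXT, load_date TEXT, record_source TEXT);
-- Satellites are insert-only: each change adds a row, so history is never overwritten.
CREATE TABLE sat_customer (
    customer_hk TEXT, load_date TEXT, email TEXT, tier TEXT, PRIMARY KEY (customer_hk, load_date));
""")

c_hk, o_hk = hash_key("C-001"), hash_key("O-9001")
dv.execute("INSERT INTO hub_customer VALUES (?, 'C-001', '2024-01-01', 'crm')", (c_hk,))
dv.execute("INSERT INTO hub_order VALUES (?, 'O-9001', '2024-01-05', 'shop')", (o_hk,))
dv.execute("INSERT INTO link_customer_order VALUES (?, ?, ?, '2024-01-05', 'shop')",
           (hash_key("C-001", "O-9001"), c_hk, o_hk))
dv.executemany("INSERT INTO sat_customer VALUES (?, ?, ?, ?)", [
    (c_hk, "2024-01-01", "alice@example.com", "basic"),
    (c_hk, "2024-03-01", "alice@example.com", "gold"),  # tier change recorded as a new row
])

def customer_as_of(as_of):
    """Reconstruct the customer's attributes as they were on a given date."""
    return dv.execute(
        "SELECT email, tier FROM sat_customer WHERE customer_hk = ? AND load_date <= ? "
        "ORDER BY load_date DESC LIMIT 1",
        (c_hk, as_of),
    ).fetchone()
```

Because hubs, links, and satellites are loaded independently and only ever appended to, separate sources can load in parallel without coordinating on generated IDs.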

Anchor Modeling

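Anchor modeling is a highly normalized technique, largely based on sixth normal form, that represents data with anchors (entities), attributes, ties (relationships between anchors), and knots (shared sets of fixed values). Because each attribute lives in its own table and changes are versioned, the approach handles complex relationships and temporal data requirements well.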

The technique supports agile development practices by allowing incremental schema evolution. Complex business relationships become more manageable through the systematic decomposition of data elements.
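As a rough sketch of that decomposition, the SQLite schema below uses an anchor that holds only identity, a knot for a small shared value set, and historized attribute tables. Ties are omitted for brevity, and the descriptive table names are assumptions rather than the formal anchor modeling naming convention. Note how adding a new attribute means adding a table, not altering an existing one.

```python
import sqlite3

am = sqlite3.connect(":memory:")
am.executescript("""
CREATE TABLE customer_anchor (customer_id INTEGER PRIMARY KEY);          -- anchor: identity only
CREATE TABLE tier_knot (tier_id INTEGER PRIMARY KEY, tier TEXT UNIQUE);   -- knot: shared value set
CREATE TABLE customer_name_attr (                                         -- historized attribute
    customer_id INTEGER REFERENCES customer_anchor, name TEXT, changed_at TEXT,
    PRIMARY KEY (customer_id, changed_at));
CREATE TABLE customer_tier_attr (                                         -- knotted attribute
    customer_id INTEGER REFERENCES customer_anchor, tier_id INTEGER REFERENCES tier_knot,
    changed_at TEXT, PRIMARY KEY (customer_id, changed_at));
INSERT INTO customer_anchor VALUES (1);
INSERT INTO tier_knot VALUES (1, 'basic'), (2, 'gold');
INSERT INTO customer_name_attr VALUES (1, 'Alice Smith', '2024-01-01'), (1, 'Alice Jones', '2024-06-01');
INSERT INTO customer_tier_attr VALUES (1, 1, '2024-01-01');
""")

# Schema evolution is additive: a new attribute is a new table; existing tables stay untouched.
am.execute("""CREATE TABLE customer_email_attr (
    customer_id INTEGER REFERENCES customer_anchor, email TEXT, changed_at TEXT,
    PRIMARY KEY (customer_id, changed_at))""")
am.execute("INSERT INTO customer_email_attr VALUES (1, 'alice@example.com', '2024-06-01')")

# Latest view: pick the most recent version of each attribute per anchor.
latest = am.execute("""
    SELECT a.customer_id,
           (SELECT n.name FROM customer_name_attr n WHERE n.customer_id = a.customer_id
            ORDER BY n.changed_at DESC LIMIT 1),
           (SELECT k.tier FROM customer_tier_attr t JOIN tier_knot k ON k.tier_id = t.tier_id
            WHERE t.customer_id = a.customer_id ORDER BY t.changed_at DESC LIMIT 1),
           (SELECT e.email FROM customer_email_attr e WHERE e.customer_id = a.customer_id
            ORDER BY e.changed_at DESC LIMIT 1)
    FROM customer_anchor a
""").fetchall()
```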

What Are Modern ETL Paradigms: ELT and Zero-ETL Approaches?

Traditional ETL approaches are evolving to meet modern data processing requirements. New paradigms leverage cloud computing power and advanced data platforms to improve efficiency and flexibility.

ELT: Leveraging Warehouse Computing Power

ELT loads raw data first, then transforms it inside modern warehouses like Snowflake, BigQuery, and Redshift. This approach preserves complete source data while enabling flexible transformation strategies.

Benefits include preservation of raw data for future analysis, schema-on-read flexibility for changing requirements, and parallel processing capabilities at scale. Data models in ELT favor denormalized, wide tables optimized for analytical workloads.

The ELT paradigm enables data teams to iterate quickly on transformation logic. Raw data availability supports exploratory analysis and reduces the risk of losing important information during initial processing.
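The load-then-transform order can be sketched with SQLite standing in for a cloud warehouse: raw records land untouched as text, and a SQL statement inside the "warehouse" builds a typed model from them. Table names and the status-cleaning rule are assumptions for illustration.

```python
import sqlite3

wh = sqlite3.connect(":memory:")

# 1. Load: land source records as-is (all text) in a raw table.
wh.execute("CREATE TABLE raw_orders (order_id TEXT, amount TEXT, status TEXT, ordered_at TEXT)")
wh.executemany("INSERT INTO raw_orders VALUES (?, ?, ?, ?)", [
    ("1001", "19.99", " shipped", "2024-01-03 10:15:00"),
    ("1002", "5.00", "CANCELLED", "2024-01-03 11:00:00"),
    ("1003", "42.50", "Shipped ", "2024-01-04 09:30:00"),
])

# 2. Transform: build a cleaned, typed model with the warehouse's own SQL engine.
wh.executescript("""
DROP TABLE IF EXISTS orders_daily;
CREATE TABLE orders_daily AS
SELECT date(ordered_at) AS order_date,
       COUNT(*) AS shipped_orders,
       ROUND(SUM(CAST(amount AS REAL)), 2) AS shipped_revenue
FROM raw_orders
WHERE LOWER(TRIM(status)) = 'shipped'
GROUP BY date(ordered_at);
""")
daily = wh.execute("SELECT * FROM orders_daily ORDER BY order_date").fetchall()
```

Because `raw_orders` is preserved, the transformation can be changed and re-run (the `DROP TABLE IF EXISTS` makes it repeatable) without re-extracting anything from the source.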

Zero-ETL: Direct Data Access Revolution

Zero-ETL uses data virtualization and federation to query heterogeneous sources directly without traditional extraction and loading steps. This approach reduces infrastructure complexity while enabling real-time analytics capabilities.

Data modeling shifts to logical abstractions and virtual schemas that present unified views across distributed systems. Organizations benefit from reduced data movement and improved query performance for certain use cases.

Zero-ETL approaches work best when source systems can handle analytical query loads. The paradigm supports real-time decision making by eliminating data latency introduced by traditional batch processing.
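The federation idea behind this approach can be illustrated locally with SQLite's `ATTACH DATABASE`: two separate databases play two source systems, and a temporary view acts as a virtual schema that joins them at query time without copying data. This is only an analogy under that assumption, not how any particular Zero-ETL product is implemented.

```python
import sqlite3

# The main connection plays an orders system; an attached in-memory database plays a CRM.
conn = sqlite3.connect(":memory:")
conn.execute("ATTACH DATABASE ':memory:' AS crm")
conn.execute("CREATE TABLE main.orders (order_id INTEGER, customer_id INTEGER, amount REAL)")
conn.execute("CREATE TABLE crm.customers (customer_id INTEGER, segment TEXT)")
conn.executemany("INSERT INTO main.orders VALUES (?, ?, ?)", [(1, 10, 50.0), (2, 11, 20.0), (3, 10, 30.0)])
conn.executemany("INSERT INTO crm.customers VALUES (?, ?)", [(10, "enterprise"), (11, "smb")])

# Virtual schema: a TEMP view may reference tables in any attached database.
conn.execute("""
    CREATE TEMP VIEW revenue_by_segment AS
    SELECT c.segment, SUM(o.amount) AS revenue
    FROM main.orders o JOIN crm.customers c ON c.customer_id = o.customer_id
    GROUP BY c.segment
""")
result = conn.execute("SELECT * FROM revenue_by_segment ORDER BY segment").fetchall()
```

Since nothing is copied, a new row in either source is visible on the next query; the trade-off is that every analytical query runs against the sources themselves.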

How Can AI-Enhanced ETL Data Modeling Transform Your Operations?

Artificial intelligence and machine learning capabilities are reshaping traditional ETL processes. These technologies automate complex tasks and improve data quality while reducing the need for manual intervention.

Automated Data Quality and Anomaly Detection

Machine learning models establish baselines for normal data patterns and flag deviations in real time. This proactive approach reduces downstream errors and improves overall data reliability across analytical systems.

Automated quality checks identify data inconsistencies, missing values, and format violations before they impact business processes. Pattern recognition algorithms adapt to changing data characteristics over time.
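The baseline-and-deviation idea can be sketched without a trained model: a z-score check against recent load volumes, plus simple rule checks for missing and malformed values. This statistical stand-in is an assumption for illustration; ML-based detectors learn richer baselines, but the flagging logic is similar in spirit.

```python
import re
from statistics import mean, stdev

EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")

def is_anomalous(history, value, threshold=3.0):
    """Flag `value` if it is more than `threshold` standard deviations from the historical mean."""
    mu, sigma = mean(history), stdev(history)
    if sigma == 0:
        return value != mu
    return abs(value - mu) / sigma > threshold

def rule_violations(records):
    """Return (index, problem) pairs for missing or malformed emails."""
    issues = []
    for i, rec in enumerate(records):
        email = rec.get("email")
        if not email:
            issues.append((i, "missing email"))
        elif not EMAIL_RE.match(email):
            issues.append((i, "malformed email"))
    return issues

# Hypothetical baseline: row counts from the last seven daily loads.
daily_row_counts = [10_120, 9_980, 10_050, 10_210, 9_900, 10_075, 10_140]
```

Appending each accepted day's count to the history lets the baseline adapt gradually as normal volumes change.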

Intelligent Schema Evolution and Mapping

AI systems detect source schema changes and suggest appropriate target modifications automatically. These systems predict downstream impacts and recommend optimization strategies to reduce manual effort and deployment risks.

Intelligent mapping capabilities learn from historical transformation patterns to suggest optimal field mappings. Machine learning algorithms improve accuracy over time by analyzing successful transformation outcomes.
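A simplified version of schema drift detection and mapping suggestion is shown below. It diffs two snapshots of a source schema and proposes target columns using `difflib.get_close_matches`, which is plain string similarity; treat it as a heuristic stand-in for the learned mappings described above. The column names are hypothetical.

```python
from difflib import get_close_matches

def diff_schema(old, new):
    """Compare {column: type} snapshots; return (added, removed, retyped)."""
    added = {col: typ for col, typ in new.items() if col not in old}
    removed = sorted(col for col in old if col not in new)
    retyped = {col: (old[col], new[col]) for col in old.keys() & new.keys() if old[col] != new[col]}
    return added, removed, retyped

def suggest_mappings(source_cols, target_cols, cutoff=0.6):
    """Suggest the most similar target column for each source column, or None."""
    suggestions = {}
    for col in source_cols:
        match = get_close_matches(col, target_cols, n=1, cutoff=cutoff)
        suggestions[col] = match[0] if match else None
    return suggestions

old_schema = {"id": "INTEGER", "email": "TEXT", "signup_date": "TEXT"}
new_schema = {"id": "INTEGER", "email_addr": "TEXT", "signup_date": "DATE",
              "country": "TEXT", "loyalty_pts": "INTEGER"}
target_columns = ["customer_id", "email", "signup_date", "country_code"]

added, removed, retyped = diff_schema(old_schema, new_schema)
mappings = suggest_mappings(added, target_columns)
```

Columns with no confident match come back as `None`, which is a natural point to route the change to a human reviewer instead of applying it automatically.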

GenAI Integration and Vector Database Support

Modern ETL pipelines must handle unstructured data and embeddings for vector databases like Pinecone, Weaviate, and Milvus. These capabilities enable real-time AI applications and retrieval-augmented generation workloads.

Generative AI integration supports automated documentation creation and code generation for transformation logic. Vector embedding processes transform unstructured content into searchable representations for advanced analytics.
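The chunk, embed, and search flow behind vector pipelines can be sketched with a toy "embedding": a bag-of-words term-count vector compared by cosine similarity. A real pipeline would call an embedding model and write vectors to a store such as those named above; the `embed` function here is purely an assumption for illustration.

```python
import math
import re
from collections import Counter

def chunk(text):
    """Split text into sentence-sized chunks."""
    return [s for s in re.split(r"(?<=\.)\s+", text.strip()) if s]

def embed(text):
    """Toy stand-in for an embedding model: a bag-of-words term-count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a, b):
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

document = ("Refunds are issued within 14 days of a return. "
            "Shipping is free for orders above 50 dollars. "
            "Support is available by email every weekday.")
# Each (chunk, vector) pair is what a pipeline would write to a vector store.
index = [(text, embed(text)) for text in chunk(document)]

def search(query, k=1):
    """Return the k chunks most similar to the query."""
    q = embed(query)
    ranked = sorted(index, key=lambda item: cosine(q, item[1]), reverse=True)
    return [text for text, _ in ranked[:k]]
```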

What Are the Key Benefits of ETL Data Modeling?

Proper ETL data modeling delivers significant advantages that extend beyond technical implementation. These benefits impact organizational efficiency, data quality, and strategic decision-making capabilities.

Enhances Data Quality

Logical structure and standardized formats expose inconsistencies and improve overall data quality across systems. Consistent data models enable automated validation and cleansing processes that maintain high standards.

Data quality improvements reduce time spent on manual corrections and increase confidence in analytical results. Standardized formats facilitate data sharing and collaboration across organizational boundaries.

Increases Operational Efficiency

Clear models streamline pipeline development and reduce manual effort required for maintenance tasks. Automated processes replace repetitive manual work, enabling teams to focus on higher-value analytical activities.

Well-designed models support self-service analytics capabilities that reduce dependency on technical teams. Efficient data access patterns improve query performance and reduce infrastructure costs.

Improves Awareness

Greater visibility into data sources, governance policies, and security controls simplifies compliance management and cross-team collaboration. Comprehensive documentation supports knowledge sharing and reduces onboarding time for new team members.

Enhanced awareness enables better decision making around data investments and technology choices. Clear data lineage supports impact analysis when changes are required across interconnected systems.

What Are the Essential Best Practices for ETL Data Modeling?

Following established best practices ensures successful ETL implementations that scale with organizational growth. These practices address common challenges while promoting maintainable and efficient data architectures.

Implement Strategic Materialization

Pre-aggregate data to speed up queries and avoid repeating complex joins across multiple tables. Materialization strategies balance storage costs against query performance requirements for different usage patterns.

Consider creating summary tables for frequently accessed metrics and maintaining detailed data for exploratory analysis. Regular evaluation of materialization strategies ensures optimal resource utilization.
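A minimal materialization pattern is a summary table rebuilt from the detailed fact table after each load, while the detailed table stays available for exploration. The table names and the full-rebuild refresh are assumptions; larger tables are usually refreshed incrementally.

```python
import sqlite3

db = sqlite3.connect(":memory:")
db.execute("CREATE TABLE fact_events (event_date TEXT, user_id INTEGER, revenue REAL)")
db.executemany("INSERT INTO fact_events VALUES (?, ?, ?)", [
    ("2024-01-01", 1, 5.0), ("2024-01-01", 2, 7.5), ("2024-01-01", 1, 2.5), ("2024-01-02", 3, 10.0),
])

def refresh_daily_summary(conn):
    """Rebuild the pre-aggregated table from the detailed facts (simple full refresh)."""
    conn.execute("DROP TABLE IF EXISTS agg_daily_revenue")
    conn.execute("""
        CREATE TABLE agg_daily_revenue AS
        SELECT event_date, COUNT(DISTINCT user_id) AS active_users, SUM(revenue) AS revenue
        FROM fact_events
        GROUP BY event_date
    """)
    conn.commit()

refresh_daily_summary(db)
# Dashboards read the small summary table; ad hoc analysis still queries fact_events.
summary = db.execute("SELECT * FROM agg_daily_revenue ORDER BY event_date").fetchall()
```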

Define Clear Data Grain

Define the grain of each table, meaning exactly what a single row represents (for example, one line item per order), to ensure consistent levels of detail across your data model. Clear grain definitions prevent confusion, such as double counting, and ensure accurate aggregations in downstream analytics.

Document grain decisions and communicate them clearly to all stakeholders. Consistent grain implementation supports reliable data integration and prevents analytical errors.
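A declared grain can also be enforced with a simple check: the grain columns should identify each row uniquely. The sketch assumes a hypothetical order-line fact with grain `(order_id, line_no)`; `grain_violations` is an illustrative helper.

```python
from collections import Counter

def grain_violations(rows, grain):
    """Return grain key values that appear more than once; `grain` lists the identifying columns."""
    counts = Counter(tuple(row[col] for col in grain) for row in rows)
    return {key: n for key, n in counts.items() if n > 1}

fact_rows = [
    {"order_id": 1, "line_no": 1, "sku": "A", "qty": 2},
    {"order_id": 1, "line_no": 2, "sku": "B", "qty": 1},
    {"order_id": 2, "line_no": 1, "sku": "A", "qty": 5},
    {"order_id": 2, "line_no": 1, "sku": "A", "qty": 5},  # duplicate, e.g. from a double load
]

violations = grain_violations(fact_rows, ["order_id", "line_no"])
```

Running the same check with `["order_id"]` alone reports every multi-line order, which is a quick way to confirm that a proposed grain is too coarse for the data.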

Optimize Through Data Partitioning

Split large tables by logical keys such as date ranges to improve query performance and storage efficiency. Data partitioning strategies should align with common query patterns and maintenance requirements.

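Partition pruning lets the query engine skip partitions that cannot contain matching rows, which reduces scan volumes and improves query response times. Consider both horizontal (row-based) and vertical (column-based) partitioning strategies based on data access patterns and storage constraints.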
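The effect of horizontal partitioning by date and of partition pruning can be shown in plain Python: rows are grouped by month, and a date-range query scans only the months that can overlap the range. The monthly key format and sample rows are assumptions for illustration; warehouses implement the same idea in their storage layer.

```python
from collections import defaultdict
from datetime import date

def partition_by_month(rows):
    """Horizontally partition rows into buckets keyed by 'YYYY-MM'."""
    parts = defaultdict(list)
    for row in rows:
        parts[row["event_date"].strftime("%Y-%m")].append(row)
    return parts

def query_range(parts, start, end):
    """Scan only partitions overlapping [start, end]; return (matching rows, partitions scanned)."""
    lo, hi = start.strftime("%Y-%m"), end.strftime("%Y-%m")
    scanned = [key for key in parts if lo <= key <= hi]  # partition pruning
    hits = [row for key in scanned for row in parts[key] if start <= row["event_date"] <= end]
    return hits, scanned

rows = [{"event_date": date(2024, m, d), "amount": a}
        for m, d, a in [(1, 5, 10), (1, 20, 15), (2, 3, 7), (3, 14, 30), (4, 1, 12)]]
partitions = partition_by_month(rows)
hits, scanned = query_range(partitions, date(2024, 2, 1), date(2024, 3, 31))
```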

Maintain Comprehensive Documentation

Document ER diagrams, transformation rules, and attribute definitions to support team collaboration and system maintenance. Living documentation reflects current system state and supports troubleshooting efforts.

Include business context and rationale for modeling decisions to support future modifications. Regular documentation reviews ensure accuracy and completeness as systems evolve.

Test and Improve Continuously

Perform unit testing, integration testing, and end-to-end validation to ensure data quality and system reliability. Establish automated testing frameworks that catch issues before they impact production systems.

Refine pipelines iteratively based on performance monitoring and user feedback. Continuous improvement processes adapt to changing requirements and optimize system performance over time.
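At the unit level, each transformation can be tested in isolation with Python's built-in `unittest`. The `normalize_country` function below is a hypothetical transformation used only to show the pattern of checking both valid input and rejected input.

```python
import unittest

def normalize_country(code):
    """Hypothetical transformation: trim and upper-case two-letter country codes, reject others."""
    cleaned = (code or "").strip().upper()
    if len(cleaned) != 2 or not cleaned.isalpha():
        raise ValueError(f"invalid country code: {code!r}")
    return cleaned

class NormalizeCountryTest(unittest.TestCase):
    def test_trims_and_uppercases(self):
        self.assertEqual(normalize_country(" us "), "US")

    def test_rejects_invalid_codes(self):
        for bad in ("", None, "USA", "1A"):
            with self.subTest(value=bad):
                with self.assertRaises(ValueError):
                    normalize_country(bad)
```

Saved as, for example, `test_transforms.py`, this runs with `python -m unittest test_transforms`; integration and end-to-end tests would then exercise the same functions against staging data and the loaded tables.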

How Does Airbyte Streamline Data Modeling for ETL?

Airbyte is an open-source ELT platform with 600+ connectors that automates data integration and replication across diverse systems. The platform simplifies complex data modeling challenges while maintaining enterprise-grade security and governance capabilities.

Custom Connector Development

Build connectors quickly via the Connector Development Kit or Python SDK for specialized data sources. Custom connector capabilities enable integration with proprietary systems and unique data formats without vendor dependencies.

The platform supports rapid prototyping and testing of connector logic. Community-driven development ensures continuous expansion of available integrations and best practices sharing.

GenAI and Vector Database Support

Native ingestion capabilities support vector stores and AI workloads including retrieval-augmented generation applications. Modern data architectures require seamless integration between structured and unstructured data processing workflows.

Vector embedding pipelines transform unstructured content into searchable representations. Integration with popular vector databases enables real-time AI applications and advanced analytics capabilities.

Enterprise Monitoring and Scheduling

Robust observability features and enterprise integrations support production-scale data operations. Comprehensive monitoring enables proactive issue detection and resolution before business impact occurs.

Flexible scheduling options accommodate various data freshness requirements and system constraints. Integration with existing enterprise tools reduces operational complexity and supports unified monitoring strategies.

Real-Time Change Data Capture

CDC capabilities enable incremental synchronization that maintains data freshness while minimizing system impact. Real-time processing supports operational analytics and time-sensitive business processes.

Event-driven architectures reduce latency between source changes and analytical availability. CDC implementations preserve complete change history for audit and compliance requirements.
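Conceptually, applying CDC output means replaying insert, update, and delete events in source log order against a replica, while keeping every event for audit. The event shape below (`lsn`, `op`, `key`, `row`) is a hypothetical format loosely modeled on common CDC tools, not Airbyte's actual record format.

```python
def apply_changes(target, history, events):
    """Apply change events to `target` (primary key -> row) in log order and record each in `history`."""
    for event in sorted(events, key=lambda e: e["lsn"]):  # replay in source log order
        op, key = event["op"], event["key"]
        if op in ("insert", "update"):
            target[key] = event["row"]
        elif op == "delete":
            target.pop(key, None)
        else:
            raise ValueError(f"unknown operation: {op}")
        history.append(event)  # append-only change log for audit
    return target

events = [
    {"lsn": 3, "op": "update", "key": 1, "row": {"name": "Alice", "tier": "gold"}},
    {"lsn": 1, "op": "insert", "key": 1, "row": {"name": "Alice", "tier": "basic"}},
    {"lsn": 4, "op": "delete", "key": 2, "row": None},
    {"lsn": 2, "op": "insert", "key": 2, "row": {"name": "Bob", "tier": "basic"}},
]
replica, change_log = {}, []
apply_changes(replica, change_log, events)
```

Ordering by log position rather than arrival order matters: applying the delete before the insert for key 2 would leave a row that should no longer exist.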

Enterprise Security and Compliance

End-to-end encryption, role-based access control, and PII masking capabilities ensure data protection across all processing stages. SOC 2, GDPR, and HIPAA compliance support regulated industry requirements.

Comprehensive audit logging provides complete visibility into data movement and transformation activities. Security controls integrate with existing enterprise identity and access management systems.

PyAirbyte Integration

PyAirbyte enables connector usage as code within Python data pipelines and analytical workflows. This approach supports flexible data integration patterns and custom processing logic.

Python integration facilitates machine learning workflows and advanced analytics applications. Developers benefit from familiar programming interfaces while leveraging pre-built connector capabilities.

What Are Common ETL Data Modeling Challenges and Solutions?

Understanding common challenges such as schema evolution and data quality enables proactive planning and solution design. Each challenge requires specific technical approaches balanced against organizational requirements and constraints.

Schema evolution requires flexible architectures that accommodate changing source systems. Automated tools reduce manual effort while ensuring consistency across transformation processes.

Data quality frameworks provide systematic approaches to validation and cleansing. Consistent quality standards improve analytical accuracy and reduce troubleshooting overhead.

Frequently Asked Questions

What is the difference between ETL and ELT data modeling?

ETL data modeling focuses on transforming data before loading into the target system, requiring predefined schemas and transformation logic. ELT data modeling loads raw data first and performs transformations within the target system, offering greater flexibility for schema-on-read approaches and iterative development.

How do you handle schema changes in ETL data modeling?

Schema changes require careful planning and automated detection systems that identify modifications in source systems. Implement backward-compatible transformation strategies, maintain version control for schema definitions, and establish clear processes for communicating changes across teams and downstream systems.

What role does data governance play in ETL data modeling?

Data governance establishes policies, standards, and procedures that guide ETL data modeling decisions. It ensures data quality, security, and compliance requirements are met while supporting business objectives and regulatory requirements across the entire data lifecycle.

How can you optimize ETL performance for large datasets?

Performance optimization involves implementing data partitioning strategies, using appropriate storage formats, leveraging parallel processing capabilities, and designing efficient indexing strategies. Consider ELT approaches for cloud-native environments and implement incremental processing where possible.

What are the key considerations when simulating ETL processes?

When simulating ETL scenarios, focus on realistic data volumes, representative data quality issues, and actual transformation complexity. Test error handling, recovery procedures, and performance characteristics under various load conditions to ensure production readiness.

Conclusion

Data modeling is a crucial part of ETL that gives your data stack an understandable and actionable structure for analytical workloads. Modern ETL has evolved to include ELT paradigms, Zero-ETL approaches, and AI-enhanced automation capabilities that address today's complex data requirements.

Organizations benefit from following established best practices including strategic materialization, clear grain definition, effective partitioning, comprehensive documentation, and continuous testing methodologies. Platforms like Airbyte provide open-source, flexible tooling with 600+ connectors that streamline data integration and modeling processes, empowering modern data teams to focus on generating insights rather than managing infrastructure complexity.
