9 Top Big Data Analytics Tools For 2026

From survey reports, sales records, and transaction files to social media applications, data is present everywhere. To leverage these datasets effectively for your business activities, you must analyze and visualize them in detail. Data analytics helps you uncover facts and figures that you could otherwise miss. Using this information, you can focus on consumer preferences, devise new marketing campaigns, and improve business performance.

This article will explore some of the popular big data analytics tools in 2026. So, without further ado, let’s get started:

What is Big Data Analytics?

Big Data analytics is the process of analyzing large amounts of data to draw meaningful conclusions. It transforms raw datasets into useful information by collecting, cleaning, and organizing them effectively. This information can be used to recognize patterns, identify trends, and formulate strategies to build a scalable business. To support this process, big data analytics employs various tools and techniques, such as quantitative data analysis, exploratory data analysis (EDA), and others.
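To make the collect, clean, organize, and summarize steps concrete, here is a minimal sketch in plain Python. The records, field names, and helper functions (`RAW_RECORDS`, `clean`, `summarize`) are hypothetical and exist only for this illustration; real workloads would typically use one of the tools covered below rather than hand-written loops.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical raw sales records; names and values are made up for illustration.
RAW_RECORDS = [
    {"region": "north", "amount": "120.50"},
    {"region": "North ", "amount": "80"},
    {"region": "south", "amount": ""},        # missing amount
    {"region": "south", "amount": "200.00"},
    {"region": None, "amount": "50"},         # missing region
]


def clean(records):
    """Drop incomplete rows and normalize types and casing."""
    cleaned = []
    for row in records:
        region, amount = row.get("region"), row.get("amount")
        if not region or not amount:
            continue  # skip rows with a missing region or amount
        cleaned.append({"region": region.strip().lower(), "amount": float(amount)})
    return cleaned


def summarize(records):
    """Group amounts by region and compute simple descriptive statistics."""
    by_region = defaultdict(list)
    for row in records:
        by_region[row["region"]].append(row["amount"])
    return {
        region: {"count": len(vals), "total": sum(vals), "mean": mean(vals)}
        for region, vals in by_region.items()
    }


if __name__ == "__main__":
    for region, stats in summarize(clean(RAW_RECORDS)).items():
        print(region, stats)
```

Cleaning happens before summarizing so that inconsistent casing and missing values do not distort the per-region figures.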

Some of the benefits of big data analytics are as follows:

- Big data analytics allows your business to examine huge data volumes and come up with optimized solutions. This helps you make data-driven decisions, mitigate risks, and enrich the end-user experience.

- With big data analytics, you can redefine your marketing strategies by analyzing past and present records to predict future sales. This step not only saves you time and resources but also helps your business grow.

Top 9 Big Data Analytics Tools In 2026

Here is a list of the top 9 big data analytics tools that you can use in 2026 to unlock the potential of your datasets:

Tableau

Developed in 2003, Tableau is a popular visual analytics platform. It allows you to create various maps, dashboards, tables, charts, and graphs for data visualization and analysis, thus facilitating improved decision-making. Using its drag-and-drop feature, you can seamlessly develop infographics without the need for complex programming. You can also share these visualizations with other members of your organization to leverage diverse insights.

Here are a few key features of Tableau:

- Tableau enables you to establish connections with multiple data sources, such as cloud services like Google Analytics, Big Data platforms like Redshift, and many more.

- For quick data discovery, Tableau Catalog automatically catalogs all your data sources and stores them in one place. It also provides metadata of the data you use so you can comprehend and trust it.

Power BI

Created by Microsoft, Power BI is one of the most powerful big data analytics tools. It lets you integrate data from diverse sources to create coherent and engaging insights. It offers data modeling and visualization options to build basic and complex charts, graphs, or tables. You can generate live dashboards with Power BI displaying real-time data updates. This is especially helpful for monitoring key performance indicators and quickly making data-driven decisions.

Here are a few key features of Power BI:

- Power Query is one of the most robust features of Power BI. You can directly load your data in the Power Query editing interface and perform simple modifications like filtering rows and columns. You can also carry out complex changes like pivoting, grouping, and text manipulation.

- With Power BI, you can leverage strong security and governance measures such as audit logs, TLS protocols, and user authentication. These features help you control data access and maintain compliance.
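The kinds of transformations mentioned for Power Query (filtering, grouping, and pivoting) can be illustrated conceptually in Python. This is not Power Query's M language or any Power BI API; the rows, column names, and helper functions below are assumptions made up for the sketch.

```python
from collections import defaultdict

# Hypothetical rows; column names are invented for this illustration.
ROWS = [
    {"month": "Jan", "product": "A", "units": 10},
    {"month": "Jan", "product": "B", "units": 4},
    {"month": "Feb", "product": "A", "units": 7},
    {"month": "Feb", "product": "B", "units": 0},
    {"month": "Feb", "product": "A", "units": 3},
]


def filter_rows(rows, predicate):
    """Keep only the rows for which predicate(row) is true."""
    return [r for r in rows if predicate(r)]


def group_sum(rows, key, value):
    """Sum `value` for each distinct `key`."""
    totals = defaultdict(int)
    for r in rows:
        totals[r[key]] += r[value]
    return dict(totals)


def pivot(rows, index, columns, value):
    """Turn distinct `columns` values into columns, summing `value` per cell."""
    table = defaultdict(lambda: defaultdict(int))
    for r in rows:
        table[r[index]][r[columns]] += r[value]
    return {k: dict(v) for k, v in table.items()}


if __name__ == "__main__":
    nonzero = filter_rows(ROWS, lambda r: r["units"] > 0)
    print(group_sum(nonzero, "product", "units"))
    print(pivot(ROWS, "month", "product", "units"))
```

In a visual editor these steps are applied through menus rather than code, but the underlying operations on rows and columns are the same.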

Qlik Sense

Qlik Sense is a popular data analysis tool owing to its in-memory data processing, which accelerates data manipulation and visualization. With the help of its connectors, you can effortlessly extract data from different sources into a single repository to facilitate data analytics. You can create visually appealing dashboards and share the reports or applications with other users through a centralized hub.

Here are a few key features of Qlik Sense:

- Qlik Sense’s augmented data analytics enables the creation of simple yet attractive data interactions by combining artificial and human intelligence. You can ask questions about data, and by evaluating the data, it will generate insights.

- Qlik's alerting feature allows you to monitor your datasets and respond quickly to changes.

KNIME

KNIME is an open-source big data analytics tool designed for users with little to no coding experience to analyze and visualize data. To facilitate these processes, KNIME utilizes nodes that can read and write files, manipulate data, train models, or create visualizations. Additionally, it provides more than 300 connectors, which you can use to effortlessly access data from various sources.

Here are a few key features of KNIME:

- KNIME offers a low/no-code intuitive interface with AI and machine learning capabilities for performing scalable data analytics. It also empowers you to explore data in the form of bar charts, box plots, or histograms using its interactive data views.

- KNIME facilitates text processing to enrich textual data in a dataset. You can load your files in various formats, such as DOCX, DML, or PDF, to perform text filtering and manipulation.

SAS Visual Analytics

SAS Visual Analytics is a powerful platform that offers an integrated environment for exploring and discovering data. It offers an intuitive interface where you can effortlessly use data preparation capabilities, such as accessing, cleaning, profiling, and transforming data, to make your data analytics-ready. This gives you the ability to interpret and understand patterns, trends, or correlations in data effectively. You can also assess data and quickly summarize key performance metrics with no programming required.

Here are a few key features of SAS Visual Analytics:

- With SAS Visual Analytics, you can leverage the feature of augmented analytics, which uncovers the hidden meanings of your dataset within seconds. It also provides you with suggestions and corrective measures that you can take to enhance the quality of the dataset.

- You can customize your data visualization process by utilizing its self-service data preparation feature. It gives you the flexibility to import your tables according to your needs and apply different data quality functions to create a personalized report.

Alteryx

Alteryx is a big data analytics platform that provides an extensive range of features. These features are used for quickly cleaning, processing, and analyzing big data from various sources without any coding or programming experience. Along with these features, you can use its intuitive interface to carry out complex tasks such as advanced reporting, geographical analysis, and predictive modeling.

Here are a few key features of Alteryx:

- With Alteryx, you can perform streamlined data integration processes like ETL and ELT. These methods allow you to extract data from external and internal sources and load it into a unified system for data analytics.

- Alteryx facilitates augmented analytics through automated insight generation, which helps you identify hidden meanings or trends in your dataset.
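The difference between ETL and ELT is mainly about where the transformation runs. The sketch below uses Python's built-in `sqlite3` module as a stand-in destination; it does not use Alteryx, and the table names, sample rows, and helper functions are hypothetical.

```python
import sqlite3

# Hypothetical source rows; in practice these would come from files, APIs, or databases.
SOURCE_ROWS = [("alice", " 42.0 "), ("bob", "17.5"), ("carol", "n/a")]


def parse_amount(text):
    """Return a float, or None when the value cannot be parsed."""
    try:
        return float(text.strip())
    except ValueError:
        return None


def run_etl(conn):
    """ETL: transform in application code, then load only clean rows."""
    conn.execute("CREATE TABLE etl_orders (customer TEXT, amount REAL)")
    parsed = [(c, parse_amount(a)) for c, a in SOURCE_ROWS]
    clean = [(c, a) for c, a in parsed if a is not None]
    conn.executemany("INSERT INTO etl_orders VALUES (?, ?)", clean)


def run_elt(conn):
    """ELT: load raw rows as-is, then transform inside the destination with SQL."""
    conn.execute("CREATE TABLE raw_orders (customer TEXT, amount TEXT)")
    conn.executemany("INSERT INTO raw_orders VALUES (?, ?)", SOURCE_ROWS)
    conn.execute(
        """
        CREATE TABLE elt_orders AS
        SELECT customer, CAST(TRIM(amount) AS REAL) AS amount
        FROM raw_orders
        WHERE TRIM(amount) GLOB '[0-9]*'
        """
    )


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    run_etl(conn)
    run_elt(conn)
    print(conn.execute("SELECT * FROM etl_orders").fetchall())
    print(conn.execute("SELECT * FROM elt_orders").fetchall())
```

Both paths end with the same clean table, but ELT also keeps the untouched `raw_orders` table in the destination, which lets you re-run or change the transformation later without extracting the data again.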

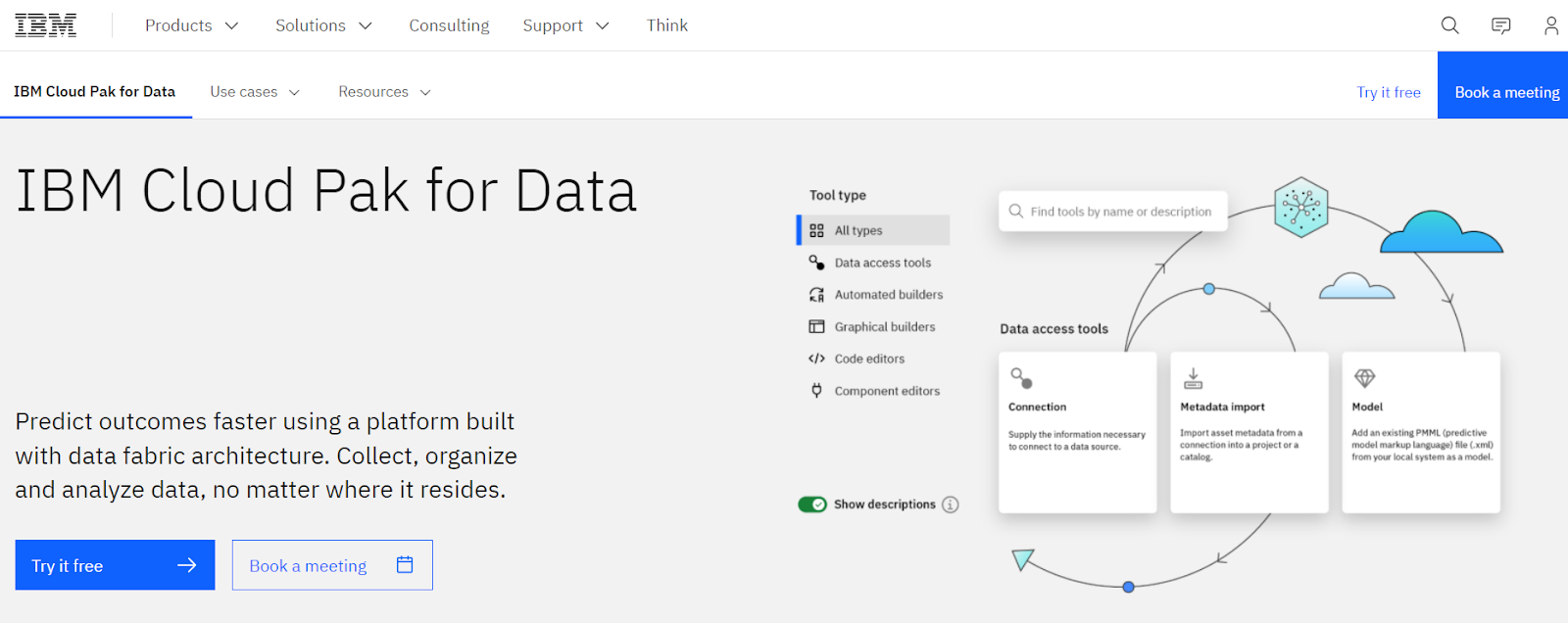

IBM Cloud Pak For Data

With IBM Cloud Pak for Data, you can gather, organize, and analyze data from multiple sources on a single platform. Its wide range of features for data preparation, analysis, governance, and machine learning assists your business in optimizing the decision-making process.

Here are a few key features of Cloud Pak for Data:

- You can run Cloud Pak for Data on public, private, or hybrid clouds. This enables you to easily move your data and applications within the IBM ecosystem according to your business requirements.

- IBM Cloud Pak for Data employs Cognos Analytics, a self-service analytics platform, to perform streamlined data analysis. You can utilize its features like automated data modeling and generation of dashboards or reports. Moreover, you can also share the reports with others.

Zoho Analytics

Zoho Analytics is a self-service platform for data analytics that allows you to analyze data graphically, create unparalleled visualizations, and uncover insights. You can use Zoho Analytics to evaluate large datasets, import data, and create custom reports. Moreover, its data integration feature allows you to extract data from over 250 sources, such as flat files or databases, into a unified workspace.

Here are a few key features of Zoho Analytics:

- With Zoho Analytics, you can leverage fine-grained access control over what your clients or employees can view and do with the reports you share with them. It offers various options, such as read-only, read-write, report authoring, and drill-down, that you can employ according to your business needs.

- Zoho Analytics provides flexible deployment options such as on-premise and cloud to fulfill diverse data requirements and perform seamless analytics.

Sisense

Sisense is a popular business intelligence and data analytics platform that enables you to work with both small and large data volumes. Using its intuitive drag-and-drop interface, you can quickly create and explore visualizations of your datasets. Its extensive features, like interactive dashboards and predictive data analytics, allow you to draw actionable insights from the dataset.

Here are a few key features of Sisense:

- Using Sisense's various frameworks, you can connect to multiple data sources such as spreadsheets, cloud services, databases, or web applications. These frameworks include JDBC connectivity, the custom REST framework, and the New Connectors framework. This allows you to combine your data in a central system for performing data analysis.

- Sisense offers a wide range of data security and governance capabilities to ensure your data is secure and compliant with regulations. To protect account credentials and authorization, it employs cryptographic methods such as TripleDES encryption and SHA-256 hashing.

What to Look for When Choosing a Big Data Analytics Tool?

Choosing a suitable Big Data analytics tool depends on various factors. These factors include the volume of data, the data type, ease of accessibility, and security compliances. In this section, let’s take a look at some of the most crucial factors that you must consider while selecting a platform:

Data Sources Availability

It is important to identify the different Big Data sources, such as cloud storage, databases, IoT devices, or CRM platforms your enterprise uses. The chosen analytics tool must integrate with these data sources or support a third-party tool for leveraging integration.

Supported Data Types

Big Data analytics tools can handle various data types, including structured and unstructured. However, while structured data can be analyzed quickly, unstructured data might require some additional pre-processing.
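As a rough illustration of the extra pre-processing that unstructured data needs, the sketch below turns free-text messages into structured records with regular expressions. The messages, patterns, and field names are hypothetical; production pipelines usually rely on more robust parsing or NLP tooling.

```python
import re

# Hypothetical free-text support messages (unstructured input).
MESSAGES = [
    "Order #1042 arrived late, customer requested refund of $25.00",
    "Great service on order #1077!",
    "No order number mentioned here",
]

ORDER_RE = re.compile(r"#(\d+)")
AMOUNT_RE = re.compile(r"\$(\d+(?:\.\d{2})?)")


def to_record(text):
    """Extract a few structured fields from a free-text message."""
    order = ORDER_RE.search(text)
    amount = AMOUNT_RE.search(text)
    return {
        "order_id": int(order.group(1)) if order else None,
        "refund_amount": float(amount.group(1)) if amount else None,
        "mentions_refund": "refund" in text.lower(),
    }


if __name__ == "__main__":
    for message in MESSAGES:
        print(to_record(message))
```

Once every message has the same fields, the result can be filtered, grouped, and charted like any other structured table.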

Real-Time or Batch-Processing Needs

If you want to perform real-time data analytics, like monitoring sites for live insights, you must choose a platform that supports real-time data processing. However, batch processing might be more economical and efficient for analyses that don't require immediate results.
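The trade-off can be sketched with a single metric computed two ways: incrementally as each event arrives (real-time style) and once over the complete dataset (batch style). The class and function names below are made up for this illustration and do not correspond to any specific tool.

```python
class RunningMean:
    """Incrementally updated mean, suitable for a stream of events."""

    def __init__(self):
        self.count = 0
        self.mean = 0.0

    def update(self, value):
        # Adjust the current mean by the new value's share of the total.
        self.count += 1
        self.mean += (value - self.mean) / self.count
        return self.mean


def batch_mean(values):
    """Mean computed once over a complete, already-collected dataset."""
    return sum(values) / len(values)


if __name__ == "__main__":
    response_times = [120, 95, 130, 110]  # hypothetical measurements
    live = RunningMean()
    for value in response_times:
        print("live mean so far:", live.update(value))
    print("batch mean:", batch_mean(response_times))
```

The streaming version gives an up-to-date answer after every event at the cost of keeping state continuously, while the batch version is simpler and can run on a schedule when immediate results are not needed.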

Ease of Use

While selecting any Big Data analytics tool, you must go for a platform with an intuitive and user-friendly interface. It should be easily navigable for users with no prior technical expertise so that they are able to build dashboards and draw conclusions effortlessly.

Data Visualization Capabilities

A powerful Big Data analytics tool is also equipped with data visualization and reporting features such as graphs, charts, or dashboards. This visualization lets you deal with large and complex datasets quickly and generate customized reports based on your business needs.

Data Security Features

While all the above-mentioned factors are essential for choosing an analytics platform, security and compliance are the ones you should never compromise on. To prevent unauthorized access, ensure the tool offers security measures such as encryption, access controls, and authentication mechanisms.

Experience Optimized Data Analysis With Airbyte

The above-mentioned tools are suitable for fulfilling your big data analytics needs as they are equipped with robust features and functionalities. However, before you perform data analysis, it is crucial to bring data together in a centralized system. You can employ Airbyte, a robust data integration platform, to facilitate this process.

Introduced in 2020, Airbyte follows a modern ELT approach to extract data from various sources and load them into a destination system. It has a rich library of more than 550 pre-built connectors that you can employ to automate data pipelines without writing a single piece of code.

In addition, you can build custom connectors using its no-code Connector Builder, low-code Connector Development Kit (CDK), or language-specific CDK. To speed up custom connector development, Airbyte provides an AI assistant with Connector Builder. The AI assistant reads the API documentation automatically and offers intelligent suggestions for fine-tuning your connector configuration.

Some of the unique features offered by Airbyte are as follows:

- Simplified GenAI Workflows: You can load your unstructured data directly into vector databases like Chroma, Milvus, Pinecone, and Qdrant. This simplifies your GenAI workflows and streamlines other downstream tasks for building LLM applications.

- Data Refreshes: You can perform data refreshes and pull all your historical data from the sources with zero downtime. If the sources and destinations support the checkpointing feature, then your refreshes will be resumable. This implies that you can continue your data syncs right from where you stopped.

- Leverage PyAirbyte: Airbyte has an open-source Python library, PyAirbyte, that is designed specifically for Python programmers. It helps you to develop data pipelines efficiently using the connectors supported by Airbyte.

- Flexible Pricing: Airbyte offers a free, open-source version. Apart from this, it also has multiple paid plans: Airbyte Cloud, Airbyte Team, and Self-hosted Enterprise. All of them have custom pricing based on the features they provide.

- Self-Managed Enterprise Version: Airbyte has announced the general availability of the self-managed enterprise version to future-proof your data needs. It provides you with the best data access while maintaining data governance. You also have full control over your sensitive data and can operate the self-managed enterprise solution in air-gapped environments using API, UI, and Terraform SDK.

- Vibrant Community: With Airbyte, you can leverage its open-source community of over 15,000 members who contribute to the platform's development. You can collaborate with others to share resources, discuss the best integration practices, and resolve queries arising during data ingestion.
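The idea behind the resumable refreshes mentioned under Data Refreshes can be sketched generically: the sync records a checkpoint after each committed row, so a later run continues from that position instead of starting over. This is a conceptual sketch only, not Airbyte's implementation; the `sync` function, the `state` dictionary, and the `fail_at` parameter are hypothetical.

```python
def sync(source_rows, destination, state, fail_at=None):
    """Copy rows past the saved cursor, checkpointing after each row.

    `state` is a dict holding the last committed position. `fail_at`
    simulates an interruption just before the row at that index is written.
    """
    start = state.get("cursor", 0)
    for i in range(start, len(source_rows)):
        if fail_at is not None and i == fail_at:
            raise ConnectionError(f"simulated failure at row {i}")
        destination.append(source_rows[i])
        state["cursor"] = i + 1  # checkpoint: row i is safely written
    return state


if __name__ == "__main__":
    rows = ["a", "b", "c", "d", "e", "f"]
    destination, state = [], {}
    try:
        sync(rows, destination, state, fail_at=3)
    except ConnectionError as err:
        print("interrupted:", err, "| checkpoint:", state)
    sync(rows, destination, state)  # resumes from the saved cursor
    print("final destination:", destination)
```

Because the checkpoint is updated only after a row is written, the second run neither skips nor duplicates rows.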

Final Word

This article has extensively covered the top nine big data analytics tools and their key features to help you decide on a suitable platform for your business needs. Each platform is equipped with modern features and functionalities to enable you to perform streamlined analysis.

However, before performing data analysis, combining data from multiple sources is crucial to avoid data discrepancies. We suggest employing Airbyte to accomplish seamless data integration, as it offers various features like diverse deployment options, data pipeline management, and an active open-source community. Sign up on its platform today.
